A Taxonomy of Botnet Structures

(Taxonomy is the practice and science of classification.)

Introduction

Who are we?

Erlend Westbye

- Pursuing a master's degree in Informatics: Programming and Networks

- Chat bot enthusiast

Rune Johan Borgli

- Pursuing a master's degree in Informatics: Programming and Networks

- Machine Learning enthusiast

What does the article describe?

- Proposes a system for classifying botnet structures

- Proposes metrics for measuring overall performance of a botnet

  - Efficiency

  - Effectiveness

  - Robustness

- Discusses different botnet structures

What is a botnet?

- A type of virus/malware

- Large collection of connected computers

- Used for stealing computer resources

  - DDoS

  - Clickfarming

  - Mining cryptocurrency

- Bots are also used for stealing personal information (e.g. keylogging, file extraction, etc.)

Background for paper

- Botnets are expected to continue to be a dynamic and evolving threat in the future

- The authors believe that future botnet research will share a common goal of reducing the utility of botnets for botmasters

- A need for the botnet research community to better define metrics

- Paper proposes a taxonomy of botnet topologies, based on the utility of the communication structure and their corresponding metrics

Introduction to Network Models

Paper makes use of 4 different network models:

- Random Graph Model

- Small World Model

- Scale Free Model

- Peer to Peer (P2P) Model

Power law link distribution

Erdös-Rényi

Random Graph Model

- Each node is connected with equal probability to the other N - 1 nodes

- The chance that a bot has degree k follows a binomial distribution
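A minimal sketch of the random graph model described above, in plain Python. The names `random_graph` and `mean_degree` and the parameters `n`, `p` and `seed` are illustrative assumptions, not names from the paper. Every pair of bots is linked independently with probability `p`, so the expected degree is `(n - 1) * p`.

```python
import random
from itertools import combinations


def random_graph(n, p, seed=None):
    """Build an Erdös-Rényi style G(n, p) graph as {node: set of neighbors}."""
    rng = random.Random(seed)
    adj = {v: set() for v in range(n)}
    # Visit every unordered pair once and link it with probability p.
    for u, v in combinations(range(n), 2):
        if rng.random() < p:
            adj[u].add(v)
            adj[v].add(u)
    return adj


def mean_degree(adj):
    """Average number of links per node."""
    return sum(len(neighbors) for neighbors in adj.values()) / len(adj)


if __name__ == "__main__":
    botnet = random_graph(n=1000, p=0.01, seed=42)
    # The sample mean should land near (n - 1) * p.
    print(mean_degree(botnet), (1000 - 1) * 0.01)
```

Representing the graph as a dictionary of neighbor sets keeps the sketch free of third-party dependencies; a graph library would work equally well.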

Watts-Strogatz

Small World Model

- Nodes are arranged in a ring, each connected to its neighbors within a range r

- Each bot is further connected with probability P to nodes on the opposite side of the ring through a "shortcut"
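The construction above can be sketched as follows. This is a simplified reading that adds shortcuts rather than rewiring existing links, and the function name `small_world` and its parameters are hypothetical. The shortcut target is taken to be the node exactly half a ring away, which is one possible interpretation of "the opposite side of the ring".

```python
import random


def small_world(n, r, p, seed=None):
    """Ring of n nodes linked within range r, plus optional shortcuts."""
    if n <= 2 * r:
        raise ValueError("n must be larger than 2 * r")
    rng = random.Random(seed)
    adj = {v: set() for v in range(n)}
    # Local links: each node connects to its r nearest neighbors on each side.
    for v in range(n):
        for step in range(1, r + 1):
            u = (v + step) % n
            adj[v].add(u)
            adj[u].add(v)
    # Shortcuts: with probability p, link a node to the node half a ring away
    # (an assumption made for this sketch).
    for v in range(n):
        if rng.random() < p:
            u = (v + n // 2) % n
            adj[v].add(u)
            adj[u].add(v)
    return adj
```

With `p = 0` every node keeps exactly `2 * r` local links, which makes the effect of the shortcuts easy to isolate.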

Barabási-Albert

Scale Free Model

- Distribution of k decays as a power law

- Contains a small number of central, highly connected "hub" nodes, and many leaf nodes with fewer connections

- Resistant to random patching and loss, but weak to targeted responses
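A rough sketch of how a hub-dominated network can arise through preferential attachment, where new nodes prefer to link to nodes that already have many links. The function name `scale_free`, the parameter `m` (links added per new node) and the fully connected starting core are assumptions of this sketch, not details from the paper.

```python
import random


def scale_free(n, m, seed=None):
    """Grow a graph of n nodes where each new node links to m existing nodes,
    chosen with probability proportional to their current degree."""
    if m < 1 or n <= m:
        raise ValueError("need m >= 1 and n > m")
    rng = random.Random(seed)
    adj = {v: set() for v in range(n)}
    # Start from a small fully connected core of m + 1 nodes.
    for u in range(m + 1):
        for v in range(u + 1, m + 1):
            adj[u].add(v)
            adj[v].add(u)
    # 'stubs' holds each node once per link end, so a uniform pick from it
    # selects nodes in proportion to their degree.
    stubs = [v for v in range(m + 1) for _ in range(m)]
    for new in range(m + 1, n):
        targets = set()
        while len(targets) < m:
            targets.add(rng.choice(stubs))
        for t in targets:
            adj[new].add(t)
            adj[t].add(new)
            stubs.extend((new, t))
    return adj


if __name__ == "__main__":
    g = scale_free(1000, 2, seed=7)
    degrees = sorted((len(nb) for nb in g.values()), reverse=True)
    # A few hubs should stand out against the many low-degree nodes.
    print(degrees[:5], degrees[-5:])
```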

P2P Models

Paper differentiates between structured and unstructured P2P models and considers them special cases of scale free models and random graph models.

This is because the selected metrics concern only basic botnet properties and would not be sufficient to distinguish P2P from random and scale free networks.

Measurements introduced by the article

Effectiveness - Efficiency - Robustness

| Major Botnet Utilities | Key Metrics | Suggested Variables |
|---|---|---|
| Effectiveness | Giant portion | S |
| Effectiveness (cont) | Average available bandwidth | B |
| Efficiency | Diameter | l⁻¹ |
| Robustness | Local transitivity | γ |

Measurement: Effectiveness

- "The effectiveness of a botnet is an estimate of overall utility, to accomplish a given purpose"

- Indicates the botnet's ability to take part in, for example, DDoS attacks

Giant Portion

"largest connected (or online) portion of the graph"

- Denoted by S

- How many bots can participate in an attack

- Affected by diurnal changes
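One way to compute the giant portion is a breadth-first search over the bots that are currently online. The sketch below returns S as a node count; the function name `giant_portion` and the optional `online` argument (used here to mimic diurnal changes) are assumptions for illustration.

```python
from collections import deque


def giant_portion(adj, online=None):
    """Size of the largest connected component among the online nodes.

    adj maps each node to the set of its neighbors. If online is None,
    every node is treated as online.
    """
    alive = set(adj) if online is None else set(online) & set(adj)
    seen = set()
    best = 0
    for start in alive:
        if start in seen:
            continue
        seen.add(start)
        queue = deque([start])
        size = 0
        while queue:
            v = queue.popleft()
            size += 1
            for u in adj[v]:
                # Offline neighbors cannot relay messages, so skip them.
                if u in alive and u not in seen:
                    seen.add(u)
                    queue.append(u)
        best = max(best, size)
    return best
```

Dividing the result by the total number of bots gives S as a fraction instead of a count.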

Bot groups

- Denoted by i

- Group 1 : modem

- Group 2 : DSL, cable

- Group 3 : High speed

By average bandwidth, B, we mean the cumulative available bandwidth that a botmaster could generate from the various bots

Average bandwidth

B is computed over the series of all groups i from:

- Average maximum bandwidth

- Average normal bandwidth use

- Probability of a bot being in group i

- Average online hours per day
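The labels above annotate a formula for B that is not reproduced in the text. One plausible reading, offered only as a sketch, is that each group i contributes its spare bandwidth (average maximum minus average normal use), weighted by the probability of a bot being in that group and by the fraction of the day its bots are online. The field names and all numbers below are made up for illustration and are not taken from the paper.

```python
def average_bandwidth(groups):
    """Sum each group's spare bandwidth, weighted by group share and uptime.

    Assumed reading of the metric:
    B = sum over i of (max_i - normal_i) * prob_i * (online_hours_i / 24)
    """
    total = 0.0
    for g in groups:
        spare = g["max_kbps"] - g["normal_kbps"]
        online_fraction = g["online_hours"] / 24
        total += spare * g["prob"] * online_fraction
    return total


# Hypothetical values for the three bot groups, for illustration only.
example_groups = [
    {"name": "modem", "max_kbps": 56, "normal_kbps": 6,
     "prob": 0.5, "online_hours": 12},
    {"name": "DSL, cable", "max_kbps": 1000, "normal_kbps": 200,
     "prob": 0.25, "online_hours": 12},
    {"name": "high speed", "max_kbps": 10000, "normal_kbps": 2000,
     "prob": 0.25, "online_hours": 24},
]

if __name__ == "__main__":
    print(average_bandwidth(example_groups))
```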

- Available resources

- Giant portion

- Average available bandwidth

Botnet Efficiency

- Network diameter l

- l is the "average geodesic length"

- Short average path = fast = harder to intercept messages

- Long average path = slower = easier to intercept messages

- Says something about the robustness of the network
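The average geodesic length l can be estimated by running a breadth-first search from every node and averaging the shortest path lengths found. In this sketch the function name `average_path_length` is hypothetical, and unreachable pairs are simply left out of the average, which is a simplifying assumption.

```python
from collections import deque


def average_path_length(adj):
    """Mean shortest-path length over all reachable ordered node pairs."""
    total = 0
    pairs = 0
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            for u in adj[v]:
                if u not in dist:
                    dist[u] = dist[v] + 1
                    queue.append(u)
        total += sum(dist.values())
        pairs += len(dist) - 1  # exclude the source itself
    # With no reachable pairs the length is undefined; report infinity.
    return total / pairs if pairs else float("inf")


if __name__ == "__main__":
    path = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
    l = average_path_length(path)
    # A shorter l means a larger inverse 1 / l, i.e. faster message delivery.
    print(l, 1 / l)
```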

Measurement: Robustness

- Measures redundancy in nodes

- Node triads

- Important in file sharing and queued tasks (higher degree connections are favourable)

- The clustering coefficient measures the average degree of local transitivity

- It generally captures the robustness of a network

The average clustering coefficient γ measures the number of triads divided by the maximal number of possible triads
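A small sketch of the clustering coefficient as described above: for each node, count the links that exist between its neighbors (closed triads) and divide by the number of neighbor pairs that could be linked, then average over all nodes. The function name `average_clustering` is hypothetical, and counting nodes with fewer than two neighbors as zero is a convention chosen for this sketch.

```python
def average_clustering(adj):
    """Average over nodes of (links among neighbors) / (possible links)."""
    total = 0.0
    for v, neighbors in adj.items():
        k = len(neighbors)
        if k < 2:
            continue  # no triad is possible around this node
        nb = list(neighbors)
        closed = sum(
            1
            for i in range(k)
            for j in range(i + 1, k)
            if nb[j] in adj[nb[i]]
        )
        total += closed / (k * (k - 1) / 2)
    return total / len(adj)


if __name__ == "__main__":
    # A triangle (0, 1, 2) with one extra node hanging off node 0.
    g = {0: {1, 2, 3}, 1: {0, 2}, 2: {0, 1}, 3: {0}}
    print(average_clustering(g))
```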

Botnet Taxonomies

- Based on the utility to the botmaster

- Paper models different responses to each botnet category

- Command-and-control (C&C) messages are often the weak link of a botnet

- Targeting the botnet C&C is not always an effective response and new response strategies must be provided in the future

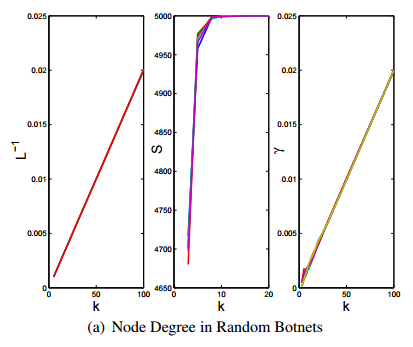

Erdös-Rényi Random Graph Models

- Botmasters must choose an average degree k that is appropriate for a botnet, so as not to raise suspicion

- Often some value similar to P2P

- Has to distribute a central collection or record of vertices

- This is worked around by distributing a subset of vertices

Erdös-Rényi Random Graph Models (cont)

- The analysis of the paper shows that increasing k in a random botnet also increases l⁻¹, S and γ

- Botmasters therefore have incentives to increase k

Erdös-Rényi Random Graph Models (cont)

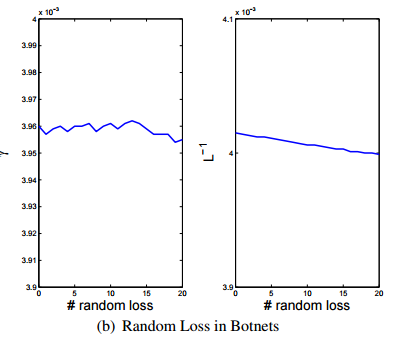

- The paper presents analysis that shows that random loss will not diminish the number of triads in the botnet

- Targeting nodes can at best remove a few nodes with k slightly higher than the average k, which is the same result as random loss
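To illustrate why targeting gains little when node degrees are nearly uniform, the sketch below counts the links removed by a random response and by a targeted response. All function names are hypothetical. A regular ring lattice is used as an idealized stand-in for a network whose degrees cluster tightly around the average; it is not the random graph itself.

```python
import random


def links_lost(adj, victims):
    """Number of distinct links that vanish when the victims are removed."""
    lost = set()
    for v in set(victims):
        for u in adj[v]:
            lost.add(frozenset((u, v)))
    return len(lost)


def random_victims(adj, count, seed=None):
    """Pick victims uniformly at random (random patching and loss)."""
    return random.Random(seed).sample(sorted(adj), count)


def targeted_victims(adj, count):
    """Pick the highest-degree nodes first (targeted response)."""
    return sorted(adj, key=lambda v: len(adj[v]), reverse=True)[:count]


if __name__ == "__main__":
    # Idealized graph: every node has exactly four links.
    ring = {v: {(v + d) % 10 for d in (-2, -1, 1, 2)} for v in range(10)}
    print(links_lost(ring, random_victims(ring, 1, seed=0)))
    print(links_lost(ring, targeted_victims(ring, 1)))
```

When every node has the same degree, both strategies remove the same number of links per victim, which mirrors the point made in the bullets above.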

Watts-Strogatz Small World Models

- Botnets using small world models must spread by passing a list of r prior victims, so that each new bot can connect to the previous r victims

- To avoid transmitting a list of victims and revealing parts of the botnet, botmasters avoid using normal shortcuts

Watts-Strogatz Small World Models (cont)

- The average degree in a small world is roughly the same as the number of local links in the graph, r

- Therefore, both random and targeted responses mean the loss of r links with each removed node

- Paper notes that small world botnets do not have benefits different from random networks

Barabási-Albert Scale Free Models

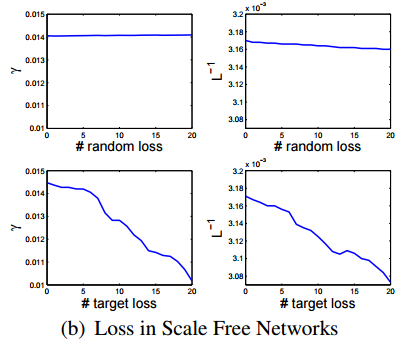

- Random node failures tend to strike low-degree bots, making the network resistant to random patching and loss

- Targeted responses can select high-degree nodes, which leads to dramatic decay in the operation of the network

Barabási-Albert Scale Free Models (cont)

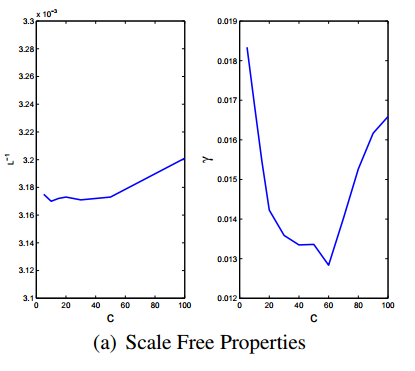

- Core size, C, is the number of high-degree central nodes. As more core nodes are added, the diameter of the scale free botnet stays nearly constant for small regions of C. Splitting a hub into smaller hubs does not significantly increase the path length of the overall network

Barabási-Albert Scale Free Models (cont)

- Random losses in scale free botnets are easily absorbed because of the power law degree distribution

- Targeted responses on selected key nodes result in a dramatic increase in diameter and loss of transitivity

- By removing C&C, the network quickly disintegrates into a collection of discrete, uncoordinated infections
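The contrast between random and targeted responses can be seen on a toy hub-and-spoke network. The sketch below builds two linked hubs with a handful of leaf bots each, then compares the largest connected component after removing the hubs and after removing two leaves. The graph and all function names are hypothetical and chosen only for illustration.

```python
from collections import deque


def two_hub_botnet(leaves_per_hub):
    """Two linked hubs (nodes 0 and 1), each with its own leaf bots."""
    adj = {0: {1}, 1: {0}}
    next_id = 2
    for hub in (0, 1):
        for _ in range(leaves_per_hub):
            adj[hub].add(next_id)
            adj[next_id] = {hub}
            next_id += 1
    return adj


def remove_nodes(adj, victims):
    """Return a copy of the graph without the victims and their links."""
    victims = set(victims)
    return {v: nb - victims for v, nb in adj.items() if v not in victims}


def top_degree(adj, count):
    """The count nodes with the most links."""
    return sorted(adj, key=lambda v: len(adj[v]), reverse=True)[:count]


def largest_component(adj):
    """Size of the largest connected component."""
    seen, best = set(), 0
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        queue, size = deque([start]), 0
        while queue:
            v = queue.popleft()
            size += 1
            for u in adj[v]:
                if u not in seen:
                    seen.add(u)
                    queue.append(u)
        best = max(best, size)
    return best


if __name__ == "__main__":
    net = two_hub_botnet(5)
    print(largest_component(remove_nodes(net, top_degree(net, 2))))  # hubs gone
    print(largest_component(remove_nodes(net, [2, 7])))              # two leaves gone
```

Removing the two hubs leaves only isolated bots, while removing two leaves barely changes the connected portion.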

Power law link distribution

Botnet Response Strategies

- For random networks, detecting and cleaning up large numbers of victims appears to be the most viable response strategy, together with index poisoning

- Random targeting is ineffective for scale free networks, but targeting of high-degree nodes is very effective

- With scale free networks, targeting high speed nodes is more likely to increase the diameter of the network, which increases message distribution time

- Keeping watch on effectiveness (available bandwidth) helps with monitoring the success rate of a response

Index poisoning: the attacker inserts a large amount of invalid information into the index (found in P2P file sharing systems) to prevent users from finding the correct resource

Methodology/Findings

- Nugache

- used for spam email

- data collection

- distributed via LimeWire

- Made by a 19-year-old!

- Used an emulator infected with Nugache

- observed connections to computers in the wild

- Observed mostly > 6 links, but up to 30 links in some cases

- This indicated scale free

- Cleaning highly connected nodes can have a high impact.

Power law link distribution

Bandwidth as a metric

- Tested on two botnets, focus on DDoS

- Probed bandwidth on random nodes

- Used to find an average, with the method described under the bandwidth metric

- Botnet size of 50k = 1 Gbps total bandwidth

- Botnet 1: 53.3004 Kbps, Botnet 2: 34.8164 Kbps

- Not adjusted for diurnal changes = roughly the same available bandwidth

- Adjusted for diurnal changes = Botnet 2 has 50% of the capacity of Botnet 1

Conclusion

- Random network models give botnets considerable resilience

- Both direct Erdös-Rényi models and structured P2P systems

- Resists both random and targeted responses

- Targeted removals on scale free botnets offer the best response

- New metrics have been included, but need to be refined in future work

Thoughts on the article

Sources

- http://faculty.cs.tamu.edu/guofei/paper/Dagon_acsac07_botax.pdf

- http://www.network-science.org/fig_complex_networks_powerlaw_scalefree_node_degree_distribution.png