Data Analysis:

The Essential Bits

May IHE Lunch and Learn

Branden DuPont, Data Analyst

80% of Data Analysis is Cleaning & Preparing Data

-Not just the first step, iterative

- Encompasses a truly breathtaking amount of tasks

- Data viz and statistical modeling (e.g. hypothesis testing) most focused on in teaching, represents 20% of time

- Giving you a comprehensive overview of concepts/tools could easily fill 1-2 graduate courses.

- Tool agnostic

Concepts via Hadley Wickham

- Tidy Data

- Split-Apply-Combine Strategy

Current Work and Research

Technical Work:

- Visualization (spatial emphasis)

- Reproducible, data pipelines (Apache Airflow)

- ML and analytics for social good

- Tools: Python, SQL, Tableau, QGIS

- Jekyll sites

Projects:

- Building tools to monitor and identify exploitative landlords: retaliatory evictions

- Analytics for Local Prosecutor Offices

- CJC Dashboard

- Urban Informatics: Near West Side Partners

References

- Joins.

- Split-Apply-Combine: pandas

- Code vs GUI tools

- Split-Apply-Combine Paper by Hadley Wickham

- Tidy Data Paper - Hadley Wickham

- Spatial Joins-postgis

- Tableau Spatial Join

- Intro to maps in Tableau

- Choropleth maps - data classification

- When to use choropleth maps

- Color in Data Viz

- R for Data Science *The best*

Tidy Data

Has Anyone Ever Taught You How Data Should Be Structured for Analysis?

Usually:

-

Asked to create presentation spreadsheet table

-

Encounter one in the wild

-

Tidy, beautiful datasets in class

-

Data are usually one table per analysis

Messy Datasets Cause Real Pain.

Source: xkcd

“[Of statistics/data analysis] Total disconnect between what people need to actually understand data and what was being taught.” - Hadley Wickam

Tidy Data

1. Each variable forms a column.

2. Each observation forms a row.

3. Each type of observational unit forms a table.

Hadley Wickham - "Like families, tidy datasets are all alike but every messy dataset is messy in its own way."

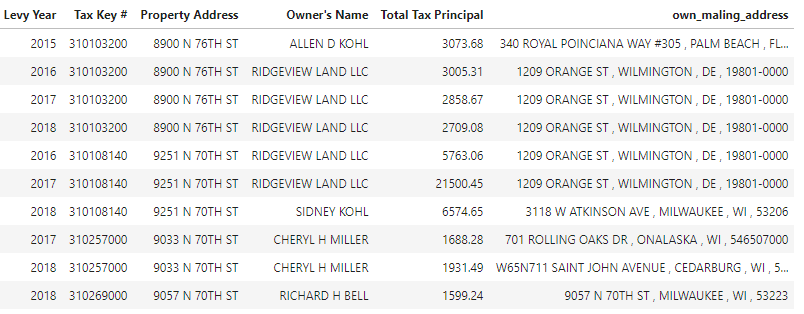

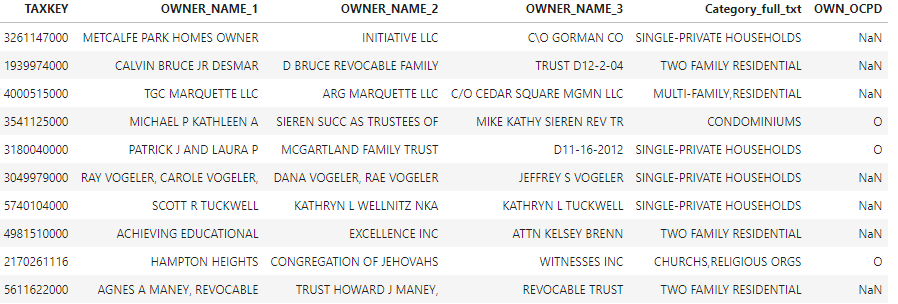

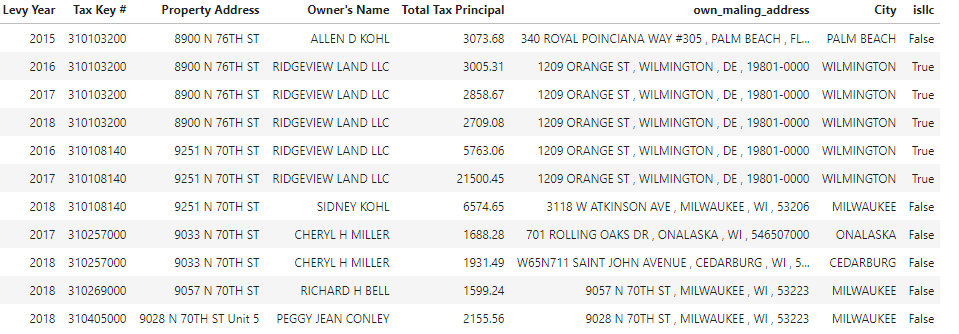

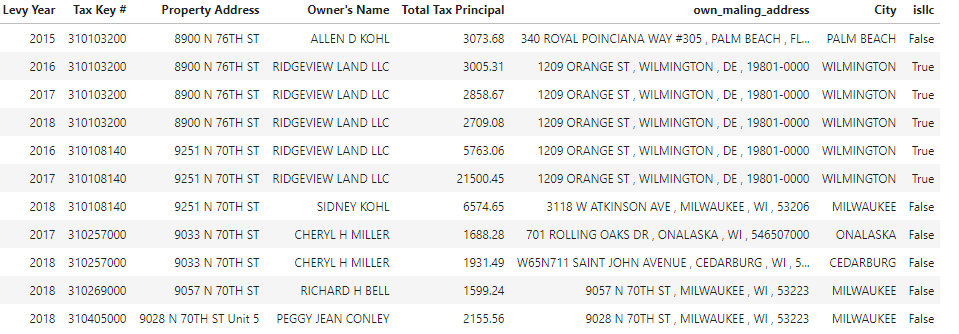

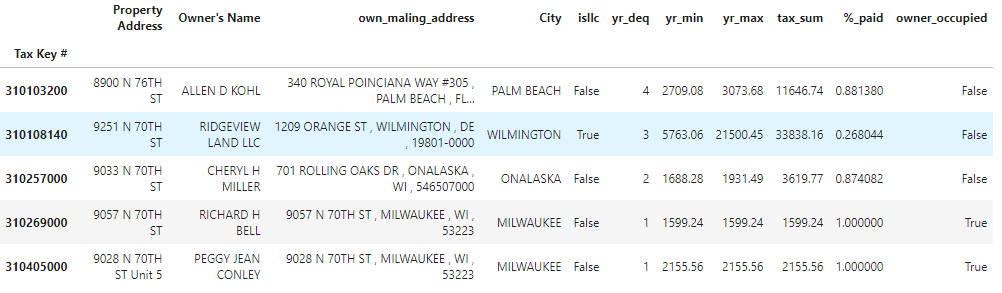

Example: Enforcement of Tax Delinquent Properties.

- Goal: City of Milwaukee wants to foreclose on properties who are not paying property taxes.

- Does not want to target poor homeowners

- Has limited resources:

- landlords who have been delinquent for more than 3 years

- Wants to target landlords who are not making any payments. (% of minimum principal is >= 75% of max principal)

- companies vs small landlords/homeowners (LLC, Company)

- non-local ownership

Data Tidying for Analysis

- What seems Tidy may change based on your data analytic question.

- Administrative data is often tidy from the perspective of the agency, but not a researcher or analyst

- Goal: create analysis ready table

- This is abstract, so we will walk through a real example

Tax Delinquency

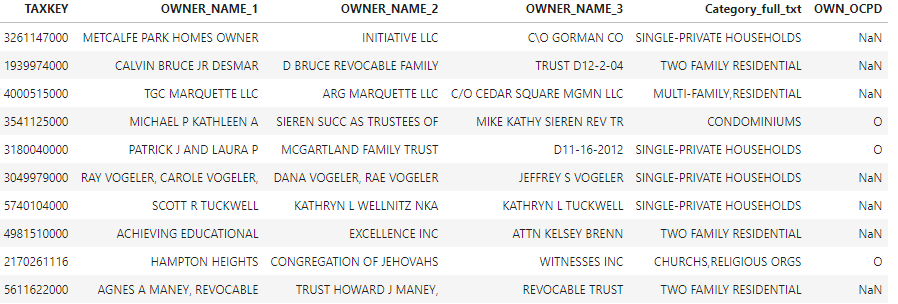

MPROP

Each Variable Does Not Form A Column

Each Observation is Not A Row

Each Observational Unit is Not a Table: variables are columns

Each Observational Unit is Not a Table

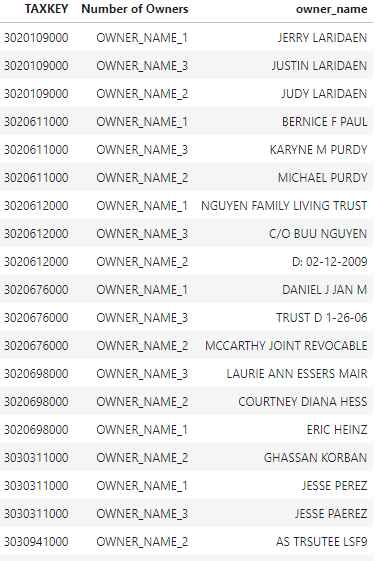

Split Apply Combine Strategy

Intro to Split-Apply-Combine

- Many data analysis problems involve the application of a split-apply-combine strategy

- Break up a big problem into manageable pieces, operate on each piece independently and then put all the pieces back together

- Various tools use this strategy: Pivot tables in Excel, Groupby in SQL and Pandas, LOD in Tableau, and plyr in R

- It's a type of problem that crops up everywhere

- Understanding the pattern allows for more sophisticated data analysis and variable creation

Diagram of Split-Apply-Combine

Split Apply Combine Example

- How many years has this parcel been tax delinquent.

- Split (by year), apply count for each row, and combine into one observation per row

- What is the minimum taxes owed per year?

- What is the maximum taxes owed per year?

Split Apply Combine Example

Split Apply Combine Example

- Little bit more complex: How many parcels are tax delinquent for more than three years and have the same owner each of those years?

- Know problem landlords will change their LLC after a year to avoid identification. Group and sort to get potential LL names.

Thanks!

Questions?

Why Learn Python (or R)?

(Besides the feeling of superiority it gives you.)

Seriously, Why Code?

-

You only need one: "Python as Super Glue for the Modern Scientific Workflow" Data analysis requires you to port data to and from different programs and tools. Python can (almost) do it all.

- Reproducibility - anyone can recreate your work to get the same result

- Reuse - data analysis involves solving many similar sub-problems. A solution for one issue can be reused for another. Also loops.

- Automation - need to rerun the analysis multiple times/production.

- Free - SAS, SPSS, ArcGis are expensive.

- Can scale - Excel and ArcGis do not handle large data well/at all. Shapefile has a 2 GB limit. Excel's maximum rows: 1,048,576

- Collaboration - easier to assign sub-tasks and track progress.

- Required by Employers and Academics - look at any job posting

- Learn programming - learning to code opens the door to other tasks: build websites, web scraping.

Mike Bostock on Efficiency vs Generality:

" Despite these challenges, we’re still writing code, for myriad applications, more than ever before. Why? Are we masochists? (Maybe.) Are we unable to change? (In part.) Is there no better solution?

In general — and that is the critical qualifier — no. Code is often the best tool we have because it is the most general tool we have; code has almost unlimited expressiveness. Alternatives to code, as well as higher-level programming interfaces and languages, do well in specific domains. But these alternatives must sacrifice generality to offer greater efficiency within their domain."

Chris Moffit on Escaping Excel Hell:

Excel Hell: "A place of torment and misery caused by using Excel as your primary data manipulation tool."