Sensor Data

Introduction

Sensors are devices that detect or measure a physical property of the environment in which they reside

For most known physical properties there exist devices that can measure them

Some examples of quantifiable properties include light, radiation, sound, pull of gravity and magnetic field

For each of these properties we can measure not only their intensity but also their direction of application

We can use these measurements to gain information about the state of our environment

The goal of sensor data processing is to take this information and transform it into a useful representation

In the case of autonomous navigation, we use this information to try and accurately predict the physical location and orientation of our vehicle

Sensor Types

There are two primary ways to sense, directly and remotely

Direct sensing involves coming into direct contact with the object you are measuring

Some examples of direct sensors are the voltmeters and strain gauges you will be using in ENAE362

Indirect sensing, also known as remote sensing, involves measuring a property of an object or environment from afar, with no direct contact

There are two main methods of remote sensing, passive and active

Passive sensing is the act of detecting EXISTING energy that is reflected off or emitted from the environment, whereas active sensing is the act of detecting energy that the system itself EMITS and that reflects off its environment

In essence, an active sensor supplies its own medium of information

Passive Sensors

Let's first take a look at some examples of passive sensors

The passive sensors we are all most familiar with are likely our own

Our eyes and ears, among several other complex sensors, feed us information about our environment

From day to day, they help guide us, allowing us to learn and react to stimuli and even communicate with one another

Without them we would lack the means to perceive our environment

# A biological example of a passive visual sensor is the human
# eye. The human eye senses visual information by focusing the
# light emitted or reflected by our environment through a lens,
# located at the front of the eye, onto photoreceptors that
# make up the inner nerve lining of the eye, known as the
# retina. The optic nerve that connects the retina to the brain
# sends this information to the visual cortex to be interpreted.

# The passive sensor in this example is specifically the
# retina. The other components of the eye, such as the lens,
# are there either to protect the eye or aid the process of
# sensing.

# The end of the optic nerve that reads electrochemical
# impulses from the retina could be considered an active
# sensor.

# Man-made inventions often take inspiration from the fruits
# of evolution. An example of a man-made passive visual sensor
# is the camera. Much like our eyes, the modern camera focuses
# light through a lens onto a sensor located inside the camera.
# Again, the camera itself is not a sensor but is composed of
# several components, such as a lens or a viewfinder, that make
# the system more usable. The sensor inside the camera is the
# ACTUAL sensor.

# It is important to make this distinction now to avoid
# confusion later on. Colloquially, industries refer to the
# entire sensor system, rather than just the sensor component,
# as the sensor. This is for simplicity of communication, and
# I will use the term to describe both. We'll see this more
# commonly in the case of active "sensors".

# A biological example of a passive auditory sensor is the ear.
# Sound waves from the environment travel into the ear through
# the ear canal and first reach the eardrum. As the eardrum
# vibrates, it sets the ossicular chain in motion. The ossicular
# chain transfers these vibrations to the inner ear, where the
# resulting movement of fluid in the cochlea excites the hair
# cells lining its inner wall. The movements of these hair
# cells are translated to electrical signals and read by
# auditory neurons.

# Can you guess which part of this is the actual sensor?

# Technically, you could argue that the eardrum and ossicular
# chain along with the hair cells are all sensors, since they
# each respond to a physical property. The definition is broad
# enough to encompass them all, and I would agree that each
# qualifies as at least a direct sensor. Together, these
# components make up the remote auditory "sensor" of the ear.

# For a visualization of how human hearing works, watch the
# video linked in the references. Words clearly don't do the
# concept justice.

# A microphone is an example of a man-made passive auditory
# sensor (whew what a mouthful). A microphone is an instrument
# for translating vibrations into electric signals.

# One type of microphone is a dynamic microphone. These
# microphones are composed of a thin membrane connected to a
# metal coil housed inside a magnet. When the membrane comes
# into contact with vibrations in the air it shifts back and
# forth, moving the metal coil with it.

# For those of you who actually did your physics labs, what
# happens when you move a conductive coil through a magnetic
# field?

# That's right! It produces an electric current. By connecting
# the ends of this coiled wire to a computer or chip we can
# record the effect of sound as electric signals.

Active Sensors

As mentioned previously, the difference between passive and active sensors is that active sensors supply their own medium of information

Based on this logic, we can convert a passive sensor into an active one by introducing an information source

In the last section, we described a camera being used as a passive sensor

A camera can also be used as an active sensor when supplying its own light source

You may know this as flash photography

# Humans have no innate active sensors (that I can think of),
# but there are other members of the animal kingdom that do.
# The anglerfish, best known for its appearance in Finding
# Nemo, gets its name from the spine that protrudes from atop
# its head. On the other end of this spine is the esca, the
# bioluminescent bulb the anglerfish uses as a lure.

# The anglerfish commonly inhabits the bathypelagic zone of
# the open ocean at depths up to at least 2000 m below the
# surface. Little to no light reaches this layer of the ocean,
# so the esca doubles as a source of light the anglerfish uses
# to augment its sight.

# In a way, the anglerfish's visual system is an active sensor
# system. It supplies its own light source, the property by
# which the fish's eyes perceive information.

# A more popular example of active sensing in the animal
# kingdom is echolocation. Echolocation is the act of
# perceiving the location of objects by reflected sound.
# Bats use screeches to determine the distance of obstacles
# and prey while in flight based on the time delay of the
# reflected sound. Dolphins use clicks in a similar fashion.

# The sonic distance sensor of our GoPiGo functions by the
# same basic principle, measuring the time between when it
# sends out a sound wave and when it receives the same sound
# wave reflected back to it. Although the sensor is
# unidirectional and incorporates far less complex methods
# than the auditory processing used by bats and dolphins, it
# supplies us with useful information nonetheless.

Distance Data

Hardware

One form of raw data we can acquire from sensors is distance data

This data can be gathered using different kinds of signals, such as sound or various wavelengths of the electromagnetic spectrum

# Some examples of these distance sensors are:
# RADAR - uses reflected radio waves
# LiDAR - uses reflected laser light (often near-infrared)
# Sonic Distance Sensor - uses reflected sound waves

# Much like in echolocation, these active sensors rely on the
# time delay between sending out a signal and receiving the
# reflected signal back from the environment. The signal
# travels to the object and back, so by multiplying half the
# time delay by the known speed of the signal, we can get a
# pretty accurate estimate of an object's distance and, from
# successive readings, a general idea of its velocity toward
# or away from the sensor.
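The time-delay arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration, not the code any particular sensor runs: the function names are hypothetical, and the default signal speed assumes sound in dry air at roughly 20 °C.

```python
# Assumed value: approximate speed of sound in dry air at about 20 degrees C.
SPEED_OF_SOUND_M_S = 343.0


def echo_distance_m(round_trip_s, signal_speed_m_s=SPEED_OF_SOUND_M_S):
    """One-way distance to the reflector from a round-trip time delay."""
    if round_trip_s < 0:
        raise ValueError("time delay cannot be negative")
    # The signal covers the distance twice (out and back), so halve it.
    return signal_speed_m_s * round_trip_s / 2.0


def radial_velocity_m_s(first_distance_m, second_distance_m, elapsed_s):
    """Average velocity along the line of sight between two readings.

    Negative values mean the object is getting closer to the sensor.
    """
    if elapsed_s <= 0:
        raise ValueError("elapsed time must be positive")
    return (second_distance_m - first_distance_m) / elapsed_s


if __name__ == "__main__":
    print(echo_distance_m(0.01))               # distance for a 10 ms echo
    print(radial_velocity_m_s(2.0, 1.5, 0.5))  # object approaching
```

For RADAR or LiDAR the same functions apply with the speed of light passed as `signal_speed_m_s`. Only the component of motion toward or away from the sensor shows up in the velocity estimate.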

# LiDAR - uses reflected laser light (often near-infrared)

# With targeted measurements we can measure the distance of an
# object located at a specific angle from our vantage point.
# With quick successive measurements we can sample a greater
# number of angle locations within a given window of time.
# By combining these two capabilities a LiDAR can actually map
# out the state of an environment, rendering a 3-D image from
# the distance of obstacles seen from a single vantage point.

# Google's self-driving cars rely on this piece of hardware to
# map out and navigate their environment. LiDARs are often used
# in the scientific community due to their precision, but are
# often too expensive to be applicable commercially.
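To make the mapping idea concrete, here is a small sketch that turns a sweep of (angle, distance) readings into points in the sensor's own coordinate frame. It is a 2-D simplification written for illustration; the function name and the input layout are assumptions, not part of any LiDAR vendor's API. A 3-D scan would add an elevation angle to each reading.

```python
import math


def scan_to_points(scan):
    """Convert (angle_deg, distance) readings into (x, y) points.

    Angles are measured counterclockwise from the sensor's forward
    axis, and the sensor sits at the origin.
    """
    points = []
    for angle_deg, distance in scan:
        theta = math.radians(angle_deg)
        points.append((distance * math.cos(theta),
                       distance * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Hypothetical sweep: three readings taken 45 degrees apart.
    sweep = [(0, 2.0), (45, 1.5), (90, 1.0)]
    for x, y in scan_to_points(sweep):
        print(f"x = {x:.2f}, y = {y:.2f}")
```

Plotting the resulting points gives an outline of the obstacles visible from that single vantage point.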

# Sonic Distance Sensor - uses reflected sound waves

# The sensor we'll be using in class is the cheapest of the
# three. While LiDARs will easily cost several thousands of
# dollars, an SDS costs only ~$20. It's much more feasible to
# use in the classroom as we can barely trust our juniors
# in engineering to properly clothe themselves.

# The downside is that the SDS is much less precise than both
# the LiDAR and RADAR as it relies on sound waves, which are
# both slower and influenced by many more environmental
# factors than electromagnetic waves. At close distances and
# slow enough speeds, however, the SDS is precise enough to
# provide the sensing capabilities our GoPiGos require.

Representation

When we receive a reflected signal back from the environment, what we are really measuring is the time delay

Usually this is translated into a distance measurement for us by the accompanying API (Application Programming Interface) of the hardware

The API we will be using for our GoPiGos does this for us too

It, of course, makes assumptions about some properties of the environment, such as temperature, to estimate the speed of sound
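To get a feel for how much the temperature assumption matters, the sketch below uses the common linear approximation for the speed of sound in dry air, about 331.3 + 0.606·T m/s with T in degrees Celsius. The function names are hypothetical, and the idea that the API assumes one fixed temperature is an assumption made for illustration, not a documented detail of the GoPiGo library.

```python
def speed_of_sound_m_s(temp_c):
    """Approximate speed of sound in dry air at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def corrected_distance_mm(reported_mm, assumed_temp_c, actual_temp_c):
    """Rescale a reading computed with the wrong air temperature.

    The measured time delay is fixed, so distance scales linearly
    with the speed of sound used to convert it.
    """
    ratio = speed_of_sound_m_s(actual_temp_c) / speed_of_sound_m_s(assumed_temp_c)
    return reported_mm * ratio


if __name__ == "__main__":
    # Hypothetical case: the conversion assumed 20 C but the room is at 0 C.
    print(corrected_distance_mm(1000.0, assumed_temp_c=20.0, actual_temp_c=0.0))
```

A 20 degree mismatch shifts the result by a few percent, which is small at classroom distances but worth knowing about when readings look slightly off.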

# The data that we pull from the API can either be in mm or in

# first we have a few modules to import
import time
import easygopigo3 as easy

# we must create a GoPiGo
gpg = easy.EasyGoPiGo3()

# and initialize a distance sensor object from the GoPiGo
my_distance_sensor = gpg.init_distance_sensor()

# from there we simply call the read function from that object
my_distance_sensor.read_mm()

# the call above returns the current distance reading from the
# SDS, in millimeters

Sensory Needs

In Humans

From birth, our sensors help us perceive our environment and even communicate with one another

Often we take them for granted, but without them even the simplest tasks would be significantly more difficult

# As an exercise, imagine learning Dynamics from Dr. Paley

# using only your ears. Don't actually do this. Just imagine.

# So can you?

# Difficult, right?

# How about trying to sit through an Electronics lecture

# without being able to hear Dr. Winkelmann?

# You'd be spared from his classwide roasts, but I don't think

# the tradeoff would be worth it

# The key takeaway here is that you rely on your sensors

# To function as an autonomous being you need to be able to

# sense your environment. Yes, you could survive without one or

# two of your senses, but navigating your environment would

# become increasingly difficult as a result

In Machines

In the same way we rely on our sensors, so do machines

For a system to autonomously navigate through its environment, it must sense it

A system without sensors can neither locate its goal nor perceive obstacles it must avoid

Heck, a system without any sensors can't even predict its own state

Is an isolated computer chip in a tree going to know it's in a tree?

No! It's not even plugged in!

All jokes aside, a system needs sensors

Even systems that we intend to rely on human input often require sensors to give their pilot feedback

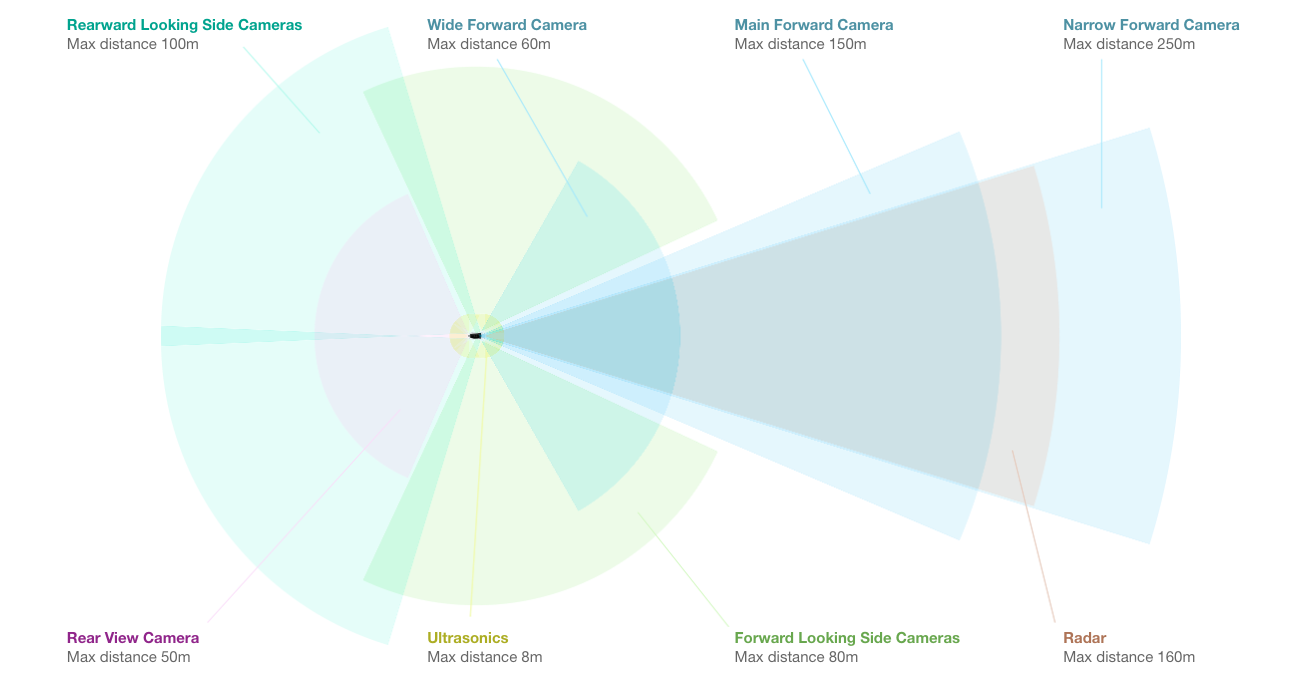

# Typically, greater autonomy requires greater sensing ability

# Take Tesla, for example. Its cars, which have autopilot

# capabilities, rely on 8 surround cameras that provide

# coverage at various ranges and angles, as well as a single

# forward-facing radar and multiple ultrasonic sensors

# That's a lot of sensors! Compare that to your average sedan,

# which is lucky to have a single rear camera and some

# ultrasonics to keep you from backing into your neighbor's

# minivan. I'd sooner drive a boulder than trust it to

# autopilot me anywhere. Of course, some of the sensors on

# a Tesla are redundant to provide more system reliability,

# but the point remains: greater autonomy requires greater

# sensing ability!

# It's important to keep in mind that, although greater

# capacity to perceive one's environment is critical to

# building an autonomous system, it's not all that matters.

# We must also interpret this data in the right way to

# draw meaningful conclusions about one's environment. Sensor

# data processing is the art of doing just that. By

# transforming the data absorbed from the environment into a

# usable form, we can more easily predict the state of our

# environment as well as the state of our vehicle within the

# environment as we navigate from one state to another.

# Just as paint is much more powerful in the hands of a

# trained artist, our sensors are made much more capable

# through the proper processing techniques. Without them, our

# sensor data is useless!
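As a small taste of what such processing can look like, here is a minimal sketch of one simple technique, a sliding median filter, applied to distance readings in mm. The readings are made-up values with one obvious outlier, and the function name and window size are assumptions chosen for illustration.

```python
from statistics import median


def median_filter(readings_mm, window=3):
    """Replace each reading with the median of the most recent `window` readings."""
    filtered = []
    for i in range(len(readings_mm)):
        # use fewer samples at the start, before a full window is available
        start = max(0, i - window + 1)
        filtered.append(median(readings_mm[start:i + 1]))
    return filtered


# hypothetical raw readings: a steady ~500 mm target with one spurious spike
raw_mm = [500, 502, 2000, 499, 501, 498]
print(median_filter(raw_mm))
```

The spike at 2000 mm never reaches the filtered output, because a single bad sample cannot be the median of three. The price is a short lag, since each output depends on the previous readings.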