Speaking of cool Web stuff...

The Web Speech APIs

Here's the idea...

- Efforts to bring speech to the Web / Current state of standardization

- Code examples / walkthrough of APIs (with demos)

- Discussion of what's good and bad about the APIs

- What's next?

Believe it or not...

There's a history of us trying to make machines that 'speak' or 'listen' going back to the 1700s

Bell Labs demonstrated electronic voice synthesis (the Voder) at the World's Fair in 1939

If you find this interesting, I wrote a whole piece on The History of Speech

- 1991: The Web

- October 1, 1994: W3C Founded

- December 15, 1994: Netscape Released

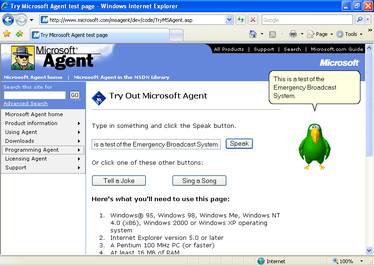

Microsoft didn't really enter the picture in a serious way until ~1996

<object ID="AgentControl" width="0" height="0" CLASSID="clsid:D45FD31B-5C6E-11D1-9EC1-00C04FD7081F" CODEBASE="http://server/path/msagent.exe#VERSION=2,0,0,0">

</object>

But wow.... 1997!!!

That got a lot of people thinking about speech on the Web...

1998 CSS2 Aural Stylesheets

March 1999

AT&T Corporation, IBM, Lucent, and Motorola formed the VoiceXML Forum

Handed over to W3C in 2000

Ask Jeeves, AT&T, Avaya, BT, Canon, Cisco, France Telecom, General Magic, Hitachi, HP, IBM, isSound, Intel, Locus Dialogue, Lucent, Microsoft, Mitre, Motorola, Nokia, Nortel, Nuance, Philips, PipeBeach, SpeechWorks, Sun, Telecom Italia, TellMe.com, and Unisys

OMG!

Like HTML, but for... wait... I'm confused.

For the next decade...

Standards

2010: Speech XG Community Group

They got a lot more than they bargained for...

But more than that, they got actual competing draft proposals from Google, Microsoft, Mozilla, and Voxeo

It didn't.

2012: Another Community Group!

OK... so let's talk about where we are now...

The Web: Present Day


- Implementations are buggy / inconsistent

- Implementations and bugs are low priority

- The APIs aren't super great

- There is no W3C standard, official draft or WG

But wait...

Let's go to the details!

window.speechSynthesis

A top-level object

speechSynthesis.speak(...)

SpeechSynthesisUtterance

An utterance is the thing that the synthesizer "speaks"

    speechSynthesis.speak(
      new SpeechSynthesisUtterance(
        `Hello Darkness, my old friend`
      )
    )

Slides.com is no fun, examples are blocked :(

Now, speak!
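
Since implementations are inconsistent, it can help to feature-detect before speaking. Here's a minimal sketch of that idea. `speakText` and its `options` / `env` parameters are hypothetical names made up for this example (they are not part of the API); only `speechSynthesis.speak()`, `SpeechSynthesisUtterance`, and the utterance's `rate`, `pitch`, and `lang` properties come from the Web Speech API itself.

```javascript
// Hypothetical helper (not part of the Web Speech API): queues `text` for
// speaking if synthesis is available and returns whether it was queued.
// `env` defaults to the global object; it is a parameter only so the
// helper can be exercised outside a browser.
function speakText(text, options = {}, env = globalThis) {
  const synth = env.speechSynthesis;
  const Utterance = env.SpeechSynthesisUtterance;

  // Feature-detect: support varies by browser and is absent in Node.
  if (!synth || typeof Utterance !== "function") {
    return false;
  }

  const utterance = new Utterance(text);

  // Only override the browser defaults when the caller asked for it.
  if (options.rate !== undefined) utterance.rate = options.rate;
  if (options.pitch !== undefined) utterance.pitch = options.pitch;
  if (options.lang !== undefined) utterance.lang = options.lang;

  synth.speak(utterance);
  return true;
}

// In a supporting browser this queues the utterance; elsewhere it is a no-op.
speakText("Hello Darkness, my old friend", { rate: 0.9 });
```

Some browsers may also refuse to speak until the user has interacted with the page, so calling this from a click handler is the safer bet.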