Entomology

A collection of the toughest bugs I encountered

12x document

Very small chunks + TLS = inflated documents

How it began

Guess what? It took 3 days to figure out.

Sigma: "The /result request times out in pre-production. Can you look into it?"

Me: "Sure, just a sec."

New feature released on the pre-prod server.

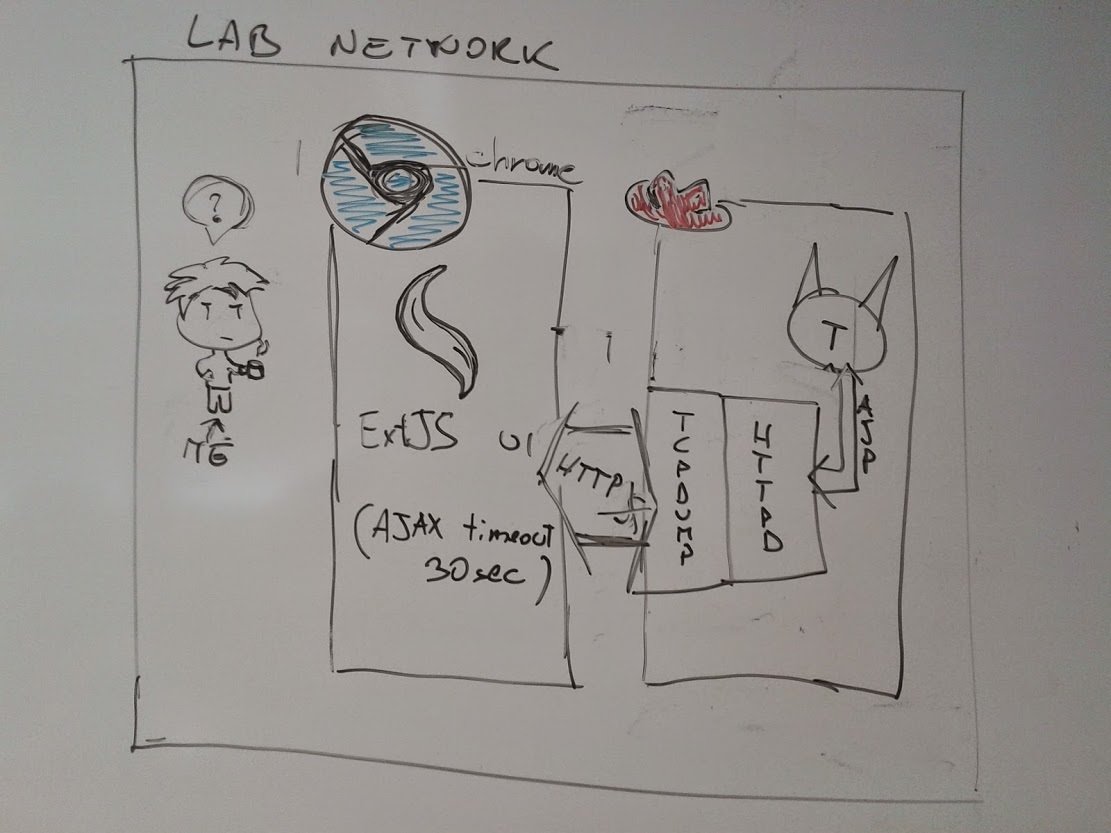

Setup

Chrome DevTools

Disabling timeout:

Transferred = 12x body size. WTF?!?!

(Ignore the weird time/latency value, I GIMPed the image because the real screenshot was lost)

First guess

We don't know what sits in the middle here, but we know it sometimes causes problems.

Check

curl from a machine on the same network, with tcpdump to measure. Still 12x.

Integration environment?

No timeouts, but still 12x! The link there is fast enough to transfer all that data before the timeout.

Local machine?

Only 2x! No TLS, no gzip, no AJP here.

tcpdump traffic (no TLS)

WTF is this?

Chunking fault?

HTTP/1.1 200 OK

Server: Apache-Coyote/1.1

Content-Type: application/json;charset=UTF-8

Transfer-Encoding: chunked

(.. snip head...)

c

]],"687":[[0

2

,2

4

,234

4

],[1

2

,3

4

,234

(.. snip tail...)
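A rough way to see what this framing costs is to count bytes. The sketch below re-frames the fragments visible in the capture using the standard HTTP/1.1 chunk layout (hex size, CRLF, payload, CRLF). The helper name `chunk_frame` is made up for illustration, and only the fragments shown above are used, not the real document.

```python
def chunk_frame(payload: bytes) -> bytes:
    """Frame one HTTP/1.1 chunk: hex size, CRLF, payload, CRLF."""
    return b"%x\r\n" % len(payload) + payload + b"\r\n"


# Fragments copied from the capture above (the snipped head and tail are not included).
fragments = [b']],"687":[[0', b",2", b",234", b"],[1", b",3", b",234"]

payload_bytes = sum(len(f) for f in fragments)
wire_bytes = sum(len(chunk_frame(f)) for f in fragments)

for f in fragments:
    print(f"{len(f):2d} payload bytes -> {len(chunk_frame(f)):2d} bytes on the wire")
print(f"total: {payload_bytes} -> {wire_bytes} bytes ({wire_bytes / payload_bytes:.2f}x)")
```

For these six fragments the framing alone roughly doubles the size, which is in line with the 2x seen on the local machine.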

Some investigations...

- Tried other resources: some were inflated, but not as much

- Tomcat seems to chunk anything that exceeds its buffers

- This resource relies heavily on a custom Jackson serializer that writes many numbers into many arrays, one at a time

An application error?

Jackson properties

And Tomcat writes a chunk on every flush.

Document transformations?

chunking, AJP, gzip, TLS

Conclusions

- Jackson writes an integer (+2~13 bytes -> 2~13 bytes)

- Tomcat chunks it (+~5 bytes -> 7~18 bytes)

- httpd gzips it (-0~4 bytes -> 7~14 bytes)

- httpd wraps each frame in a TLS record (+~32 bytes -> 39~46 bytes)

Some messages contain strings that httpd can actually compress, so the ratio is sometimes better... in the end, ~12x overall

Started with 2 bytes, ended with 39 (worst case)
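The byte accounting above can be replayed as a small sketch. The per-stage deltas are the approximate figures from the list; applying the gzip saving only to the larger end of the range is an assumption made to match the 7~14 figure, and the name `running_sizes` is hypothetical.

```python
# Per-stage byte deltas as (delta for smallest token, delta for largest token).
# Figures are the approximate ones from the list above, not measurements.
STAGES = [
    ("Jackson writes an integer", (2, 13)),
    ("Tomcat chunk framing", (5, 5)),
    ("httpd gzip", (0, -4)),  # assumption: only the larger tokens shrink
    ("TLS record overhead", (32, 32)),
]


def running_sizes(stages):
    """Return (stage name, low size, high size) after each stage is applied."""
    low = high = 0
    sizes = []
    for name, (d_low, d_high) in stages:
        low += d_low
        high += d_high
        sizes.append((name, low, high))
    return sizes


sizes = running_sizes(STAGES)
for name, low, high in sizes:
    print(f"{name:28s} {low:3d} ~ {high:3d} bytes")

first_low, first_high = sizes[0][1], sizes[0][2]
last_low, last_high = sizes[-1][1], sizes[-1][2]
print(f"smallest token: {last_low / first_low:.1f}x, largest token: {last_high / first_high:.1f}x")
```

The smallest tokens inflate far more than the largest ones, so an overall figure around 12x for a document made mostly of short numbers is plausible.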

Happy ending

Now gzip can compress!

Fix: disabled Jackson's FLUSH_PASSED_TO_STREAM feature
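Why buffering helps gzip can be sketched with Python's `zlib`. Here, forcing a sync flush after every tiny write stands in for a compressor that has to emit output for each small chunk it receives. Whether httpd behaves exactly like this is an assumption, and the sample data is synthetic, not the real /result payload.

```python
import zlib

GZIP_WBITS = 31  # zlib window bits value that selects the gzip container


def gzip_flushed_per_write(pieces):
    """Compress each piece and force a sync flush after it (one flush per write)."""
    comp = zlib.compressobj(wbits=GZIP_WBITS)
    out = bytearray()
    for piece in pieces:
        out += comp.compress(piece)
        out += comp.flush(zlib.Z_SYNC_FLUSH)
    out += comp.flush()
    return bytes(out)


def gzip_buffered(pieces):
    """Compress the whole body in one go (a single flush at the end)."""
    comp = zlib.compressobj(wbits=GZIP_WBITS)
    return comp.compress(b"".join(pieces)) + comp.flush()


# Synthetic stand-in for "a lot of numbers in a lot of arrays".
pieces = [b",%d" % (i % 500) for i in range(5000)]
raw = b"".join(pieces)

flushed = gzip_flushed_per_write(pieces)
buffered = gzip_buffered(pieces)

print(f"raw:               {len(raw)} bytes")
print(f"flush every write: {len(flushed)} bytes")
print(f"single flush:      {len(buffered)} bytes")
```

Both outputs decompress to the same bytes; only the flushing pattern differs.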