Understanding Python Coroutines: Learning Asynchronous Programming the Happy Way

Jason

20181013 Taichung.py

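"Coroutines are the least written-about, most obscure, and apparently useless feature of Python."

- David Beazley

But "hard to understand" is surely a story from before 2016, right? Using coroutines as the asynchronous strategy in I/O-bound programs is now extremely popular (for example, web crawlers and database ETL jobs).

So today I will start from coroutines themselves and try to explain them as simply as I can.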

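Because the goal is to make a deep topic approachable, this talk:

- covers the concept of coroutines
- covers the syntax that creates coroutines
- does not go deep into Python asyncio
- includes a live demo (not live coding)

If you don't follow what I share later, that probably means I didn't explain it simply enough.

So even if you really didn't get it, please nod anyway. Mwah!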

Every story needs a beginning...

A book recommendation

And now, let's begin

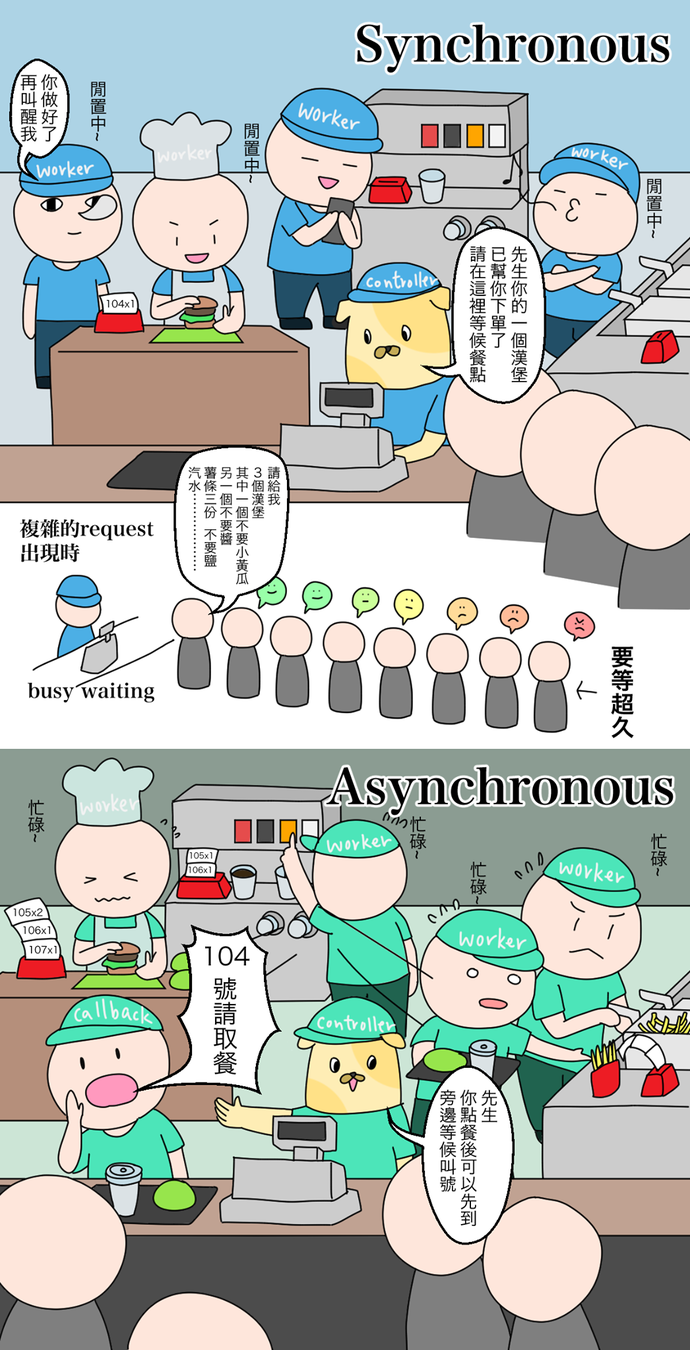

Asynchronous strategies

- Blocking, multi-process
- Blocking, multi-process and multi-threaded
- Non-blocking, event-driven
- Non-blocking, coroutine

By Chetan Giridhar, PyCon India 2014

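CPython's GIL is a reasonable fit for I/O-bound tasks, but...

| Operation | Actual CPU time | Scaled to human time |
|---|---|---|
| Execute one instruction | 0.38 ns | 1 s |
| L1 cache | 0.5 ns | 1.3 s |
| L2 cache | 7 ns | 18.2 s |
| Recursive mutex lock | 25 ns | 1 min 5 s |
| Context switch / system call | 1.5 µs | 1 h 5 min |
| Read 1 MB from RAM | 250 µs | 7.5 days |
| Read 1 MB from SSD | 1 ms | 1 month |
| Network | 150 ms | 12.5 years |

The figures above are modeled on a 2.6 GHz CPU.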
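The "human time" column can be reproduced with a little arithmetic: treat one instruction (0.38 ns) as one second and rescale everything else by the same factor. The sketch below is only an illustration of that scaling; the function name `human_seconds` and the selection of rows are my own choices, not part of the original table's source.

```python
# One instruction (0.38 ns at roughly 2.6 GHz) is treated as one "human" second.
CYCLE_NS = 0.38

latencies_ns = {
    "L1 cache": 0.5,
    "Context switch / system call": 1_500,
    "Read 1 MB from SSD": 1_000_000,
    "Network": 150_000_000,
}

def human_seconds(latency_ns: float) -> float:
    """Rescale a latency so that 0.38 ns reads as 1 second."""
    return latency_ns / CYCLE_NS

for name, ns in latencies_ns.items():
    seconds = human_seconds(ns)
    print(f"{name}: {seconds:,.1f} s ({seconds / 86_400:,.2f} days)")

# A 150 ms network round trip, expressed in years on the human scale.
network_years = human_seconds(150_000_000) / (365 * 86_400)
print(f"Network: about {network_years:.1f} years")
```

Seen this way, a program that blocks on the network is like a person who stops everything for more than a decade to wait for one letter.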


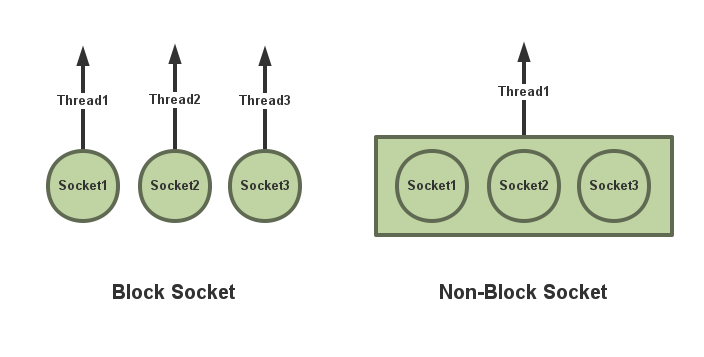

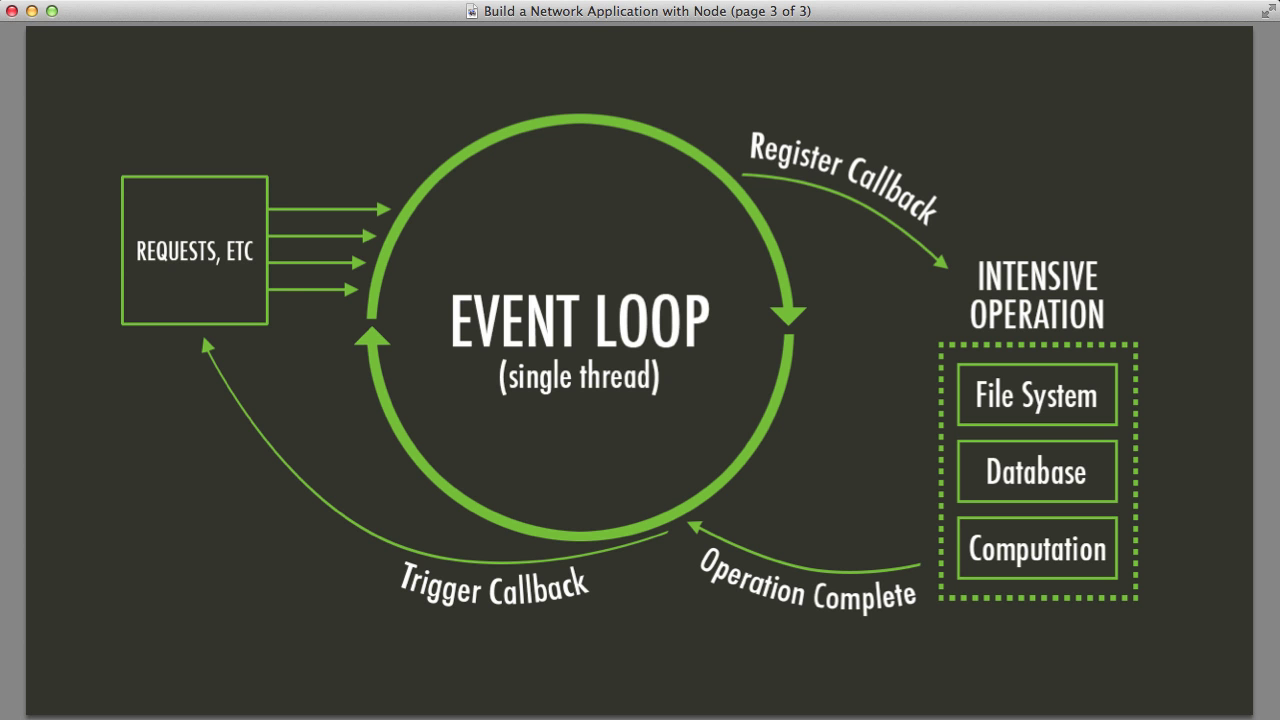

Non-blocking, event-driven

- Non-blocking I/O
- Callback
- Event loop

Non-blocking, event-driven

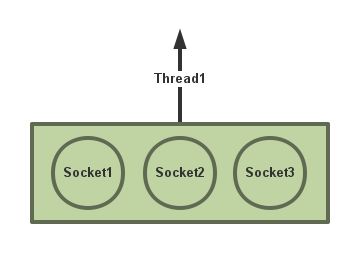

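Non-blocking I/O

- Asynchronous calls
- Event-driven
- Socket multiplexing
  - Unix: select, poll, epoll
  - Windows, Solaris: IOCP
  - BSD/OS X: kqueue

Note: from the I/O point of view, multiplexing is in fact still sequential.

Note: non-blocking is not necessarily asynchronous, but asynchronous must be non-blocking.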
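As a rough illustration of socket multiplexing, the sketch below registers two non-blocking sockets with Python's standard `selectors` module and asks which of them is ready. The `socket.socketpair()` connections and the `conn-0` / `conn-1` labels are stand-ins I made up so the example runs without a server.

```python
import selectors
import socket

# DefaultSelector picks the best mechanism available (epoll, kqueue, select...).
selector = selectors.DefaultSelector()

# Two connected socket pairs stand in for two network connections.
pairs = [socket.socketpair() for _ in range(2)]
for index, (reader, _writer) in enumerate(pairs):
    reader.setblocking(False)
    selector.register(reader, selectors.EVENT_READ, data=f"conn-{index}")

# Only the second connection has data waiting.
pairs[1][1].send(b"hello")

# One call watches every registered socket and reports the ready ones.
ready = selector.select(timeout=1)
ready_names = [key.data for key, _mask in ready]
print(ready_names)

for key, _mask in ready:
    print(key.data, key.fileobj.recv(1000))

for reader, writer in pairs:
    selector.unregister(reader)
    reader.close()
    writer.close()
selector.close()
```

The single `select()` call is the multiplexing step: one thread waits on many sockets, then handles the ready ones one after another, which is why it is still sequential from the I/O point of view.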

Non-blocking, event-driven: Callback

The traditional approach: register a function to be called when something happens instead of waiting for the response. Ryan Dahl, the creator of Node.js, championed this style.

    api_call1(request1, function (response1) {
        // step 1
        var request2 = step1(response1);
        api_call2(request2, function (response2) {
            // step 2
            var request3 = step2(response2);
            api_call3(request3, function (response3) {
                // step 3
                step3(response3);
            });
        });
    });

The same chain in Python, flattened into named stages:

    def stage1(response1):
        request2 = step1(response1)
        api_call2(request2, stage2)

    def stage2(response2):
        request3 = step2(response2)
        api_call3(request3, stage3)

    def stage3(response3):
        step3(response3)

    if __name__ == '__main__':
        api_call1(request1, stage1)

Drawbacks of callbacks

- Callback hell (the pyramid of doom)
- The code: ugh, really hard to read
- The code: ugh, really hard to write
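To make the staged callback style concrete, here is a small runnable sketch. `api_call` is a hypothetical stand-in that calls back immediately (a real asynchronous API would call back later, from an event loop), and the request and response strings are made up for illustration.

```python
from typing import Callable, List

log: List[str] = []

def api_call(request: str, callback: Callable[[str], None]) -> None:
    """Hypothetical API: hands a fake response to the registered callback."""
    callback(f"response({request})")

def stage1(response1: str) -> None:
    log.append(response1)
    api_call("request2", stage2)   # stage1 must know which stage comes next

def stage2(response2: str) -> None:
    log.append(response2)
    api_call("request3", stage3)

def stage3(response3: str) -> None:
    log.append(response3)

api_call("request1", stage1)
print(log)
```

Even in this tiny version the logic of one task is scattered across three functions, and any state the later stages need has to be passed along by hand. That is the readability and writability cost listed above.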

First, let's write it with the multithreading we are all familiar with

    import socket
    import time
    import concurrent.futures

    URLS = ['/foo', '/bar']

    def get(path):
        s = socket.socket()
        s.connect(('localhost', 5000))
        request = 'GET %s HTTP/1.0\r\n\r\n' % path
        s.send(request.encode())
        chunks = []
        while True:
            chunk = s.recv(1000)  # blocks until data arrives
            if chunk:
                chunks.append(chunk)
            else:
                # an empty chunk means the server closed the connection
                body = (b''.join(chunks)).decode()
                print(body.split('\n')[0])
                return

    start = time.time()
    with concurrent.futures.ThreadPoolExecutor(max_workers=len(URLS)) as executor:
        future_to_url = {executor.submit(get, url): url for url in URLS}
        for future in concurrent.futures.as_completed(future_to_url):
            url = future_to_url[future]
            try:
                future.result()  # re-raises any exception raised inside get()
            except Exception as exc:
                print('%r generated an exception: %s' % (url, exc))
    print('multithreading took %.1f sec' % (time.time() - start))

For example: how many threads should be opened by default? The total divided by 2? By 3?

You can run into many tuning questions like this, and the answers change with runtime conditions.

Example

Reference: A. Jesse Jiryu Davis's tutorial on coroutines in Python 3.x

    import socket
    import time
    from selectors import DefaultSelector, EVENT_READ, EVENT_WRITE

    selector = DefaultSelector()

    def get(path):
        s = socket.socket()
        s.setblocking(False)
        try:
            s.connect(('localhost', 5000))
        except BlockingIOError:
            pass

        # non-blocking socket: wait until it is writable (connected)
        selector.register(s.fileno(), EVENT_WRITE)
        selector.select()
        selector.unregister(s.fileno())

        request = 'GET %s HTTP/1.0\r\n\r\n' % path
        s.send(request.encode())

        chunks = []
        while True:
            # non-blocking socket: wait until it is readable
            selector.register(s.fileno(), EVENT_READ)
            selector.select()
            selector.unregister(s.fileno())

            chunk = s.recv(1000)
            if chunk:
                chunks.append(chunk)
            else:
                body = (b''.join(chunks)).decode()
                print(body.split('\n')[0])
                return

The same request, split into callbacks:

    def get(path):
        s = socket.socket()
        s.setblocking(False)
        try:
            s.connect(('localhost', 5000))
        except BlockingIOError:
            pass

        callback = lambda: connected(s, path)
        selector.register(s.fileno(), EVENT_WRITE)
        selector.select()
        callback()

    def connected(s, path):
        selector.unregister(s.fileno())
        request = 'GET %s HTTP/1.0\r\n\r\n' % path
        s.send(request.encode())

        chunks = []
        callback = lambda: readable(s, chunks)
        selector.register(s.fileno(), EVENT_READ)
        selector.select()
        callback()

    def readable(s, chunks):
        selector.unregister(s.fileno())
        chunk = s.recv(1000)
        if chunk:
            chunks.append(chunk)
            callback = lambda: readable(s, chunks)
            selector.register(s.fileno(), EVENT_READ)
            selector.select()
            callback()
        else:
            body = (b''.join(chunks)).decode()
            print(body.split('\n')[0])
            return

Non-blocking, event-driven: Event loop

"Call me when you're done"

Example

With an event loop, the callbacks no longer call select() themselves. Each one hands the next callback to the selector through data= and returns:

    n_jobs = 0

    def get(path):
        global n_jobs
        n_jobs += 1
        s = socket.socket()
        s.setblocking(False)
        try:
            s.connect(('localhost', 5000))
        except BlockingIOError:
            pass

        callback = lambda: connected(s, path)
        selector.register(s.fileno(), EVENT_WRITE, data=callback)

    def connected(s, path):
        selector.unregister(s.fileno())
        request = 'GET %s HTTP/1.0\r\n\r\n' % path
        s.send(request.encode())

        chunks = []
        callback = lambda: readable(s, chunks)
        selector.register(s.fileno(), EVENT_READ, data=callback)

    def readable(s, chunks):
        global n_jobs
        selector.unregister(s.fileno())
        chunk = s.recv(1000)
        if chunk:
            chunks.append(chunk)
            callback = lambda: readable(s, chunks)
            selector.register(s.fileno(), EVENT_READ, data=callback)
        else:
            body = (b''.join(chunks)).decode()
            print(body.split('\n')[0])
            n_jobs -= 1

    def eventloop():
        start = time.time()
        for url in URLS:
            get(url)

        # keep dispatching until every job has finished
        while n_jobs:
            events = selector.select()
            for key, mask in events:
                cb = key.data
                cb()

Non-blocking coroutine

- Non-blocking I/O
- Callback -> Coroutine
- Event loop

Coroutine?

What on earth is it! Can you eat it!?

Coroutine

- Generator
- Future
- Task

Generator

- __iter__, __next__
- The yield syntax
- Control flow
- PEP 342: the statement became an expression, x = yield
- Generators evolved into coroutines
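The PEP 342 point is easiest to see in code. In the sketch below, `yield` is used as an expression: it hands a value out and evaluates to whatever the caller passes in through `send()`. The running-average example and the name `averager` are my own illustration, not something from the slides.

```python
def averager():
    """Coroutine-style generator: receives numbers through send()."""
    total = 0.0
    count = 0
    average = None
    while True:
        # `yield` is an expression here: it hands `average` to the caller
        # and evaluates to the value passed to send().
        value = yield average
        total += value
        count += 1
        average = total / count

coro = averager()
next(coro)  # prime the generator: run it up to the first yield
results = [coro.send(n) for n in (10, 20, 30)]
print(results)
coro.close()
```

Data now flows in both directions across the `yield`, which is the step that turns a plain generator into something usable as a coroutine.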

Generator: yield (__iter__, __next__)

    def fibonacci():
        a, b = (0, 1)
        while True:
            yield a
            a, b = b, a + b

    fibos = fibonacci()
    next(fibos)  #=> 0
    next(fibos)  #=> 1
    next(fibos)  #=> 1
    next(fibos)  #=> 2

The equivalent iterator, written by hand as a class:

    class Fibonacci():
        def __init__(self):
            self.a, self.b = (0, 1)

        def __iter__(self):
            return self

        def __next__(self):
            result = self.a
            self.a, self.b = self.b, self.a + self.b
            return result

    fibos = Fibonacci()
    next(fibos)  #=> 0
    next(fibos)  #=> 1
    next(fibos)  #=> 1
    next(fibos)  #=> 2

Generator: control flow

    def generator():
        print('before')
        yield  # break 1
        print('middle')
        yield  # break 2
        print('after')

    x = generator()
    next(x)
    #=> before
    next(x)
    #=> middle
    next(x)
    #=> after
    #=> exception StopIteration

Future

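- Records the state of a piece of work
- Holds the result of a task that will run in the future or has not run yet
- Similar to a Promise

Task

- Interacts with the event loop and runs coroutine tasks
- A coroutine object is natively a function whose execution can be paused; a task wraps the coroutine further and carries the task's various states. Task is a subclass of Future: it ties the coroutine and the Future together by wrapping the coroutine in a Future object.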
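For comparison with the standard library, the sketch below shows the same two ideas using asyncio's own `Future` and `Task` (Python 3.7+). The coroutine `compute` and the values passed around are made up for illustration.

```python
import asyncio

async def compute(x):
    await asyncio.sleep(0)  # hand control back to the event loop once
    return x * 2

async def main():
    loop = asyncio.get_running_loop()

    # A bare Future: a placeholder that someone else resolves later.
    future = loop.create_future()
    loop.call_soon(future.set_result, "done")

    # A Task wraps a coroutine and schedules it on the event loop.
    task = asyncio.ensure_future(compute(21))

    is_future = isinstance(task, asyncio.Future)
    return is_future, await future, await task

result = asyncio.run(main())
print(result)
```

The `isinstance` check reflects the subclass relationship described above: a Task is a Future that also knows how to drive a coroutine.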

Example

    class Future:
        def __init__(self):
            self.callbacks = []

        def resolve(self):
            for fn in self.callbacks:
                fn()

    class Task:
        def __init__(self, coro):
            self.coro = coro
            self.step()

        def step(self):
            try:
                # run the coroutine until it yields the next Future
                future = next(self.coro)
            except StopIteration:
                return
            # resume the coroutine when that Future is resolved
            future.callbacks.append(self.step)

The three callbacks collapse back into a single generator that yields a Future wherever it has to wait:

    n_jobs = 0

    def get(path):
        global n_jobs
        n_jobs += 1
        s = socket.socket()
        s.setblocking(False)
        try:
            s.connect(('localhost', 5000))
        except BlockingIOError:
            pass

        f = Future()
        selector.register(s.fileno(), EVENT_WRITE, data=f)
        yield f
        selector.unregister(s.fileno())

        request = 'GET %s HTTP/1.0\r\n\r\n' % path
        s.send(request.encode())

        chunks = []
        while True:
            f = Future()
            selector.register(s.fileno(), EVENT_READ, data=f)
            yield f
            selector.unregister(s.fileno())

            chunk = s.recv(1000)
            if chunk:
                chunks.append(chunk)
            else:
                body = (b''.join(chunks)).decode()
                print(body.split('\n')[0])
                n_jobs -= 1
                return

The event loop now resolves futures instead of calling callbacks directly:

    def eventloop():
        start = time.time()
        for url in URLS:
            Task(get(url))

        while n_jobs:
            events = selector.select()
            # what next?
            for key, mask in events:
                fut = key.data
                fut.resolve()

Don't worry. To be honest, I don't get it either

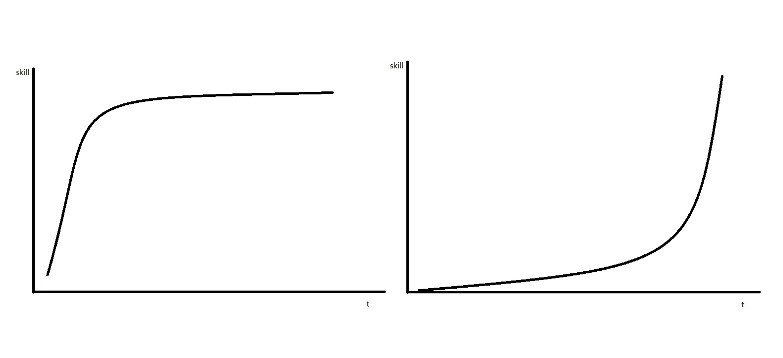

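The learning curve of Python async programming

If every coroutine had to be hand-built like this, it would be rather tedious

Generators and coroutines sharing the same syntax is also a bit confusing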

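Ta-da! Enter async and await

- Generators and coroutines are clearly distinguished
- Native coroutine objects do not implement the __iter__ and __next__ methods
- An undecorated generator cannot yield from a native coroutine
- A generator-based coroutine decorated with @asyncio.coroutine can yield from a native coroutine object
- For native coroutine objects and native coroutine functions, inspect.isgenerator() and inspect.isgeneratorfunction() return False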
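Several of the points above can be checked directly with the `inspect` module. This is just a quick sketch; the function names `native` and `plain_generator` are placeholders of mine.

```python
import inspect

async def native():
    return 42

def plain_generator():
    yield 42

coro = native()

checks = {
    "coro has __iter__": hasattr(coro, "__iter__"),
    "coro has __next__": hasattr(coro, "__next__"),
    "isgenerator(coro)": inspect.isgenerator(coro),
    "isgeneratorfunction(native)": inspect.isgeneratorfunction(native),
    "iscoroutine(coro)": inspect.iscoroutine(coro),
    "iscoroutinefunction(native)": inspect.iscoroutinefunction(native),
    "isgenerator(plain_generator())": inspect.isgenerator(plain_generator()),
}
for name, value in checks.items():
    print(f"{name}: {value}")

# A native coroutine object cannot be iterated like a generator.
try:
    list(coro)
    iterable = True
except TypeError:
    iterable = False
print("iterable:", iterable)

coro.close()  # avoids the "coroutine was never awaited" warning
```

The generator-related checks come back False for the native coroutine, while `inspect.iscoroutine()` and `inspect.iscoroutinefunction()` are the ones that recognise it.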

    def subgenerator():
        for j in range(10):
            yield j

    def generator():
        x = yield from subgenerator()
        return x

    if __name__ == '__main__':
        g = list(generator())
        print(g)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

Decorating it with @asyncio.coroutine and running it on the event loop fails, because the sub-generator yields plain integers rather than futures or coroutines:

    import asyncio

    def subgenerator():
        for j in range(10):
            yield j

    @asyncio.coroutine  # deprecated in Python 3.8, removed in 3.11
    def generator():
        x = yield from subgenerator()  # not a future/coroutine
        return x

    if __name__ == '__main__':
        loop = asyncio.get_event_loop()
        # runtime error
        loop.run_until_complete(generator())
        loop.close()

Set aside for now

- @asyncio.coroutine
- yield from

Native coroutines

- @asyncio.coroutine -> async def
- yield from -> await

    import asyncio
    import dis

    @asyncio.coroutine  # generator-based coroutine
    def generator_async(x):
        yield from asyncio.sleep(x)

    async def native_async(x):  # native coroutine
        await asyncio.sleep(x)

    dis.dis(generator_async)
    dis.dis(native_async)

    list(generator_async(1))  # iteration is allowed: still a generator underneath
    list(native_async(1))     # TypeError: 'coroutine' object is not iterable

Back to the earlier example

    class Future:
        def __init__(self):
            self.callbacks = None

        def resolve(self):
            self.callbacks()

        def __await__(self):
            # makes the Future awaitable: hand it to whoever drives the coroutine
            yield self

    class Task:
        def __init__(self, coro):
            self.coro = coro
            self.step()

        def step(self):
            try:
                # native coroutines have no __next__, so drive them with send()
                f = self.coro.send(None)
            except StopIteration:
                return
            f.callbacks = self.step

The generator-based get() becomes a native coroutine:

    async def get(path):
        global n_jobs
        n_jobs += 1
        s = socket.socket()
        s.setblocking(False)
        try:
            s.connect(('localhost', 5000))
        except BlockingIOError:
            pass

        f = Future()
        selector.register(s.fileno(), EVENT_WRITE, data=f)
        # yield f
        await f
        # ......

What can be awaited

async def

    import asyncio
    import aiohttp

    async def async_fetch_page(url):
        ...

async with

    import asyncio
    import aiohttp

    async def async_fetch_page(url):
        async with aiohttp.ClientSession() as session:
            resp = await session.get(url)
            if resp.status == 200:
                text = await resp.text()
                print(f"GET {resp.url} HTTP/1.0 {resp.status} OK")

Event loop

asyncio: Asynchronous I/O

    url = 'http://localhost:5000'  # aiohttp needs the scheme in the URL
    URLS = ['/foo', '/bar']

    loop = asyncio.get_event_loop()
    tasks = [
        asyncio.ensure_future(async_fetch_page(f"{url}{u}")) for u in URLS
    ]
    loop.run_until_complete(asyncio.wait(tasks))
    loop.close()

[Figure: tasks T1, T2, T3 with "sleep 3"; panels: Producing, async for Consuming, for Consuming]

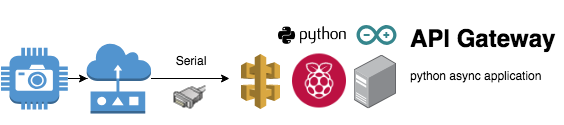

Demo

- A machine-vision system computes the data

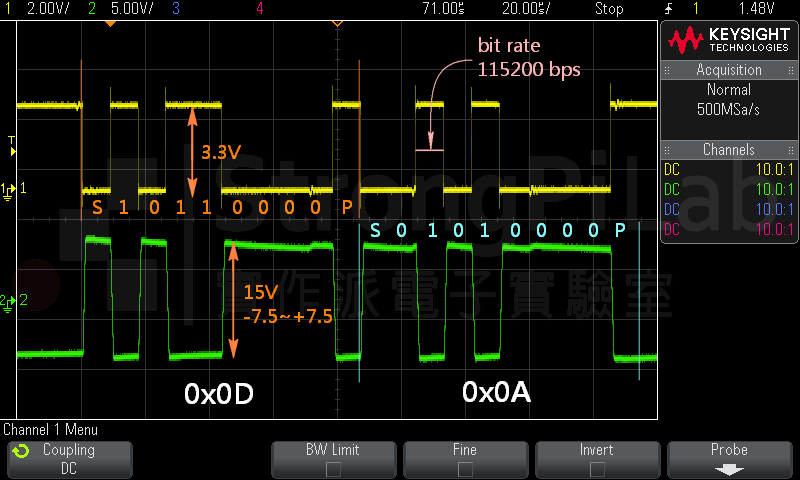

- Serial port: an interface standard for serial data communication
- Django RESTful service

In Python, the pyserial module reads from and writes to the serial port

    >>> import serial
    >>> # Open named port at "19200,8,N,1", 1s timeout:
    >>> with serial.Serial('/dev/ttyS1', 19200, timeout=1) as ser:
    ...     x = ser.read()          # read one byte
    ...     s = ser.read(10)        # read up to ten bytes (timeout)
    ...     line = ser.readline()   # read a '\n' terminated line
    >>> # Open port at "38400,8,E,1", non blocking HW handshaking:
    >>> ser = serial.Serial('COM3', 38400, timeout=0,
    ...                     parity=serial.PARITY_EVEN, rtscts=1)
    >>> s = ser.read(100)       # read up to one hundred bytes
    ...                         # or as much is in the buffer

Data Flow

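- The vision machine produces data, and the gateway obtains it over RS-232
- The gateway receives the signal, then gets an id from the web service and writes the data to the database through the API

Vision --(Serial Port)--> Gateway --(TCP/IP)--> Django

Writing it synchronously

- A while loop waiting for serial port data?
- 1 + 1 = 1??
- Multithreading? (Python GIL 👍)
- I want something a bit trendier

Program Flow

- The Producer() in the gateway keeps reading the serial port and puts data into a Queue as soon as it arrives. The gateway's Consumer() keeps checking, and whenever the Queue has data it calls the API to store the data on the server
- An asynchronous architecture? asyncio!

Producer -> Queue -> Consumer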
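The Producer -> Queue -> Consumer flow can be sketched with `asyncio.Queue` alone, before any serial port or web API enters the picture. Everything here is a stand-in: the readings are hard-coded strings, the `None` sentinel is my own convention for "no more data", and `asyncio.sleep(0)` merely pretends to wait on I/O.

```python
import asyncio

async def producer(queue, readings):
    """Stand-in for the serial-port reader: puts each reading on the queue."""
    for reading in readings:
        await asyncio.sleep(0)   # pretend to wait for the serial port
        await queue.put(reading)
    await queue.put(None)        # sentinel: no more data

async def consumer(queue, stored):
    """Stand-in for the API call: stores whatever arrives on the queue."""
    while True:
        item = await queue.get()
        if item is None:
            break
        await asyncio.sleep(0)   # pretend to POST to the server
        stored.append(item)

async def main():
    queue = asyncio.Queue()
    stored = []
    await asyncio.gather(
        producer(queue, ["r1", "r2", "r3"]),
        consumer(queue, stored),
    )
    return stored

stored = asyncio.run(main())
print(stored)
```

The important property is that both sides only ever wait with `await`, so neither one can stall the other while it is idle.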

    def consumer():
        with serial.Serial(com, port, timeout=0) as ser:
            buffer = ''
            while True:
                buffer += ser.readline().decode()
                if '\r' in buffer:
                    buf = buffer.split('\r')
                    last_received, buffer = buffer.split('\r')[-2:]
                    yield last_received

    async def async_producer(session, q):
        start = time.time()
        while len(q):
            data = q.popleft()
            status, result = await async_post(session, url_seq, url_films, data)
            if status != 201:
                q.append(data)
            if time.time() - start >= 1:
                break

Professional bug breeder

    q = deque()
    com = 'COM5'
    port = 9600
    last_received = ''

    async def async_main():
        global q
        async with aiohttp.ClientSession() as session:
            for data in consumer():
                q.append(data)
                d = q.popleft()
                status, result = await async_post(session, url_seq, url_films, data=d)  # post data
                if status != 201:
                    q.append(d)
                if len(q) >= 2:
                    await async_producer(session, q)

    loop = asyncio.get_event_loop()
    loop.run_until_complete(async_main())
    loop.close()

So you're a friend who is really good at producing bugs!

So where is the bug?

Making the serial port asynchronous, the happy way

pyserial-asyncio API

- Protocol (low-level API)
- Stream (high-level API)

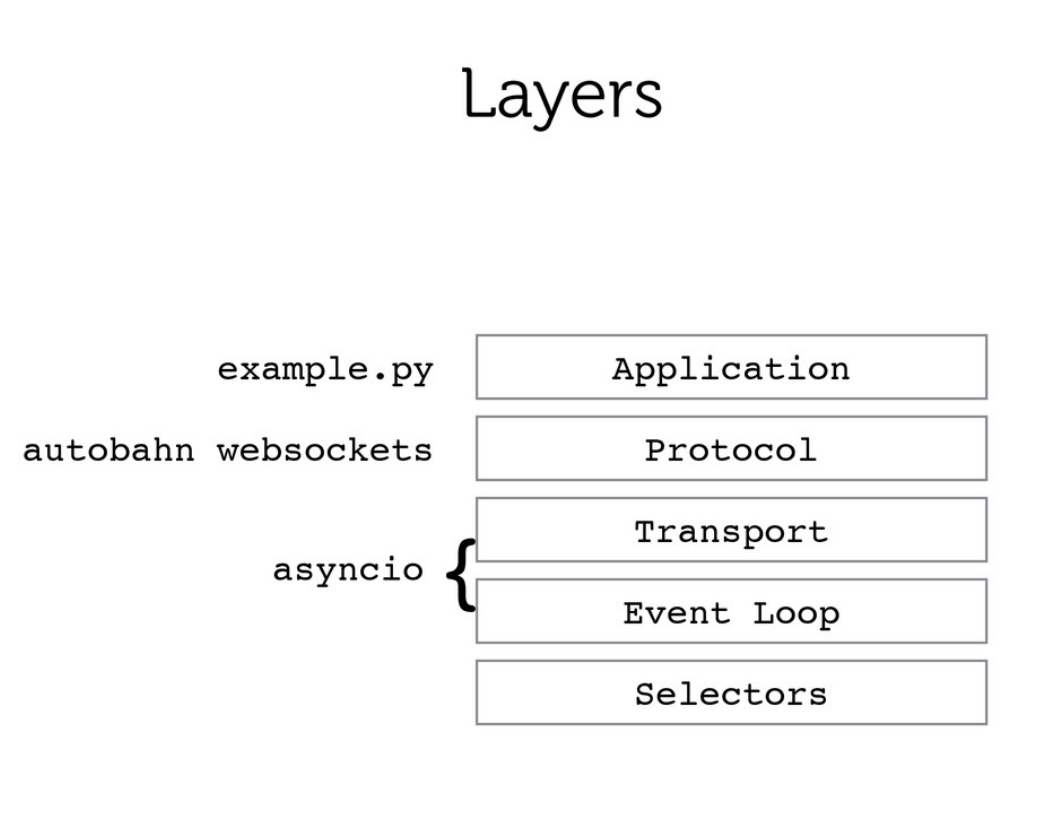

Asyncio

• protocols
  - represent applications such as HTTP client/server, SMTP, and FTP
  - async HTTP operations
  - [loop.create_connection()]

• transport
  - represent connections such as sockets, SSL connections, and pipes
  - async socket operations
  - usually implemented by frameworks, e.g. Tornado

Asyncio StreamReader

- Represents a reader object that provides APIs to read data from the I/O stream
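To get a feel for the StreamReader API without any hardware, the sketch below feeds bytes into an `asyncio.StreamReader` by hand and splits them on a carriage return with `readuntil()`. The sample payload and the helper name `read_frames` are assumptions for illustration; with a real device the bytes would arrive from the transport instead of `feed_data()`.

```python
import asyncio

async def read_frames(raw: bytes, separator: bytes = b"\r"):
    """Feed raw bytes into a StreamReader and split them on `separator`."""
    reader = asyncio.StreamReader()
    reader.feed_data(raw)   # stand-in for bytes arriving from a device
    reader.feed_eof()

    frames = []
    while True:
        try:
            chunk = await reader.readuntil(separator)
        except asyncio.IncompleteReadError:
            break           # the stream ended before another separator
        frames.append(chunk.rstrip(separator).decode("utf-8"))
    return frames

frames = asyncio.run(read_frames(b"12.5\r13.0\r13.2\r"))
print(frames)
```

`readuntil()` only returns once a full frame is available, so the caller never has to manage a partial-line buffer by hand.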

Stream walkthrough

    import asyncio
    import random

    import serial_asyncio

    # serial settings
    url = '/dev/cu.usbmodem1421'
    port = 9600

    async def produce(queue, **kwargs):
        """Read serial data without blocking; recv() defines the framing."""
        # url and baudrate arrive through kwargs
        reader, writer = await serial_asyncio.open_serial_connection(**kwargs)
        buffers = recv(reader)
        async for buf in buffers:
            # TODO: can handle data format here
            print(f"produce id: {id(buf)}")
            await queue.put(buf)  # hand the reading to the consumer

    async def recv(r):
        """
        Handle stream data with different StreamReader methods:
        'read', 'readexactly', 'readuntil', or 'readline'
        """
        while True:
            msg = await r.readuntil(b'\r')
            yield msg.rstrip().decode('utf-8')

    async def consume(queue):
        """Get serial data asynchronously"""
        while True:
            data = await queue.get()
            print(f'consuming: {id(data)}')
            # handle data from here
            await asyncio.sleep(random.random())
            queue.task_done()

    loop = asyncio.get_event_loop()
    queue = asyncio.Queue()  # the loop= argument was removed in Python 3.10
    producer_coro = produce(queue, url=url, baudrate=port)
    consumer_coro = consume(queue)
    loop.run_until_complete(asyncio.gather(producer_coro, consumer_coro))
    loop.close()

Data processing

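And then... what about the promised DEMO?

The back end looks roughly like this

The front-end JS can be set to refresh the data dynamically every few seconds (in the demo I pressed it by hand, XD)

The front end looks roughly like this

Finally, IPython also supports an async REPL

- https://github.com/ipython/ipython/pull/11265
- https://github.com/ipython/ipykernel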