👩‍🚀 👨‍🚀

Scaling a Single Page Application with GraphQL

#whoami

Charly POLY

Past

➡️ JobTeaser alumnus

➡️ 1 year @ A line

Now

Senior Software Engineer at

The context

The first collaborative platform for consulting, creative work and development on marketing projects.

- 2 products:

  - ACommunity:

    - marketplace

  - The platform:

    - projects

    - chat

    - ACL

    - timeline

    - selection lists

The starting point

- Redux store for data

- one Redux action per CRUD operation:

  - updateModel, createModel, deleteModel

- REST CRUD-based service: HttpService
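A minimal sketch of what "one Redux action per CRUD operation" looks like against a normalized `models` slice keyed by model type, then UUID (the store shape shown below). Only the three action names come from the slide; the payload fields (`modelType`, `model`, `id`, `attributes`) are hypothetical. No Redux import is needed since a reducer is a plain function.

```typescript
// Hypothetical model record: an id plus arbitrary attributes.
type Model = { id: string; [key: string]: unknown };

// Normalized state: models grouped by type, then keyed by UUID.
type ModelsState = Record<string, Record<string, Model>>;

// One action per CRUD operation (payload shape is an assumption).
type ModelAction =
  | { type: "createModel"; modelType: string; model: Model }
  | { type: "updateModel"; modelType: string; id: string; attributes: Partial<Model> }
  | { type: "deleteModel"; modelType: string; id: string };

function modelsReducer(state: ModelsState = {}, action: ModelAction): ModelsState {
  const byId = state[action.modelType] ?? {};
  switch (action.type) {
    case "createModel":
      return { ...state, [action.modelType]: { ...byId, [action.model.id]: action.model } };
    case "updateModel": {
      const current = byId[action.id];
      if (!current) return state; // unknown id: leave the state untouched
      const updated = { ...current, ...action.attributes, id: action.id };
      return { ...state, [action.modelType]: { ...byId, [action.id]: updated } };
    }
    case "deleteModel": {
      const rest = { ...byId };
      delete rest[action.id];
      return { ...state, [action.modelType]: rest };
    }
  }
}
```

Every update produces a new state object, so each REST response dispatched this way triggers a store update and a pass of re-rendering.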

```js
{
  models: {
    chats: {
      "8660f534-c425-4688-b4a9-d9ab11c6af85": { /* ... */ }
    }
  }
  // ...
}
```

The problem

The chat and the timeline components

-

Timeline has posts that:

- have different types: media, note, text, links with preview

- many types of medias: photos, videos

- theater view (Facebook like)

-

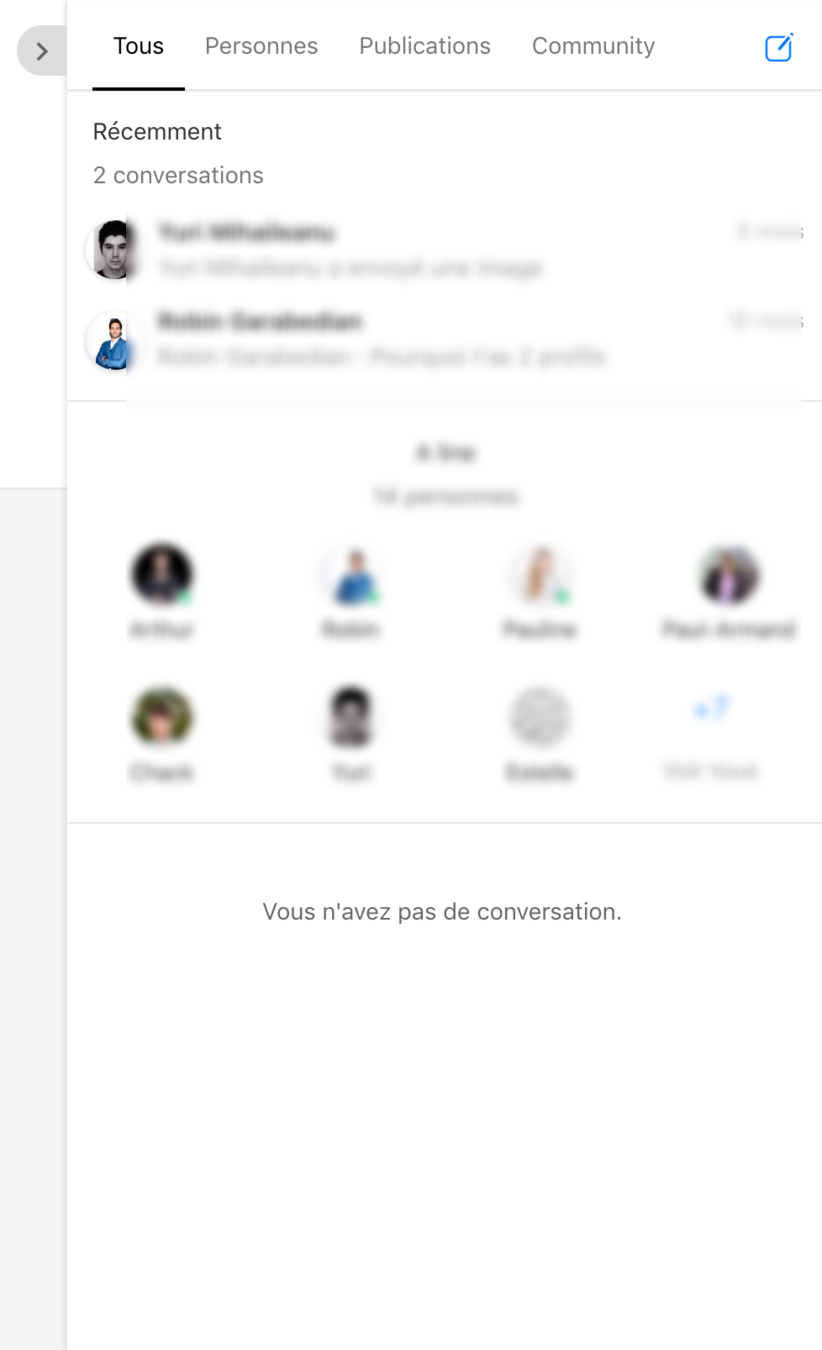

Chat are contextual:

- people chat (1-1, group chats)

- post chat

The chat data

- post with file

- post with text only

- post with image

- post with note attached

- post with video
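The five kinds of chat post above map naturally onto a TypeScript discriminated union. A minimal sketch; the `kind` discriminant and every field name are hypothetical, not the actual API payload:

```typescript
// Hypothetical shapes for the post variants listed above.
type Post =
  | { kind: "text"; id: string; body: string }
  | { kind: "file"; id: string; body?: string; fileUrl: string; fileName: string }
  | { kind: "image"; id: string; body?: string; imageUrl: string; width: number; height: number }
  | { kind: "video"; id: string; body?: string; videoUrl: string; durationSeconds: number }
  | { kind: "note"; id: string; body?: string; noteId: string };

// Exhaustive switch: the `never` check makes the compiler reject an unhandled variant.
function describePost(post: Post): string {
  switch (post.kind) {
    case "text":
      return `text: ${post.body}`;
    case "file":
      return `file: ${post.fileName}`;
    case "image":
      return `image ${post.width}x${post.height}`;
    case "video":
      return `video (${post.durationSeconds}s)`;
    case "note":
      return `note #${post.noteId}`;
    default: {
      const unreachable: never = post;
      return unreachable;
    }
  }
}
```

Each variant carries different nested data (a file, a note, media metadata), which is why a single chat can require several follow-up requests over a generic CRUD API.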

The chat REST journey

Numbers

For an average "list chats" query:

➡️ 20-50 chats of all types (without paging)

➡️ many n+1, n+2 requests per chat

➡️ many Redux store updates

➡️ many React component re-renders 💥💥💥
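To see how the request count grows, here is a toy model of the naive REST journey: one request for the chat list, one per chat for its last post (n+1), then one per post for its attachment (n+2). The endpoint paths and the "one post, one attachment per chat" shape are hypothetical; the fake client makes no network calls, it just records each path and returns the canned body passed to it.

```typescript
// Fake REST client: records every request path and returns the canned body.
class CountingClient {
  readonly requests: string[] = [];
  get<T>(path: string, body: T): T {
    this.requests.push(path);
    return body;
  }
}

type ChatRef = { id: string };

// Naive loading: list, then per-chat post, then per-post attachment (hypothetical endpoints).
function loadChatsNaively(client: CountingClient, chatCount: number): number {
  const chats = client.get<ChatRef[]>(
    "/chats",
    Array.from({ length: chatCount }, (_, i) => ({ id: `chat-${i}` })),
  );
  for (const chat of chats) {
    const post = client.get(`/chats/${chat.id}/last_post`, { id: `post-of-${chat.id}` }); // n+1
    client.get(`/posts/${post.id}/attachment`, { id: `file-of-${post.id}` }); // n+2
  }
  return client.requests.length;
}
```

Under these assumptions, 20 chats cost 1 + 20 + 20 = 41 requests and 50 chats cost 101, and if each response is dispatched to Redux on its own, that is as many store updates.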

Solutions

Paging

➡️ didn't solve the request issues

"includes" on the API side, with n+1 objects included in the response

➡️ doesn't solve the n+2 queries issue

Preloading all chats through a dedicated /preload API endpoint

➡️ still some performance issues with realtime and with refetches on updates

The chat REST journey

The first working solution

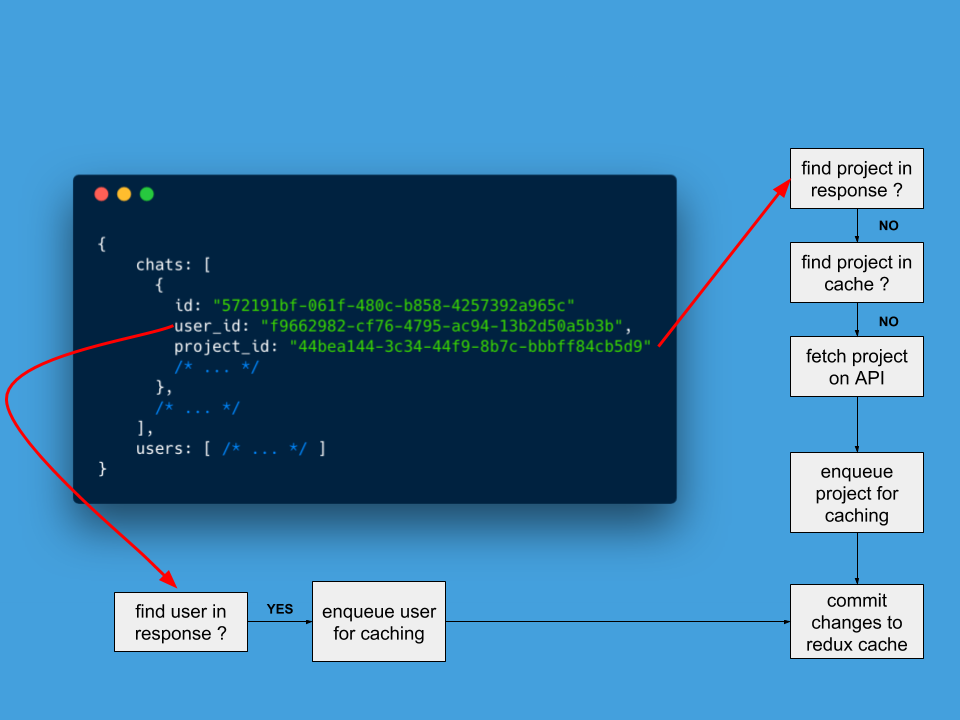

Hydra

A custom client-side relational cache with transactional Redux dispatch

➡️ discovers which API requests to make based on the shape of the response data

➡️ waits for all requests to finish before committing to Redux

➡️ on update, ensures object relations in the Redux cache stay up to date

Example: a query on a chat can update a project object in the cache
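Hydra's code isn't shown here, so the following is only a minimal sketch of the three behaviours listed above, under a hypothetical convention: API objects carry `__type` and `id`, and an object with only those two fields is an unresolved reference. `fetchEntity` (a REST call) and `commit` (a single Redux dispatch) are assumed callbacks. Missing references are fetched level by level in parallel, and nothing is committed until the whole graph is resolved, so a chat response embedding a newer copy of a project also refreshes that project.

```typescript
// Hypothetical convention: every API object carries `__type` and `id`.
// An object with ONLY those two fields is a bare reference still to be fetched.
type Entity = { __type: string; id: string; [field: string]: unknown };
type Store = Map<string, Entity>; // key "<type>:<id>", stands in for the Redux cache

const keyOf = (e: Entity): string => `${e.__type}:${e.id}`;
const isStub = (e: Entity): boolean => Object.keys(e).length === 2;

function isEntity(value: unknown): value is Entity {
  return typeof value === "object" && value !== null && "__type" in value && "id" in value;
}

// Walk a payload and collect every (possibly nested) entity or reference.
function collectEntities(value: unknown, out: Map<string, Entity>): void {
  if (Array.isArray(value)) {
    value.forEach((item) => collectEntities(item, out));
    return;
  }
  if (typeof value !== "object" || value === null) return;
  if (isEntity(value)) {
    const key = keyOf(value);
    // A bare reference must never overwrite a full object already collected.
    if (!isStub(value) || !out.has(key)) out.set(key, value);
  }
  Object.values(value).forEach((field) => collectEntities(field, out));
}

// Resolve references level by level (n+1, n+2, ...), then commit everything at once.
async function resolveAndCommit(
  response: unknown,
  store: Store,
  fetchEntity: (type: string, id: string) => Promise<Entity>, // hypothetical REST call
  commit: (batch: Entity[]) => void, // e.g. one Redux dispatch
): Promise<void> {
  const batch = new Map<string, Entity>();
  const requested = new Set<string>();
  let pending: unknown[] = [response];

  while (pending.length > 0) {
    const found = new Map<string, Entity>();
    pending.forEach((payload) => collectEntities(payload, found));

    const missing: Entity[] = [];
    found.forEach((entity, key) => {
      if (!isStub(entity)) batch.set(key, entity);
      else if (!batch.has(key) && !store.has(key) && !requested.has(key)) {
        requested.add(key);
        missing.push(entity);
      }
    });
    // Fire every request of this level in parallel and wait for all of them.
    pending = await Promise.all(missing.map((ref) => fetchEntity(ref.__type, ref.id)));
  }
  // Nothing reaches the store until the whole graph is resolved.
  commit(Array.from(batch.values()));
}
```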

The chat REST journey

Hydra

The first working solution fails

Users now have 80-100 chats on average

- the client cache is invalidated too often (too aggressive) 🔥

- the API is too slow 🔥

The chat REST journey

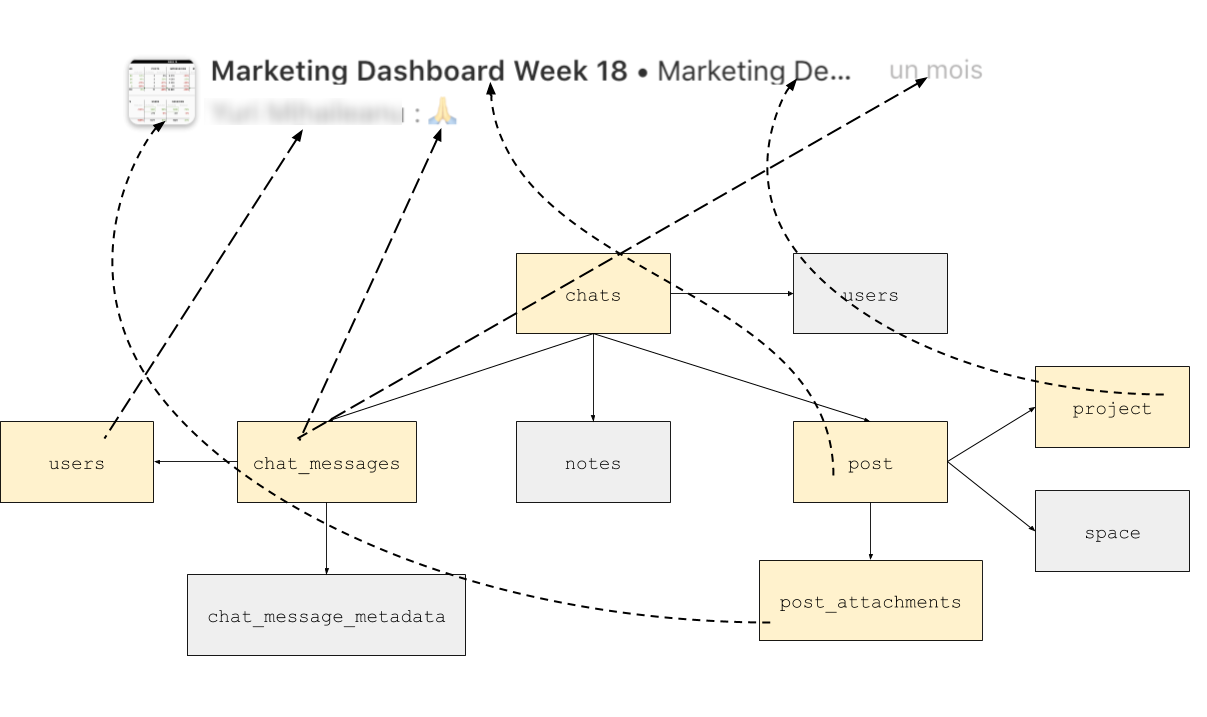

GraphQL and Apollo to the rescue

➡️ a dedicated chat query with server-side optimisation

➡️ no more nesting issues (4 levels deep is handled easily)

➡️ models/data state handled by Apollo using Observables

➡️ advanced caching strategies for a better UX
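The slides don't say which server-side optimisation was used. One common one for nested GraphQL queries is batching in the style of the DataLoader library: every post resolver asks for its author, and the loader coalesces all ids requested in the same tick into one backend call. The sketch below is a hypothetical, dependency-free version of that technique (no per-key caching or deduplication, unlike DataLoader); `BatchLoader`, `makeAuthorResolver` and `User` are illustrative names.

```typescript
type User = { id: string; name: string };

// Hypothetical batch loader: keys requested in the same tick share one batchFn call.
class BatchLoader<K, V> {
  private queue: { key: K; resolve: (value: V) => void; reject: (error: unknown) => void }[] = [];
  private readonly batchFn: (keys: K[]) => Promise<V[]>;

  constructor(batchFn: (keys: K[]) => Promise<V[]>) {
    this.batchFn = batchFn;
  }

  load(key: K): Promise<V> {
    return new Promise<V>((resolve, reject) => {
      this.queue.push({ key, resolve, reject });
      // The first key of a new batch schedules the flush on the microtask queue.
      if (this.queue.length === 1) Promise.resolve().then(() => this.flush());
    });
  }

  private flush(): void {
    const batch = this.queue;
    this.queue = [];
    this.batchFn(batch.map((entry) => entry.key)).then(
      (values) => batch.forEach((entry, i) => entry.resolve(values[i])),
      (error) => batch.forEach((entry) => entry.reject(error)),
    );
  }
}

// Hypothetical resolver: each post's author goes through the shared loader.
function makeAuthorResolver(loader: BatchLoader<string, User>) {
  return (post: { authorId: string }): Promise<User> => loader.load(post.authorId);
}
```

Resolving the authors of a whole chat list this way costs one backend call per nesting level instead of one per post.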

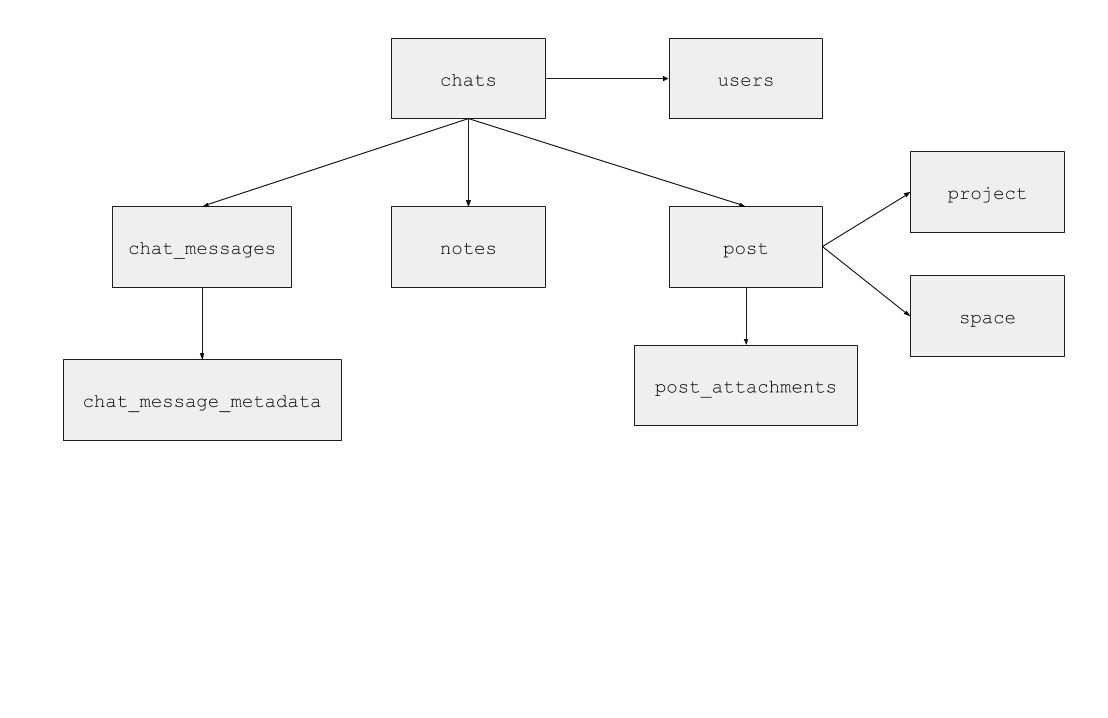

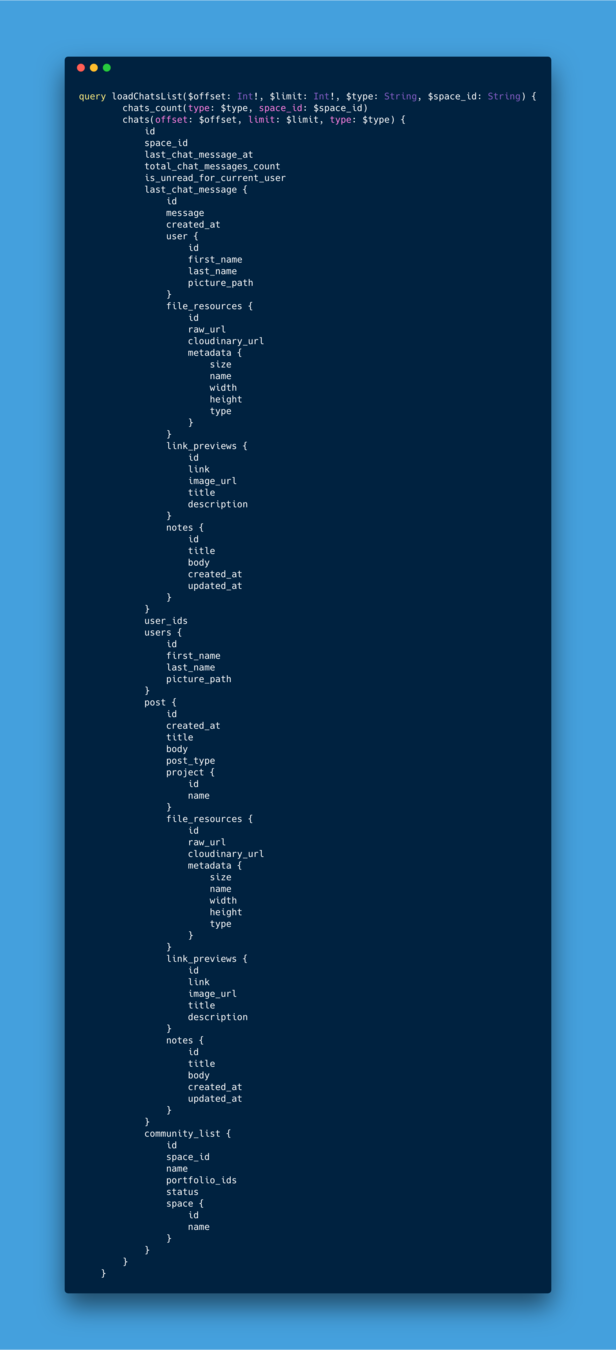

The chat in GraphQL

Why Apollo and not Relay?

➡️ very flexible and composable API

➡️ supports custom GraphQL schemas without Relay "edges"

➡️ more complete caching strategy options

➡️ easier migration

➡️ possibility to have a local GraphQL schema (apollo-link-state)

The chat in GraphQL

Apollo: the smooth migration

The chat in GraphQL

Apollo: the caching strategies

- "cache-first" (default)

- "cache-and-network"

- "network-only"

- "cache-only"

- "no-cache"

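These five values are Apollo Client's `fetchPolicy` options. To make their semantics concrete without pulling in Apollo, here is a dependency-free simulation: a `Map` stands in for the normalized cache and a synchronous `network` function for the server. It only mirrors how each policy reads from and writes to the cache; it is not Apollo's implementation, and `runQuery` is a hypothetical name. `emitted` lists what the component would receive, in order.

```typescript
type FetchPolicy = "cache-first" | "cache-and-network" | "network-only" | "cache-only" | "no-cache";

// Simulates one query: returns the results delivered to the caller and the network call count.
function runQuery<T>(
  key: string,
  policy: FetchPolicy,
  cache: Map<string, T>,
  network: (key: string) => T,
): { emitted: T[]; networkCalls: number } {
  const cached = cache.get(key);
  const emitted: T[] = [];
  let networkCalls = 0;
  const fetchFromNetwork = (writeToCache: boolean): T => {
    networkCalls++;
    const result = network(key);
    if (writeToCache) cache.set(key, result);
    return result;
  };

  switch (policy) {
    case "cache-first": // network only on a cache miss
      emitted.push(cached !== undefined ? cached : fetchFromNetwork(true));
      break;
    case "cache-and-network":
      if (cached !== undefined) emitted.push(cached); // instant, possibly stale
      emitted.push(fetchFromNetwork(true)); // fresh result follows
      break;
    case "network-only": // always asks the server, still updates the cache
      emitted.push(fetchFromNetwork(true));
      break;
    case "cache-only": // never hits the network
      if (cached !== undefined) emitted.push(cached);
      break;
    case "no-cache": // asks the server, result is not written to the cache
      emitted.push(fetchFromNetwork(false));
      break;
  }
  return { emitted, networkCalls };
}
```

`cache-and-network` is typically what gives the "show cached chats instantly, then refresh them" behaviour.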
The chat in GraphQL

The Apollo super-powers

- apollo-codegen: TypeScript/Flow/Swift type generation

- Link pattern: like Rack middleware, but on the front-end side

- Local state: a nice alternative to Redux
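The Link pattern composes small units that each receive an operation and a `forward` function to the next link, much like Rack middleware. The sketch below reimplements that control flow in a few lines; `Operation`, `Link`, `concat`, `authLink` and `loggerLink` are hypothetical simplifications, not the Apollo API (which uses Observables and `ApolloLink.from`).

```typescript
type Operation = { query: string; headers: Record<string, string> };
type Result = { data: unknown; log: string[] };
type NextLink = (op: Operation) => Promise<Result>;
type Link = (op: Operation, forward: NextLink) => Promise<Result>;

// Chain links left to right, ending with a terminating link (normally the HTTP one).
function concat(links: Link[], terminating: NextLink): NextLink {
  return links.reduceRight<NextLink>((next, link) => (op) => link(op, next), terminating);
}

// Adds an auth header before forwarding (the token is a placeholder).
const authLink: Link = (op, forward) =>
  forward({ ...op, headers: { ...op.headers, authorization: "Bearer <token>" } });

// Wraps the rest of the chain and prepends a log entry to its result.
const loggerLink: Link = async (op, forward) => {
  const result = await forward(op);
  return { ...result, log: [`sent ${op.query}`, ...result.log] };
};

// Stand-in for the HTTP link: echoes back the auth header it received.
const fakeHttpLink: NextLink = async (op) => ({
  data: { receivedAuth: op.headers.authorization ?? null },
  log: ["http"],
});

const client = concat([loggerLink, authLink], fakeHttpLink);
```

Each link only knows about its neighbour, so cross-cutting concerns (auth, logging, error handling, local state) stay out of the components.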

Going further with GraphQL

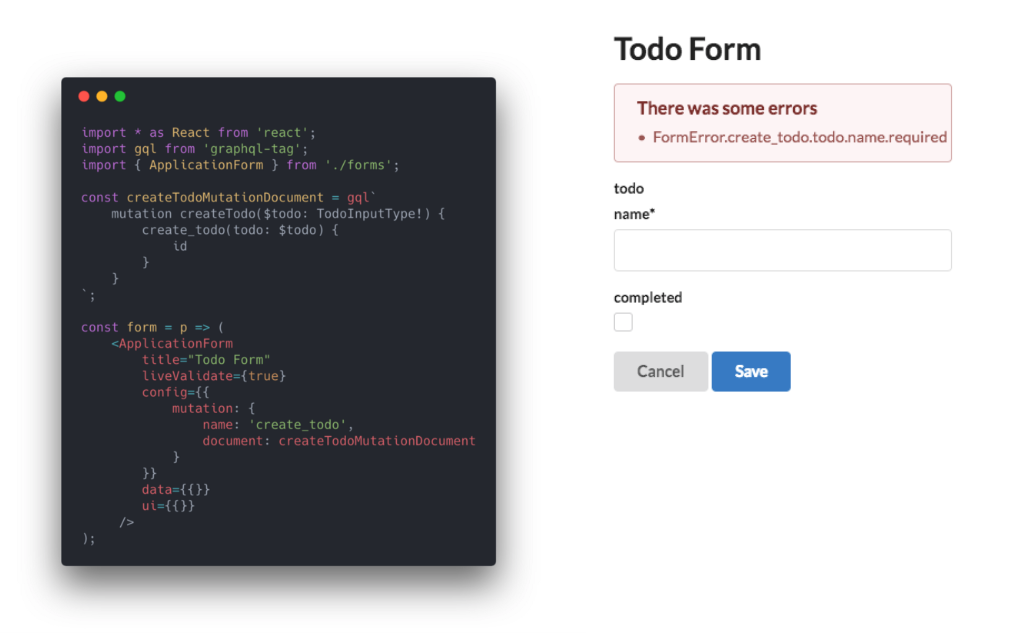

<ApolloForm>

- Introspect your API's GraphQL schema

- Build a JSON Schema from the available types and mutations

- Create configuration files

- Automatic form bootstrapping 🎉
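A minimal sketch of the schema-to-form step: take an input type as returned by GraphQL introspection (simplified to the `kind`/`name`/`ofType` shape of the `__Type` introspection type) and turn it into a JSON Schema that a form generator can render. The `CreateProjectInput` type and the scalar mapping are assumptions for illustration; the actual `<ApolloForm>` implementation may differ.

```typescript
// Simplified slice of GraphQL's introspection result (__Type / __InputValue).
type TypeRef = {
  kind: "SCALAR" | "ENUM" | "INPUT_OBJECT" | "LIST" | "NON_NULL";
  name: string | null;
  ofType: TypeRef | null;
};
type InputField = { name: string; type: TypeRef };
type InputObjectType = { name: string; inputFields: InputField[] };

type JSONSchema = {
  type?: string;
  title?: string;
  items?: JSONSchema;
  properties?: Record<string, JSONSchema>;
  required?: string[];
};

// Built-in scalars mapped to JSON Schema types; custom scalars fall back to string.
const SCALARS: Record<string, string> = { String: "string", ID: "string", Int: "integer", Float: "number", Boolean: "boolean" };

function typeRefToSchema(ref: TypeRef): JSONSchema {
  switch (ref.kind) {
    case "NON_NULL": // nullability is expressed through `required` instead
      return typeRefToSchema(ref.ofType!);
    case "LIST":
      return { type: "array", items: typeRefToSchema(ref.ofType!) };
    case "SCALAR":
      return { type: SCALARS[ref.name ?? ""] ?? "string" };
    default:
      // Enum values and nested input fields need a further introspection lookup; placeholder only.
      return { type: ref.kind === "ENUM" ? "string" : "object", title: ref.name ?? undefined };
  }
}

function inputTypeToSchema(input: InputObjectType): JSONSchema {
  const properties: Record<string, JSONSchema> = {};
  const required: string[] = [];
  for (const field of input.inputFields) {
    properties[field.name] = typeRefToSchema(field.type);
    if (field.type.kind === "NON_NULL") required.push(field.name);
  }
  return { title: input.name, type: "object", properties, required };
}

// Hypothetical mutation input, as introspection would describe it.
const createProjectInput: InputObjectType = {
  name: "CreateProjectInput",
  inputFields: [
    { name: "name", type: { kind: "NON_NULL", name: null, ofType: { kind: "SCALAR", name: "String", ofType: null } } },
    { name: "budget", type: { kind: "SCALAR", name: "Float", ofType: null } },
    { name: "tags", type: { kind: "LIST", name: null, ofType: { kind: "SCALAR", name: "String", ofType: null } } },
  ],
};
```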

Going further with GraphQL

<ApolloForm> advantages

- A clear separation between data and UI:

  - Data structure: what kind of data and validation are exposed

  - UI structure: how we want to display this data

- Your front-end application always stays in sync with your API

- Easier UI-kit installation and maintenance

Conclusion

- GraphQL is useful for rich front-end applications

- With Apollo, it can even replace local state management

- With TypeScript/Flow or Swift, it helps keep clients and APIs in sync