Grab the slides:

slides.com/cheukting_ho/legend-data-boosting-algrithms

Every Monday 5pm UK time

by Cheuk Ting Ho

Gradient Boosting

Gradient boosting is a machine learning technique for regression and classification problems, which produces a prediction model in the form of an ensemble of weak prediction models, typically decision trees. It builds the model in a stage-wise fashion like other boosting methods do, and it generalizes them by allowing optimization of an arbitrary differentiable loss function. (Wikipedia)

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingClassifier.html
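To make the stage-wise idea concrete, here is a minimal from-scratch sketch for regression with squared loss. It is not scikit-learn's implementation. It assumes one numeric feature and depth-1 trees (stumps) as the weak learners. With squared loss the negative gradient is simply the residual, so each stage fits a stump to the current residuals and adds a shrunken copy of it to the model. The function names and the `learning_rate` default are illustrative choices.

```python
import numpy as np


def fit_stump(x, r):
    """Fit a depth-1 regression tree (stump) on a 1-D feature x to targets r.

    Returns (threshold, left_value, right_value) minimising squared error.
    """
    best = None
    for t in np.unique(x)[:-1]:  # split between distinct values only
        left, right = r[x <= t], r[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    _, t, left_value, right_value = best
    return t, left_value, right_value


def predict_stump(stump, x):
    t, left_value, right_value = stump
    return np.where(x <= t, left_value, right_value)


def gradient_boost(x, y, n_stages=50, learning_rate=0.1):
    """Stage-wise boosting with squared loss L(y, F) = (y - F)^2 / 2.

    The negative gradient of this loss with respect to F is the residual
    y - F, so every stage fits a new stump to the current residuals.
    """
    f0 = float(np.mean(y))  # constant model that minimises the loss
    fitted = np.full(len(y), f0)
    stumps = []
    for _ in range(n_stages):
        residuals = y - fitted  # negative gradient at the current model
        stump = fit_stump(x, residuals)
        fitted = fitted + learning_rate * predict_stump(stump, x)
        stumps.append(stump)
    return f0, stumps, fitted


def predict(f0, stumps, x, learning_rate=0.1):
    # learning_rate must match the one used during training
    return f0 + learning_rate * sum(predict_stump(s, x) for s in stumps)


x = np.linspace(0.0, 1.0, 50)
y = np.sin(2 * np.pi * x)
f0, stumps, fitted = gradient_boost(x, y)
print("constant-model MSE:", np.mean((y - f0) ** 2))
print("boosted MSE:", np.mean((y - fitted) ** 2))
```

Swapping in a different differentiable loss only changes the line that computes the negative gradient (and, in full implementations, how leaf values are chosen), which is the generalization the quote refers to.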

AdaBoost (Adaptive Boosting)

AdaBoost is adaptive in the sense that subsequent weak learners are tweaked in favor of the instances misclassified by previous classifiers. As a consequence, AdaBoost is sensitive to noisy data and outliers: points that keep being misclassified receive ever larger weights.
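A minimal sketch of discrete AdaBoost for labels in {-1, +1}, assuming decision stumps as the weak learners (the helper names are hypothetical). The "tweaking" happens in the instance weights: after each round, misclassified points are multiplied by exp(alpha) and correctly classified ones by exp(-alpha), so the next stump concentrates on the mistakes. The same mechanism explains the sensitivity to outliers, since a point that can never be fitted keeps gaining weight.

```python
import numpy as np


def fit_weighted_stump(X, y, w):
    """Pick the (feature, threshold, polarity) stump with the lowest weighted error."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for polarity in (1, -1):
                pred = np.where(X[:, j] <= t, polarity, -polarity)
                err = w[pred != y].sum()
                if err < best[0]:
                    best = (err, j, t, polarity)
    return best


def stump_predict(X, j, t, polarity):
    return np.where(X[:, j] <= t, polarity, -polarity)


def adaboost(X, y, n_rounds=10):
    """Discrete AdaBoost for labels y in {-1, +1}."""
    n = len(y)
    w = np.full(n, 1.0 / n)  # start with uniform instance weights
    ensemble = []
    for _ in range(n_rounds):
        err, j, t, polarity = fit_weighted_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)  # avoid division by zero and log(0)
        alpha = 0.5 * np.log((1 - err) / err)  # vote weight of this learner
        pred = stump_predict(X, j, t, polarity)
        # Misclassified points (y * pred = -1) are up-weighted by exp(alpha),
        # correctly classified ones are down-weighted by exp(-alpha).
        w = w * np.exp(-alpha * y * pred)
        w /= w.sum()
        ensemble.append((alpha, j, t, polarity))
    return ensemble


def adaboost_predict(ensemble, X):
    score = sum(a * stump_predict(X, j, t, p) for a, j, t, p in ensemble)
    return np.sign(score)


X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]])
y = np.array([1, 1, -1, -1, 1, 1])  # no single stump separates this pattern
ensemble = adaboost(X, y, n_rounds=3)
print(adaboost_predict(ensemble, X))
```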

XGBoost

XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable.

- Clever penalization of trees

- A proportional shrinking of leaf nodes

- Newton Boosting

- Extra randomization parameter

- Popular in the Kaggle community
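The "penalization" and "Newton boosting" bullets can be made concrete with the regularised objective that XGBoost's documentation derives. Let G and H be the sums of the first and second derivatives of the loss over the instances in a leaf. Let λ be an L2 penalty on leaf weights and γ a penalty per leaf. A Newton step then gives the leaf weight w* = -G / (H + λ), and a split is scored by the gain computed below. This is a plain-Python sketch of those formulas, not XGBoost's code, and the function names are hypothetical.

```python
import math


def leaf_weight(G, H, lam):
    """Optimal leaf weight w* = -G / (H + lambda) from a Newton step.

    G, H are the sums of first and second derivatives of the loss over the
    instances in the leaf; lam is the L2 penalty on leaf weights.
    """
    return -G / (H + lam)


def split_gain(G_L, H_L, G_R, H_R, lam, gamma):
    """Reduction in the regularised objective from splitting a leaf in two.

    gamma is the penalty paid for the extra leaf; a split is only worth
    making when the returned gain is positive.
    """
    def score(G, H):
        return G * G / (H + lam)

    return 0.5 * (score(G_L, H_L) + score(G_R, H_R) - score(G_L + G_R, H_L + H_R)) - gamma


def logistic_grad_hess(y, raw_score):
    """First and second derivatives of log loss w.r.t. the raw (logit) score."""
    p = 1.0 / (1.0 + math.exp(-raw_score))
    return p - y, p * (1.0 - p)


# Squared loss (y - F)^2 / 2 has g = F - y and h = 1; start from F = 0.
ys = [1.0, 2.0, 3.0]
g = [0.0 - yi for yi in ys]
h = [1.0] * len(ys)
print(leaf_weight(sum(g), sum(h), lam=0.0))  # 2.0, the mean of ys
print(leaf_weight(sum(g), sum(h), lam=1.0))  # 1.5, shrunk toward 0
print(split_gain(g[0], h[0], g[1] + g[2], h[1] + h[2], lam=0.0, gamma=0.0))  # 0.75
print(split_gain(g[0], h[0], g[1] + g[2], h[1] + h[2], lam=0.0, gamma=1.0))  # -0.25
```

Because λ sits in every denominator it pulls all leaf weights toward zero, and γ makes a split worthwhile only when its gain exceeds the cost of the extra leaf.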

LightGBM

LightGBM is a gradient boosting framework that uses tree-based learning algorithms. It is designed to be distributed and efficient with the following advantages:

- Faster training speed and higher efficiency.

- Lower memory usage.

- Better accuracy.

- Support of parallel and GPU learning.

- Capable of handling large-scale data.

XGBoost vs LightGBM

XGBoost - pre-sorted splitting

- For each node, enumerate over all features

- For each feature, sort the instances by feature value

- Use a linear scan to decide the best split along that feature based on information gain

- Take the best split solution along all the features
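The four steps above can be sketched as an exact greedy search for a single node. As an assumption, the sketch scores candidate splits with XGBoost's gradient/Hessian gain (L2 penalty `lam`, per-leaf penalty omitted) in place of a generic information gain. It also sorts each feature inside the call for brevity, whereas the point of pre-sorting is to sort the columns once before training and reuse that order at every node. The function name is hypothetical.

```python
import numpy as np


def best_split_presorted(X, g, h, lam=1.0):
    """Exact greedy split search over all features of one node.

    X: (n_samples, n_features); g, h: per-instance gradients and Hessians.
    Returns (gain, feature_index, threshold) of the best split found.
    """
    G, H = g.sum(), h.sum()
    parent = G * G / (H + lam)
    best = (0.0, None, None)  # a split must have positive gain
    for j in range(X.shape[1]):  # enumerate over all features
        order = np.argsort(X[:, j], kind="stable")  # sort instances by feature value
        xs, gs, hs = X[order, j], g[order], h[order]
        G_L = H_L = 0.0
        for i in range(len(xs) - 1):  # linear scan over the sorted values
            G_L += gs[i]
            H_L += hs[i]
            if xs[i] == xs[i + 1]:
                continue  # cannot split between equal feature values
            G_R, H_R = G - G_L, H - H_L
            gain = 0.5 * (G_L**2 / (H_L + lam) + G_R**2 / (H_R + lam) - parent)
            if gain > best[0]:  # keep the best split across all features
                best = (gain, j, (xs[i] + xs[i + 1]) / 2)
    return best


X = np.array([[1.0, 1.0], [2.0, 2.0], [1.0, 3.0], [2.0, 4.0]])
y = np.array([0.0, 0.0, 5.0, 5.0])
g = 0.0 - y  # squared loss at F = 0: g = F - y
h = np.ones_like(y)  # and h = 1
print(best_split_presorted(X, g, h))
```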

LightGBM - Gradient-based One-Side Sampling (GOSS)

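- keep all instances with large gradients and randomly sample from those with small gradients

- find the split on this smaller sample, estimating the information gain from the gradients

- achieve faster training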

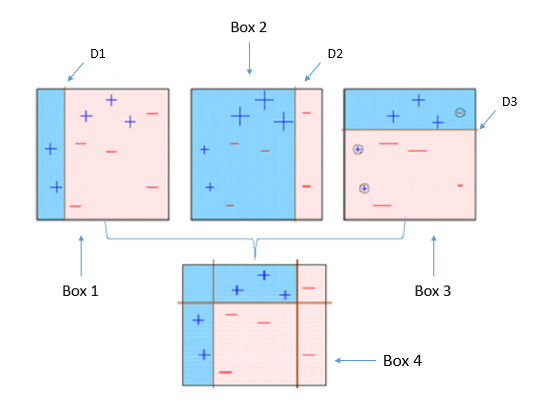
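GOSS can be sketched as a sampling step run before split finding. Following the LightGBM paper's description, it keeps the fraction `top_rate` of instances with the largest absolute gradients and samples a fraction `other_rate` of the rest at random. It then multiplies the sampled small-gradient instances by (1 - top_rate) / other_rate so that gradient sums stay roughly unbiased. The parameter names mirror LightGBM's `top_rate` and `other_rate`, but `goss_sample` is a hypothetical illustration, not LightGBM's API.

```python
import numpy as np


def goss_sample(gradients, top_rate=0.2, other_rate=0.1, rng=None):
    """Gradient-based One-Side Sampling (sketch).

    Returns (indices, weights): the selected instances and the weight each
    one should carry when gradients are summed during split finding.
    """
    rng = np.random.default_rng(rng)
    n = len(gradients)
    n_top = int(top_rate * n)
    n_other = int(other_rate * n)
    order = np.argsort(-np.abs(gradients))  # largest |gradient| first
    top_idx = order[:n_top]  # always keep the large-gradient instances
    other_idx = rng.choice(order[n_top:], size=n_other, replace=False)
    indices = np.concatenate([top_idx, other_idx])
    weights = np.concatenate([
        np.ones(n_top),
        # amplify the sampled small-gradient instances to compensate
        np.full(n_other, (1 - top_rate) / other_rate),
    ])
    return indices, weights


grads = np.arange(100.0)  # toy gradients 0, 1, ..., 99
idx, w = goss_sample(grads, rng=0)
print(len(idx), w[:3], w[-3:])
```

In LightGBM itself GOSS is switched on through parameters (`data_sample_strategy="goss"` in version 4.x, `boosting="goss"` in earlier releases) rather than called as a function.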

CatBoost

CatBoost is an algorithm for gradient boosting on decision trees.

CatBoost lets you pass the indices of the categorical columns, so that they can be encoded with one-hot encoding or with the following formula:
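CatBoost's documentation describes the counter it uses for a categorical value in the form (countInClass + prior) / (totalCount + 1). Here the counts cover only the rows that precede the current one in a random permutation. Assuming that is the kind of formula meant here, the sketch below implements this ordered target statistic for one column with a single permutation. CatBoost itself uses several permutations and more counter types, and the function name is hypothetical.

```python
import random


def ordered_target_statistic(categories, targets, prior=0.5, seed=0):
    """Encode a categorical column with an ordered target statistic (sketch).

    Rows are visited in a random permutation; each row is encoded as
        (sum of earlier targets in its category + prior) / (earlier count + 1)
    using only rows seen before it, so a row's own target never leaks
    into its encoding. For 0/1 targets the sum equals countInClass.
    """
    order = list(range(len(categories)))
    random.Random(seed).shuffle(order)
    sums, counts = {}, {}
    encoded = [0.0] * len(categories)
    for i in order:
        c = categories[i]
        s, n = sums.get(c, 0.0), counts.get(c, 0)
        encoded[i] = (s + prior) / (n + 1)
        sums[c] = s + targets[i]  # update statistics only after encoding row i
        counts[c] = n + 1
    return encoded


cities = ["London", "Paris", "London", "Paris", "London", "Berlin"]
clicked = [1, 0, 1, 1, 0, 1]
print(ordered_target_statistic(cities, clicked))
```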

Next week:

TBC


Get the notebooks: https://github.com/Cheukting/legend_data