Deep Learning with Keras

Building an AI that Talks like Shakespeare or Trump

By Cheuk Ting Ho

Twitter: @cheukting_ho

https://github.com/Cheukting

@PyLondinium

AI writes Harry Potter Fan-Fiction... Really?

@cheukting_ho | https://github.com/Cheukting

@PyLondinium

Build a

Very simple Neural Network

using Keras

from keras import layers

model = keras.models.Sequential()

model.add(layers.Embedding(max_num_word, 256, input_length=maxlen))

model.add(layers.LSTM(256))

model.add(layers.Dense(max_num_word, activation='softmax'))@cheukting_ho | https://github.com/Cheukting

@PyLondinium

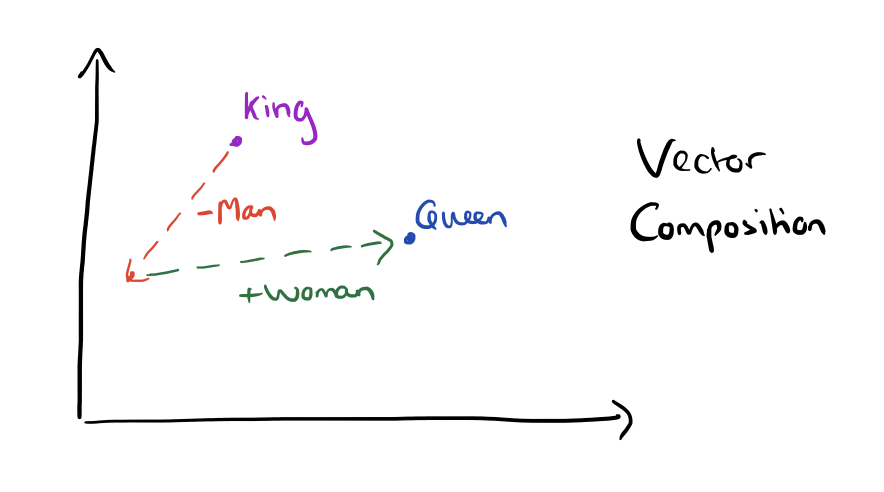

- Words or phrases from the vocabulary are represented as a vector in a vector space.

- In this vector space, words have similar meanings are close to each other and calculations like

“king” – “man” + ”woman” = “queen”

is valid. - 2 approaches (or more) is used to help find the mapping to this vector space (GloVe vs Word2Vec).

- Preserve the context of the word.

Wording Embeddings

@cheukting_ho | https://github.com/Cheukting

@PyLondinium

Wording Embeddings

in 2 approaches

GloVe

word2vec

"Global Vectors for Word Representation"

"count-based" model

based on factorizing a matrix of word co-occurence statistics

"words to vectors" ?

"predictive" model

feed-forward neural network and optimized

@cheukting_ho | https://github.com/Cheukting

@PyLondinium

Long Short-term-memory

- Recurrent Neural Network – the previous training data affects the next training data

- Useful for sequential data

- Have “gates” to control what previous contents to keep

@cheukting_ho | https://github.com/Cheukting

@PyLondinium

Reference