Grab the slides:

slides.com/cheukting_ho/legend-data-decision-trees

Every Monday 5pm UK time

by Cheuk Ting Ho

Supervised Learning

Supervised learning is the machine learning task of learning a function that maps an input to an output based on example input-output pairs. It infers a function from labeled training data consisting of a set of training examples.

- Wikipedia

- data are labeled

- a lot of human effort to acquire training data

- a lot of data cleaning before training
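To make "learning a function from labeled input-output pairs" concrete, here is a minimal scikit-learn sketch. It assumes scikit-learn is installed and uses the bundled Iris dataset as a stand-in for your own labeled data. The classifier is only an illustrative choice.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Labeled data: each row of X (flower measurements) comes with a known label in y (species)
X, y = load_iris(return_X_y=True)

# Hold back some labeled examples to check how well the learned function generalizes
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

model = DecisionTreeClassifier(random_state=0)
model.fit(X_train, y_train)          # infer the input -> output function from labeled pairs
predictions = model.predict(X_test)  # apply it to inputs it has not seen
accuracy = model.score(X_test, y_test)
print(f"test accuracy: {accuracy:.2f}")
```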

Unsupervised Learning

Unsupervised learning is a type of machine learning that looks for previously undetected patterns in a data set with no pre-existing labels and with a minimum of human supervision.

- Wikipedia

- data are not labeled

- usually involves clustering

- the model learns to group data based on how they cluster

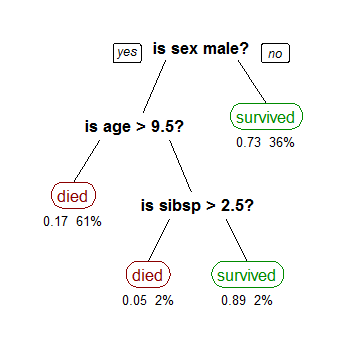
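A minimal clustering sketch, assuming scikit-learn and reusing the Iris measurements with the labels thrown away. KMeans with 3 clusters is just one common choice; the number of clusters is something you pick, not something the data hands you.

```python
from sklearn.cluster import KMeans
from sklearn.datasets import load_iris

# Unlabeled data: keep the measurements, deliberately ignore the species labels
X, _ = load_iris(return_X_y=True)

# Group the rows into 3 clusters purely by how close they are to each other
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0)
cluster_ids = kmeans.fit_predict(X)

print(cluster_ids[:10])               # cluster id assigned to the first 10 rows
print(kmeans.cluster_centers_.shape)  # (3, 4): one centre per cluster, one value per feature
```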

Decision Trees

Decision Tree

- supervised learning

- predictive model

- represents decisions and decision making

- classification trees vs regression trees

- no need to create dummy variables

- built-in feature selection

- performs well with large datasets, but is not robust: small changes in the training data can produce a very different tree

- overfits easily
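A quick way to see "overfits easily" for yourself is to compare a tree that is allowed to grow until every leaf is pure with one capped at a small depth. This sketch assumes scikit-learn and its bundled breast-cancer dataset; `max_depth=3` is an arbitrary illustrative cap, not a recommended value.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

results = {}
for depth in (None, 3):  # None: grow until leaves are pure; 3: a shallow, restricted tree
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    tree.fit(X_train, y_train)
    results[depth] = (tree.score(X_train, y_train), tree.score(X_test, y_test))
    train_acc, test_acc = results[depth]
    print(f"max_depth={depth}: train accuracy={train_acc:.3f}, test accuracy={test_acc:.3f}")
```

The unrestricted tree scores 1.0 on the rows it was trained on because it keeps splitting until it has memorised them; the gap between its training and test accuracy is the overfitting. Limiting depth (or setting `min_samples_leaf`) makes the tree simpler and usually narrows that gap.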

Let's have a look at Decision Trees in scikit-learn
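A minimal sketch of the scikit-learn API, assuming the Iris dataset again. `export_text` prints the learned rules so you can read the "decisions", and `feature_importances_` shows the built-in feature selection: features never used in a split get an importance of 0. `max_depth=2` just keeps the printout short.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0)
clf.fit(iris.data, iris.target)

# The learned if/else rules, one line per split or leaf
rules = export_text(clf, feature_names=list(iris.feature_names))
print(rules)

# How much each feature contributed to the splits; unused features score 0
for name, importance in zip(iris.feature_names, clf.feature_importances_):
    print(f"{name}: {importance:.2f}")
```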

Decision Trees

Finding which feature(s) help separate one outcome from the others (making "decisions")
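One common way a tree decides which feature "helps separate" the outcomes is to try candidate splits and keep the one that leaves each side as pure as possible. Below is a simplified, from-scratch sketch using Gini impurity (the default `criterion` of scikit-learn's `DecisionTreeClassifier`). The function names and the toy data are made up for illustration, and real implementations are far more efficient.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0 when all labels are the same, higher when they are mixed."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows, labels):
    """Try every feature and every observed value as a threshold; return the
    (feature index, threshold, weighted impurity) of the purest split found."""
    best = (None, None, gini(labels))  # start from "no split"
    n = len(labels)
    for feature in range(len(rows[0])):
        for threshold in sorted({row[feature] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
            if not left or not right:
                continue  # a split must put at least one row on each side
            impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
            if impurity < best[2]:
                best = (feature, threshold, impurity)
    return best


# Toy data: feature 0 is noise, feature 1 separates the two outcomes cleanly
rows = [[1, 20], [7, 22], [3, 25], [6, 60], [2, 65], [5, 70]]
labels = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(rows, labels))  # (1, 25, 0.0)
```

A full tree simply repeats this search on each side of the chosen split until the groups are pure or a stopping rule (such as a maximum depth) kicks in.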

Sometimes a combination of decision trees gives a statistically better picture of the "decision" and the outcome:

- Random forests: made up of a large number of decision trees.

- Tree boosting: build trees one at a time, with each new tree helping to correct the errors of the previously trained trees.
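To see what "each new tree helps correct the errors" means mechanically, here is a from-scratch sketch of gradient boosting for regression with squared error, where the errors to correct are simply the residuals. It assumes a single numeric feature and uses one-split trees ("stumps"); `fit_stump`, `boost`, the learning rate of 0.3 and the toy data are illustrative choices, not taken from any library.

```python
def fit_stump(xs, targets):
    """Fit a one-split regression tree on a single feature: choose the threshold
    with the lowest squared error and predict the mean target on each side."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the largest value so both sides are non-empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = sum((t - left_mean) ** 2 for t in left) + sum((t - right_mean) ** 2 for t in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def boost(xs, ys, n_trees=50, learning_rate=0.3):
    """Build stumps one at a time, each fit to the residuals of the ensemble so far."""
    base = sum(ys) / len(ys)  # start from a constant prediction: the mean target
    trees = []

    def predict(x):
        # shrink every stump's contribution by the learning rate
        return base + learning_rate * sum(tree(x) for tree in trees)

    for _ in range(n_trees):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]  # what the ensemble still gets wrong
        trees.append(fit_stump(xs, residuals))
    return predict


def mse(predict, xs, ys):
    return sum((y - predict(x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.9, 5.2, 5.0]
model = boost(xs, ys)
baseline_mse = mse(lambda x: sum(ys) / len(ys), xs, ys)
boosted_mse = mse(model, xs, ys)
print(f"constant model MSE: {baseline_mse:.3f}, boosted MSE: {boosted_mse:.3f}")
```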

Random Forest

- Generate a very large number of trees, each trained on a different random sample of the training data and features, and average out their predictions

- Combining many trees makes the model more robust and reduces overfitting

- It takes longer to train, and its decision making is harder to interpret
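The recipe above can be sketched by hand: train many trees, each on a bootstrap sample of the rows (drawn with replacement) and limited to a random subset of features at each split, then let them vote. This is a simplified illustration assuming scikit-learn, NumPy and the bundled breast-cancer data, a binary 0/1 problem, which is what makes averaging the votes valid here. scikit-learn's own `RandomForestClassifier` averages predicted class probabilities rather than hard votes, so this is a close cousin, not an exact copy.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)
rng = np.random.default_rng(0)

trees = []
for i in range(100):
    # Bootstrap: sample training rows with replacement, so each tree sees different data
    idx = rng.integers(0, len(X_train), size=len(X_train))
    # max_features="sqrt": each split only considers a random subset of the features
    tree = DecisionTreeClassifier(max_features="sqrt", random_state=i)
    trees.append(tree.fit(X_train[idx], y_train[idx]))

# Combine the trees: average their 0/1 votes and take the majority
votes = np.mean([tree.predict(X_test) for tree in trees], axis=0)
forest_pred = (votes >= 0.5).astype(int)
forest_accuracy = np.mean(forest_pred == y_test)
print(f"forest accuracy: {forest_accuracy:.3f}")
```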

Let's have a look at Random Forest in scikit-learn

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html
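A minimal usage sketch of the `RandomForestClassifier` linked above, assuming the breast-cancer dataset; the parameter values are illustrative rather than tuned. Because the trees are independent of each other, `n_jobs=-1` lets scikit-learn build them in parallel on all available cores.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

X, y = load_breast_cancer(return_X_y=True)

forest = RandomForestClassifier(
    n_estimators=100,     # number of trees to grow
    max_features="sqrt",  # random subset of features considered at each split
    bootstrap=True,       # each tree is trained on a bootstrap sample of the rows
    n_jobs=-1,            # trees are independent, so they can be trained in parallel
    random_state=0,
)

# 5-fold cross-validation: train on 4/5 of the data, score on the remaining 1/5, five times
scores = cross_val_score(forest, X, y, cv=5)
print(f"mean CV accuracy: {scores.mean():.3f}")
```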

Gradient Boosting Trees

- Can be faster than Random Forest, because it tunes how each tree is built and combines the trees as it goes

- But it is easily misled by noise in the data and can reach a false optimum (a local minimum)

Let's have a look at Gradient Boosting in scikit-learn

https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.GradientBoostingClassifier.html
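A minimal sketch of `GradientBoostingClassifier`, again assuming the breast-cancer data. Since the trees are built one after another, each correcting the last, boosting can end up chasing noise in the training data. Shrinkage (`learning_rate`), row subsampling (`subsample`) and early stopping on a held-out slice (`validation_fraction` with `n_iter_no_change`) are common ways to rein that in; they reduce the risk rather than remove it. The values below are illustrative, not tuned.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

gbt = GradientBoostingClassifier(
    n_estimators=500,         # upper bound on the number of trees built one after another
    learning_rate=0.1,        # shrink each tree's contribution
    max_depth=3,              # keep individual trees small
    subsample=0.8,            # each tree sees a random 80% of the rows
    validation_fraction=0.1,  # hold out 10% of the training data...
    n_iter_no_change=10,      # ...and stop once its score stops improving for 10 rounds
    random_state=0,
)
gbt.fit(X_train, y_train)
print("trees actually built:", gbt.n_estimators_)
print(f"test accuracy: {gbt.score(X_test, y_test):.3f}")
```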

Next week:

Boosting Algorithms


Get the notebooks: https://github.com/Cheukting/legend_data