Level Up Your HomeLab Without Kubernetes

About Me

- UVU Computer Science alumnus

- Network Security Engineer

- Homelab hobbyist

- A bunch of spare equipment lying around

- Too much free time

Follow Along!

Contact:

saintcon@cronocide.com

@cronocide

Daniel Dayley

Overview

- Some history and impetus for this talk

- TrueNAS quick setup on Proxmox

- Build consistent VMs with Packer

- Keep track of your growing service collection with Consul

- Codify your workloads with Nomad

- Secure your secrets with Vault and 1Password

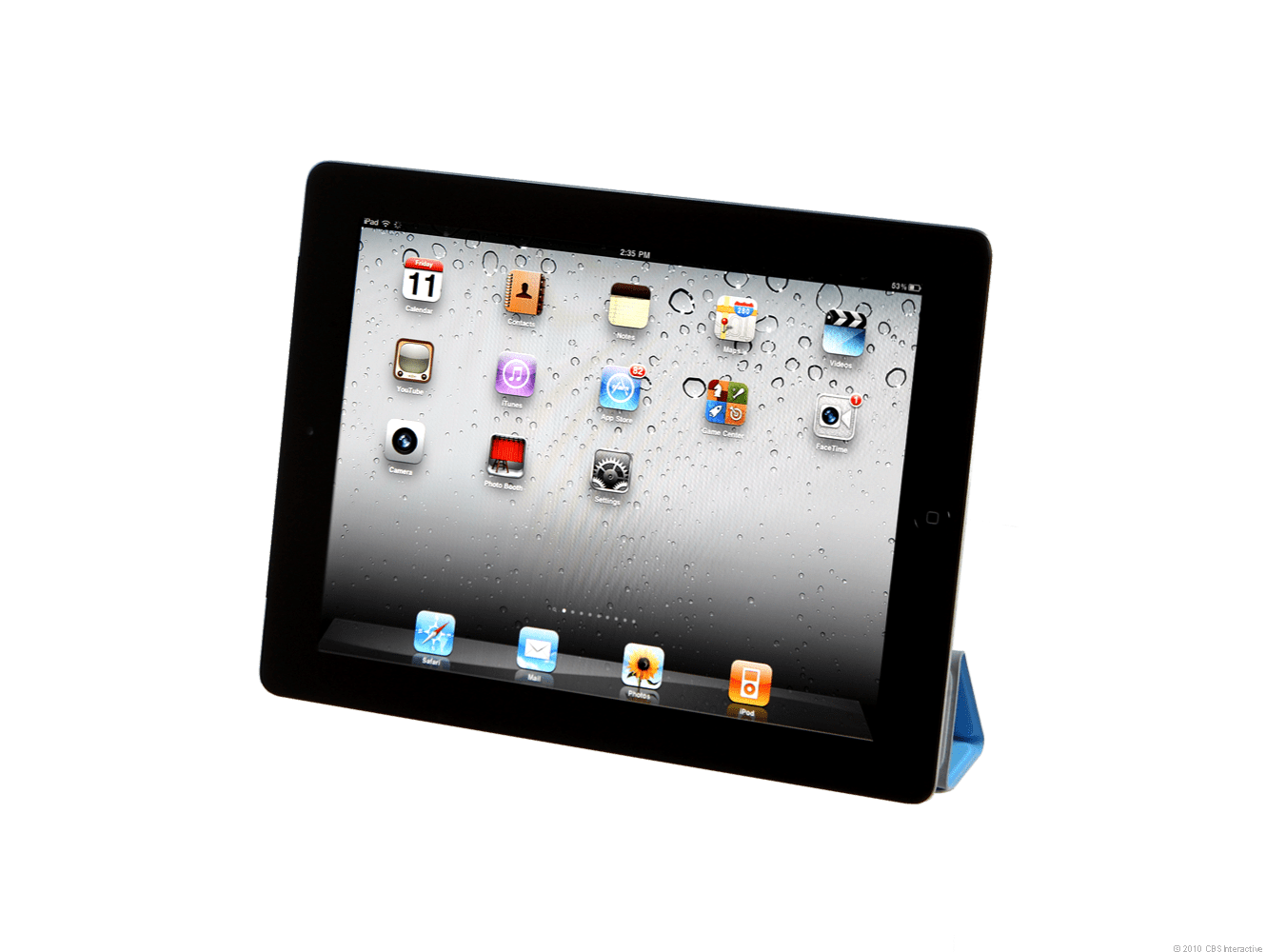

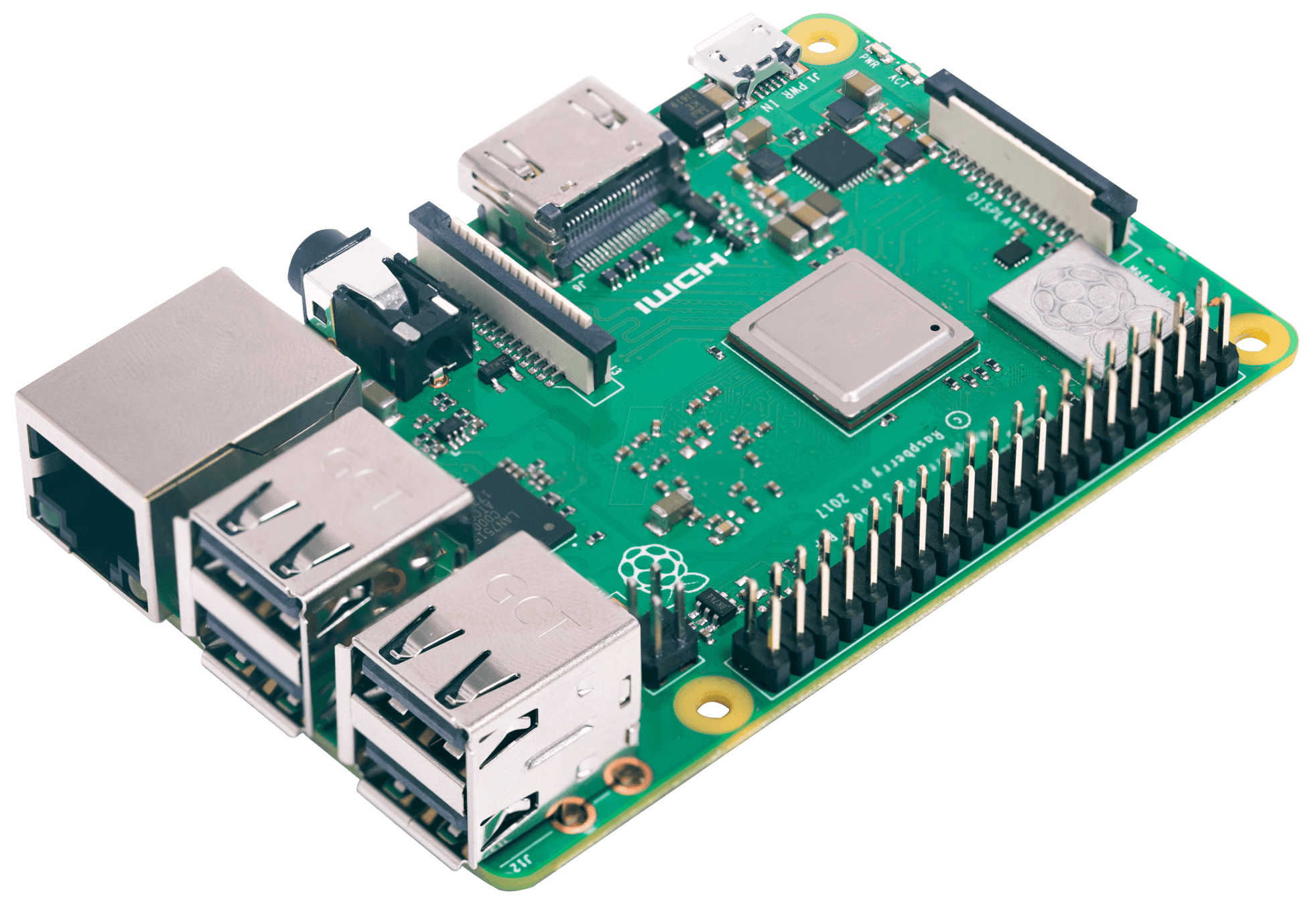

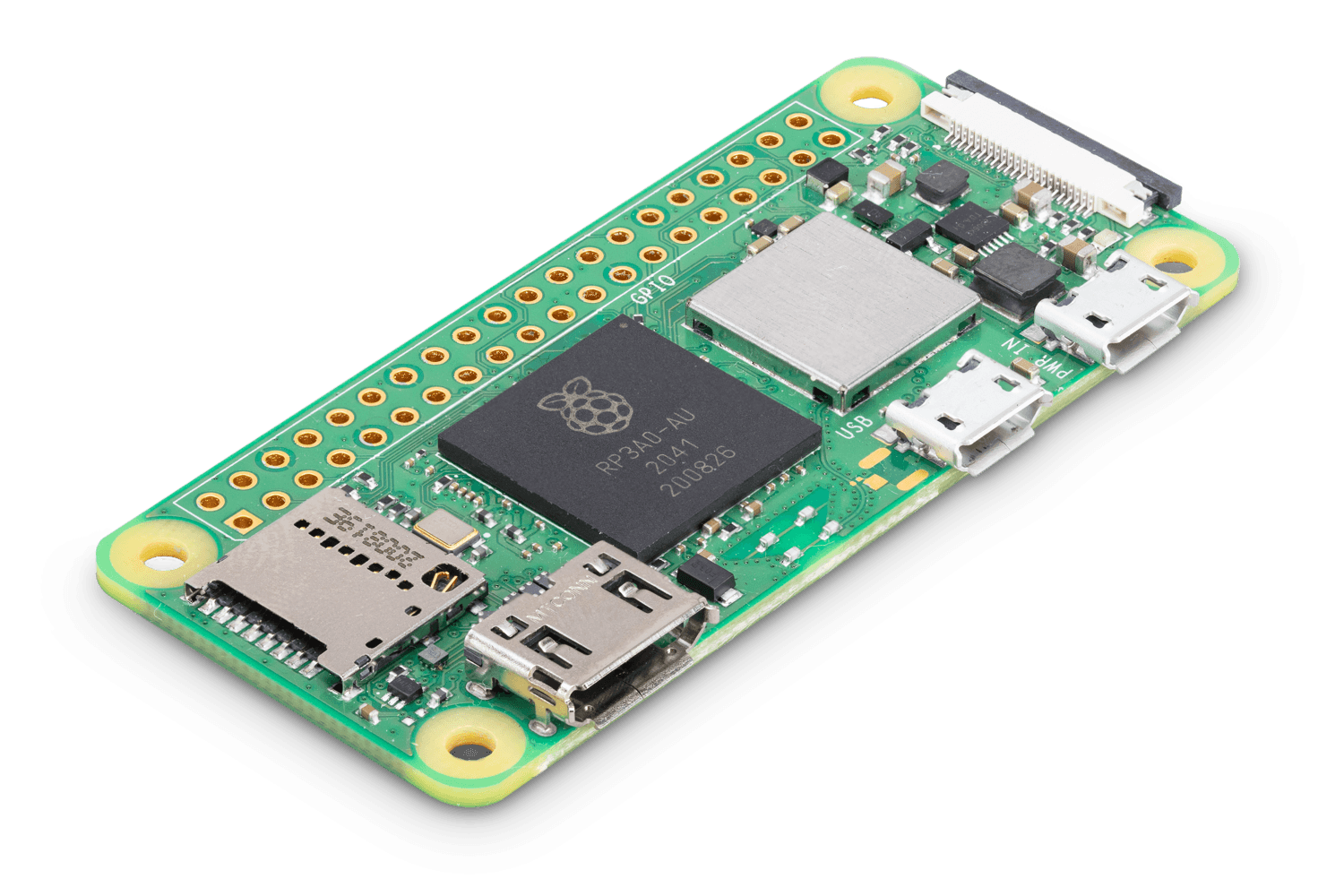

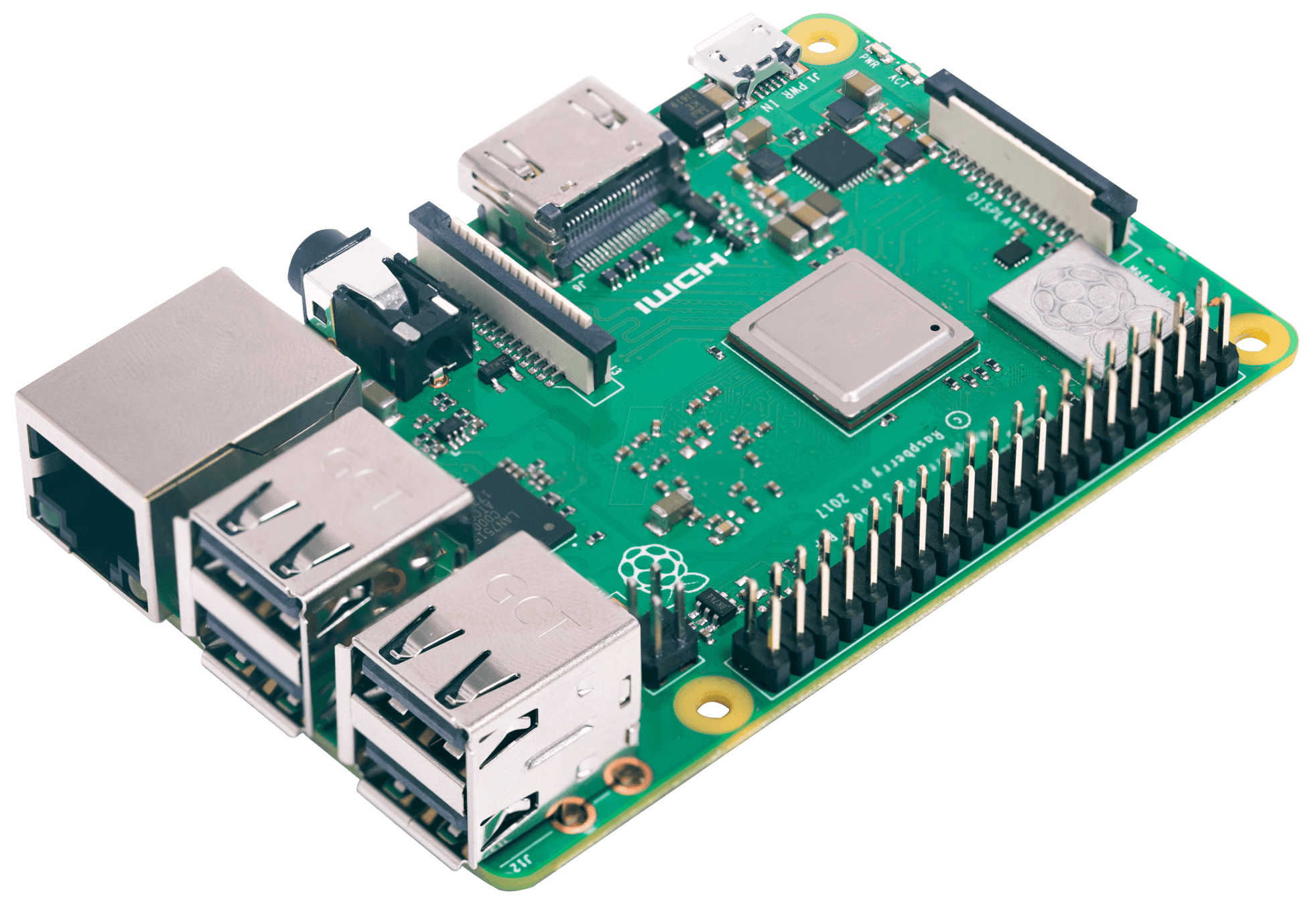

My Homelab Experience

Problems

- Storage & Backups

- Reproducibility

- Velocity

- Secrets and Configuration Management

Kubernetes

- High availability and redundancy

- Best-in-class container orchestration

- Highly declarative IaC

- Many out-of-the-box bootstrap solutions

- Excellent SaaS quality of life

Kubernetes

- Not every workload is a container

- Bootstrapping still requires other tools

- Homelab components are loosely coupled

- Learning components individually is easier than learning the platform all at once

Storage & Backups

- Centralized

- Resilient

- Easy access control

- Easy backups

- Easy disaster recovery

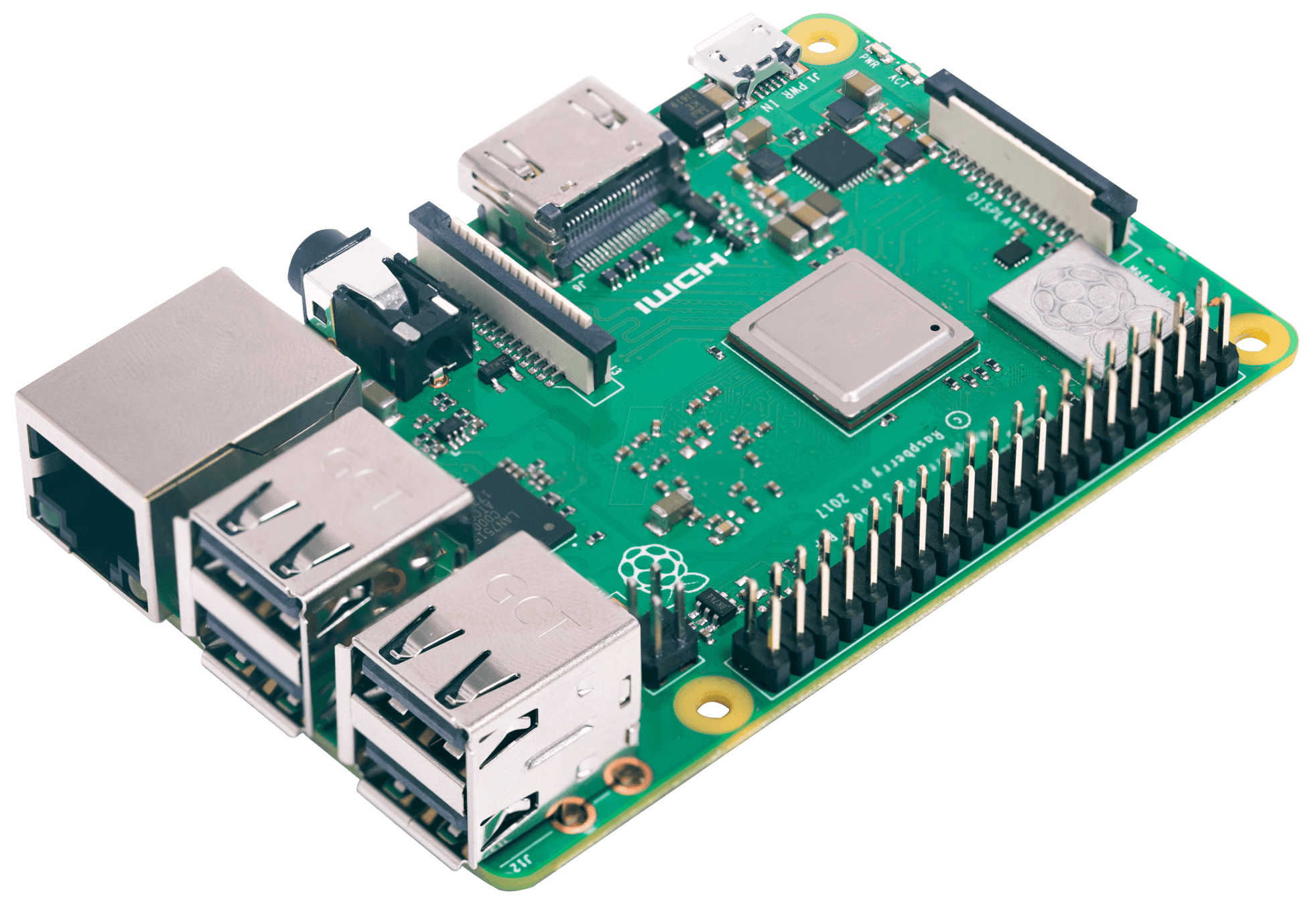

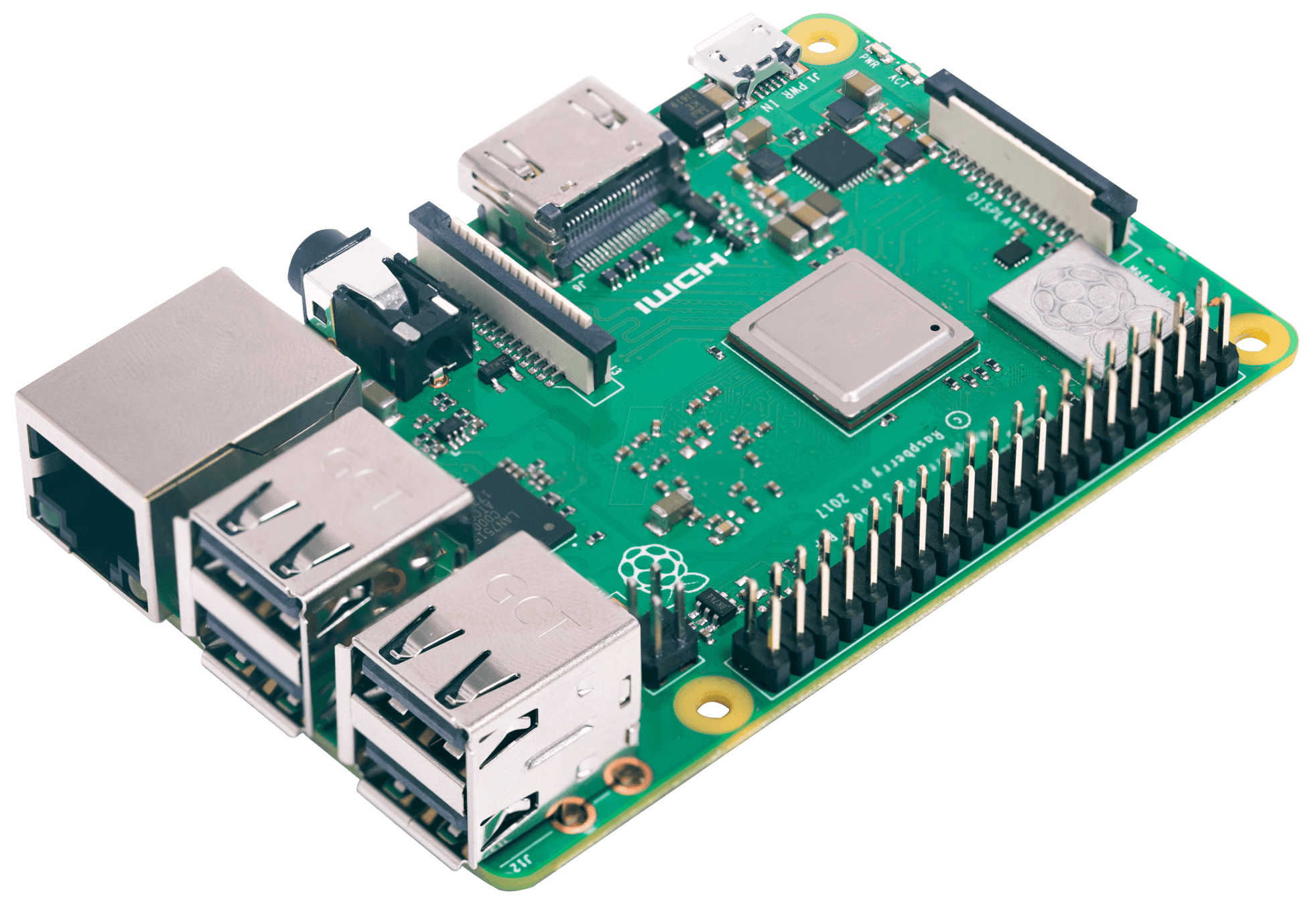

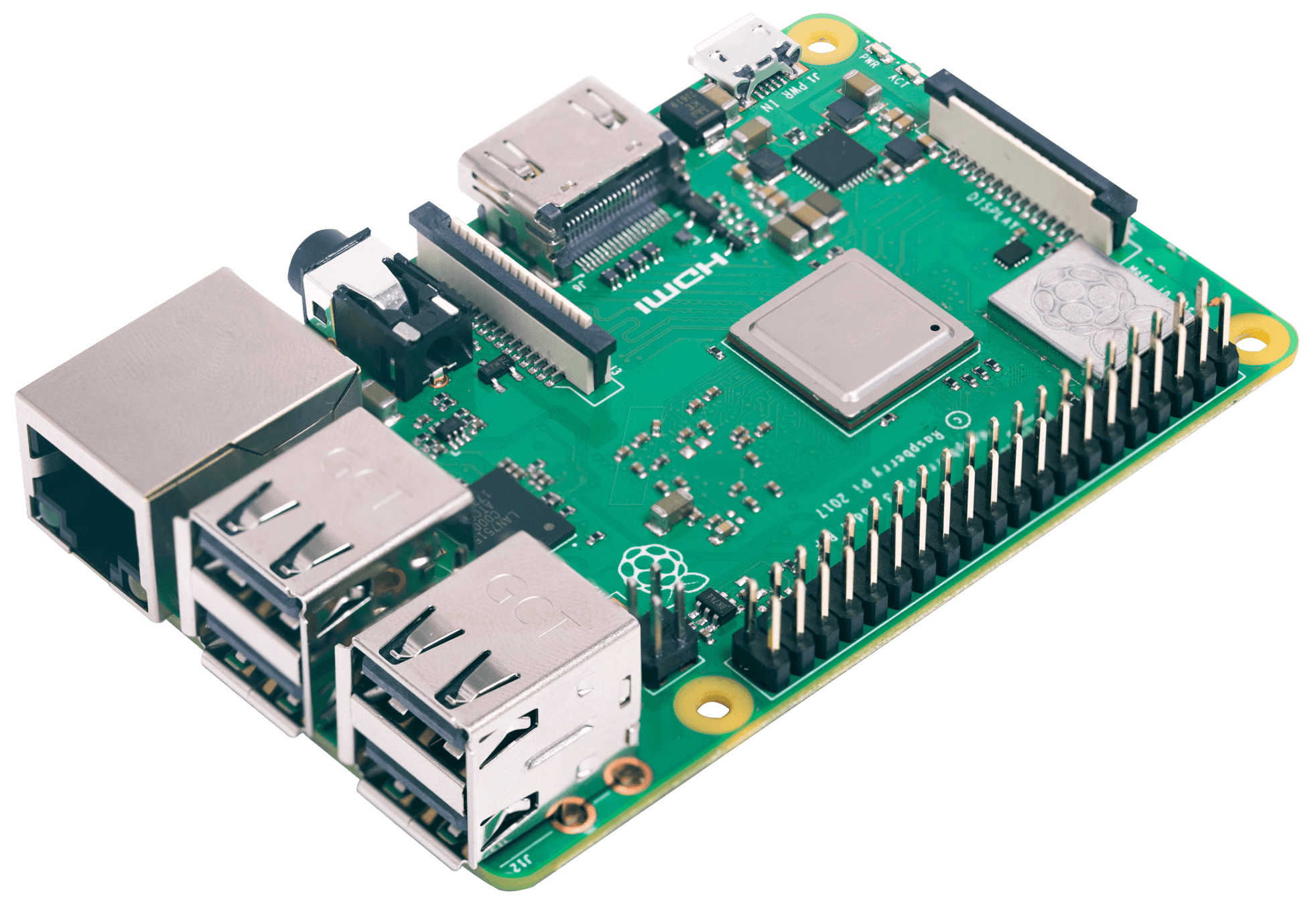

Reproducibility & Velocity

- Homelab-as-Code (HaC)

- Plug-N-Play hardware expansion

- Automatic hardware/software alignment

- Easy to tear down and rebuild

- Documentable

Secrets & Configuration Management

- Defense In Depth

- Consolidated

- Access-controlled

How-To Demos

- Storage & Backups

- Reproducibility

- TrueNAS quick setup on Proxmox

- Build consistent VMs with Packer

- Keep track of your growing service collection with Consul

- Codify your workloads with Nomad

- Secure your secrets with Vault and 1Password

Demo

Code

TrueNAS Setup

NFS Setup

iSCSI Setup

Packer

Make VMs Automatically!

Packer on Proxmox

HCL

HashiCorp Configuration Language: "YAML that tastes like JSON!"

```hcl
option = "value"

# HCL supports comments on their own line
service "named service" {
  arguments = [
    "list_item_1", "list_item_2"
  ]
  boolean_key = true
  another_key = "String"
}

another_app_specific_key = "value"
configurable_via_var     = "${variable}"

/* Multi-line comments,
   like this one,
   are also available. */
```

Packer Repo

📁 hashistack_demo

┗ 📁 packerfiles

┗ proxmox.pkr.hcl

┗ 📁 scripts

┗ install_apps.sh

┗ setup_network.sh

┗ 📁 nomad

┗ 📁 consul

┗ 📁 vault

...etc

Packer files, read by Packer

Shell scripts sent to the VM and run

Folders sent to the VM containing anything the scripts need

Packerfiles

Build

```hcl
build {
  sources = ["source.proxmox-iso.saintcon-packer-demo"]

  provisioner "file" {
    destination = "/tmp"
    source      = "consul"
  }

  provisioner "file" {
    destination = "/tmp"
    source      = "nomad"
  }

  provisioner "file" {
    destination = "/tmp"
    source      = "vault"
  }

  provisioner "file" {
    destination = "/tmp"
    source      = "keepalived"
  }

  provisioner "file" {
    destination = "/tmp"
    source      = "netplan"
  }

  provisioner "file" {
    destination = "/tmp"
    source      = "firstrun"
  }

  provisioner "shell" {
    environment_vars = [
      "ROLE=${var.ROLE}",
      "NFS_MOUNTS=${var.NFS_MOUNTS}",
      "USERNAME=${var.USERNAME}",
      "USER_KEY=${var.USER_KEY}",
      "SETUP_USER=${var.SETUP_USER}"
    ]
    execute_command   = "echo '${var.SETUP_USER}' | {{ .Vars }} sudo --stdin --preserve-env sh -eux '{{ .Path }}'"
    expect_disconnect = true
    scripts = [
      "scripts/setup_user.sh",
      "scripts/install_apps.sh",
      "scripts/install_vault.sh",
      "scripts/configure_network.sh",
      "scripts/cleanup.sh"
    ]
  }
}
```

Source

```hcl
source "proxmox-iso" "saintcon-packer-demo" {
  bios = "ovmf"
  boot_command = [
    "<wait><shift><wait><shift><wait><shift><shift><shift><wait><shift>",
    "<wait>e<down><down><down><down><left><bs><bs><bs> ",
    "autoinstall ds='nocloud;seedfrom=http://{{ .HTTPIP }}:{{ .HTTPPort }}/' ---<wait>",
    "<f10><wait>"
  ]
  boot_wait    = "10s"
  communicator = "ssh"
  sockets      = "1"
  cores        = "4"
  cpu_type     = "host"

  efi_config {
    efi_storage_pool = "local-zfs"
  }

  boot_iso {
    iso_file     = "local:iso/ubuntu-24.04.1-live-server-amd64.iso"
    iso_checksum = "e240e4b801f7bb68c20d1356b60968ad0c33a41d00d828e74ceb3364a0317be9"
    type         = "ide"
    unmount      = true
  }

  http_directory           = "./cloudinit"
  insecure_skip_tls_verify = true
  memory                   = "16384"
  node                     = "saintcon-demo"
  proxmox_url              = "https://10.10.64.205:8006/api2/json"
  qemu_agent               = true
  ssh_username             = "${var.SETUP_USER}"
  ssh_password             = "${var.SETUP_USER}"
  ssh_timeout              = "30m"
  ssh_read_write_timeout   = "30m"
  tags                     = "${var.ROLE}"
  template_description     = "# ${var.ROLE} VM\n### Built by Packer\n\n| | |\n|--------------|-----------|\n| **User** | ${var.USERNAME}"
  template_name            = "${var.ROLE}-${local.timestamp}"
  username                 = "packer@pam!build"
  token                    = "c3f30982-42c2-4dae-a1ca-ef788eba25bb"
  vm_name                  = "${var.ROLE}-${local.timestamp}"

  network_adapters {
    bridge   = "vmbr0"
    firewall = true
    model    = "e1000"
  }

  disks {
    disk_size    = "32G"
    format       = "raw"
    storage_pool = "local-zfs"
    type         = "ide"
  }
}
```

Run Packer
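The Packerfile references `var.ROLE`, `var.NFS_MOUNTS`, `var.USERNAME`, `var.USER_KEY`, `var.SETUP_USER` and `local.timestamp`, but their declarations are not on the slides. A minimal sketch of what they might look like, assuming every variable is a plain string. The plugin version constraint is an assumed minimum, and `PROXMOX_TOKEN` is a hypothetical addition that would let `token = var.PROXMOX_TOKEN` replace the hardcoded token.

```hcl
packer {
  required_plugins {
    proxmox = {
      # Assumed minimum version for the boot_iso block; verify against the plugin changelog
      version = ">= 1.2.0"
      source  = "github.com/hashicorp/proxmox"
    }
  }
}

# Assumption: all build inputs are plain strings supplied at build time
variable "ROLE" {
  type        = string
  description = "Role baked into the template (for example consul, nomad or vault)"
}

variable "NFS_MOUNTS" {
  type    = string
  default = ""
}

variable "USERNAME" {
  type = string
}

variable "USER_KEY" {
  type        = string
  description = "Assumed to be the public SSH key for USERNAME"
}

# Used as both the SSH username and the sudo password, so redact it from Packer output
variable "SETUP_USER" {
  type      = string
  sensitive = true
}

# Hypothetical: pass the Proxmox API token in at build time instead of hardcoding it
variable "PROXMOX_TOKEN" {
  type      = string
  sensitive = true
}

# Compact UTC timestamp (e.g. 20241107153000) used in template_name and vm_name
locals {
  timestamp = regex_replace(timestamp(), "[- TZ:]", "")
}
```

From the directory containing the Packerfile, `packer init .` installs the plugin, and a build could then be started with something like `packer build -var-file=nomad.pkrvars.hcl .`, where `nomad.pkrvars.hcl` is a hypothetical file holding one role's values.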

Consul

Service Catalog

- Plex (10.10.64.10:32400)

- Home Assistant (10.10.64.10:8123)

- UniFi (10.10.64.1:443)

- Proxmox (10.10.64.205:8006)

Service Catalog

- Plex: plex.service.consul

- Home Assistant: ha.service.consul

- UniFi: unifi.service.consul

- Proxmox: proxmox.service.consul

```console
$> dig -t srv proxmox.service.consul
proxmox.service.consul. 0 IN SRV 1 1 8006 0a0a0b2e.addr.saintcon.consul.
```

Service Files

📁 /etc/consul.d/

```hcl
# proxmox.hcl
service {
  name    = "proxmox"
  tags    = ["management", "https"]
  address = "10.10.64.205"
  port    = 8006

  check {
    interval                 = "1m"
    failures_before_critical = 1
    http                     = "https://10.10.64.205:8006"
    tls_skip_verify          = true
  }
}
```

```hcl
# truenas.hcl
service {
  name    = "truenas"
  tags    = ["management", "https"]
  address = "10.10.64.53"
  port    = 443

  check {
    interval                 = "1m"
    failures_before_critical = 1
    http                     = "https://10.10.64.53:443"
    tls_skip_verify          = true
  }
}
```
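Not every service in the catalog exposes an HTTPS endpoint worth probing. As a sketch, the Plex entry from the service catalog could use a plain TCP check instead; the file name `plex.hcl`, the `media` tag and the 10-second timeout are assumptions. After adding a file, `consul validate /etc/consul.d/` checks the configuration and `consul reload` picks it up.

```hcl
# plex.hcl (hypothetical), placed alongside proxmox.hcl and truenas.hcl in /etc/consul.d/
service {
  name    = "plex"
  tags    = ["media"]
  address = "10.10.64.10"
  port    = 32400

  check {
    # A TCP check only verifies that the port accepts connections
    tcp      = "10.10.64.10:32400"
    interval = "1m"
    timeout  = "10s"
  }
}
```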

Consul Template

plex.service.consul

ha.service.consul

unifi.service.consul

proxmox.service.consul

+

template.txt

```text
{{range services}}{{range $service:=service .Name}}
Service {{.Name}} is located at {{.Address}}:{{.Port}}{{end}}{{end}}
```

=

```text
Service plex is located at 10.10.64.10:32400
Service ha is located at 10.10.64.10:8123
Service unifi is located at 10.10.64.1:443
Service proxmox is located at 10.10.64.205:8006
```
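To keep a rendered copy of `template.txt` up to date, `consul-template` can read its settings from a configuration file. A minimal sketch, assuming a local Consul agent on the default HTTP port 8500; the config file name and the output path are hypothetical.

```hcl
# consul-template.hcl (hypothetical file name)
consul {
  # Assumes a Consul agent listening locally on the default HTTP port
  address = "127.0.0.1:8500"
}

template {
  source      = "template.txt"
  # Hypothetical output path; re-rendered whenever the catalog changes
  destination = "/tmp/services.txt"
}
```

Running `consul-template -config consul-template.hcl` keeps the output current as services come and go; adding `-once` renders it a single time and exits.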

Consul Cluster

(Diagram: Consul and Nomad, with Nomad running several jobs)

Nomad

Drivers

- Docker

- containerd

- podman

- qemu

- libvirt

- exec

- raw_exec

- ...and more!

Schedulers

- service

- batch

- system

- sysbatch

Nomad Jobs

Constraints

- Architecture

- Cores

- RAM

- Hardware

- Networking

- Software Versions

- Custom metadata
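Constraints are written as `constraint` blocks that compare a node attribute or metadata value against a target. A sketch covering several of the categories above while reusing the hello-world task; the job name, the `storage` metadata key and the specific values are hypothetical and must match what your Nomad clients actually report (`nomad node status -verbose <node-id>` lists a node's attributes and metadata).

```hcl
job "constrained-hello-world" {
  type = "batch"

  # Architecture: only place this job on 64-bit x86 clients
  constraint {
    attribute = "${attr.cpu.arch}"
    value     = "amd64"
  }

  group "primary" {
    # Cores: require at least 4 CPU cores
    constraint {
      attribute = "${attr.cpu.numcores}"
      operator  = ">="
      value     = "4"
    }

    # Software versions: require a minimum Nomad client version
    constraint {
      attribute = "${attr.nomad.version}"
      operator  = "version"
      value     = ">= 1.7.0"
    }

    # Custom metadata: hypothetical "storage" key set in the client's meta block
    constraint {
      attribute = "${meta.storage}"
      value     = "iscsi"
    }

    task "hello-world" {
      driver = "exec"

      config {
        command = "echo"
        args    = ["Hello World!"]
      }

      # RAM is requested here; Nomad only places the task where this much memory is free
      resources {
        memory = 128
      }
    }
  }
}
```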

- exec

- batch

Schedule

- RAM

Constraints

Drivers

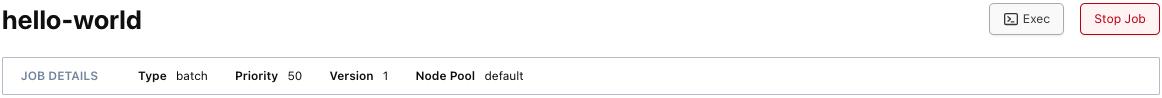

# hello_world.hcl

job "hello-world" {

type = "batch"

group "primary" {

task "hello-world" {

driver = "exec"

config {

command = "echo"

args = ["Hello World!"]

}

resources {

memory = 128

}

}

}

}Nomad Jobs
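The hello-world job uses the `batch` scheduler. A long-running `service` job can also register itself in Consul, so it appears in the service catalog next to Proxmox and TrueNAS. A sketch, assuming Nomad is integrated with Consul and the Docker driver is available on a client; `traefik/whoami` is only a stand-in web server image.

```hcl
job "whoami" {
  type = "service"

  group "web" {
    count = 1

    network {
      # Dynamic host port mapped to port 80 inside the container
      port "http" {
        to = 80
      }
    }

    # Registered with Consul (the default service provider), e.g. whoami.service.consul
    service {
      name = "whoami"
      port = "http"

      check {
        type     = "http"
        path     = "/"
        interval = "30s"
        timeout  = "2s"
      }
    }

    task "whoami" {
      driver = "docker"

      config {
        image = "traefik/whoami:latest"
        ports = ["http"]
      }

      resources {
        memory = 64
      }
    }
  }
}
```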

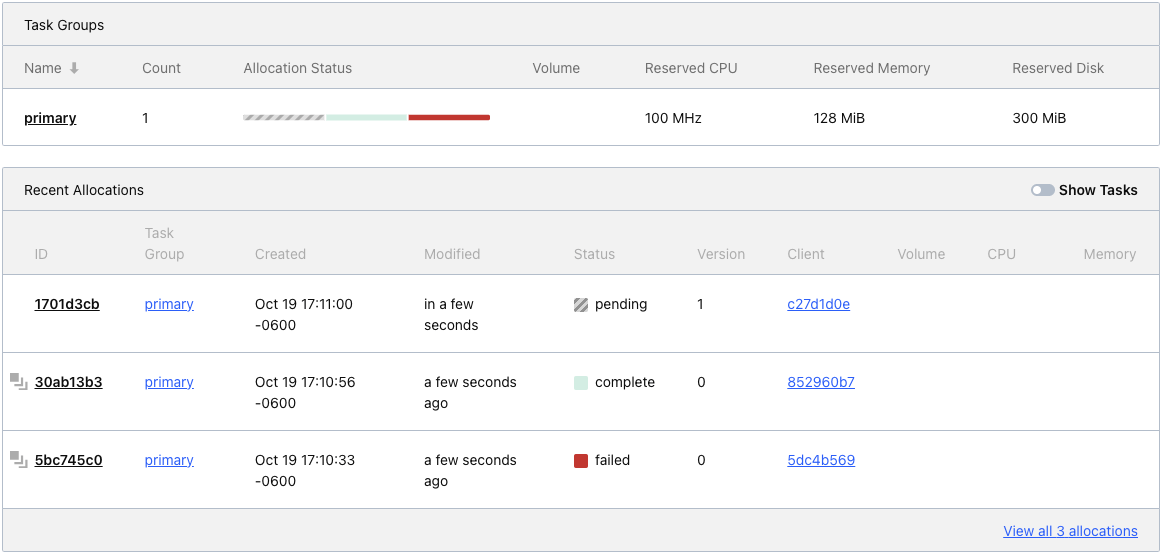

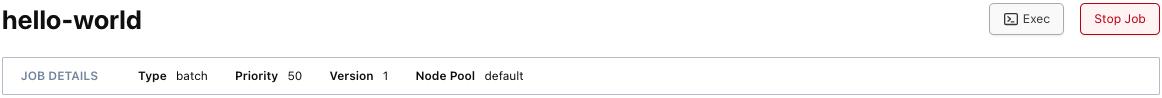

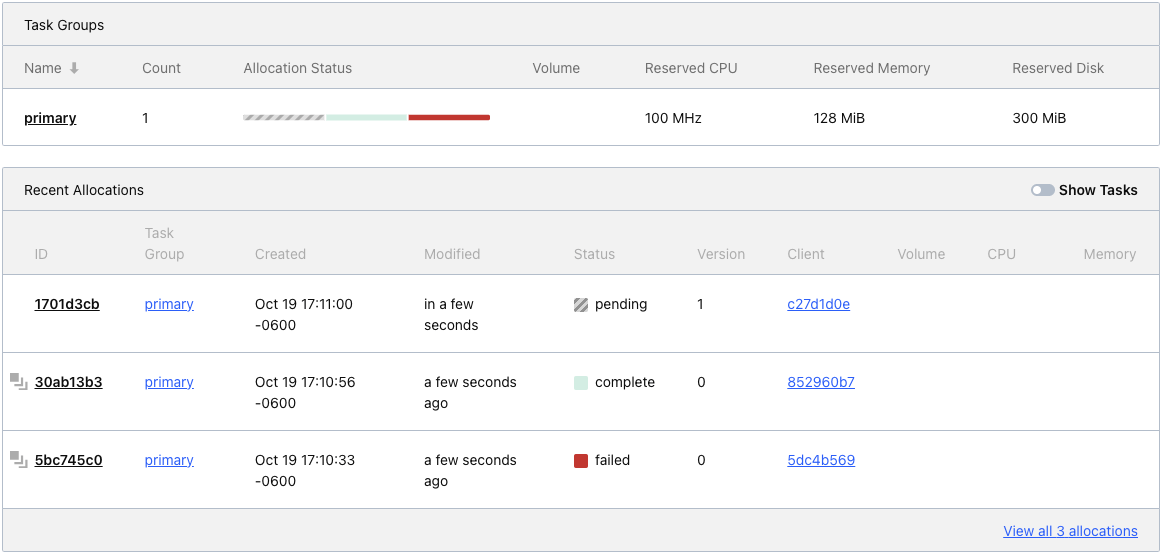

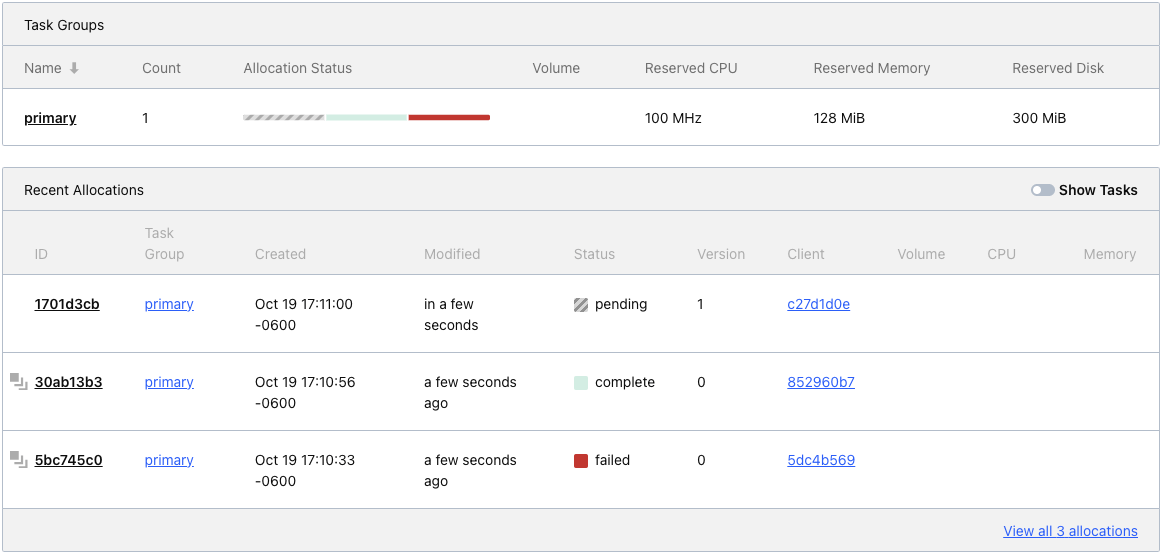

Job Allocations

(Screenshot callouts: a new run, a run that is done, a run that failed, and where the job was run)

Nomad Cluster

Vault

Vault Access

(Diagram: Auth Method, Token, Roles, Policies, Secret Engine)

📁 hashistack_demo

┗ 📁 vault

  ┗ vault-policy-for-nomad-jobs.hcl

  ┗ vault-role-for-nomad-jobs.hcl

  ┗ vault-auth-method-jwt-nomad.json

  ┗ vault.hcl
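The contents of `vault-policy-for-nomad-jobs.hcl` are not shown. The bootstrap script replaces an `AUTH_METHOD_ACCESSOR` placeholder in it, which suggests a templated policy. A minimal sketch, assuming the `nomad-jobs` role maps the `nomad_namespace` and `nomad_job_id` claims into alias metadata, and granting read access to the 1Password items used by the hello-world job.

```hcl
# vault-policy-for-nomad-jobs.hcl (sketch)
# AUTH_METHOD_ACCESSOR is replaced with the jwt-nomad accessor by the bootstrap script.

# Each job may read KV v2 secrets under kv/data/<namespace>/<job id>/
path "kv/data/{{identity.entity.aliases.AUTH_METHOD_ACCESSOR.metadata.nomad_namespace}}/{{identity.entity.aliases.AUTH_METHOD_ACCESSOR.metadata.nomad_job_id}}/*" {
  capabilities = ["read"]
}

# Read-only access to items in the "saintcon" 1Password vault through the op-connect plugin
path "op/vaults/saintcon/items/*" {
  capabilities = ["read"]
}
```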

Bootstrapping

#!/bin/bash

# Suppress vault address and TLS warnings by setting the VAULT_ADDR and VAULT_CACERT

export VAULT_ADDR="https://127.0.0.1:8200"

export VAULT_CACERT="/opt/vault/tls/tls.pem"

# Initialize Vault with a file backend. This is usually fine as long as you trust the server vault is running on.

init_result=$(vault operator init -n 1 -t 1 -ca-cert /opt/vault/tls/tls.pem -format=json)

# Get the unseal key (needed to unseal the vault for use)

unseal_key=$(echo "$init_result" | jq -r '.unseal_keys_b64[0]')

[ -z "$unseal_key"] && echo "Unable to initizlize vault." && exit 1

# Get the root login token (needed to log in and make changes to vault)

root_token=$(echo "$init_result" | jq -r '.root_token')

# Unseal the vault

echo -e "UNSEAL KEY IS \033[1;31;44m$unseal_key\033[00m"

vault operator unseal "$unseal_key"

# Write the unseal token to disk for auto-unsealing

echo "UNSEAL_KEY=$unseal_key" > /etc/default/vault

echo "VAULT_ADDR=https://127.0.0.1:8200" >> /etc/default/vault

echo "VAULT_CACERT=/opt/vault/tls/tls.pem" >> /etc/default/vault

# Login as root

vault login "$root_token"

# Install and configure the 1Password plugin for Vault

op_connect_shasum=$(shasum -a 256 /etc/vault.d/plugins/op-connect | cut -d " " -f1)

vault write sys/plugins/catalog/secret/op-connect sha_256="$op_connect_shasum" command="op-connect"

vault secrets enable --plugin-name='op-connect' --path="op" plugin

# Write the 1Password API Token to Vault

vault write op/config @/etc/vault.d/opconfig.json

# Enable jwt authentication for Nomad

vault auth enable -path 'jwt-nomad' 'jwt'

# Enable the traditional KV store for vault

vault secrets enable -version '2' 'kv'

# Write our config for nomad-jwt authentication

vault write auth/jwt-nomad/config '@/etc/vault.d/vault-auth-method-jwt-nomad.json'

# Write our config for Nomad jobs

vault write auth/jwt-nomad/role/nomad-jobs '@/etc/vault.d/vault-role-for-nomad-jobs.json'

# Get our Nomad auth method's unique ID to create an access policy

auth_methods=$(vault auth list -format=json)

accessor=$(echo "$auth_methods" | jq -r '."jwt-nomad/".accessor')

# Insert our accessor into our access policy

sed -i "s#AUTH_METHOD_ACCESSOR#$accessor#g" /etc/vault.d/vault-policy-for-nomad-jobs.hcl

# Write our policy to vault

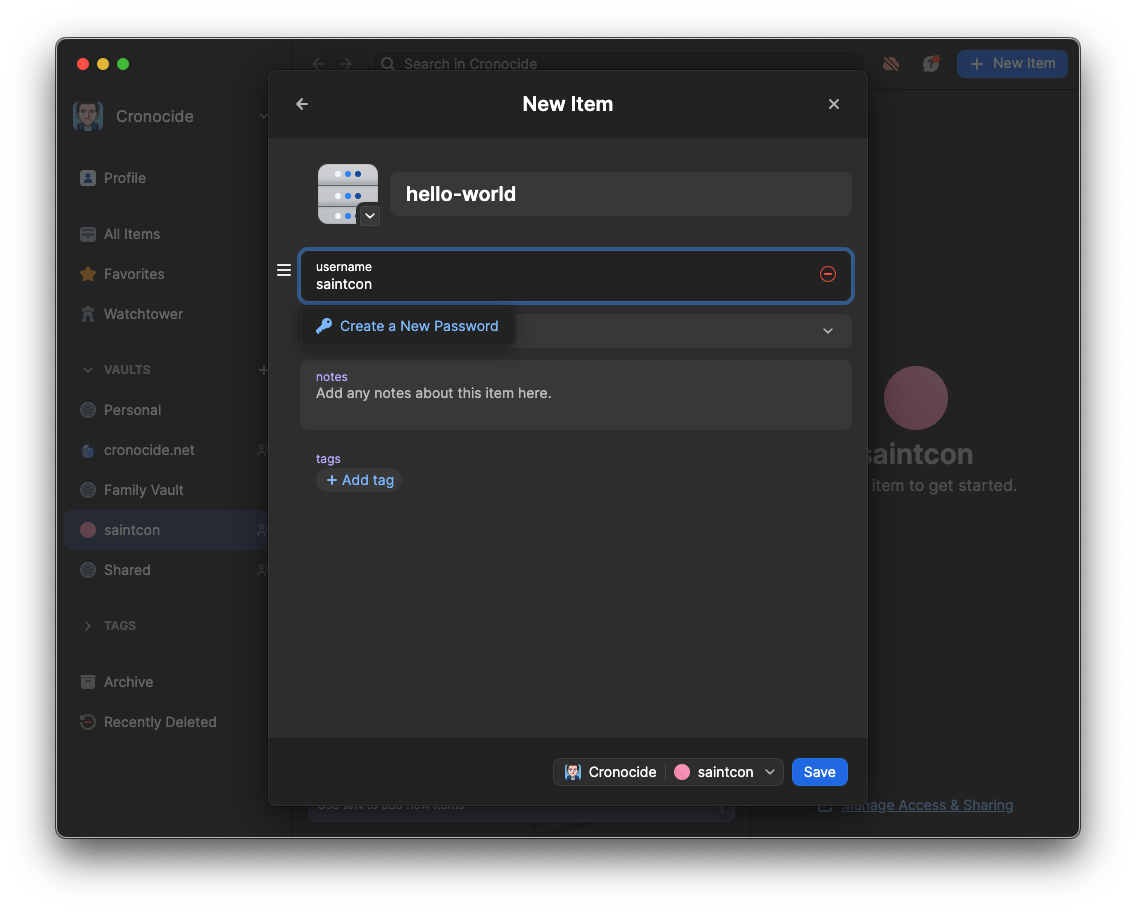

vault policy write 'nomad-jobs' '/etc/vault.d/vault-policy-for-nomad-jobs.hcl'1Password Connect

(Diagram: 1Password vaults connected to Vault through 1Password Connect)

opconfig.json

```json
{
  "op_connect_host": "http://1password-connect.service.consul:8482",
  "op_connect_token": "<YOUR 1PASSWORD-CONNECT TOKEN HERE>"
}
```

```console
#> vault write op/config @opconfig.json
Success! Data written to: op/config
```

Inserting Credentials

```console
#> nomad volume create /etc/nomad.d/jobs/1password.volume
Created external volume 1password with ID 1password

#> sudo iscsiadm --mode node --targetname \
     iqn.2005-10.org.freenas.ctl:1password --portal 10.10.64.53 --login
Login to [iface: default, target: iqn.2005-10.org.freenas.ctl:1password, portal: 10.10.64.53,3260] successful.

#> sudo mkfs.ext4 /dev/sdb
Creating filesystem with 262144 4k blocks and 65536 inodes
Writing superblocks and filesystem accounting information: done

#> sudo mkdir /mnt/tmp && sudo mount /dev/sdb /mnt/tmp
#> sudo cp 1password-credentials.json /mnt/tmp/
#> sudo umount /dev/sdb
#> sudo iscsiadm --mode node --targetname \
     iqn.2005-10.org.freenas.ctl:1password --portal 10.10.64.53 --logout
```
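The `1password.volume` spec passed to `nomad volume create` is not shown. A sketch of what a CSI volume specification for it might contain; the `plugin_id` is a placeholder for whichever CSI plugin provisions iSCSI volumes from TrueNAS in your cluster, and the 1 GiB size is inferred from the `mkfs.ext4` output (262,144 blocks of 4 KiB each).

```hcl
# 1password.volume (sketch)
id        = "1password"
name      = "1password"
type      = "csi"
plugin_id = "truenas-iscsi" # hypothetical: use the ID of your CSI plugin

# 262144 blocks x 4 KiB = 1 GiB, matching the filesystem created above
capacity_min = "1GiB"
capacity_max = "1GiB"

capability {
  access_mode     = "single-node-writer"
  attachment_mode = "file-system"
}

mount_options {
  fs_type = "ext4"
}
```

A job would then claim the volume with a group-level `volume` block (`type = "csi"`, `source = "1password"`) and mount it into a task with `volume_mount`.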

Using 1Password

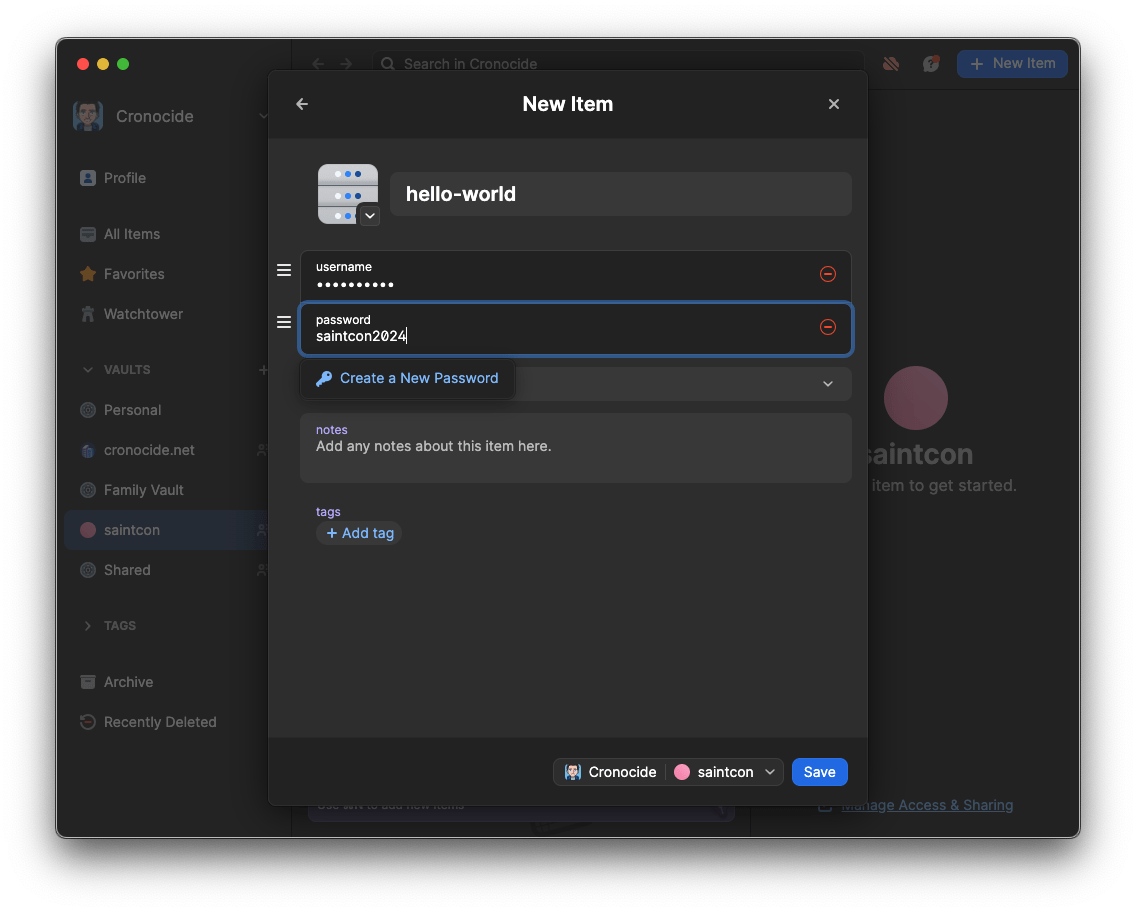

job "hello-world" {

type = "batch"

group "primary" {

vault {}

task "hello-world" {

driver = "docker"

config {

image = "busybox:latest"

command = "sh"

args = ["/local/entrypoint.sh"]

}

template {

destination = "/local/entrypoint.sh"

data =<<EOF

#!/bin/sh

{{with secret "op/vaults/saintcon/items/hello-world"}}

echo "The username is {{.Data.username}} and the password is {{.Data.password}}"

{{end}}

EOF

}

resources {

memory = 128

}

}

}

}
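The bootstrap script also enables a KV version 2 engine at `kv/`. A secret stored there, for example with `vault kv put kv/hello-world username=demo password=changeme` (hypothetical values), can be consumed in a similar way. A sketch of an alternative `template` block for the hello-world task, assuming the job's Vault policy allows reading `kv/data/hello-world`. It renders into the task's private `secrets/` directory and exposes the values as environment variables instead of embedding them in a script.

```hcl
template {
  # KV v2 reads go through the data/ prefix, and the values sit under .Data.data
  data        = <<EOF
{{with secret "kv/data/hello-world"}}
HELLO_USERNAME={{.Data.data.username}}
HELLO_PASSWORD={{.Data.data.password}}
{{end}}
EOF
  destination = "secrets/hello.env"
  # Read the rendered KEY=value lines back in as the task's environment variables
  env         = true
}
```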

Further Reading

- Service Mesh

- Authentication Gateway

- WAF and CDN

Slides

Code
