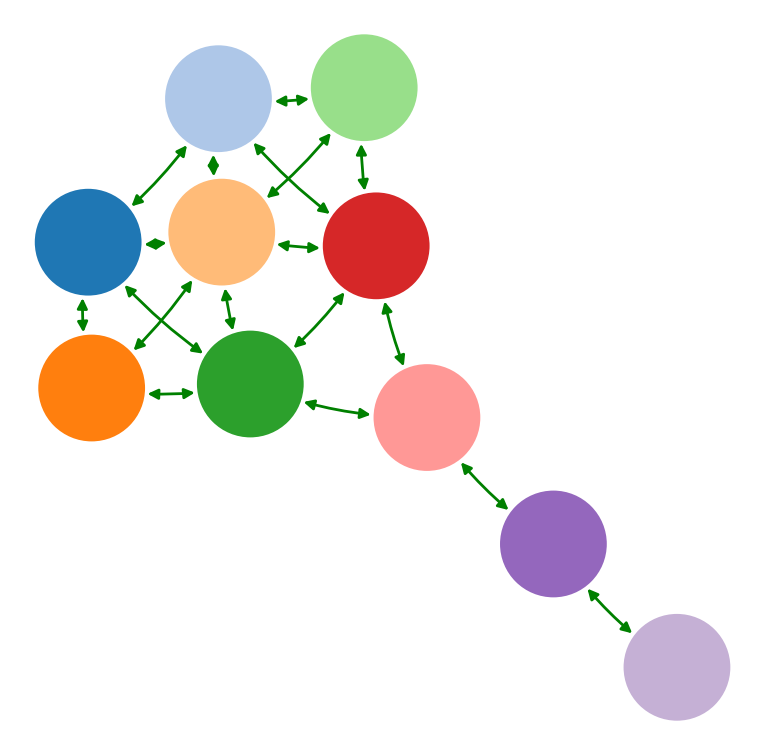

What node is most important?

Casper van Elteren

Dynamic importance of nodes is poorly predicted by static topological features

Complex systems are ubiquitous

- Structure

- Dynamics

- Emergent behavior

Most approaches are not applicable to complex systems:

- Use simplified dynamics

- Use structure as dynamic importance

- Use overwhelming interventions

What is the most important node?

> What node drives the system?

- Stationarity of system dynamics

- Local linearity of state transitions

- Memory-less dynamics

- ....

Wang et al. (2016)

However, we have a many-to-one mapping

1. Simplified dynamics

"Well-connected nodes are dynamically important"

2. Which feature to select?

N.B. implicit dynamics assumption!

Harush & Barzel (2017)

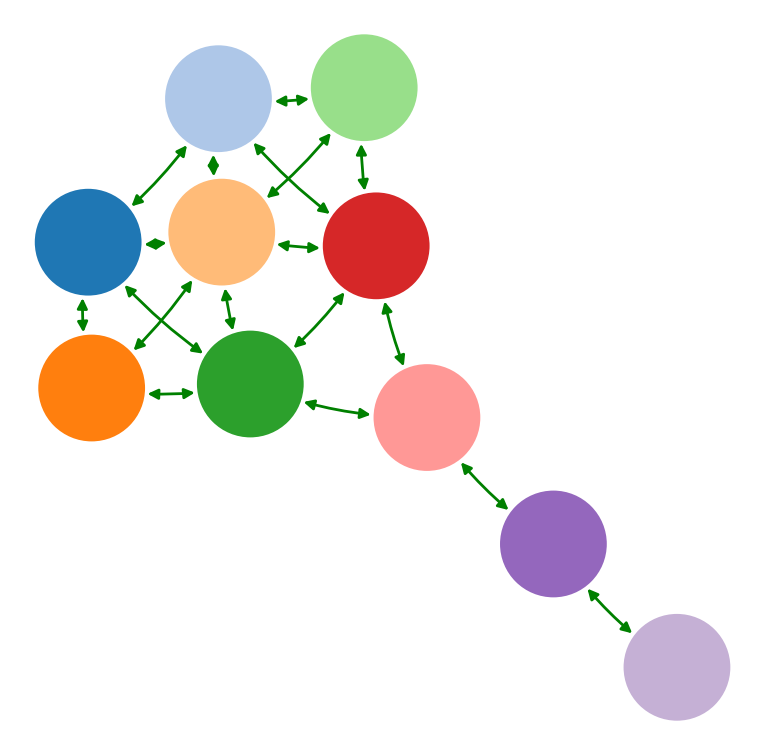

2. Dynamic importance interacts with structure

- Genetic

- Epidemic

- Biochemical

- Ecological

3. The size of intervention matters

- Interventions are crucial for the scientific method

- Many are overwhelming:

  - Gene knockout

  - Replacing signal

Pearl (2000)

The mechanisms driving behavior differ under overwhelming interventions!

Summary

We have seen:

- Most methods simplify the complex system

- ...use structure to identify 'important' parts

- ...use overwhelming interventions

Possible solution: information theory

Information theory and complex systems

Traditional approaches are domain-specific, but all ask similar questions, e.g.:

- What node is most important?

- Does it exhibit criticality?

- How robust is the system to removal of signals?

- ....

Quax et al. (2016)

How can we achieve a universal approach to studying diverse complex behavior?

There is a need for a universal language that decouples syntax from semantics

Quax et al. (2016)

Figure: traditional approach vs. information viewpoint (domain specific + quantify in terms of "information"); example states (Up, Down, ...) with distributions P(System) and P(Bird).

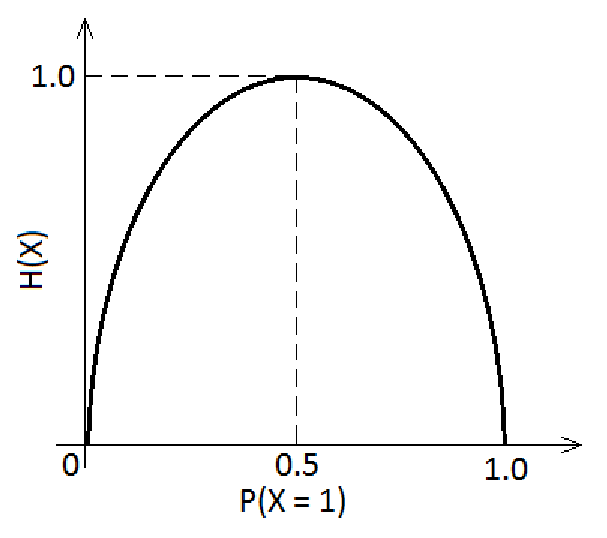

Shannon (1948)

Information entropy: "Amount of uncertainty"

Mutual information: "Shared information"

N.B. No assumption on what generates P
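The slide gives only the verbal definitions. A minimal sketch of both quantities for discrete distributions, in bits, assuming the distributions are already known (estimating them from data is a separate problem). The joint table for two binary variables A and B can be built as P(A = a) P(B = b | A = a).

```python
import math


def entropy(p):
    """Shannon entropy H(X) = -sum_x p(x) log2 p(x), in bits."""
    return -sum(px * math.log2(px) for px in p if px > 0)


def mutual_information(joint):
    """I(A; B) = H(A) + H(B) - H(A, B) for a joint table joint[a][b]."""
    p_a = [sum(row) for row in joint]
    p_b = [sum(col) for col in zip(*joint)]
    p_ab = [p for row in joint for p in row]
    return entropy(p_a) + entropy(p_b) - entropy(p_ab)


# A fair coin carries 1 bit of uncertainty.
print(entropy([0.5, 0.5]))
# B is an exact copy of A: they share the full bit.
print(mutual_information([[0.5, 0.0], [0.0, 0.5]]))
# B is independent of A: nothing is shared.
print(mutual_information([[0.25, 0.25], [0.25, 0.25]]))
```

Nothing in these functions refers to what generated P, which is the point of the N.B. above.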

Information in complex systems

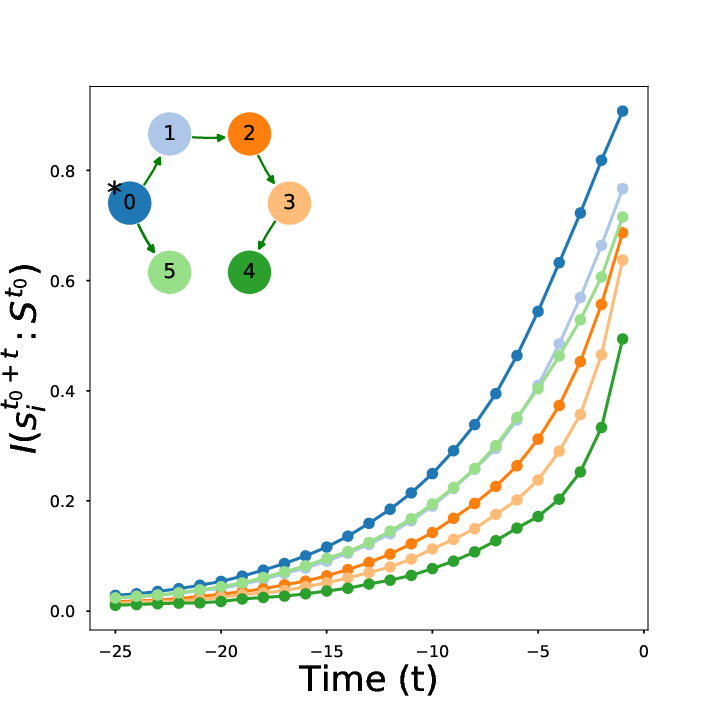

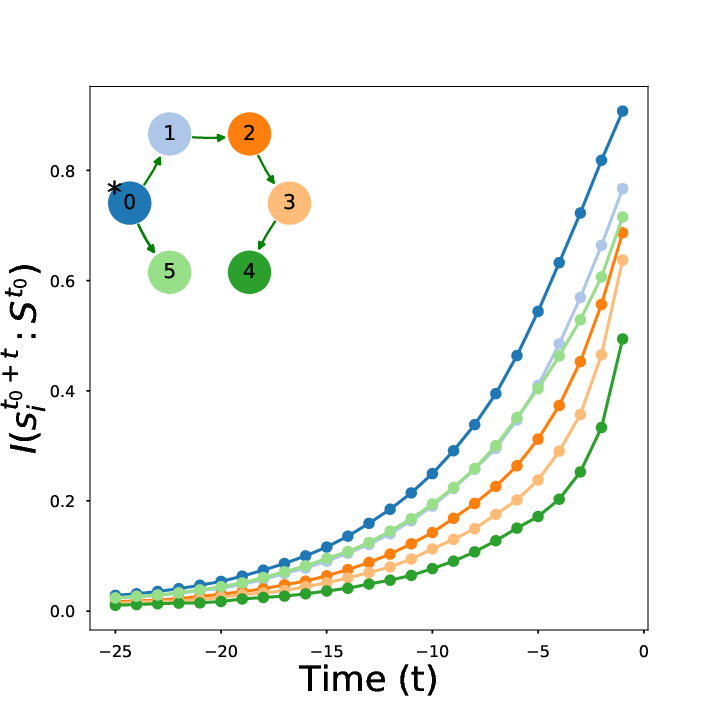

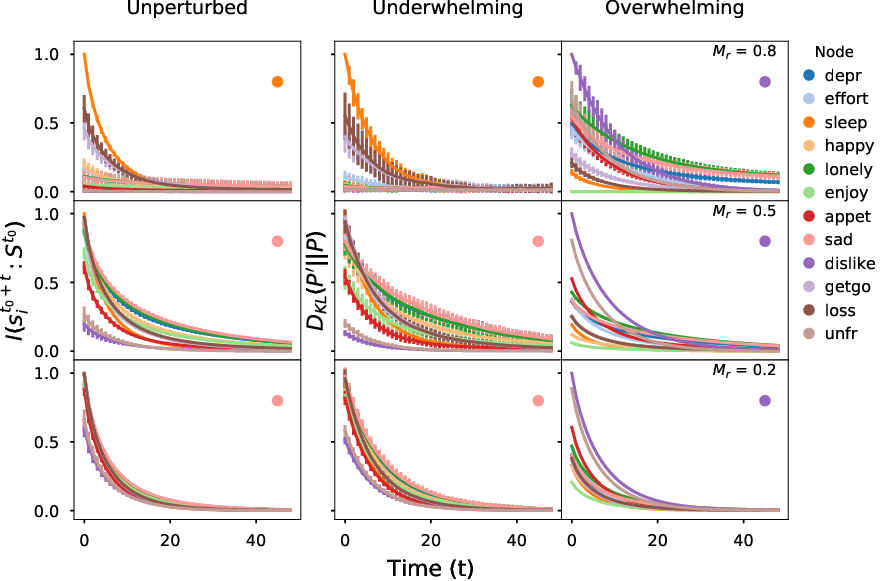

Given an ergodic system S:

The information that a node's current state shares with future system states can only decrease over time

The driver node shares the most information with the system over time
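One way to see why shared information decays: for a Markov process, the data processing inequality gives I(X_0; X_{t+1}) ≤ I(X_0; X_t), and for an ergodic chain the shared information tends to zero. The sketch computes I(X_0; X_t) exactly for a hypothetical two-state chain; the flip probability is an illustrative assumption, and it tracks the whole state rather than a single node.

```python
import numpy as np


def mutual_information_bits(joint):
    """I(X; Y) in bits from a joint probability matrix joint[x, y]."""
    px = joint.sum(axis=1, keepdims=True)
    py = joint.sum(axis=0, keepdims=True)
    mask = joint > 0
    return float(np.sum(joint[mask] * np.log2(joint[mask] / (px @ py)[mask])))


def information_decay(transition, p0, steps):
    """I(X_0; X_t) for t = 0..steps of a Markov chain with a row-stochastic matrix."""
    curve = []
    kernel = np.eye(len(p0))  # P(X_t = y | X_0 = x); identity at t = 0
    for _ in range(steps + 1):
        joint = p0[:, None] * kernel  # P(X_0 = x, X_t = y)
        curve.append(mutual_information_bits(joint))
        kernel = kernel @ transition
    return curve


# Hypothetical "up"/"down" chain that flips with probability 0.1 per step.
T = np.array([[0.9, 0.1], [0.1, 0.9]])
p0 = np.array([0.5, 0.5])  # start in the stationary distribution
curve = information_decay(T, p0, steps=10)
print([round(c, 3) for c in curve])  # starts at 1 bit and shrinks toward 0
```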

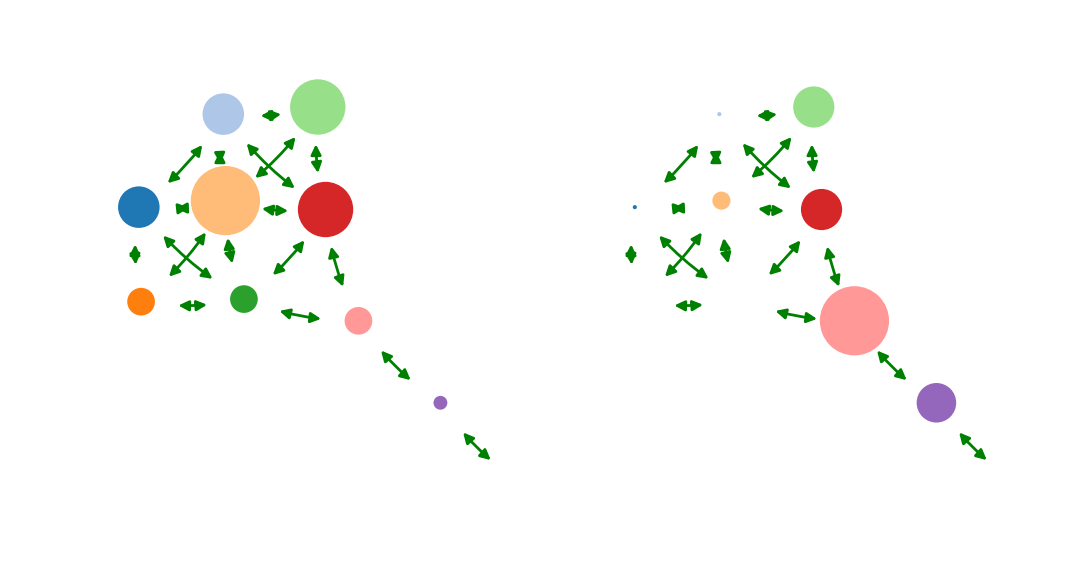

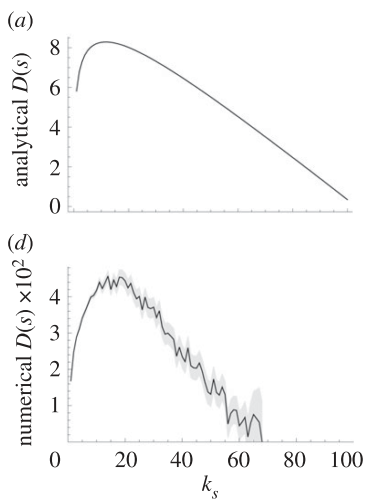

Diminishing role of hubs

Quax et al. (2013)

- Infinitely sized networks

- Locally tree-like

- No self-loops

Figure: numerical and analytical results as a function of degree

Goals

Answer:

- Can information theory tools be used on real-world systems?

- Does well-connectedness translate to dynamical importance in real-world systems?

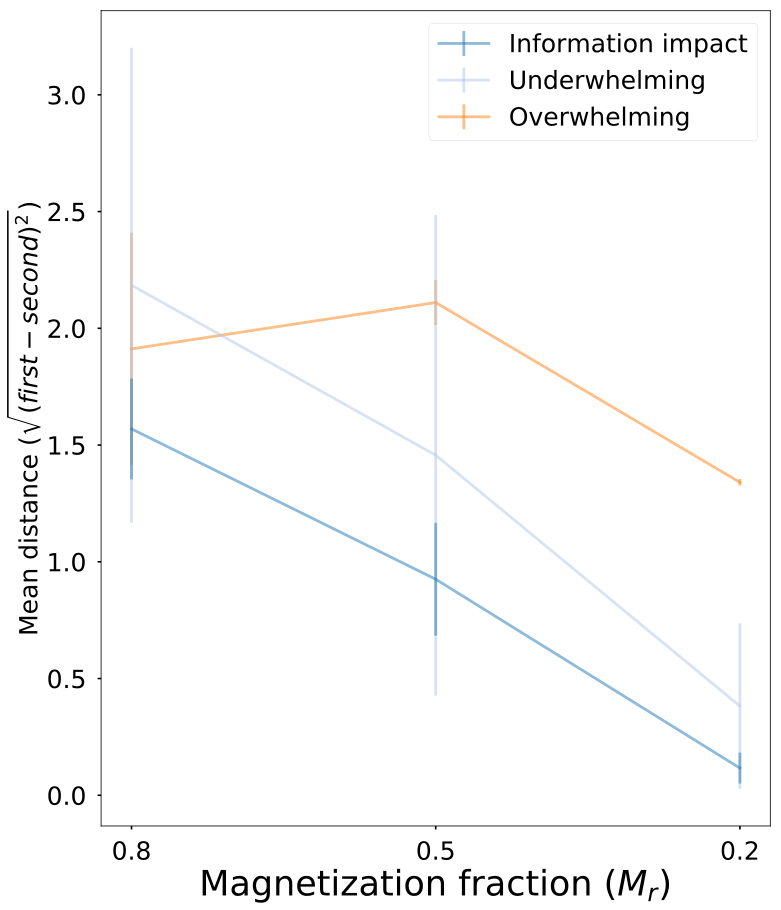

- Does intervention size matter in real-world systems?

Prior results:

- Assume dynamics

- Dynamics interact with structure

- Overwhelming interventions

- Theoretical

Goal: identify the driver node in real-world systems

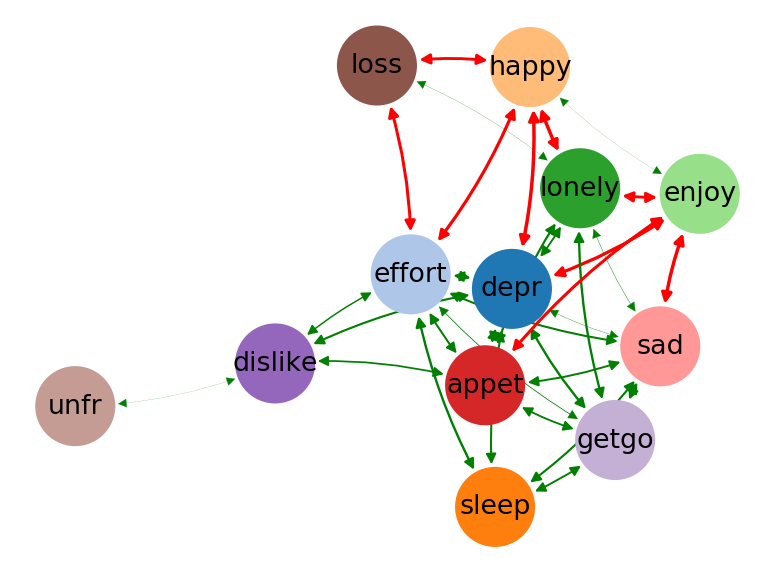

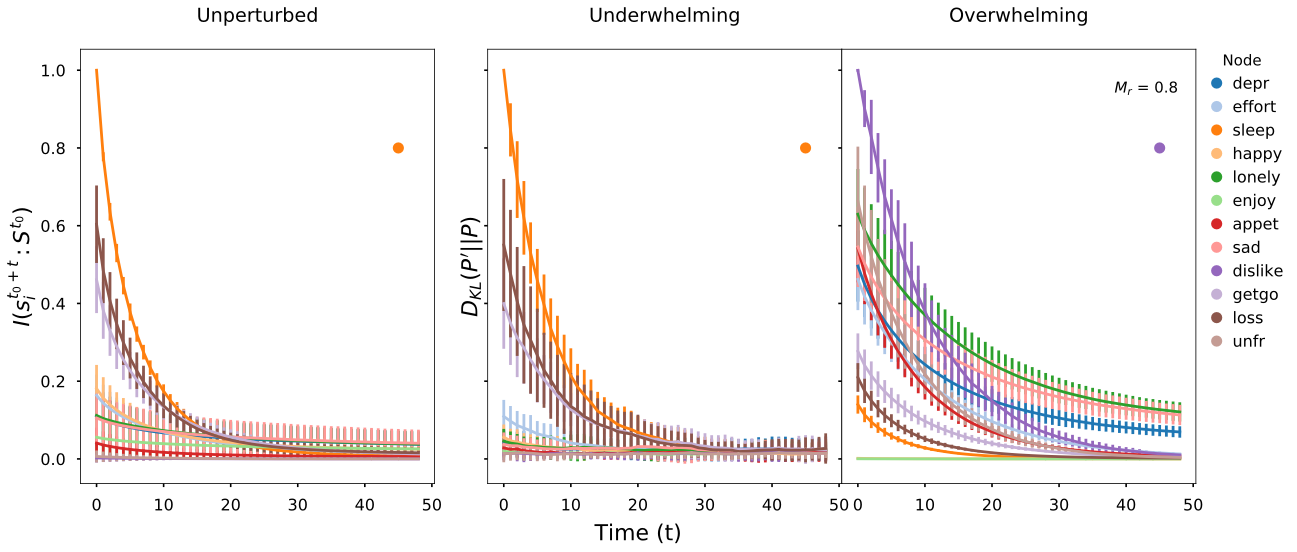

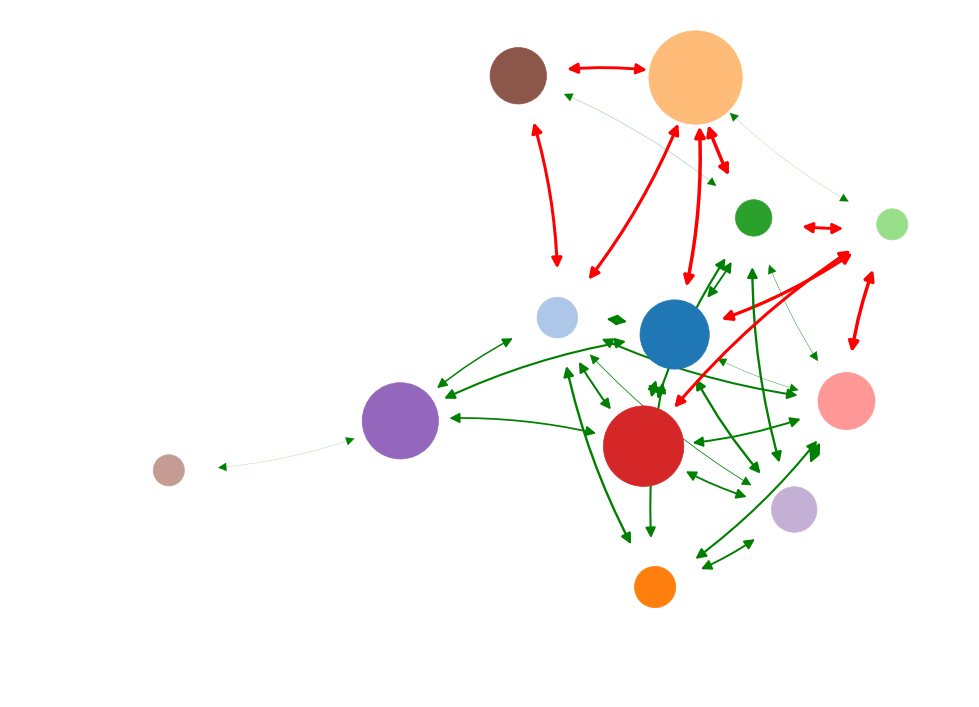

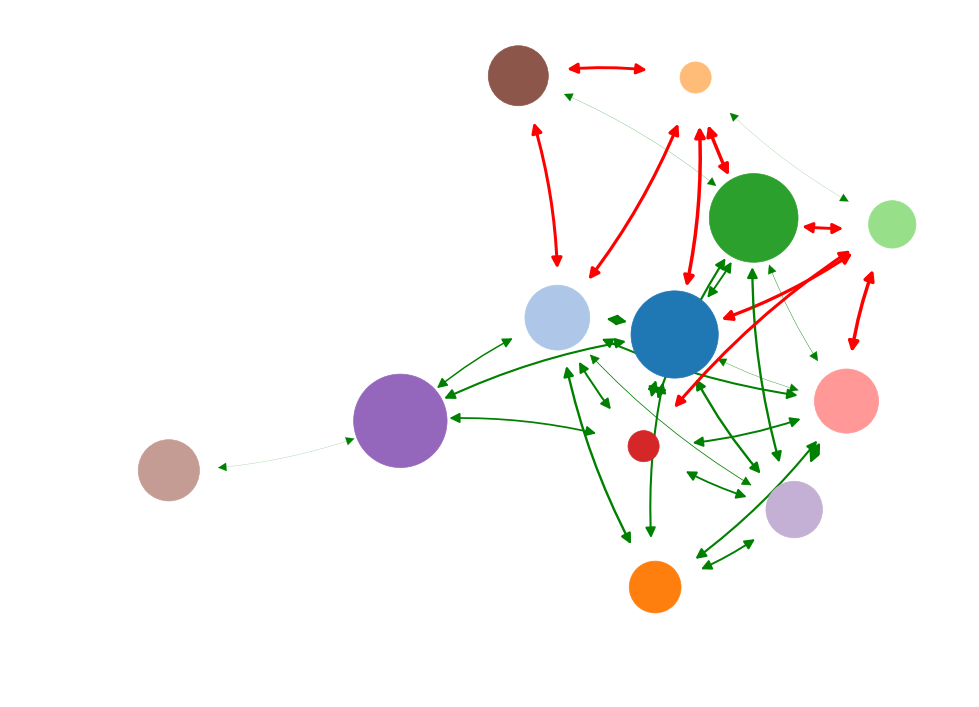

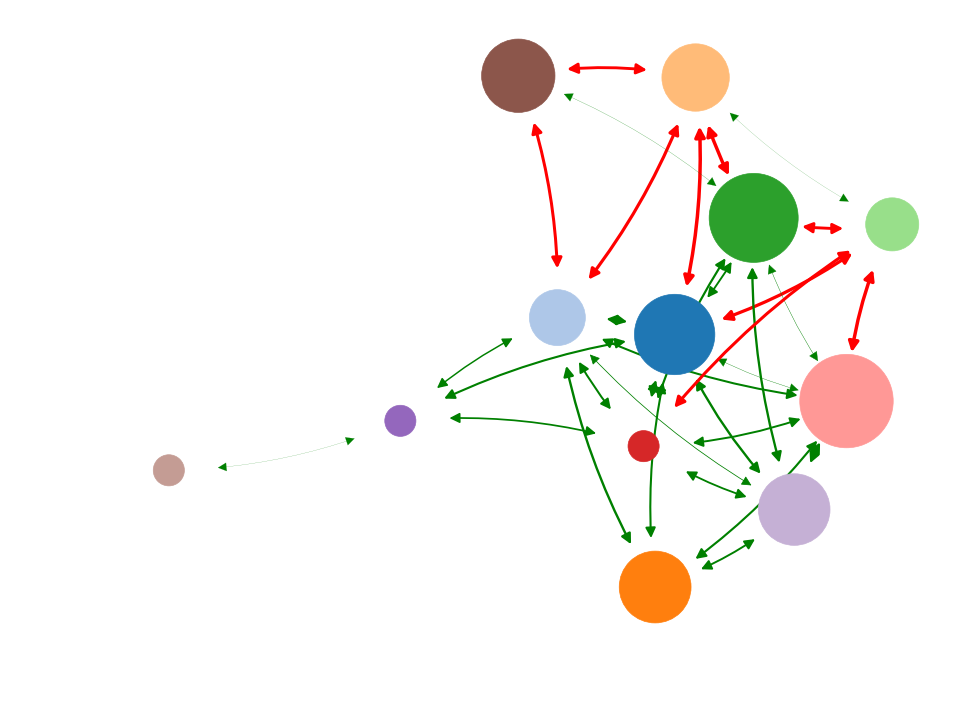

Application domain

- Mildly depressed patients

- Center for Epidemiologic Studies Depression Scale (CES-D)

- Changing Lives of Older Couples (CLOC), N = 241

Fried et al. (2015)

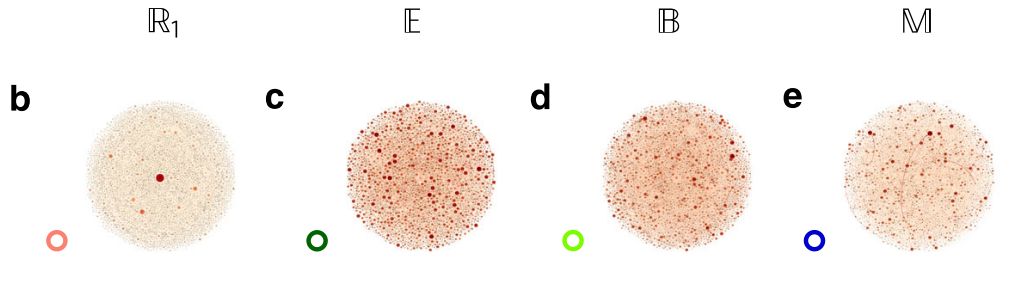

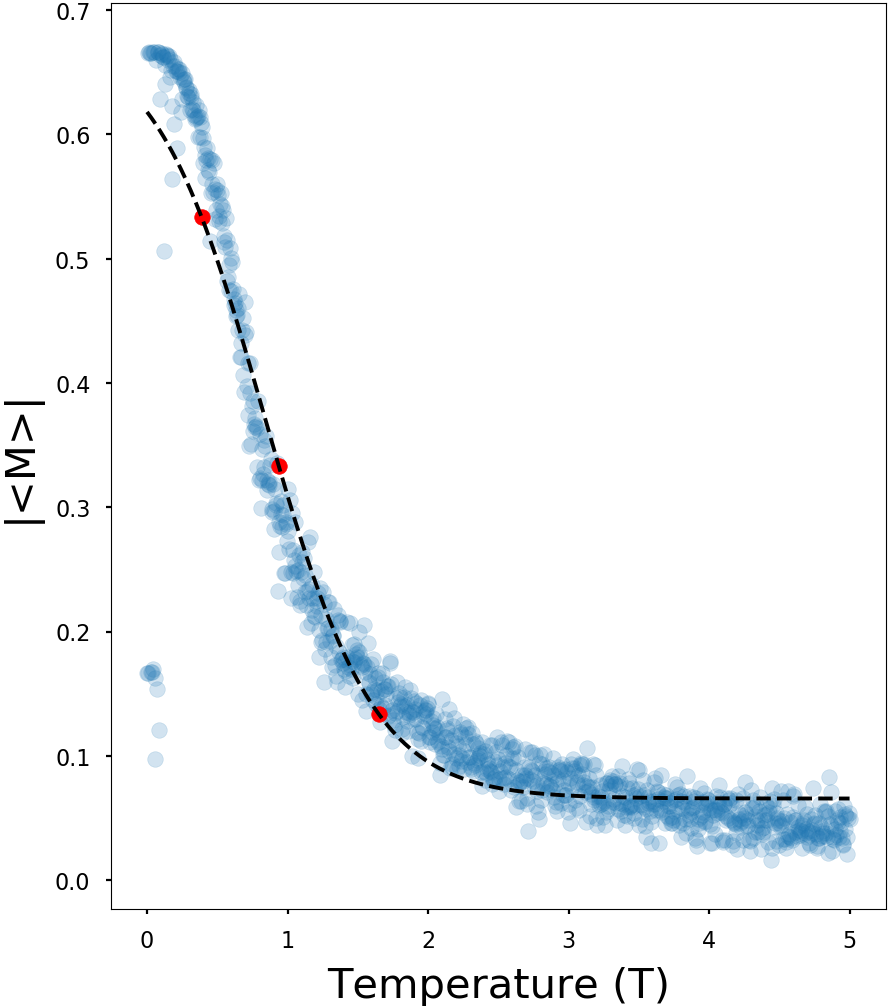

Node dynamics

Ising spin dynamics

Glauber (1963)

Used to model a variety of behavior:

- Neural dynamics

- Voting behavior

- ....
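A minimal sketch of Glauber (1963) dynamics for Ising spins on a graph, assuming ferromagnetic coupling J = 1 on every edge, no external field, and random sequential updates. The inverse temperature `beta` and the star graph are hypothetical choices, not the settings used in the study.

```python
import numpy as np


def glauber_sweep(spins, adjacency, beta, rng):
    """One Monte Carlo sweep (n single-spin updates) of Glauber dynamics.

    A random node i is set to +1 with probability 1 / (1 + exp(-2 * beta * h_i)),
    where h_i = sum_j A_ij s_j is the local field from its neighbours.
    """
    n = len(spins)
    for _ in range(n):
        i = rng.integers(n)
        local_field = adjacency[i] @ spins
        p_up = 1.0 / (1.0 + np.exp(-2.0 * beta * local_field))
        spins[i] = 1 if rng.random() < p_up else -1
    return spins


def simulate(adjacency, beta, sweeps, seed=0):
    """Record the system state after each sweep, starting from random spins."""
    rng = np.random.default_rng(seed)
    spins = rng.choice([-1, 1], size=adjacency.shape[0])
    history = np.empty((sweeps, adjacency.shape[0]), dtype=int)
    for t in range(sweeps):
        history[t] = glauber_sweep(spins, adjacency, beta, rng)  # row copy
    return history


# Hypothetical 4-node star graph: node 0 is the hub.
A = np.array([[0, 1, 1, 1],
              [1, 0, 0, 0],
              [1, 0, 0, 0],
              [1, 0, 0, 0]])
states = simulate(A, beta=0.5, sweeps=1000)
print(states.shape)
print(states.mean(axis=0))  # average magnetization per node
```

Such trajectories are the kind of Monte Carlo samples from which distributions like P(System) can be estimated.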

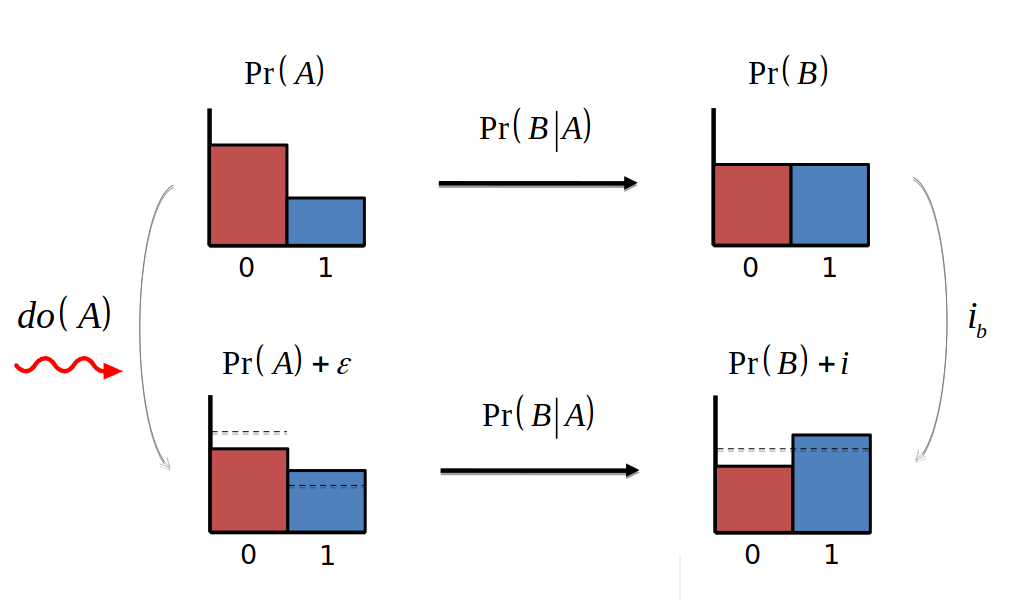

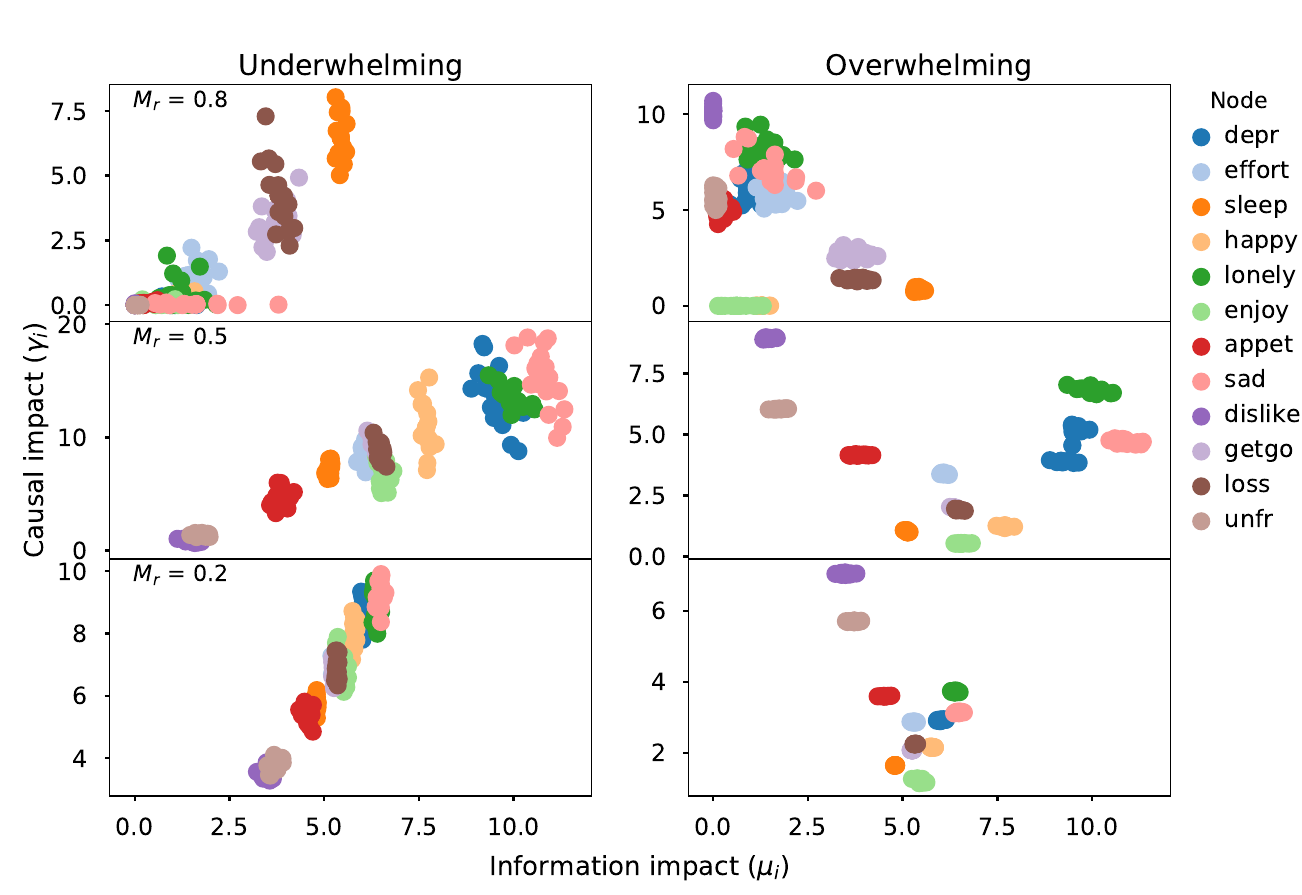

Causal influence

Causal influence forms the ground truth

- Underwhelming:

- Overwhelming:
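The definitions of the two interventions appear only in the figures. As an assumption for illustration, the sketch models an underwhelming intervention as a weak external field on one node and an overwhelming intervention as clamping that node's spin, then computes the exact equilibrium distribution of a tiny Ising chain by enumeration. The chain, `beta`, and the field strength are hypothetical.

```python
import itertools

import numpy as np


def boltzmann(adjacency, beta, field=None, clamp=None):
    """Exact equilibrium distribution of a small Ising system (J = 1) by enumeration.

    field: optional external field per node, used here as a weak "nudge" (assumption).
    clamp: optional (node, value) pair fixing one spin, a hard intervention (assumption).
    """
    n = adjacency.shape[0]
    h = np.zeros(n) if field is None else np.asarray(field, dtype=float)
    states = np.array(list(itertools.product([-1, 1], repeat=n)))
    # E(s) = -1/2 * s^T A s - h^T s; the 1/2 counts each undirected edge once.
    energy = -0.5 * np.einsum("ki,ij,kj->k", states, adjacency, states) - states @ h
    weights = np.exp(-beta * energy)
    if clamp is not None:
        node, value = clamp
        weights[states[:, node] != value] = 0.0  # forbid states violating the clamp
    return states, weights / weights.sum()


def marginal_up(states, probs, node):
    """P(s_node = +1) under the given distribution."""
    return float(probs[states[:, node] == 1].sum())


# Hypothetical chain 0 - 1 - 2: intervene on node 0, observe node 2.
A = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
beta = 0.5
states, p_free = boltzmann(A, beta)
_, p_nudged = boltzmann(A, beta, field=[0.1, 0.0, 0.0])
_, p_clamped = boltzmann(A, beta, clamp=(0, 1))

print(marginal_up(states, p_free, 2))     # about 0.5 (spin-flip symmetry)
print(marginal_up(states, p_nudged, 2))   # slightly above 0.5
print(marginal_up(states, p_clamped, 2))  # noticeably further from 0.5
```

Under these assumptions the two interventions move the rest of the system by very different amounts, which is why the size of the intervention enters the ground truth.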

Causal impact

Advantages of Kullback-Leibler divergence:

- Non-negative

- Zero iff P' = P

- No assumption on the form of P

- Optimal in a coding setting

- Equals the expected number of extra bits needed to encode samples from P' with a code optimized for P
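A minimal sketch of the divergence and of its coding interpretation, assuming causal impact compares a perturbed distribution P' against the unperturbed P as D_KL(P' || P). The two example distributions are hypothetical.

```python
import math


def kl_divergence(p_new, p_ref):
    """D_KL(P' || P) in bits: sum_x P'(x) log2(P'(x) / P(x))."""
    total = 0.0
    for q, p in zip(p_new, p_ref):
        if q > 0:
            if p == 0:
                return math.inf  # P' puts mass where the code for P has none
            total += q * math.log2(q / p)
    return total


def cross_entropy(p_new, p_ref):
    """Average code length (bits) for samples of P' under an optimal code for P.

    Assumes P(x) > 0 wherever P'(x) > 0.
    """
    return -sum(q * math.log2(p) for q, p in zip(p_new, p_ref) if q > 0)


def entropy(p):
    """Optimal average code length (bits) for samples of p."""
    return -sum(x * math.log2(x) for x in p if x > 0)


P = [0.5, 0.5]        # unperturbed distribution
P_prime = [0.9, 0.1]  # hypothetical distribution after an intervention
extra_bits = cross_entropy(P_prime, P) - entropy(P_prime)
print(kl_divergence(P_prime, P), extra_bits)  # the two numbers agree
print(kl_divergence(P, P))                    # zero when P' = P
```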

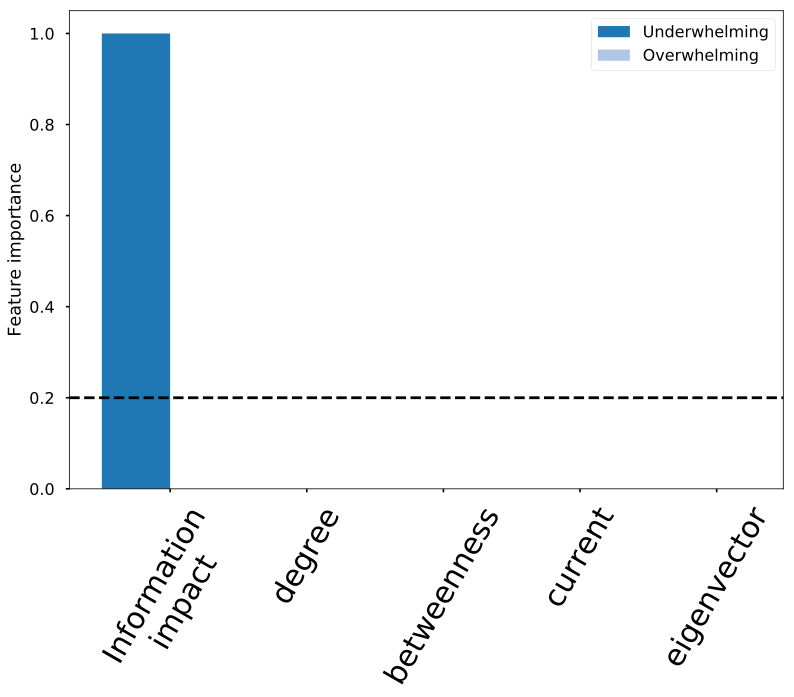

Information impact

- Node with largest causal influence has highest information impact

- Observations only!

- No perturbations required
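The exact definition of information impact is shown only in the figures. As an assumption, the sketch estimates I(s_i^t; S^{t+δ}) between one node's state and the whole system state δ steps later with a plug-in (histogram) estimator on unperturbed observations, and summarizes the decay curve by its area. Both the estimator and the area summary are illustrative choices, and `information_impact` is a hypothetical name.

```python
import math
from collections import Counter


def plugin_mutual_information(xs, ys):
    """Plug-in estimate of I(X; Y) in bits from paired, hashable observations."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px = Counter(xs)
    py = Counter(ys)
    return sum(
        (c / n) * math.log2(c * n / (px[x] * py[y]))
        for (x, y), c in joint.items()
    )


def information_curve(node_series, system_series, max_delay):
    """I(s_i^t ; S^(t + delta)) for delta = 0..max_delay along one trajectory."""
    curve = []
    for delta in range(max_delay + 1):
        xs = node_series[: len(node_series) - delta]
        ys = system_series[delta:]
        curve.append(plugin_mutual_information(xs, ys))
    return curve


def information_impact(curve):
    """Hypothetical summary: area under the information curve (trapezoid rule)."""
    return sum((a + b) / 2 for a, b in zip(curve, curve[1:]))


# Toy observations: the system state (a tuple) echoes node 0 one step later.
node0 = [0, 1, 1, 0, 1, 0, 0, 1] * 50
system = [(0, 0)] + [(x, x) for x in node0[:-1]]
curve = information_curve(node0, system, max_delay=3)
print([round(v, 3) for v in curve])
print(information_impact(curve))
```

Ranking nodes by such a score requires only observations of the unperturbed system, matching the slide.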

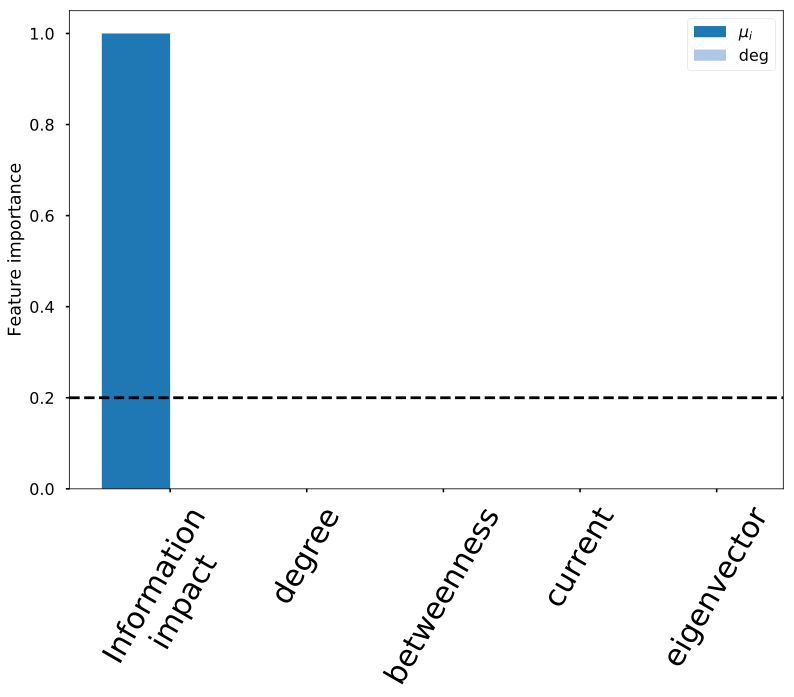

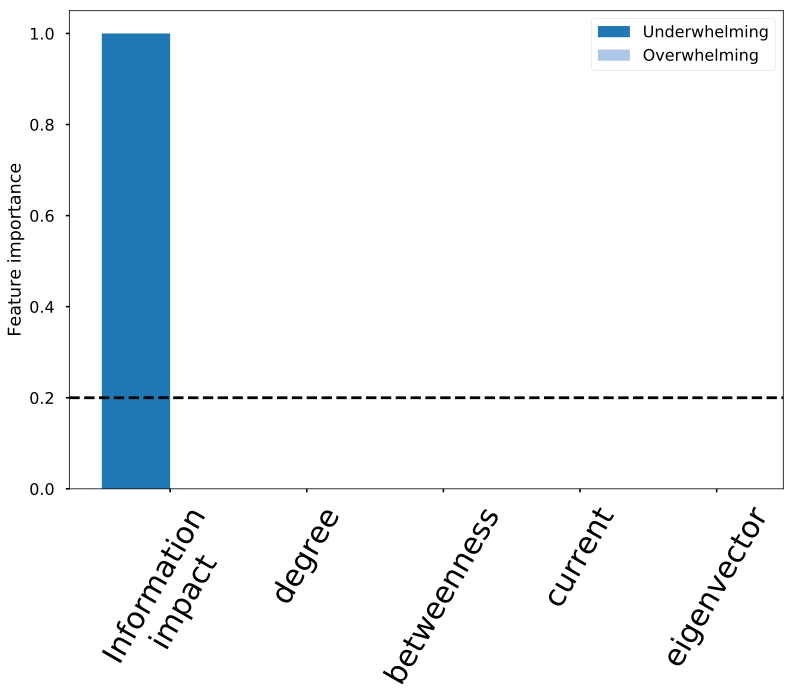

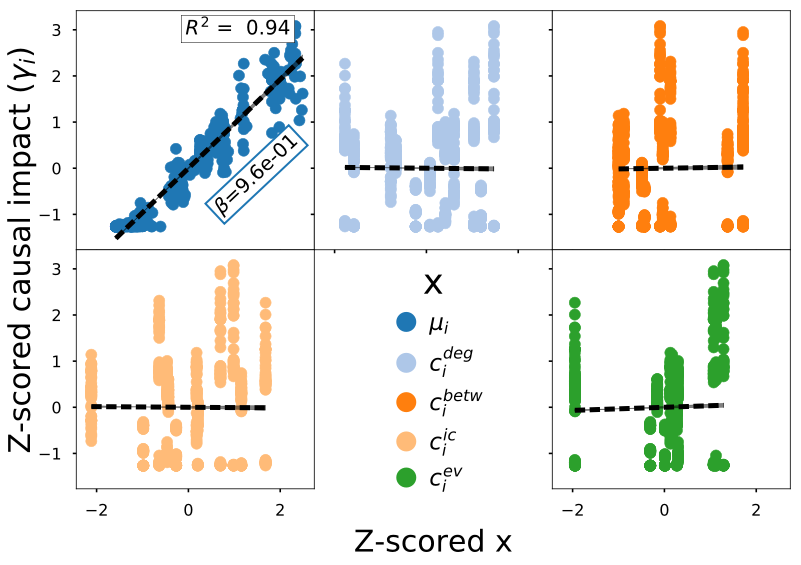

Structural metrics

| Name | What does it measure? |
|---|---|
| Betweenness | Shortest path |
| Degree | Local influence |
| Current flow | Least resistance |
| Eigenvector | Infinite walks |
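All four metrics are available in the networkx library. A minimal sketch that computes them and reports each metric's top-ranked node, which is one reading of max(x) in the statistical procedure. Mapping "current flow" to `current_flow_betweenness_centrality` (rather than current-flow closeness) is an assumption; that function needs a connected graph and SciPy.

```python
import networkx as nx


def structural_metrics(graph):
    """Node scores for the four structural metrics (current flow choice is assumed)."""
    return {
        "degree": nx.degree_centrality(graph),
        "betweenness": nx.betweenness_centrality(graph),
        "current_flow": nx.current_flow_betweenness_centrality(graph),
        "eigenvector": nx.eigenvector_centrality(graph, max_iter=1000),
    }


def top_node(scores):
    """Node with the highest score, i.e. the metric's candidate driver node."""
    return max(scores, key=scores.get)


G = nx.star_graph(4)  # hypothetical graph: hub 0 connected to leaves 1..4
metrics = structural_metrics(G)
print({name: top_node(scores) for name, scores in metrics.items()})
```

On a star graph every metric picks the hub; the slides' point is that on real networks such structural rankings need not match the dynamically important node.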

Statistical procedure

| Independent variable: max(x) | Dependent variable |
|---|---|
| Degree centrality, betweenness centrality, current flow centrality, eigenvector centrality, information impact | Causal impact (underwhelming, overwhelming) |

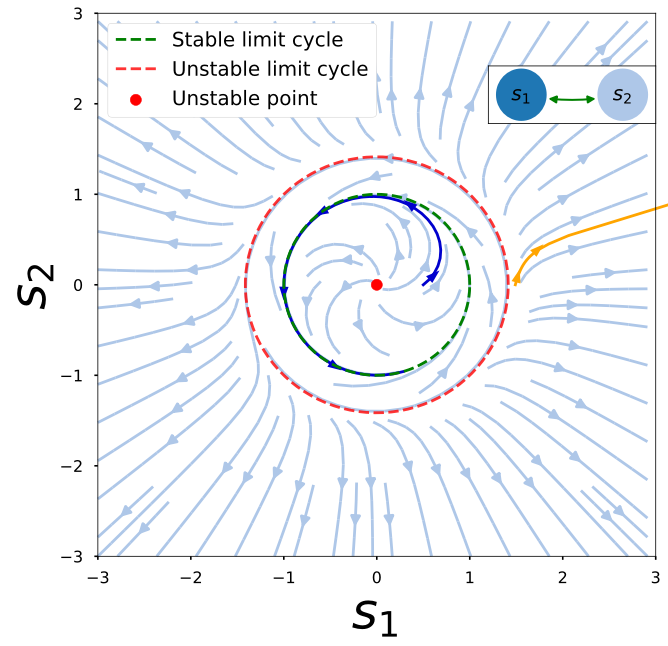

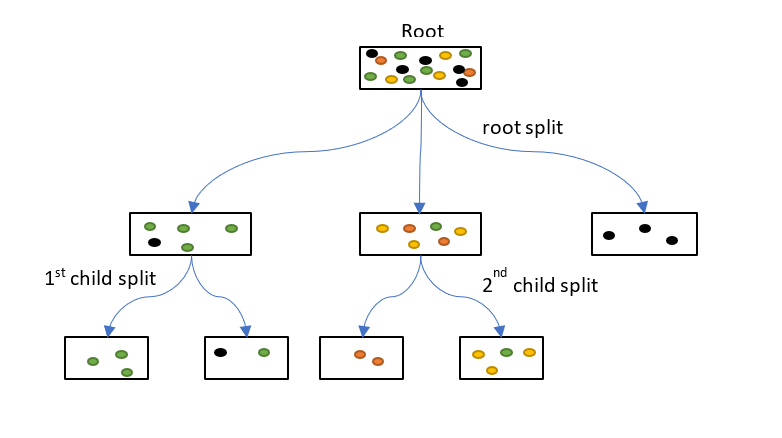

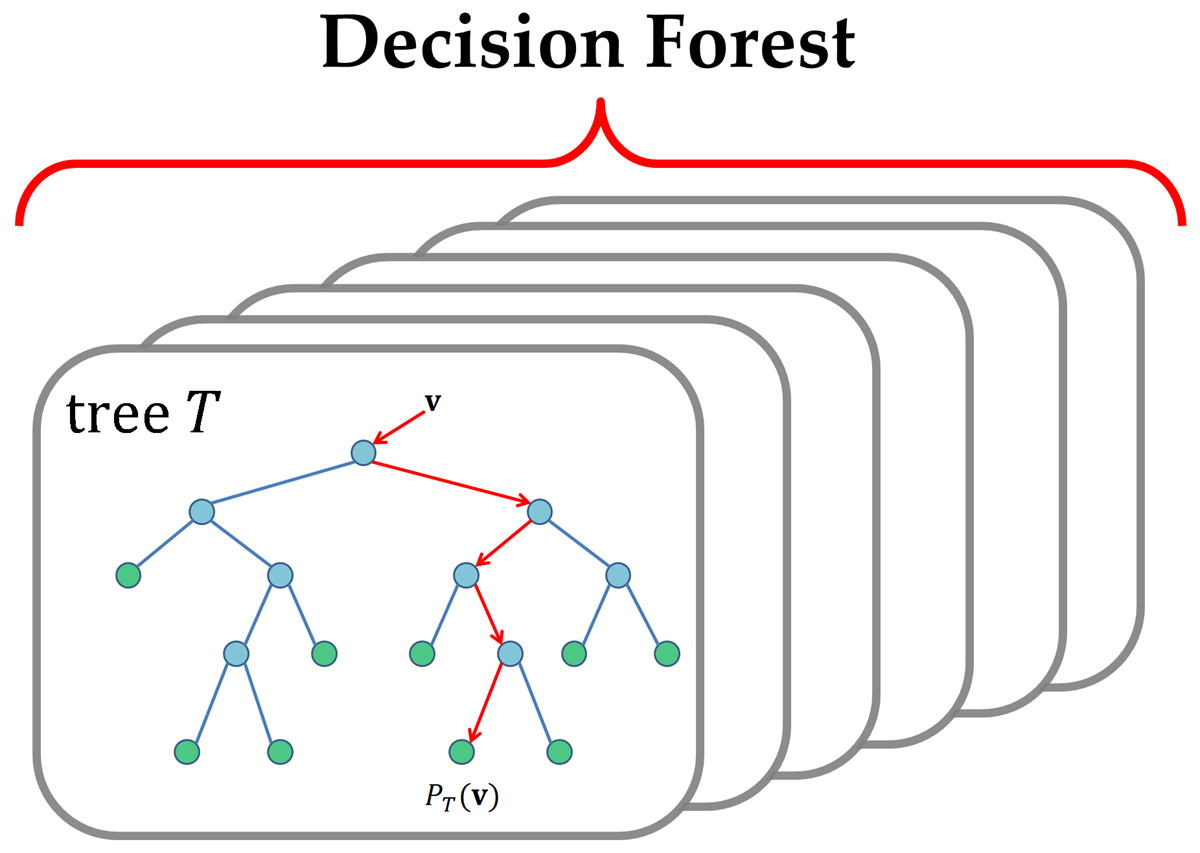

Classification with random forest:

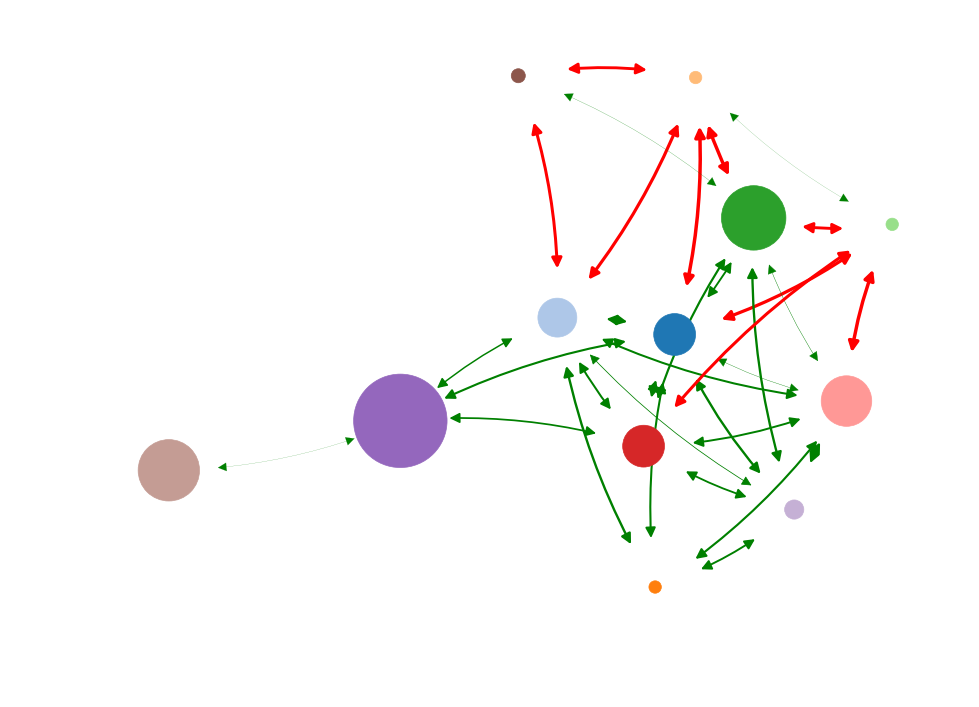

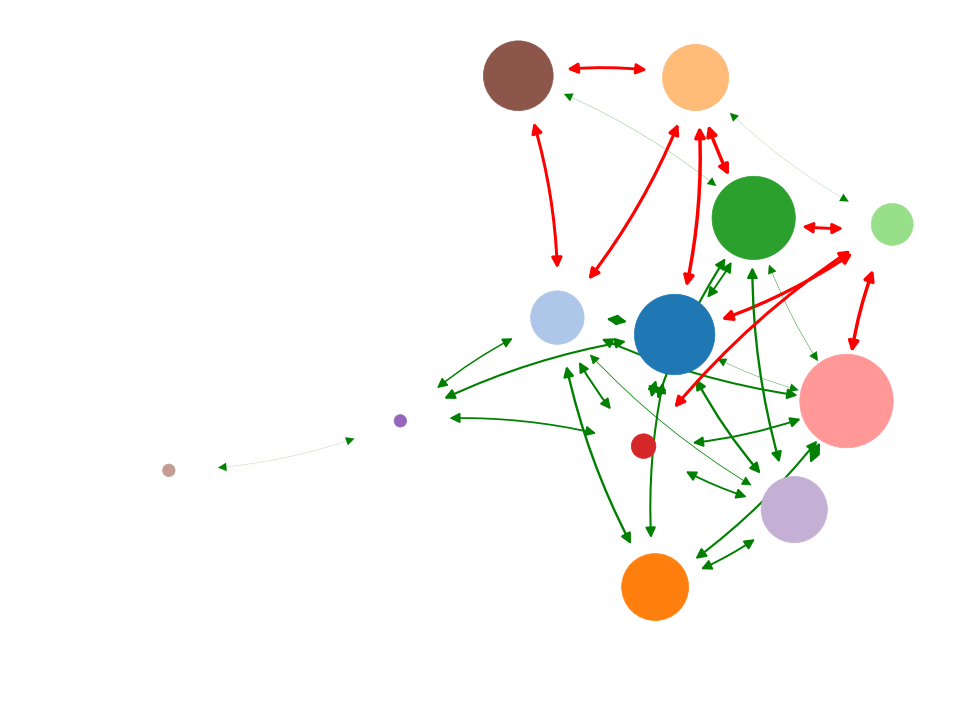

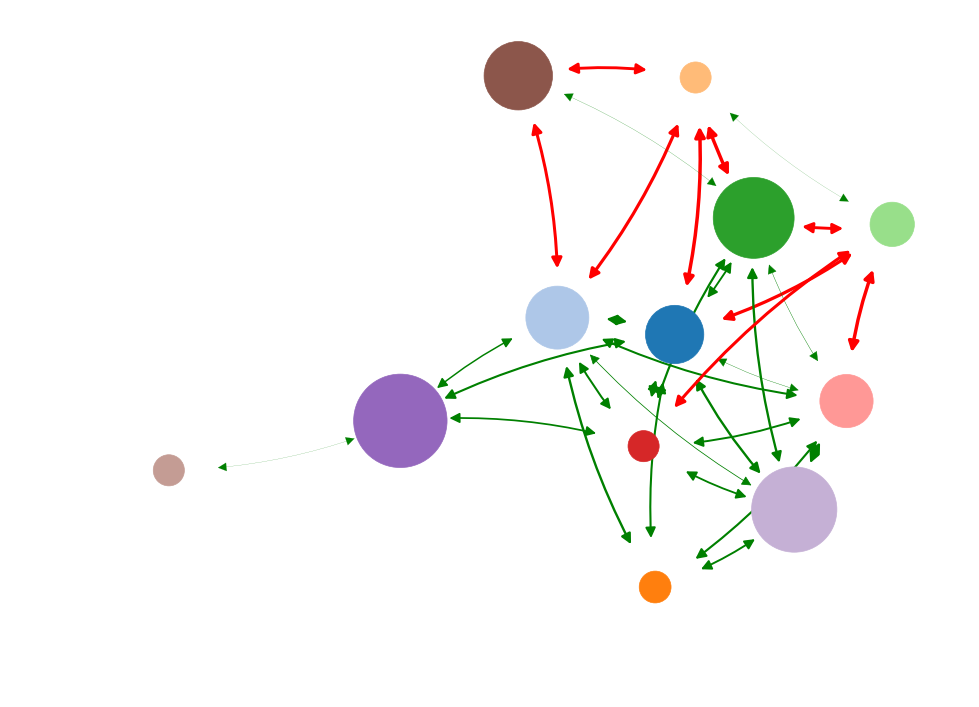

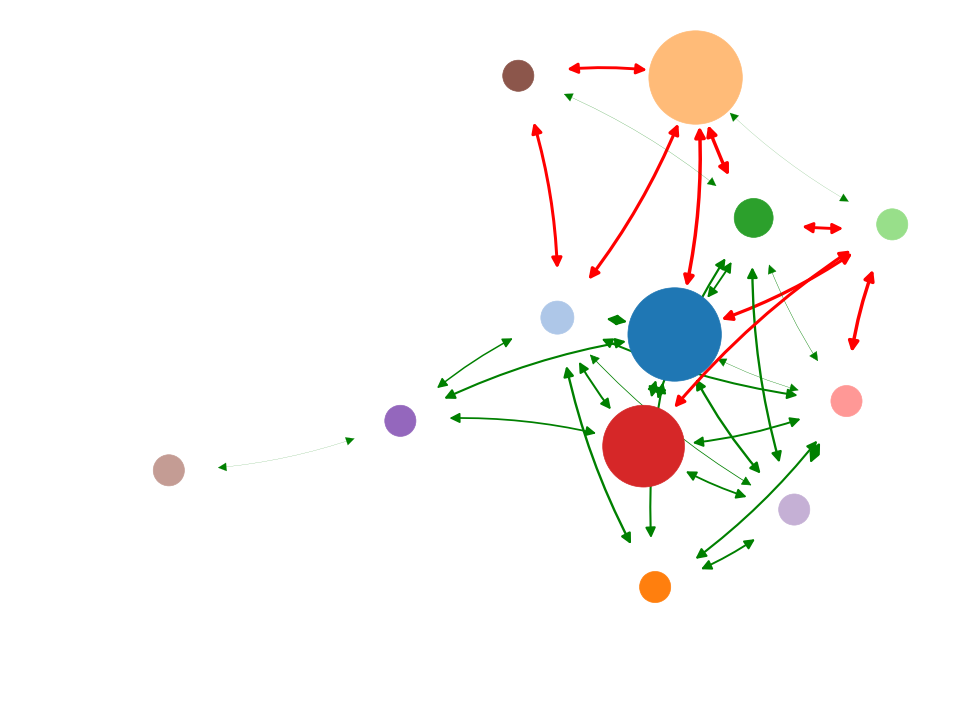

Random forest classifier

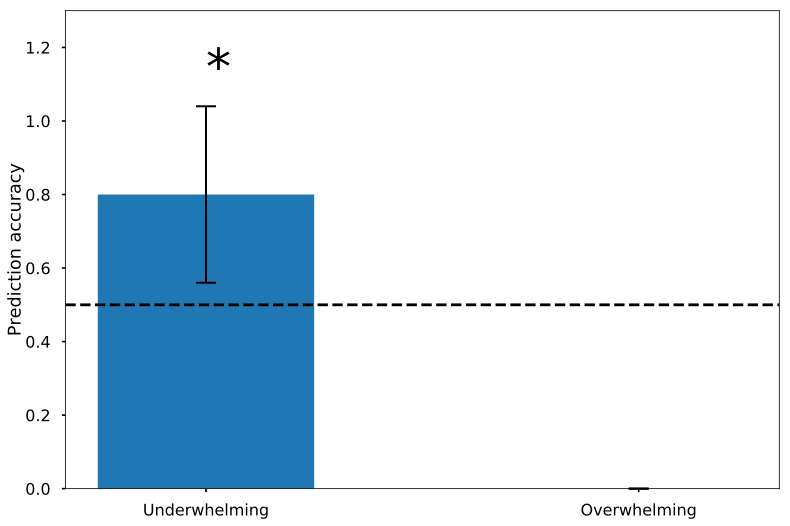

Random forest classifier with high prediction accuracy

- Underwhelming causal impact does not match overwhelming causal impact

- Information impact matches underwhelming causal impact

Information impact captures driver-node change

Information impact varies linearly with low causal impact

- No structural metric showed a linear relation with low causal impact

- Information impact was highly linear with low causal impact
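The slides do not state which statistic was used to judge linearity. A minimal sketch of one common choice, the coefficient of determination R^2 of a least-squares line; the per-node scores are synthetic and purely hypothetical.

```python
import numpy as np


def linear_fit_r2(x, y):
    """Fit y = slope * x + intercept by least squares; return (slope, intercept, R^2)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    slope, intercept = np.polyfit(x, y, deg=1)
    residuals = y - (slope * x + intercept)
    r2 = 1.0 - np.sum(residuals ** 2) / np.sum((y - y.mean()) ** 2)
    return float(slope), float(intercept), float(r2)


# Synthetic per-node scores against a small causal impact (not the study's data).
rng = np.random.default_rng(1)
causal = np.linspace(0.0, 0.1, 20)
linear_predictor = 3.0 * causal + rng.normal(scale=0.005, size=causal.size)
curved_predictor = np.cos(60 * causal)

for name, values in [("linear", linear_predictor), ("curved", curved_predictor)]:
    print(name, round(linear_fit_r2(causal, values)[2], 3))
```

An R^2 near 1 indicates a linear relation; a predictor with a strong but nonlinear dependence scores much lower.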

Summary

- Information impact predicts the driver node for unperturbed dynamics

- Intervention size matters

- Structural metrics do not identify the driver node well

Take-home message:

Structural connectedness != dynamic importance

Future direction

- Does information impact generalize well to other graphs?

- Does it generalize well to other dynamics?

- Can it be used to detect transient structures?

- Asymmetry in time effects

- ....

Acknowledgement

- A big thanks to Dr. Rick Quax for his supervision

Models

Information toolbox

Plotting toolbox

IO toolbox

- Fast

- Extendable

- User-friendly

Information toolbox

References

- Glauber, R. J. Time-dependent statistics of the Ising model. Journal of Mathematical Physics 4, 294–307 (1963).

- Quax, R., Apolloni, A. & Sloot, P. M. A. The diminishing role of hubs in dynamical processes on complex networks. Journal of the Royal Society Interface 10, 20130568 (2013). arXiv:1111.5483.

- Quax, R., Har-Shemesh, O., Thurner, S. & Sloot, P. Stripping syntax from complexity: An information-theoretical perspective on complex systems. arXiv preprint arXiv:1603.03552 (2016).

- Harush, U. & Barzel, B. Dynamic patterns of information flow in complex networks. Nature Communications 8, 1–11 (2017).

- Fried, E. I. et al. From loss to loneliness: The relationship between bereavement and depressive symptoms. Journal of Abnormal Psychology 124, 256–265 (2015).

Figure panels: information impact, betweenness, degree, current flow, eigenvector; low causal impact, high causal impact

Shannon (1948)

Figure: two binary variables A and B (states 0 and 1) with distributions P(A) and P(B | A = a), illustrating entropy and mutual information

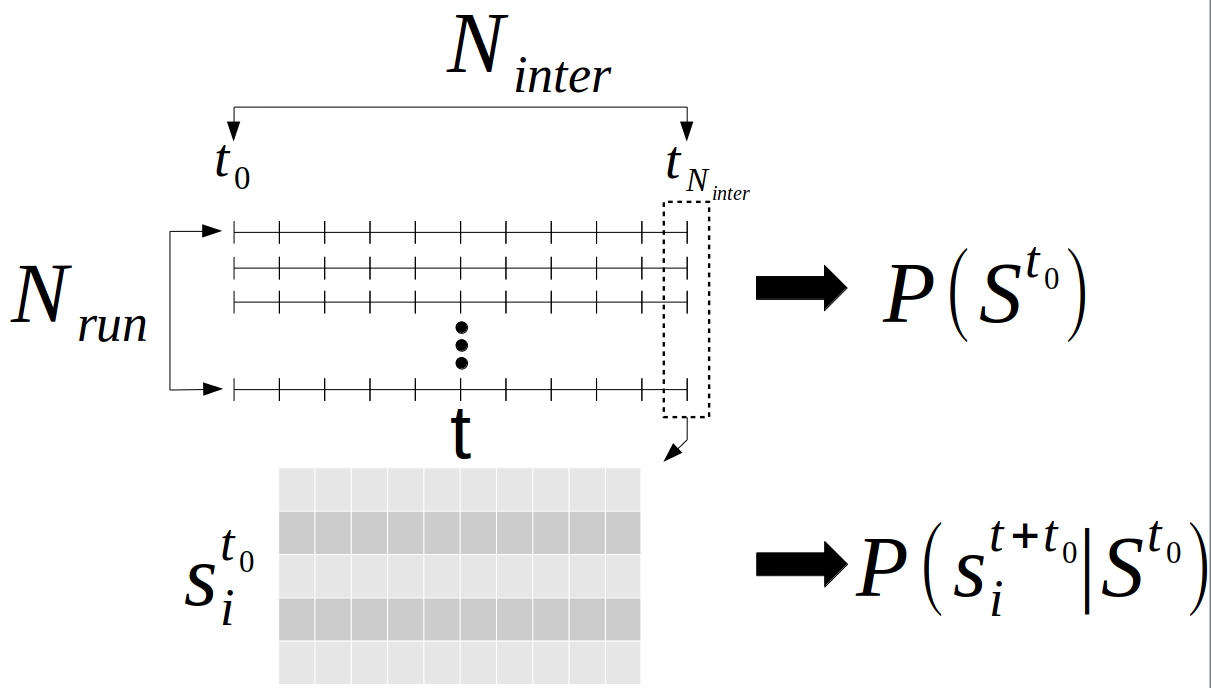

Numerical methods

- Monte Carlo methods

- Compare information impact and centrality metrics

- Intervention:

  - Underwhelming

  - Overwhelming

Statistical procedure

- Quantify accuracy with random forest classifier

- Validation with Leave-One-Out cross-validation

m = number of regressors

N = number of samples
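The slide names the classifier and the validation scheme but not the code. A minimal sketch with scikit-learn, assuming a random forest trained on synthetic data with N samples and m regressors and scored by leave-one-out cross-validation (each of the N folds holds out a single sample). The data and the label rule are hypothetical, not the study's.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Hypothetical data: N samples, m regressors (e.g. one score per metric),
# and a binary label that depends mostly on the last regressor.
rng = np.random.default_rng(0)
N, m = 40, 5
X = rng.normal(size=(N, m))
y = (X[:, 4] + 0.1 * rng.normal(size=N) > 0).astype(int)

clf = RandomForestClassifier(n_estimators=50, random_state=0)
scores = cross_val_score(clf, X, y, cv=LeaveOneOut())  # one 0/1 score per held-out sample
print(f"LOO accuracy: {scores.mean():.2f}")

clf.fit(X, y)
print(clf.feature_importances_)  # which regressors the forest relies on
```

Leave-one-out uses every sample for testing exactly once, which suits small N at the cost of fitting N models.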