Image of a robot

Optimal action:

the reward was learned here

there will be problems here

The case of known environment dynamics

Planning with and without feedback

What if the environment's model is given?

Reminder:

Previously:

- observed only samples from the environment

- not able to start from an arbitrary state

Now the environment's model is fully accessible:

- can plan in our mind without interaction

- we assume rewards are known too!

The model is either stochastic, \(s_{t+1} \sim p(s_{t+1} | s_t, a_t)\), or deterministic, \(s_{t+1} = f(s_t, a_t)\)

Interaction vs. Planning

In deterministic environments

Model-free RL (interaction):

agent

environment


Model-based RL (open-loop planning):

agent

environment

optimal plan

Planning in stochastic environments

Plan:

Reality:

Closed-loop planning (Model Predictive Control - MPC):

agent

environment

optimal plan


- Apply only the first action of the plan!

- Discard all the other actions!

- Replan at the new state!
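The closed-loop rule can be sketched as a short loop. This is a minimal sketch under stated assumptions, not a reference implementation: the 1-D toy environment, `GOAL`, `model_step`, `real_step`, `plan`, and `mpc` are hypothetical names introduced for illustration, and the planner is a brute-force search over short action sequences.

```python
import itertools
import random

ACTIONS = (-1, 0, 1)   # hypothetical discrete action set
GOAL = 5               # hypothetical target position


def model_step(s, a):
    """Deterministic model used for planning (assumed to be known)."""
    return s + a


def real_step(s, a, rng):
    """Real environment: the model plus occasional noise."""
    return s + a + rng.choice((-1, 0, 0, 0, 1))


def plan(s, horizon):
    """Open-loop plan: the action sequence with the lowest cost under the model."""
    def total_cost(seq):
        x, cost = s, 0
        for a in seq:
            x = model_step(x, a)
            cost += abs(x - GOAL)
        return cost
    return min(itertools.product(ACTIONS, repeat=horizon), key=total_cost)


def mpc(s, n_steps, horizon, rng):
    """Closed-loop control: replan from the observed state at every step."""
    for _ in range(n_steps):
        actions = plan(s, horizon)   # plan from the current state
        a = actions[0]               # apply only the first action
        s = real_step(s, a, rng)     # the rest of the plan is discarded
    return s


final_state = mpc(s=0, n_steps=30, horizon=3, rng=random.Random(0))
```

Because the plan is recomputed from the observed state, a noisy transition is corrected at the next step instead of accumulating along a fixed open-loop plan.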

How to plan?

Continuous actions:

- Linear Quadratic Regulator (LQR)

- iterative LQR (iLQR)

- Differential Dynamic Programming (DDP)

- ....

Discrete actions:

- Monte-Carlo Tree Search

- ....

- ....

Planning as tree search

Tree Search

Deterministic dynamics case

reminder:

apply \(s' = f(s, a)\) to follow the tree

assume only terminal rewards!


- Full search is exponentially hard!

- We do not need to track states: the sequence of actions contains all the required information
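Both points can be seen in a small sketch of exhaustive search. It is a toy illustration under assumptions: `full_search`, the integer state, and the reward `-|s - 3|` are hypothetical and chosen only to keep the example short.

```python
import itertools


def full_search(s0, actions, f, terminal_reward, depth):
    """Enumerate all len(actions)**depth action sequences and keep the best one."""
    best_seq, best_r = None, float("-inf")
    n_evaluated = 0
    for seq in itertools.product(actions, repeat=depth):
        s = s0
        for a in seq:            # states are recomputed from the actions alone
            s = f(s, a)
        r = terminal_reward(s)   # only the terminal reward is used
        n_evaluated += 1
        if r > best_r:
            best_seq, best_r = seq, r
    return best_seq, best_r, n_evaluated


# Toy deterministic problem: move along the integers and finish as close to 3 as possible.
best_seq, best_r, n_evaluated = full_search(
    s0=0,
    actions=(-1, 1),
    f=lambda s, a: s + a,
    terminal_reward=lambda s: -abs(s - 3),
    depth=3,
)
```

The number of evaluated sequences is `len(actions) ** depth`, which is the exponential cost mentioned above.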

Tree Search

Stochastic dynamics case

apply \(s' \sim p(s'|s, a)\) to follow the tree

assume only terminal rewards!

Now we need an infinite number of runs through the tree!


- The problem is even harder!

If the dynamics noise is small, ignore the stochasticity and use the approach for deterministic dynamics.

The resulting actions will be suboptimal, but with small noise this is often an acceptable price.

Monte-Carlo Tree Search (MCTS)

Monte-Carlo Tree Search: v0.5

also known as Pure Monte-Carlo Game Search

Simulate with some policy \(\pi_O\) and calculate reward-to-go

We need an infinite number of runs to converge!


- Not as hard as full search, but still expensive!

- Gives a plan that is better than following \(\pi_O\), but still suboptimal!

- The better the plan, the harder the problem!
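A minimal sketch of this v0.5 scheme, assuming a uniformly random rollout policy in the role of \(\pi_O\). The toy problem (a walk of five ±1 steps that pays 1 if the final position is positive) and all function names are hypothetical.

```python
import random


def rollout(s, f, actions, is_terminal, reward, rng):
    """Follow a uniformly random rollout policy until a terminal state."""
    while not is_terminal(s):
        s = f(s, rng.choice(actions))
    return reward(s)


def pure_mc_search(s, f, actions, is_terminal, reward, n_rollouts, rng):
    """Estimate Q(s, a) by averaging rollouts, sampling every action equally often."""
    q = {}
    for a in actions:
        s_next = f(s, a)
        returns = [rollout(s_next, f, actions, is_terminal, reward, rng)
                   for _ in range(n_rollouts)]
        q[a] = sum(returns) / n_rollouts
    return max(q, key=q.get), q


# Toy problem: state = (position, steps_left); only the terminal state is rewarded.
best_a, q = pure_mc_search(
    s=(0, 5),
    f=lambda s, a: (s[0] + a, s[1] - 1),
    actions=(-1, 1),
    is_terminal=lambda s: s[1] == 0,
    reward=lambda s: 1.0 if s[0] > 0 else 0.0,
    n_rollouts=500,
    rng=random.Random(0),
)
```

Every action receives the same number of rollouts here, which is exactly the inefficiency that the questions on the following slide point at.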

Is it necessary to explore all the actions with the same frequency?

Maybe we'd better explore actions with a higher estimate of \(Q(s, a)\) ?

Early on, we should also give an exploration bonus to the least explored actions!

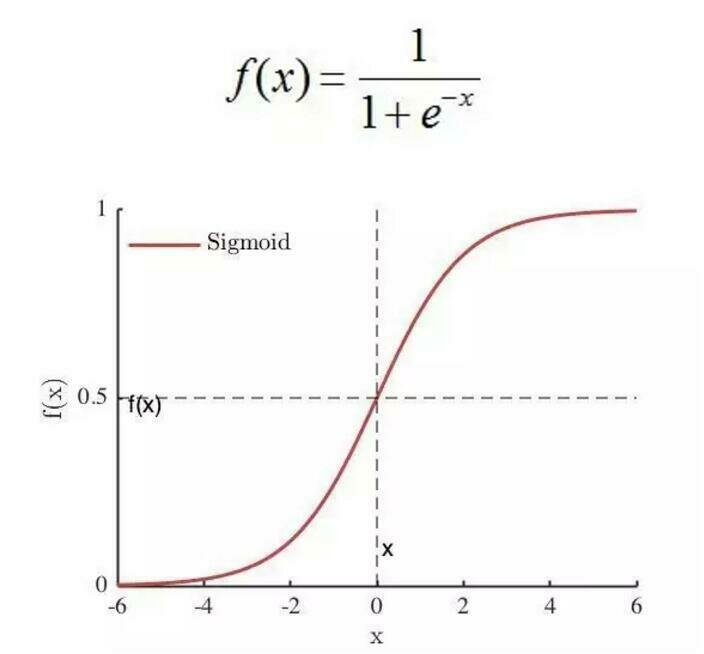

Upper Confidence Bound for Trees (UCT)

Basic Upper Confidence Bound for Bandits:

Th.: Given some assumptions the following is true:

Upper Confidence Bound bonus for MCTS:

We should choose actions that maximize the following value, where \(s' = f(s, a)\):

\(W(s, a) = V(s') + c\sqrt{\frac{\log n(s)}{n(a)}}\)
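A minimal sketch of such a score, assuming the bonus has the same form as the one given for Minimax MCTS later in this deck, \(c\sqrt{\log n(s) / n(a)}\). The function name, the argument names, and the example statistics are hypothetical.

```python
import math


def uct_score(child_value, child_visits, parent_visits, c):
    """Value estimate plus exploration bonus; unvisited actions get an infinite bonus."""
    if child_visits == 0:
        return math.inf
    return child_value + c * math.sqrt(math.log(parent_visits) / child_visits)


# Example: action "a" looks better on average, but "b" has barely been tried.
stats = {"a": (0.6, 50), "b": (0.5, 2)}   # action -> (value estimate, visits)
parent_visits = 52
explore = max(stats, key=lambda k: uct_score(*stats[k], parent_visits, c=1.0))
exploit = max(stats, key=lambda k: uct_score(*stats[k], parent_visits, c=0.0))
```

With `c = 1.0` the rarely tried action wins because of its bonus; with `c = 0.0` the choice is purely greedy with respect to the value estimates.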

Monte-Carlo Tree Search: v1.0

Simulate with some policy \(\pi_O\) and calculate reward-to-go

For each state we store a tuple:

\( \big(\Sigma, n(s) \big) \)

\(\Sigma\) - cumulative reward

\(n(s)\) - counter of visits

Stages:

- Forward

- Expand

- Rollout

- Backward

and again, and again....
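The four stages can be sketched as a small runnable example. This is a toy illustration under assumptions, not the exact algorithm from these slides: the `Node` class, the ±1 walk of length `DEPTH`, the terminal reward, and the uniformly random rollout policy are all hypothetical choices made to keep the code self-contained.

```python
import math
import random

ACTIONS = (-1, 1)   # hypothetical toy action set
DEPTH = 4           # episode length of the toy problem


class Node:
    def __init__(self, state, parent=None):
        self.state = state      # (position, steps_left)
        self.parent = parent
        self.children = {}      # action -> Node
        self.sigma = 0.0        # cumulative reward
        self.n = 0              # counter of visits


def step(state, a):
    return (state[0] + a, state[1] - 1)


def is_terminal(state):
    return state[1] == 0


def reward(state):
    # Terminal reward in [0, 1]: a larger final position is better.
    return (state[0] + DEPTH) / (2 * DEPTH)


def ucb(parent, child, c):
    return child.sigma / child.n + c * math.sqrt(math.log(parent.n) / child.n)


def forward(node, c):
    # Forward: descend while the node is fully expanded and not terminal.
    while not is_terminal(node.state) and len(node.children) == len(ACTIONS):
        node = max(node.children.values(), key=lambda ch: ucb(node, ch, c))
    return node


def expand(node):
    # Expand: add one child for an action that has not been tried yet.
    if is_terminal(node.state):
        return node
    a = next(a for a in ACTIONS if a not in node.children)
    node.children[a] = Node(step(node.state, a), parent=node)
    return node.children[a]


def rollout(state, rng):
    # Rollout: follow a uniformly random policy to a terminal state.
    while not is_terminal(state):
        state = step(state, rng.choice(ACTIONS))
    return reward(state)


def backward(node, r):
    # Backward: update the statistics on the path from the leaf to the root.
    while node is not None:
        node.n += 1
        node.sigma += r
        node = node.parent


def mcts(root_state, n_iter, c, rng):
    root = Node(root_state)
    for _ in range(n_iter):
        leaf = expand(forward(root, c))
        backward(leaf, rollout(leaf.state, rng))
    # The final choice uses the value estimates only (no exploration bonus).
    best = max(root.children,
               key=lambda a: root.children[a].sigma / root.children[a].n)
    return best, root


best_action, root = mcts((0, DEPTH), n_iter=500, c=1.0, rng=random.Random(0))
```

Each node stores exactly the tuple \((\Sigma, n(s))\), and the value estimate used in the bonus formula is their ratio.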

Monte-Carlo Tree Search

Python pseudo-code

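def mcts(root, n_iter, c):
    # Grow the tree for n_iter iterations, then act greedily (no bonus).
    for _ in range(n_iter):
        leaf = forward(root, c)
        reward_to_go = rollout(leaf)
        backpropagate(leaf, reward_to_go)
    return best_action(root, c=0)


def forward(node, c):
    # Descend while every action of the current node has been tried.
    # Assumption: dynamics() returns the stored child node if it exists.
    while is_all_actions_visited(node):
        a = best_action(node, c)
        node = dynamics(node, a)
        if is_terminal(node):
            return node
    # Expand: an unvisited action has an infinite bonus, so it is chosen here.
    a = best_action(node, c)
    child = dynamics(node, a)
    add_child(node, child)
    return child


def rollout(node):
    # Simulate with the rollout policy until a terminal state is reached.
    while not is_terminal(node):
        a = rollout_policy(node)
        node = dynamics(node, a)
    return reward(node)


def backpropagate(node, reward_to_go):
    # Update statistics from the leaf up to and including the root,
    # so that n(s) of the root is available for the exploration bonus.
    while True:
        node.n_visits += 1
        node.cumulative_reward += reward_to_go
        if is_root(node):
            return
        node = parent(node)

Opponent modeling in board games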

Minimax MCTS

agent

environment

The environment's dynamics is unknown!

environment

The environment's dynamics is now known!

agent

maximizing return

minimizing return


There are just a few differences compared to the MCTS v1.0 algorithm:

- Track which player is to move

- \(o = 1\) if it is player 1's move, else \(o = -1\)

- During forward pass, best actions now should maximize:

\(W(s, a) = oV(s') + c\sqrt{\frac{\log n(s)}{n(a)}}\)

- The best action, computed by MCTS is now:

\(a^* = \arg\max_a oQ(s, a) \)

- Other stages are not changed at all!
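The sign flip can be sketched as follows. It is a minimal sketch under the assumption that all value estimates are stored from player 1's point of view; `minimax_score`, `best_action`, and the example statistics are hypothetical.

```python
import math


def minimax_score(child_value, child_visits, parent_visits, c, player):
    """W(s, a) = o * V(s') + c * sqrt(log n(s) / n(a)) with o = +1 or -1."""
    o = 1 if player == 1 else -1
    if child_visits == 0:
        return math.inf
    bonus = c * math.sqrt(math.log(parent_visits) / child_visits)
    return o * child_value + bonus


def best_action(children, parent_visits, c, player):
    """children: dict mapping action -> (value estimate V(s'), visit count)."""
    return max(children,
               key=lambda a: minimax_score(*children[a], parent_visits, c, player))


children = {"left": (0.8, 10), "right": (-0.3, 10)}
move_p1 = best_action(children, parent_visits=20, c=0.0, player=1)
move_p2 = best_action(children, parent_visits=20, c=0.0, player=2)
```

Only the value term changes sign; the exploration bonus is the same for both players.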

Policy improvement with Monte-Carlo Tree Search

Policy Iteration guided by MCTS

You may have noticed that MCTS does roughly the following:

- Estimate the value \(V^{\pi_O}\) for the rollout policy \(\pi_O\) using Monte-Carlo samples

- Compute its improvement as \(\pi_O^{MCTS}(s) \leftarrow MCTS(s, \pi_O)\)

But then we just throw \(\pi_O^{MCTS}\) and \(V^{\pi_O}\) away and recompute them from scratch!

We can use two Neural Networks to simplify and improve computations:

- \(V_\phi\) that will capture state-values and will be used instead of rollout estimates

- \(\pi_\theta\) that will learn from MCTS improvements

MCTS algorithm from AlphaZero

During the forward stage:

- At leaf states \(s_L\), we are not required to make rollouts - we already have its value:

\(V(s_L) = V_\phi(s_L)\)

or we can still do a rollout: \(V(s_L) = \lambda V_\phi(s_L) + (1-\lambda)\hat{V}(s_L)\)

- The exploration bonus is now changed - the policy guides exploration:

\(W(s') = V(s') + c\frac{\pi_\theta(a|s)}{1+ n(s')}\)

There are no infinite bonuses

Now, the output of MCTS\((s)\) is not the best action for \(s\), but rather a distribution:

\( \pi_\theta^{MCTS}(a|s) \propto n(f(s, a))^{1/\tau}\)

Other stages are not affected.
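The two changes can be sketched directly from the formulas above. The function and argument names are hypothetical; the visit counts in the example are made up for illustration.

```python
def az_score(value, prior, child_visits, c):
    """W(s') = V(s') + c * pi_theta(a|s) / (1 + n(s')); finite even when n(s') = 0."""
    return value + c * prior / (1 + child_visits)


def visit_distribution(visit_counts, tau):
    """pi^MCTS(a|s) proportional to n(f(s, a)) ** (1 / tau)."""
    weights = {a: n ** (1.0 / tau) for a, n in visit_counts.items()}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


counts = {"a": 30, "b": 10}
pi_soft = visit_distribution(counts, tau=1.0)    # proportional to the counts
pi_sharp = visit_distribution(counts, tau=0.5)   # lower tau concentrates on "a"
```

A smaller \(\tau\) makes the distribution closer to the greedy choice of the most visited action.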

Assume we have a rollout policy \(\pi_\theta\) and a corresponding value function \(V_\phi\)

But how to improve parameters \(\theta\)?

And update \(\phi\) for the new policy?

Policy Iteration through self-play

Play several games against yourself:

Keep the tree throughout the game!

Store the triples: \( (s_t, \pi_\theta^{MCTS}(s_t), R), \;\; \forall t\)

Once in a while, sample batches \( (s, \pi_\theta^{MCTS}, R) \) from the buffer and minimize:

Better to share parameters of NNs
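The objective itself is not preserved in this text. The sketch below assumes it matches the loss published for AlphaZero: a squared error between the value prediction and the game outcome \(R\), plus the cross-entropy between the MCTS distribution and the network policy (the weight regularization term is omitted). The function and argument names are hypothetical.

```python
import math


def alphazero_loss(v_pred, pi_pred, pi_mcts, R):
    """Per-sample loss: (R - V(s))^2 - sum_a pi_mcts(a) * log pi_pred(a)."""
    value_loss = (R - v_pred) ** 2
    # Terms with zero target probability contribute nothing and are skipped.
    policy_loss = -sum(t * math.log(p)
                       for t, p in zip(pi_mcts, pi_pred) if t > 0)
    return value_loss + policy_loss


# One sample: MCTS strongly prefers the first action and the game was won (R = 1).
loss_far = alphazero_loss(v_pred=0.5, pi_pred=[0.5, 0.5], pi_mcts=[1.0, 0.0], R=1.0)
loss_near = alphazero_loss(v_pred=0.9, pi_pred=[0.9, 0.1], pi_mcts=[1.0, 0.0], R=1.0)
```

In practice the loss would be averaged over a batch sampled from the buffer and minimized with respect to the shared network parameters.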

AlphaZero results


The case of unknown dynamics

What if the dynamics is unknown?

\( f(s, a) \) - ????

If we assume the dynamics to be unknown but deterministic,

then we can note the following:

- states are fully determined by the applied actions

- during MCTS the states themselves are not required if \( V^{\pi_O}(s), \pi_O(s), r(s) \) are known (rewards are included here to cover environments more general than board games)

- we can learn these quantities directly from transitions of a real environment


The easiest motivation ever!

Previously, in AlphaZero we had:

\(s_{root}, a_0, \dots, a_L\) → dynamics → \(s_L\) → get value and policy: \(V_\theta (s_L), \pi_\theta (s_L) = f_\theta(s_L)\)

Now the dynamics is not available!

Thus, we will throw all the available variables into a larger NN:

\(s_{root}, a_0, \dots, a_L\) → get value and policy of future states: \(V_\theta (s_L), \pi_\theta (s_L) = f_\theta(s_L)\)

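Architecture of the Neural Network

[Diagram: an encoder produces the latent state \(z_t\); \(g_\theta(z_t, a_t)\) gives \(z_{t+1}\) together with the estimate of \(r(s, a)\); \(f_\theta(z_{t+1})\) gives value and policy; the chain continues with \(g_\theta(z_{t+1}, a_{t+1})\), \(f_\theta(z_{t+2})\), . . .]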
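The chain of networks can be sketched as an unroll over a sequence of actions. This is a sketch under assumptions: `encoder`, `g`, and `f` are passed in as plain callables, their output shapes are hypothetical, and the stand-in functions in the example are toys rather than trained networks.

```python
def unroll(encoder, g, f, observation, actions):
    """Unroll a learned model along a sequence of actions.

    Assumed interfaces (hypothetical):
      encoder(observation) -> latent state z
      g(z, a)              -> (next latent state, reward estimate)
      f(z)                 -> (value, policy)
    """
    z = encoder(observation)
    outputs = []
    for a in actions:
        z, r = g(z, a)      # the real environment is never queried here
        v, pi = f(z)
        outputs.append((r, v, pi))
    return outputs


# Toy stand-ins for the three networks.
outputs = unroll(
    encoder=lambda obs: float(obs),
    g=lambda z, a: (z + a, -abs(z + a)),
    f=lambda z: (0.5 * z, [0.5, 0.5]),
    observation=1,
    actions=[1, -1],
)
```

Only the root observation comes from the environment; every later quantity is computed from the latent state and the chosen actions.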

MCTS in MuZero

Simulate with some policy \(\pi_O\) and calculate reward-to-go

For each state we store a tuple:

\( \big(\Sigma, n(s) \big) \)

\(\Sigma\) - cumulative reward

\(n(s)\) - counter of visits

Stages:

- Forward

- Expand

- Rollout

- Backward

and again, and again....


MuZero: article

Play several games:

. . .

Store whole games: \( (..., s_t, a_t, \pi_t^{MCTS}, r_t, u_t, s_{t+1}, ...)\)

Randomly pick a state \(s_i\) from the buffer together with the subsequence of length \(K\) that starts at it
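The sampling step can be sketched as follows, assuming the buffer is a list of games and each game is a list of per-step records. `sample_subsequence` and the integer records in the example are hypothetical stand-ins for the stored tuples.

```python
import random


def sample_subsequence(games, K, rng):
    """Pick a random game and a start index i, return the steps i .. i + K - 1."""
    candidates = [g for g in games if len(g) >= K]   # games long enough for K steps
    game = rng.choice(candidates)
    i = rng.randrange(len(game) - K + 1)
    return i, game[i:i + K]


# Toy buffer: step records are replaced by their time index.
games = [list(range(10)), list(range(3))]
i, subsequence = sample_subsequence(games, K=5, rng=random.Random(0))
```

The first record of the subsequence plays the role of the root state, and the following \(K - 1\) records provide the actions and targets for the unrolled model.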

MuZero: results


WOW

It works!