Zero to DevOps in

Under an Hour with

http://slides.com/dalealleshouse/kube-pi

Who is this guy?

Dale Alleshouse

@HumpbackFreak

hideoushumpbackfreak.com

github.com/dalealleshouse

Agenda

- What is Kubernetes?

- Kubernetes Goals

- Kubernetes Basic Architecture

- Awesome Kubernetes Demo!

- What Now?

What is Kubernetes?

- AKA K8S (K-8 letters-S)

- Greek for Ship's Captain (κυβερνήτης)

- Google's Open Source Container Management System

- https://github.com/kubernetes

- Lessons Learned from Borg/Omega

- K8S is speculated to replace Borg

- Released June 2014

- 1.0 Release July, 2015

- Currently in Version 1.9

- Highest Market Share

- Bundled with Docker Enterprise

K8S Goals

- Container vs. VM Focus

- Portable (Run everywhere)

- General Purpose

- Any workload - Stateless, Stateful, batch, etc...

- Flexible (consume wholesale or a la carte)

- Extensible

- Automatable

- Advance the state of the art

- cloud-native and DevOps Focused

- mechanisms for slow monolith/legacy migrations

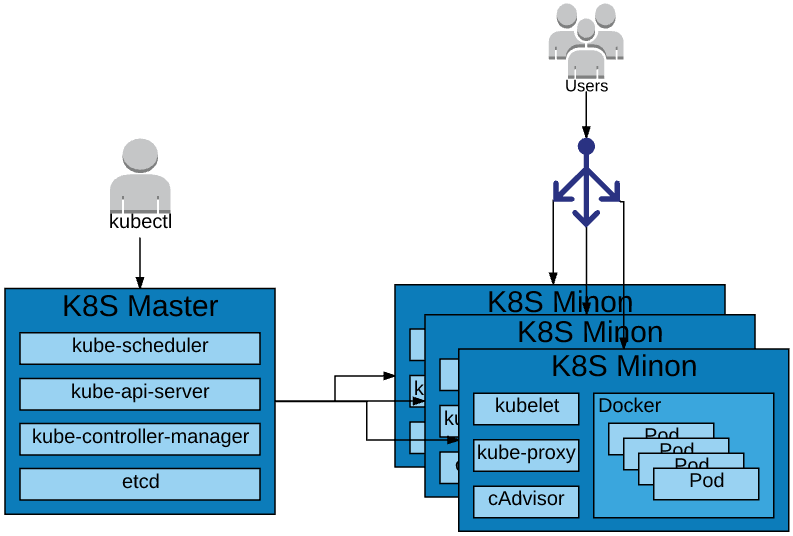

K8S Architecture

More Architecture Info

- Omega: flexible, scalable schedulers for large compute clusters

- https://research.google.com/pubs/pub41684.html

- Large-scale cluster management at Google with Borg

- https://research.google.com/pubs/pub43438.html

- Borg, Omega, and Kubernetes

- https://research.google.com/pubs/pub44843.html

- https://github.com/kubernetes/community

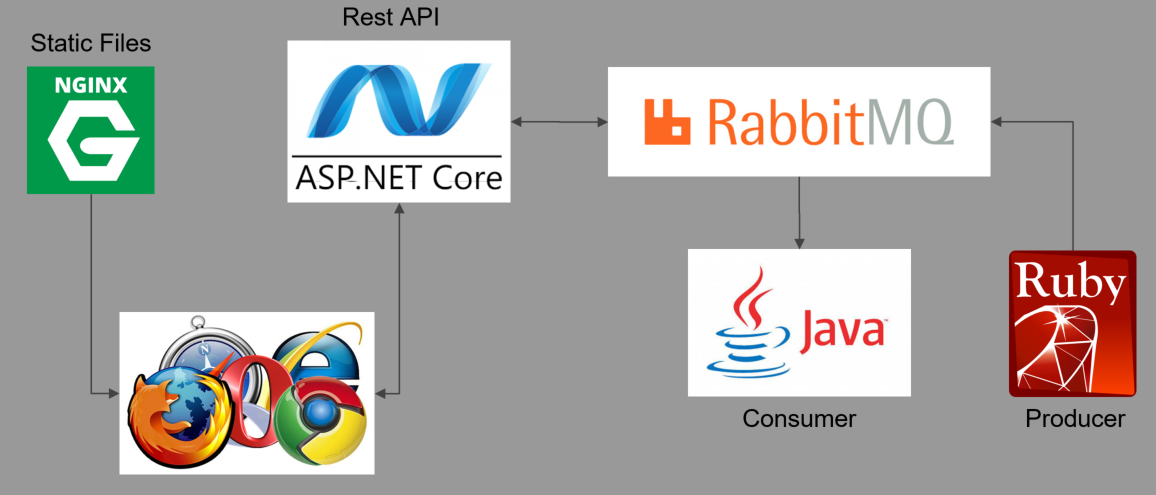

Demo System

https://github.com/dalealleshouse/zero-to-devops/tree/pi

Setup

- Cluster

- 4 Raspberry Pis

- kubeadm

- https://www.hanselman.com/blog/HowToBuildAKubernetesClusterWithARMRaspberryPiThenRunNETCoreOnOpenFaas.aspx

- Static IP - Assigned by Router

- DNS - Hosts File

- Docker Hub

- Images downloaded locally before demo

Deployments

- Deployments consist of pods and replica sets

- Pod - One or more containers in a logical group

- Replica set - controls number of pod replicas
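To make the relationship between these three objects concrete, here is a minimal sketch of a Deployment manifest for the html-frontend container. It is an illustration only, not a copy of the file in the demo repository; the `apiVersion` and the replica count are assumptions.

```yaml
# Hypothetical manifest for illustration; field values mirror the
# kubectl run flags used in this demo where possible.
apiVersion: apps/v1            # assumption: cluster serves apps/v1 (1.9+)
kind: Deployment
metadata:
  name: html-frontend
  labels:
    app: html-frontend
spec:
  replicas: 1                  # the replica set keeps this many pods running
  selector:
    matchLabels:
      app: html-frontend       # which pods belong to this deployment
  template:                    # the pod definition
    metadata:
      labels:
        app: html-frontend
    spec:
      containers:              # a pod may hold more than one container
        - name: html-frontend
          image: dalealleshouse/html-frontend:1.0
          ports:
            - containerPort: 80
          env:
            - name: STATUS_HOST
              value: "k8-master:31111"
```

The `template` block is the pod, `replicas` is what the replica set enforces, and the Deployment wraps both.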

# Create a deployment for each container in the demo system

kubectl run html-frontend --image=dalealleshouse/html-frontend:1.0 --port=80 \
  --env STATUS_HOST=k8-master:31111 --labels="app=html-frontend"

kubectl run java-consumer --image=dalealleshouse/java-consumer:1.0

kubectl run ruby-producer --image=dalealleshouse/ruby-producer:1.0

kubectl run status-api --image=dalealleshouse/status-api:1.0 --port=5000 \
  --labels="app=status-api"

kubectl run queue --image=arm32v6/rabbitmq:3.7-management-alpine

# View the pods created by the deployments

kubectl get pods

# Run docker type commands on the containers

kubectl exec -it *POD_NAME* bash

kubectl logs *POD_NAME*

Services

Services provide a durable end point

# Notice the java-consumer cannot connect to the queue

kubectl logs *java-consumer-pod*

# The following command makes the queue discoverable via the name queue

kubectl expose deployment queue --port=15672,5672 --name=queue

# Running the command again shows that it is connected now

kubectl logs *java-consumer-pod*

The above creates only an internal endpoint; the commands below create externally accessible endpoints

# Create an endpoint for the HTML page and one for the REST API service

kubectl create service nodeport html-frontend --tcp=80:80 --node-port=31112

kubectl create service nodeport status-api --tcp=80:5000 --node-port=31111

The website is now externally accessible at the cluster endpoint
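For comparison, the status-api NodePort service could be written declaratively roughly as follows. This is a sketch rather than the demo's actual file; the port name `http` is a hypothetical label added for readability.

```yaml
# Hypothetical equivalent of:
#   kubectl create service nodeport status-api --tcp=80:5000 --node-port=31111
apiVersion: v1
kind: Service
metadata:
  name: status-api
  labels:
    app: status-api
spec:
  type: NodePort
  selector:
    app: status-api      # matches the --labels value given to kubectl run
  ports:
    - name: http         # assumption: illustrative name only
      port: 80           # port exposed inside the cluster
      targetPort: 5000   # port the container listens on
      nodePort: 31111    # port opened on every node
```

The `nodePort` value is the same 31111 that the html-frontend deployment receives through `STATUS_HOST`.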

Infrastructure as Code

The preferred alternative to using shell commands is storing configuration in yaml files. See the kube directory
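As an example of what one such file might look like, here is a sketch of a Service for the queue. It is not taken from the kube directory. The `run: queue` selector assumes the default label that `kubectl run` applied in this version, and the port names are hypothetical.

```yaml
# Hypothetical manifest for illustration only
apiVersion: v1
kind: Service
metadata:
  name: queue              # other pods reach RabbitMQ via this DNS name
spec:
  selector:
    run: queue             # assumption: default label set by kubectl run
  ports:
    - name: management     # a multi-port service needs a name per port
      port: 15672
    - name: amqp
      port: 5672
```

Keeping files like this in source control means the whole system can be recreated or reviewed like any other code.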

# Delete all objects made previously

# Each object has a matching file in the kube directory

kubectl delete -f kube/

# Recreate everything

kubectl create -f kube/

Default Monitoring

K8S provides a default dashboard and a default monitoring component called Heapster

# configuration specific - most cloud providers have something similar

# create a tunnel from the cluster to the local machine

kubectl proxy

# Get the authentication token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | \
  grep admin-user | awk '{print $1}')

http://localhost:8001/ui

Scaling

K8S will automatically load balance requests to a service between all replicas. Making subsequent requests to the html page reveals different pod names.

# Scale the html-frontend (NGINX) deployment to 3 replicas

kubectl scale deployment html-frontend --replicas=3

K8S can create replicas easily and quickly

Auto-Scaling

K8S can scale based on load.

# Maintain between 1 and 5 replicas based on CPU usage

kubectl autoscale deployment java-consumer --min=1 --max=5 --cpu-percent=50
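The same autoscaling policy can be expressed as a manifest. The sketch below assumes the `autoscaling/v1` API and a Deployment served under `apps/v1`; adjust both to match the cluster. CPU-based scaling is measured against each container's CPU request, so it also assumes the java-consumer pods declare one.

```yaml
# Hypothetical equivalent of the kubectl autoscale command above
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: java-consumer
spec:
  scaleTargetRef:
    apiVersion: apps/v1     # assumption: API group of the target Deployment
    kind: Deployment
    name: java-consumer
  minReplicas: 1
  maxReplicas: 5
  targetCPUUtilizationPercentage: 50
```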

# Run this repeatedly to see # of replicas created

# Also, the "In Process" number on the web page will reflect the number of replicas

kubectl get deployments

Self Healing

K8S will automatically restart any pods that die.

# Find nodes running html-frontend

kubectl describe nodes | grep -E 'html-frontend|Name:'

# Simulate a failure by unplugging a network cable

# Pods are automatically regenerated

kubectl get pods

Health Checks

If the endpoint check fails, K8S automatically kills the container and starts a new one

# Find front end pod

kubectl get pods

# Simulate a failure by manually deleting the health check file

kubectl exec *POD_NAME* rm /usr/share/nginx/html/healthz.html

# Notice the restart

kubectl get pods

# See the restart in the event log

kubectl get events | grep *POD_NAME*

...
livenessProbe:
  httpGet:
    path: /healthz.html
    port: 80
  initialDelaySeconds: 3
  periodSeconds: 2
readinessProbe:
  httpGet:
    path: /healthz.html
    port: 80
  initialDelaySeconds: 3
  periodSeconds: 2

Specify health and readiness checks in YAML

Rolling Deployment

K8S will update one pod at a time so there is no downtime for updates
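How many pods are replaced at once is controlled by the Deployment's update strategy. The fragment below is a sketch with illustrative values, not the demo's configuration; when the strategy is left unset, Kubernetes applies its own defaults.

```yaml
# Fragment of a Deployment spec (not a complete manifest); values are
# assumptions chosen to show a strict one-at-a-time rollout.
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # start at most one extra pod during the update
      maxUnavailable: 0    # never drop below the desired replica count
```

With these values, each new pod must become ready before an old one is removed, which is what keeps the service available throughout the update.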

# Update the image on the deployment

kubectl set image deployment/html-frontend html-frontend=dalealleshouse/html-frontend:2.0

# Run repeatedly to see the number of available replicas

kubectl get deployments

Viewing the HTML page shows an error. K8S makes it easy to roll back deployments

# Roll back the deployment to the old image

kubectl rollout undo deployment html-frontend

What Now?

- K8S Docs

- https://kubernetes.io/docs/home/

- Free Online Course from Google

- https://www.udacity.com/course/scalable-microservices-with-kubernetes--ud615

- Maybe I can Help

- @HumpbackFreak

Thank You!

DON'T FORGET THE SURVEY!!