Motivation

Hamiltonian NNs

Symplectic methods

Conserve a modified energy over long times

Conserve the correct energy over long times

Being iterative methods, they accumulate errors

Provide a smooth representation of the solution that is not built from previously computed positions

High cost to solve large systems

It was shown that this accumulation of errors does not occur for neural-network differential equation solvers

Hamiltonian neural networks

GOAL: Solve for \(t\in [0,T]\) the known system
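
Assuming the system has the canonical Hamiltonian form that the later error analysis relies on (the state \(z\in\mathbb{R}^{2n}\), the Hamiltonian \(H\), the symplectic matrix \(J\) and the initial condition \(z_0\) are notation assumed here), it can be written as

$$\dot{z}(t) = J\,\nabla H(z(t)),\qquad z(0)=z_0,\qquad J=\begin{pmatrix}0 & I_n\\ -I_n & 0\end{pmatrix}$$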

STRATEGY: Model the solution with a network defined as
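
A common way to build such a network-based trial solution, consistent with the later discussion of \(f(t)\) and \(\mathcal{N}_{\theta}(t)\), is to enforce the initial condition by construction. As an assumption (the initial condition \(z_0\) is notation introduced here), it can be written as

$$\hat{z}(t) = z_0 + f(t)\,\mathcal{N}_{\theta}(t),\qquad f(0)=0$$

so that \(\hat{z}(0)=z_0\) for any choice of the parameters \(\theta\).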

Training

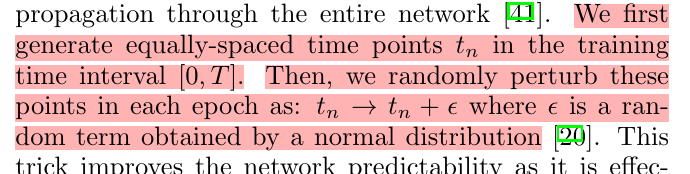

Introduce a temporal discretization

\(t_0=0<t_1<\dots<t_M=T\)

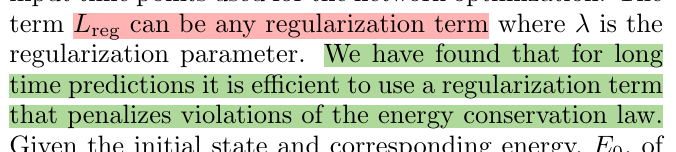

Then minimize the following loss function:

with \(\hat{z}_n := \hat{z}(t_n)\) and \(\dot{\hat{z}}_n := \frac{d}{dt}\hat{z}(t)\vert_{t=t_n}\)
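
The residual part of such a loss can be sketched in code. The example below is a minimal illustration, not the authors' implementation: it assumes the loss penalizes the mismatch between \(\dot{\hat{z}}_n\) and \(J\nabla H(\hat{z}_n)\), uses the harmonic oscillator \(H=(q^2+p^2)/2\) as an illustrative system, and approximates the time derivative by central differences instead of automatic differentiation. The names `J_grad_H`, `residual_loss`, `exact` and `perturbed` are hypothetical.

```python
import numpy as np

def J_grad_H(z):
    """J * grad H for the harmonic oscillator H = (q^2 + p^2) / 2 (illustrative choice)."""
    q, p = z[..., 0], z[..., 1]
    return np.stack([p, -q], axis=-1)

def residual_loss(z_hat, t, rhs=J_grad_H, eps=1e-5):
    """Mean squared residual of dz/dt = rhs(z) over the times t.

    z_hat: callable mapping an array of times (M,) to states (M, 2n).
    The derivative is a central difference (an assumption made for brevity);
    a network-based solver would more likely use automatic differentiation.
    """
    z_dot = (z_hat(t + eps) - z_hat(t - eps)) / (2.0 * eps)
    residual = z_dot - rhs(z_hat(t))
    return np.mean(np.sum(residual**2, axis=-1))

def exact(t):
    """Exact solution for z_0 = (1, 0): q = cos t, p = -sin t."""
    return np.stack([np.cos(t), -np.sin(t)], axis=-1)

def perturbed(t):
    """Exact solution plus a small smooth error in q."""
    error = np.stack([0.01 * np.sin(3.0 * t), np.zeros_like(t)], axis=-1)
    return exact(t) + error

t = np.linspace(0.0, 2.0 * np.pi, 101)  # t_0 = 0 < t_1 < ... < t_M = T
loss_exact = residual_loss(exact, t)          # close to zero
loss_perturbed = residual_loss(perturbed, t)  # clearly positive
```

The exact trajectory gives a residual at the level of the finite-difference error, while the perturbed one is penalized, which is the behaviour a training loop would exploit.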

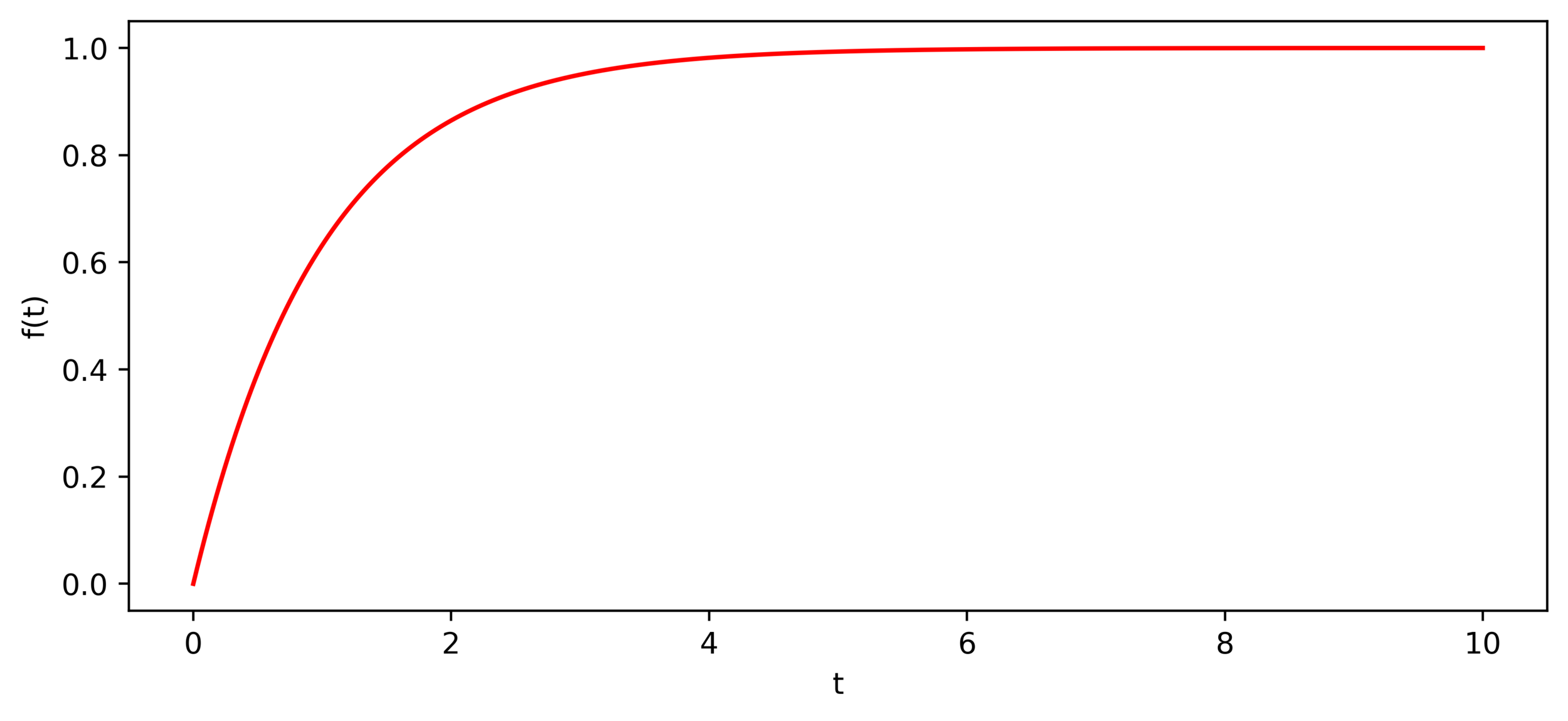

Choice of \(f(t)\)

They use the function \(f(t) = 1-e^{-t}\)

\(f\) rapidly tends to \(1\); hence, when \(\lambda=0\), for long enough times not only \(\hat{z}(t)\) but also \(\mathcal{N}_{\theta}(t)\) will be symplectic, since
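
One way to make this step explicit, assuming the trial solution has the form \(\hat{z}(t) = z_0 + f(t)\,\mathcal{N}_{\theta}(t)\) with \(\mathcal{N}_{\theta}\) bounded (the initial condition \(z_0\) is notation assumed here), is to differentiate and let \(t\) grow:

$$\dot{\hat{z}}(t) = e^{-t}\,\mathcal{N}_{\theta}(t) + \left(1-e^{-t}\right)\dot{\mathcal{N}}_{\theta}(t) \approx \dot{\mathcal{N}}_{\theta}(t)\quad\text{for } t\gg 1$$

Under this assumption \(\hat{z}(t)\approx z_0+\mathcal{N}_{\theta}(t)\), so the two differ only by a constant shift and share the same time derivative. For instance, \(f(5)=1-e^{-5}\approx 0.993\).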

Error analysis

\(\delta z_n:= z(t_n)-\hat{z}(t_n)\in\mathbb{R}^{2n}\)

\(H(z_n) \approx H(\hat{z}_n) + \nabla H(\hat{z}_n)^T \delta z_n + \frac{1}{2}\delta z_n^T \nabla^2H(\hat{z}_n)\delta z_n\)

\(\nabla H(\hat{z}_n) \approx \nabla H(z_n) - \nabla^2 H(\hat{z}_n)\delta z_n\)

Once \(\ell(t)\) is known, this gives an ODE for the error
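
A sketch of how such an ODE can arise, assuming that \(\ell(t)\) denotes the residual of the network solution in the equations of motion, \(\ell(t) := \dot{\hat{z}}(t) - J\nabla H(\hat{z}(t))\), and that the exact solution satisfies \(\dot{z} = J\nabla H(z)\) (both are assumptions; \(J\) is the canonical symplectic matrix):

$$\frac{d}{dt}\delta z = \dot{z} - \dot{\hat{z}} = J\nabla H(z) - J\nabla H(\hat{z}) - \ell \approx J\,\nabla^2 H(\hat{z})\,\delta z - \ell$$

The last step uses the linearization \(\nabla H(z) \approx \nabla H(\hat{z}) + \nabla^2 H(\hat{z})\,\delta z\), which is the expansion of \(\nabla H\) given above, rearranged.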

Suppose \(\bar{\ell}\geq \ell_n\) for every \(n\)

Suppose \(\delta z_n\) attains its maximum value and has a single nonzero component, \(\delta z_n = (0,\dots,0,\delta z_n^i,0,\dots,0)\)

Then we have

$$|\delta z_n^i|\leq \frac{\bar{\ell}}{\sigma_{\min}}$$

where \(\sigma_{\min}\) is the minimum singular value of \(\nabla^2H(\hat{z}_n)\)
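
The bound can be evaluated numerically once the Hessian is available. The sketch below is illustrative only: `error_bound` is a hypothetical helper, and the two Hessians are simple quadratic examples chosen here (the harmonic oscillator \(H=(q^2+p^2)/2\) and an anisotropic variant), not systems taken from the slides.

```python
import numpy as np

def error_bound(hessian, ell_bar):
    """Return ell_bar / sigma_min, with sigma_min the smallest singular value of the Hessian."""
    # compute_uv=False returns only the singular values, sorted in descending order
    sigma = np.linalg.svd(hessian, compute_uv=False)
    sigma_min = sigma[-1]
    if sigma_min == 0.0:
        raise ValueError("singular Hessian: the bound is not defined")
    return ell_bar / sigma_min

# Harmonic oscillator: the Hessian is the identity, so the bound equals ell_bar.
bound_oscillator = error_bound(np.eye(2), ell_bar=1e-3)

# Anisotropic quadratic H = 2 q^2 + p^2 / 4: Hessian diag(4, 0.5), sigma_min = 0.5.
bound_anisotropic = error_bound(np.diag([4.0, 0.5]), ell_bar=1e-3)
```

A smaller \(\sigma_{\min}\) loosens the bound, so nearly flat directions of \(H\) are where the estimate is least informative.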

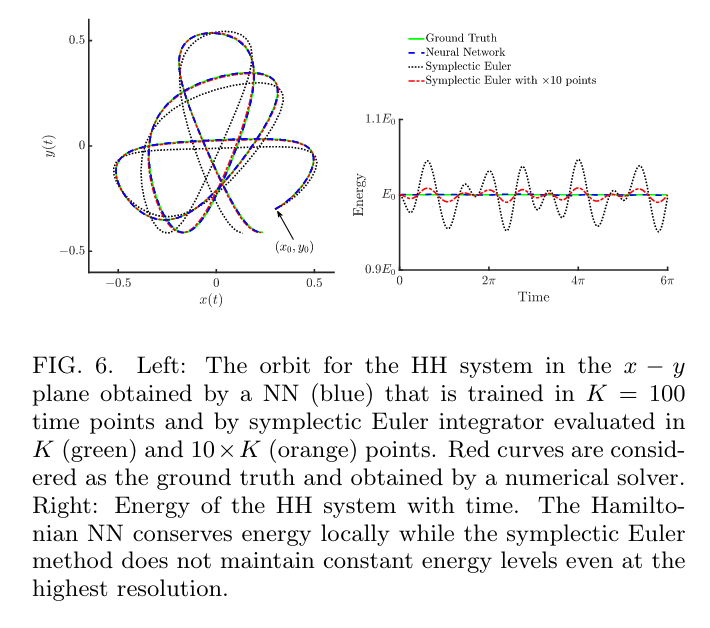

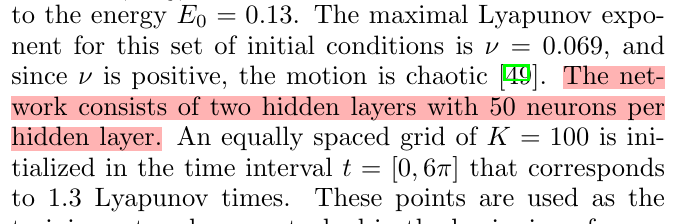

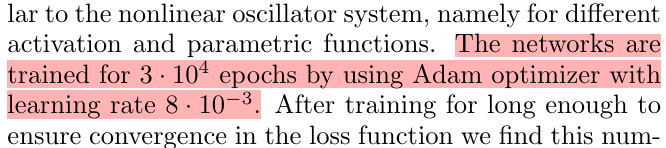

Hénon-Heiles system
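
For reference, the Hénon-Heiles Hamiltonian in its standard form, with the coupling constant set to \(1\) (the normalization used in this presentation is assumed to match), is

$$H(x,y,p_x,p_y) = \frac{1}{2}\left(p_x^2+p_y^2\right) + \frac{1}{2}\left(x^2+y^2\right) + x^2 y - \frac{y^3}{3}$$

so that \(z=(x,y,p_x,p_y)\in\mathbb{R}^4\) and the equations of motion are \(\dot{x}=p_x\), \(\dot{y}=p_y\), \(\dot{p}_x=-x-2xy\), \(\dot{p}_y=-y-x^2+y^2\).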
