Lessons from Codefreeze 2016

Last week, I was in Kiilopää, Finland

In case you didn't know...

Finland is really, really cold in January.

Average temperature:

-25℃

Codefreeze is part of a series of

SoCraTes (Software Craftsmanship & Testing) unconferences

SoCraTes events aim to facilitate conversations between people who care about building better software

"The marketplace"

SoCraTes UK 2015

Multi-tracked sessions

"The Law of Two Feet"

What kinds of things did we do?

We climbed a big hill and sledded back down

We literally froze our faces off

Some brave/crazy people had a "morning stand-up"

We learned to cross-country ski

We saw the Northern Lights while snow-shoeing

But mostly we sat at our computers

I also learnt some useful things...

#1

Testing requires understanding of intention

Teams have many different motivations for writing tests, and the focus of those tests usually reveals underlying values.

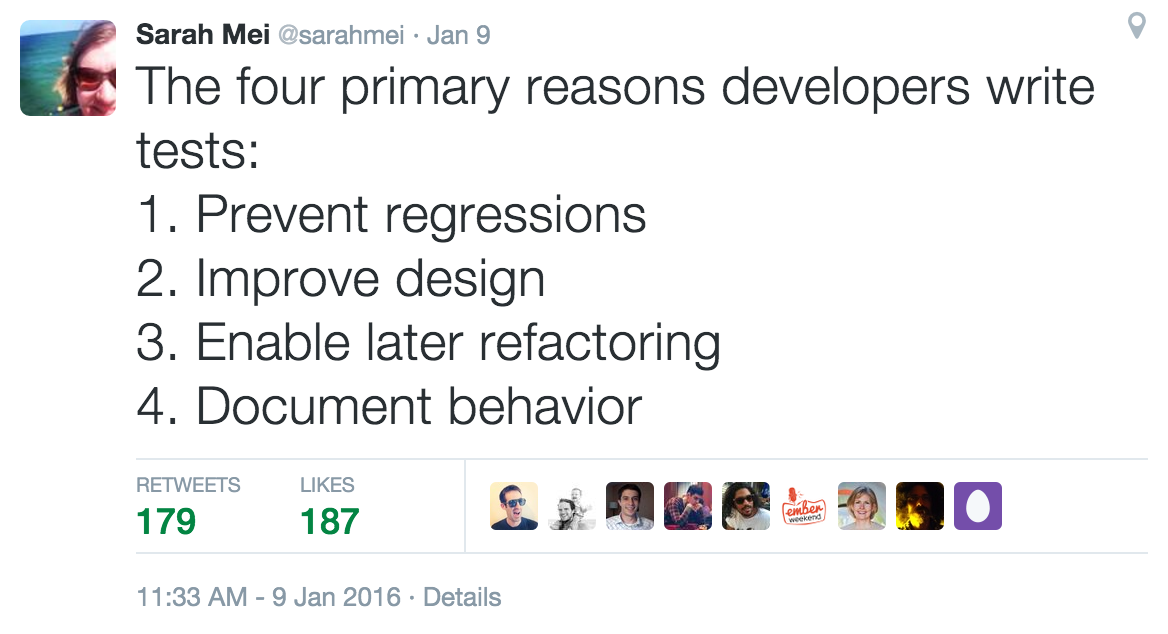

It started from this tweet:

Teams interested in preventing regression typically have lots of high-level acceptance and integration tests.

Teams interested in better design typically have lots of low-level unit tests for distinct modules, some integration tests, and few high-level acceptance tests.

Teams interested in refactoring have low-level tests that are agnostic about how the behaviours are modularised and some integration tests along logical boundaries.

Teams interested in documentation have many short unit tests that are easy to read for future developers working on the code.

But these are generalisations

Sometimes teams write tests without true intention

We shared anecdotes about working on teams that tended to write the types of tests that the developers happened to be comfortable writing.

We generally agreed that codebases with poor coverage create not only technical vulnerabilities but also less tangible effects on morale and team dynamics

When teams have initial discussions about program architecture, we should discuss our intentions for testing

We spend a great deal of time and energy designing and refactoring production code. Should we dedicate the same level of care to tests?

Key questions

- Who is meant to benefit from the test suite?

- Are non-technical people reading the Gherkin scenarios?

- How are tests integrated into dev workflow?

- How are tests being maintained?

- Are obsolete tests being deleted regularly?

- Are tests evolving with business priorities?

- Why are we testing?

- Has focus changed during project life cycle?

#2

When mob programming works

We had a mob-programming session where we attempted to test-drive the Diamond Kata.

After almost 2 hours, we didn't complete it, but we had a great time (and almost finished!). We identified a few things that went well:

1. One driver for the entire duration who has good command of the language and test framework

We used Ruby and RSpec, which most of the participants were not familiar with.

This actually led to more interesting, high-level debates and disagreements and less friction over implementation details.

2. A "chief navigator" should emerge organically. This person moderates the conversation, builds consensus, and helps the driver translate ideas into implementation. Different people should swap in and out of this role.

It was nice to try mobbing with people we'd just met, because we brought no preconceptions of each other's experience or ability levels.

This meant that no one naturally deferred to anyone else at the beginning, making it possible for anybody to become the "chief navigator" at any time.

Using a test-first approach provided natural breakpoints where a new "chief navigator" could emerge.

3. Be willing to delete code

Mobs (and teams) should have constant discussion about the value of the code that has been written. Don't be precious about "your" contributions.

4. Establish some ground rules and write them somewhere easily readable.

We only had one rule: "No shouting"

5. Recognise that it takes time for a mob to settle into a stable group dynamic

It took upwards of 1 hour to start writing "meaningful" code

This was partly caused by people joining and leaving, both of which introduced instability

A new mob will probably require at least 1.5 hours to settle into flow. 2 hours is a good length of time for a mobbing session.

6. Have a positive and courageous attitude

A big risk of mob-programming is group inertia: People look to each other for approval, but building consensus is extremely difficult when no one has enough information

It only takes one person who says "Let's just try it and see what happens" to get things moving

7. Most importantly, have a retrospective at the end

#3

Property-based testing in practice

During mob-programming, we decided to use a property-based testing approach to get more meaningful outputs with each test.

With property-based testing, prefer the general

"For any input X, condition Y should hold"

rather than the specific

"For given input X, Y should happen"

Property-based testing begins from the assumption that humans are generally bad at generating enough meaningful test data to catch all edge cases.

Huge amounts of test data are also cumbersome and costly to maintain.

Libraries like Haskell's QuickCheck generate a large number of random inputs, which developers can use to check whether the outputs consistently follow certain rules (or properties).
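To make the contrast between the specific and the general concrete, here is a minimal sketch using the Diamond Kata from the mobbing session. It is written in JavaScript rather than the Ruby and RSpec we actually used, and the `diamond` function is a hypothetical solution, not the code the mob wrote. Because the input space is only 26 letters, the sketch checks every input instead of generating random ones, so no library is needed.

```javascript
// Hypothetical Diamond Kata solution: returns the diamond as an array of rows.
const A = 'A'.charCodeAt(0);

const diamond = (letter) => {
  const size = letter.charCodeAt(0) - A; // 'A' -> 0, 'C' -> 2
  const row = (i) => {
    const ch = String.fromCharCode(A + i);
    const outer = ' '.repeat(size - i);
    const inner = i === 0 ? ch : ch + ' '.repeat(2 * i - 1) + ch;
    return outer + inner + outer;
  };
  const top = Array.from({ length: size + 1 }, (_, i) => row(i));
  // Mirror the top half (without the widest row) to build the bottom half.
  return [...top, ...top.slice(0, -1).reverse()];
};

// The specific: "for given input X, Y should happen".
const exampleHolds =
  diamond('C').join('\n') === ['  A  ', ' B B ', 'C   C', ' B B ', '  A  '].join('\n');

// The general: "for any input X, condition Y should hold".
const reversed = (s) => [...s].reverse().join('');
const propertiesHold = (letter) => {
  const rows = diamond(letter);
  const width = 2 * (letter.charCodeAt(0) - A) + 1;
  return (
    rows.length === width && // the diamond is as tall as it is wide
    rows.every((r) => r.length === width) && // every row has the same width
    rows.every((r) => r === reversed(r)) && // each row reads the same both ways
    rows.join('\n') === [...rows].reverse().join('\n') // top half mirrors bottom half
  );
};

// Check the properties for every letter from 'A' to 'Z'.
const letters = Array.from({ length: 26 }, (_, i) => String.fromCharCode(A + i));
const failures = letters.filter((l) => !propertiesHold(l));

console.log({ exampleHolds, failures });
```

The example-based check pins down one exact output, while the property checks say nothing about which letters appear where. That gap is part of why people disagreed about what counts as a property worth testing.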

People had many different ideas about

a.) what counted as a property

b.) whether a property was worth testing

What could a property-based test look like?

Let's say we have this obviously hypothetical test data...

const editions = ['North America', 'North America Middle Market', 'Municipals'];

const viewableEditions = ['North America', 'Middle Market'];

const magicalTagParse = (editions) => doSomeStuff(editions);

magicalTagParse(editions); // returns viewableEditions

To write tests, we need to make some general assumptions about the expected output. What do we know so far?

- The output is an array

- No elements are repeated

- All elements are strings

- The output is never longer than the input
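As a rough sketch, the four properties above could be checked against randomly generated inputs like this. Everything here is an assumption for illustration: the body of `magicalTagParse` is a made-up stand-in (the talk never defines `doSomeStuff`), and `POOL`, `randomEditions` and `checkProperties` are hypothetical helpers that are hand-rolled so the snippet runs without dependencies. A real property-based testing library would also take care of generating inputs and shrinking failing cases.

```javascript
// Hypothetical stand-in for magicalTagParse: the real parsing rules are not given.
const VIEWABLE = new Set(['North America', 'Middle Market']);

const magicalTagParse = (editions) => {
  const names = editions
    // 'North America Middle Market' -> 'Middle Market'; 'North America' is left alone.
    .map((e) => e.replace(/^North America (?=\S)/, ''))
    .filter((e) => VIEWABLE.has(e));
  return [...new Set(names)]; // drop duplicates, keep first-seen order
};

// Hypothetical pool of edition names to draw random inputs from.
const POOL = ['North America', 'North America Middle Market', 'Municipals', 'Middle Market', 'Europe'];

// Builds a random array of 0 to 7 edition names, duplicates allowed.
const randomEditions = () => {
  const length = Math.floor(Math.random() * 8);
  return Array.from({ length }, () => POOL[Math.floor(Math.random() * POOL.length)]);
};

// The properties from the list above, each as a predicate over (input, output).
const properties = {
  'the output is an array': (input, output) => Array.isArray(output),
  'no elements are repeated': (input, output) => new Set(output).size === output.length,
  'all elements are strings': (input, output) => output.every((e) => typeof e === 'string'),
  'the output is never longer than the input': (input, output) => output.length <= input.length,
};

// Runs fn against many random inputs and reports the first property that fails.
const checkProperties = (fn, runs = 200) => {
  for (let i = 0; i < runs; i += 1) {
    const input = randomEditions();
    const output = fn(input);
    for (const [name, holds] of Object.entries(properties)) {
      if (!holds(input, output)) {
        return { ok: false, name, input, output };
      }
    }
  }
  return { ok: true };
};

console.log(checkProperties(magicalTagParse));
```

Note that none of these properties says which editions should be viewable, so a function that always returns an empty array would pass as well.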

"Yes, but how is this helpful?"

In practice, many developers use example-based tests to drive the feature build and property-based tests to verify behavior.

Potential benefits

- Thinking harder about what and why we test

- Deeper domain understanding early on

- Tests are less brittle and more decoupled

Potential drawbacks

- Need more information upfront and time to identify assumptions

- Complex properties rapidly become resource-intensive

- Setup and configuration overhead for test libraries

It could be interesting to think about how QAs and developers can work more collaboratively if we decide to explore property-based testing.

#4

Retrospectives

We shared different methods and experiences for running retrospectives.

The most important aspect of running a retrospective is to emphasize that it is a safe space where everybody is on even footing.

Some people begin by asking all participants to anonymously submit, on a scale of 1-10, how comfortable they feel about speaking honestly.

If more than a few people give low ratings (<= 3), the retrospective is cancelled.

Every once in a while, it can be effective to ask during a retrospective,

"What did you learn about yourself during the last cycle?"

But it must be clear that with personal questions, participation is 100% optional

Empathy is paramount. Retrospectives aren't just about producing action items; they are the one time and space where the team can safely check in with one another.

Other random things

At one point, we played a crazy German card game called "Dark Overlord"...

This reminded somebody of an exercise in which, instead of assigning blame for something that went wrong, each team member was challenged to shift the "blame" onto themselves, emphasizing that work is a shared responsibility.

Playing Werewolf is mandatory at all SoCraTes events

A better idea of what the Agile Manifesto means by "Individuals and interactions over processes and tools"

tl;dr everybody should go to more SoCraTes conferences!

Questions?