Cloud Composer

Airflow as a Service

by Joaquín Menchaca

Exploring an Airflow + Spark + Kubernetes architecture and implementation

Architecture

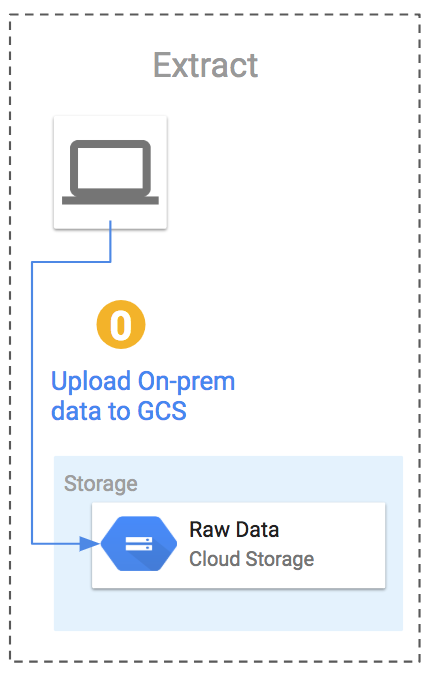

Extract Data

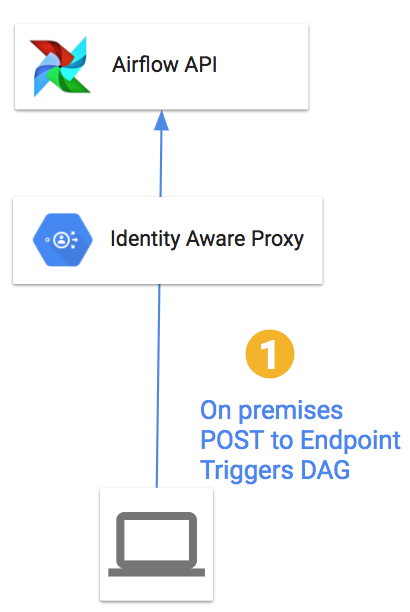

Identity-Aware Proxy
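Cloud Composer puts the Airflow web server behind Identity-Aware Proxy. Any request to the experimental REST API therefore has to carry an OpenID Connect ID token whose audience is the IAP OAuth client ID (the `$CLIENT_ID.apps.googleusercontent.com` value passed to `dag_trigger.py`). The source does not show `dag_trigger.py`. The following is a minimal sketch of how such a request could be built with the standard library, assuming the token has already been obtained. The function name `build_trigger_request` and the `raw_path` key inside `conf` are hypothetical.

```python
import json
import urllib.request


def build_trigger_request(airflow_host, dag_id, id_token, conf):
    """Build (but do not send) a POST to the Airflow 1.x experimental API.

    airflow_host: web server hostname (what $AIRFLOW_URL holds).
    id_token: OIDC ID token with the IAP client ID as audience (assumed given).
    conf: dict that the DAG run would see as dag_run.conf.
    """
    url = "https://{}/api/experimental/dags/{}/dag_runs".format(airflow_host, dag_id)
    # Airflow 1.9's trigger_dag calls json.loads() on "conf", so it is sent
    # as a JSON-encoded string nested inside the JSON body.
    body = json.dumps({"conf": json.dumps(conf)}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # IAP validates this bearer token before forwarding to Airflow.
            "Authorization": "Bearer " + id_token,
            "Content-Type": "application/json",
        },
    )


req = build_trigger_request(
    "example.appspot.com",              # hypothetical host
    "average-speed",
    "TOKEN",                            # hypothetical token
    {"raw_path": "gs://bucket/raw/"},   # hypothetical conf key and value
)
```

Sending it would be `urllib.request.urlopen(req)`. One way to mint the token for a service account is google-auth's `google.oauth2.id_token.fetch_id_token(request, audience)`, with the IAP client ID as the audience. That step is left out of the sketch.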

Creating a Cluster

gcloud composer environments create demo-ephemeral-dataproc \
  --location us-central1 \
  --zone us-central1-b \
  --machine-type n1-standard-2 \
  --disk-size 20

Enabling service [composer.googleapis.com] on project [348697763275]...

Operation "operations/acf.de22b478-5152-435f-8288-bde889dddac9" finished successfully.

Waiting for [projects/evenflow/locations/us-central1/environments/demo-ephemeral-dataproc] to be created with [projects/evenflow/locations/us-central1/operations/276589b0-579f-4377-830d-6271aea419d4]...done.

Variables

gcloud composer environments run demo-ephemeral-dataproc \
  --location=us-central1 variables -- \
  --set gcs_bucket $PROJECT
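In `gcloud composer environments run`, the arguments after the bare `--` are passed through to the Airflow CLI subcommand (here Airflow 1.9's `variables --set KEY VALUE`) rather than parsed by gcloud. A minimal sketch that builds the same argv in Python, for example to hand to `subprocess.run`, is shown below. The helper name `composer_run_argv` and the placeholder `my-project` (standing in for `$PROJECT`) are hypothetical. Inside a DAG file, the value would presumably be read back with `airflow.models.Variable.get("gcs_bucket")`.

```python
import shlex


def composer_run_argv(environment, location, airflow_command, airflow_args):
    """Return the argv for `gcloud composer environments run`.

    Everything after the bare "--" goes to the Airflow CLI inside the
    environment instead of being interpreted by gcloud.
    """
    return [
        "gcloud", "composer", "environments", "run", environment,
        "--location=" + location,
        airflow_command,
        "--",
        *airflow_args,
    ]


argv = composer_run_argv(
    "demo-ephemeral-dataproc",
    "us-central1",
    "variables",
    ["--set", "gcs_bucket", "my-project"],  # "my-project" is a placeholder for $PROJECT
)
# prints: gcloud composer environments run demo-ephemeral-dataproc --location=us-central1 variables -- --set gcs_bucket my-project
print(shlex.join(argv))
```

Passing the list form to `subprocess.run(argv, check=True)` sidesteps shell quoting. The sketch only prints the command, so it can be checked without a Composer environment.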

kubeconfig entry generated for us-central1-demo-ephemeral--276589b0-gke.

Executing within the following kubectl namespace: composer-1-7-2-airflow-1-9-0-276589b0

[2019-07-12 22:54:29,566] {driver.py:120} INFO - Generating grammar tables from /usr/lib/python2.7/lib2to3/Grammar.txt

[2019-07-12 22:54:29,588] {driver.py:120} INFO - Generating grammar tables from /usr/lib/python2.7/lib2to3/PatternGrammar.txt

[2019-07-12 22:54:29,832] {__init__.py:45} INFO - Using executor CeleryExecutor

[2019-07-12 22:54:29,859] {configuration.py:389} INFO - Reading the config from /etc/airflow/airflow.cfg

[2019-07-12 22:54:29,878] {app.py:43} WARNING - Using default Composer Environment Variables. Overrides have not been applied.

Trigger the DAG

python dag_trigger.py \
  --url="https://$AIRFLOW_URL/api/experimental/dags/average-speed/dag_runs" \
  --iapClientId=$CLIENT_ID.apps.googleusercontent.com \
  --raw_path="gs://$PROJECT/cloud-composer-lab/raw-$EXPORT_TS/"

Workflow

create_cluster >> submit_pyspark

submit_pyspark >> [delete_cluster, bq_load]

bq_load >> delete_transformed_files

move_failed_files << [bq_load, submit_pyspark]
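In Airflow, `a >> b` is shorthand for `a.set_downstream(b)` and `a << b` for `a.set_upstream(b)`. Both accept a list, which is how the lines above fan out from `submit_pyspark` and fan in to `move_failed_files`. The toy model below replays those four lines using only the standard library and derives one valid execution order with `graphlib.TopologicalSorter` (Python 3.9+). The `Task` class is a hypothetical stand-in for an operator, not Airflow's `BaseOperator`. The model also ignores trigger rules. Presumably `move_failed_files` is meant to run only when an upstream task fails (for example via `trigger_rule='one_failed'`), and ordering alone does not capture that.

```python
from graphlib import TopologicalSorter


def _as_list(tasks):
    return tasks if isinstance(tasks, list) else [tasks]


class Task:
    """Hypothetical stand-in for an Airflow operator (ordering only)."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()  # task_ids that must finish before this one

    def set_downstream(self, others):
        for other in _as_list(others):
            other.upstream.add(self.task_id)

    def set_upstream(self, others):
        for other in _as_list(others):
            self.upstream.add(other.task_id)

    def __rshift__(self, other):  # self >> other
        self.set_downstream(other)
        return other  # returning the right operand allows a >> b >> c

    def __lshift__(self, other):  # self << other
        self.set_upstream(other)
        return other


create_cluster = Task("create_cluster")
submit_pyspark = Task("submit_pyspark")
delete_cluster = Task("delete_cluster")
bq_load = Task("bq_load")
delete_transformed_files = Task("delete_transformed_files")
move_failed_files = Task("move_failed_files")

# The dependency lines from the Workflow slide, unchanged.
create_cluster >> submit_pyspark
submit_pyspark >> [delete_cluster, bq_load]
bq_load >> delete_transformed_files
move_failed_files << [bq_load, submit_pyspark]

tasks = [create_cluster, submit_pyspark, delete_cluster, bq_load,
         delete_transformed_files, move_failed_files]
graph = {t.task_id: t.upstream for t in tasks}  # node -> predecessors
order = list(TopologicalSorter(graph).static_order())
print(order)
```

The scheduler is free to run `delete_cluster` and `bq_load` in parallel once `submit_pyspark` finishes. A topological order is just one sequence consistent with the graph.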