Sirius & Storm

Agenda

- Apache Storm

- Storm Cluster (2 min)

- Storm Components (5 min)

- Sirius Deploy (2 min)

- TeamCity (2 min)

- Ansible (2 min)

- Sirius Config (2 min)

Apache Storm

Apache Storm

Distributed, real-time stream-processing cluster for data transformation

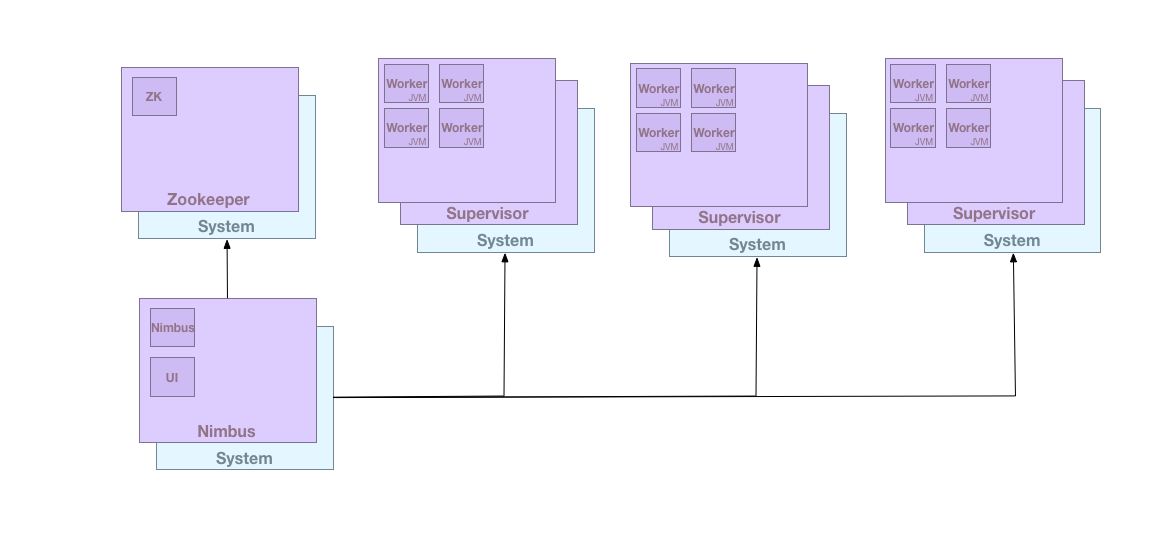

Storm Components

- Apache Storm JVMs
  - Scheduling: Nimbus
  - Orchestration: Supervisor
  - Worker (four per supervisor)
  - Visual Logs: Logviewer
  - Front Panel: UI
  - Client: JAR
- Nginx (reverse proxy)
- Supervisor (service supervisor that keeps the daemons running; not the Storm Supervisor daemon)

Configuration

---
storm_clusters:
  stormcluster-01:
    nimbus-stormcluster.gobalto.com:
      inet: 10.110.20.7
      roles: [nimbus, logviewer, ui]
    nimbus2-stormcluster.gobalto.com:
      inet: 10.110.20.146
      roles: [nimbus, logviewer, ui]
    supervisor1-stormcluster.gobalto.com:
      inet: 10.110.20.10
      roles: [supervisor, logviewer]
    supervisor2-stormcluster.gobalto.com:
      inet: 10.110.20.11
      roles: [supervisor, logviewer]
    supervisor3-stormcluster.gobalto.com:
      inet: 10.110.20.9
      roles: [supervisor, logviewer]
    zookeeper-stormcluster.gobalto.com:
      inet: 10.110.20.8
      roles: [zookeeper, proxy]

Storm cluster group_vars

Cluster Systems

- 2 Nimbus (3 JVMs each)
  - nimbus JVM
  - logviewer JVM
  - ui JVM
- 3 Supervisor (6 JVMs each)
  - supervisor JVM
  - logviewer JVM
  - 4 worker JVMs
- 1 Zookeeper (1 JVM)
  - zookeeper JVM
  - nginx reverse proxy
- Dev System (or container)
  - 1 Client (JAR JVM)

Note: This is the current configuration used by the deploy playbooks and by future cluster playbooks. It should be refactored to pull the configuration from AWS tags.
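The per-host JVM counts above follow directly from the roles in the inventory. A minimal Python sketch of that arithmetic, assuming nginx (`proxy`) is the only non-JVM role and that each supervisor runs one worker JVM per slot (four, matching `supervisor.slots.ports` in storm.yaml); the helper names are hypothetical.

```python
# Inventory copied from the storm cluster group_vars above.
STORM_CLUSTER = {
    "nimbus-stormcluster.gobalto.com": {"inet": "10.110.20.7", "roles": ["nimbus", "logviewer", "ui"]},
    "nimbus2-stormcluster.gobalto.com": {"inet": "10.110.20.146", "roles": ["nimbus", "logviewer", "ui"]},
    "supervisor1-stormcluster.gobalto.com": {"inet": "10.110.20.10", "roles": ["supervisor", "logviewer"]},
    "supervisor2-stormcluster.gobalto.com": {"inet": "10.110.20.11", "roles": ["supervisor", "logviewer"]},
    "supervisor3-stormcluster.gobalto.com": {"inet": "10.110.20.9", "roles": ["supervisor", "logviewer"]},
    "zookeeper-stormcluster.gobalto.com": {"inet": "10.110.20.8", "roles": ["zookeeper", "proxy"]},
}

# Assumption: "proxy" (nginx) is the only role that does not run in a JVM.
NON_JVM_ROLES = {"proxy"}
# Assumption: one worker JVM per slot in supervisor.slots.ports (6700-6703).
WORKER_SLOTS = 4


def jvms_for_host(roles, worker_slots=WORKER_SLOTS):
    """Count the JVMs on a host: one per JVM role, plus the workers on supervisors."""
    count = sum(1 for role in roles if role not in NON_JVM_ROLES)
    if "supervisor" in roles:
        count += worker_slots
    return count


def jvm_summary(cluster):
    """Map each hostname to its JVM count."""
    return {host: jvms_for_host(spec["roles"]) for host, spec in cluster.items()}


summary = jvm_summary(STORM_CLUSTER)
for host, count in sorted(summary.items()):
    print(f"{host}: {count} JVMs")
# The client JAR JVM on the dev system is not part of the inventory, so it is not counted.
print("cluster total:", sum(summary.values()))
```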

Nginx Reverse Proxy pt1

.
├── sites-available
│   ├── default
│   ├── nimbus1-stormcluster.gobalto.com
│   ├── nimbus2-stormcluster.gobalto.com
│   ├── storm-cluster.gobalto.com
│   ├── supervisor1-stormcluster.gobalto.com
│   ├── supervisor2-stormcluster.gobalto.com
│   └── supervisor3-stormcluster.gobalto.com
└── sites-enabled
    ├── nimbus1-stormcluster.gobalto.com -> /etc/nginx/sites-available/nimbus1-stormcluster.gobalto.com
    ├── nimbus2-stormcluster.gobalto.com -> /etc/nginx/sites-available/nimbus2-stormcluster.gobalto.com
    ├── storm-cluster.gobalto.com -> /etc/nginx/sites-available/storm-cluster.gobalto.com
    ├── supervisor1-stormcluster.gobalto.com -> /etc/nginx/sites-available/supervisor1-stormcluster.gobalto.com
    ├── supervisor2-stormcluster.gobalto.com -> /etc/nginx/sites-available/supervisor2-stormcluster.gobalto.com
    └── supervisor3-stormcluster.gobalto.com -> /etc/nginx/sites-available/supervisor3-stormcluster.gobalto.com

An Nginx reverse proxy is required to provide web access to all the systems. Additionally, /etc/hosts must be configured on every system so that all the components can resolve and communicate with each other.
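Because every node must resolve every other node by name, the /etc/hosts entries can be generated from the same inventory. A minimal Python sketch of that rendering, assuming the hostname-to-`inet` mapping from the storm cluster group_vars; `render_hosts` is a hypothetical helper.

```python
# Hostname -> IP address, copied from the storm cluster group_vars.
INVENTORY = {
    "nimbus-stormcluster.gobalto.com": "10.110.20.7",
    "nimbus2-stormcluster.gobalto.com": "10.110.20.146",
    "supervisor1-stormcluster.gobalto.com": "10.110.20.10",
    "supervisor2-stormcluster.gobalto.com": "10.110.20.11",
    "supervisor3-stormcluster.gobalto.com": "10.110.20.9",
    "zookeeper-stormcluster.gobalto.com": "10.110.20.8",
}


def render_hosts(inventory):
    """Return /etc/hosts text with one '<ip> <hostname>' line per node, sorted by hostname."""
    lines = [f"{ip} {host}" for host, ip in sorted(inventory.items())]
    return "\n".join(lines) + "\n"


print(render_hosts(INVENTORY), end="")
```

Sorting by hostname keeps the generated entries identical between runs, so redeploying does not produce spurious changes.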

Nginx Reverse Proxy pt2

# LogViewer example
server {
    listen 8000;
    server_name supervisor1-stormcluster.gobalto.com;
    location / {
        proxy_pass http://10.110.20.10:8000;
    }
}

Two example proxy configurations are shown. Each Logviewer and UI endpoint needs its own server block.

- Prerequisite knowledge: hostnames, DNS, hosts file, web virtual hosts, reverse proxy routing
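Since each Logviewer and UI endpoint needs its own server block, the blocks can be generated rather than written by hand. A minimal Python sketch, assuming the ports used in the examples (Logviewer proxied on 8000; UI served on 80 and proxied to 8080) and a subset of the inventory roles; `server_block` and `proxy_blocks` are hypothetical helpers.

```python
# Subset of the inventory: hostname -> (IP address, roles).
INVENTORY = {
    "nimbus-stormcluster.gobalto.com": ("10.110.20.7", ["nimbus", "logviewer", "ui"]),
    "supervisor1-stormcluster.gobalto.com": ("10.110.20.10", ["supervisor", "logviewer"]),
}

LOGVIEWER_PORT = 8000  # listen and upstream port, as in the LogViewer example
UI_PORT = 8080         # UI upstream port, as in the UI example


def server_block(listen, server_names, upstream):
    """Render one nginx server block that proxies every path to `upstream`."""
    return (
        "server {\n"
        f"    listen {listen};\n"
        f"    server_name {' '.join(server_names)};\n"
        "    location / {\n"
        f"        proxy_pass http://{upstream};\n"
        "    }\n"
        "}\n"
    )


def proxy_blocks(inventory):
    """Return one block per Logviewer endpoint and one per UI endpoint."""
    blocks = []
    for host, (ip, roles) in sorted(inventory.items()):
        if "logviewer" in roles:
            blocks.append(server_block(LOGVIEWER_PORT, [host], f"{ip}:{LOGVIEWER_PORT}"))
        if "ui" in roles:
            blocks.append(server_block(80, [host], f"{ip}:{UI_PORT}"))
    return blocks


for block in proxy_blocks(INVENTORY):
    print(block)
```

The UI example also answers to the shared storm-cluster.gobalto.com name; to reproduce that, pass both names to `server_block` for the primary Nimbus host.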

# UI example
server {
    listen 80;
    server_name storm-cluster.gobalto.com nimbus-stormcluster.gobalto.com;
    location / {
        proxy_pass http://10.110.20.7:8080;
    }
}

Apache Storm Config

storm.zookeeper.servers:
  - "zookeeper-stormcluster.gobalto.com"
nimbus.seeds: ["nimbus-stormcluster.gobalto.com", "nimbus2-stormcluster.gobalto.com"]
nimbus.childopts: "-Xmx1024m -Djava.net.preferIPv4Stack=true"
ui.childopts: "-Xmx768m -Djava.net.preferIPv4Stack=true"
supervisor.childopts: "-Djava.net.preferIPv4Stack=true"
worker.childopts: "-Xmx768m -Djava.net.preferIPv4Stack=true"
supervisor.slots.ports:
  - 6700
  - 6701
  - 6702
  - 6703
storm.local.dir: "/app/storm"

Apache Storm 1.x (storm.yaml)

The cluster configuration must list all ZooKeeper servers (storm.zookeeper.servers) and all Nimbus servers (nimbus.seeds).
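The worker settings determine how much heap each supervisor host must provide: each port in `supervisor.slots.ports` can run one worker JVM, and each worker gets the `-Xmx` from `worker.childopts`. A small Python sketch of that arithmetic, assuming `-Xmx` is given with an `m` or `g` suffix; it ignores non-heap JVM overhead and the supervisor and logviewer daemons, so treat the result as a lower bound. The helper names are hypothetical.

```python
import re


def xmx_mib(childopts):
    """Extract the -Xmx value from a JVM options string, in MiB (m or g suffix only)."""
    match = re.search(r"-Xmx(\d+)([mMgG])", childopts)
    if match is None:
        raise ValueError(f"no -Xmx with an m/g suffix in {childopts!r}")
    size, unit = int(match.group(1)), match.group(2).lower()
    return size * 1024 if unit == "g" else size


def worker_heap_mib(worker_childopts, slot_ports):
    """Worker heap needed per supervisor when every slot is occupied."""
    return xmx_mib(worker_childopts) * len(slot_ports)


# Values copied from the storm.yaml above.
WORKER_CHILDOPTS = "-Xmx768m -Djava.net.preferIPv4Stack=true"
NIMBUS_CHILDOPTS = "-Xmx1024m -Djava.net.preferIPv4Stack=true"
UI_CHILDOPTS = "-Xmx768m -Djava.net.preferIPv4Stack=true"
SLOTS = [6700, 6701, 6702, 6703]

print("worker heap per supervisor:", worker_heap_mib(WORKER_CHILDOPTS, SLOTS), "MiB")
print("nimbus + ui heap per nimbus host:", xmx_mib(NIMBUS_CHILDOPTS) + xmx_mib(UI_CHILDOPTS), "MiB")
```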

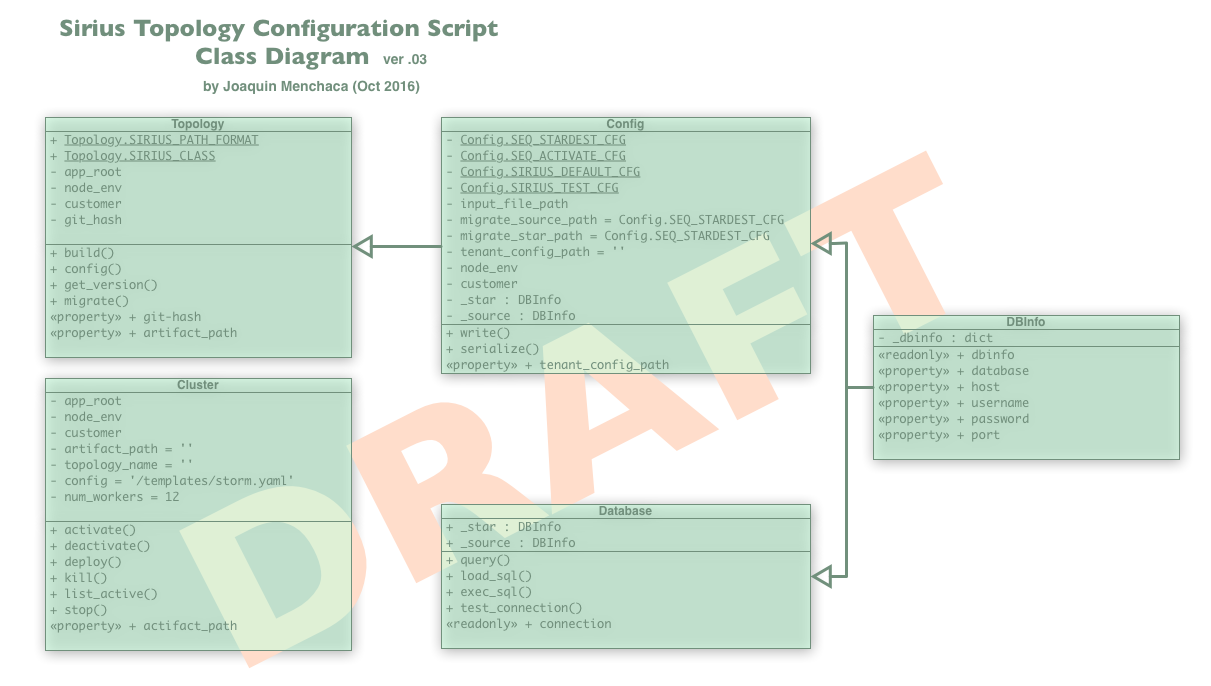

Sirius Topology

Topology

A topology is a blueprint of the actual transformation. It is written in code and packaged as a Java JAR.

This JAR is what gets submitted to the Storm cluster.

Sirius Topology

Sirius Topology transforms data from Activate (Source) to a Star Schema (Star).

Therefore, database schemas (tables) must be created for the audit logs in the source database and for the star schema. This process is called migration. Two configs are involved: the migration config and the tenant config.

The spouts and bolts use tenantConfig.
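In Storm, a topology wires spouts (sources of tuples) to bolts (processing steps). The plain-Python generators below only model that data flow for Sirius, an audit-log row from the source reshaped into a star-schema row; they are not the Storm API, and the table names and field names are hypothetical.

```python
from typing import Dict, Iterable, Iterator

Row = Dict[str, object]


def audit_log_spout(rows: Iterable[Row]) -> Iterator[Row]:
    """Spout stand-in: emit one tuple per audit-log row read from the source database."""
    for row in rows:
        yield dict(row)


def tenant_bolt(tuples: Iterable[Row], tenant: str) -> Iterator[Row]:
    """Bolt stand-in: tag each tuple with the tenant whose configuration is in use."""
    for t in tuples:
        yield {**t, "tenant": tenant}


def star_bolt(tuples: Iterable[Row]) -> Iterator[Row]:
    """Bolt stand-in: reshape a tuple into a hypothetical star-schema fact row."""
    for t in tuples:
        yield {"fact_table": f"fact_{t['table']}", "source_id": t["row_id"], "tenant": t["tenant"]}


# Hypothetical audit-log rows; the real source tables are not shown in this deck.
source_rows = [{"table": "visit", "row_id": 1}, {"table": "site", "row_id": 7}]
facts = list(star_bolt(tenant_bolt(audit_log_spout(source_rows), "covance")))
for fact in facts:
    print(fact)
```

In the real cluster each spout and bolt runs as parallel tasks inside the worker JVMs; the chained generators here only show the order in which a tuple is transformed.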

Sirius Migration Config

{
  "dev": {
    "username": "storm_user",
    "password": null,
    "database": "star_covance",
    "host": "localhost",
    "dialect": "postgres"
  },
  "test": {
    "username": "storm_user",
    "password": null,
    "database": "star_covance",
    "host": "localhost",
    "dialect": "postgres"
  }
}

Sequelize (config.json)

There are two migration operations: source and star. The NODE_ENV environment variable determines which configuration block is used.
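The selection works by name: the value of NODE_ENV picks one top-level block of config.json. A Python sketch that mirrors that lookup for illustration; `select_db_config` is hypothetical. Unlike this sketch, which fails loudly when NODE_ENV is unset, sequelize-cli falls back to a default environment name, so an unset variable may silently select a block that does not exist here.

```python
import json
import os

# Same shape as the Sequelize config.json above.
CONFIG_JSON = """
{
  "dev":  {"username": "storm_user", "password": null, "database": "star_covance",
           "host": "localhost", "dialect": "postgres"},
  "test": {"username": "storm_user", "password": null, "database": "star_covance",
           "host": "localhost", "dialect": "postgres"}
}
"""


def select_db_config(config, env=None):
    """Return the block named by `env`, or by NODE_ENV when env is None."""
    name = env if env is not None else os.environ.get("NODE_ENV")
    if name is None:
        raise RuntimeError("NODE_ENV is not set")
    if name not in config:
        raise KeyError(f"no {name!r} block in config.json (have: {sorted(config)})")
    return config[name]


config = json.loads(CONFIG_JSON)
os.environ["NODE_ENV"] = "test"  # the Sirius container sets NODE_ENV from app_env
print(select_db_config(config)["database"])
```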

Sirius Tenant Config

{
  "covance": {
    "sourceDBInfo": {
      "database": "storm_togo_dev",
      "host": "REDACTED",
      "port": 5432,
      "username": "storm_togo_test",
      "password": "REDACTED"
    },
    "starDBInfo": {
      "database": "storm_dev",
      "host": "REDACTED",
      "port": 5432,
      "username": "storm_dev_user",
      "password": "REDACTED"
    }
  }
}

Sirius (tenantconfig.json)

The topology job running in the Apache Storm cluster carries an embedded configuration.

It is configured per tenant (customer).

Note that NODE_ENV cannot be used inside the cluster, because there is no practical way to set the environment variable for each worker JVM. How those JVMs are launched is controlled by Apache Storm, not by this project.
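Because there is no per-JVM environment variable, the database settings are looked up by tenant instead. The topology's own code reads this file; the Python below only illustrates the lookup, assuming the tenantconfig.json shape above and libpq key/value connection strings. `connection_strings` and its helpers are hypothetical, not part of Sirius.

```python
import json

# Same shape as tenantconfig.json above (credentials stay redacted).
TENANT_CONFIG = json.loads("""
{
  "covance": {
    "sourceDBInfo": {"database": "storm_togo_dev", "host": "REDACTED", "port": 5432,
                     "username": "storm_togo_test", "password": "REDACTED"},
    "starDBInfo":   {"database": "storm_dev", "host": "REDACTED", "port": 5432,
                     "username": "storm_dev_user", "password": "REDACTED"}
  }
}
""")

# libpq keyword -> tenantconfig.json field
FIELDS = (("host", "host"), ("port", "port"), ("dbname", "database"),
          ("user", "username"), ("password", "password"))


def quote(value):
    """Quote a libpq keyword value, escaping backslashes and single quotes."""
    text = str(value).replace("\\", "\\\\").replace("'", "\\'")
    return f"'{text}'"


def dsn(db_info):
    """Build a libpq key/value connection string from one *DBInfo block."""
    return " ".join(f"{key}={quote(db_info[field])}" for key, field in FIELDS)


def connection_strings(config, tenant):
    """Return (source, star) connection strings for one tenant."""
    tenant_cfg = config[tenant]
    return dsn(tenant_cfg["sourceDBInfo"]), dsn(tenant_cfg["starDBInfo"])


source, star = connection_strings(TENANT_CONFIG, "covance")
print(source)
print(star)
```

A string of this form can be passed directly to `psycopg2.connect()`, the driver installed in the Sirius container for the config script.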

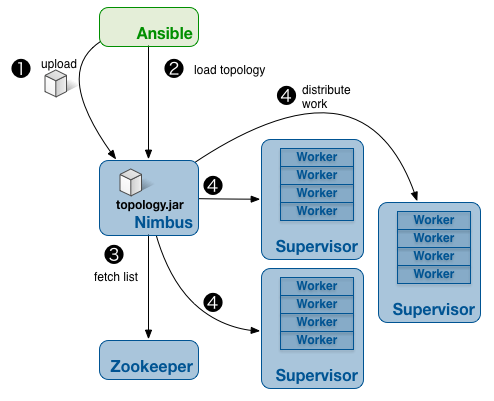

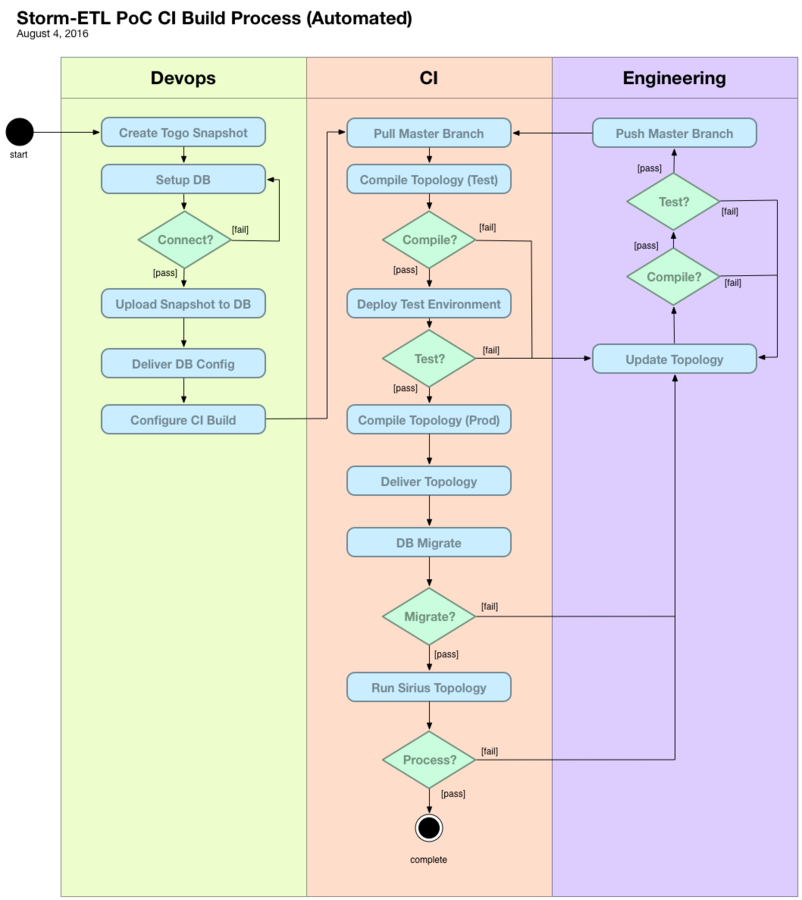

Sirius Deploy

Deploy Process Overview

- TeamCity Build/Deploy
- Docker Build Container
- Docker Ship Container
- Ansible Configure Container
- Ansible Launch Container
- Ansible Orchestrate Container
  - Configure DB Sources
  - Build JAR
  - Deactivate/Kill Topology
  - DB Migrate Source & Star
  - Submit Topology
  - Monitor Progress

Life Cycle

- Build

- Ship

- Deploy

- Pull

- Run

- Orchestrate

Orchestrate

Configure, Build, Stop, Migrate, Deploy (Submit Topology), Status (load_sql)

Base Docker Images

- storm base build
  - storm 1.0.2
- maven base build
  - maven 3.3.9
- java base build
  - Oracle JDK 8
  - Ubuntu 14.04 (Trusty)

DOCKER_REPO="gobaltoops/sirius"
docker build -t=${DOCKER_REPO}:base .
docker push ${DOCKER_REPO}:base

DOCKER_REPO="gobaltoops/sirius"
docker build -t=${DOCKER_REPO}:maven --no-cache=true .
docker push ${DOCKER_REPO}:maven

DOCKER_REPO="gobaltoops/sirius"
docker build -t=${DOCKER_REPO}:storm --no-cache=true .
docker push ${DOCKER_REPO}:storm

Three base images are required.

Sirius Container

Unlike a self-contained web application, Apache Storm only accepts a JAR.

This container is therefore used only for build, configuration, and deployment. It has its own self-contained (segregated) Apache Storm, Java JDK 8, Maven build system, and Node.js environment.

It is used to build, configure, and deploy a topology to a cluster.

Sirius Dockerfile pt 1

FROM gobaltoops/sirius:maven

ENV APP_ROOT /gobalto
ENV NODE_ROOT ${APP_ROOT}/src/main/resources/resources/
ENV TEST_ROOT ${APP_ROOT}/test/

WORKDIR ${APP_ROOT}

RUN mkdir -p ${TEST_ROOT} && \
    mkdir -p ${NODE_ROOT} && \
    mkdir -p ${APP_ROOT}/output

#### UNIT TESTS
COPY test/package.json ${TEST_ROOT}
RUN npm -g install mocha istanbul && \
    cd ${TEST_ROOT} && \
    npm install

#### NODE LIBRARY SUPPORT
COPY src/main/resources/resources/package.json ${NODE_ROOT}
RUN cd ${NODE_ROOT} && \
    npm -g install sequelize@3.23 sequelize-cli pg pg-hstore && \
    npm install

VOLUME ${APP_ROOT}/logs/
VOLUME ${APP_ROOT}/output/

Sirius Dockerfile pt 2

#### COPY REST OF CODE
COPY . ${APP_ROOT}/

#### SIRIUS CONFIG SCRIPT SUPPORT
RUN apt-get update && \
    apt-get install -y libpq-dev python3-pip
RUN pip3 install --upgrade pip setuptools wheel && \
    pip3 install psycopg2

#### Needed for Psycopg2 output from UTF-8 Postgres database
ENV PYTHONIOENCODING=utf-8

#### LINK SIRIUS CONFIG SCRIPT
ENV COMMON_SCRIPTS ${APP_ROOT}/ci/docker/configs/common/
RUN ln -sf ${COMMON_SCRIPTS}/sirius_cfg.py /usr/local/bin/sirius

#### KEEP ALIVE
CMD while :; do sleep 1; done

TeamCity Deploy

#!/bin/sh
# Skip the deploy if there is no change and REDEPLOY is 0
#[ "$SKIP_BUILD" -eq 1 ] && [ "$REDEPLOY" -eq 0 ] && exit 0
CUSTOMER=%customer%
GIT_HASH_SHORT=$(/usr/bin/git log --abbrev-commit --abbrev=8 --max-count=1 --format=%h)
ssh storm-dev-01 sirius_deploy ${CUSTOMER} dev ${GIT_HASH_SHORT}

TeamCity Build

# BUILD AND SHIP
# Compute the image tag before it is used by build and push
GIT_HASH_SHORT=$(/usr/bin/git log --abbrev-commit --abbrev=8 --max-count=1 --format=%h)
/usr/bin/docker login -u ${DOCKER_HUB_USER} -p ${DOCKER_HUB_PSSWD} -e ${DOCKER_HUB_EMAIL}
/usr/bin/docker build -t ${DOCKER_REPO}:${GIT_HASH_SHORT} .
/usr/bin/docker push ${DOCKER_REPO}:${GIT_HASH_SHORT}

# DEPLOY PROCESS
CUSTOMER=%customer%
GIT_HASH_SHORT=$(/usr/bin/git log --abbrev-commit --abbrev=8 --max-count=1 --format=%h)
ssh storm-dev-01 sirius_deploy ${CUSTOMER} dev ${GIT_HASH_SHORT}

The build process currently has two steps:

- Build the container image and ship it to Docker Hub.
- Remotely run the Ansible deploy on the storm-dev-01 system.
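The deploy step passes three positional arguments (customer, environment, short git hash) to sirius_deploy on storm-dev-01, which checks the argument count and forwards them to ansible-playbook as extra vars. A Python sketch of that hand-off, for illustration only: `deploy_command` is hypothetical, the sample hash is made up, it raises instead of exiting with status 2, and the hex check on the hash is an extra safeguard the shell script does not perform.

```python
import re
import shlex

PLAYBOOK = "/etc/ansible/playbooks/sirius_deploy.yml"
# Extra check, not in the original script: git abbreviations are 7-40 hex characters.
SHORT_HASH = re.compile(r"[0-9a-f]{7,40}")


def deploy_command(args):
    """Validate CUST ENV HASH and return the ansible-playbook argv that sirius_deploy runs."""
    if len(args) != 3:
        raise ValueError(f"expected 3 arguments (CUST ENV HASH), got {len(args)}")
    cust, env, git_hash = args
    if not SHORT_HASH.fullmatch(git_hash):
        raise ValueError(f"{git_hash!r} does not look like an abbreviated git hash")
    extra_vars = f"git_hash_short={git_hash} customer={cust} env={env}"
    return ["ansible-playbook", "-v", "-e", extra_vars, PLAYBOOK]


cmd = deploy_command(["covance", "dev", "1a2b3c4d"])
print(shlex.join(cmd))  # shell-quoted form of the command
```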

Sirius Deploy

#!/bin/bash
CUST=$1
ENV=$2
HASH=$3
SCRIPT=$(echo $0 | awk -F/ '{ print $NF }')

if [ $# -lt 3 ]; then
    echo 1>&2 "$0: not enough arguments, usage is '$SCRIPT CUST ENV HASH'"
    exit 2
elif [ $# -gt 3 ]; then
    echo 1>&2 "$0: too many arguments, usage is '$SCRIPT CUST ENV HASH'"
    exit 2
fi

# The three arguments are available as "$1", "$2", "$3"
ansible-playbook -v -e "git_hash_short=${HASH} customer=${CUST} env=${ENV}" \
    /etc/ansible/playbooks/sirius_deploy.yml

sirius_deploy playbook

---
- hosts: all
  tasks:
    - name: Add sirius_container to storm_clusters group
      add_host: name=sirius_container groups=storm_clusters

    - name: Add sirius (docker) to storm_clusters group
      add_host:
        name: sirius
        groups: storm_clusters
        ansible_connection: docker
        ansible_ssh_user: root
        ansible_become_user: root
        ansible_become: yes

- hosts: sirius_container
  connection: local
  roles:
    - sirius_container

- hosts: sirius
  roles:
    - sirius_deploy

sirius_container pt 1

---
- name: Test External Variables
  fail: msg="Bailing out. This role requires '{{ item }}'"
  when: vars[item] is not defined
  with_items: "{{ required_vars }}"

- include: setup.yml
- include: config.yml

sirius_container pt 2

---
# tasks to configure
- name: Include customers variables
  include_vars: customers.yml

- name: Configure envfile
  template:
    src: dev.env.j2
    dest: "{{host_staging_dir}}/templates/dev.env"

- name: Configure storm configuration (storm.yaml)
  template:
    src: storm.yaml.j2
    dest: "{{host_staging_dir}}/templates/storm.yaml"

- name: Configure storm hosts environment
  template:
    src: hosts.j2
    dest: "{{host_staging_dir}}/templates/hosts"

sirius_container templates

# dev.env.j2
{% for key, val in customers[customer].items() %}
{{ key }}="{{ val }}"
{% endfor %}

# hosts.j2
{% for key, val in storm_clusters[storm_cluster].items() %}
{{ val['inet'] }} {{ key }}
{% endfor %}

# storm.yaml.j2
nimbus.seeds: [{{ storm_clusters[storm_cluster].keys() |
                  select('search', 'nimbus') |
                  join(", ") }}]
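The same rendering can be expressed in plain Python, which is handy for checking template output without running Ansible. A sketch assuming `customers` and `storm_clusters` have the shapes implied by the templates; the customer variable names below are hypothetical placeholders, since customers.yml is not shown. Dictionary iteration order in the templates depends on the Python version Ansible runs under, whereas this sketch sorts keys for stable output.

```python
# Hypothetical customer variables; the real customers.yml is not shown in the deck.
CUSTOMERS = {"covance": {"SOURCE_DB": "storm_togo_dev", "STAR_DB": "storm_dev"}}

# Subset of the storm cluster group_vars.
CLUSTER = {
    "nimbus-stormcluster.gobalto.com": {"inet": "10.110.20.7"},
    "nimbus2-stormcluster.gobalto.com": {"inet": "10.110.20.146"},
    "supervisor1-stormcluster.gobalto.com": {"inet": "10.110.20.10"},
    "zookeeper-stormcluster.gobalto.com": {"inet": "10.110.20.8"},
}


def render_env(customer_vars):
    """Like dev.env.j2: one KEY="value" line per customer variable."""
    return "".join(f'{key}="{val}"\n' for key, val in sorted(customer_vars.items()))


def render_nimbus_seeds(cluster):
    """Like storm.yaml.j2: list every hostname that contains 'nimbus'."""
    seeds = [host for host in sorted(cluster) if "nimbus" in host]
    return f"nimbus.seeds: [{', '.join(seeds)}]"


print(render_env(CUSTOMERS["covance"]), end="")
print(render_nimbus_seeds(CLUSTER))
```

Substring matching mirrors the template's `select('search', 'nimbus')`, so any future host whose name merely contains "nimbus" would also be listed as a seed.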

sirius_container pt 3

---
# tasks to set up the container build environment
# these tasks can be run on localhost or on a remote system
- name: Include docker variables
  include_vars: docker.yml

- name: Log into DockerHub
  docker_login:
    username: "{{ docker_hub_username }}"
    password: "{{ docker_hub_password }}"
    email: "{{ docker_hub_email }}"

- name: Make dirs
  file: path={{item}} state=directory mode=0755
  with_items:
    - "{{ host_staging_dir }}/logs"
    - "{{ host_staging_dir }}/templates"
    - "{{ host_staging_dir }}/target"

- name: Find if select container exists
  shell: docker ps -a | grep -q '{{ name_app }}$'
  register: sirius_container
  ignore_errors: true

- name: Stop and remove the container if it already exists
  shell: 'docker stop {{ name_app }} && docker rm {{ name_app }}'
  when: sirius_container.rc == 0

- name: Build Sirius Container
  docker:
    name: "{{ name_app }}"
    image: gobaltoops/sirius:{{ git_hash_short }}
    state: reloaded
    pull: always
    command: bash "{{ app_root }}"/"{{ config_path }}"/"{{ app_env }}"/wrapper.sh
    volumes:
      - "{{ host_staging_dir }}/templates:/templates"
      - "{{ host_staging_dir}}/logs:{{ app_root }}/logs"
      - "{{ host_staging_dir }}/target:{{ app_root }}/output"
    env:
      APP_ROOT: "{{ app_root }}"
      CUSTOMER: "{{ customer }}"
      NODE_ENV: "{{ app_env }}"

- name: Add Docker Connection
  add_host:
    name: "{{ name_app }}"
    groups: storm_clusters
    ansible_connection: docker
    ansible_ssh_user: root
    ansible_become_user: root
    ansible_become: yes


sirius_deploy role

---
# tasks file for sirius_deploy
- name: Test External Variables
  fail: msg="Bailing out. This role requires '{{ item }}'"
  when: vars[item] is not defined
  with_items: "{{ required_vars }}"

- name: Config db connections from envfile
  command: sirius config {{ envfile }}

- name: Build topology jar
  command: sirius build {{ git_hash_short }}

- name: Stop active topologies
  command: sirius stop

- name: Migrate source Activate db
  command: sirius migrate source

- name: Migrate destination star db
  command: sirius migrate star

- name: Deploy topology
  command: sirius deploy {{ git_hash_short }}
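The role is a fixed sequence (configure, build, stop, migrate source, migrate star, deploy), and Ansible stops a host's play at the first failing task, so a failed migration never reaches the deploy step. A Python sketch of the same ordering, useful for reasoning about or dry-running a deploy; `REQUIRED_VARS` is a hypothetical stand-in for the role's `required_vars`, `/templates/dev.env` is an assumed envfile path (the templates directory is mounted at /templates in the container), and running with `dry_run=False` assumes the `sirius` command is on PATH, as the Dockerfile's symlink arranges inside the container.

```python
import subprocess

# Hypothetical stand-in for the role's required_vars list (its contents are not shown).
REQUIRED_VARS = ("envfile", "git_hash_short")


def sirius_steps(variables):
    """Return the sirius commands the role runs, in task order."""
    missing = [name for name in REQUIRED_VARS if name not in variables]
    if missing:
        raise ValueError(f"Bailing out. This role requires {missing}")
    git_hash = variables["git_hash_short"]
    return [
        ["sirius", "config", variables["envfile"]],  # DB connections from envfile
        ["sirius", "build", git_hash],               # build topology JAR
        ["sirius", "stop"],                          # stop active topologies
        ["sirius", "migrate", "source"],             # migrate source Activate DB
        ["sirius", "migrate", "star"],               # migrate destination star DB
        ["sirius", "deploy", git_hash],              # submit topology
    ]


def run_steps(steps, dry_run=True):
    """Print each step; when dry_run is False, run it and stop at the first failure."""
    for step in steps:
        print("+", " ".join(step))
        if not dry_run:
            subprocess.run(step, check=True)  # raises CalledProcessError on a non-zero exit


run_steps(sirius_steps({"envfile": "/templates/dev.env", "git_hash_short": "1a2b3c4d"}))
```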