Aversion to option loss in a restless bandit task

Danielle Navarro, Peter Tran & Nicole Baz

11 June 2018, ALG meeting, Katoomba

Products don't stay on sale forever

Possible romantic partners move on

Houses go off the market

Images via pixabay

In real life, choice options have limited availability


Keeping options viable requires active maintenance

RSVP now to attend a party later

Study now for a career later

Show up to the first date to get invited on a second

Images via unsplash

How many to pursue?

Pursuing too many options consumes time, effort and other scarce resources

The opportunity cost for maintaining poor options can be substantial

Yet pursuing too few is risky: what if the world changes? What if your needs change?

Existing literature?

- Vanishing options tasks
  - Shin & Ariely (2004)
  - Ejova et al (2009)
  - Neth et al (2014)
- Other related literature
  - Endowment effect (Kahneman & Tversky 1979)
  - RL models with prospect curves (e.g., Speekenbrink & Konstantinidis 2015)

"doors" problems

Instantiation within a bandit task

image source: flaticon

(Figure: four slot machines, M1 to M4, each showing recent outcomes such as "win $2", "lose $1" and "lose $5". Annotations: "I've concentrated recent bets on these machines"; "I've not used these machines recently, and someone else has taken them".)

RL approximation: options not pursued for N trials vanish

(Figure: choice history over trials 1 to 8. Legend: chosen; viable option not chosen; someone takes the machine.)
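The vanishing rule can be sketched in a few lines of Python. This is a minimal illustration, assuming an option counts as "not pursued" after `window` consecutive unchosen trials and, once taken, never returns. The slides do not give the value of N, so `window` and the function name `viable_options` are hypothetical.

```python
from typing import List, Set


def viable_options(choices: List[int], n_options: int, window: int) -> List[Set[int]]:
    """Return the set of options still available at the start of each trial.

    Assumed rule (sketch): an option left unchosen for `window` consecutive
    trials is taken by "someone else" and is gone for the rest of the game.
    """
    last_chosen = [0] * n_options  # every option treated as fresh at trial 0
    alive = set(range(n_options))
    history = []
    for t, choice in enumerate(choices, start=1):
        # Remove options that have been idle for `window` trials before trial t
        for j in list(alive):
            if t - last_chosen[j] > window:
                alive.discard(j)
        history.append(set(alive))
        if choice not in alive:
            raise ValueError(f"option {choice} chosen at trial {t} after it vanished")
        last_chosen[choice] = t
    return history


if __name__ == "__main__":
    history = viable_options([0, 0, 1, 0, 1, 0, 1, 0], n_options=4, window=3)
    print([len(s) for s in history])  # [4, 4, 4, 2, 2, 2, 2, 2]
```

Concentrating choices on two machines lets the other two lapse after three idle trials, mirroring the M1 to M4 picture.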

Experimental task

Method

- Task:
  - Six-armed bandit
  - Horizon: 50 trials per game, 3 games
  - Feedback between games
- Other details:
  - Experiments run on Amazon Mechanical Turk
  - Expt 1: N = 400; Expt 2: N = 300; pay: US$10/hr
  - Instructions included a short "test" to check understanding

Manipulations:

- Availability (constant, threat)
- Change (static, slow, fast)
- Drift (none, biased)
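One way to picture the Change and Drift manipulations is a Gaussian random-walk (restless) bandit, which is also the generative model a Kalman filter learner assumes. The sketch below is illustrative only: the innovation SDs in `CHANGE_SD`, the starting means, and treating "biased" drift as a nonzero mean innovation per option are all assumptions, not the study's settings.

```python
import numpy as np

# Hypothetical innovation SDs for the three "Change" levels (not the study's values)
CHANGE_SD = {"static": 0.0, "slow": 0.5, "fast": 2.0}


def simulate_rewards(n_options=6, n_trials=50, sigma_w=0.0, sigma_n=1.0,
                     drift=0.0, seed=None):
    """Simulate latent mean rewards and noisy payoffs for a restless bandit.

    sigma_w: SD of the random-walk step (volatility)
    sigma_n: SD of observation noise on each payoff
    drift:   mean step per trial; a scalar or one value per option (assumed
             stand-in for the "biased" drift condition)
    """
    rng = np.random.default_rng(seed)
    means = np.empty((n_trials, n_options))
    means[0] = rng.normal(0.0, 2.0, size=n_options)  # hypothetical starting means
    for t in range(1, n_trials):
        means[t] = means[t - 1] + drift + rng.normal(0.0, sigma_w, size=n_options)
    rewards = means + rng.normal(0.0, sigma_n, size=means.shape)
    return means, rewards


means, rewards = simulate_rewards(sigma_w=CHANGE_SD["fast"], seed=0)
```

With `sigma_w=0` and no drift, the latent means stay fixed (the static condition); larger `sigma_w` makes the best option change more often.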

Dynamics?

Results

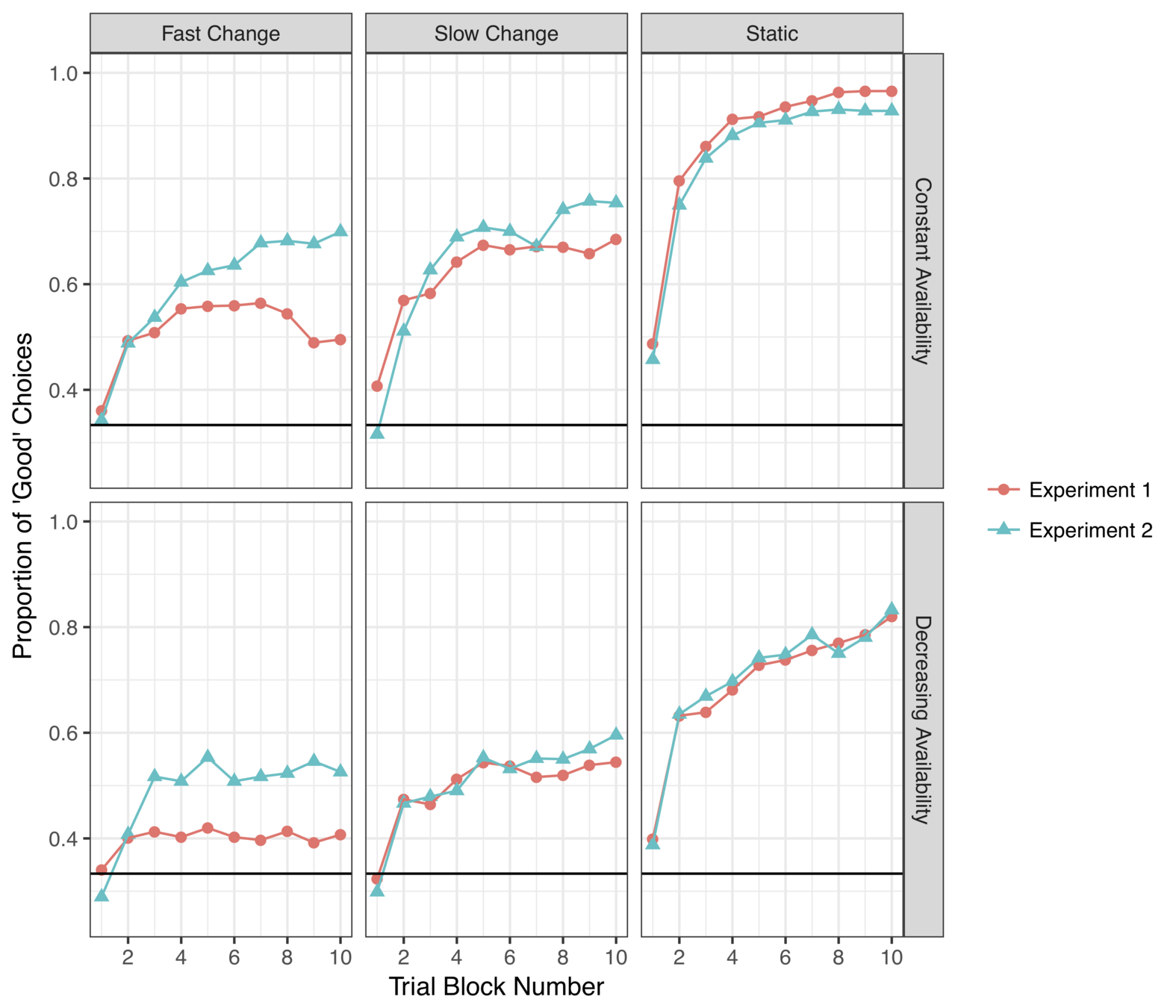

Learning curves

- Good choice = one of the "top 2" options
- People learn quickly
- Fewer good choices when:
  - option threat exists
  - environment changes
- (Runaway winner effect in Expt 2, fast change condition)
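The "good choice" measure can be sketched as the fraction of trials on which the chosen option's true mean ranked in the top two on that trial. This assumes ranking over all options by latent mean; whether the original analysis ranked only currently available options is not stated, and `good_choice_rate` is a hypothetical name.

```python
import numpy as np


def good_choice_rate(choices, means, k=2):
    """Fraction of trials where the chosen option was among the top-k by true mean.

    choices: sequence of chosen option indices, one per trial
    means:   array (n_trials, n_options) of latent mean rewards
    """
    choices = np.asarray(choices)
    means = np.asarray(means, dtype=float)
    # Rank options within each trial (0 = best); stable sort breaks ties by index
    order = np.argsort(-means, axis=1, kind="stable")
    ranks = np.argsort(order, axis=1, kind="stable")
    chosen_rank = ranks[np.arange(len(choices)), choices]
    return float(np.mean(chosen_rank < k))
```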

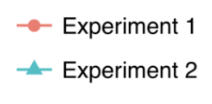

How many options do we keep?

~ 3-4 options retained

(consistent with Ejova et al 2009)

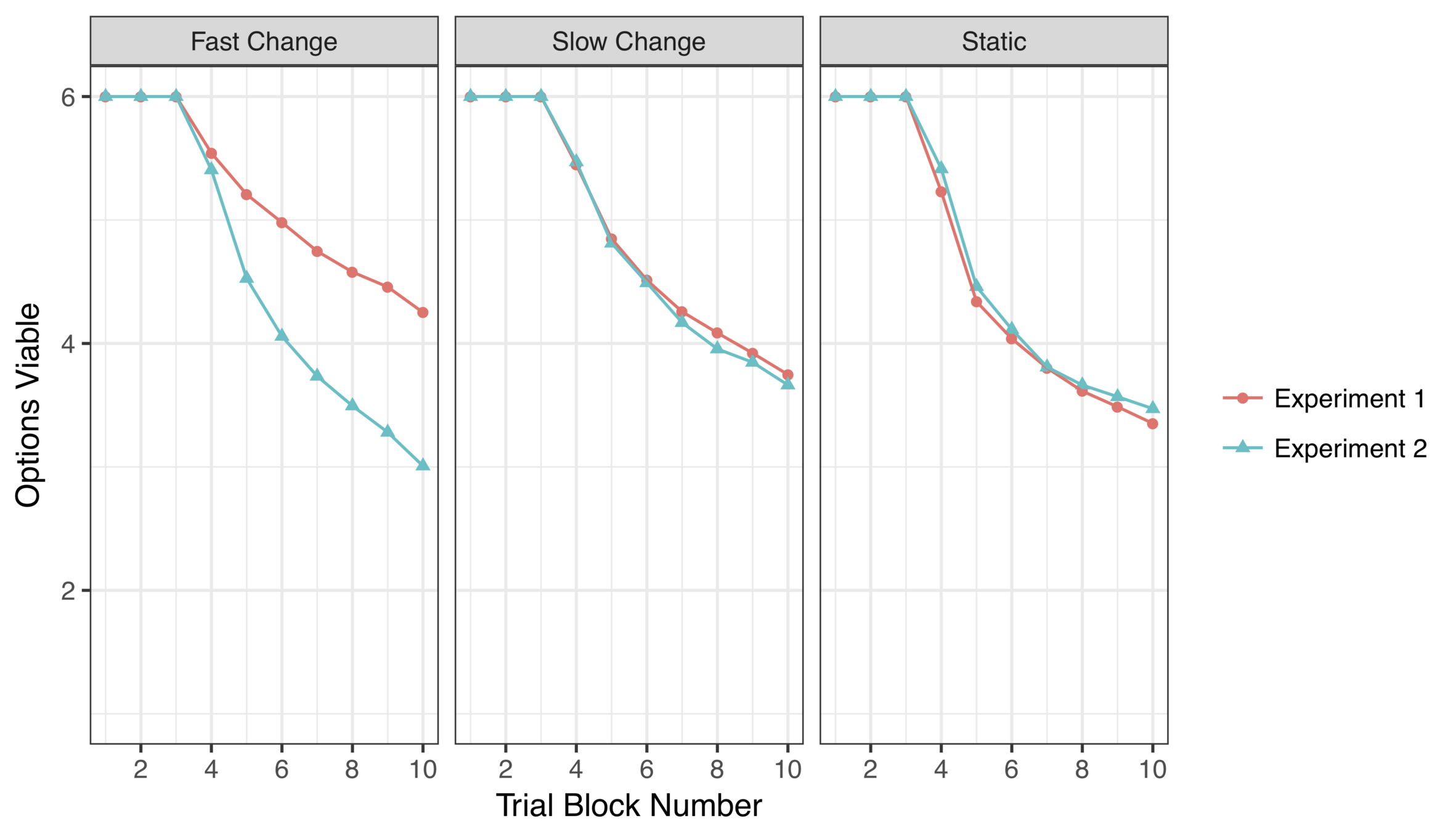

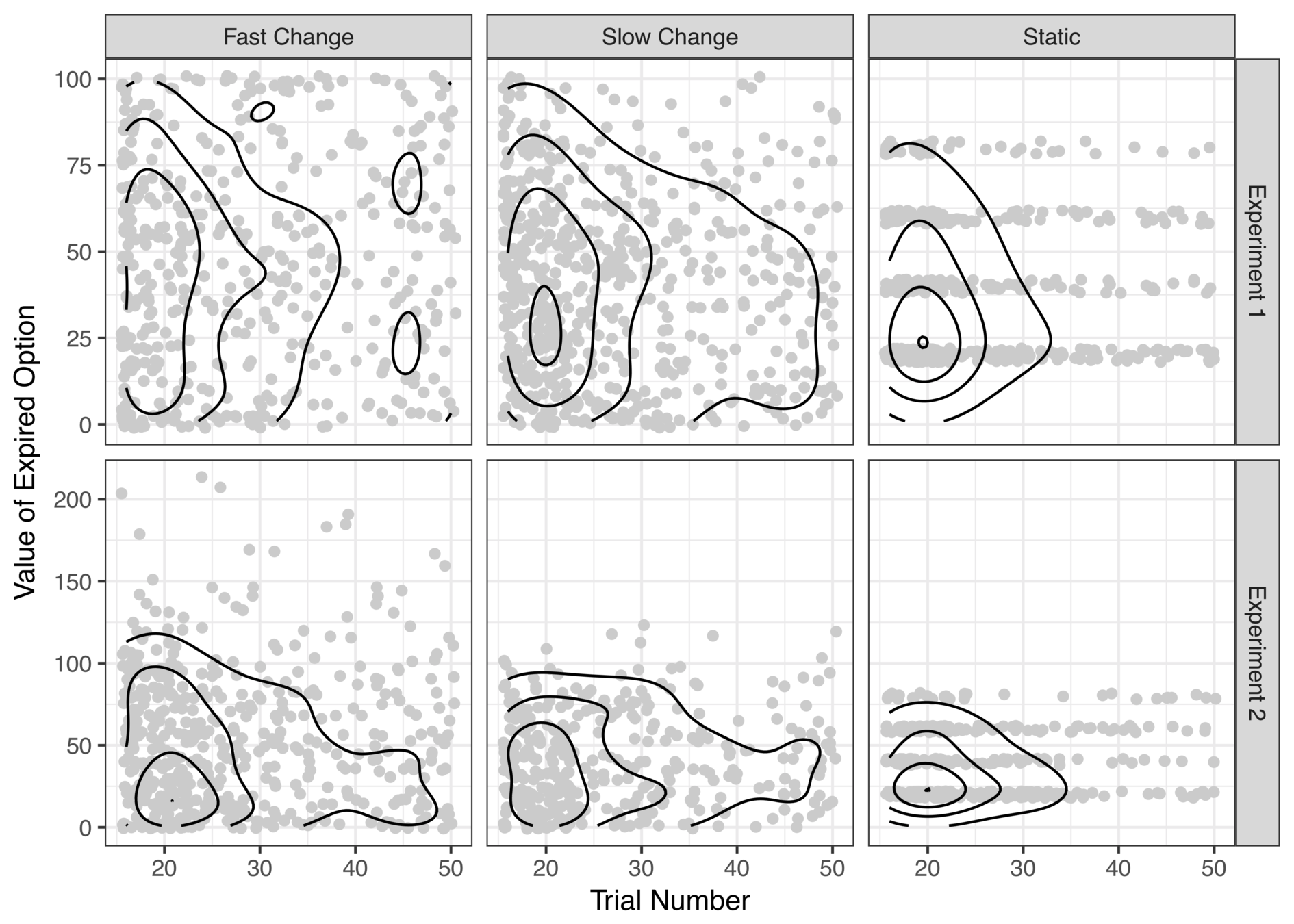

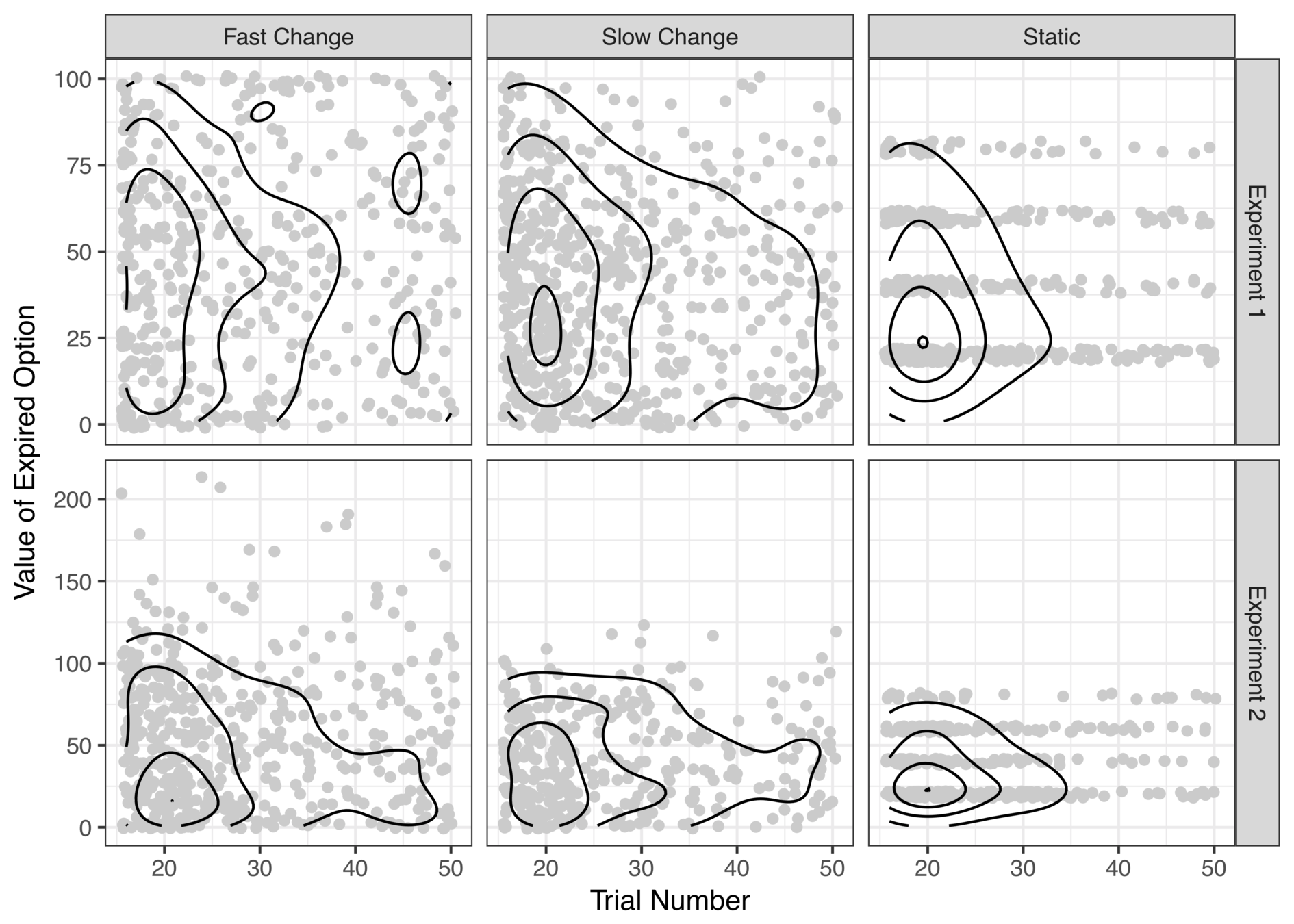

When do we let options go?

(an unapologetically exploratory analysis!)

1. Poorer discrimination in volatile environments?

early expired options almost always low value

early expired options sometimes high value

2. Letting go near the deadline?

People appear to "cling" to a few suboptimal options, only letting them expire right before the deadline?

Interim summary

- People mostly make good choices, but doing so is hard in extremely volatile environments (unsurprisingly)

- People do let options expire, but perhaps reluctantly: agrees with Ejova et al (2009), Neth et al (2014), possibly also with Shin & Ariely (2004)

- There appears to be systematicity to how and when we allow options to expire

Open questions

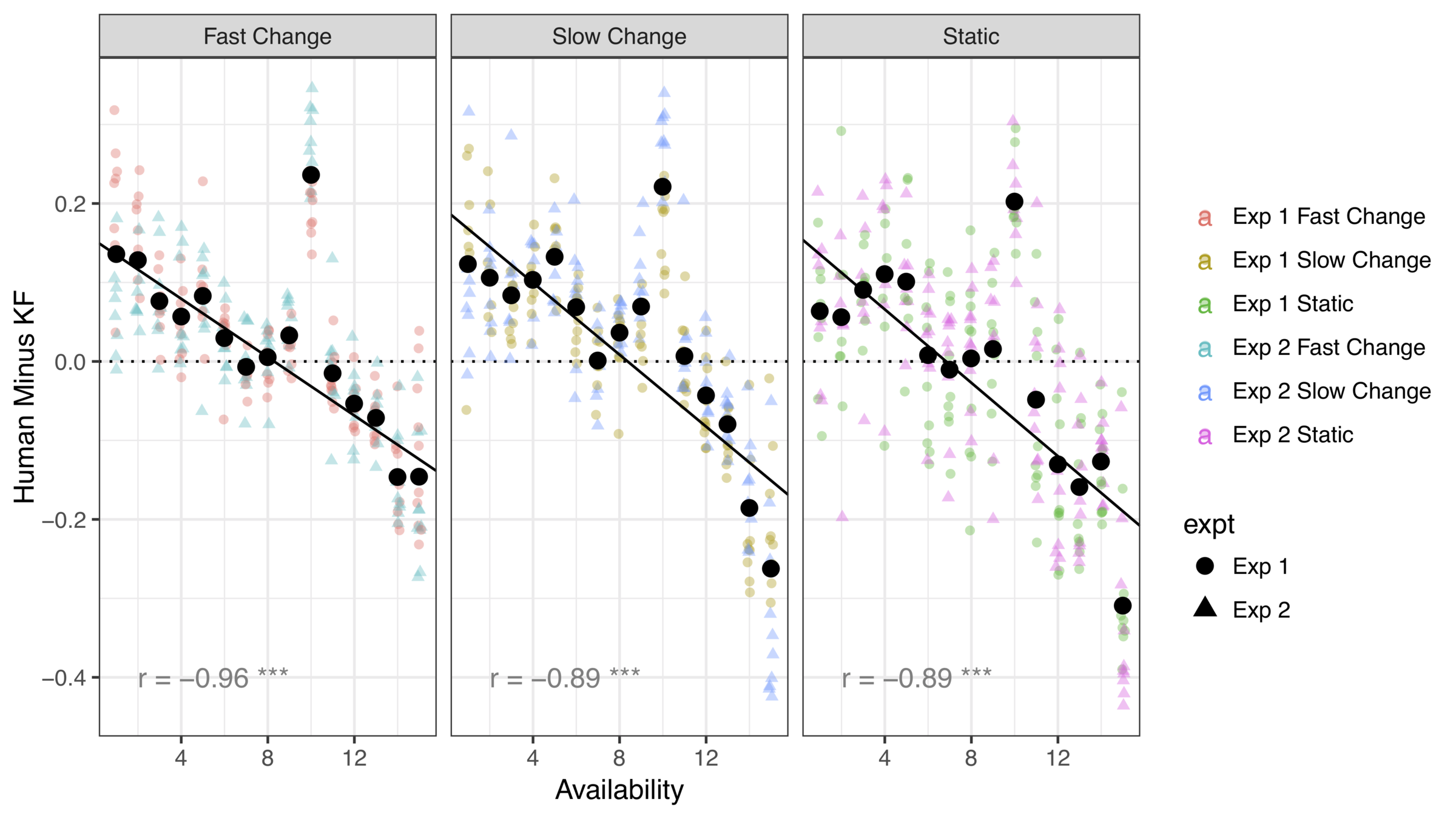

- Is the expiry any different from what we'd expect from a standard RL model (e.g., a Kalman filter)?

- If there are differences, what pattern do they take?

- Do the differences in responding across volatility levels reflect a strategy change, or the same approach expressed differently because the environment is different?

Computational Modelling

Kalman filter

Expected reward for option \(j\) on this trial

Uncertainty about reward for option \(j\) on this trial

Expected reward for option \(j\) on last trial

Uncertainty about reward for option \(j\) on last trial

Uncertainty drives Kalman gain

Kalman gain influences beliefs about expected reward and uncertainty
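The equations on these slides did not survive extraction. The standard Kalman filter learning rule for restless bandits (as in Speekenbrink & Konstantinidis 2015), written in the slides' notation, is shown below as an assumed reconstruction rather than a verbatim copy. The indicator \(\delta_{jt}\) is an added symbol (1 if option \(j\) is chosen on trial \(t\), else 0), and \(R_t\) is the reward received on trial \(t\).

```latex
\begin{aligned}
k_{jt} &= \delta_{jt}\,\frac{S_{j,t-1} + \sigma_w^2}{S_{j,t-1} + \sigma_w^2 + \sigma_n^2}
  && \text{(uncertainty drives the Kalman gain)} \\
E_{jt} &= E_{j,t-1} + k_{jt}\,\bigl(R_t - E_{j,t-1}\bigr)
  && \text{(gain scales the prediction error)} \\
S_{jt} &= \bigl(1 - k_{jt}\bigr)\bigl(S_{j,t-1} + \sigma_w^2\bigr)
  && \text{(gain reduces uncertainty)}
\end{aligned}
```

For an unchosen option, \(k_{jt} = 0\): its expected reward stays put while its uncertainty grows by \(\sigma_w^2\) per trial.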

Kalman filter

Predicted reward for choosing the option

Prediction error

(only update chosen option)

Amount of learning depends on the Kalman gain

Kalman filter

- Volatility \(\sigma_w\) and noise \(\sigma_n\) fixed at their veridical (true) values

- Initial values \(E_{j0}\) and \(S_{j0}\) reflect a diffuse prior

- Model not yoked to participants' choices: purely predictive
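A minimal Python sketch of one learning step, assuming the standard Kalman filter update for restless bandits (e.g., Speekenbrink & Konstantinidis 2015). The diffuse-prior values used in the example (\(E_{j0} = 0\), \(S_{j0} = 1000\)) and the function name `kalman_update` are hypothetical.

```python
import numpy as np


def kalman_update(E, S, choice, reward, sigma_w, sigma_n):
    """One trial of Kalman filter learning (sketch).

    E, S: expected rewards and uncertainties (variances) from the last trial.
    Every option's uncertainty grows by sigma_w**2; only the chosen option
    is updated with the prediction error.
    """
    E = np.asarray(E, dtype=float).copy()
    S = np.asarray(S, dtype=float) + sigma_w ** 2   # predicted variance, all options
    gain = S[choice] / (S[choice] + sigma_n ** 2)   # Kalman gain for the chosen option
    E[choice] += gain * (reward - E[choice])        # learn from the prediction error
    S[choice] *= 1.0 - gain                         # chosen option becomes less uncertain
    return E, S


# Diffuse prior (hypothetical values): gain is close to 1 on the first choice
E0, S0 = np.zeros(6), np.full(6, 1000.0)
E1, S1 = kalman_update(E0, S0, choice=2, reward=5.0, sigma_w=0.5, sigma_n=1.0)
```

Because unchosen options keep accumulating uncertainty, a long-neglected option gets a large gain if it is ever tried again.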

KF updates uncertainty

Gain depends on uncertainty

Choice probabilities
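The slides do not state how the model's beliefs are turned into choice probabilities. A softmax over expected rewards, with options that have vanished given zero probability, is one common choice; the sketch below uses it purely as an assumption, with a hypothetical inverse temperature `beta`.

```python
import numpy as np


def softmax_choice_probs(E, beta=1.0, available=None):
    """Softmax choice probabilities over expected rewards E (assumed choice rule).

    beta:      inverse temperature (hypothetical; higher = greedier)
    available: optional set of option indices still on offer; others get p = 0
    """
    E = np.asarray(E, dtype=float)
    logits = beta * E
    if available is not None:
        mask = np.zeros(E.shape, dtype=bool)
        mask[list(available)] = True
        logits = np.where(mask, logits, -np.inf)
    logits = logits - np.max(logits)  # subtract the max for numerical stability
    p = np.exp(logits)
    return p / p.sum()
```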

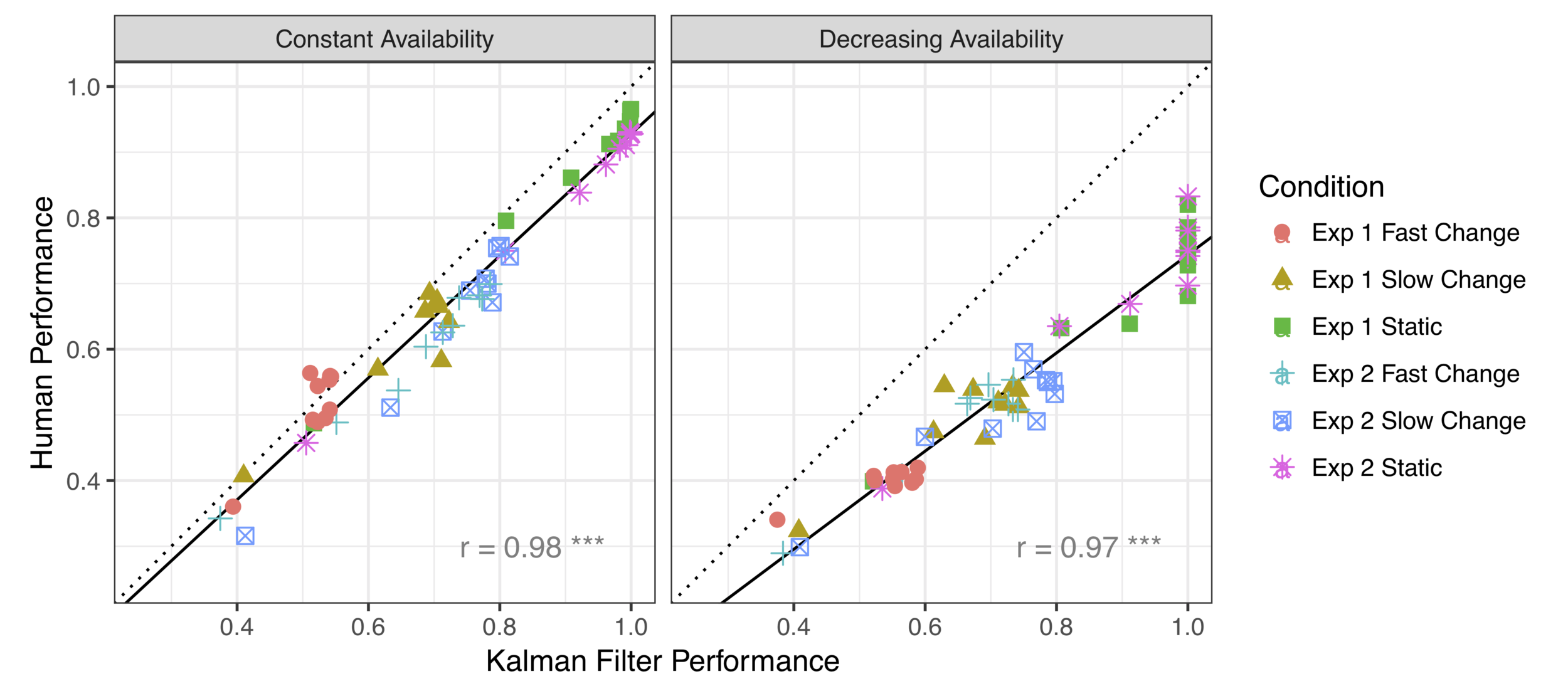

The KF model provides an excellent account of choice behaviour when options do not expire

There is a systematic difference between people and the model when option loss is possible

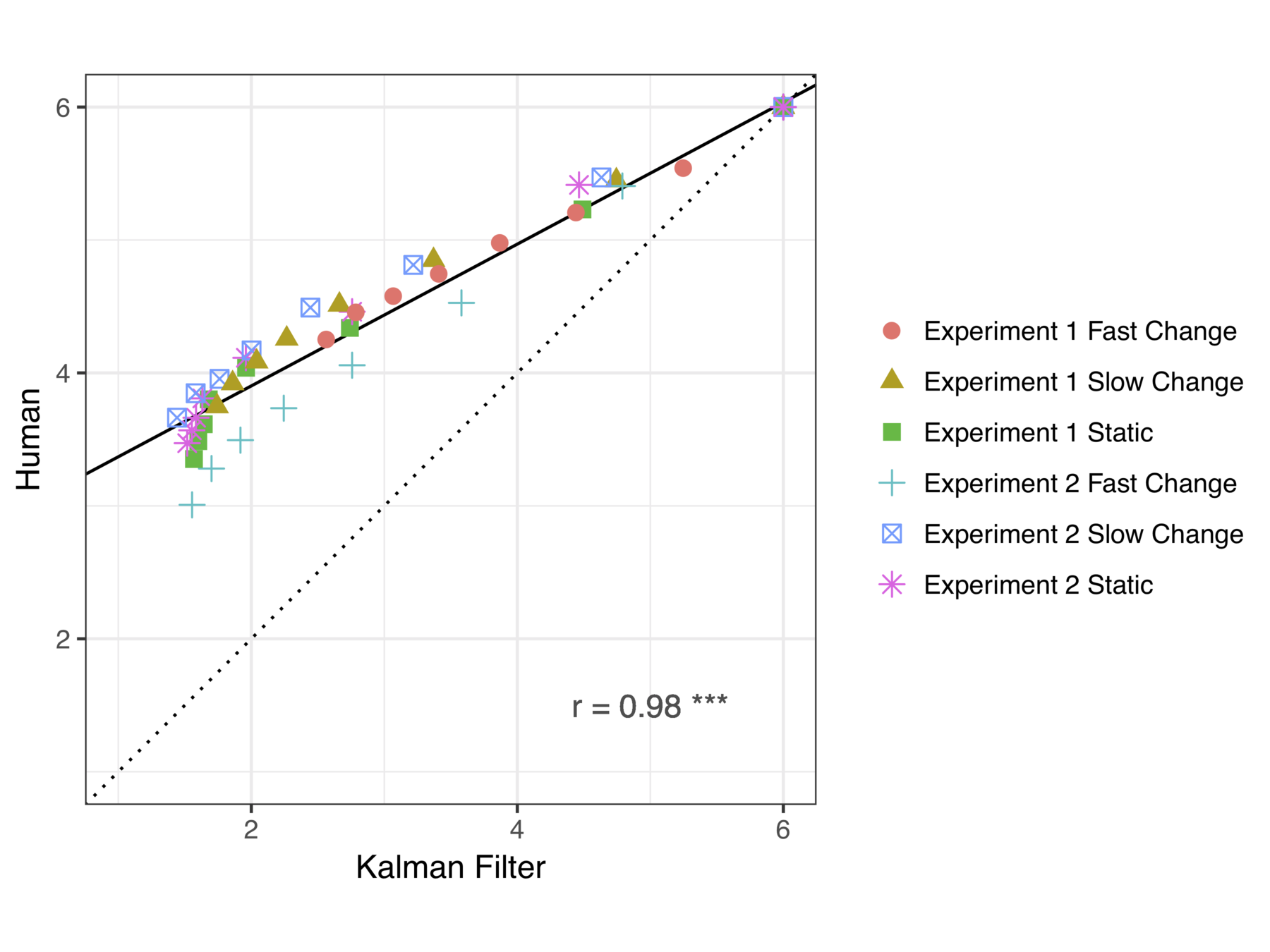

Options retained?

Human decision makers retain more options than the KF model

Quantifying loss aversion?

Conclusions?

- Replicated findings from "doors" tasks in a bandit task framework without explicit switching costs

- Extension to several dynamic environments

- Computational modelling to measure the shift in decision policy when option loss is possible

- Attempted to quantify the loss aversion signal

Main findings

Follow up?

- Covariates? Does anxiety play a particular role here?

- Why the "gradual rising" pattern? The hazard in the task is abrupt (a cliff), not smooth (a lion). Why do people treat a "cliff" task like a "lion" threat?
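The cliff/lion contrast can be made concrete as two hazard functions over the number of idle trials \(k\). The cliff follows the task rule (an option idle for N trials is lost with certainty); the logistic "lion" hazard and its `slope` parameter are hypothetical, included only to show what a smoothly rising threat would look like.

```python
import math


def cliff_hazard(k, n):
    """Task hazard: nothing happens until the n-th idle trial, then loss is certain."""
    return 1.0 if k >= n else 0.0


def lion_hazard(k, n, slope=1.0):
    """Hypothetical smooth hazard: risk rises gradually, reaching 0.5 at k == n."""
    return 1.0 / (1.0 + math.exp(-slope * (k - n)))


def survival(hazard, k_max, **kwargs):
    """S[k] = probability an option is still available after k idle trials."""
    out = [1.0]
    for k in range(1, k_max + 1):
        out.append(out[-1] * (1.0 - hazard(k, **kwargs)))
    return out


print(survival(cliff_hazard, 5, n=3))  # [1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
```

Under the cliff, there is no reason to act until just before N; under a lion-like hazard, risk builds steadily, which would favour the gradual pattern people show.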

Thanks!

Project:

- Preprint: psyarxiv.com/3g4p5

- OSF: osf.io/nzvqp

Support: