Continuous Integration & Automated testing with Worklight

Donal Spring

donalspr@uk.ibm.com

springdo

Agenda

- Worklight Summary

- Continuous Integration

- What, why & how?

- Automated Testing

- invokeWLProcedure.js

- Chaining

- Building Worklight using CI

- Multiple Worklight Environments

- Managing Jenkins

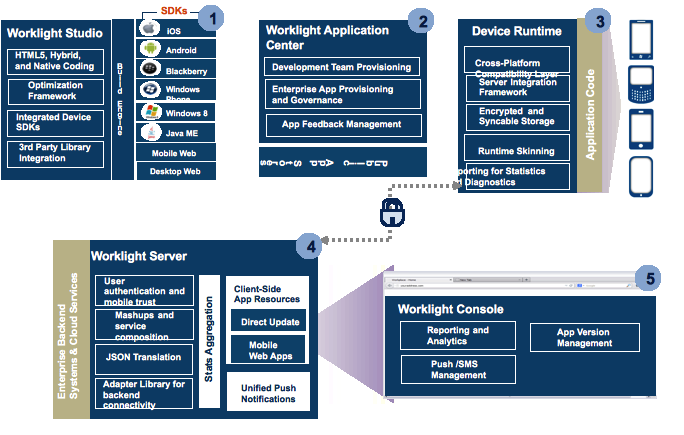

Worklight refresh

aka "The bits"

Worklight Adapters

in a nutshell:

Simple integration API

"Normal" WL Development

IDE --> Native tooling --> Deployment

Current issues facing delivery teams

The "it works on my system" attitude

Manual testing / development

Scalable?

Continuous integration

What is it?

Why do it?

How do I do it?

Build -> Test -> Deploy in a day

Why do it?

Client transparency

Time to market

Encourages developers

Mitigates integration issues

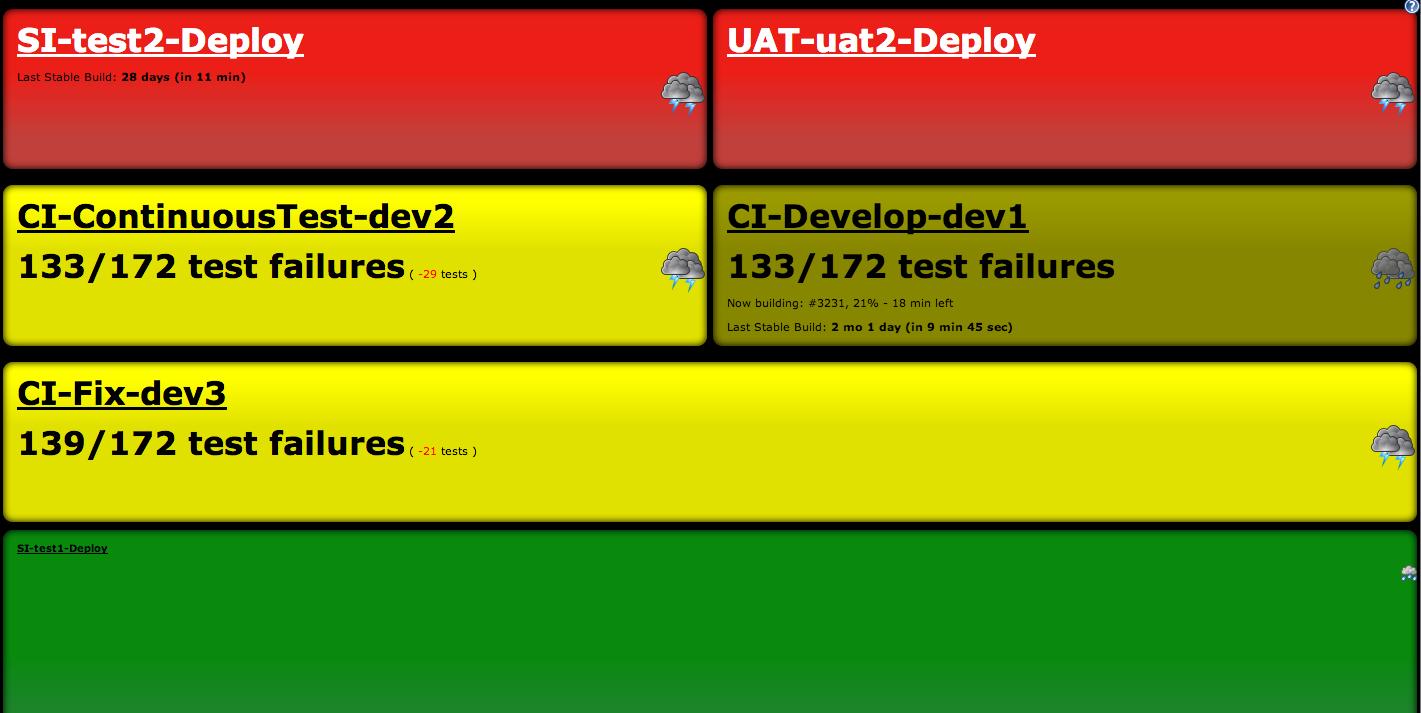

The CI Environment

- Development:

built frequently

the latest level of code

possibly unstable

immediate feedback

- Future Proofing (post v1.0)

Bug fixing & new features in parallel

The Tools

- Liberty & Jenkins

Why Jenkins?

--> Plugins

tools

2. Automated ways of building components

.wlapp

.war

.adapter

tools

3. Powerful SCM e.g. GitHub

Pull Requests

Web Hooks

Git Blame

Powerful SCM can boost development time and simplify integration

Tools

4. Ant Tasks & Scripts

The "Glue" to hold the process together

- Source Code Checkout

- Build Worklight artefacts

- Deploy .wlapp & .adapters

- Build native applications (.ipa, etc)

- Execute tests

- Deploy Apps to app center

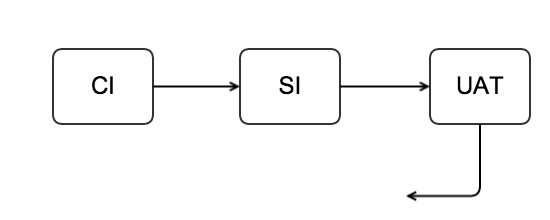

Ideal Steps to Production

SI & UAT

SI

More stable code base

Promoted at end of Sprints

End to end process tests

QA and UI testing can be expanded

Integration tests

UAT

The final check before code is pushed to production

Business sign off

Final QA

"Production like" environment (clustered, load balanced etc)

Jenkins

code - commit - test <repeat>

* tour of Jenkins *

demo adapter

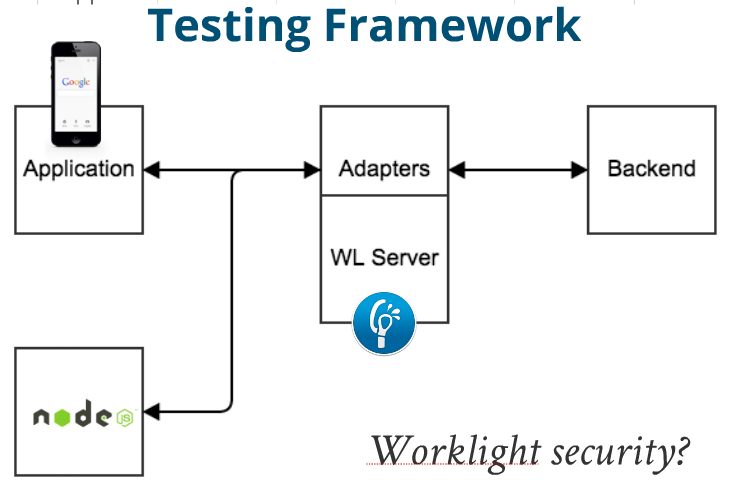

Testing

Server Side components (Adapters)

Well defined inputs / outputs

Feedback on code quality & data manipulation

What about Rational Test Bench?

Tools

&

Adapter URL

var baseUrl = "http://" + domain + ":" + port + "/" + contextRoot;

var address = baseUrl + "/invoke?adapter=%ADAPTERNAME%&procedure=%PROCEDURENAME%&parameters=%PARAMETERS%";

check in POSTman (localhost)

npm config set -> domain, port & contextRoot

var domain = process.env.npm_package_config_domain;

NOTE: adapters are currently not RESTful

* URIencode for safety *
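The URL template above can be filled in with a small helper. This is a minimal sketch, not the deck's actual code: the helper name `buildAdapterUrl` and the `config` object are hypothetical, and it assumes the procedure parameters are sent as a JSON array in the query string.

```javascript
// Hypothetical helper: fills in the invoke URL template shown above.
// config = { domain, port, contextRoot }, e.g. read from
// process.env.npm_package_config_* in the test scripts.
function buildAdapterUrl(config, adapter, procedure, parameters) {
  var baseUrl = 'http://' + config.domain + ':' + config.port + '/' + config.contextRoot;
  // Assumption: parameters travel as a JSON array; URI-encode everything for safety.
  var encodedParams = encodeURIComponent(JSON.stringify(parameters || []));
  return baseUrl + '/invoke' +
    '?adapter=' + encodeURIComponent(adapter) +
    '&procedure=' + encodeURIComponent(procedure) +
    '&parameters=' + encodedParams;
}
```

Passing the configuration in as an argument keeps the helper independent of how the values are stored, which is convenient when the same tests run against several environments.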

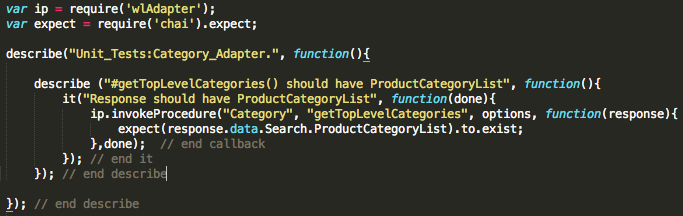

invokeWLProcedure.js

var request = require('request');

var invokeProcedure = function (adapter, procedure, parameters, callback, done) {
  // prepare the URL (address) from adapter, procedure and parameters
  _invoke(address, function (payload) {
    callback(payload); // passed from the script calling this function
    done();            // Mocha callback for async completeness
  });
};

var _invoke = function (address, next) {
  request.get({url: address, json: true}, function (error, response, data) {
    // do something interesting?
    next({error: error, response: response, data: data});
  });
};

exports.invokeProcedure = invokeProcedure;

WL security

check in POSTman (dev)

var when = require('when');

_prepareChallengeResponse(url).then(_invoke).then(
  function (payload) {
    callback(payload);
  }).done(function () {
    done(); // Mocha done
  });

var _prepareChallengeResponse = function (url) {
  var deferred = when.defer();
  request.get(url, function (error, response, data) {
    // handle error & strip out the /*secure */ string
    var wlInstanceId = data.challenges.wl_antiXSRFRealm['WL-Instance-Id'];
    url.headers = { 'WL-Instance-Id': wlInstanceId };
    deferred.resolve(url);
  });
  return deferred.promise;
};
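The "strip out the secure string" step could look something like the sketch below. The helper names are hypothetical, and the wrapper format is an assumption (a JSON object enclosed in a `/*-secure- ... */` comment); check the raw response in Postman before relying on it.

```javascript
// Hypothetical helper: extracts the JSON object from a wrapped response body.
// Assumption: the body looks like "/*-secure-\n{ ... }*/".
function stripSecureWrapper(body) {
  var start = body.indexOf('{');
  var end = body.lastIndexOf('}');
  if (start === -1 || end < start) {
    throw new Error('No JSON object found in response body');
  }
  return JSON.parse(body.substring(start, end + 1));
}

// Reads the instance id from the challenge, using the property path shown above.
function extractInstanceId(body) {
  var data = stripSecureWrapper(body);
  return data.challenges.wl_antiXSRFRealm['WL-Instance-Id'];
}
```

The returned id is what gets sent back in the `WL-Instance-Id` header on the second request.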

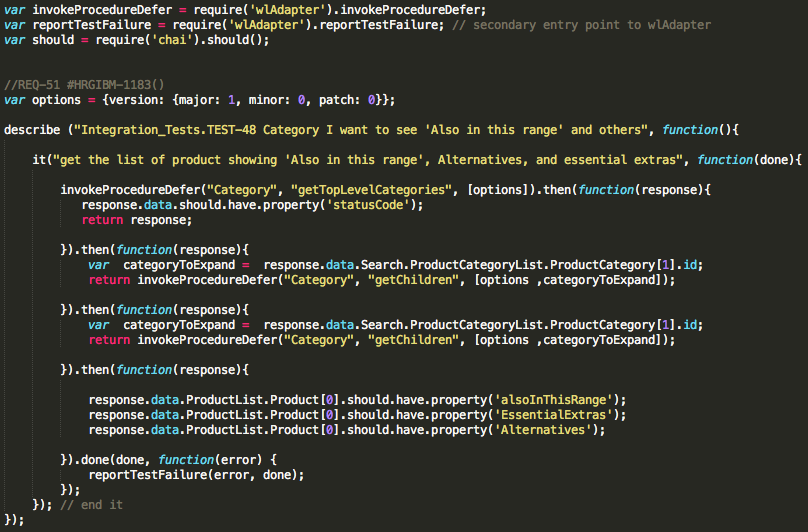

Chaining adapter requests

request chaining - invokeDefer()

var invokeProcedureDefer = function (adapter, procedure, parameters){

// form url from param etc

var deferred = when.defer();

_prepareChallengeResponse(url).then(_invoke).then(

function(payload){

deferred.resolve(payload);

},

function(error){

// handle error in some way

deferred.reject(error);

}

);

return deferred.promise;

};
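Because the deferred version returns a promise, adapter calls can be chained so that the result of one feeds the next. The sketch below uses a stand-in `invokeProcedureDefer` built on native promises with canned data, so it runs without a server; the adapter names, procedure names and payload shapes are all hypothetical.

```javascript
// Stand-in for invokeProcedureDefer: resolves canned payloads instead of
// calling a Worklight server. Names and payload shapes are hypothetical.
function invokeProcedureDefer(adapter, procedure, parameters) {
  if (procedure === 'getCustomerId') {
    return Promise.resolve({ data: { customerId: 42 } });
  }
  return Promise.resolve({ data: { customerId: parameters[0], orders: ['A1', 'B2'] } });
}

// Chain two adapter calls: the second uses a value from the first payload.
function getOrdersForUser(username) {
  return invokeProcedureDefer('CustomerAdapter', 'getCustomerId', [username])
    .then(function (payload) {
      return invokeProcedureDefer('OrderAdapter', 'getOrders', [payload.data.customerId]);
    })
    .then(function (payload) {
      return payload.data;
    });
}
```

In a Mocha integration test the chain can simply be returned from the test function, or finished with a call to `done` in the last handler.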

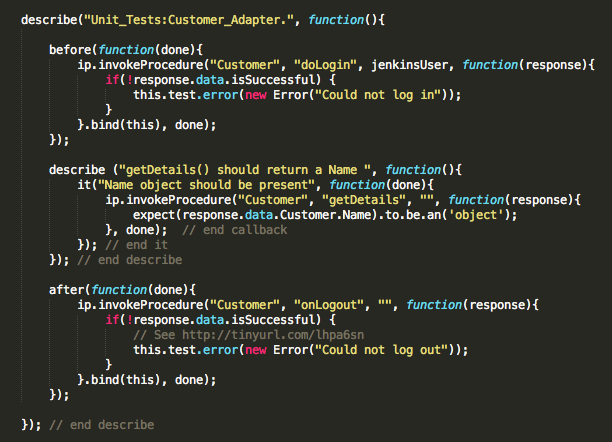

A basic Test
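A basic adapter test might look like the sketch below. The adapter name, procedure name, module path and the `isSuccessful` field are assumptions for illustration; the payload shape (`error`, `response`, `data`) follows invokeWLProcedure.js above.

```javascript
// Hypothetical check for a payload passed to the invokeProcedure callback.
function checkPayload(payload) {
  var assert = require('assert');
  assert.ifError(payload.error);
  assert.strictEqual(payload.response.statusCode, 200);
  // Assumption: the adapter response carries an isSuccessful flag.
  assert.strictEqual(payload.data.isSuccessful, true);
}

// Register the test only when running under Mocha (describe/it are Mocha globals).
if (typeof describe === 'function') {
  var wl = require('./invokeWLProcedure'); // assumed path to the helper module
  describe('DemoAdapter', function () {
    it('getStories returns a successful payload', function (done) {
      this.timeout(10000); // adapter calls can be slow
      wl.invokeProcedure('DemoAdapter', 'getStories', [], checkPayload, done);
    });
  });
}
```

Keeping the assertions in a plain function makes them reusable across unit and integration tests.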

An integration test

Authenticated Adapters

Mocha reporters

spec / html / Xunit / nyan ....

Local test execution example

Jenkins processing of test results ( . )

What about stubbing solutions?

Building WL with Jenkins

Build scripts & build time should be short

Jenkins exposes lots of $ENV_VARIABLES

Build Artefacts plugin

Jenkins can execute bash, ant and other scripts

Keep Jenkins job config MINIMAL (script execution)

Multiple nodes --> Distributed builds

Build-main.sh

Basics of the script

BUILD_TAG=${JOB_NAME}.${BUILD_NUMBER}

setEnvironment $DEPLOYER_ENV

restartServer

setUpRepo $PROJECT_DIR $MAINREPO_TREEISH $BUILD_TAG

runLocalAnt $BUILD_OUTPUT -DlibertyAppDir=${LIBERTY_APP_DIR} \

buildRestApplication buildSecurityTestApplication \

deployCustomizationWar deployAllAdapters

setBuildDescription

buildOnSlave

deployApplications

addCustomServerConfig

runTests

deployAppsToAppCenter $BUILD_OUTPUT/archived-products

Build-slave.sh

Basics of the script

security unlock-keychain -p PASSWORD $LOGIN_KEYCHAIN
# 2. git checkout
git_pull
cleanOutput
# 2.5 (minification / LESS / etc.)
gruntTask
# 3. run worklight ant task
runWorklightTask
addProvisioningProfile
# 4. Build the ios app
cleanAndBuild $SCHEME
# 5. archive + sign ios app
archive $SCHEME
# 6. build android app
cleanAndBuildAndroid
# 7. remove the .app and .app.dSYM files as they take up too much space
removeIosArtefacts $SCHEME $APP_CENTER_SCHEME $APP_CENTER_IPA_NAME

Automate adapter testing

- cd ${UNIT_TEST_DIR}

- npm config set domain=$DOMAIN

- npm install

- (npm install invokeWL)

- npm run-script PreTest

- npm run-script UnitTests

- npm run-script IntegrationTests

Things to consider :

Timeouts, file quantity, reporting, --no-registry, package.json

GOTCHA - npm config Global issue

Promote to ENVIRONMENTS

*without rebuilding WAR & Adapters

worklight.properties.$ENVIRONMENT

adapter.proxy.protocol=http
adapter.proxy.domain=192.168.12.10
adapter.proxy.port=8080

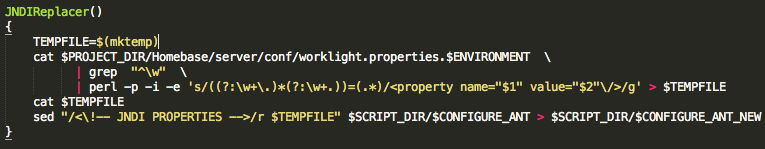

In deploy-to-environment-ant.xml add <!--JNDI_PROPERTIES-->

Replace the string with worklight.properties values & install the war

Now in server.xml

<jndiEntry jndiName="worklight/adapter.proxy.protocol" value='"http"'/>
<jndiEntry jndiName="worklight/adapter.proxy.domain" value='"192.168.12.10"'/>
<jndiEntry jndiName="worklight/adapter.proxy.port" value='"8080"'/>
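The substitution itself is simple string work. The deck does it from an Ant script; the sketch below only illustrates the transformation in JavaScript, with hypothetical function names, and is not the actual deploy script.

```javascript
// Hypothetical helper: turns worklight.properties text into <jndiEntry> lines.
function propertiesToJndiEntries(propertiesText) {
  return propertiesText.split(/\r?\n/)
    .map(function (line) { return line.trim(); })
    // skip blanks, comments and lines without a key=value pair
    .filter(function (line) { return line && line.charAt(0) !== '#' && line.indexOf('=') > 0; })
    .map(function (line) {
      var idx = line.indexOf('=');
      var key = line.substring(0, idx).trim();
      var value = line.substring(idx + 1).trim();
      return '<jndiEntry jndiName="worklight/' + key + '" value=\'"' + value + '"\'/>';
    })
    .join('\n');
}

// Replaces the placeholder comment in a server.xml template with the entries.
function injectJndiEntries(serverXml, propertiesText) {
  var entries = propertiesToJndiEntries(propertiesText);
  // A function replacement avoids special handling of "$" in the values.
  return serverXml.replace('<!--JNDI_PROPERTIES-->', function () { return entries; });
}
```

Running this once per environment against the matching worklight.properties.$ENVIRONMENT file gives each server its own values without rebuilding the WAR.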

how to promote

git tag & promote -> Build with parameters

worklight.properties.$ENVIRONMENT

Managing Environments

In your server.xml :

<include optional="true" location="server.CI.xml"/>

<server>

<logging traceSpecification=

"com.worklight.adapters=FINEST:com.worklight.integration=FINEST"

traceFileName="stdout" maxFileSize="20" maxFiles="2"

traceFormat="BASIC" />

</server>

cp / scp depending on what you need / where you are

Managing Jenkins from Jenkins

Use SCM strategy to manage CI process

curl -H "Authorization: $TOKEN" -H 'Accept: application/vnd.github.v3.raw' -L https://api.github.com/locationOfFileToExtract/$1 > $2

chmod 755 $2

./$2

As jobs grow - use common.sh

set$ENV () {

export $WL_SERVER_URL

export $DB2_DATABASES_DBNAME_WRKLGHT

# and whatever else per environment

}

What about Jenkins security?

Doing it in an automated way ...

Power of Jenkins jobs:

"Generic" jobs (Start / Stop servers)

Traffic Light Jobs

Trendy & cool development - make fixes from the pub

This is where we work . . .

Thoughts?

UI Testing?

Automated client-side testing?

Which bits are suitable for the cloud?