Using NIFs to handle both compute-bound and IO-bound tasks in Elixir

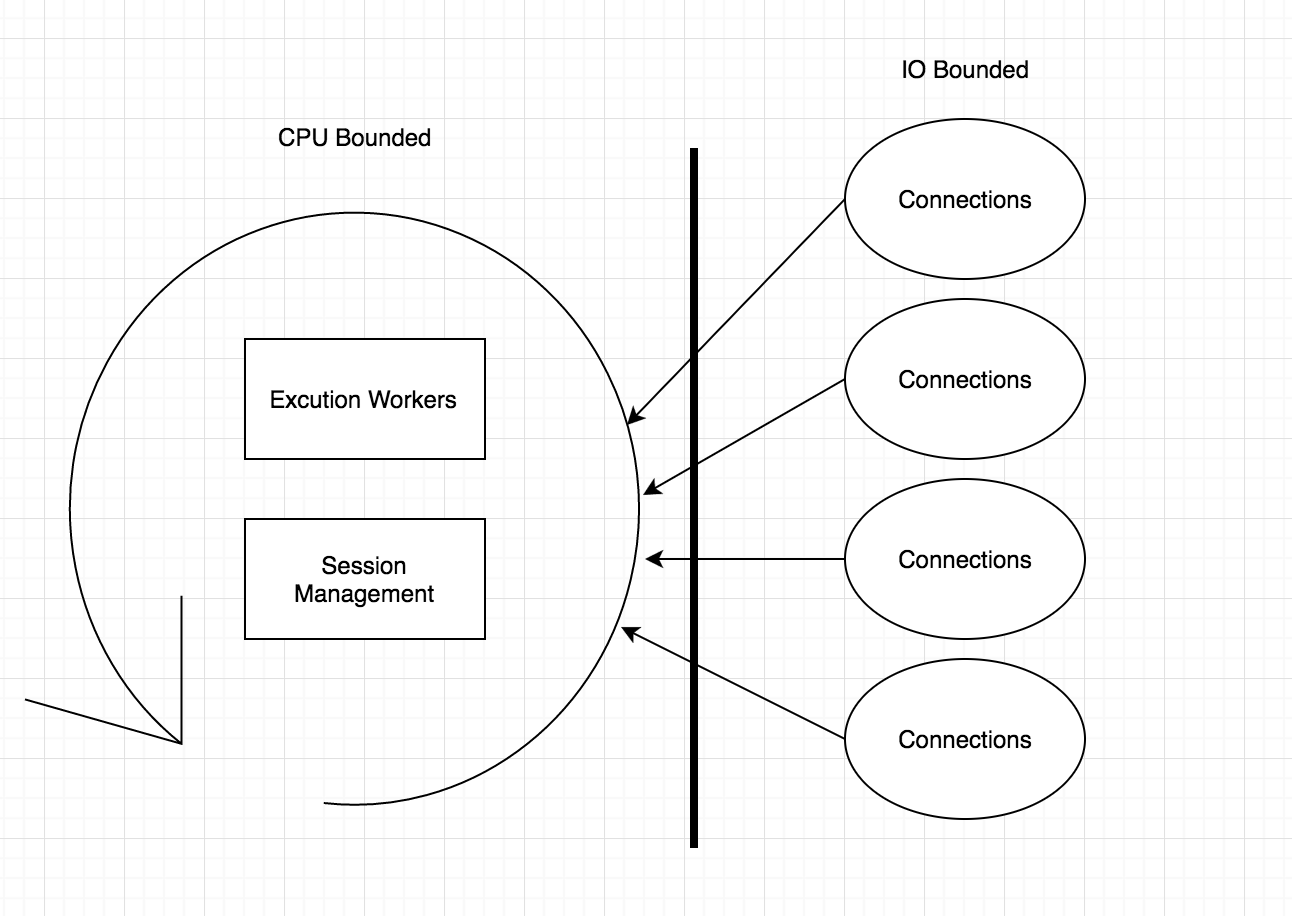

Two types of tasks

- CPU Bound

- IO Bound

Core concept

Make full use of CPU

CPU Bound

Solution: give CPU time back to user space

- less scheduling of threads

- dedicated core for threads

- make full use of cache

- branch predicting friendly

- SIMD

Problem: too much time wasted on scheduling
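The cache, branch-prediction and SIMD points above can be sketched with one small kernel. This is only an illustration: the function names are hypothetical, and whether the compiler actually emits SIMD instructions for the second version depends on the target and optimization level (it may also transform the first version on its own).

```rust
/// Sums every value greater than `threshold` using a data-dependent branch.
/// On unsorted input the branch outcome is hard to predict.
pub fn sum_above_branchy(values: &[u32], threshold: u32) -> u64 {
    let mut total = 0u64;
    for &v in values {
        if v > threshold {
            total += v as u64;
        }
    }
    total
}

/// Same result, but the comparison is turned into a 0/1 multiplier, so the
/// loop body has no data-dependent branch. The slice is walked linearly,
/// which keeps memory access cache-friendly and leaves the loop in a shape
/// that an optimizer can vectorize.
pub fn sum_above_branchless(values: &[u32], threshold: u32) -> u64 {
    values
        .iter()
        .map(|&v| (v > threshold) as u64 * v as u64)
        .sum()
}
```

Both functions return the same total; the difference is only in how predictable the work is for the CPU.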

IO Bound

Solution: give CPU time back to the kernel

- fewer threads

- callback / coroutine

- reactor & proactor

Problem: too many waiting threads block the CPU
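The callback and reactor idea above can be sketched as a single-threaded event loop. This is a simulation under stated assumptions: `Reactor`, `register`, `push_event` and `run_once` are hypothetical names, and events are pushed by hand where a real reactor would ask the kernel (epoll, kqueue) which sources are ready. To keep it short the event carries the bytes directly; a real reactor would only signal readiness and the handler would issue the non-blocking read itself.

```rust
use std::collections::{HashMap, VecDeque};

/// Identifies one registered IO source (a socket, in a real system).
pub type Token = usize;

/// One thread, many sources: a callback is registered per source and all
/// callbacks run on the thread that drains the event queue.
pub struct Reactor {
    handlers: HashMap<Token, Box<dyn FnMut(&[u8])>>,
    pending: VecDeque<(Token, Vec<u8>)>,
}

impl Reactor {
    pub fn new() -> Self {
        Reactor {
            handlers: HashMap::new(),
            pending: VecDeque::new(),
        }
    }

    /// Registers the callback to run when `token` has data.
    pub fn register(&mut self, token: Token, handler: impl FnMut(&[u8]) + 'static) {
        self.handlers.insert(token, Box::new(handler));
    }

    /// Stand-in for the kernel reporting that `token` is readable.
    pub fn push_event(&mut self, token: Token, data: &[u8]) {
        self.pending.push_back((token, data.to_vec()));
    }

    /// Drains all pending events and returns how many were dispatched.
    /// Events for unregistered tokens are dropped.
    pub fn run_once(&mut self) -> usize {
        let mut dispatched = 0;
        while let Some((token, data)) = self.pending.pop_front() {
            if let Some(handler) = self.handlers.get_mut(&token) {
                handler(data.as_slice());
                dispatched += 1;
            }
        }
        dispatched
    }
}
```

No thread ever blocks on a single source here, which is the property the bullet list is after.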

Both IO-Bound and Compute-Bound?

Database

Scenario

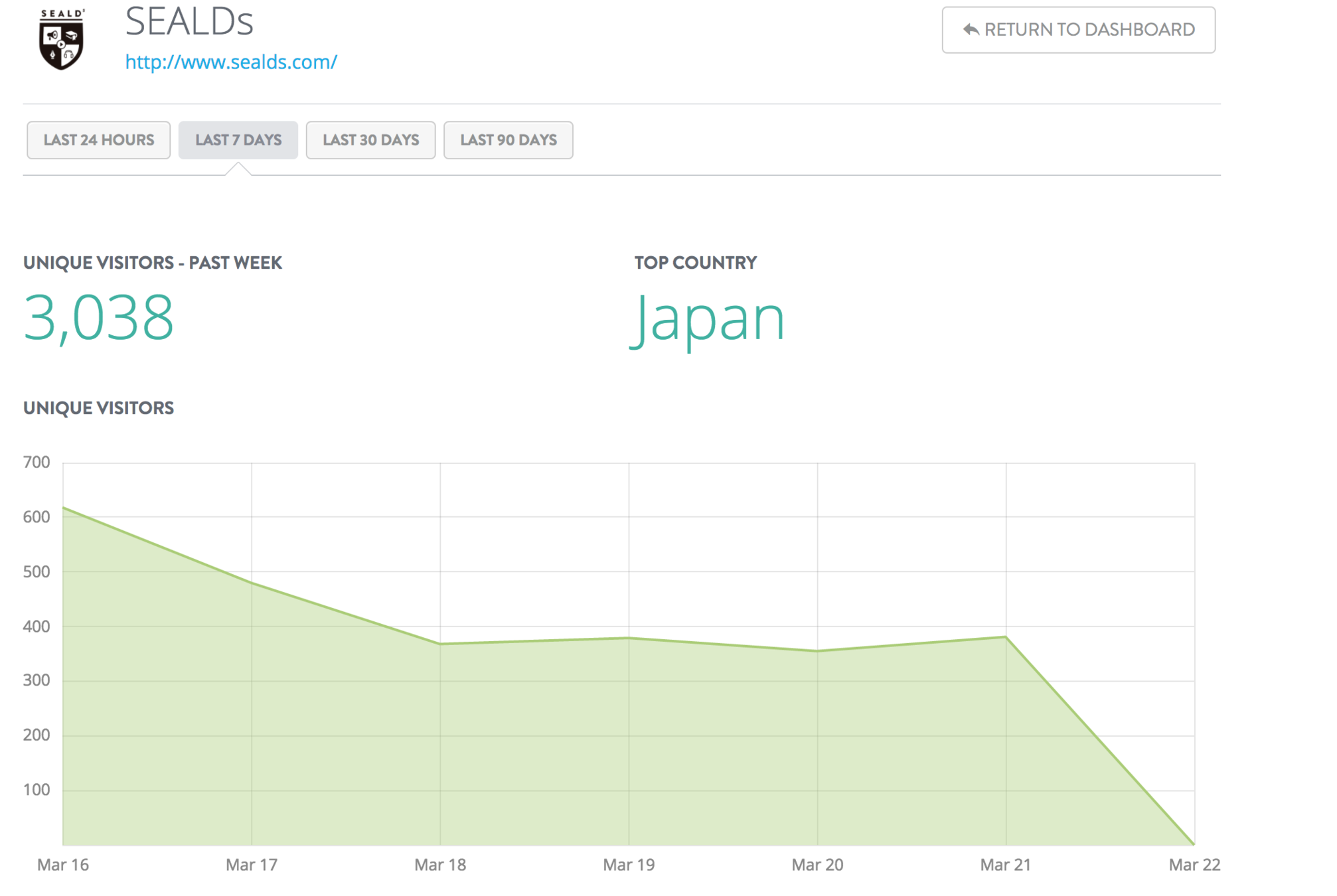

Analytics Service

Main task type: map/reduce on prefetched cache
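A minimal sketch of what "map/reduce on prefetched cache" could look like on the Rust side. The `PageView` record, its fields and `total_duration` are hypothetical; the slides do not show the real data model. It assumes the data is already in memory, so the work is purely CPU-bound.

```rust
use std::thread;

/// Hypothetical analytics record that has already been loaded into memory.
pub struct PageView {
    pub site_id: u32,
    pub duration_ms: u64,
}

/// Map: each worker sums the durations for `site_id` over its own chunk.
/// Reduce: the partial sums are added together on the calling thread.
pub fn total_duration(cache: &[PageView], site_id: u32, workers: usize) -> u64 {
    let workers = workers.max(1);
    // Round up so every record lands in some chunk; never use a zero length.
    let chunk_len = ((cache.len() + workers - 1) / workers).max(1);

    thread::scope(|scope| {
        // Spawn all workers first, then join them.
        let handles: Vec<_> = cache
            .chunks(chunk_len)
            .map(|chunk| {
                scope.spawn(move || {
                    chunk
                        .iter()
                        .filter(|view| view.site_id == site_id)
                        .map(|view| view.duration_ms)
                        .sum::<u64>()
                })
            })
            .collect();

        handles
            .into_iter()
            .map(|handle| handle.join().expect("worker panicked"))
            .sum::<u64>()
    })
}
```

Scoped threads let the workers borrow the cache directly, so nothing is copied before the map step.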

Solution 1

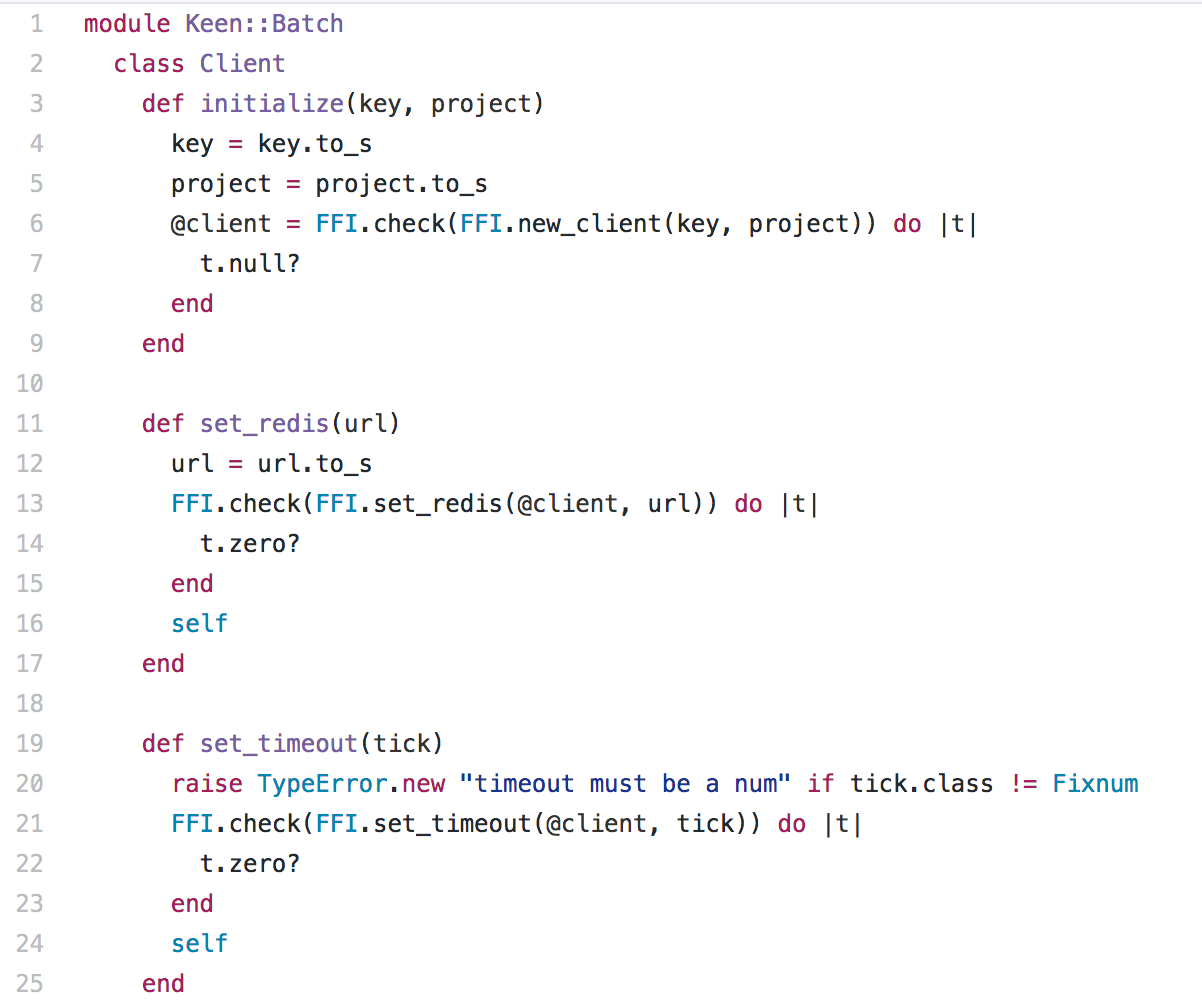

Ruby + Rust

Ruby

Rainbows for async

Rust

Computation

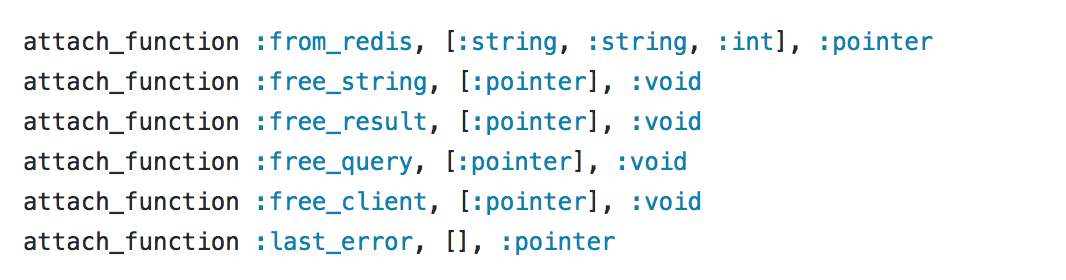

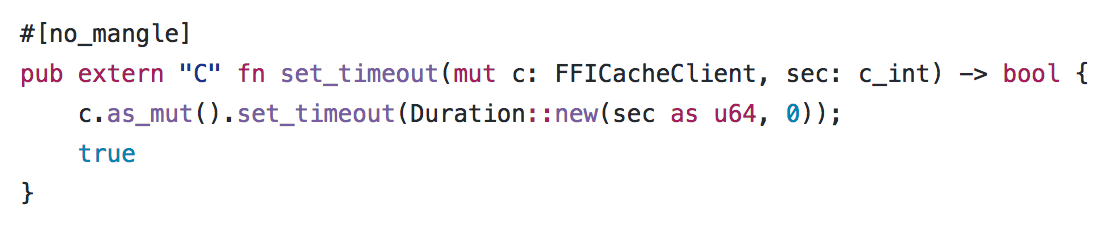

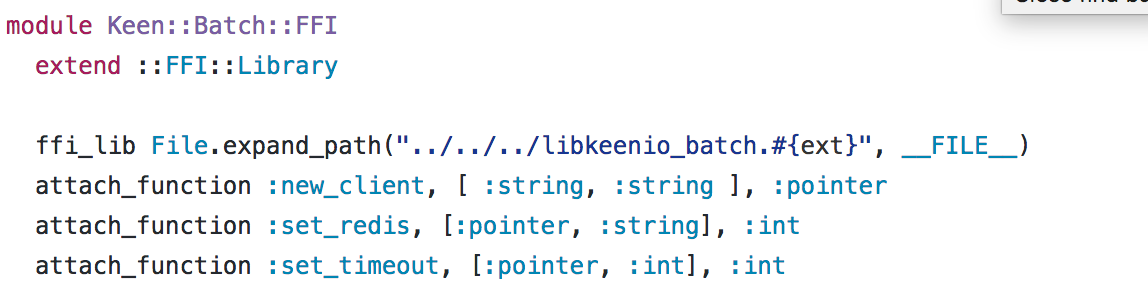

Expose Pure FFI

Ruby Native Extension

Wrapper at Ruby side

Problems

- EventMachine is bullshit

- Boilerplate

- FFI safety

- Mandatory Free
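The "pure FFI" and "mandatory free" points above can be sketched as a paired allocate/free API. All names here (`Summary`, `summary_new`, `summary_mean`, `summary_free`) are hypothetical and not taken from the original service. In a real shared library each exported function would also carry a `no_mangle` attribute so that Ruby can find the symbol; it is left off here so the snippet builds unchanged across Rust editions.

```rust
use std::os::raw::c_double;

/// Heap-allocated result handed to the caller as an opaque pointer.
pub struct Summary {
    pub count: usize,
    pub mean: c_double,
}

/// Computes a summary over `len` doubles starting at `ptr`.
/// Returns null for a null pointer or an empty input.
/// The caller owns the result and must pass it to `summary_free` exactly once.
pub unsafe extern "C" fn summary_new(ptr: *const c_double, len: usize) -> *mut Summary {
    if ptr.is_null() || len == 0 {
        return std::ptr::null_mut();
    }
    // Safety: the caller promises `ptr` points at `len` readable doubles.
    let values = unsafe { std::slice::from_raw_parts(ptr, len) };
    let mean = values.iter().sum::<f64>() / len as f64;
    Box::into_raw(Box::new(Summary { count: len, mean }))
}

/// Accessor, so the foreign side never needs to know the struct layout.
pub unsafe extern "C" fn summary_mean(summary: *const Summary) -> c_double {
    // Safety: the caller promises `summary` came from `summary_new` and is live.
    unsafe { (*summary).mean }
}

/// Releases a result from `summary_new`. Null is accepted and ignored.
pub unsafe extern "C" fn summary_free(summary: *mut Summary) {
    if !summary.is_null() {
        // Safety: the pointer was produced by `Box::into_raw` in `summary_new`.
        drop(unsafe { Box::from_raw(summary) });
    }
}
```

Forgetting the `summary_free` call leaks memory and calling it twice is undefined behaviour, which is why the Ruby-side wrapper has to enforce it and why this counts as a safety problem.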

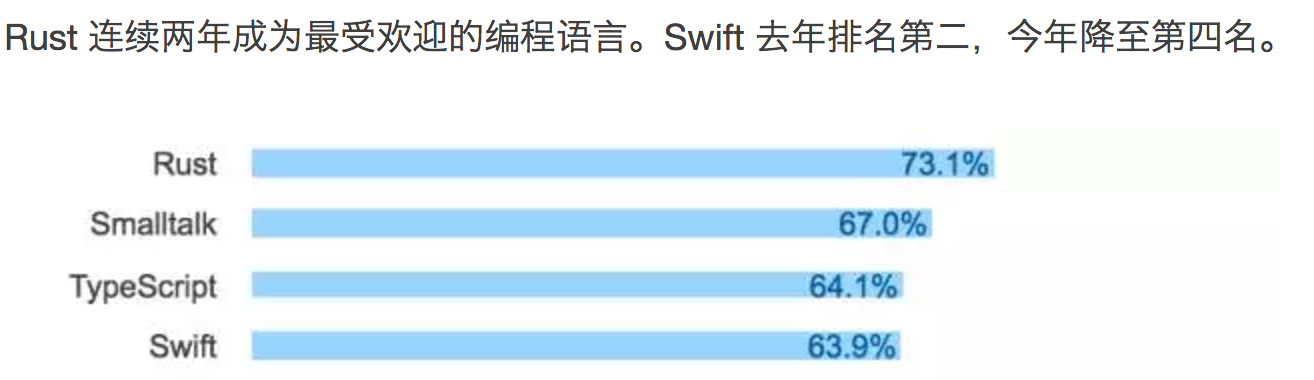

Why Rust

Safety

Rust is a systems programming language that runs blazingly fast, prevents segfaults, and guarantees thread safety.

As a NIF library is dynamically linked into the emulator process, this is the fastest way of calling C-code from Erlang (alongside port drivers). Calling NIFs requires no context switches. But it is also the least safe, because a crash in a NIF brings the emulator down too.

------

http://erlang.org/doc/tutorial/nif.html

Performance

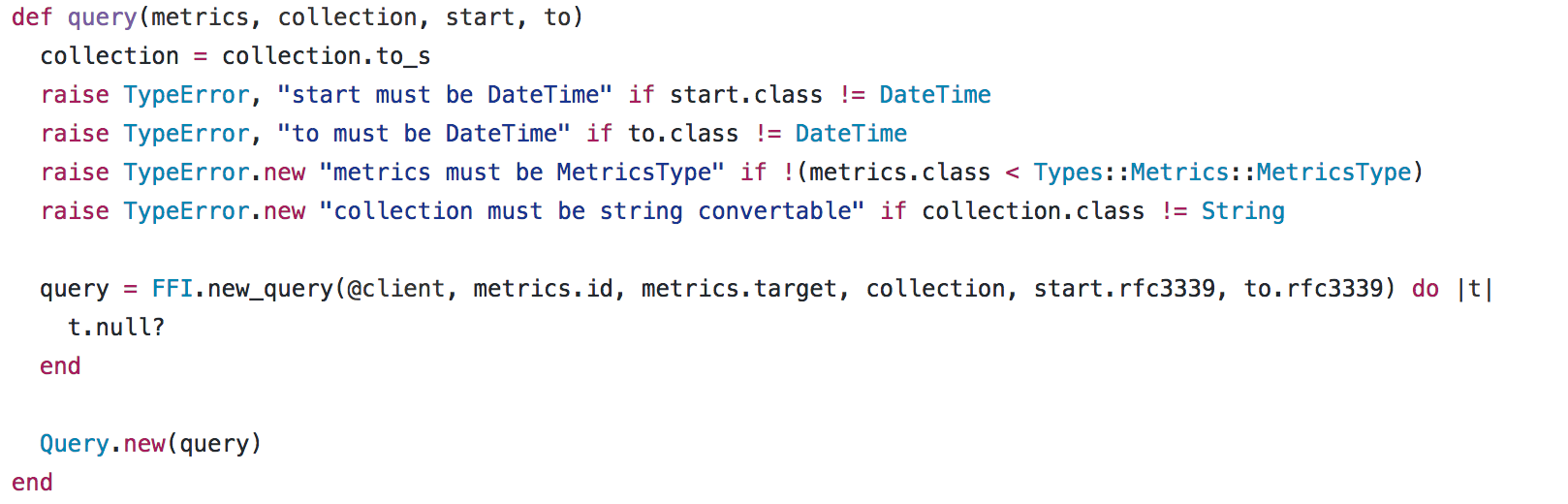

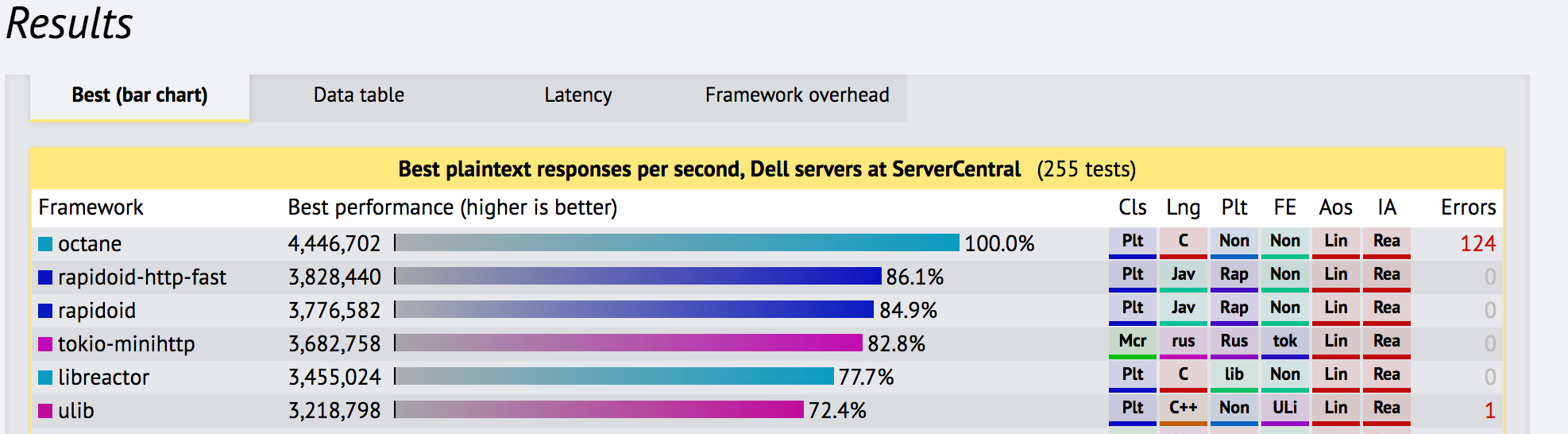

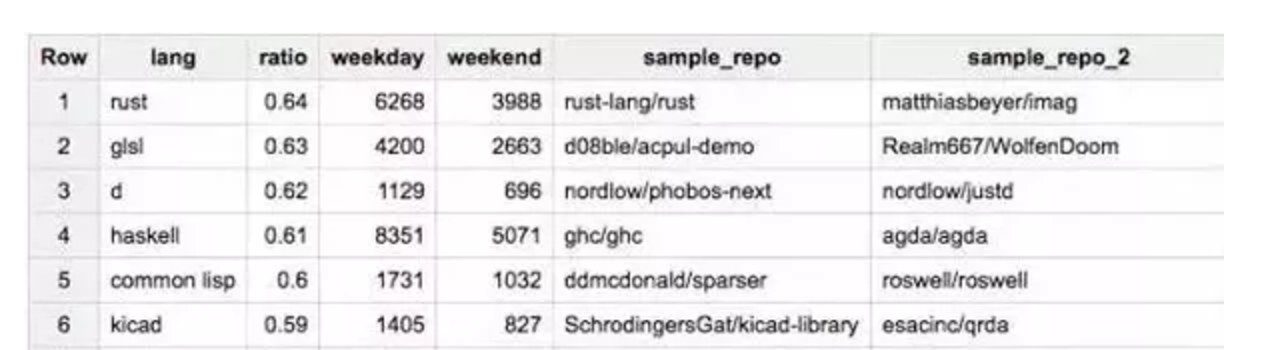

TechEmpower Round 14

Alioth Benchmarks Game (https://strk.ly/VJ)

“Rust now beats C++ in many benchmarks and is on par with others”

Interesting

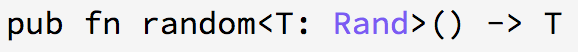

- Metaprogramming (macros)

- Type Inference

- Trait Mixin

Solution 2

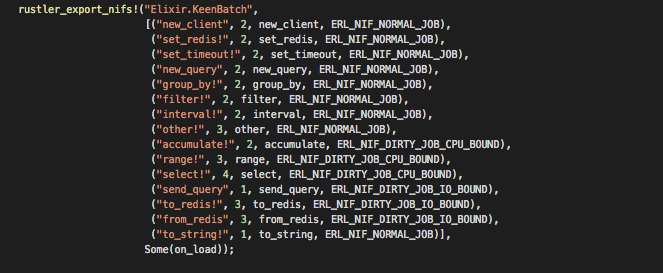

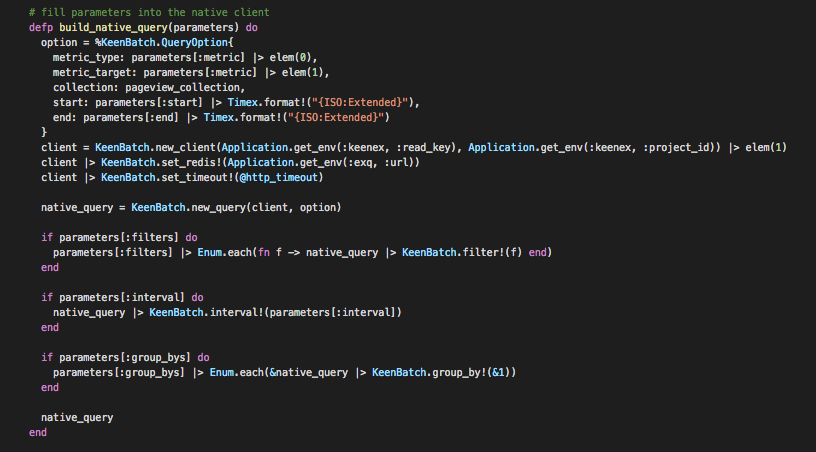

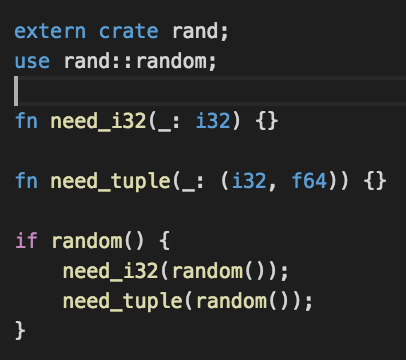

Elixir + Rust

Why?

Elixir's high concurrency

Better interface between Elixir & Rust (Rustler)

But cons:

Elixir is much slower

Elixir has fewer third-party libraries

Exotic grammar

Not compatible with Strikingly's deploy logic

Step by step

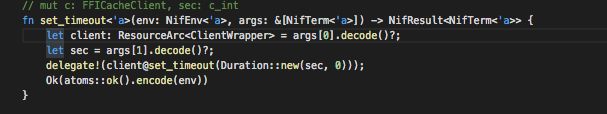

Translate interface code

Implement the server

System structure

Caveat

You need a customized Erlang VM (https://hub.docker.com/r/wooya/elixir-dirty-scheduler/)

- elixir --erl "-smp +K true +A 10 +SDio 300 +SDcpu 100:100" -S mix phoenix.server

Deployed since November 2016

Zero downtime

Improvements

- Use proactor to read data from Redis

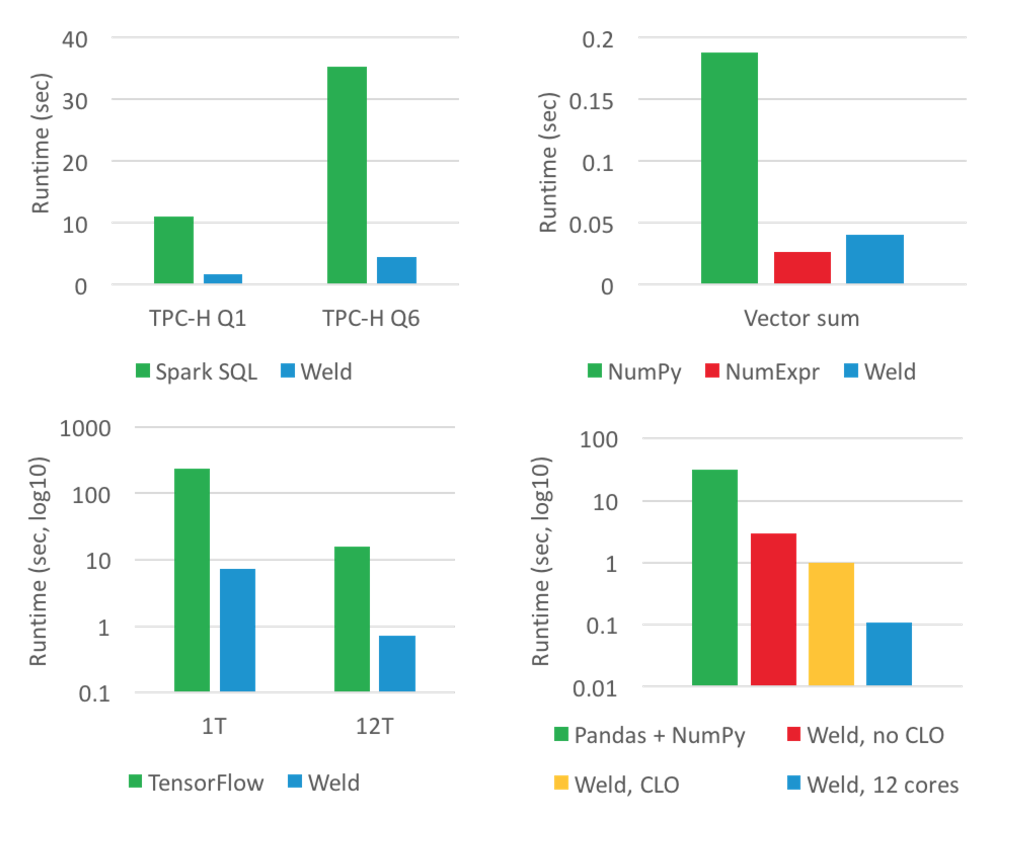

- Use Weld to improve throughput

Weld: computation engine from Stanford
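The proactor improvement listed above differs from a reactor in that the handler receives a finished read rather than a readiness signal. A rough simulation, assuming a background thread stands in for the asynchronous IO layer and an in-memory map stands in for Redis; `fake_redis_get` and `fetch_all` are hypothetical names, not a real client API.

```rust
use std::collections::HashMap;
use std::sync::mpsc;
use std::thread;

/// Hypothetical stand-in for a Redis GET: looks the key up in a local map.
fn fake_redis_get(store: &HashMap<String, String>, key: &str) -> Option<String> {
    store.get(key).cloned()
}

/// Submits one read per key, then collects the completions.
/// Completions come back in submission order because a single IO thread
/// processes the queue.
pub fn fetch_all(
    store: HashMap<String, String>,
    keys: Vec<String>,
) -> Vec<(String, Option<String>)> {
    let (submit_tx, submit_rx) = mpsc::channel::<String>();
    let (done_tx, done_rx) = mpsc::channel::<(String, Option<String>)>();

    // IO side: performs the whole read, then posts a completion.
    let io = thread::spawn(move || {
        for key in submit_rx {
            let value = fake_redis_get(&store, &key);
            if done_tx.send((key, value)).is_err() {
                break;
            }
        }
    });

    // Initiator: queue every operation up front without waiting on any.
    for key in keys {
        submit_tx.send(key).expect("IO thread is alive");
    }
    // Closing the submission queue lets the IO thread finish.
    drop(submit_tx);

    // Completion loop: each item arrives with its data already read.
    let completions: Vec<_> = done_rx.iter().collect();
    io.join().expect("IO thread panicked");
    completions
}
```

The calling thread never performs the read itself, so it stays free for compute work until the results are ready.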