Model Interpretability

Weiyuan @ SFU DSL

Outline

- Interpretability: Why & What

- Taxonomies of Interpretability

- Ways to evaluate interpretation

- Conclusion

Why Interpretability

Data scientists at many companies just stir their "pile" day after day

--- I just gave him a $10B loan!

Do you think he can pay it back? ---

--- Why not? The Model said so.

But it's a DOG! ---

Look at the thing you did! ---

Current solutions?

Not enough

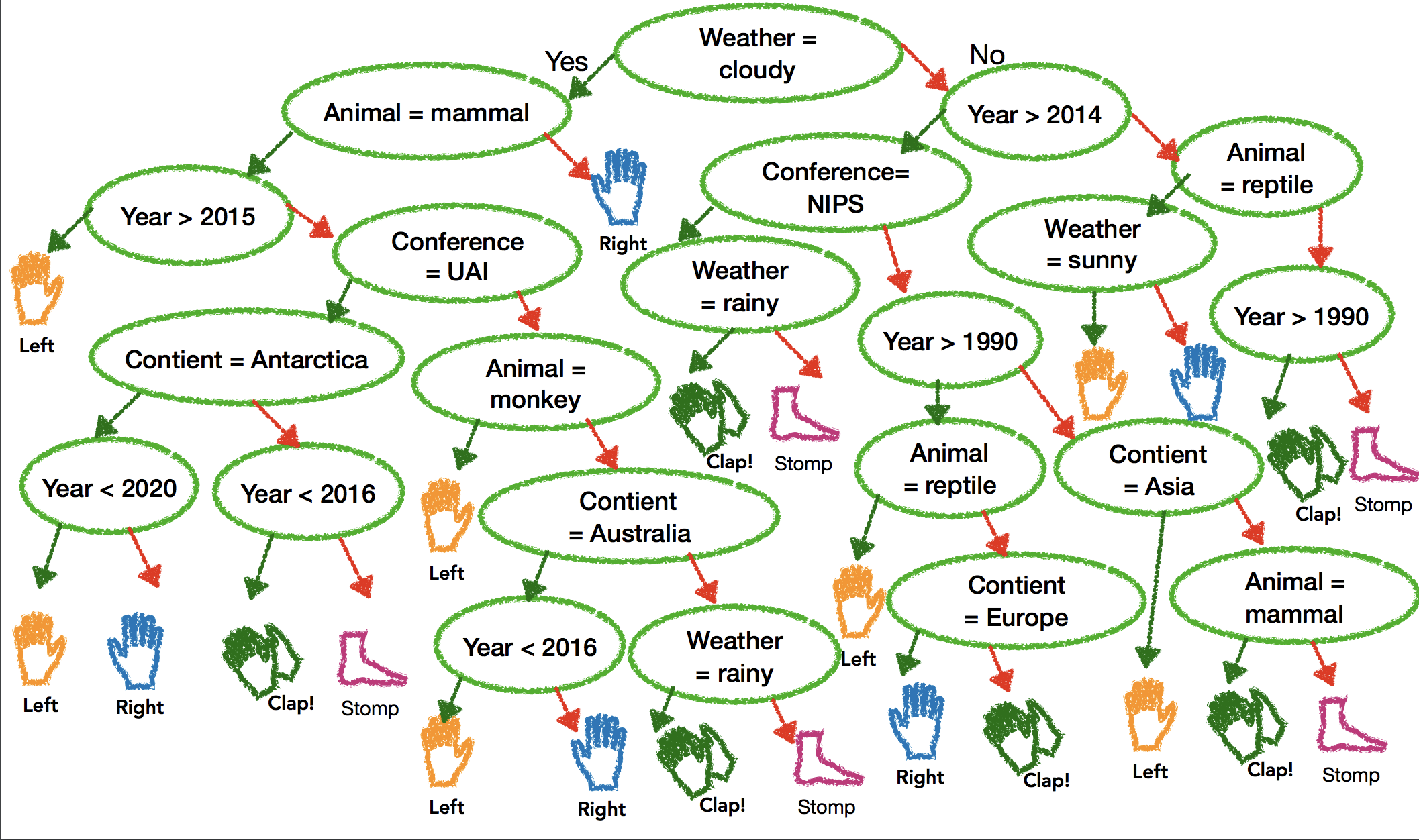

Decision Tree 🤔

Picture from Been Kim et al.

Wait...Is interpretability possible?

Seems like all currently working models are already too complex for a human to understand.

Yes, it is!

- Interpretability is NOT about understanding every bit and byte of the model for every data point (we cannot).

- It’s about knowing enough to serve your downstream task.

Taxonomies of Interpretability

Types of interpretation methods applied after a model is built

Model problem: LIME

- Given a model

- Interpret representative data points with respect to the model.

- Fit a new linear model that approximates the original model near each such point

- e.g., 50 * (owns a quadrangle courtyard within Beijing's 3rd Ring Road) + 0.0001 * (under age 25) -> grant the loan
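The local linear surrogate idea can be sketched as follows. This is a simplified illustration, not the `lime` library: the `black_box` model, its coefficients, the continuous encoding of the two features, and the sampling and kernel parameters are all assumptions made for the example. It perturbs one data point, weights the perturbed samples by proximity, and fits a weighted linear model to the black-box outputs.

```python
import math
import random

def black_box(x):
    """Hypothetical nonlinear loan model (an assumption): approval probability."""
    owns_courtyard, under_25 = x
    return 1.0 / (1.0 + math.exp(-(5.0 * owns_courtyard + 0.01 * under_25 - 2.0)))

def solve(a, b):
    """Solve the small linear system a @ w = b by Gaussian elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (m[i][n] - sum(m[i][j] * w[j] for j in range(i + 1, n))) / m[i][i]
    return w

def local_linear_explanation(model, x, n_samples=500, scale=0.1,
                             kernel_width=0.2, seed=0):
    """Fit a proximity-weighted linear model around x (weighted least squares)."""
    rng = random.Random(seed)
    d = len(x)
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]        # perturbed sample
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width ** 2)       # closer samples count more
        row = [1.0] + [zi - xi for zi, xi in zip(z, x)]     # intercept + centered features
        y = model(z)
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    intercept, *coefs = solve(xtx, xty)
    return intercept, coefs

intercept, coefs = local_linear_explanation(black_box, [0.5, 0.5])
print("local intercept:", intercept)
print("local weights (courtyard, under 25):", coefs)
```

The surrogate's weights only describe the model near the chosen point; a different point can yield a different linear explanation.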

Data problem: Influence function

- Given a model and its prediction: grant the loan to this guy

- Remove the Fortune 500 people from the training data => the model no longer grants him the loan

- Some training data is critical for this prediction!
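A minimal sketch of the idea, assuming a toy one-parameter least-squares model (the data, the model, and the function names are hypothetical). It compares brute-force leave-one-out retraining with a first-order influence estimate of the form gradient divided by Hessian, which avoids retraining.

```python
def fit(xs, ys):
    """Least-squares slope for y ~ w * x (no intercept)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def leave_one_out_effect(xs, ys, i, x_test):
    """Exact change in the prediction at x_test when training point i is removed."""
    w_full = fit(xs, ys)
    w_loo = fit(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
    return x_test * (w_loo - w_full)

def influence_estimate(xs, ys, i, x_test):
    """First-order estimate of the same change, without retraining."""
    w = fit(xs, ys)
    grad_i = (w * xs[i] - ys[i]) * xs[i]      # gradient of 0.5 * (w*x - y)^2 at point i
    hessian_sum = sum(x * x for x in xs)      # Hessian of the summed training loss
    return x_test * grad_i / hessian_sum

# Hypothetical training data; the last point plays the role of the unusual applicant.
xs = [1.0, 2.0, 3.0, 4.0, 10.0]
ys = [1.1, 1.9, 3.2, 3.9, 25.0]
x_test = 5.0

exact = [leave_one_out_effect(xs, ys, i, x_test) for i in range(len(xs))]
approx = [influence_estimate(xs, ys, i, x_test) for i in range(len(xs))]

most_influential = max(range(len(xs)), key=lambda i: abs(approx[i]))
print("exact leave-one-out effects:", exact)
print("influence estimates:", approx)
print("most influential training point:", most_influential)
```

In this toy model the estimate always has the same sign as the exact effect but a smaller magnitude, so it is best read as a ranking of training points rather than a precise prediction of what retraining would do.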

Feature problem: SHAP

- Given a model and its prediction: grant the loan to this guy

- Age=25: 10% decision power

- Name=Bill Gates: 90% decision power

- Name must be the important feature in the decision for this guy!
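The attribution above can be illustrated with exact Shapley values, which SHAP approximates for larger models. This is a sketch under assumptions: the `model` function, its coefficients, and the baseline applicant are hypothetical and chosen so the result matches the 10% / 90% split; it does not use the `shap` library.

```python
from itertools import permutations

def model(features):
    """Hypothetical loan score (an assumption), including an interaction term."""
    young = 1.0 if features["age"] <= 25 else 0.0
    famous = 1.0 if features["name"] == "Bill Gates" else 0.0
    return 0.05 * young + 0.85 * famous + 0.10 * young * famous

def shapley_values(model, instance, baseline):
    """Exact Shapley values by averaging marginal contributions over all orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)          # start from the baseline applicant
        previous = model(current)
        for name in order:
            current[name] = instance[name]    # switch one feature to the instance value
            score = model(current)
            totals[name] += score - previous  # marginal contribution in this ordering
            previous = score
    return {name: total / len(orderings) for name, total in totals.items()}

instance = {"age": 25, "name": "Bill Gates"}
baseline = {"age": 40, "name": "John Doe"}   # hypothetical reference applicant

phi = shapley_values(model, instance, baseline)
print(phi)
```

Enumerating all orderings is only feasible for a handful of features, which is why practical tools rely on sampling or model-specific shortcuts. The attributions sum to the difference between the model's score for the instance and for the baseline.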

Ways to evaluate interpretation

Spectrum of evaluation

- Function-based

- Cognition-based

- Application-based

Application-based

- How much did we improve loan payback rate?

- Do decision makers find the explanations useful?

Application-based

- It’s real evaluation

- but it’s costly

- and hard to compare work A to B

Function-based

- Use proxy metrics

- How sparse are the features?

- How non-negative are they?
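Proxy metrics like these are cheap to compute directly from an explanation. A small sketch, assuming the explanation is a list of feature weights; the function names, the tolerance, and the example weights are hypothetical.

```python
def num_active_features(weights, tol=1e-3):
    """Sparsity proxy: how many features carry a non-negligible weight."""
    return sum(1 for w in weights if abs(w) > tol)

def nonnegative_fraction(weights):
    """Non-negativity proxy: share of weights that are zero or positive."""
    return sum(1 for w in weights if w >= 0) / len(weights)

# Hypothetical explanation weights for four features.
explanation = [50.0, 0.0001, 0.0, -0.3]

active = num_active_features(explanation)
nonneg = nonnegative_fraction(explanation)
print("active features:", active)
print("non-negative fraction:", nonneg)
```

Neither number involves a human or a real task, which is what makes this kind of evaluation cheap and easy to optimize.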

Function-based

- It's easy to formalize, optimize, and evaluate…

- but may not solve a real need

- e.g., will an explanation with a sparsity of 5 units really earn a better interest rate than one with 10 units?

Cognition-based

- Application-based: high cost, high validity

- Function-based: low cost, low validity

Cognition-based

- What factor should change to change the outcome?

- Time budget

- Severity of underspecification

- Cognitive chunks

- Audience training

- ...

Conclusion

- Interpretability is getting hotter

- Interpretability is becoming increasingly important

- Current interpretation methods are not enough

- Current evaluation of interpretations is not enough
