NLMF: NonLinear Matrix Factorization Methods for

Top-N Recommender Systems

Santosh Kabbur and George Karypis

Department of Computer Science, University of Minnesota Twin Cities, USA

ICDMW'14

Motivation

-

Traditional MF-based models assumptionUser preference is consistent across all the items that he/she has rated.

- Traditional MF-based models do not put emphasis on fitting the preference diversity of a given user preference.

- However, many users can have multiple interests and their preferences can vary with each such interest.

Background (1/3)

-

Top-N recommendation Problem

- Recommend the most appealing N items for users (Not necessary to be the exactly top N).

- Ranking metrics (e.g. Precision) are the better measures of top-N task.

- Conventional MF-based models which minimizes RMSE does not translate into performance improvements of top-N task.

- Few top popular items can skew the top-N performance.

Background (2/3)

-

MaxMF proposed by Weston et al.

- Represent user with multiple latent vectors, each corresponding to a different interest.

- Have more power to fit user's preference if user’s interests are diverse.

- Only choose the maximum scoring interest as the final recommendation score.

Background (3/3)

-

MaxMF proposed by Weston et al.

- Weaknesses

- Only interest-specific component is considered.

- May fail in two cases

- Users who have not provided enough preferences.

- Users do not have enough diversity in their itemsets.

- Weaknesses

MaxMF

- Represent user by T interest vectors.

- The set of items is partitioned into T partitions for each user.

- If an item is ranked higher in the top-N list, at least one of the user's interests must provide a high score for that item.

Modification-NLMF

- Number of items for every interest may not be sufficient if only interest-specific preference component is learned.

- Learn user preferences as a combination of global preference and interest-specific preference components.

- Strike a balance between the two.

NLMFi

- The item vectors is independent between global preference and interest-specific preference components.

- The embedding sizes of the two components need not to be the same.

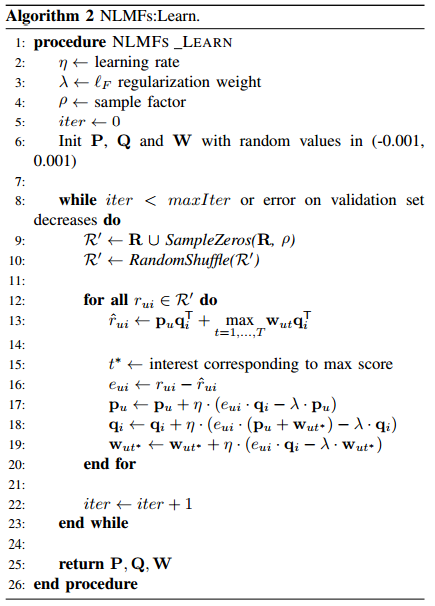

SGD for top-N task

- It is a common practice to do negative sampling in top-N task.

- Both positive feedbacks and missing entries are updated.

- ρ * (# of positive feedbacks) missing entries are sampled and are regarded as zeros.

- Usually ρ is small (in the range of 3-5).

w_{ut^*}

wut∗

NLMFs

- The item vectors is shared between global preference and interest-specific preference components.

Experiment (1/9)

- Dataset

- Netflix

- Flixster

- Extract a subset of the original dataset.

- Remove the top 5% of the frequently rated items.

- Binarize the Rating to make implicit feedbacks.

- Rated => 1

- Non-rated => 0

Experiment (2/9)

Experiment (3/9)

- 5-fold Leave-One-Out-Cross-Validation (LOOCV)

- Randomly select one item per user from the dataset and place it in the test set.

- Repeat to create 5 different folds.

- Evaluation Metric

- Hit rate

- Average Reciprocal Hit Rank = MRR in this case

- Hit rate

=1

Experiment (4/9)

- Competitors

- State-of-the-art for top-N recommendation problem

- UserKNN

- PureSVD

- BPRMF

- SLIM

- MaxMF

- State-of-the-art for top-N recommendation problem

Experiment (5/9)

Experiment (6/9)

- Effect of Number of Interests

- Peak values happen at T=3 or 4.

- Further increasing the value of T, the performance starts to decrease.

- Since the number of items for each interest decreases, less meaningful interest vectors are learned.

Experiment (7/9)

Experiment (8/9)

Experiment (9/9)

- All hyperparameters of these models are tuned to maximize Hit rate.

-

NLMFi (independent) outperforms NLMFs (shared).

- Item vectors are learned separately in NLMFi, which makes it have more power of striking a balance between global preference and interest-specific components.

Conclusion

- Contribution

- A MF-based method (NLMF) which solves the top-N recommendation problem by considering user's preference diversity.

- The recommendation score is computed as a combination of global preference and interest-specific user preference.

- Future works

- Test on much sparser dataset.

- Extend to rating prediction task.