Apache Kafka

Plan

Theory 1 - Exercise - Theory 2 - Gems - Shopmium

Theory - Part 1

Hands-on exercise

Theory - Part 2

ruby-kafka & Karafka gems

Shopmium ↔ Quotient

Principles

Basics

Topics & partitions

Consumers

Producers

Kafka APIs

What?

Event streaming platform

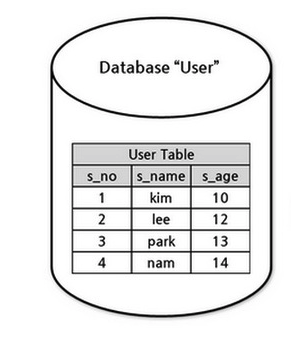

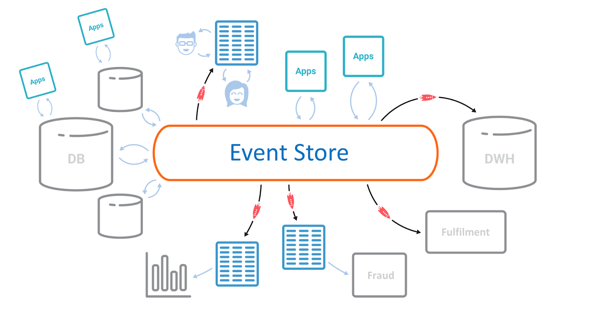

Instead of storing objects with their state in a database, we reason with logs of events: an event-driven architecture.

Kafka = (distributed) system to manage the logs

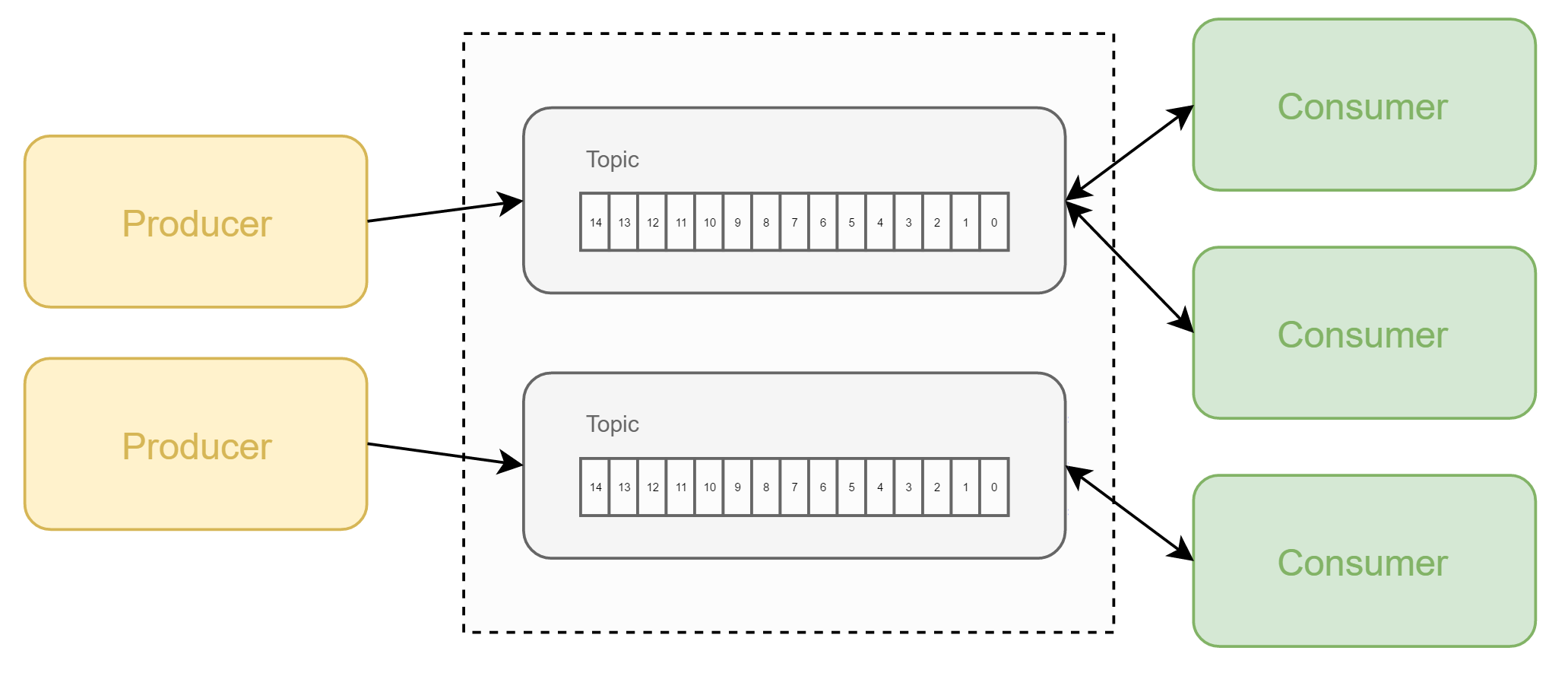

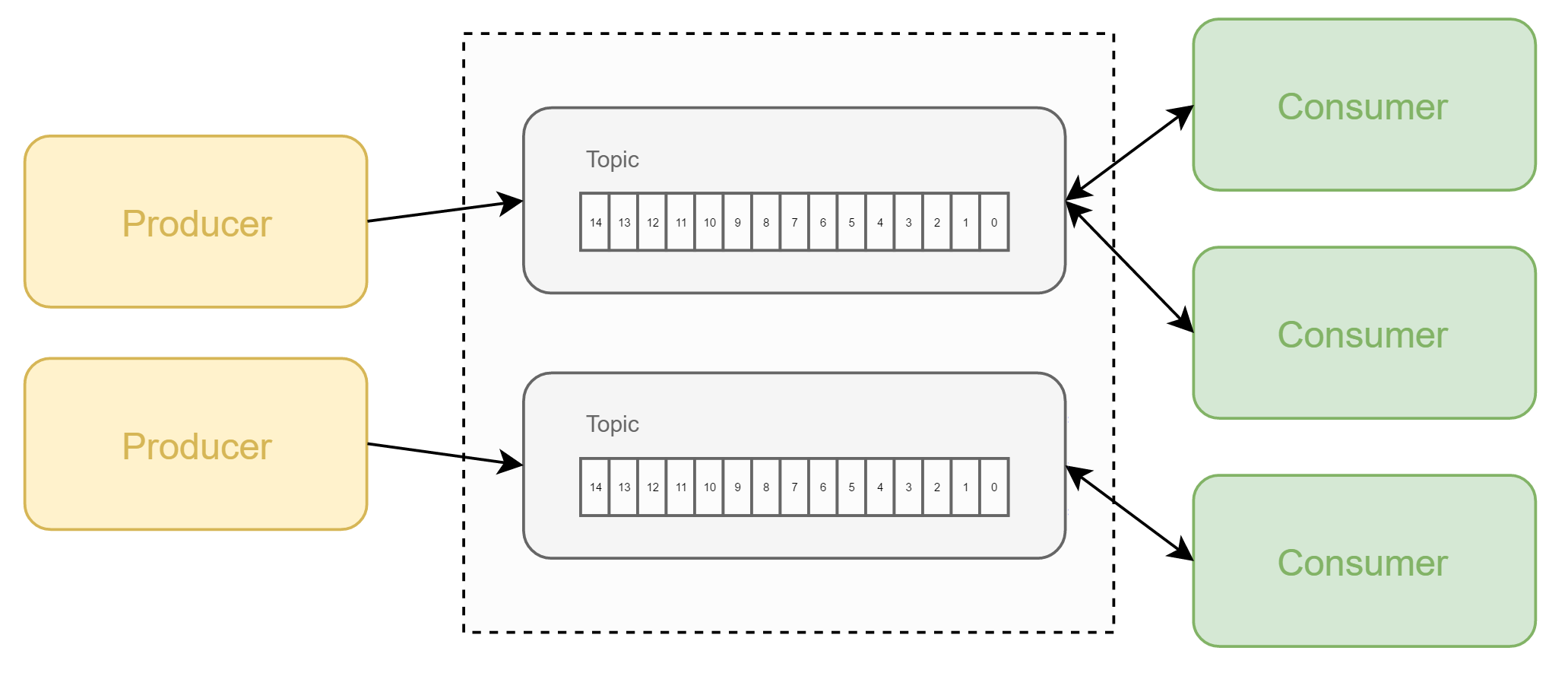

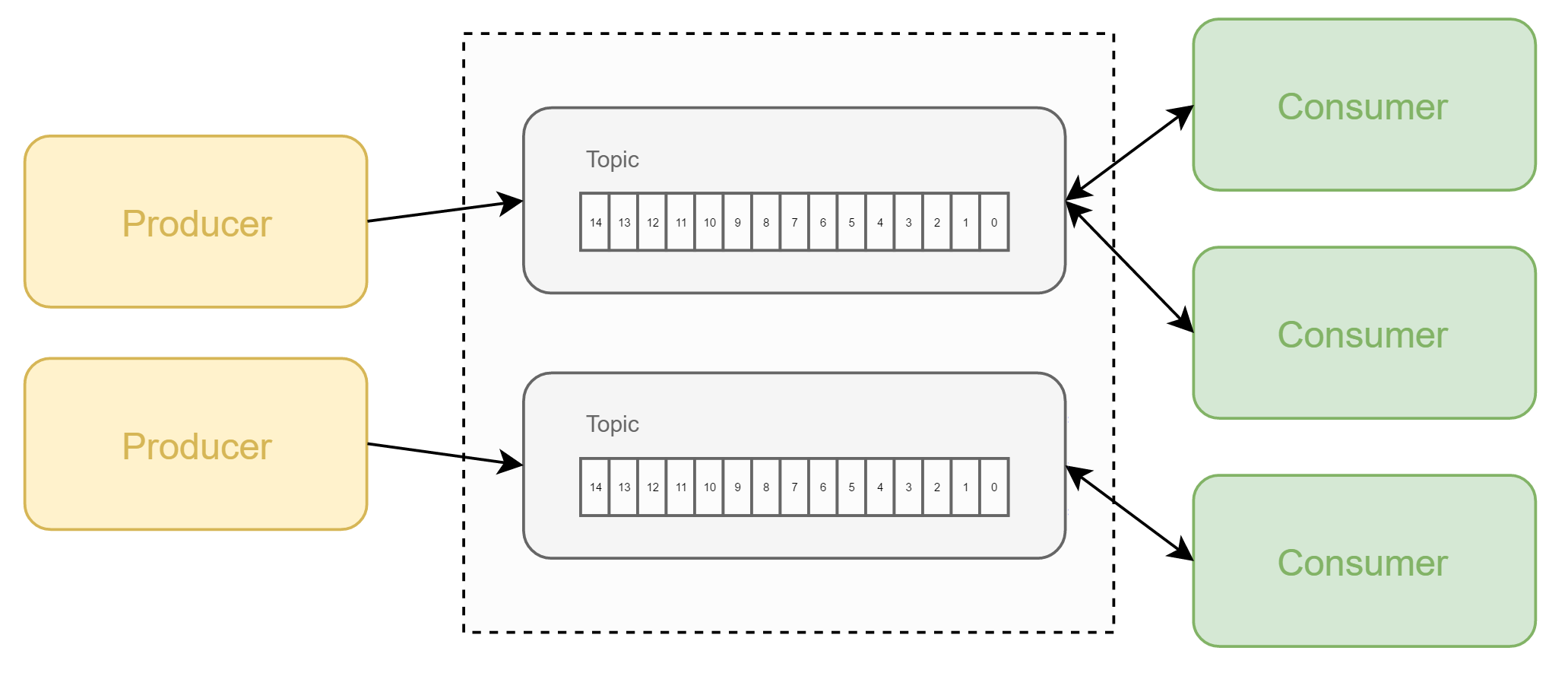

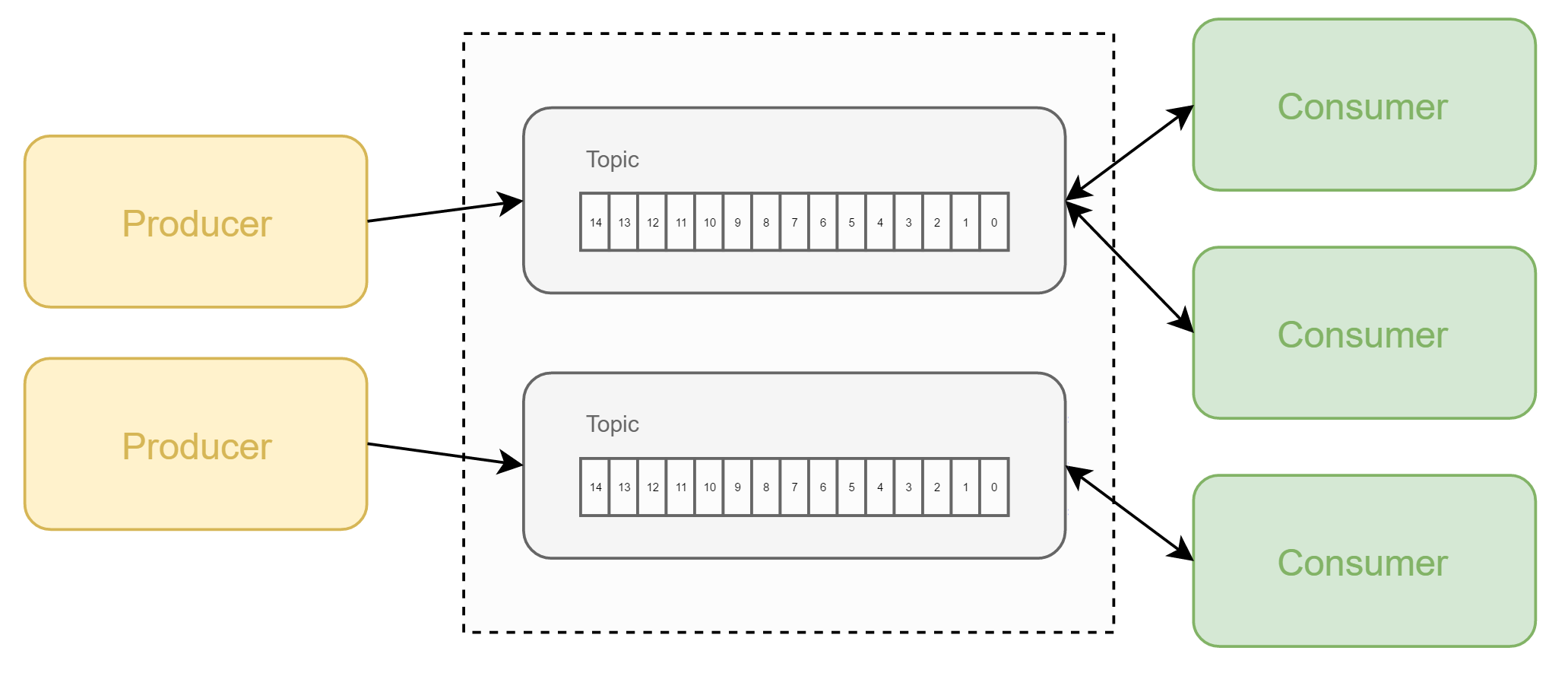

Pub-sub messaging architecture

Producer - Cluster (brokers) - Consumer

Protocol used: not HTTP, but Kafka's own binary protocol over TCP

→ persistent connection

Continuous flow of events between services

Microservices that communicate through Kafka

Message bus (Redis + Sidekiq) ≠ streaming platform

- Sidekiq, Resque, etc. → once a message is taken from the queue, it disappears

- Kafka → reading a message does not remove or change it; it stays in the log for other consumers
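
The difference can be sketched in plain Ruby, without any Kafka or Redis dependency. `JobQueue` and `EventLog` are hypothetical names made up for this illustration; they only mimic the two behaviours and are not how Sidekiq or Kafka are implemented.

```ruby
# Job-queue style (Sidekiq-like): fetching a message removes it for everyone.
class JobQueue
  def initialize(messages)
    @messages = messages.dup
  end

  def fetch
    @messages.shift
  end

  def size
    @messages.size
  end
end

# Log style (Kafka-like): each reader only advances its own position.
class EventLog
  def initialize(messages)
    @messages = messages.dup.freeze
    @positions = Hash.new(0) # reader name => next index to read
  end

  def read(reader)
    message = @messages[@positions[reader]]
    @positions[reader] += 1 if message
    message
  end

  def size
    @messages.size
  end
end
```

With two messages, two `fetch` calls leave the queue empty, while any number of readers can each call `read` on the log and its size stays 2.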

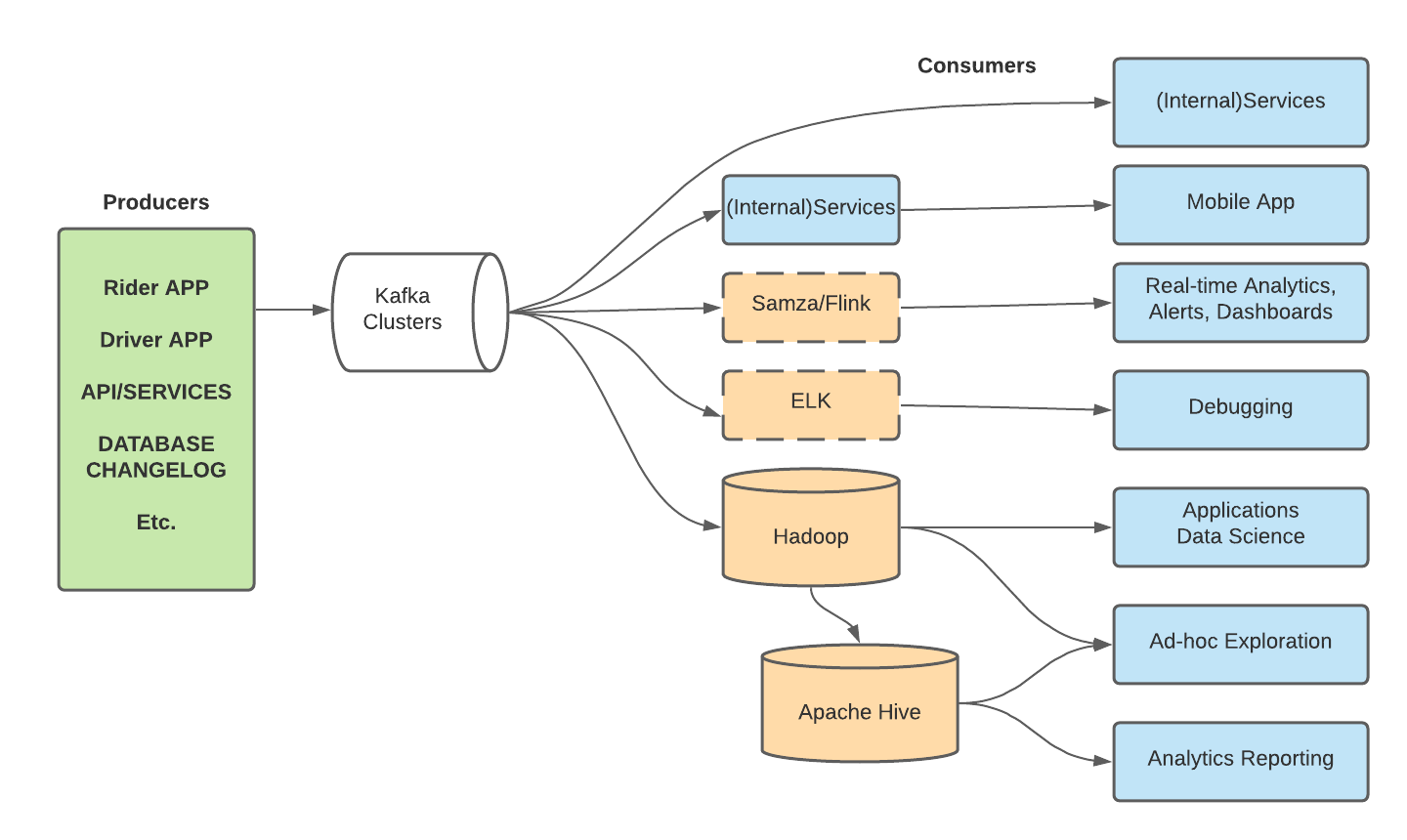

Usages

Real-time data flow

Microservices that communicate through Kafka

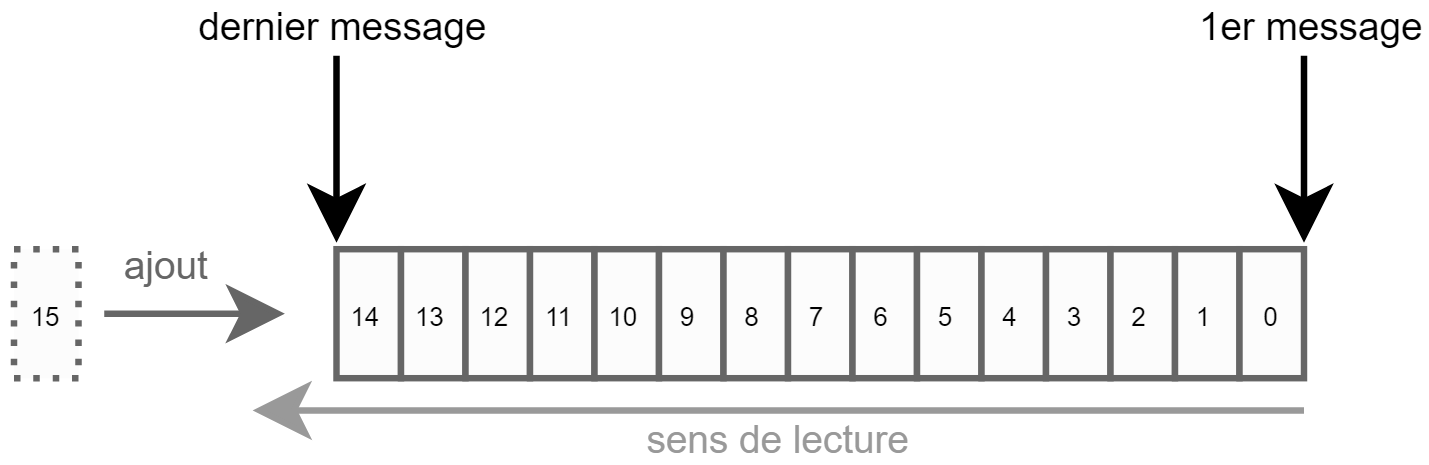

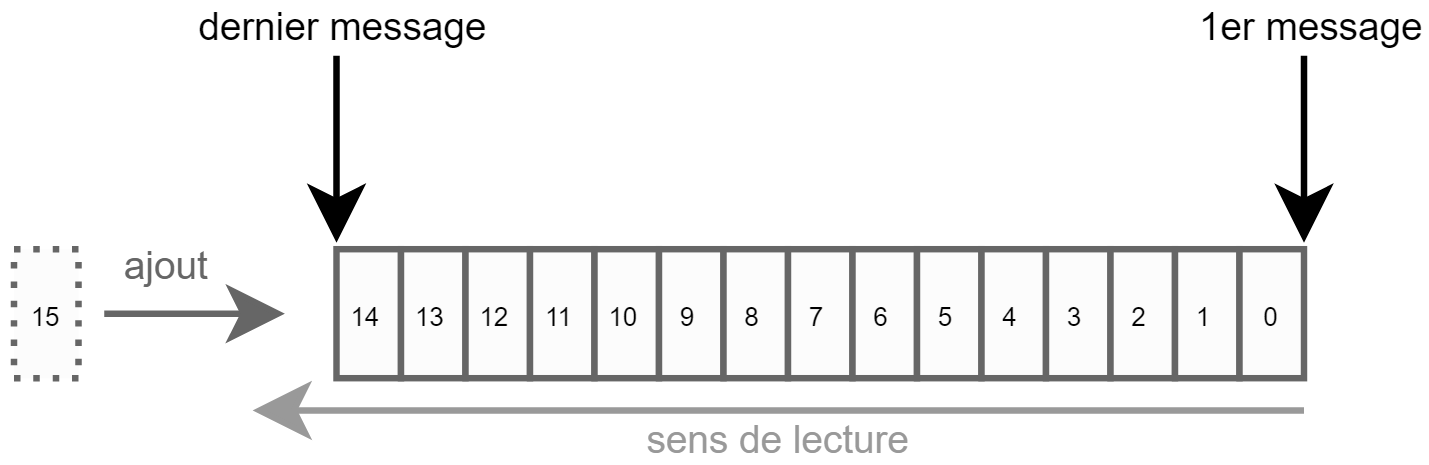

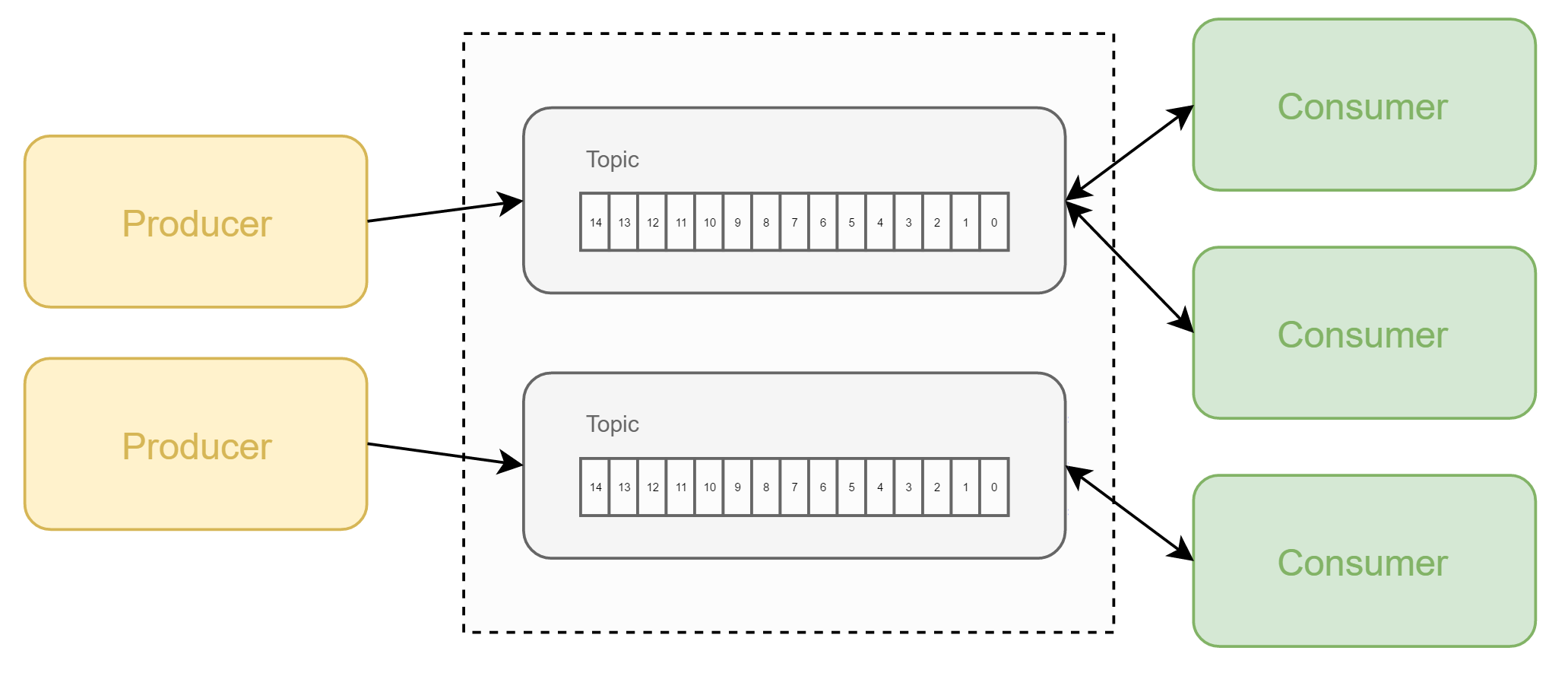

Topics

Topic = log = ordered and immutable collection of events

- events stored for a set period or forever

- multi-producers and multi-subscribers (0..n)

- events are not deleted after consumption

- clients cannot update or delete messages
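
A minimal sketch of these properties, assuming an in-memory array stands in for the topic. `TopicLog` is a hypothetical class for illustration only: it offers append and read, and deliberately no update or delete.

```ruby
class TopicLog
  def initialize
    @events = []
  end

  # Appends an event at the end of the log and returns its offset.
  def append(event)
    @events << event.dup.freeze
    @events.size - 1
  end

  # Returns the events starting at the given offset.
  # Reading never removes anything, so it can be repeated at will.
  def read_from(offset)
    @events.drop(offset)
  end
end
```

Offsets are simply positions in the log: the first appended event gets offset 0, the next one offset 1, and so on.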

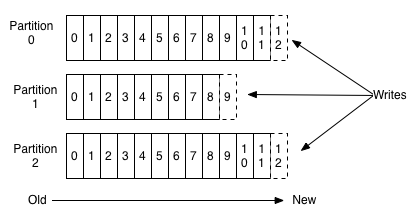

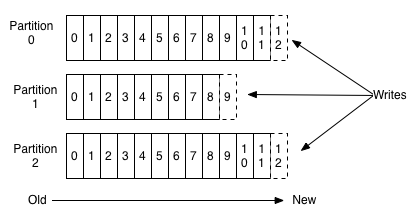

Partitions

A topic is divided into partitions (≠ nodes in the cluster)

So strictly speaking, a log = a partition

- Kafka = distributed system: a topic has to be spread over several partitions to scale

- consumers subscribe to a topic and producers write to it (all partitions)

- a single consumer gets messages from all partitions (ordering is only guaranteed within a partition)
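
As a rough illustration, a topic can be modelled as an array of independent logs, each with its own offsets. `PartitionedTopic` is a hypothetical name; real partitions live on brokers, not in one Ruby object.

```ruby
class PartitionedTopic
  def initialize(partition_count)
    @partitions = Array.new(partition_count) { [] }
  end

  # Appends to one partition and returns the offset inside that partition.
  def append(partition, event)
    @partitions[partition] << event
    @partitions[partition].size - 1
  end

  # A lone consumer reads every partition, each from its own offset.
  # `offsets` maps partition index => first offset to read (default 0).
  def read_all(offsets = {})
    @partitions.each_with_index.flat_map do |log, index|
      log.drop(offsets.fetch(index, 0)).map { |event| [index, event] }
    end
  end
end
```

Note that offsets restart at 0 in every partition, which is why ordering only makes sense inside a single partition.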

Consumers

- can start reading messages from the current position or from any offset

- always reads messages in order (within a partition), as a continuous stream

- asks for messages when it is ready to handle them (pull model)

- super flexible: after a shutdown, it catches up on the unprocessed messages

- the consumer offset is itself stored in a special topic (__consumer_offsets)

- immutable topics → can be read by multiple consumers at the same time (independently, at different speeds, from different offsets)
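
The pull model and the catch-up after a restart can be sketched as follows. `SimpleConsumer` is a hypothetical class, and the `committed_offsets` hash is an assumption standing in for the place where Kafka keeps consumer offsets.

```ruby
class SimpleConsumer
  # log:               array of messages (one partition)
  # committed_offsets: shared hash, consumer name => next offset to read
  def initialize(log, committed_offsets, name)
    @log = log
    @offsets = committed_offsets
    @name = name
  end

  # Pull model: the consumer asks for at most `max` messages when it is
  # ready, then commits its new position.
  def poll(max)
    start = @offsets.fetch(@name, 0)
    batch = @log[start, max] || []
    @offsets[@name] = start + batch.size
    batch
  end
end
```

Because the position lives outside the consumer object, a new `SimpleConsumer` created with the same name resumes where the previous one stopped, and a consumer with another name starts from offset 0 without disturbing anyone.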

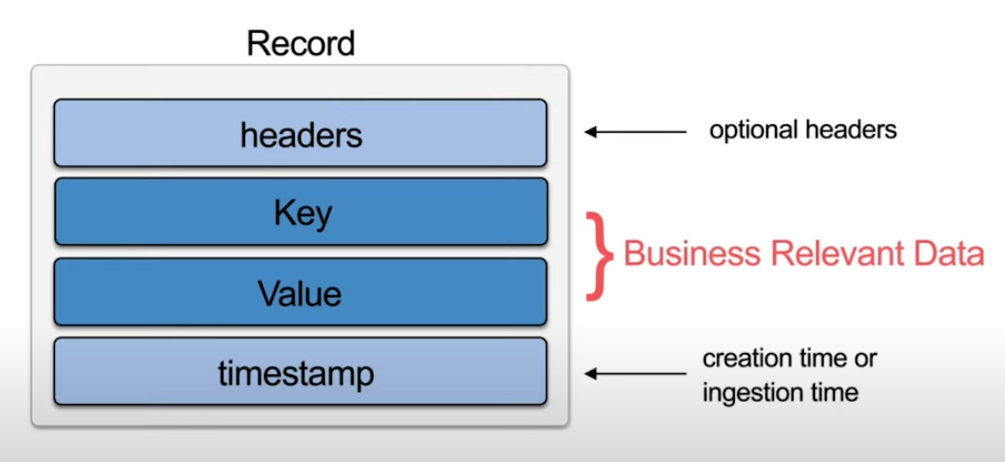

Messages

Message = key-value pair

Producers

- just writes messages

- much simpler than consumer

- decides which partition to write to (based on the key, if present)

- the broker acknowledges the message once it's committed
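
A possible way to picture the partition choice: hash the key and take it modulo the number of partitions, so that the same key always lands in the same partition. `KeyPartitioner` is a hypothetical class; CRC32 and the round-robin fallback for keyless messages are assumptions made for this sketch, and actual clients may use other hash functions and fallback strategies.

```ruby
require "zlib"

class KeyPartitioner
  def initialize(partition_count)
    @partition_count = partition_count
    @next_partition = 0
  end

  def partition_for(key)
    if key.nil?
      # No key: spread messages over partitions in turn.
      partition = @next_partition
      @next_partition = (@next_partition + 1) % @partition_count
      partition
    else
      # Same key => same hash => same partition, so per-key order is kept.
      Zlib.crc32(key.to_s) % @partition_count
    end
  end
end
```

Using for instance a user id as the key keeps all events of that user in one partition, and therefore in order.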

Hands-on exercise

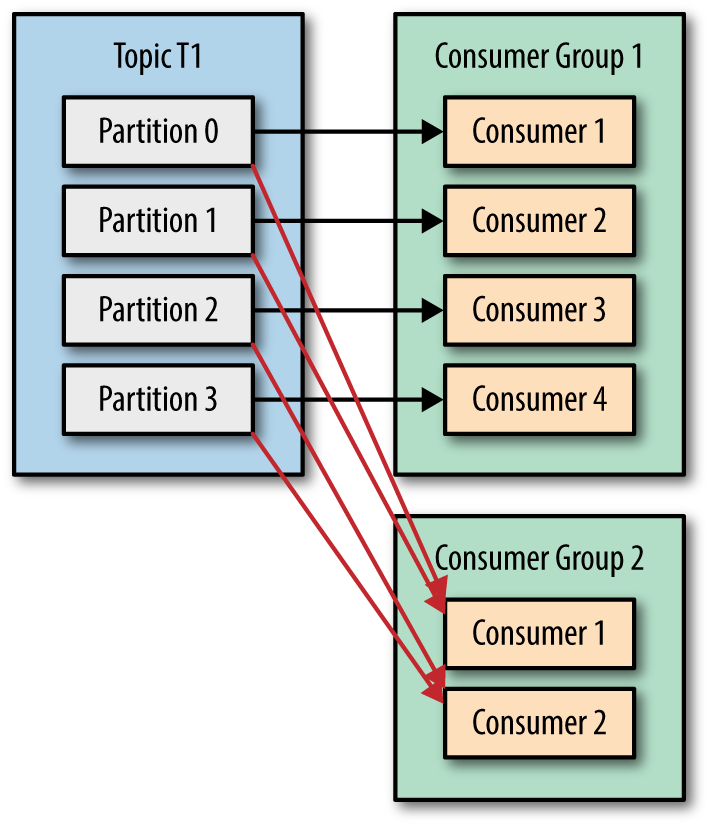

Consumer groups

- useful for consumer scalability (can be distributed across machines)

- the same consumer application can be split across several instances

- Automatic rebalancing process

- Automatic horizontal scalability

- Number of active consumers in a group is limited by the number of partitions
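
Rebalancing can be pictured as recomputing a partition assignment whenever the group membership changes. The function below is a hypothetical round-robin sketch, not Kafka's actual assignment protocol; it shows why members beyond the number of partitions stay idle.

```ruby
# Distributes partitions 0...partition_count over the group members.
# Returns a hash: member => list of assigned partitions.
def assign_partitions(partition_count, members)
  assignment = members.to_h { |member| [member, []] }
  return assignment if members.empty?

  partition_count.times do |partition|
    assignment[members[partition % members.size]] << partition
  end
  assignment
end
```

Calling it again with a longer or shorter member list plays the role of a rebalance: with 4 partitions, going from 2 to 3 members moves some partitions to the newcomer, while a third member on a 2-partition topic gets nothing.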

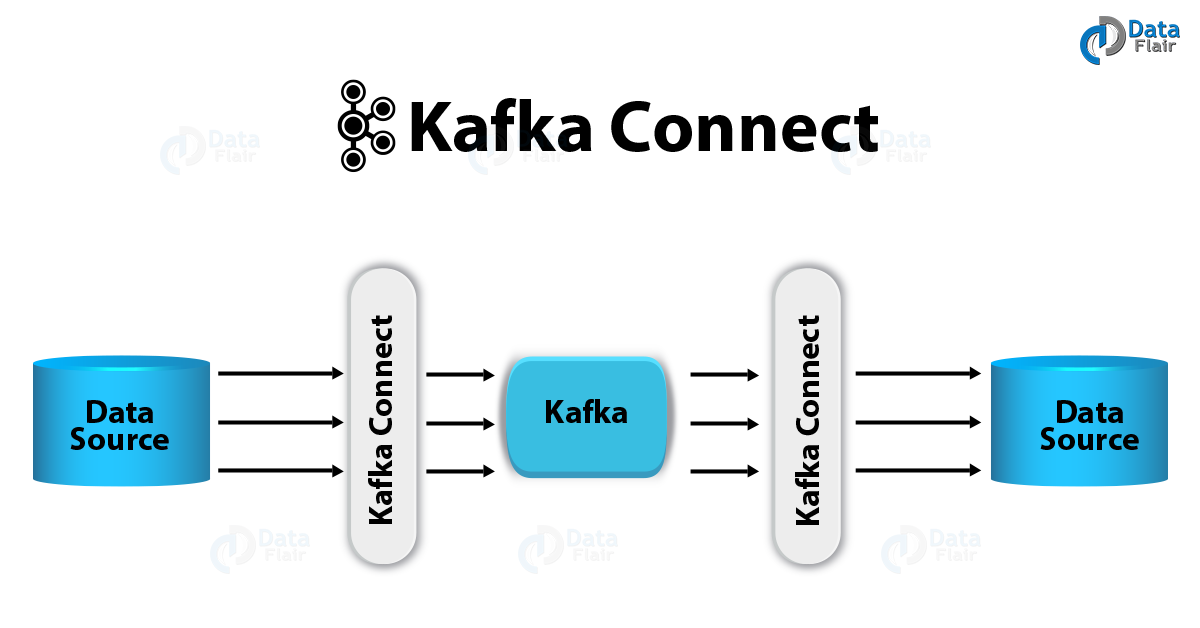

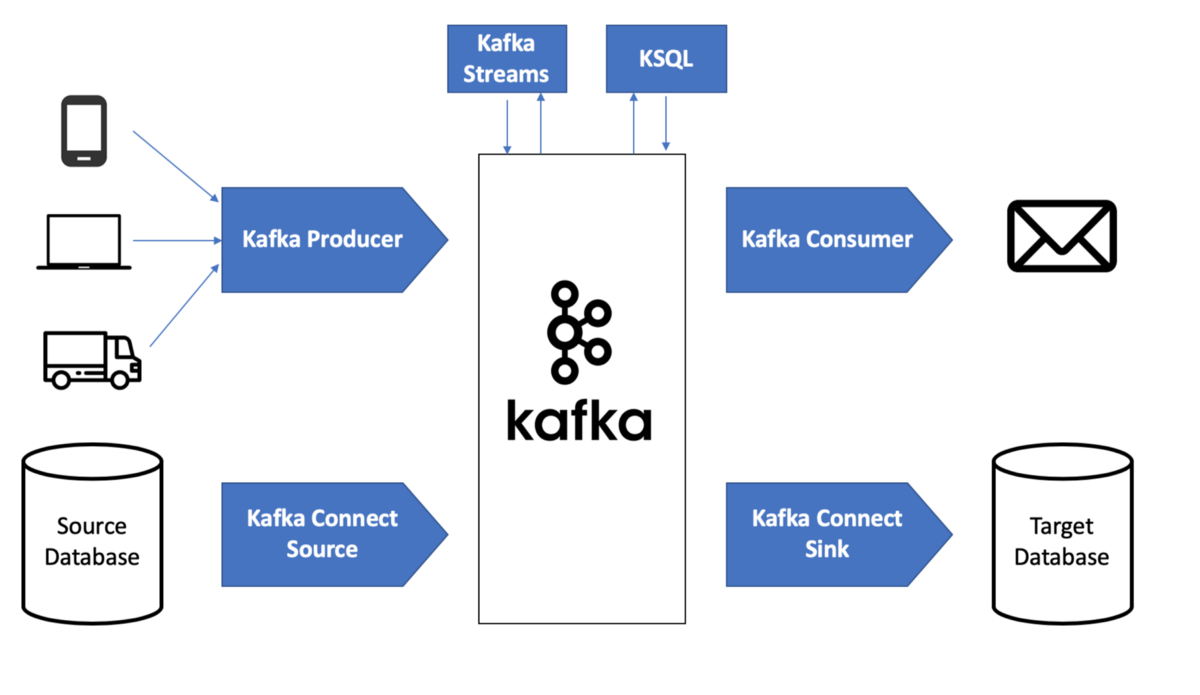

Kafka APIs

Built-in tools

Admin

Kafka Streams

Processing data between topics, aggregations, joins...

Kafka Connect

Integration with databases, search indexes (Elasticsearch...), key-value stores (Redis...) - Read and write

Producers, consumers and databases can easily be orchestrated together with Connect + Streams...

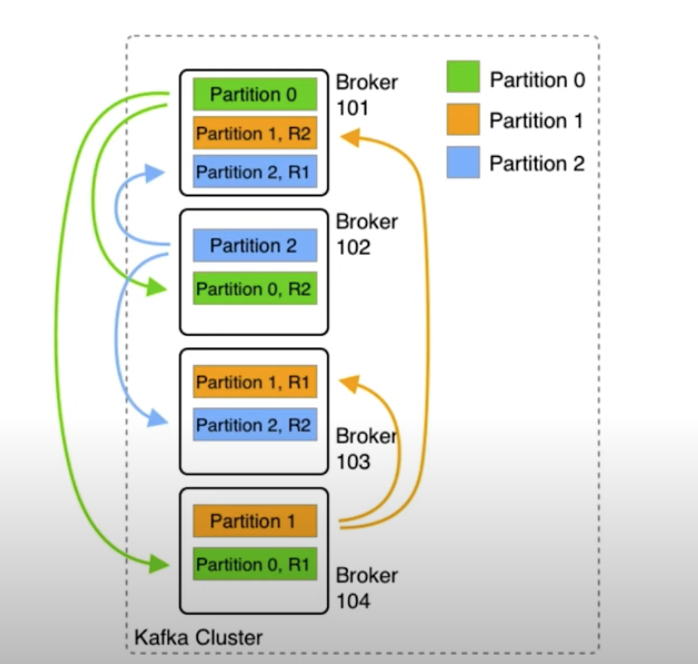

Brokers

- Cluster = several brokers, which manage partitions

- Replication: each partition has 1 leader and n followers
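
A simplified picture of leader/follower replication for one partition. `ReplicatedPartition` is a hypothetical class: here the leader pushes each event to the followers synchronously, whereas real followers fetch from the leader, and the failover rule (promote the first surviving replica) is an assumption for the sketch.

```ruby
class ReplicatedPartition
  attr_reader :leader

  # broker_ids: brokers holding a replica; the first one starts as leader.
  def initialize(broker_ids)
    @replicas = broker_ids.to_h { |id| [id, []] }
    @leader = broker_ids.first
  end

  def followers
    @replicas.keys - [@leader]
  end

  # Writes go to the leader and are copied to every follower.
  def append(event)
    @replicas[@leader] << event
    followers.each { |id| @replicas[id] << event }
    @replicas[@leader].size - 1
  end

  # When the leader's broker fails, a surviving replica takes over.
  def fail_broker(id)
    @replicas.delete(id)
    @leader = @replicas.keys.first if id == @leader
  end

  # The partition content as seen through the current leader.
  def log
    @replicas[@leader].dup
  end
end
```

Since every follower holds a copy, losing the leader's broker does not lose the events already written.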

Decoupling

Principle of the pub-sub pattern

- Slow consumers do not affect producers

- Consumers can be added without affecting producers

- Failure of a consumer does not affect the rest of the system

Highly scalable

The brokers are easily scalable (horizontally)

→ Throughput grows with the number of brokers and partitions (unlike a single db)

Kafka brokers do not track their consumers. Each consumer service is in charge of telling Kafka where it is in the event stream and what it wants to read next.

Scalability

Highly scalable: Netflix, LinkedIn and Microsoft handle trillions of events per day in their clusters

Uber

ruby-kafka

https://github.com/zendesk/ruby-kafka

Ruby client library exposing Kafka's Consumer and Producer APIs

Built-in tools for instrumentation
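
A small sketch of how the gem might be used. The helper names `publish_event` and `consume_events`, the JSON payload format, and the broker address and topic below are assumptions for illustration. The `kafka` argument is expected to be a ruby-kafka client; the calls used on it (`deliver_message`, `consumer`, `subscribe`, `each_message`) come from the gem's documented API.

```ruby
require "json"

# Publishes one event. The key (if any) drives the partition choice.
def publish_event(kafka, topic:, key:, payload:)
  kafka.deliver_message(JSON.generate(payload), topic: topic, key: key)
end

# Subscribes as a member of `group_id` and yields every message received.
# With a real client, each_message loops until the consumer is stopped.
def consume_events(kafka, topic:, group_id:)
  consumer = kafka.consumer(group_id: group_id)
  consumer.subscribe(topic)
  consumer.each_message do |message|
    yield message.key, JSON.parse(message.value), message.partition, message.offset
  end
end
```

With the gem installed and a broker running (address assumed here), usage could look like `kafka = Kafka.new(["localhost:9092"], client_id: "demo")` followed by `publish_event(kafka, topic: "orders", key: "user-42", payload: { "status" => "paid" })`.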

karafka

https://github.com/karafka/karafka

framework

higher-level abstraction

manages low-level logic (error handling, retries and backoffs; consumer groups; concurrency; broker discovery...)

cf. config files

Shopmium
