Support Vector Machines (SVM)

models for target classification

MLPP


Logistic Regression - recap

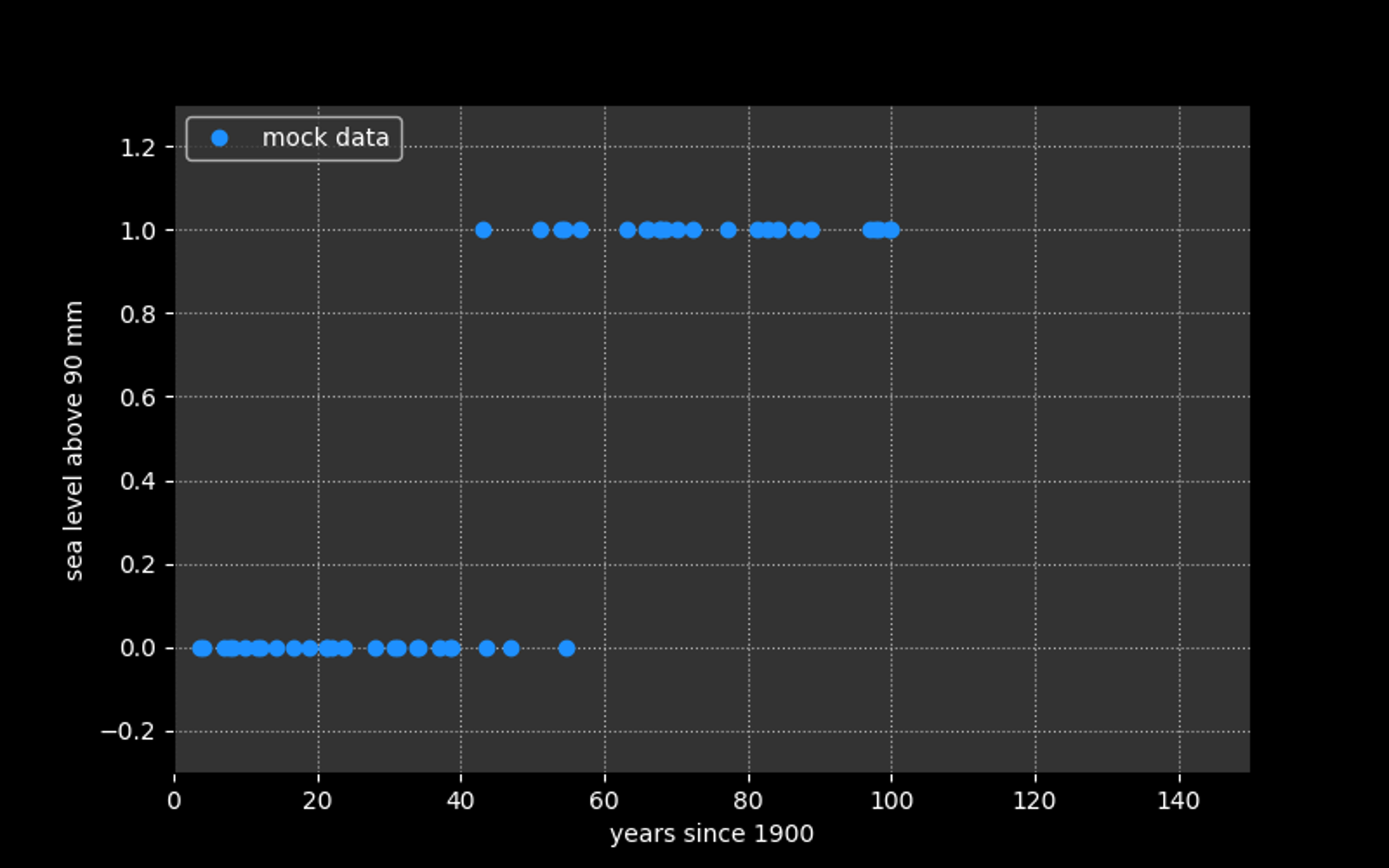

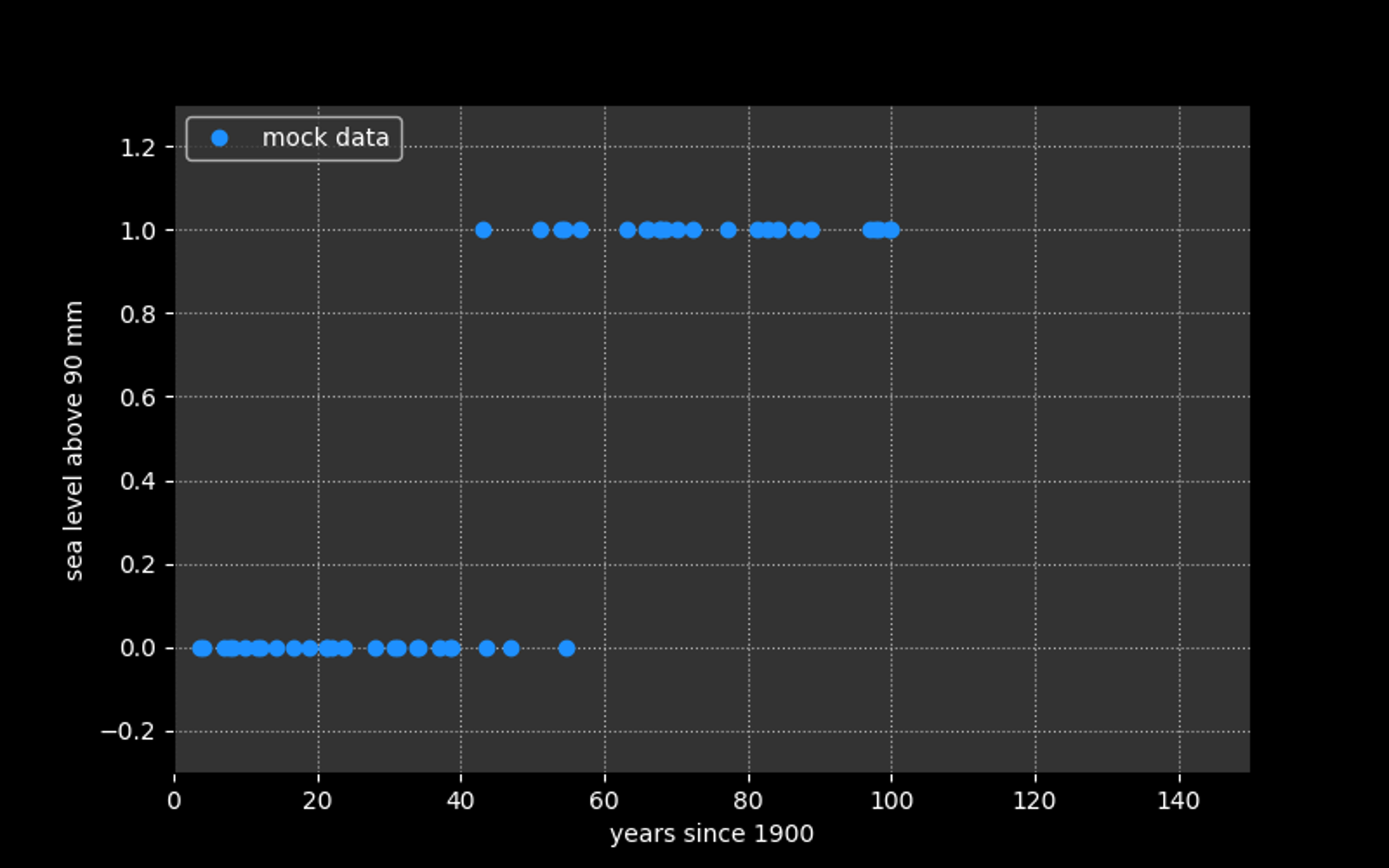

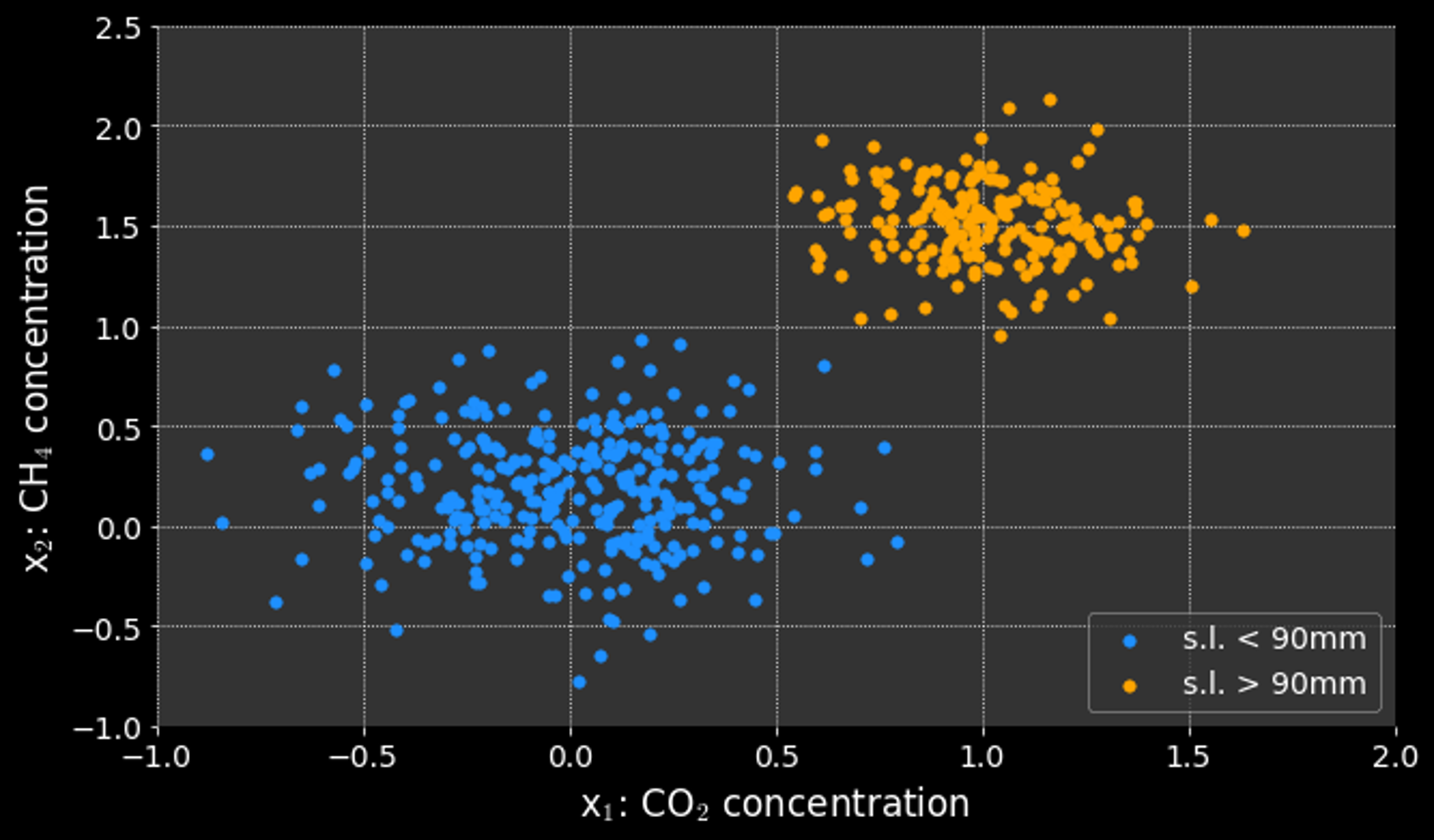

Modeling data for which the target consists of binary values


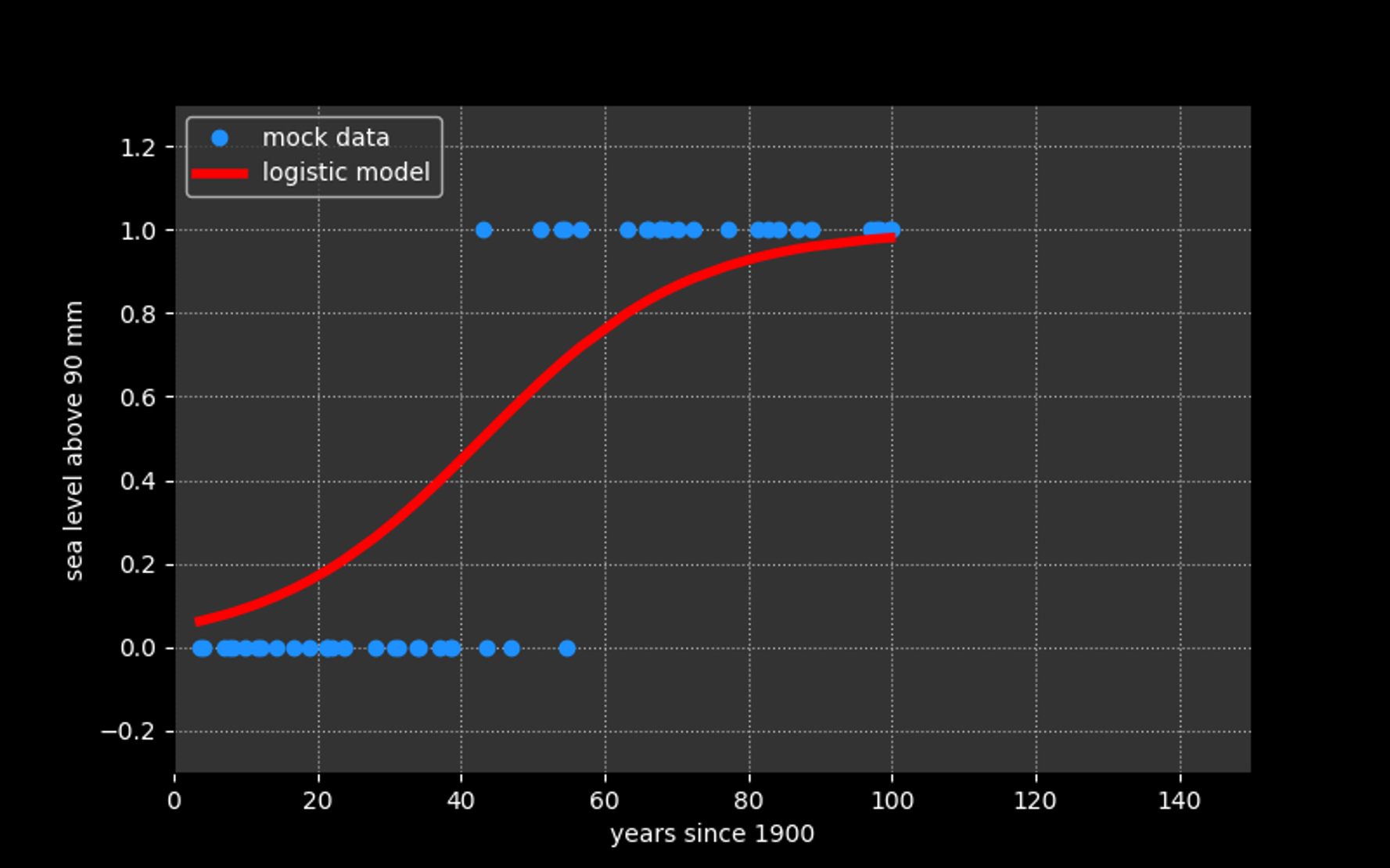

In these cases we can model our data using the logistic function*:

$$p(x) = \frac{1}{1 + e^{-(mx + b)}}$$

* to be interpreted as the probability that the target is True (=1)


ML PARADIGM

parameters: m and b

metric: log-loss (binary cross-entropy), i.e. maximize the likelihood of the observed labels

algorithm: SGD (or similar)
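As a rough illustration of this ML paradigm, the sketch below fits m and b for a single input feature. It uses plain stochastic gradient descent on the log-loss and assumes 0/1 targets. The toy data, learning rate, epoch count, and helper names (`fit_logistic_sgd`, `log_loss`) are hypothetical choices and do not come from these slides.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function: maps any real z to a probability in (0, 1)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def log_loss(xs, ys, m, b):
    # Mean binary cross-entropy of p(x) = sigmoid(m*x + b) against 0/1 targets
    eps = 1e-12
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(m * x + b)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(xs)

def fit_logistic_sgd(xs, ys, lr=0.1, epochs=500, seed=0):
    # Plain SGD: visit the points in a shuffled order each epoch, one update per point
    rng = random.Random(seed)
    m, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            # Per-point gradient of the log-loss: (p - y) * x for m, (p - y) for b
            err = sigmoid(m * xs[i] + b) - ys[i]
            m -= lr * err * xs[i]
            b -= lr * err
    return m, b

# Hypothetical toy data: one feature, target 0 for small x and 1 for large x
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

m, b = fit_logistic_sgd(xs, ys)
predictions = [1 if sigmoid(m * x + b) >= 0.5 else 0 for x in xs]
print(f"m = {m:.2f}, b = {b:.2f}, log-loss = {log_loss(xs, ys, m, b):.4f}")
print("predictions:", predictions)
```

Every training point updates m and b here. This is the "all points influence the decision" behaviour that the SVM discussion contrasts with later.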


Support Vector Machines (SVM)

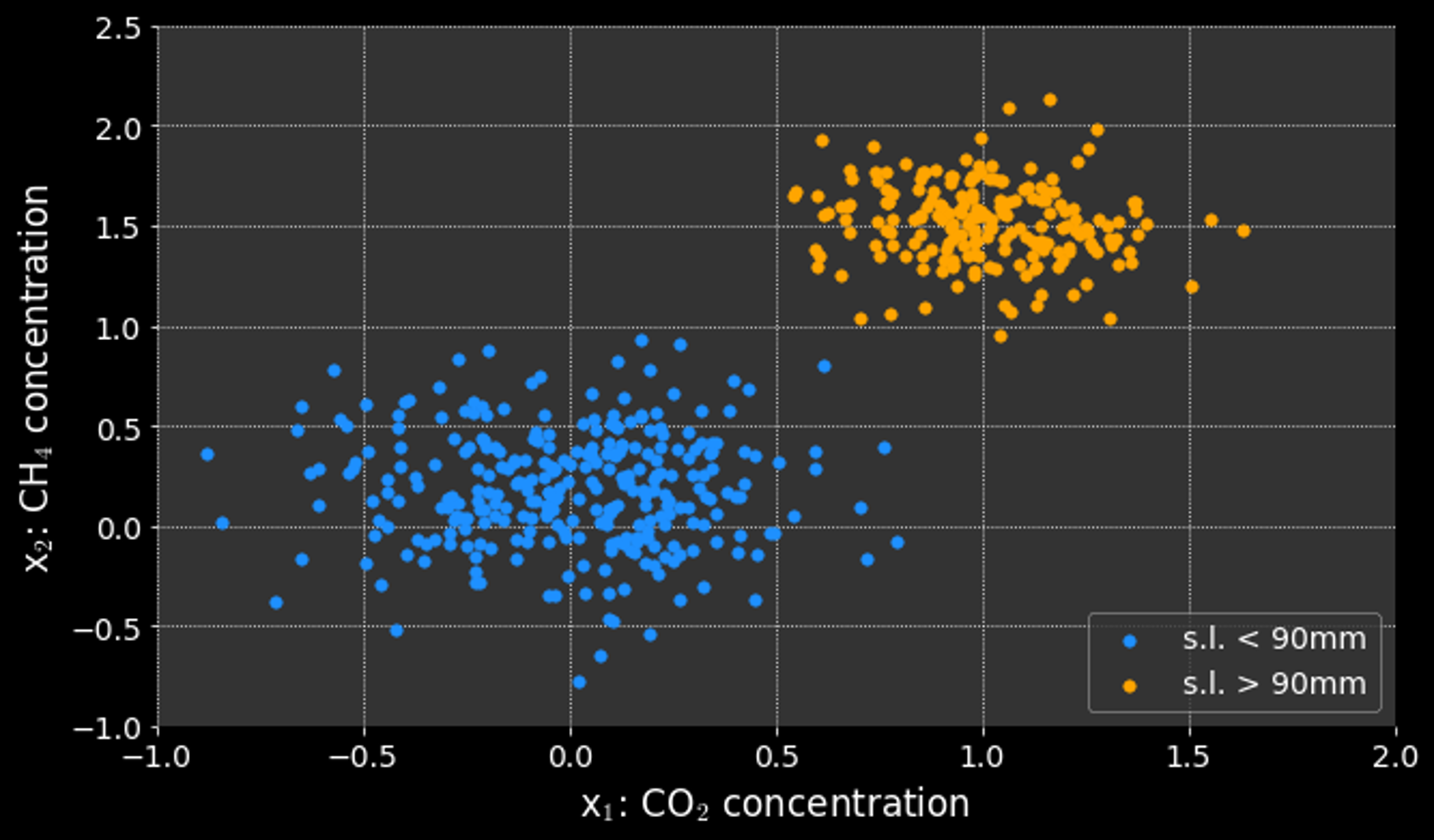

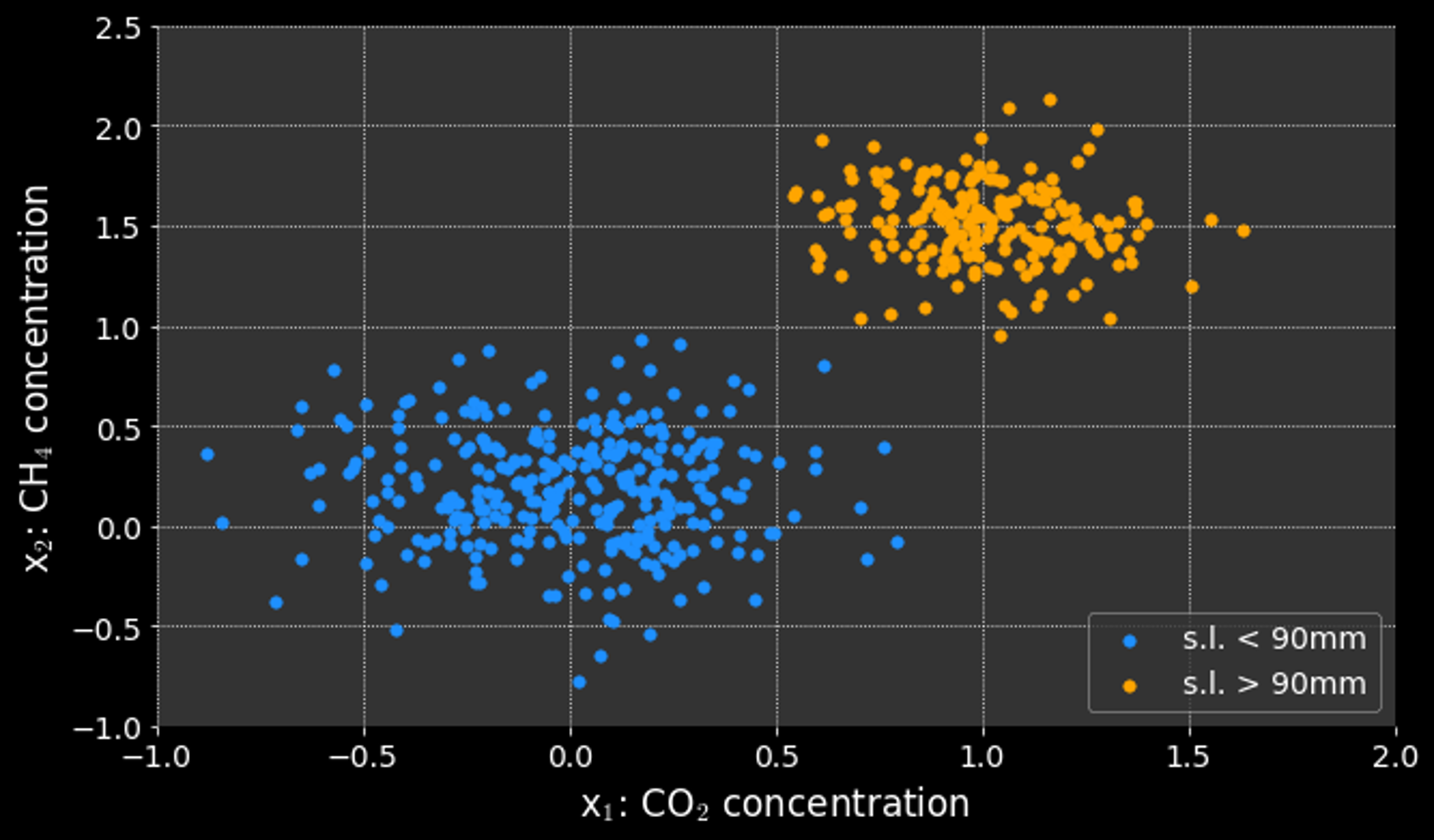

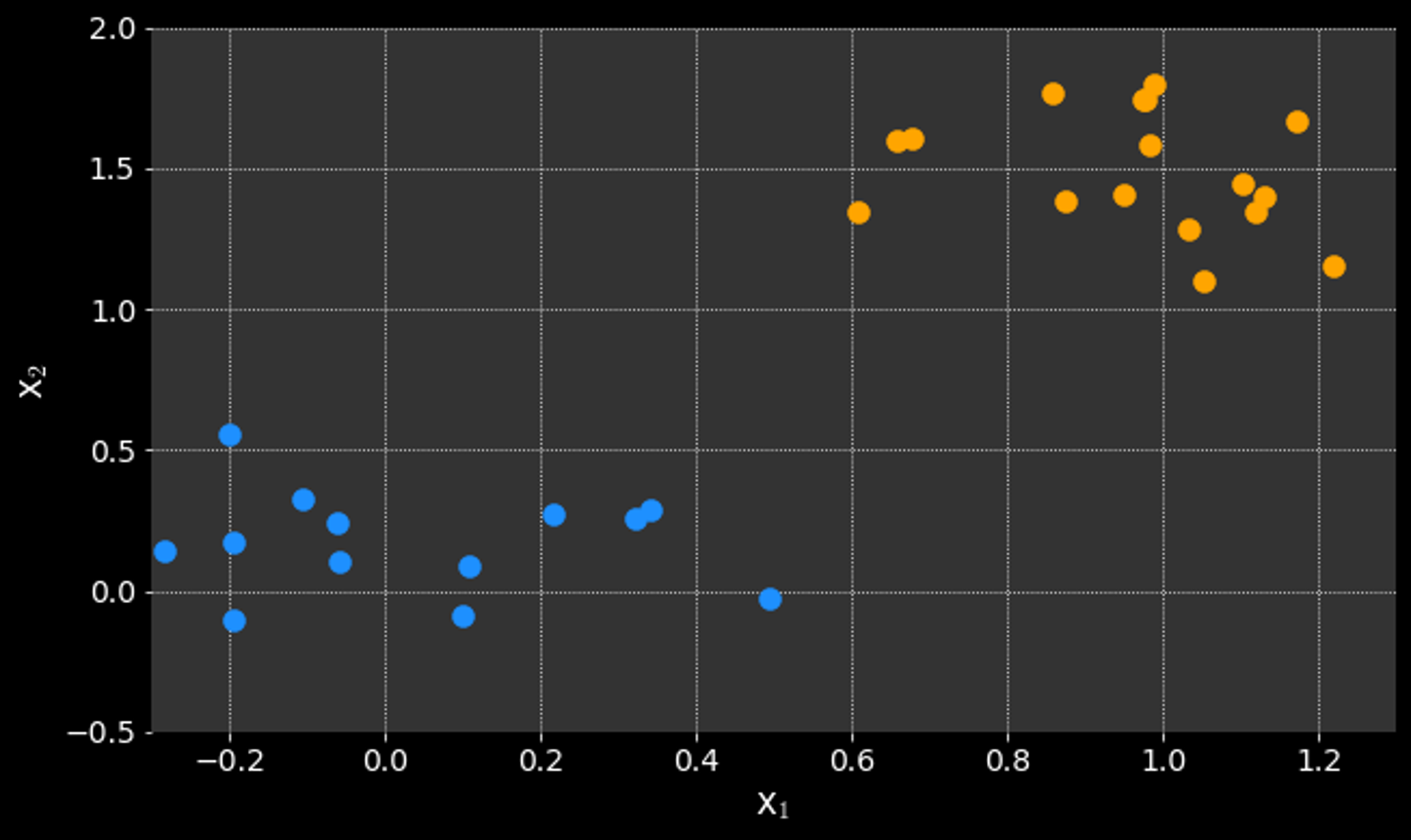

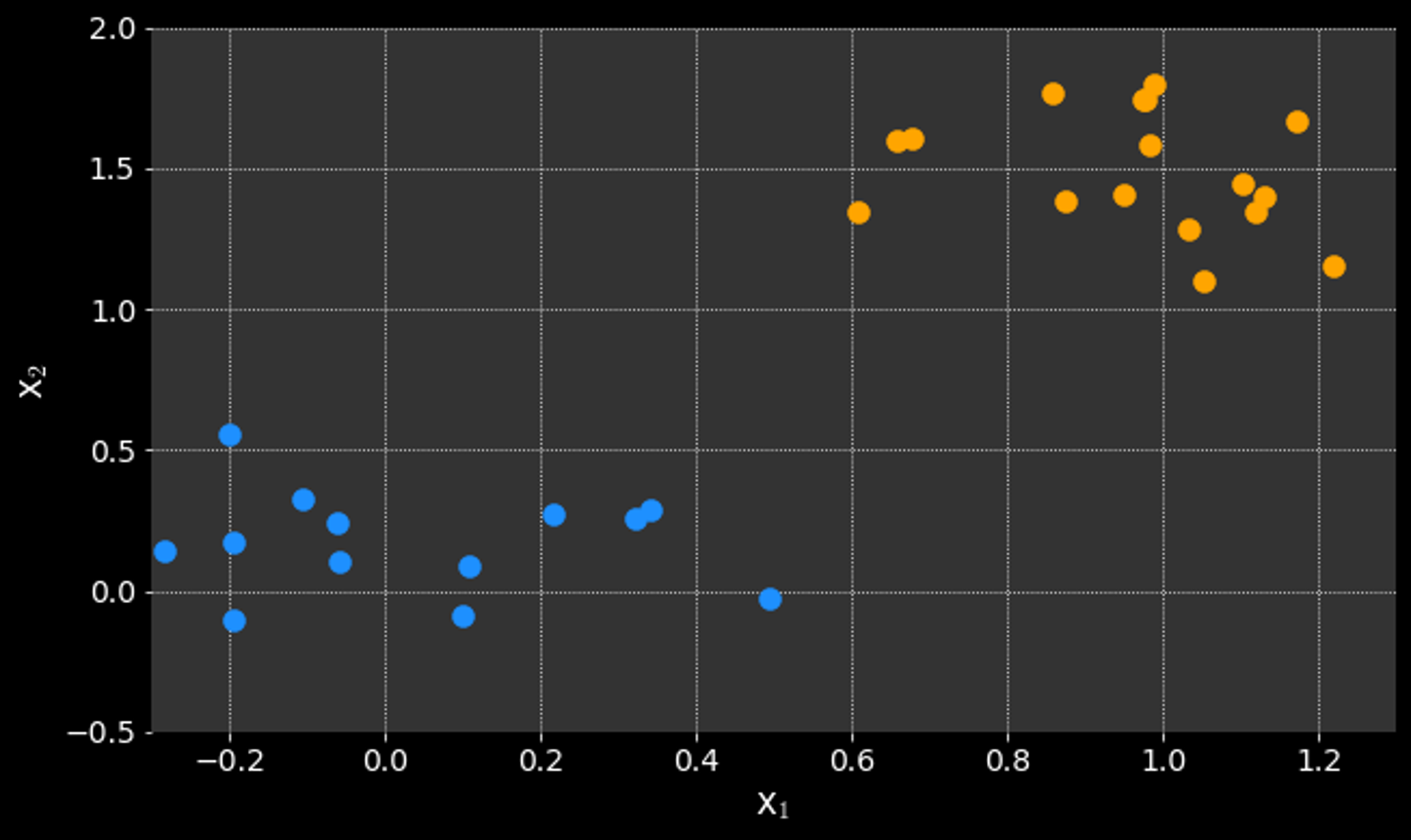

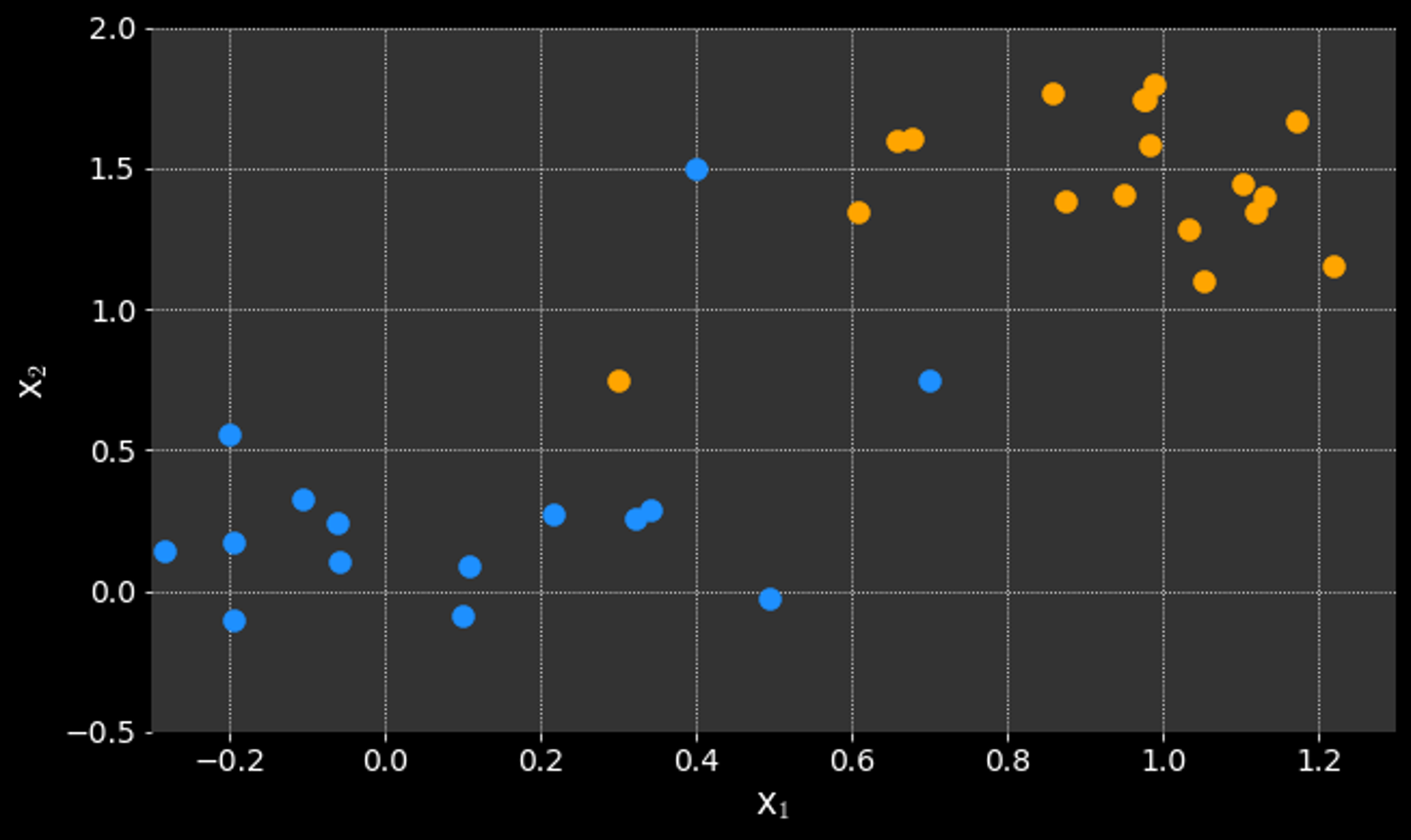

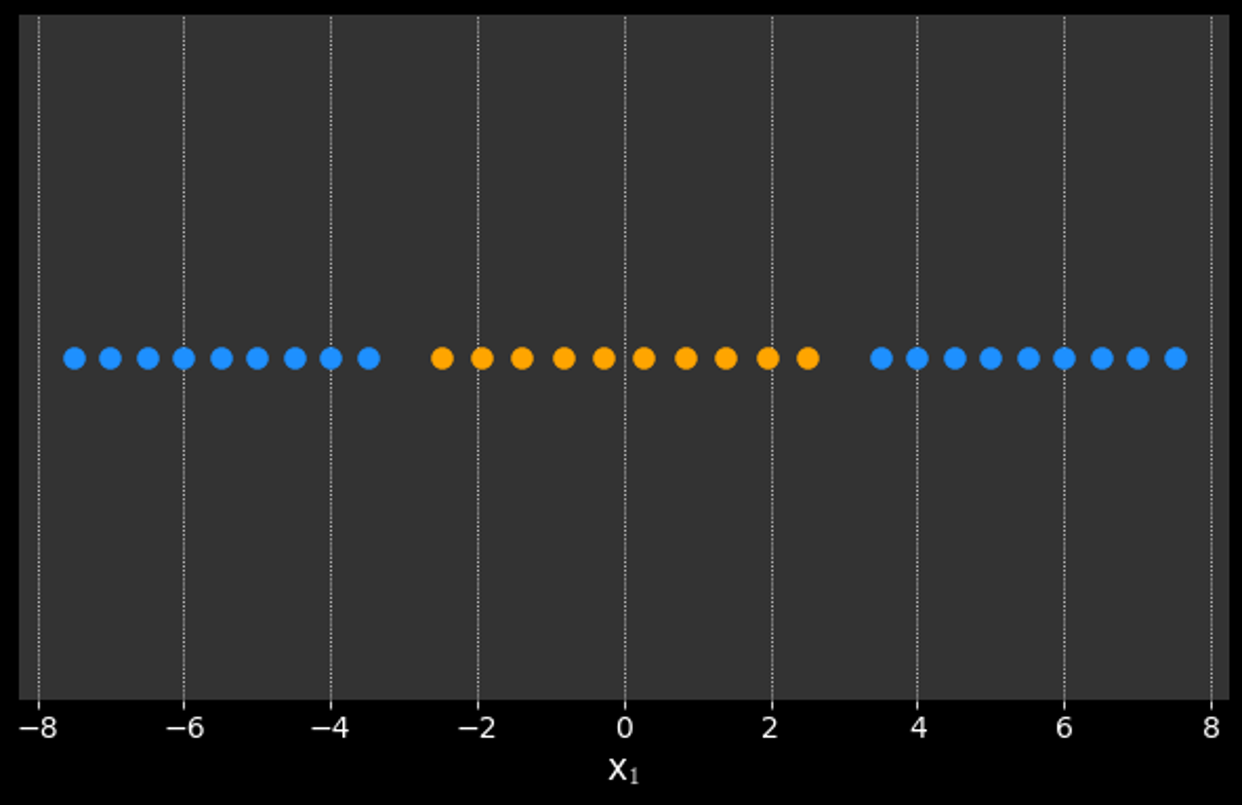


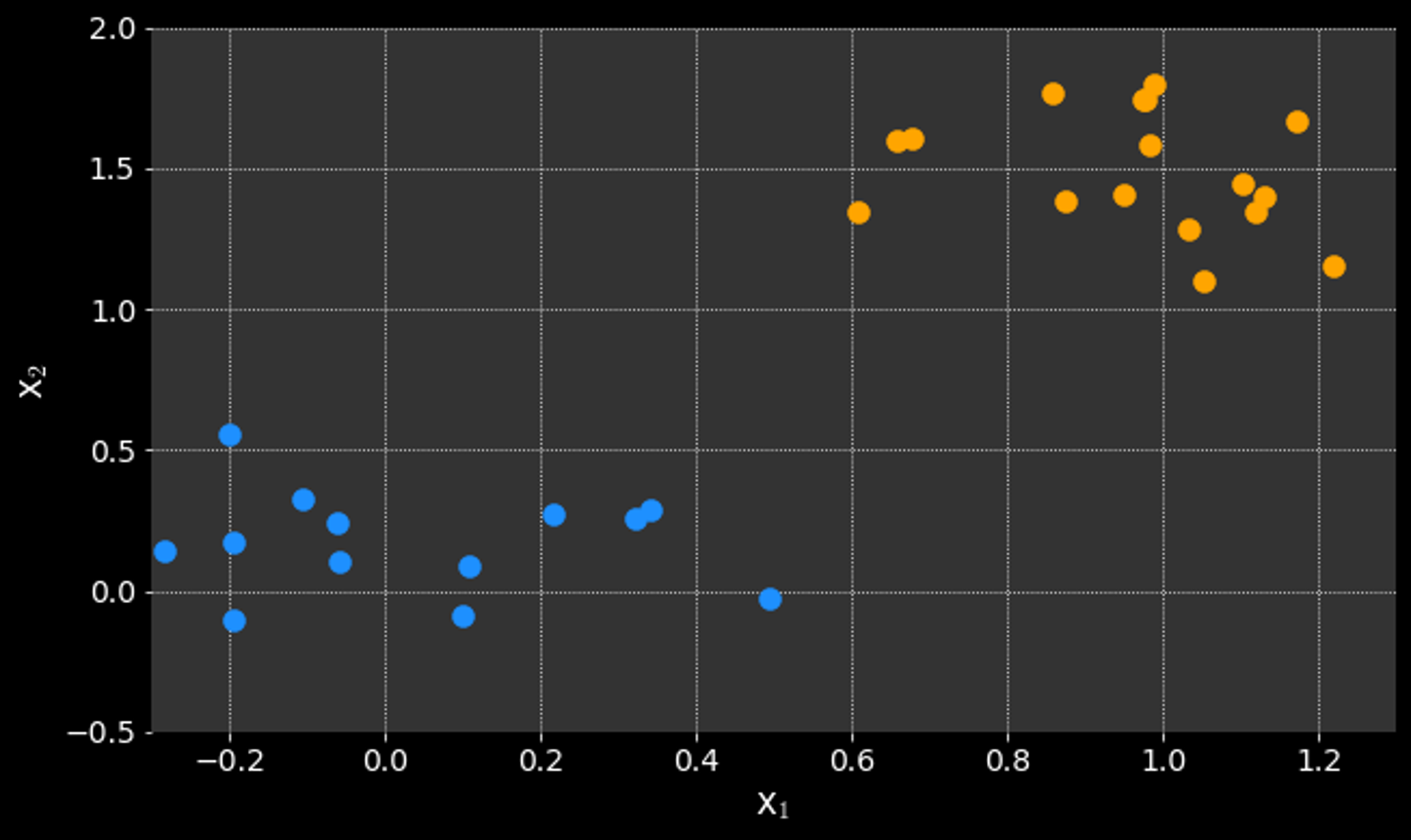

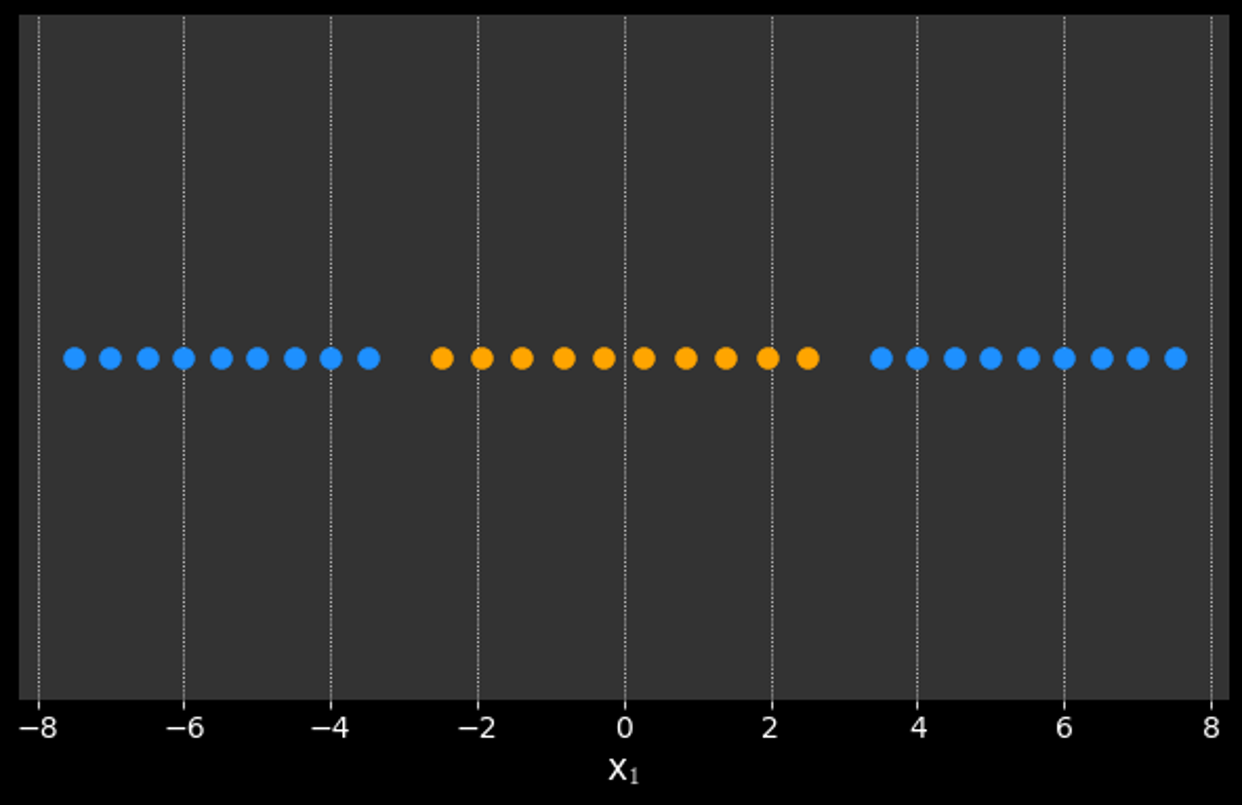


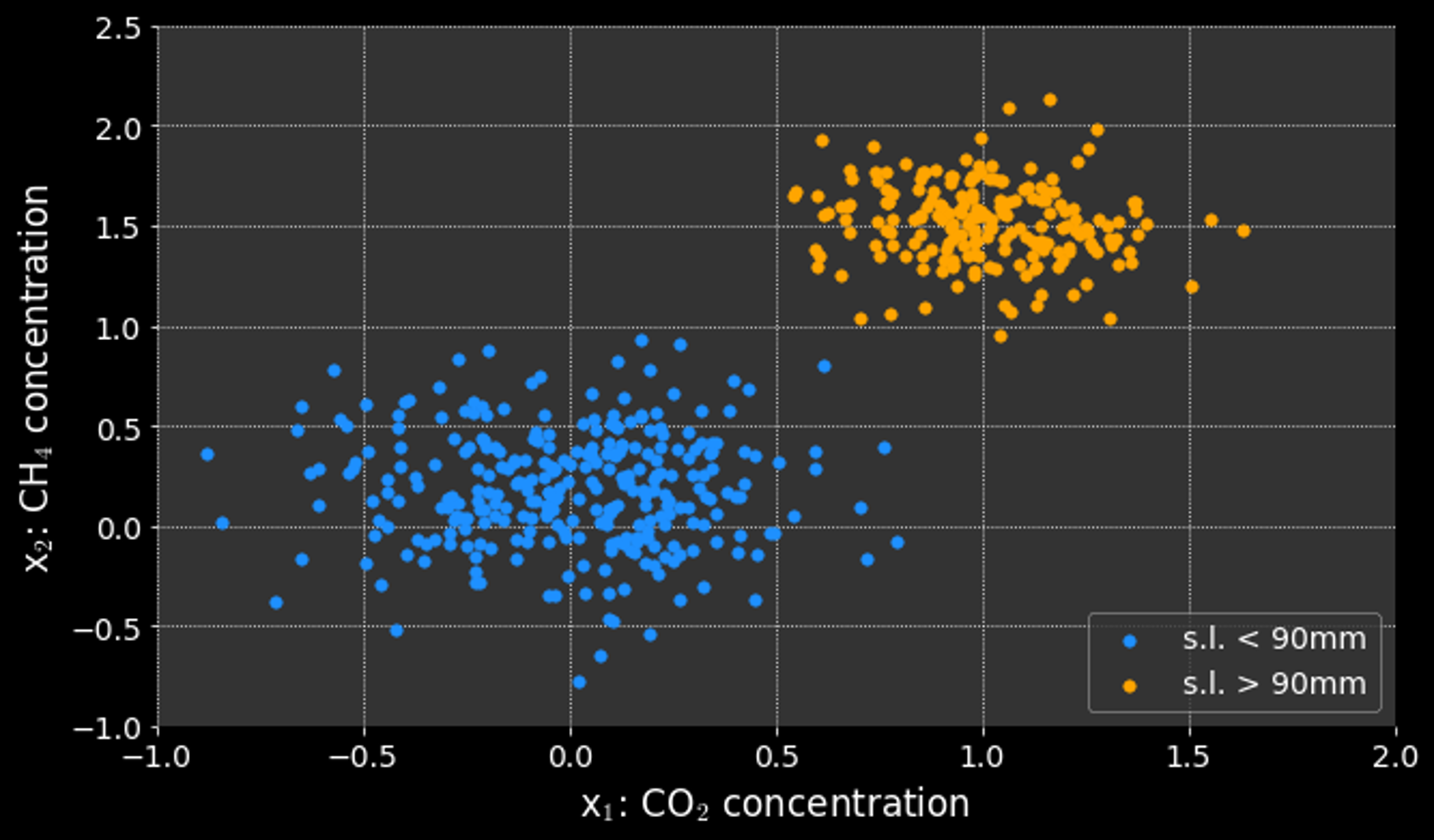

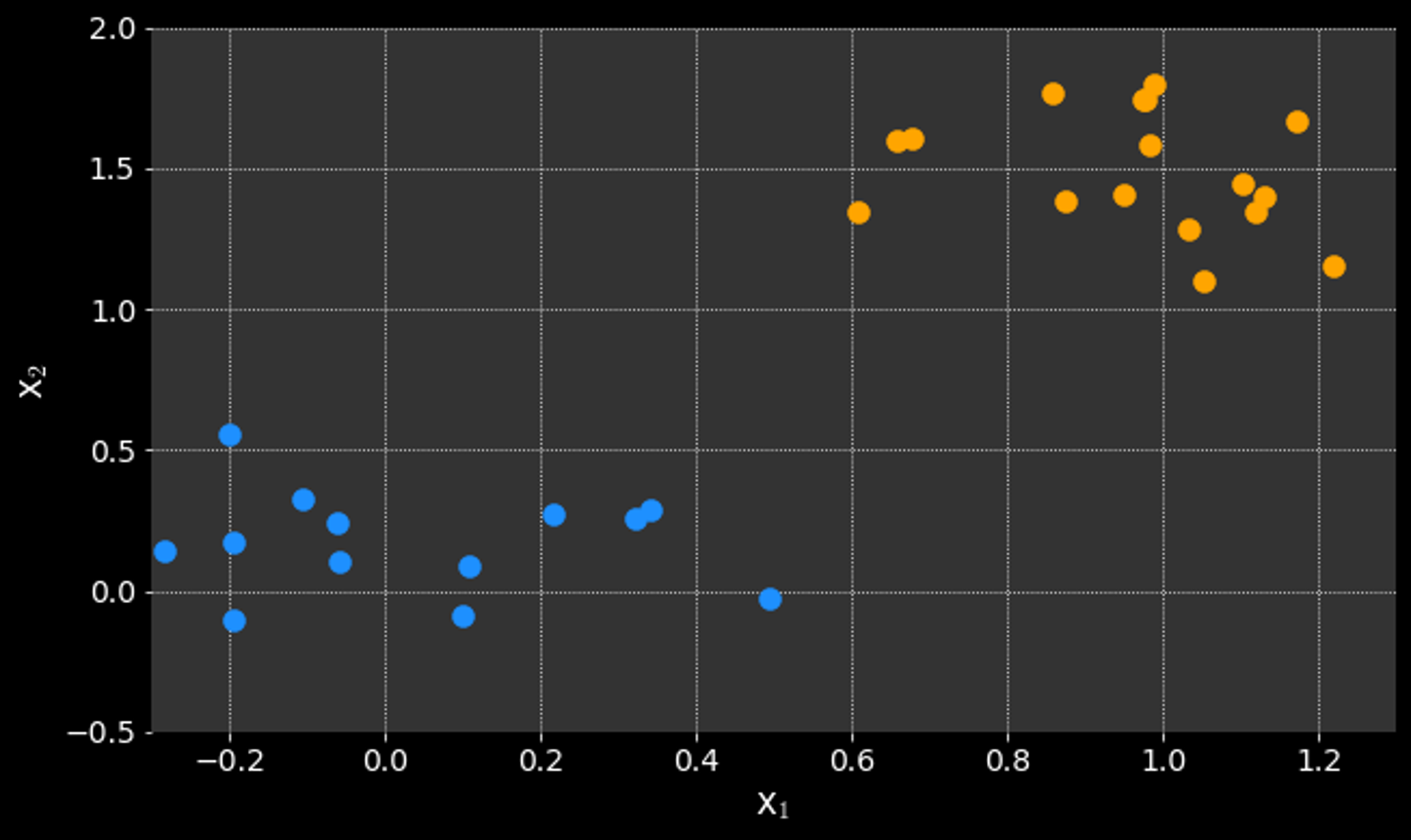

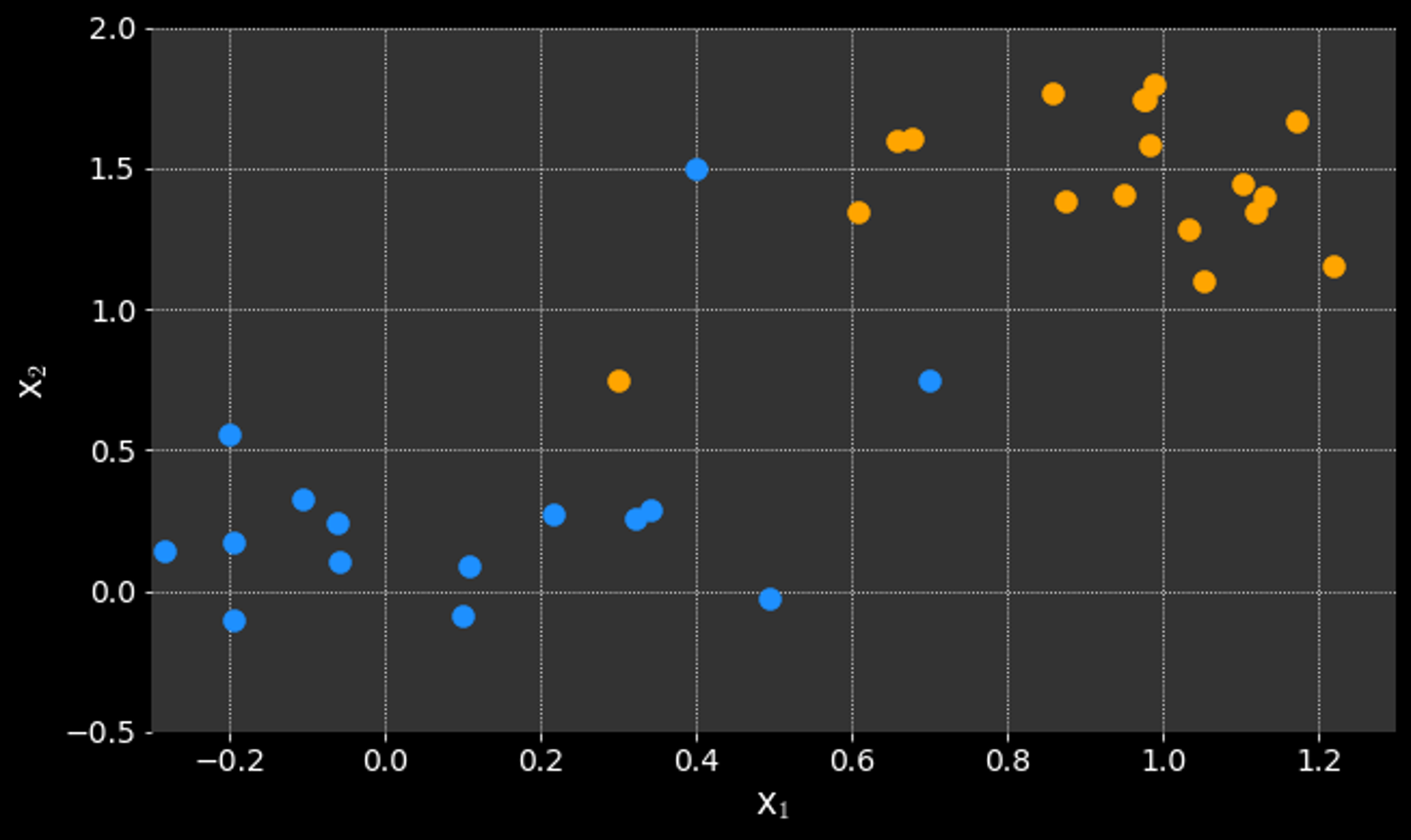


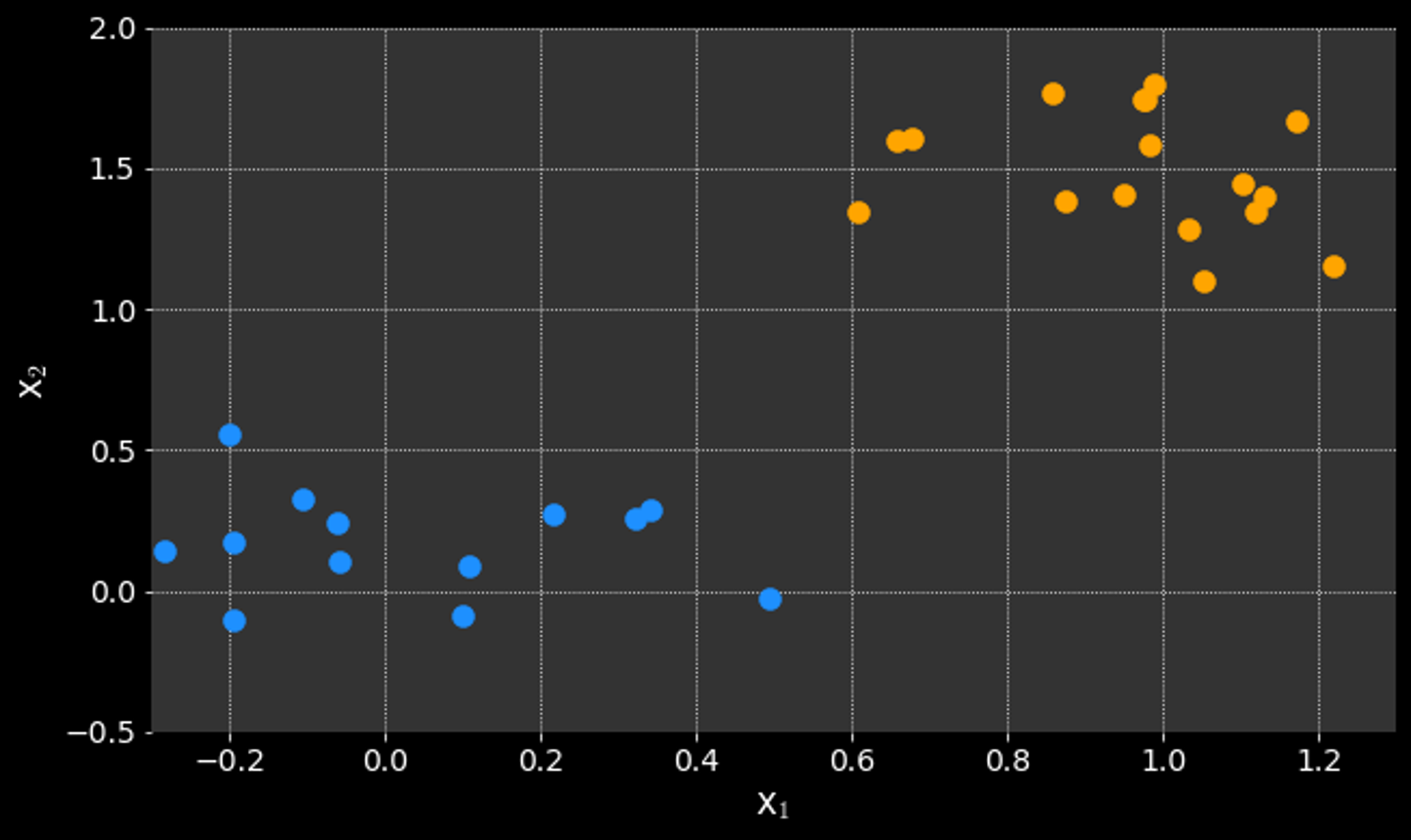

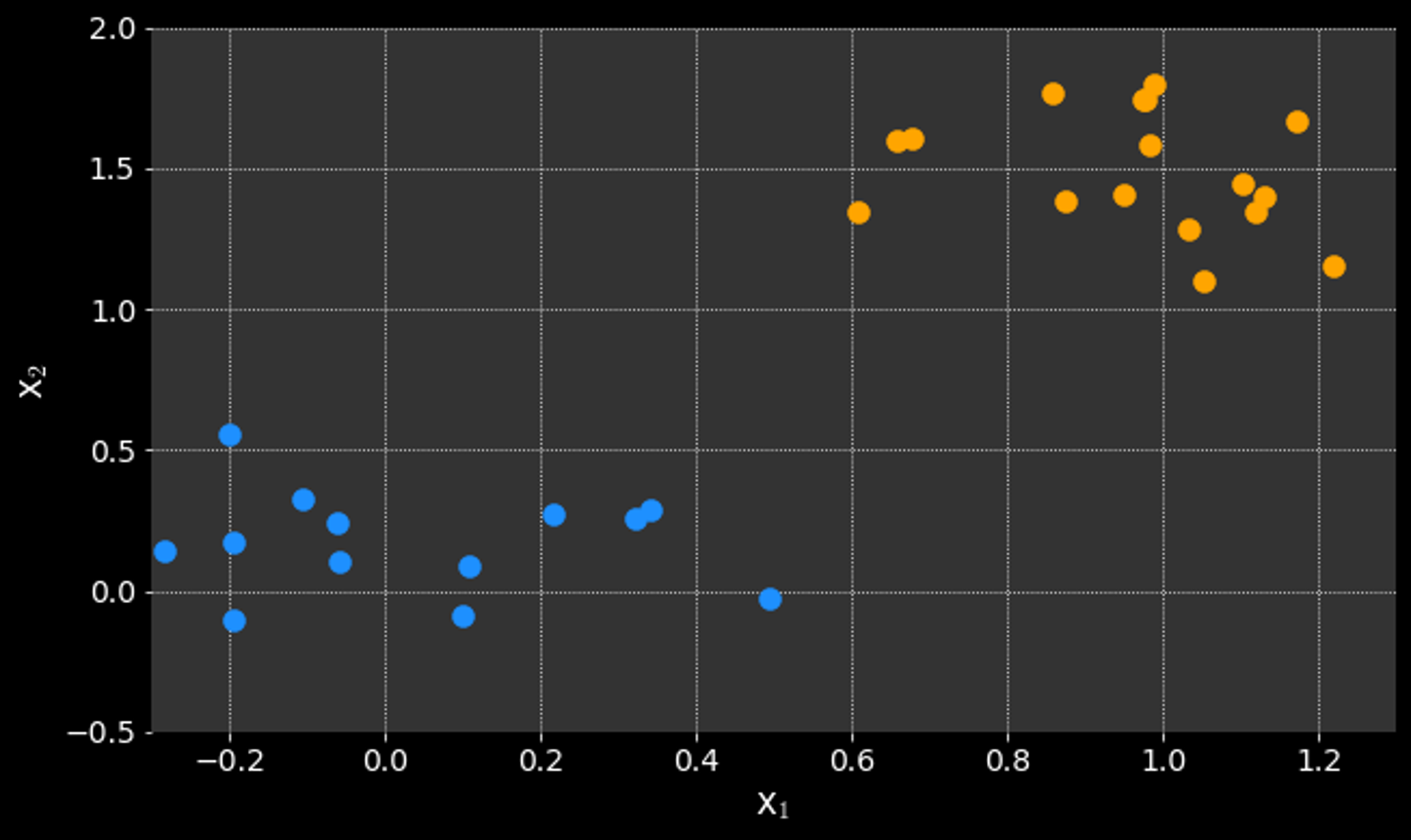


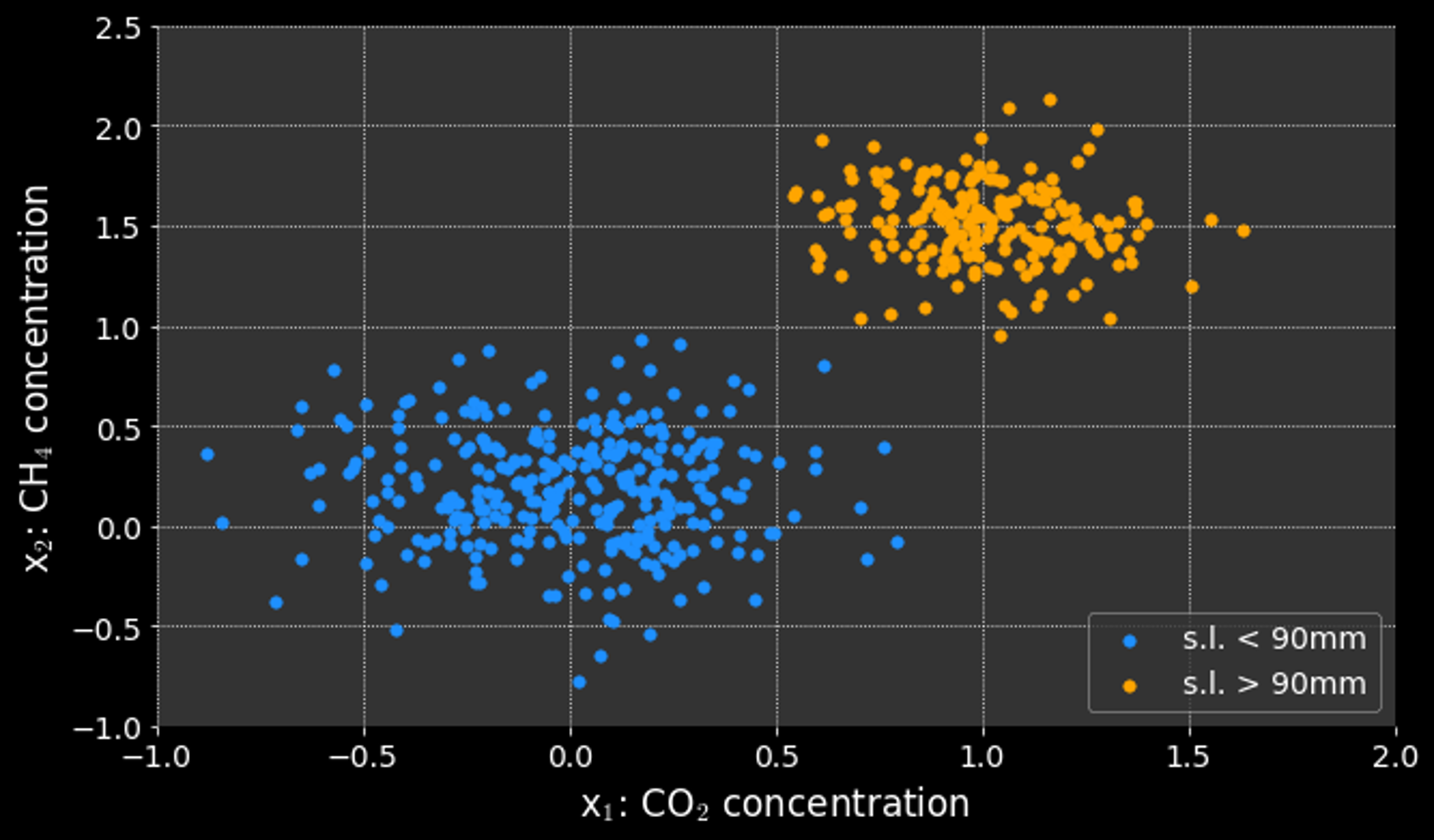

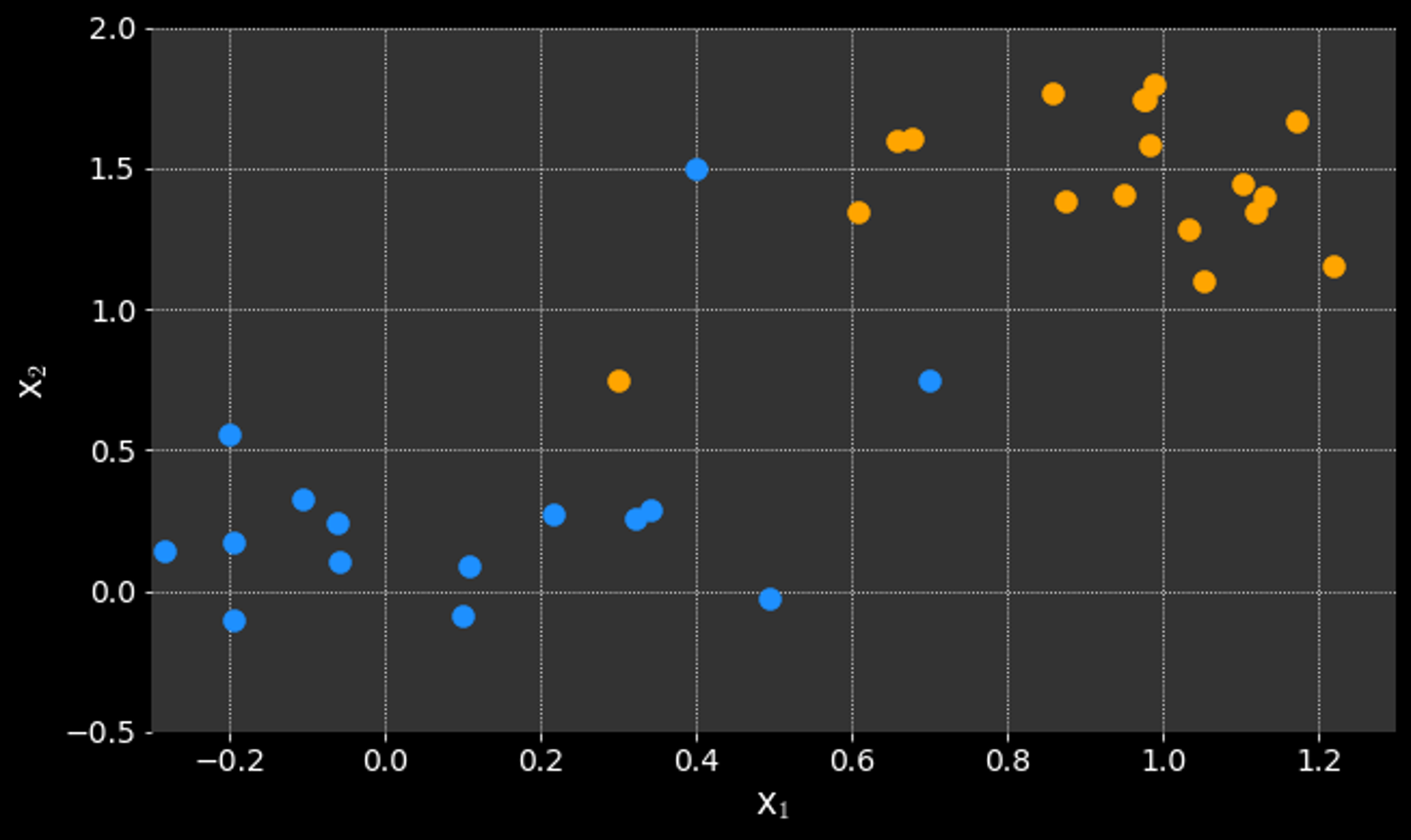


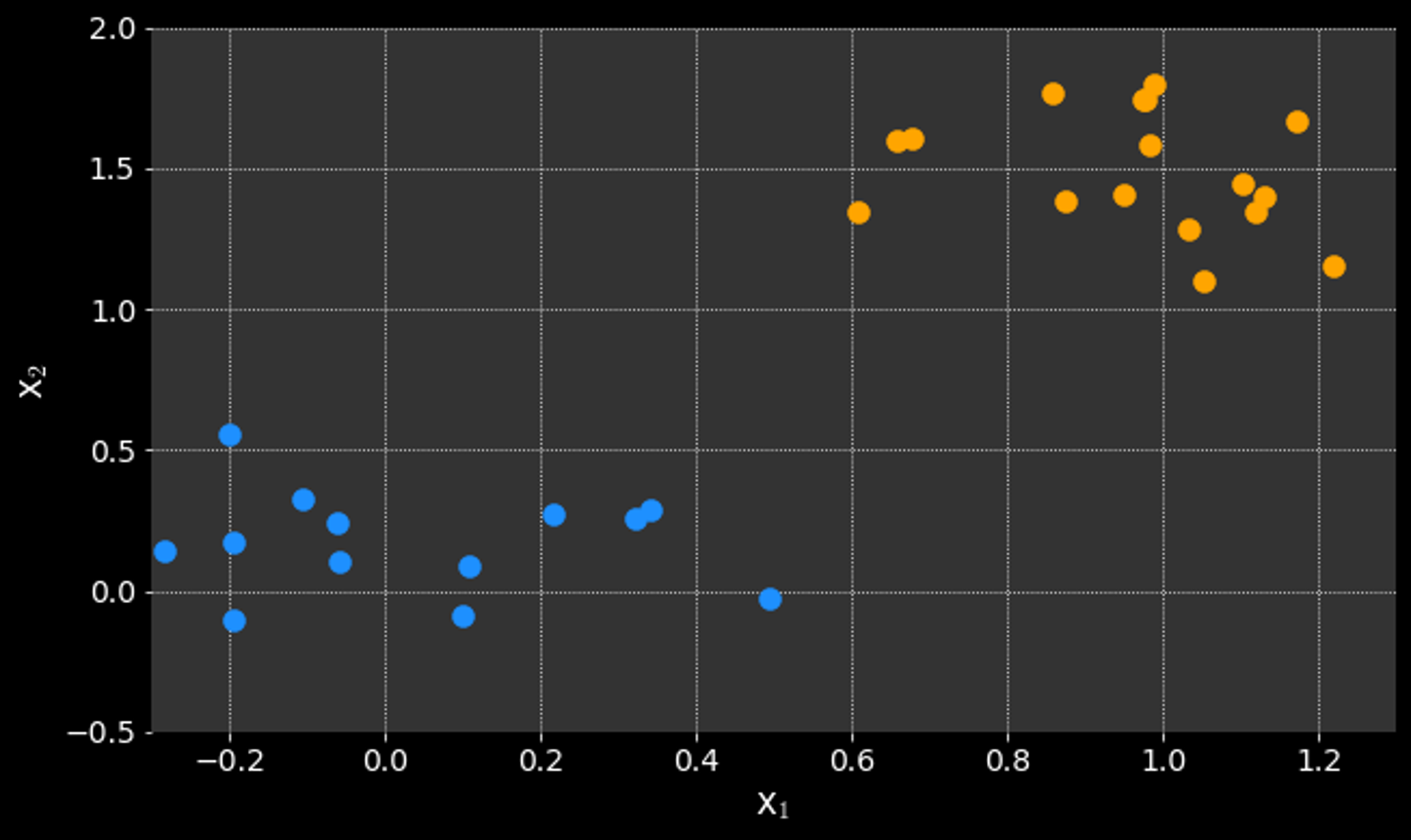


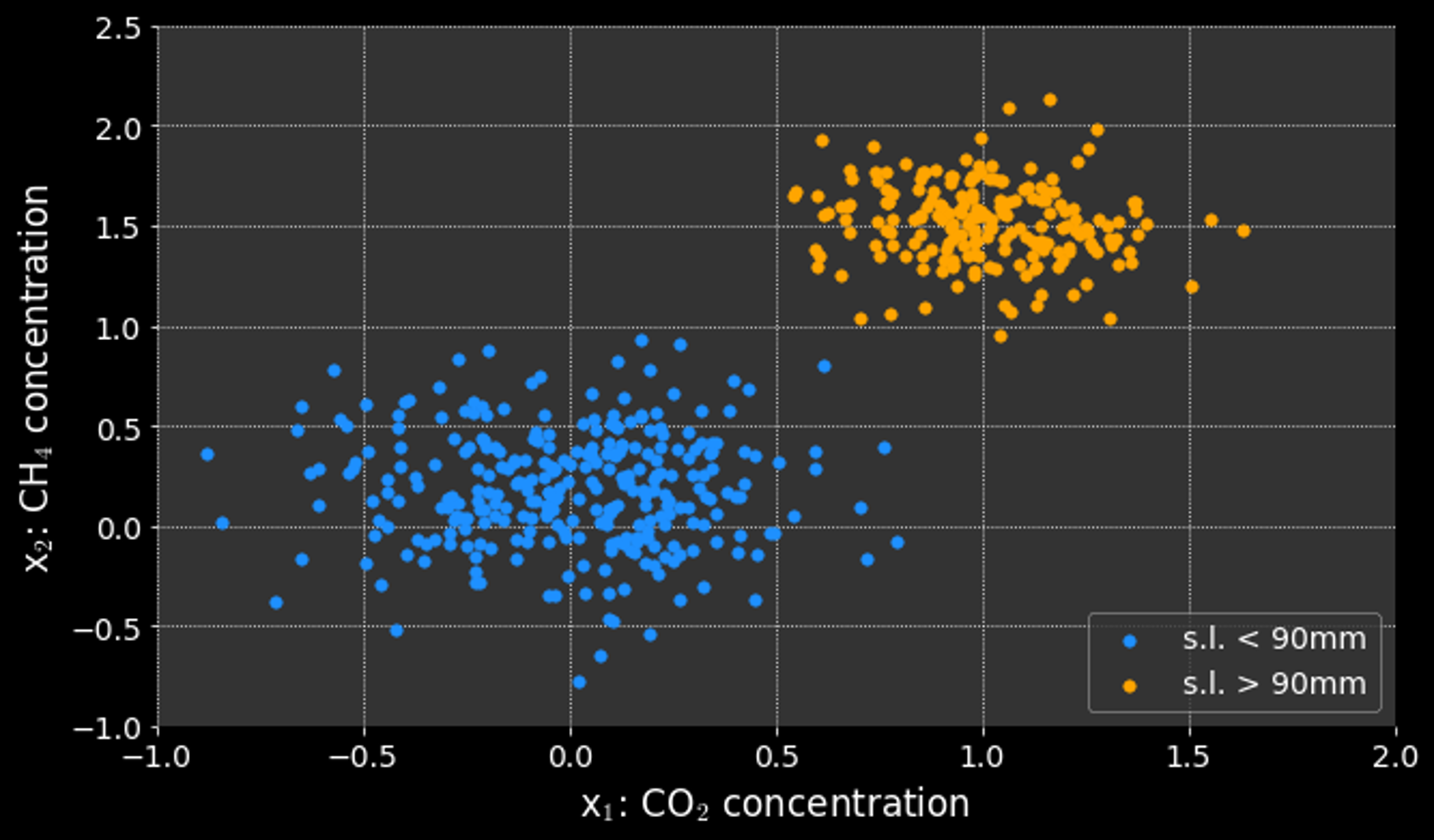

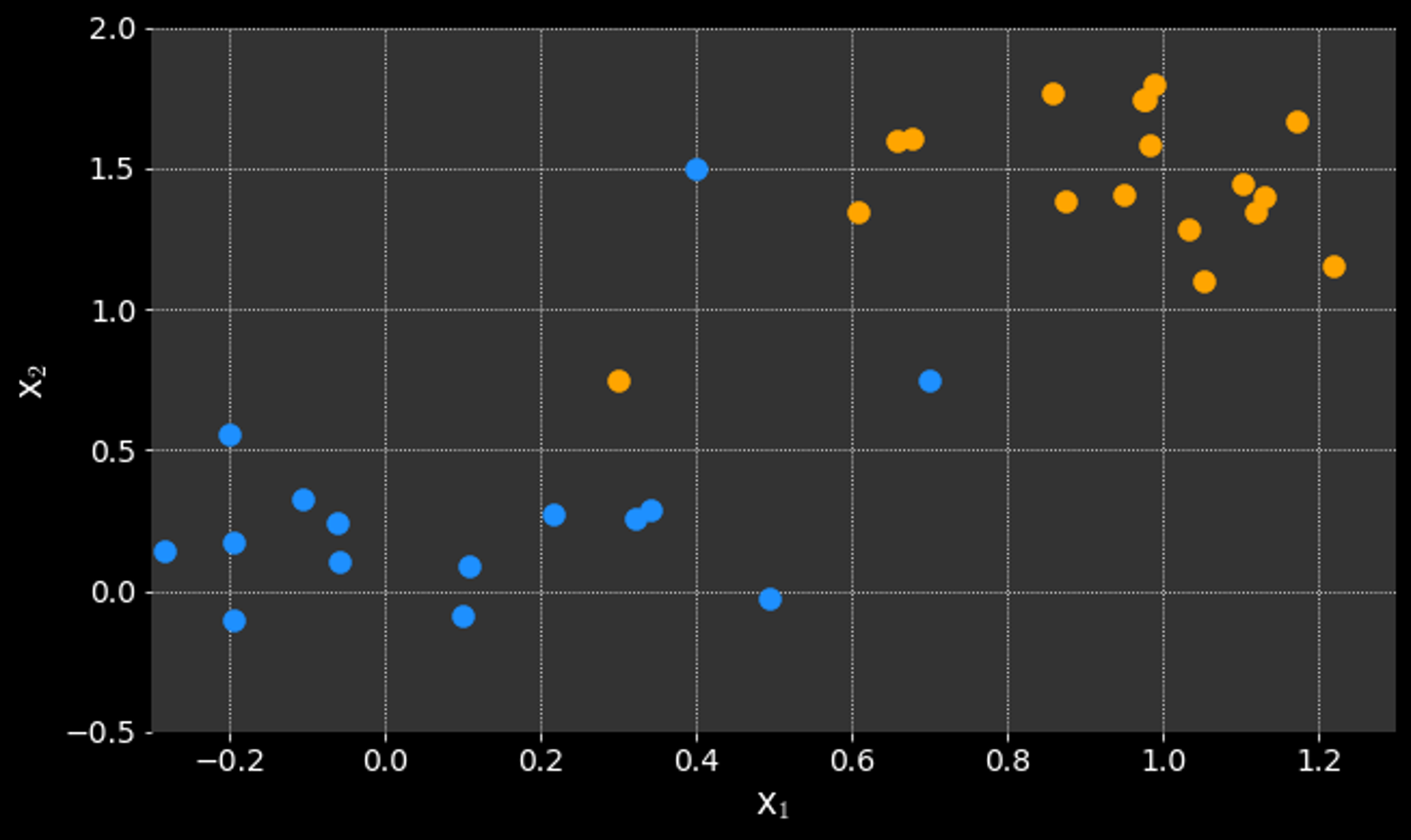


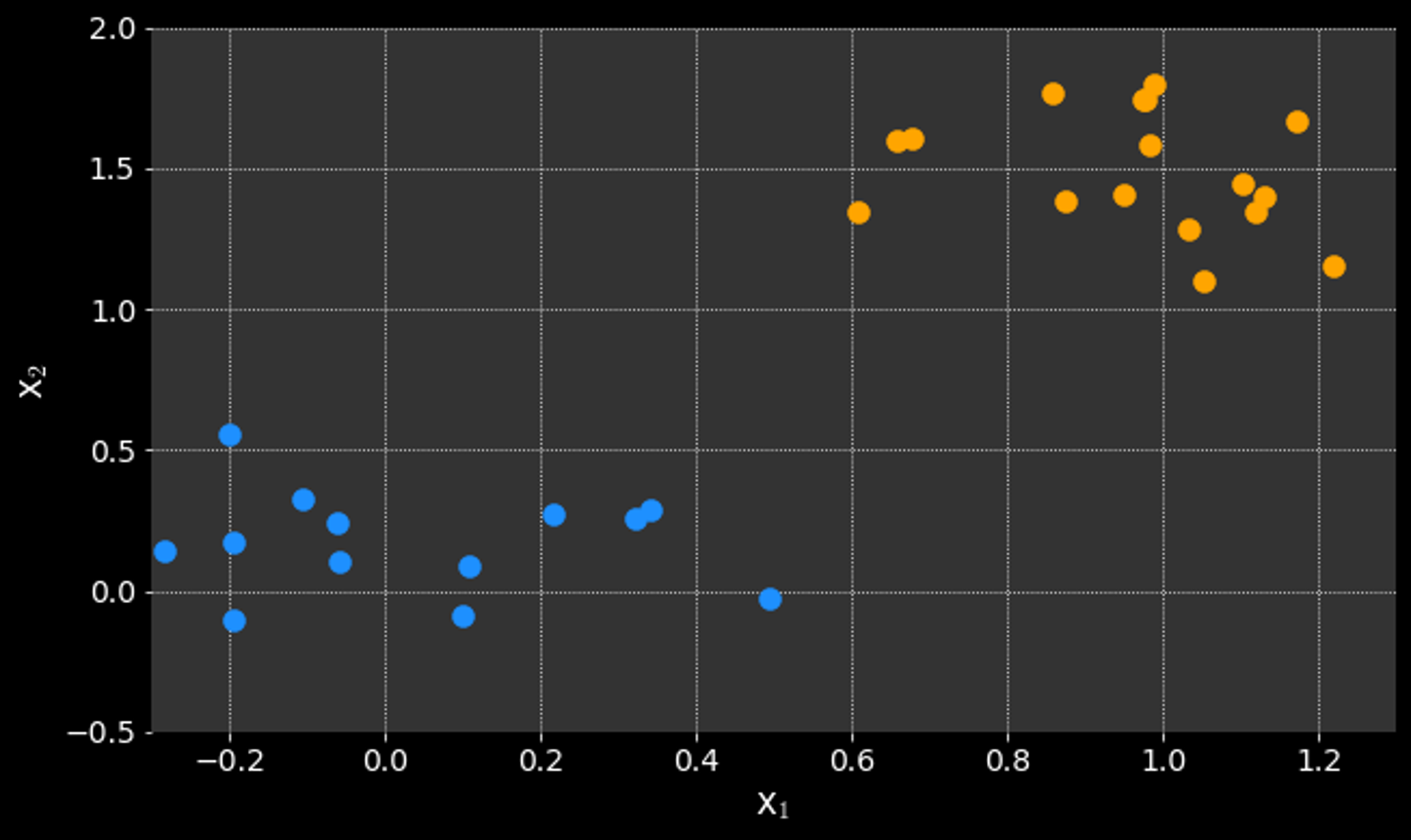

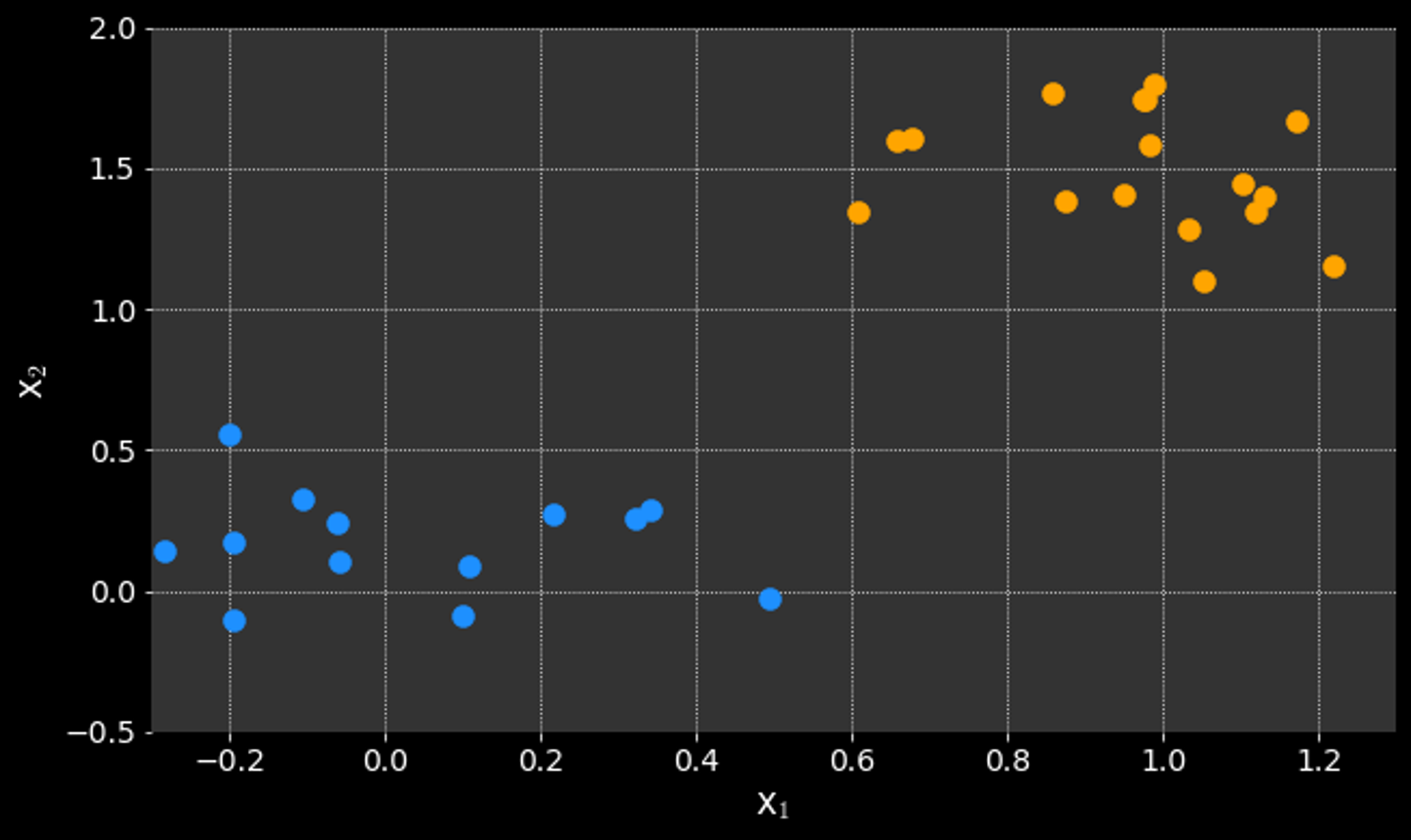

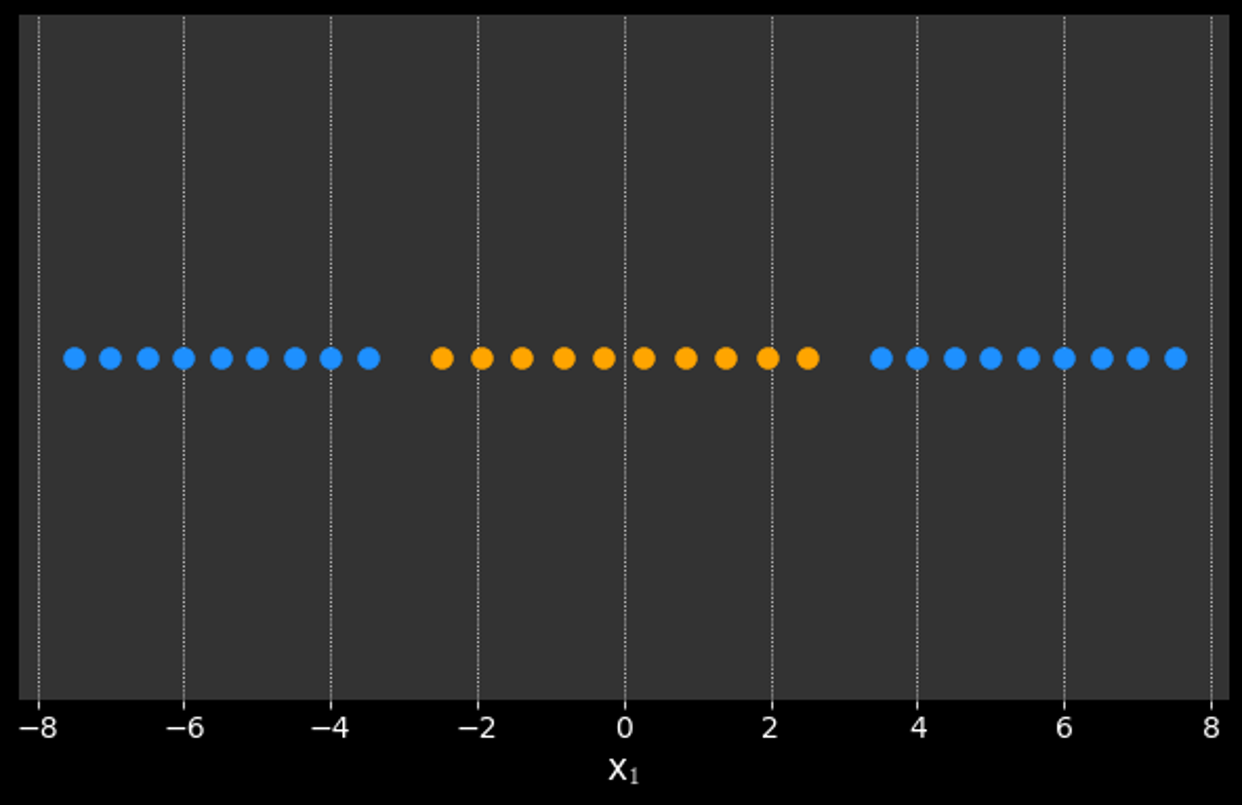


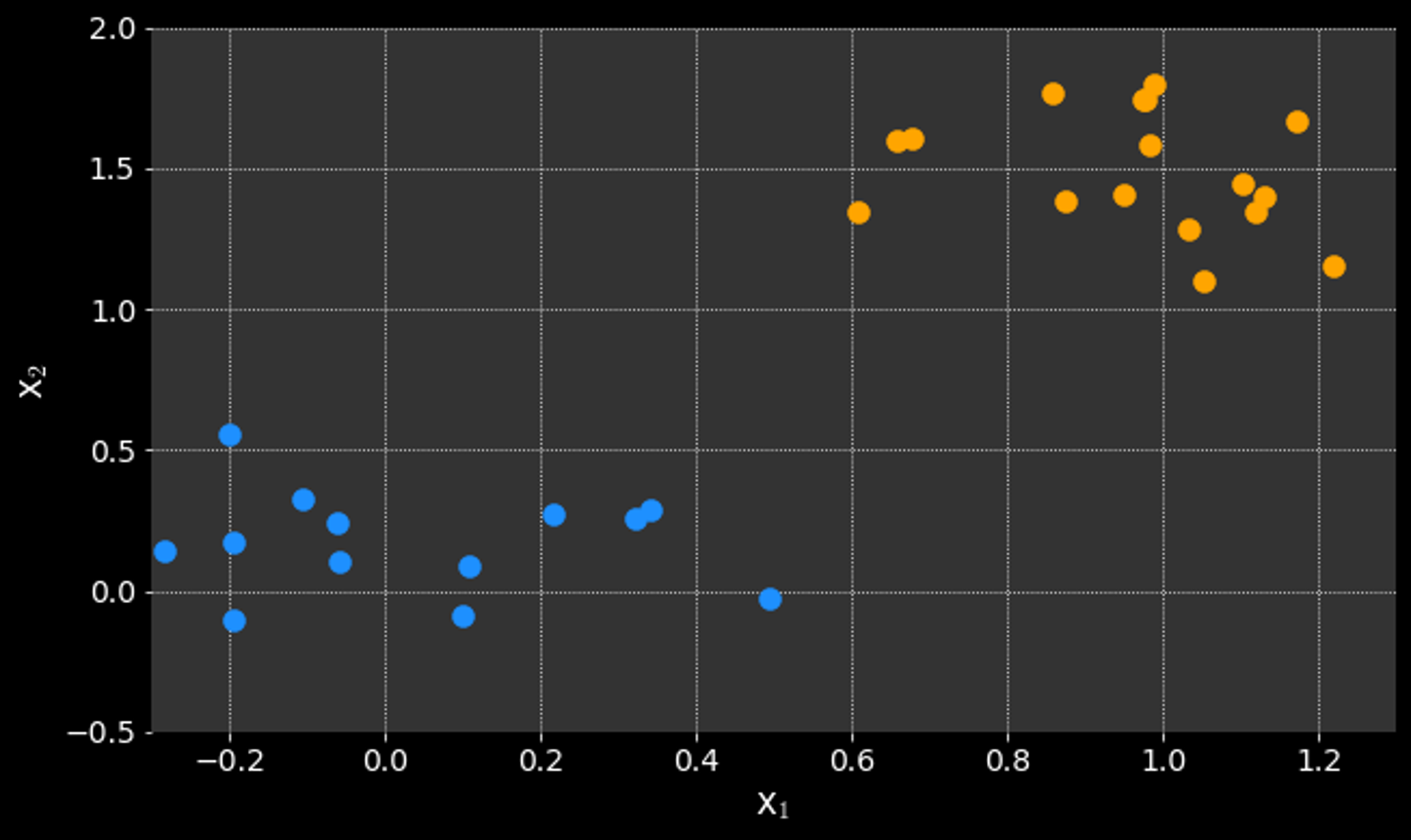


Thinking about the classification problem differently:

Can't we just draw a line that separates the classes?

But... which line is "best"?

Logistic regression is a probabilistic approach:

- finds the probability that a data point falls in a class

Recall: to find the "best" line, we need a metric to optimize.

In Support Vector Machines (SVM):

Metric: maximize the gap (aka "margin") between the classes

(Figure: separating hyperplane and max margin)
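To make the "best line" criterion concrete, the sketch below scores a candidate line $\mathbf{w} \cdot \mathbf{x} + b = 0$ by its distance to the closest training point, with labels coded as +1/-1. The toy points and the two candidate lines are hypothetical. A real SVM searches over all lines rather than comparing a fixed pair.

```python
import math

def geometric_margin(w, b, points, labels):
    # Smallest signed distance y_i * (w . x_i + b) / ||w|| from any point to the line w . x + b = 0.
    # Positive means every point is on its correct side; larger means a wider gap.
    norm = math.hypot(w[0], w[1])
    return min(y * (w[0] * x[0] + w[1] * x[1] + b) / norm
               for x, y in zip(points, labels))

# Hypothetical 2-D toy data, labels coded as +1 / -1
points = [(1, 1), (2, 1), (1, 2), (4, 4), (5, 4), (4, 5)]
labels = [-1, -1, -1, 1, 1, 1]

# Two hypothetical candidate lines, both of which separate the classes
line_a = ((1.0, 1.0), -5.5)  # x1 + x2 = 5.5, midway between the closest points of each class
line_b = ((1.0, 0.0), -2.5)  # x1 = 2.5, a vertical line

for name, (w, b) in [("A", line_a), ("B", line_b)]:
    print(name, round(geometric_margin(w, b, points, labels), 3))
```

Both lines classify every point correctly. Line A leaves a larger distance to the closest point, so the max-margin criterion prefers it.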


Which points should influence the decision?

Logistic regression:

All points

SVM:

Only the "difficult points" closest to the decision boundary (on the edge of the margin)

Support Vectors:

- Points (vectors from the origin) that would change the decision boundary if moved

- Points that lie exactly on the boundary of the margin

Separating hyperplane:

$$\mathbf{w} \cdot \mathbf{x} + b = 0 \quad \text{or} \quad \mathbf{w}^{\top}\mathbf{x} + b = 0$$

(Figure: support vectors)
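Suppose a hyperplane is scaled so that the closest points satisfy $y_i(\mathbf{w} \cdot \mathbf{x}_i + b) = 1$. The support vectors can then be read off directly, as in this minimal sketch. The toy data is hypothetical, and the values of w and b were worked out by hand for it (the max-margin line x1 + x2 = 5.5); they were not produced by an SVM library. The helper name `support_vectors` is also illustrative.

```python
def support_vectors(w, b, points, labels, tol=1e-9):
    # Points on the margin boundary: y_i * (w . x_i + b) == 1 (up to floating-point tolerance)
    result = []
    for x, y in zip(points, labels):
        score = sum(wj * xj for wj, xj in zip(w, x)) + b
        if abs(y * score - 1.0) <= tol:
            result.append(x)
    return result

# Hypothetical 2-D toy data, labels coded as +1 / -1
points = [(1, 1), (2, 1), (1, 2), (4, 4), (5, 4), (4, 5)]
labels = [-1, -1, -1, 1, 1, 1]

# Max-margin hyperplane for this data, worked out by hand (not by a solver):
# the line x1 + x2 = 5.5, scaled so the closest points give y * (w . x + b) = 1
w, b = (0.4, 0.4), -2.2
print(support_vectors(w, b, points, labels))
```

Moving any of the other points leaves this solution unchanged, as long as they stay outside the margin. This is the sense in which only the support vectors influence the decision.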

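Objective:

Maximize the margin by minimizing:

$$\frac{1}{2}\lVert \mathbf{w} \rVert^2$$

Constraints:

such that $y_i\,(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1$ for every training point $i$, with labels $y_i \in \{-1, +1\}$

The margin boundaries are the hyperplanes $\mathbf{w} \cdot \mathbf{x} + b = \pm 1$. They lie a distance $2 / \lVert \mathbf{w} \rVert$ apart, so minimizing $\lVert \mathbf{w} \rVert$ maximizes the margin. The support vectors are the points for which the constraint holds with equality.

(Figure: separating hyperplane and support vectors)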
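The objective and constraints can be checked numerically for a given (w, b). The sketch below tests the hard-margin constraints and reports the margin width 2/||w|| for three candidates. The data, the candidates, and the helper names are hypothetical, chosen by hand for illustration.

```python
import math

def satisfies_hard_margin(w, b, points, labels, tol=1e-9):
    # Hard-margin constraints: y_i * (w . x_i + b) >= 1 for every training point
    return all(y * (sum(wj * xj for wj, xj in zip(w, x)) + b) >= 1 - tol
               for x, y in zip(points, labels))

def margin_width(w):
    # Distance between the margin boundaries w . x + b = +1 and w . x + b = -1
    return 2.0 / math.sqrt(sum(wj * wj for wj in w))

# Hypothetical toy data (labels coded as +1 / -1)
points = [(1, 1), (2, 1), (1, 2), (4, 4), (5, 4), (4, 5)]
labels = [-1, -1, -1, 1, 1, 1]

# Hypothetical candidates (w, b), chosen by hand for illustration
candidates = [
    ((0.4, 0.4), -2.2),   # line x1 + x2 = 5.5, scaled so the closest points sit on the margin
    ((2.0, 0.0), -5.0),   # vertical line x1 = 2.5: feasible, but a narrower margin
    ((0.2, 0.2), -1.1),   # same line as the first, with w too small: violates a constraint
]
for w, b in candidates:
    print(w, b, satisfies_hard_margin(w, b, points, labels), round(margin_width(w), 3))
```

Shrinking w to (0.2, 0.2) doubles the reported width, but it breaks a constraint. The smallest feasible ||w|| is therefore what defines the maximum margin.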


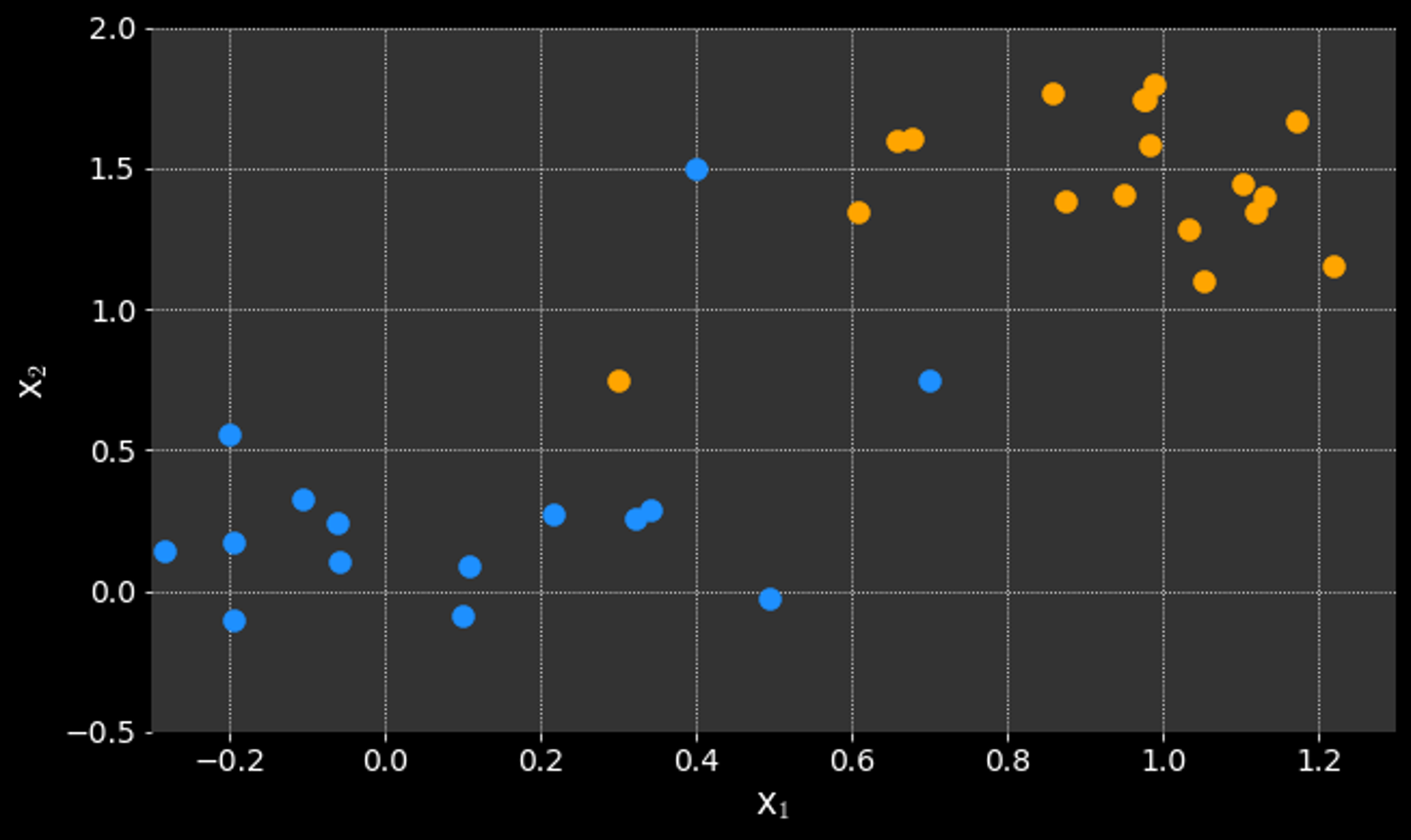


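What if it is not possible to cleanly separate the data?

Minimizing $\frac{1}{2}\lVert \mathbf{w} \rVert^2$ subject to $y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1$ for every point is known as Hard Margin SVM.

Allow for some errors using Soft Margin SVM. Relax the constraints to $y_i(\mathbf{w} \cdot \mathbf{x}_i + b) \ge 1 - \xi_i$ with $\xi_i \ge 0$, modify the objective, and minimize:

$$\frac{1}{2}\lVert \mathbf{w} \rVert^2 + c \sum_{i} \xi_i$$

where:

- $\xi_i = 0$ for all correctly classified points outside the margin

- $\xi_i > 0$ is proportional to the distance past the margin boundary for points inside the margin or incorrectly classified ($\xi_i > 1$ means the point is misclassified)

$c$ is the penalty term:

- Large $c$ penalizes mistakes heavily, creating a hard(er) margin

- Small $c$ lowers the penalty and allows errors, creating a soft(er) margin

(Figure: max margin, with distances d1, d2, d3)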
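The role of c shows up when the soft-margin objective is evaluated for two hand-picked candidate hyperplanes on a small data set with one outlier. The slack uses the standard hinge form $\xi_i = \max(0, 1 - y_i(\mathbf{w} \cdot \mathbf{x}_i + b))$. The data, the two candidates, and the c values are hypothetical. A real soft-margin SVM optimizes over all (w, b) instead of choosing between two.

```python
def slacks(w, b, points, labels):
    # Hinge-style slack: xi_i = max(0, 1 - y_i * (w . x_i + b))
    # 0 outside the margin, between 0 and 1 inside it, above 1 when misclassified
    return [max(0.0, 1.0 - y * (sum(wj * xj for wj, xj in zip(w, x)) + b))
            for x, y in zip(points, labels)]

def soft_margin_objective(w, b, points, labels, c):
    # 1/2 * ||w||^2 + c * sum of slacks
    return 0.5 * sum(wj * wj for wj in w) + c * sum(slacks(w, b, points, labels))

# Hypothetical toy data: two clusters plus one class -1 outlier at (3.5, 3.5)
points = [(1, 1), (2, 1), (1, 2), (3.5, 3.5), (4, 4), (5, 4), (4, 5)]
labels = [-1, -1, -1, -1, 1, 1, 1]

# Hypothetical hand-picked candidates (w, b)
candidates = {
    "wide": ((0.4, 0.4), -2.2),     # wide margin, but the outlier ends up misclassified
    "narrow": ((2.0, 2.0), -15.0),  # no slack: every point on or outside a much narrower margin
}

preferred = {}
for c in (0.1, 10.0):
    scores = {name: soft_margin_objective(w, b, points, labels, c)
              for name, (w, b) in candidates.items()}
    preferred[c] = min(scores, key=scores.get)
    print(f"c = {c}:", {name: round(s, 2) for name, s in scores.items()}, "->", preferred[c])
```

With c = 0.1, the wide-margin candidate has the lower objective despite misclassifying the outlier. With c = 10, the penalty on its slack dominates and the error-free, narrow-margin candidate wins.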

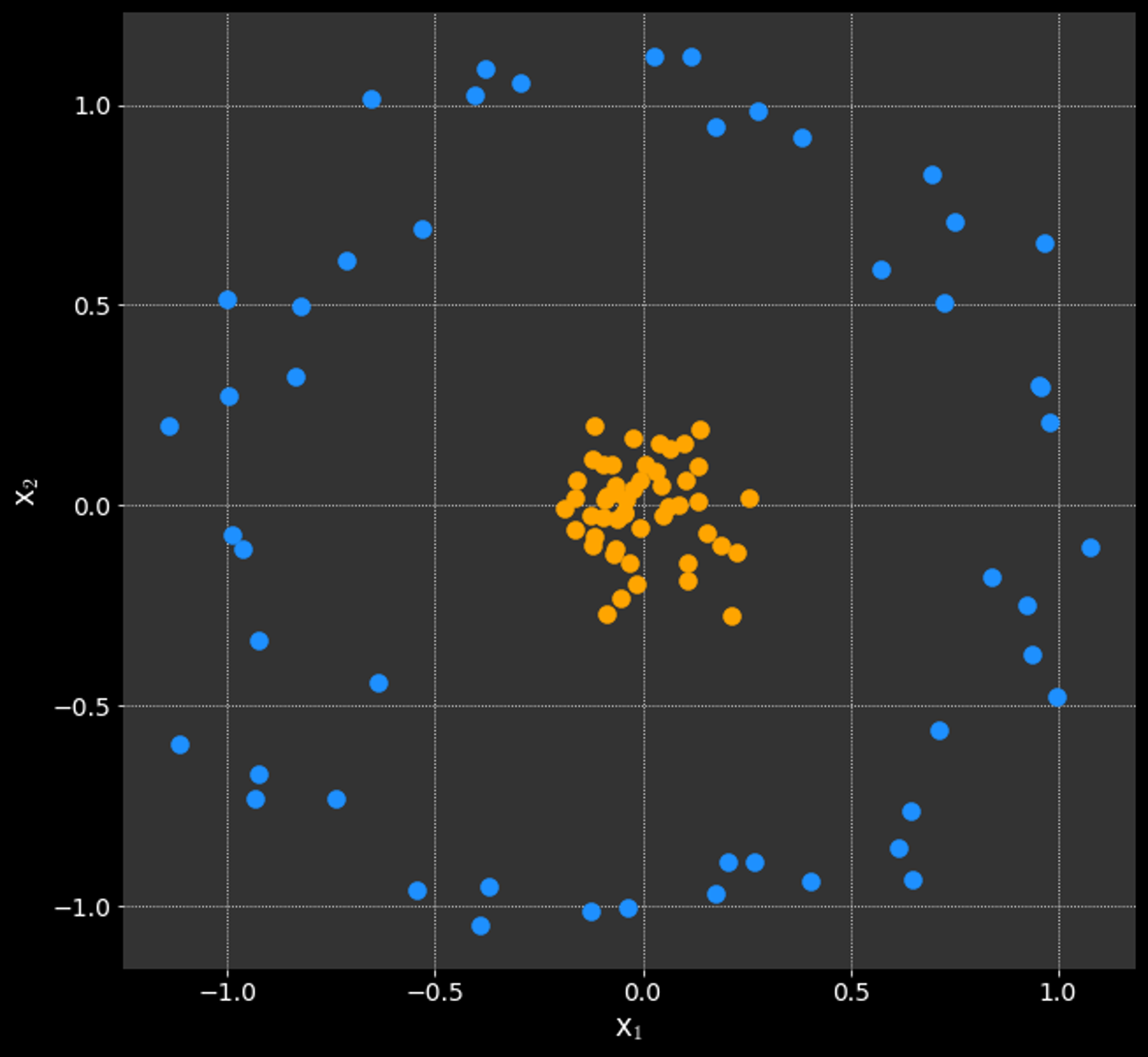

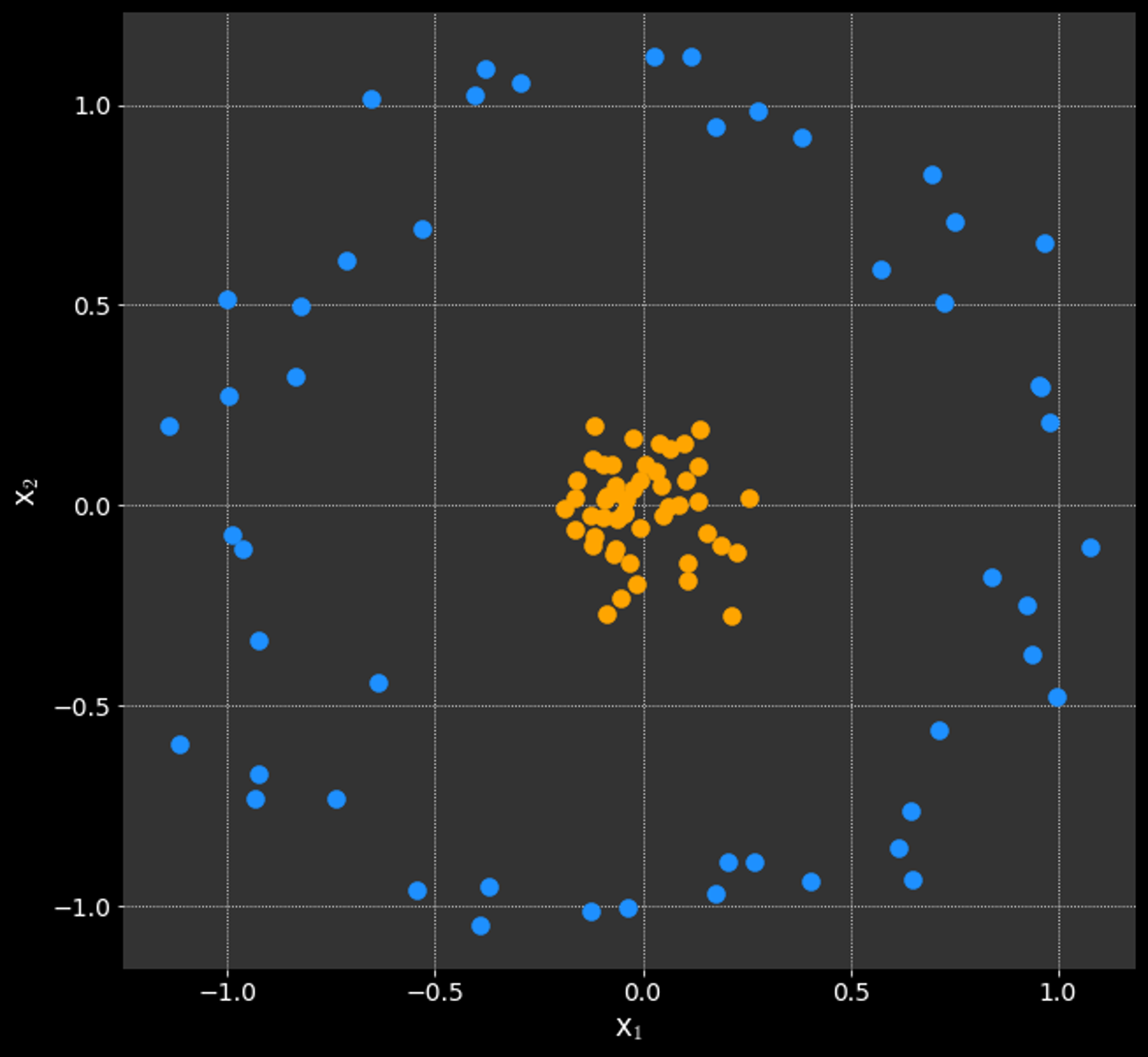

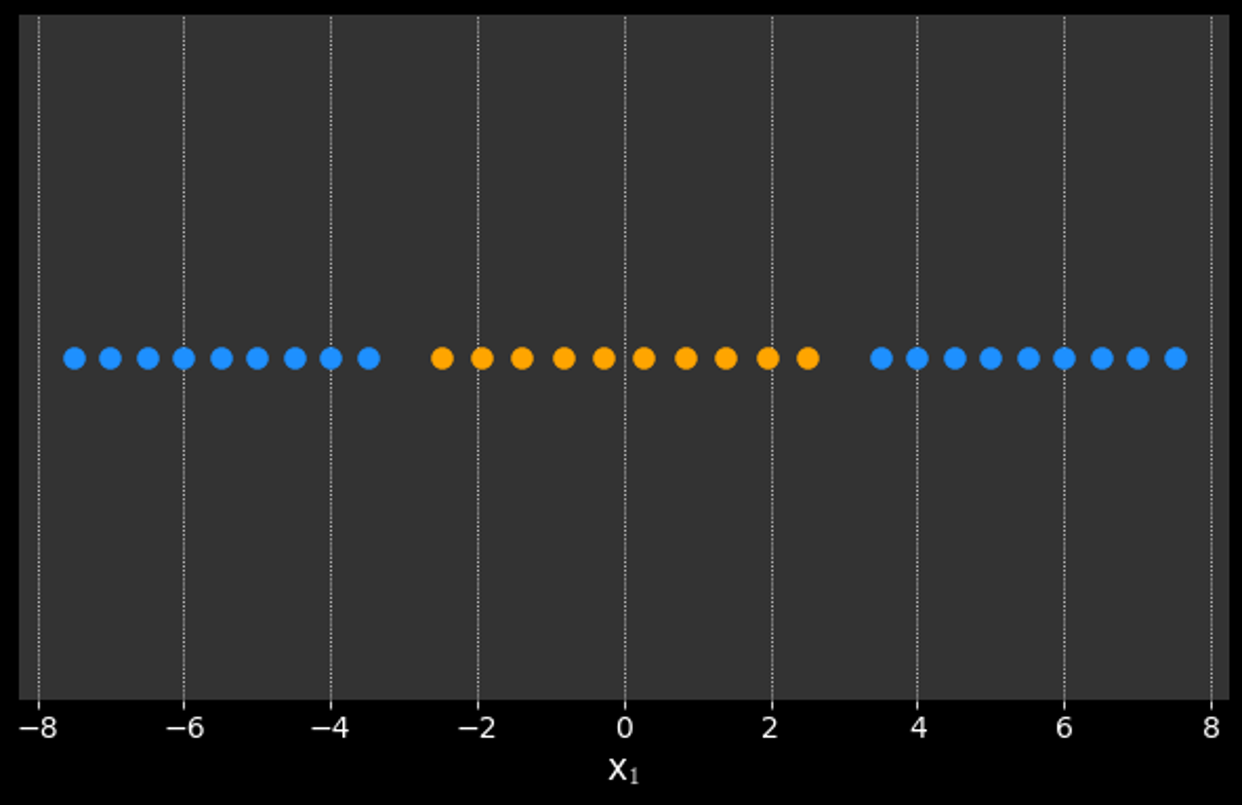

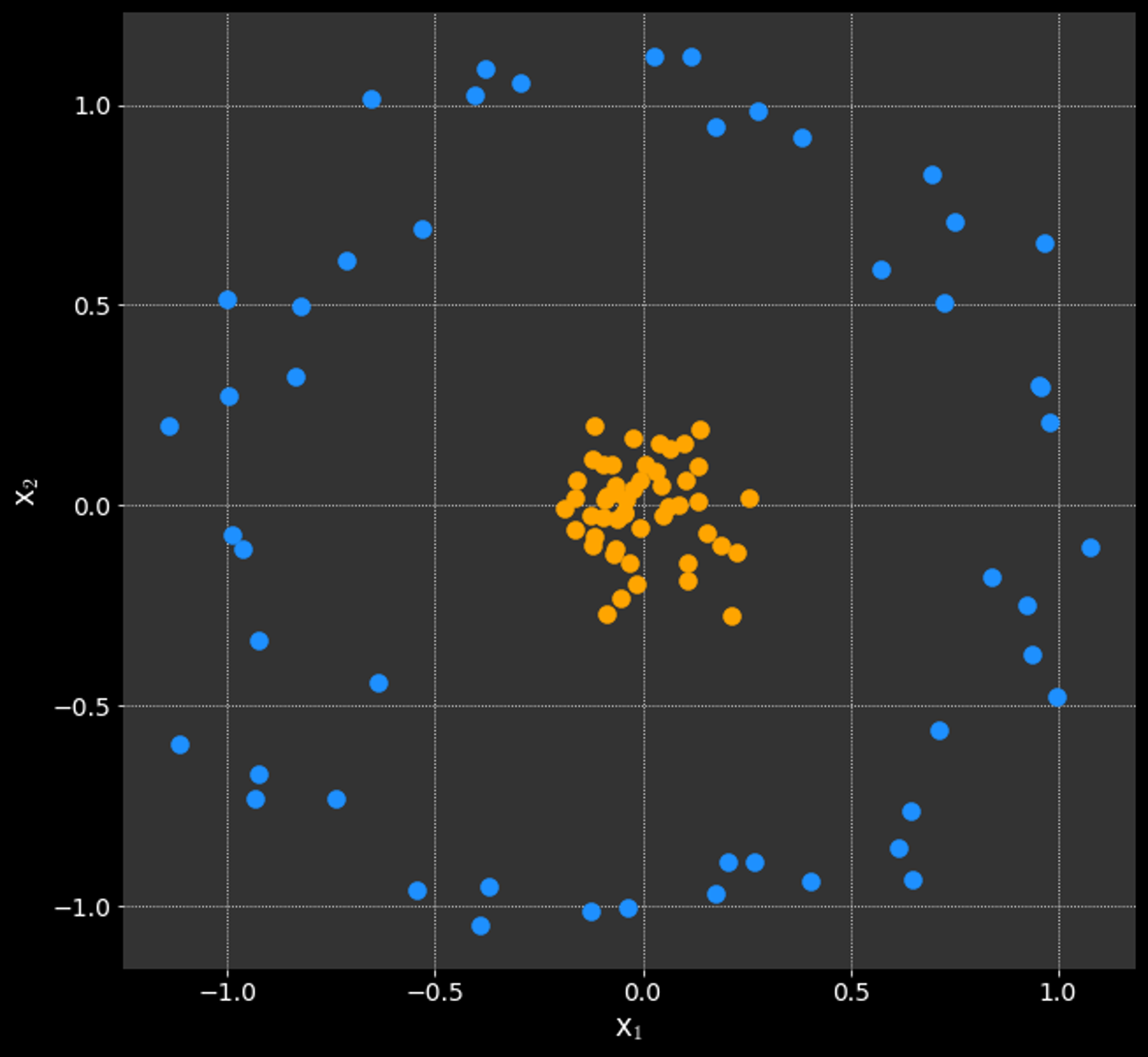

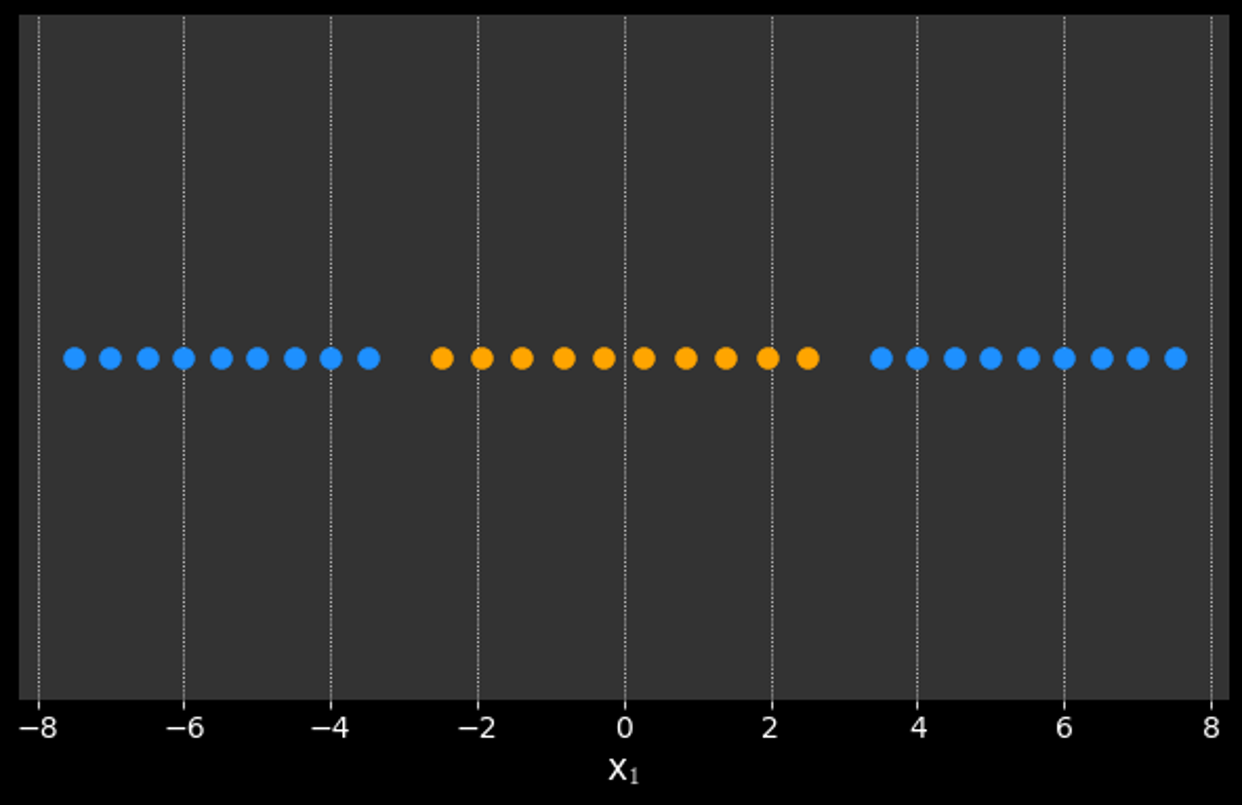


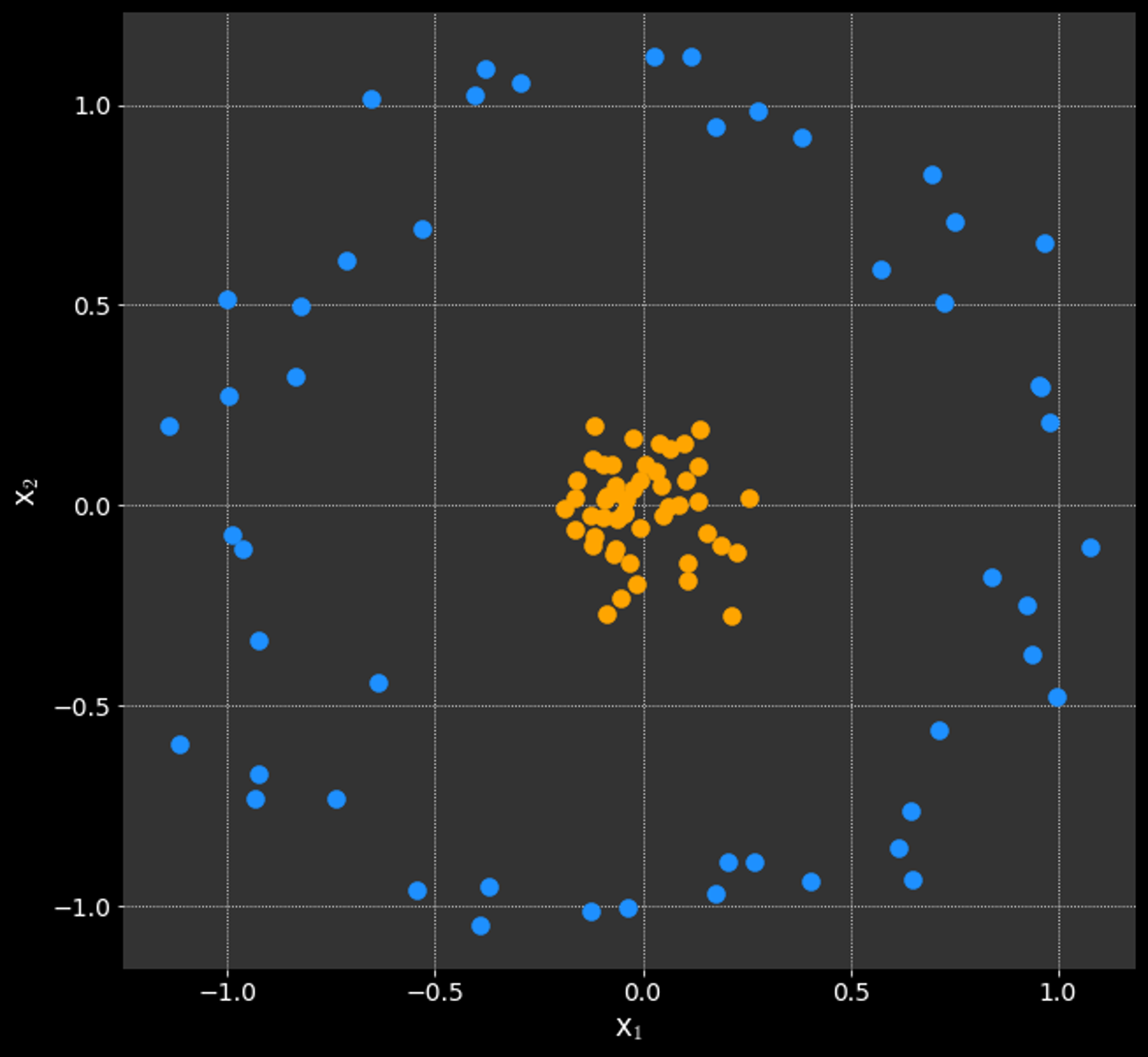

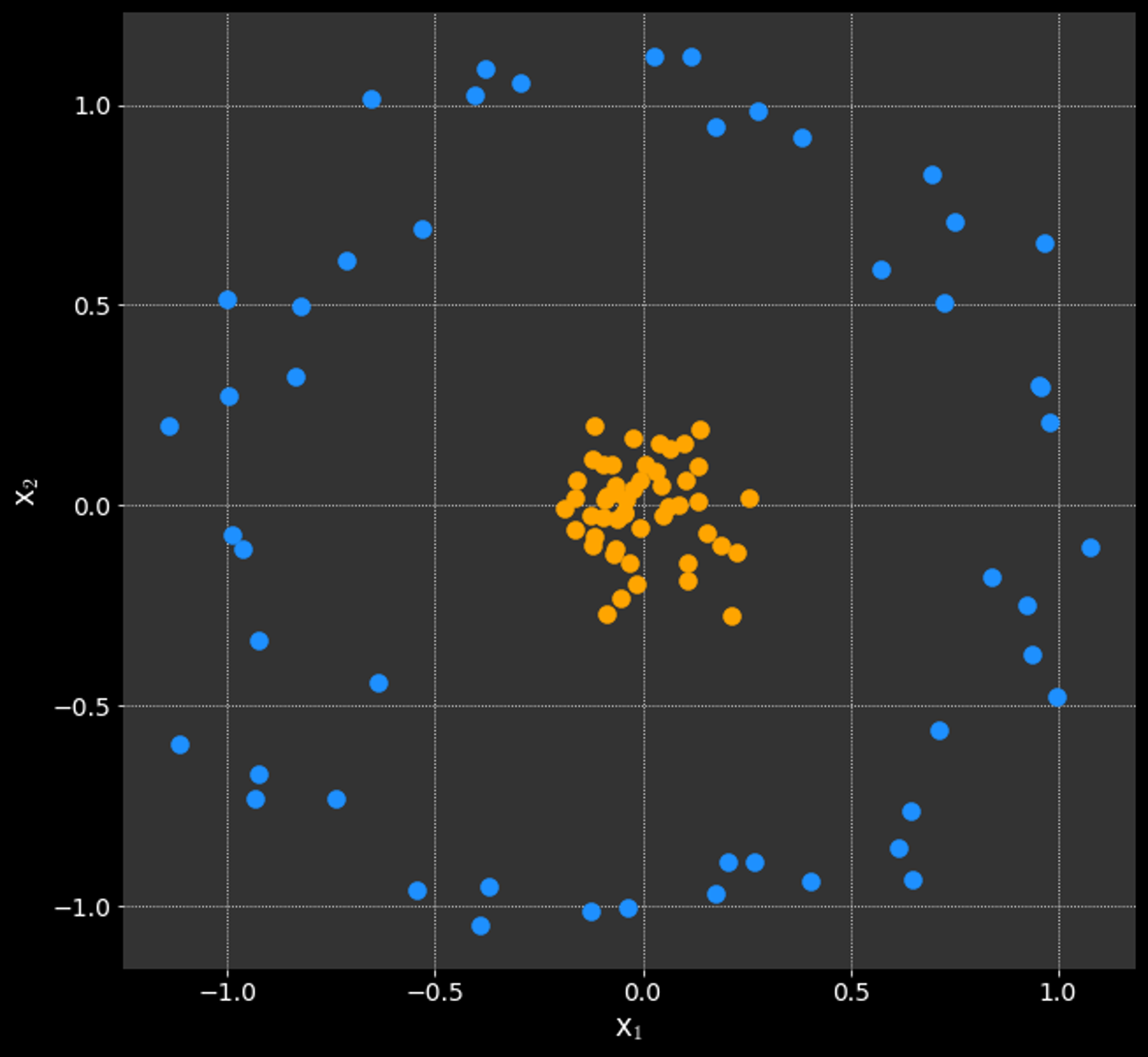


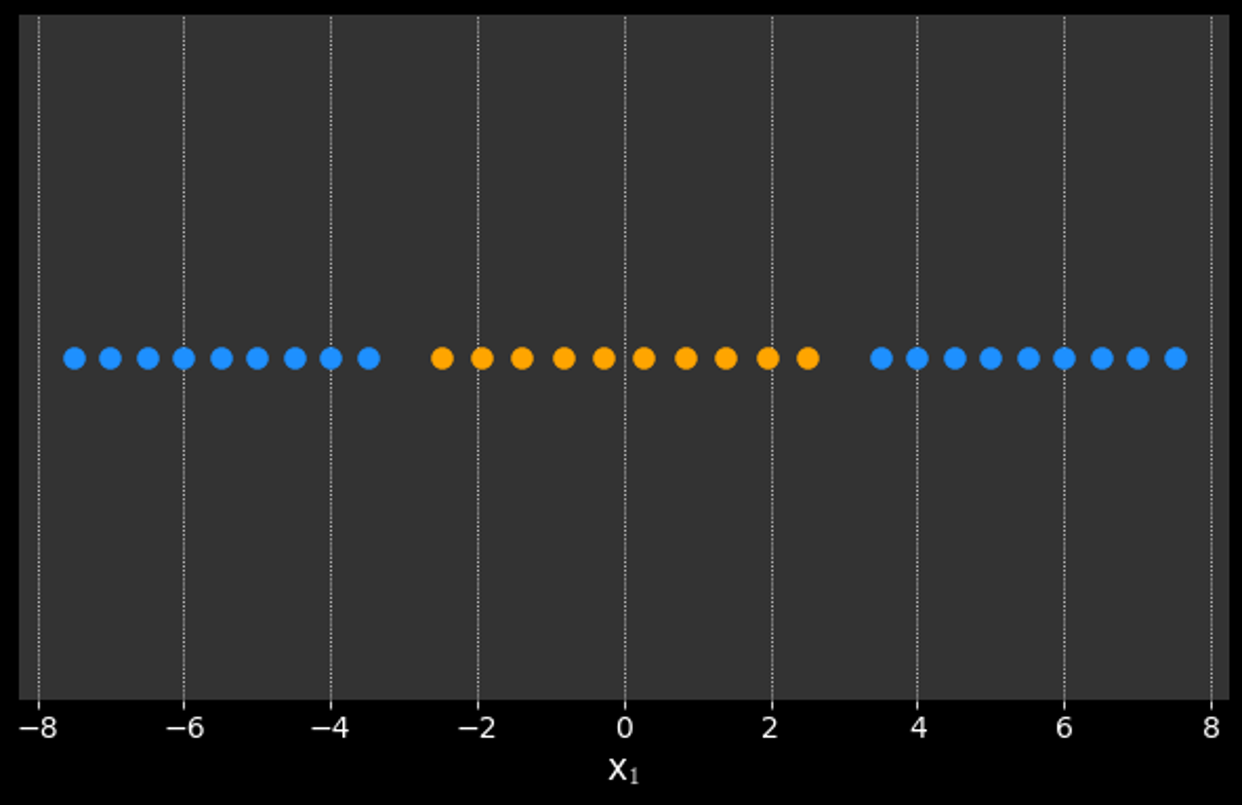

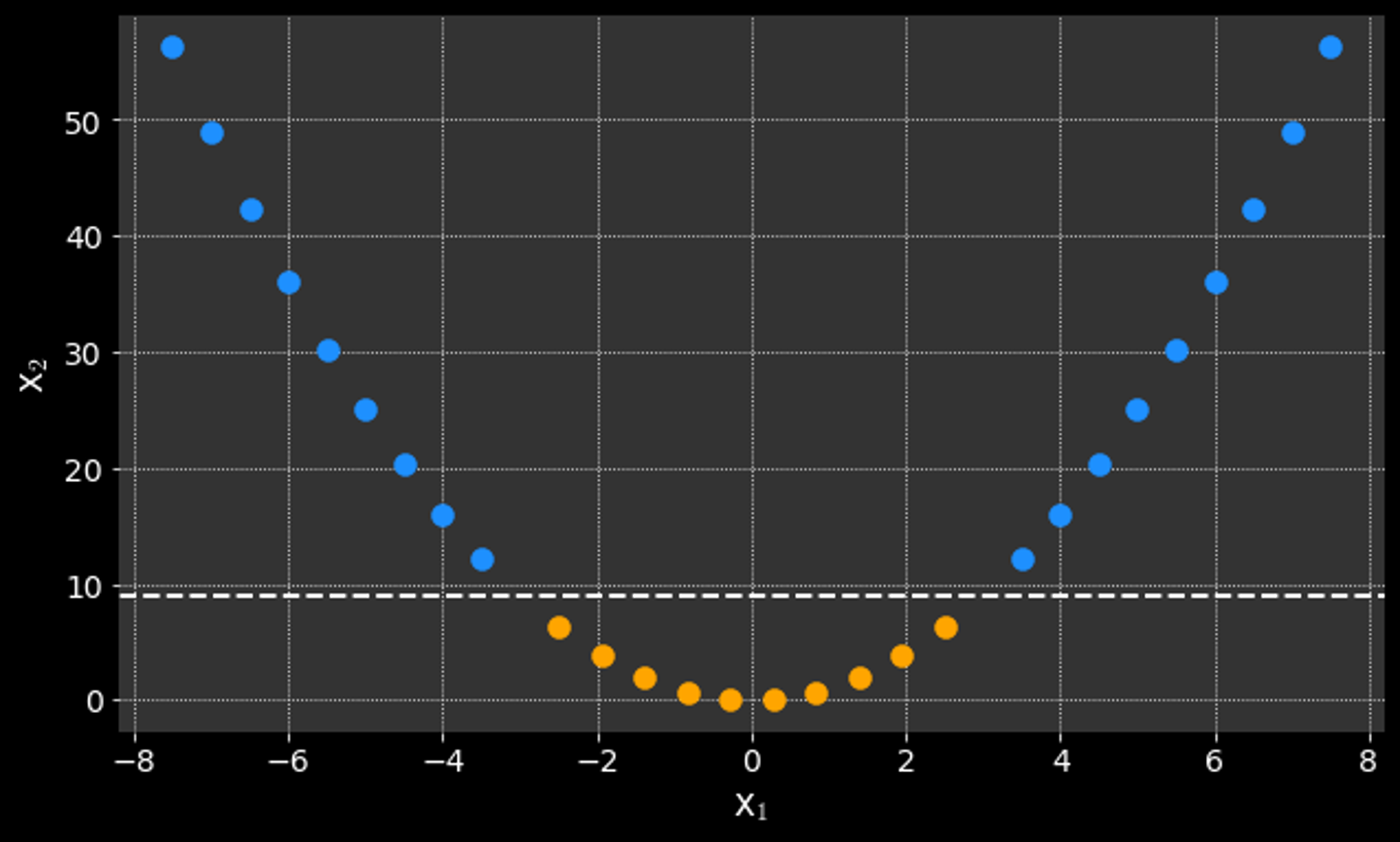

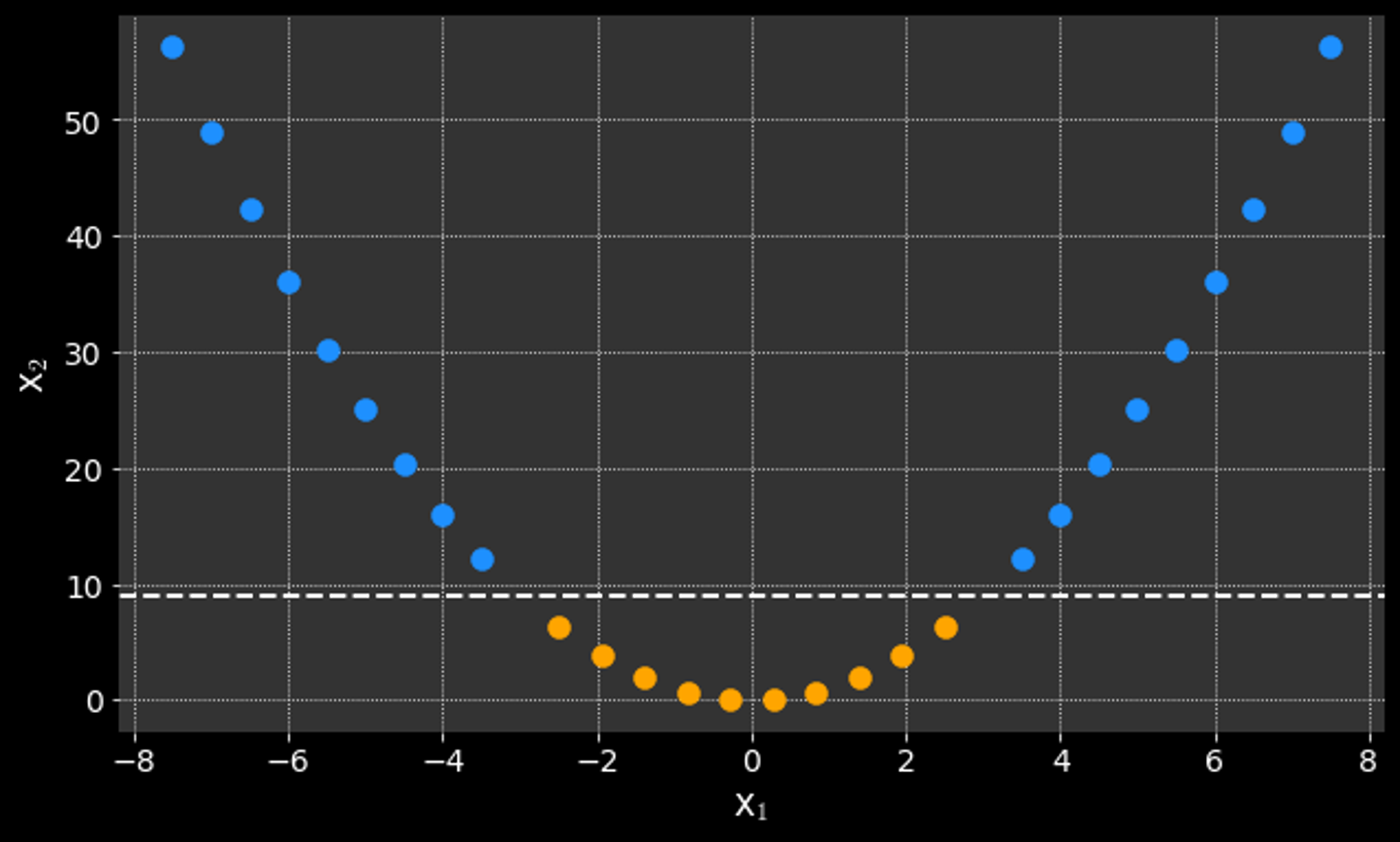

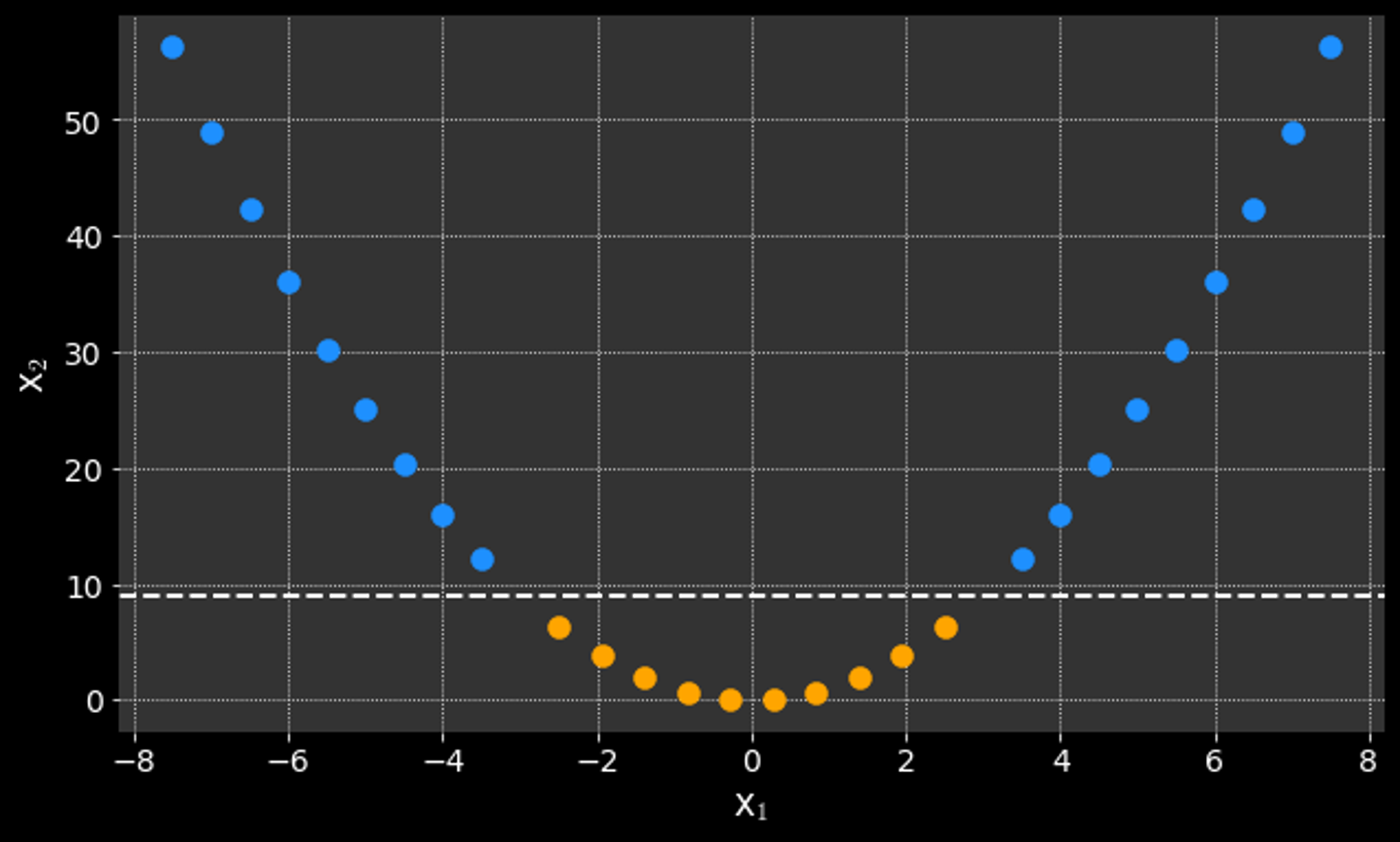


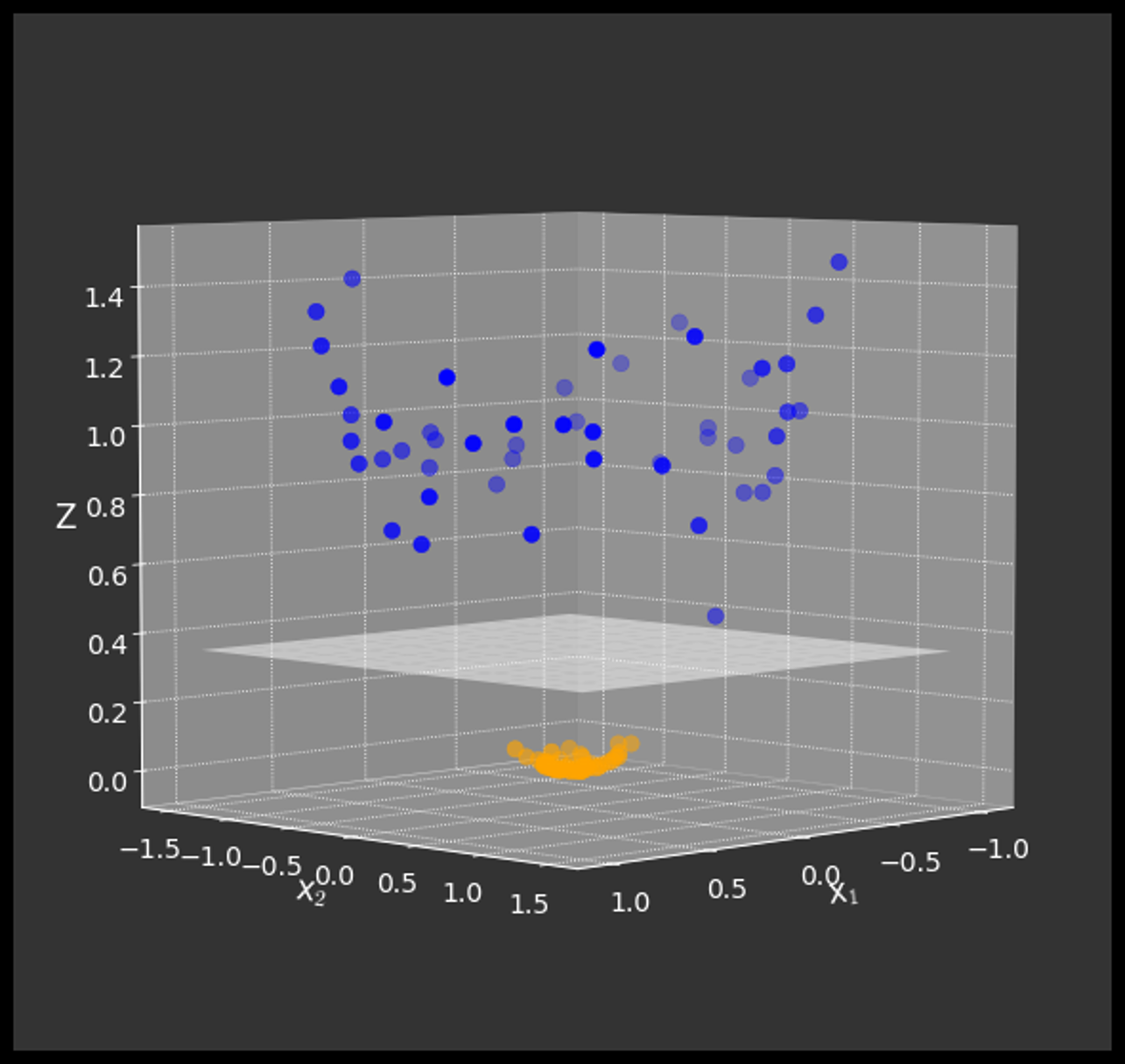

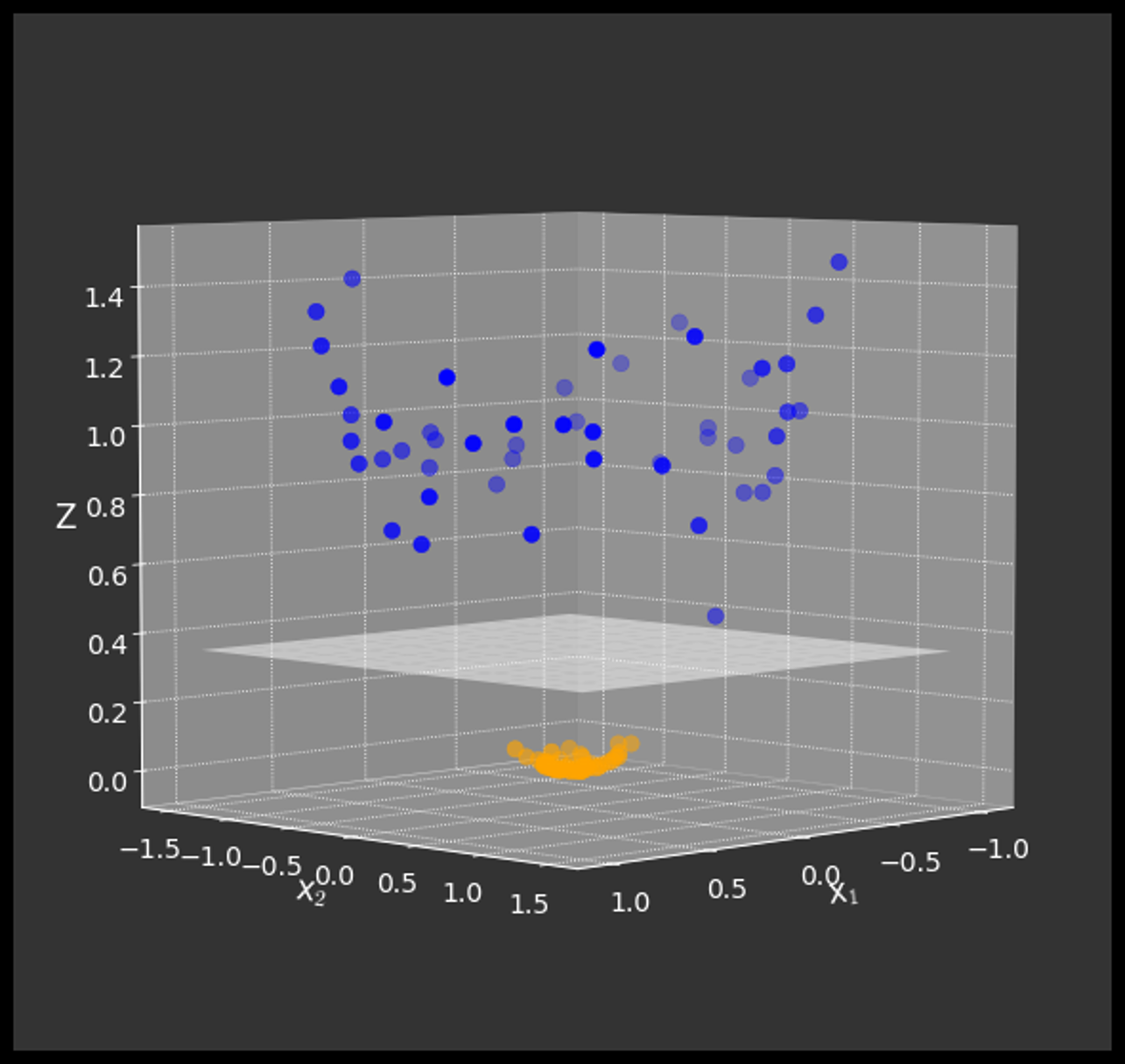


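What if the data is not linearly separable?

Kernel Trick:

Use a mathematical function to map the data into a (usually higher-dimensional) space where the classes become linearly separable. The kernel function computes inner products in that space directly, so the mapped points never have to be constructed explicitly.

(Figure: kernel function)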
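The following is a minimal illustration on a hypothetical 1-D data set, in two parts. First, the map x → (x, x²) makes the classes linearly separable. Second, the polynomial kernel (xz + 1)² gives the same number as an explicit dot product in a 3-D mapped space. The maps `phi` and `psi` and the data are illustrative choices, not the ones behind the figures above.

```python
import math

# Hypothetical 1-D data: class -1 sits between the two halves of class +1,
# so no single threshold on x can separate the classes.
xs = [-3.0, -2.5, -0.5, 0.0, 0.5, 2.5, 3.0]
ys = [1, 1, -1, -1, -1, 1, 1]

def phi(x):
    # Explicit feature map into 2-D: x -> (x, x^2)
    return (x, x * x)

# In the mapped space the horizontal line "second coordinate = 2" separates the classes
separable = all(y * (phi(x)[1] - 2.0) > 0 for x, y in zip(xs, ys))
print("separable after mapping:", separable)

# Kernel trick: a kernel returns an inner product in some mapped space without
# building the mapped vectors. In 1-D, K(x, z) = (x*z + 1)^2 expands to
# x^2 z^2 + 2xz + 1, which is the dot product of psi(x) = (x^2, sqrt(2)*x, 1)
# with psi(z).
def poly_kernel(x, z):
    return (x * z + 1.0) ** 2

def psi(x):
    return (x * x, math.sqrt(2.0) * x, 1.0)

x, z = 1.5, -2.0
explicit = sum(a * c for a, c in zip(psi(x), psi(z)))
print("kernel:", poly_kernel(x, z), "explicit dot product:", explicit)
```

An SVM only needs inner products between data points. Any kernel that equals an inner product in some feature space can therefore replace the plain dot product without ever computing that space.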

Some kernel types:

- Linear kernel ("non-kernel")

- Polynomial kernel

- Sigmoid kernel

- Radial Basis Function (RBF) kernel
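For reference, the four kernel types listed above are commonly written as below. This follows the parameterization used by libraries such as scikit-learn, with γ, r and d written here as `gamma`, `coef0` and `degree`. The default values in this sketch are arbitrary assumptions and are not recommended settings.

```python
import math

def dot(x, z):
    return sum(a * b for a, b in zip(x, z))

def linear_kernel(x, z):
    # Plain inner product, no mapping (the "non-kernel")
    return dot(x, z)

def polynomial_kernel(x, z, degree=3, gamma=1.0, coef0=1.0):
    # (gamma * <x, z> + coef0) ** degree
    return (gamma * dot(x, z) + coef0) ** degree

def sigmoid_kernel(x, z, gamma=1.0, coef0=0.0):
    # tanh(gamma * <x, z> + coef0)
    return math.tanh(gamma * dot(x, z) + coef0)

def rbf_kernel(x, z, gamma=1.0):
    # exp(-gamma * ||x - z||^2): 1 for identical points, decaying toward 0 with distance
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

x, z = (1.0, 2.0), (2.0, 0.5)
for name, kernel in [("linear", linear_kernel), ("polynomial", polynomial_kernel),
                     ("sigmoid", sigmoid_kernel), ("rbf", rbf_kernel)]:
    print(f"{name:>10}: {kernel(x, z):.4f}")
```

The sigmoid kernel is not positive semidefinite for every choice of `gamma` and `coef0`. That is one reason kernel and parameter choice needs care.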

Logistic Regression:

- Probabilistic - relies on maximizing the likelihood of the observed labels

- Works best with well-identified independent variables

- Vulnerable to overfitting and to the influence of outliers

- Simple to implement and use

- Efficient on large datasets with a low number of features

Support Vector Machines:

- Geometric - relies on maximizing the margin between the classes

- Capable of handling unstructured or semi-structured data such as text and images

- Lower risk of overfitting; with a soft margin, less sensitive to outliers

- Choosing the kernel (and its parameters) can be difficult

- Inefficient on large datasets; performs best on small datasets with a large number of features