Ops

TALKS

Knowledge worth sharing

#01

Florian Dambrine - Principal Engineer - @GumGum

Terraform

Agenda

What does it do

***

Basics

***

Deep dive

***

CHEATSHEET

What does it do

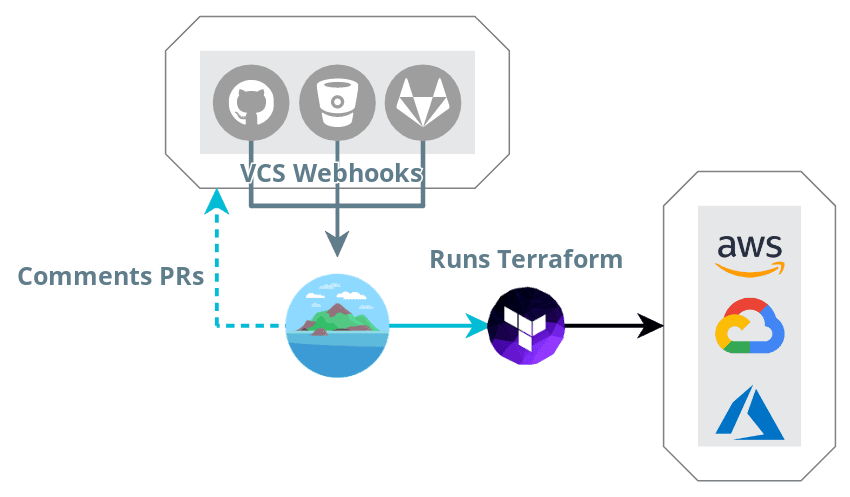

/ Terraform / Terragrunt / Atlantis /

Terraform / Terragrunt / Atlantis ???

Terraform: an engine that talks to SDKs (providers) to read data sources and create, read, update and delete (CRUD) resources. The operations Terraform performs are recorded in a state.

Terragrunt: a wrapper that keeps your Terraform code DRY and eases multi-account management.

Atlantis: a pull request bot that runs Terraform on the fly whenever a PR is updated. It enforces a PR-based development workflow and acts as a central runner.

RUNTIME

FROM hashicorp/terraform:0.12.29

# Caching providers + credstash

FROM lowess/terragrunt as tools

FROM runatlantis/atlantis:v0.15.0

COPY --from=tools /usr/local/bin /usr/local/bin

COPY --from=tools /opt/.terraform.d /opt/.terraform.d

COPY --from=tools /root/.terraformrc /home/atlantis/.terraformrc

COPY --from=tools /root/.terraformrc /root/.terraformrc

RECAP

Read more!

Basics

/ Terraform state / Repo layout / Local development / Infra as code gotchas /

Terraform state management

Terraform must store state about your managed infrastructure and configuration. This state is used by Terraform to map real world resources to your configuration, keep track of metadata, and to improve performance for large infrastructures.

terraform.tfstate

locking

State isolation with Terragrunt

locals {

remote_state_bucket = "terraform-state-${local.product_name}-${local.account_name}-${local.aws_region}"

remote_state_bucket_prefix = "${path_relative_to_include()}/terraform.tfstate"

}

remote_state {

backend = "s3"

config = {

encrypt = true

bucket = "${local.remote_state_bucket}"

key = "${local.remote_state_bucket_prefix}"

region = local.aws_region

dynamodb_table = "terraform-locks"

}

...

}

(terraform/terragrunt/terragrunt.hcl)

Terraform state & repository layout

terragrunt

├── gumgum-ads

│ ├── account.hcl

│ └── virginia

├── gumgum-ai

│ ├── account.hcl

│ ├── oregon

│ └── virginia

├── terragrunt.hcl

└── versioning.hcl


$ aws s3api list-buckets \

| jq -r '.Buckets[] | select(.Name | test("^terraform-state")) | .Name'

### In AI account

* terraform-state-verity-gumgum-ai-us-east-1

* terraform-state-verity-gumgum-ai-us-west-2

### In Ads account

* terraform-state-verity-gumgum-ads-us-east-1

# Example of account.hcl content

locals {

account_name = "gumgum-ads"

product_name = "verity"

}

The S3 key layout mirrors the code repository layout, thanks to Terragrunt's path_relative_to_include().
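The bucket and key naming can be checked outside Terragrunt. A minimal Python sketch, assuming the child terragrunt.hcl lives under terraform/terragrunt and that path_relative_to_include() returns its directory relative to that root; remote_state_location is a hypothetical helper that only mirrors the two locals above, it does not call Terragrunt:

```python
from typing import Tuple


def remote_state_location(product_name: str, account_name: str, aws_region: str,
                          path_relative_to_include: str) -> Tuple[str, str]:
    """Return the (bucket, key) pair the root terragrunt.hcl would compute.

    Hypothetical helper mirroring the remote_state_bucket and
    remote_state_bucket_prefix locals.
    """
    bucket = f"terraform-state-{product_name}-{account_name}-{aws_region}"
    key = f"{path_relative_to_include}/terraform.tfstate"
    return bucket, key


bucket, key = remote_state_location(
    "verity", "gumgum-ai", "us-east-1",
    "gumgum-ai/virginia/prod/verity-api/dynamodb-verity-pages",
)
print(bucket)  # terraform-state-verity-gumgum-ai-us-east-1
print(key)     # gumgum-ai/virginia/prod/verity-api/dynamodb-verity-pages/terraform.tfstate
```

One bucket per product/account/region keeps state isolated per account, while the key reproduces the directory tree inside that bucket.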

Repository layout (depth 0 -> N)

Fixed levels (N0 -> N+4): terraform/terragrunt > account > region > environment

- Accounts: gumgum-ads, gumgum-ai

- Regions: virginia (gumgum-ads); oregon, virginia (gumgum-ai)

- Environments: dev, stage, prod

Free-form structure (N+5 and deeper), for example:

- N+5 - Jira project / Team namespace

- N+6 - Infrastructure bit made of multiple components (Kafka)

- N+7 - Single infrastructure component (Zookeeper)
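The fixed part of the layout lends itself to a check in CI. A minimal sketch, assuming paths are given relative to terraform/terragrunt and that the first three directories are always account, region and environment, as in gumgum-ai/virginia/prod/prism-kafka-cluster/kafka; Component and parse_component_path are hypothetical names:

```python
from typing import NamedTuple, Tuple


class Component(NamedTuple):
    account: str                # e.g. gumgum-ai
    region: str                 # e.g. virginia
    environment: str            # e.g. prod
    free_form: Tuple[str, ...]  # team namespace, stack, component...


def parse_component_path(relative_path: str) -> Component:
    """Split a path relative to terraform/terragrunt into the fixed levels
    (account > region > environment) and the free-form remainder."""
    parts = [part for part in relative_path.split("/") if part]
    if len(parts) < 3:
        raise ValueError(f"expected <account>/<region>/<environment>/..., got {relative_path!r}")
    account, region, environment, *rest = parts
    return Component(account, region, environment, tuple(rest))


print(parse_component_path("gumgum-ai/virginia/prod/prism-kafka-cluster/kafka"))
```

Everything after the environment is left untouched, which is what keeps the deeper levels free-form.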

Local development

# Create the `tf` alias that will spin up a docker container

# with all the tooling required to operate Verity infrastructure

export GIT_WORKSPACE="${HOME}/Workspace"

alias tf='docker run -it --rm \

-v ~/.aws:/root/.aws \

-v ~/.ssh:/root/.ssh \

-v ~/.kube:/root/.kube \

-v "$(pwd):/terragrunt" \

-v "${GIT_WORKSPACE}:/modules" \

-w /terragrunt \

lowess/terragrunt:0.12.29'

### On the laptop

$ cd $GIT_WORKSPACE/verity-infra-ops/terraform/terragrunt

$ tf

### Inside the docker container

/terragrunt $ cd <path-to-component-you-want-to-test>

### Plan with terragrunt module override (point to local)

/terragrunt $ terragrunt plan --terragrunt-source /modules/terraform-verity-modules//<somemodule>
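Scripts (for example CI helpers) cannot reuse a shell alias. A minimal Python sketch that builds the same docker run invocation as the tf alias, assuming the same mounts and image tag; tf_command is a hypothetical helper and has no side effects:

```python
import os
import shlex
from typing import List


def tf_command(cwd: str, home: str, git_workspace: str,
               image: str = "lowess/terragrunt:0.12.29") -> List[str]:
    """Build the argv the `tf` alias runs (hypothetical helper)."""
    mounts = [
        (f"{home}/.aws", "/root/.aws"),    # AWS credentials and profiles
        (f"{home}/.ssh", "/root/.ssh"),    # SSH keys, e.g. for git:: module sources
        (f"{home}/.kube", "/root/.kube"),  # kubeconfig
        (cwd, "/terragrunt"),              # the terragrunt tree you are working on
        (git_workspace, "/modules"),       # local module checkouts for --terragrunt-source
    ]
    cmd = ["docker", "run", "-it", "--rm"]
    for host_path, container_path in mounts:
        cmd += ["-v", f"{host_path}:{container_path}"]
    cmd += ["-w", "/terragrunt", image]
    return cmd


if __name__ == "__main__":
    home = os.path.expanduser("~")
    workspace = os.environ.get("GIT_WORKSPACE", os.path.join(home, "Workspace"))
    # Only prints the command; pass the list to subprocess.run(...) to start the container.
    print(shlex.join(tf_command(os.getcwd(), home, workspace)))
```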

Demo

INFRASTRUCTURE AS CODE GOTCHAS

Infrastructure code != Application code

#!/usr/bin/env python

import boto3

client = boto3.client('sqs')

sqs_queue = "ops-talk-sqs"

response = client.create_queue(
    QueueName=sqs_queue,
    Attributes = {
        'DelaySeconds': '90'  # SQS queue attribute values are passed as strings
    },
    tags={
        'Name': sqs_queue,
        'Environment': 'dev'
    }
)

print(response)

diff --git a/queue.py b/queue.py
index c19f2f6..c4538e5 100644
--- a/queue.py
+++ b/queue.py
@@ -6,7 +6,7 @@ client = boto3.client('sqs')

 sqs_queue = "ops-talk-sqs"

-response = client.create_queue(
+queue = client.create_queue(
     QueueName=sqs_queue,
     Attributes = {
         'DelaySeconds': '90'  # SQS queue attribute values are passed as strings
@@ -17,4 +17,4 @@ response = client.create_queue(
     }
 )

-print(response)
+print(queue)

Is this change harmless ---^ ?

INFRASTRUCTURE AS CODE GOTCHAS

Infrastructure code != Application code

# terraform.tf

locals {
  sqs_name = "sqs-ops-talk"
}

resource "aws_sqs_queue" "terraform_queue" {
  name = "${local.sqs_name}"
  delay_seconds = 90
  tags = {
    Name = "${local.sqs_name}"
    Environment = "dev"
  }
}

diff --git a/terraform.tf b/terraform.tf
index 3c8cb08..8981f1d 100644
--- a/terraform.tf
+++ b/terraform.tf
@@ -4,7 +4,7 @@ locals {
   sqs_name = "sqs-ops-talk"
 }

-resource "aws_sqs_queue" "terraform_queue" {
+resource "aws_sqs_queue" "queue" {
   name = "${local.sqs_name}"
   delay_seconds = 90
   tags = {

Is this change harmless ---^ ?

- Remember -

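Infrastructure as code is made of code & state! Renaming aws_sqs_queue.terraform_queue to aws_sqs_queue.queue changes the address under which the state tracks the real queue, so Terraform plans to destroy the existing queue and create a new one. Moving the state entry first (terraform state mv aws_sqs_queue.terraform_queue aws_sqs_queue.queue) keeps the queue in place. The Python rename, by contrast, is harmless.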
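A toy model of why the rename matters: the state is a map from resource addresses to real-world IDs, and a plan compares that map with the addresses declared in code. plan and state_mv are hypothetical helpers, not Terraform internals, and the queue ID is made up:

```python
from typing import Dict, List, Set


def plan(state: Dict[str, str], config: Set[str]) -> Dict[str, List[str]]:
    """Toy planner: `state` maps resource addresses to real-world IDs,
    `config` is the set of addresses declared in code."""
    return {
        "create": sorted(addr for addr in config if addr not in state),
        "destroy": sorted(addr for addr in state if addr not in config),
    }


def state_mv(state: Dict[str, str], src: str, dst: str) -> Dict[str, str]:
    """Toy equivalent of `terraform state mv src dst`: re-key the entry, keep the ID."""
    moved = dict(state)
    moved[dst] = moved.pop(src)
    return moved


# Hypothetical state after the first apply: the address points at the real queue.
state = {"aws_sqs_queue.terraform_queue": "sqs-ops-talk"}
renamed_config = {"aws_sqs_queue.queue"}

print(plan(state, renamed_config))
# {'create': ['aws_sqs_queue.queue'], 'destroy': ['aws_sqs_queue.terraform_queue']}

state = state_mv(state, "aws_sqs_queue.terraform_queue", "aws_sqs_queue.queue")
print(plan(state, renamed_config))
# {'create': [], 'destroy': []}
```

The real queue never changed; only the key pointing at it did, which is exactly what the state move repairs.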

Deep dive

/ Terragrunt with no modules / Terragrunt provider overwrites / Module versioning at scale / Best practices /

Terragrunt with no modules

Note: building a module is better practice than writing plain Terraform next to terragrunt.hcl.

# ---------------------------------------------------------------------------------------------------------------------

# TERRAGRUNT CONFIGURATION

# This is the configuration for Terragrunt, a thin wrapper for Terraform that supports locking and enforces best

# practices: https://github.com/gruntwork-io/terragrunt

# ---------------------------------------------------------------------------------------------------------------------

locals {

# Automatically load environment-level variables

environment_vars = read_terragrunt_config(find_in_parent_folders("env.hcl"))

# Extract out common variables for reuse

env = local.environment_vars.locals.environment

}

# Terragrunt will copy the Terraform configurations specified by the source parameter, along with any files in the

# working directory, into a temporary folder, and execute your Terraform commands in that folder.

terraform {}

# Include all settings from the root terragrunt.hcl file

include {

path = find_in_parent_folders()

}

# These are the variables we have to pass in to use the module specified in the terragrunt configuration above

inputs = {}

(terragrunt.hcl)

variable "aws_region" {}

provider "aws" {

region = var.aws_region

}

resource "..."

(main.tf)

Demo

Terragrunt provider overwrites

# ---------------------------------------------------------------------------------------------------------------------

# TERRAGRUNT CONFIGURATION

# This is the configuration for Terragrunt, a thin wrapper for Terraform that supports locking and enforces best

# practices: https://github.com/gruntwork-io/terragrunt

# ---------------------------------------------------------------------------------------------------------------------

locals {

# Automatically load environment-level variables

environment_vars = read_terragrunt_config(find_in_parent_folders("env.hcl"))

# Extract out common variables for reuse

env = local.environment_vars.locals.environment

}

# Terragrunt will copy the Terraform configurations specified by the source parameter, along with any files in the

# working directory, into a temporary folder, and execute your Terraform commands in that folder.

terraform {

source = "git::ssh://git@bitbucket.org/..."

}

generate "provider" {

path = "providers.tf"

if_exists = "overwrite_terragrunt"

contents = <<EOF

# Configure the AWS provider

provider "aws" {

version = "~> 2.9.0"

region = var.aws_region

}

EOF

}

# Include all settings from the root terragrunt.hcl file

include {

path = find_in_parent_folders()

}

# These are the variables we have to pass in to use the module specified in the terragrunt configuration above

inputs = {}

(terragrunt.hcl)

Demo

Warning!

Overwriting a module's providers.tf from Terragrunt can break the module: every provider defined in the module must also be declared in the generate block.
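A rough pre-flight check for that warning: list the provider blocks a module configures and compare them with the generate block contents. This is a regex-based sketch, not an HCL parser (it ignores comments and provider aliases), and the module text below, including its spotinst provider, is a hypothetical example:

```python
import re
from typing import Set

# Matches `provider "aws" {` as well as the unquoted `provider aws {` form.
PROVIDER_BLOCK = re.compile(r'^\s*provider\s+"?([A-Za-z0-9_-]+)"?\s*\{', re.MULTILINE)


def declared_providers(hcl_text: str) -> Set[str]:
    return set(PROVIDER_BLOCK.findall(hcl_text))


def providers_missing_from_generate(module_providers_tf: str, generate_contents: str) -> Set[str]:
    """Providers configured by the module but absent from the generate block."""
    return declared_providers(module_providers_tf) - declared_providers(generate_contents)


module_tf = '''
provider "aws" {
  region = var.aws_region
}

provider "spotinst" {}
'''

generated = '''
provider "aws" {
  version = "~> 2.9.0"
  region  = var.aws_region
}
'''

print(providers_missing_from_generate(module_tf, generated))  # {'spotinst'}
```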

Module versioning at scale

Demo

$ tree -L 1 /terragrunt/gumgum-ai/virginia/prod/verity-api/dynamodb-*

dynamodb-verity-image

└── terragrunt.hcl

dynamodb-verity-images

└── terragrunt.hcl

dynamodb-verity-pages

└── terragrunt.hcl

dynamodb-verity-text

└── terragrunt.hcl

dynamodb-verity-text-source

└── terragrunt.hcl

dynamodb-verity-video

└── terragrunt.hcl

# dynamodb-*/terragrunt.hcl

terraform {

source = "/terraform-verity-modules//verity-api/dynamodb?ref=v1.4.2"

}

# dynamodb-*/terragrunt.hcl

locals {

versioning_vars = read_terragrunt_config(find_in_parent_folders("versioning.hcl"))

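# Fall back to the version pinned under "latest" when the namespace has no entry

version = lookup(local.versioning_vars.locals.versions, "verity-api/dynamodb", local.versioning_vars.locals.versions["latest"])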

}

terraform {

source = "/terraform-verity-modules//verity-api/dynamodb?ref=${local.version}"

}

# versioning.hcl

locals {

versions = {

"latest": "v1.7.2"

"verity-api/dynamodb": "v1.4.2"

"nlp/elasticache": "v1.7.2"

"namespace/app": "v1.3.1"

}

}

When a new feature comes in, bump the version of the namespace you want to upgrade in versioning.hcl.
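The resolution rule (use the namespace's pin, otherwise the version pinned under "latest") fits in a few lines. A minimal Python sketch mirroring versioning.hcl; resolve_version and module_source are hypothetical helpers and "verity-api/sqs" is a made-up namespace with no pin:

```python
from typing import Dict

# Mirrors the versions map in versioning.hcl
VERSIONS: Dict[str, str] = {
    "latest": "v1.7.2",
    "verity-api/dynamodb": "v1.4.2",
    "nlp/elasticache": "v1.7.2",
    "namespace/app": "v1.3.1",
}


def resolve_version(versions: Dict[str, str], namespace: str) -> str:
    """Pinned version for the namespace, else the version pinned under "latest"."""
    return versions.get(namespace, versions["latest"])


def module_source(versions: Dict[str, str], namespace: str) -> str:
    return f"/terraform-verity-modules//{namespace}?ref={resolve_version(versions, namespace)}"


print(module_source(VERSIONS, "verity-api/dynamodb"))  # /terraform-verity-modules//verity-api/dynamodb?ref=v1.4.2
print(module_source(VERSIONS, "verity-api/sqs"))       # /terraform-verity-modules//verity-api/sqs?ref=v1.7.2
```

Upgrading a namespace is then a one-line change in a single file, instead of editing every terragrunt.hcl that uses the module.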

Best practices - using locals

locals {

### Ease the access of sg ids from the peered VPC

peer_scheduler_sg = "${module.peer_discovery.security_groups_json["scheduler"]}"

peer_kafka_monitoring_sg = "${module.peer_discovery.security_groups_json["monitoring"]}"

peer_tws_sg = "${module.peer_discovery.security_groups_json["tws"]}"

peer_pqp_sg = "${module.peer_discovery.security_groups_json["pqp"]}"

verity_api_sg = data.terraform_remote_state.verity_ecs.outputs.ecs_sg_id

hce_sg = data.terraform_remote_state.hce_ecs.outputs.ecs_sg_id

sg_description = "Access cluster from [${var.account_name}]"

}

resource "aws_security_group_rule" "kafka_tcp_9092_ads_scheduler" {

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

source_security_group_id = local.peer_scheduler_sg ### ---> locals lead to cleaner code

security_group_id = aws_security_group.kafka.id

description = "${local.sg_description} ${local.peer_scheduler_sg}"

}

Locals = Data structure manipulation / Pattern enforcement / Cleaner code

Best practices - outputs for tfstate lookup

<module>

├── datasources.tf

├── ec2.tf

├── ecs.tf

├── outputs.tf

├── providers.tf

├── templates

│ └── ec2-cloud-config.yml.tpl

├── userdata.tf

├── variables.tf

└── versions.tf

# <module>/outputs.tf

output "ecs_esg_id" {

value = spotinst_elastigroup_aws.ecs.id

}

output "ecs_sg_id" {

value = aws_security_group.ecs.id

}


{

"version": 4,

"terraform_version": "0.12.24",

"serial": 24,

"lineage": "ade31deb-1be6-2953-5b1c-432e52ed44b8",

"outputs": {

"ecs_esg_id": {

"value": "sig-c5916298",

"type": "string"

},

"ecs_sg_id": {

"value": "sg-1d8f44c8ab69634da",

"type": "string"

}

},

"resources": [...]

}

(terraform.tfstate)
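The outputs section is what terraform_remote_state exposes as .outputs. For scripts that only have a downloaded state file, a minimal Python sketch reading it (state format version 4, as above; "resources" is emptied here because it is elided in the slide). From inside a working directory, terraform output -json gives the same information without touching the file:

```python
import json
from typing import Any, Dict


def read_outputs(tfstate_text: str) -> Dict[str, Any]:
    """Return {output_name: value} from a terraform.tfstate document (format version 4)."""
    state = json.loads(tfstate_text)
    if state.get("version") != 4:
        raise ValueError(f"unsupported state format version: {state.get('version')}")
    return {name: output["value"] for name, output in state.get("outputs", {}).items()}


example_state = """
{
  "version": 4,
  "terraform_version": "0.12.24",
  "serial": 24,
  "lineage": "ade31deb-1be6-2953-5b1c-432e52ed44b8",
  "outputs": {
    "ecs_esg_id": {"value": "sig-c5916298", "type": "string"},
    "ecs_sg_id": {"value": "sg-1d8f44c8ab69634da", "type": "string"}
  },
  "resources": []
}
"""

print(read_outputs(example_state)["ecs_sg_id"])  # sg-1d8f44c8ab69634da
```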

Best practices - outputs for tfstate lookup

locals {

memcached_sgs = {

memcached-japanese-verity = data.terraform_remote_state.memcached_japanese_verity.outputs.memcached_sg_id

memcached-metadata-verity = data.terraform_remote_state.memcached_metadata_verity.outputs.memcached_sg_id

}

}

data "terraform_remote_state" "memcached_metadata_verity" {

backend = "s3"

config = {

bucket = var.remote_state_bucket

key = "${var.remote_state_bucket_prefix}/../../../../memcached-metadata-verity/terraform.tfstate"

region = var.aws_region

}

}

data "terraform_remote_state" "memcached_japanese_verity" {

backend = "s3"

config = {

bucket = var.remote_state_bucket

key = "${var.remote_state_bucket_prefix}/../../../../memcached-japanese-verity/terraform.tfstate"

region = var.aws_region

}

}

# Do something with local.memcached_sgs

resource "aws_security_group_rule" "memcached_tcp_11211_ecs_cluster" {

for_each = local.memcached_sgs

type = "ingress"

from_port = 11211

to_port = 11211

protocol = "tcp"

description = "Access from ${title(var.ecs_cluster_name)}"

source_security_group_id = aws_security_group.ecs.id

security_group_id = each.value

}

- Remember -

The outputs stored in terraform.tfstate files should be your single source of truth: read them through terraform_remote_state rather than hard-coding IDs.

Best practices - You trust me and vice versa

resource "aws_security_group_rule" "kafka_tcp_9092_pritunl" {

for_each = local.kafka_sgs

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = each.value

source_security_group_id = local.pritunl_sg

description = "Access Kafka cluster from VPN"

}

resource "aws_security_group_rule" "kafka_tcp_9092_monitoring" {

for_each = local.kafka_sgs

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = each.value

source_security_group_id = local.monitoring_sg

description = "Access Kafka cluster from Monitoring"

}

resource "aws_security_group_rule" "kafka_tcp_9092_some_client_app" {

for_each = local.kafka_sgs

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = each.value ### ?????

source_security_group_id = local.client_app ### ?????

description = "Access Kafka cluster from Some client APP"

}

(terragrunt/gumgum-ai/virginia/prod/prism-kafka-cluster/kafka)

- Remember -

If you need to rebuild the infrastructure from scratch, the order is VPC > Backends > Apps. The Kafka cluster is provisioned at the Backends stage, when the applications do not exist yet (🐔 & 🥚), so a Kafka rule that references an application's security group breaks the provisioning.
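The chicken-and-egg problem is a dependency cycle between stacks. A minimal sketch with Python's graphlib (3.9+), assuming the consumer app reads the Kafka stack's outputs (its security groups) and, in the first layout, the Kafka stack reads the app's security group; the stack names are illustrative:

```python
from graphlib import CycleError, TopologicalSorter  # Python 3.9+

# stack -> stacks whose outputs it reads (so they must be applied first)
rule_in_kafka_stack = {
    "vpc": set(),
    "kafka": {"vpc", "consumerapp"},   # Kafka's rule references the app's security group
    "consumerapp": {"vpc", "kafka"},   # the app reads Kafka's outputs
}

rule_in_app_stack = {
    "vpc": set(),
    "kafka": {"vpc"},                  # Kafka no longer knows about its clients
    "consumerapp": {"vpc", "kafka"},   # the app also owns its Kafka ingress rule
}


def apply_order(graph):
    """Return a valid apply order, or raise CycleError when none exists."""
    return list(TopologicalSorter(graph).static_order())


print(apply_order(rule_in_app_stack))  # ['vpc', 'kafka', 'consumerapp']
try:
    apply_order(rule_in_kafka_stack)
except CycleError as err:
    print("chicken & egg:", err.args[1])
```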

Best practices - You trust me and vice versa

terragrunt/gumgum-ai/virginia/prod/prism-kafka-cluster/kafka

.../prod/ops-talk/consumerapp

resource "aws_security_group_rule" "kafka_tcp_9092_pritunl" {

for_each = local.kafka_sgs

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = each.value

source_security_group_id = local.pritunl_sg

description = "Access Kafka cluster from VPN"

}

resource "aws_security_group_rule" "kafka_tcp_9092_monitoring" {

for_each = local.kafka_sgs

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = each.value

source_security_group_id = local.monitoring_sg

description = "Access Kafka cluster from Monitoring"

}

resource "aws_security_group_rule" "kafka_tcp_9092_some_client_app" {

for_each = local.kafka_sgs

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = each.value ### Still the Kafka security groups...

source_security_group_id = local.client_app ### ...but the rule is now declared in the client app's stack: the dependency is flipped

description = "Access Kafka cluster from Some client APP"

}

Best practices - Loops - count is 😈

locals {

source_sg = "sg-60f3be13"

kafka_sgs = toset([

"sg-078f01cdfc0c41c9a",

"sg-09e683e9740fc3d8c"

])

}

resource "aws_security_group_rule" "kafka_tcp_9092_newapp" {

count = length(local.kafka_sgs)

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = element(tolist(local.kafka_sgs), count.index)

source_security_group_id = local.source_sg

description = "Access Kafka cluster from ops-talk"

}

Vs

resource "aws_security_group_rule" "kafka_tcp_9092_newapp" {

for_each = local.kafka_sgs

type = "ingress"

from_port = 9092

to_port = 9092

protocol = "tcp"

security_group_id = each.value

source_security_group_id = local.source_sg

description = "Access Kafka cluster from ops-talk"

}
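Why count hurts: with count, each rule's address is a list index, so removing one security group shifts the others; with for_each, the address is the security group ID itself. A toy Python model of the resulting plan, assuming (for illustration) that tolist(toset(...)) yields the IDs in sorted order, whereas Terraform only promises an order that is consistent within a run; the toy treats a changed security_group_id as a replacement, since that argument forces a new aws_security_group_rule:

```python
from typing import Dict, Iterable, List, Tuple

ADDR = "aws_security_group_rule.kafka_tcp_9092_newapp"


def count_addresses(sgs: Iterable[str]) -> Dict[str, str]:
    # Assumption: the list order is the sorted order of the set.
    return {f"{ADDR}[{i}]": sg for i, sg in enumerate(sorted(sgs))}


def for_each_addresses(sgs: Iterable[str]) -> Dict[str, str]:
    return {f'{ADDR}["{sg}"]': sg for sg in sgs}


def diff(before: Dict[str, str], after: Dict[str, str]) -> Tuple[List[str], List[str], List[str]]:
    """(replaced, destroyed, created) addresses between two address -> SG maps."""
    replaced = sorted(a for a in before if a in after and before[a] != after[a])
    destroyed = sorted(a for a in before if a not in after)
    created = sorted(a for a in after if a not in before)
    return replaced, destroyed, created


before = {"sg-078f01cdfc0c41c9a", "sg-09e683e9740fc3d8c"}
after = before - {"sg-078f01cdfc0c41c9a"}  # drop one Kafka security group

print(diff(count_addresses(before), count_addresses(after)))        # one replaced + one destroyed
print(diff(for_each_addresses(before), for_each_addresses(after)))  # only the removed rule destroyed
```

Removing one element touches two rules with count but only one with for_each, and the replaced rule briefly disappears during apply.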

Demo

CHEATSHEET
