Paddle

2017/02/10 翁子皓 何憶琳

Outline

- Installation

- Quick start

- Run on GPU / Cluster

Installation

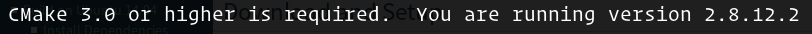

- cmake >= 3.0 (although the documentation says >= 2.8)

- python 2.7

- protobuf

- numpy

NOTE: I use Anaconda to manage Python and these packages

Installation

    git clone https://github.com/baidu/Paddle paddle
    cd paddle
    mkdir build && cd build
    cmake .. -DCMAKE_INSTALL_PREFIX=<path> [other options...]
    make -j && make install
    export PATH=<path>/bin:$PATH
    paddle  # run paddle; it will automatically install missing Python packages

Quick Start

- There's a quick start tutorial in the documentation

- Learn how to configure and run Paddle for training and predicting

Steps

- training / testing data

- define input (dataprovider.py)

- define model (trainer_config.py)

- define output

Input File

- Create a file that contains a list of our data filenames

- Paddle will loop through the list and read each file
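As a minimal sketch of how such a list file could be produced, the helper below collects the data files in a directory and writes one path per line. The function name `write_file_list`, the `.txt` suffix, and the directory layout are assumptions for illustration, not part of Paddle.

```python
import os

def write_file_list(data_dir, list_path, suffix=".txt"):
    """Write one data-file path per line to list_path and return the paths."""
    # Hypothetical layout: every file in data_dir ending in `suffix` is a data file.
    paths = sorted(
        os.path.join(data_dir, name)
        for name in os.listdir(data_dir)
        if name.endswith(suffix)
    )
    with open(list_path, "w") as f:
        for path in paths:
            f.write(path + "\n")
    return paths
```

The resulting file would then be the one passed as the training or testing list in the trainer configuration.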

dataprovider

- Define input type

- Read file and preprocess data
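The preprocessing step can be tried out on its own, without Paddle. The sketch below assumes each input line has the form `label:v1,v2,...` (an integer label, a colon, then comma-separated numbers). `parse_line` and its `dim` parameter are hypothetical names used only for this illustration.

```python
def parse_line(line, dim):
    """Split 'label:v1,v2,...' into an int label and a list of `dim` floats."""
    label_text, values_text = line.strip().split(":", 1)
    # A dense vector is a list of floats, so convert each field explicitly.
    values = [float(v) for v in values_text.split(",")]
    if len(values) != dim:
        raise ValueError("expected %d values, got %d" % (dim, len(values)))
    return int(label_text), values
```

Checking the vector length up front makes a malformed line fail with a clear message instead of surfacing later during training.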

dataprovider

    from paddle.trainer.PyDataProvider2 import *

    # initializer: define the input types and any additional metadata
    # kwargs: passed in from trainer_config.py
    def initializer(settings, **kwargs):
        settings.input_types = {
            'label': integer_value(10),
            'word': dense_vector(100),
        }

    # process: yield one sample at a time
    # file_name: one entry from train_list
    @provider(init_hook=initializer, cache=CacheType.CACHE_PASS_IN_MEM)
    def process(settings, file_name):
        with open(file_name, 'r') as f:
            for line in f:
                label, word = line.split(':')
                label = int(label)
                # dense_vector expects a list of floats, not strings
                word = [float(x) for x in word.split(',')]
                yield {'label': label, 'word': word}

trainer_config

- Configure parameters

- Define Layers
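To make "define layers" more concrete, here is a rough pure-Python sketch of what a fully connected layer with softmax activation, followed by picking the most probable class, computes. It does not use Paddle; the function names and the tiny weight matrix in the usage note are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fully_connected(x, weights, bias):
    """Compute one score per output unit: dot(weights[j], x) + bias[j]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def predict(x, weights, bias):
    """Return (index of the most probable class, class probabilities)."""
    probs = softmax(fully_connected(x, weights, bias))
    return probs.index(max(probs)), probs
```

For example, `predict([2.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])` scores class 0 higher than class 1, so it returns class index 0 together with the two probabilities.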

trainer_config

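    from paddle.trainer_config_helpers import *

    # trn, tst, and process are assumed to be defined earlier in the config
    define_py_data_sources2(
        train_list=trn,
        test_list=tst,
        module="dataprovider",
        obj=process)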

    settings(
        batch_size=batch_size,
        learning_rate=2e-3,
        learning_method=AdamOptimizer(),
        regularization=L2Regularization(8e-4),
        gradient_clipping_threshold=25)
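As a rough illustration of what the regularization and gradient clipping settings control, the sketch below applies both in a single plain gradient-descent step. It is not Paddle's implementation: the config above uses Adam, and the elementwise clipping and the L2 penalty of the form 0.5 * rate * w^2 are assumptions made to keep the example short.

```python
def clip(grad, threshold):
    """Clamp every gradient component to [-threshold, threshold]."""
    return [max(-threshold, min(threshold, g)) for g in grad]

def sgd_step(weights, grad, learning_rate=2e-3, l2_rate=8e-4, threshold=25.0):
    """One plain gradient-descent update with clipping and L2 decay."""
    # Assumption: clip the data gradient first, then add the L2 term.
    clipped = clip(grad, threshold)
    # The gradient of 0.5 * l2_rate * w^2 with respect to w is l2_rate * w.
    return [w - learning_rate * (g + l2_rate * w)
            for w, g in zip(weights, clipped)]
```

Clipping bounds the size of any single update, while the L2 term pulls each weight slightly toward zero on every step.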

trainer_config

    data = data_layer(name="word", size=len(word_dict))

    # Define a fully connected layer with softmax activation
    # (multiclass logistic regression).
    output = fc_layer(input=data, size=2, act=SoftmaxActivation())

    if not is_predict:
        # For training, we need the label and a cost.
        # The label layer holds the category id of each example;
        # its size is the number of labels.
        label = data_layer(name="label", size=2)

        # Define the cross-entropy classification loss and error.
        cls = classification_cost(input=output, label=label)
        outputs(cls)
    else:
        # For prediction, no label is needed. We output the
        # classification result and the class probabilities.
        maxid = maxid_layer(output)
        outputs([maxid, output])

Run Paddle

    cfg=trainer_config.py
    paddle train \
      --config=$cfg \
      --save_dir=./output \
      --trainer_count=4 \
      --log_period=1000 \
      --dot_period=10 \
      --num_passes=10 \
      --use_gpu=false \
      --show_parameter_stats_period=3000 \
      2>&1 | tee 'train.log'
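When the same command has to be launched repeatedly with different settings, it can help to assemble the argument list in a script. The helper below is a minimal sketch; `build_train_command` is a hypothetical name, and it only formats flags as `--name=value` without checking that Paddle accepts them.

```python
def build_train_command(config, **flags):
    """Return the argument list for `paddle train` with the given flags."""
    args = ["paddle", "train", "--config=%s" % config]
    for name in sorted(flags):  # sorted for a stable, reproducible order
        value = flags[name]
        if isinstance(value, bool):
            # The command line above spells booleans as true/false.
            value = "true" if value else "false"
        args.append("--%s=%s" % (name, value))
    return args
```

The returned list could be passed to `subprocess.call` to start training from Python.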

CLI arguments

- --config: path to the network configuration file.

- --save_dir: directory where the model is saved.

- --log_period: number of batches between log messages.

- --num_passes: number of training passes. One pass goes over the whole training dataset once.

- --config_args: other configuration arguments.

- --init_model_path: path to the initial model parameters.
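The relationship between batch size, passes, and the logging period is simple arithmetic, sketched below. The sample count used in the usage note is a made-up number, and the sketch assumes a final partial batch still counts as a batch.

```python
def batches_per_pass(num_samples, batch_size):
    """Number of batches needed to see every sample once (ceiling division)."""
    return (num_samples + batch_size - 1) // batch_size

def total_batches(num_samples, batch_size, num_passes):
    """Number of batches processed over the whole training run."""
    return batches_per_pass(num_samples, batch_size) * num_passes

def log_messages_per_pass(num_samples, batch_size, log_period):
    """How many times a log message is due when logging every log_period batches."""
    return batches_per_pass(num_samples, batch_size) // log_period
```

For instance, a hypothetical dataset of 25000 samples with a batch size of 128 gives 196 batches per pass, so a log period of 1000 would produce no periodic log message within a single pass.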

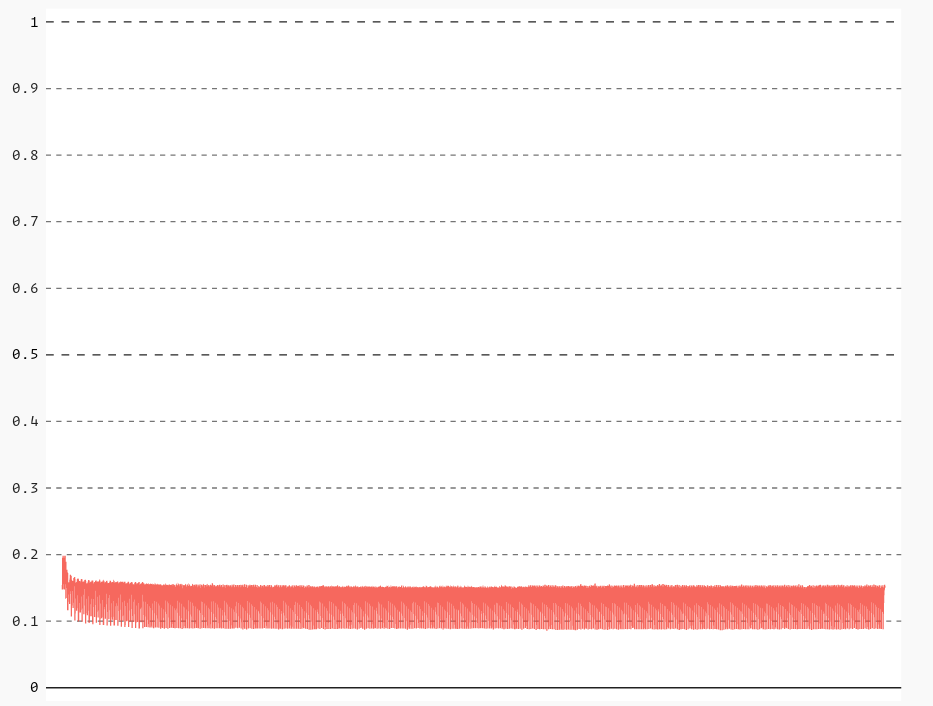

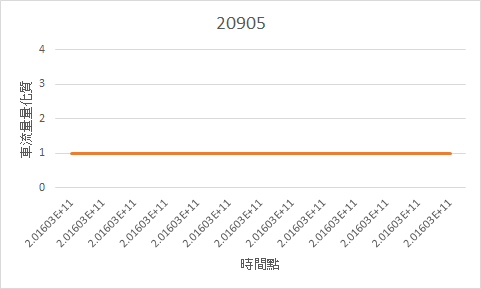

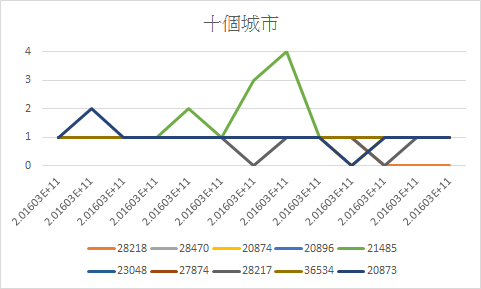

Result


GPU / Cluster training

To train on a GPU, compile Paddle with the GPU option:

    cmake .. -DWITH_GPU=ON

Then run with the GPU option:

    paddle train --use_gpu=true

GPU / Cluster training

- paddle/scripts/cluster_train

- conf.py

- paddle.py

- run.sh

TODO

- Study models (RNN, LSTM, and the like)

- Cluster Training

- Scalability

- PCA