That Container Thing

High-Performance Computing w/ Docker & Kubernetes

Garrett Mills

Follow along: https://slides.com/glmdev/k8s/live

Wait, who's the kid?

Hi, there.

- Software Developer

- Linux Proselytizer

- KU CS Undergrad

- CRMDA Lab Tech

glmdev@ku.edu

So what's the deal?

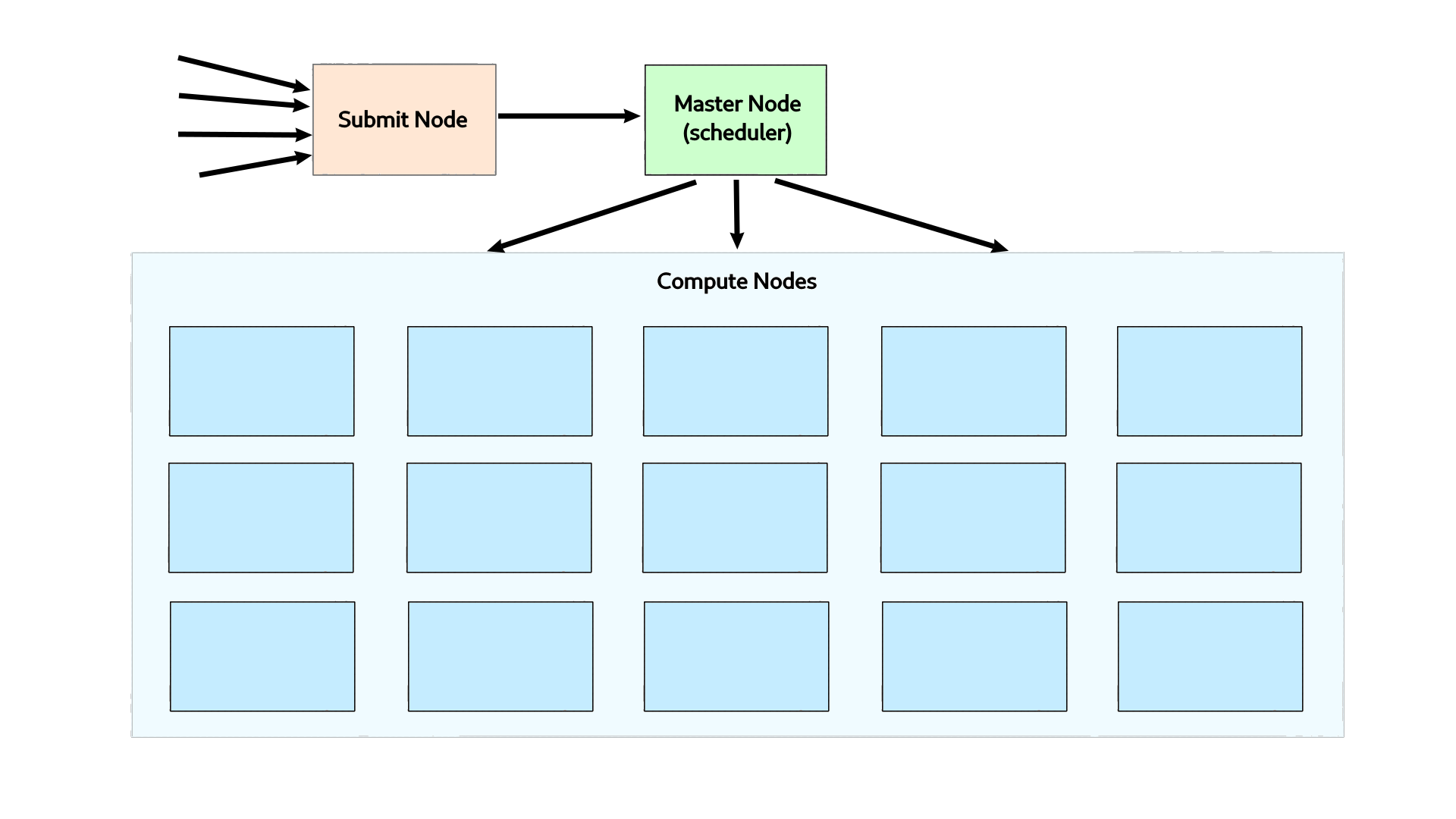

Traditional HPC

- Bare-Metal Nodes

- Scheduler

- Complex Environments

- Kinda Expensive

Pro: it's the standard

Con: it's expensive

Kubernetes

- Containers

- Scheduler

- Portable Environments

- Less Expensive*

Pro: cheaper & portable

Con: learning curve

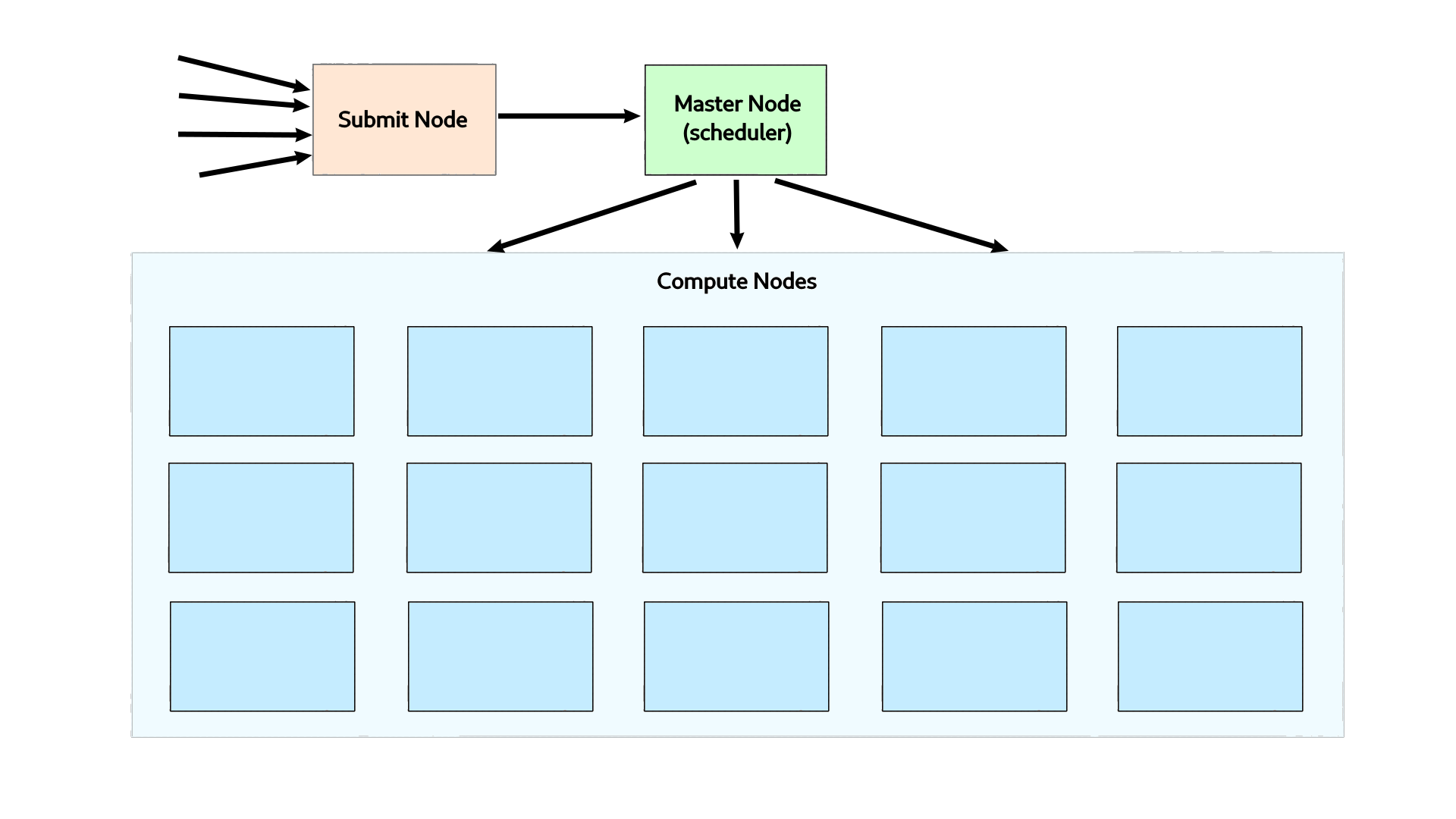

Traditional HPC

Jobs run on bare-metal.

Environment is static.

No guarantee that each node is the same.

Also, it's kinda pricey...

24 Nodes

$2080/mo

($125k/5yr)
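A quick sanity check of those numbers, as a minimal sketch. The only inputs are the figures on the slide; the per-node breakdown is derived here and is not a quoted price.

```python
# Figures taken from the slide: 24 nodes at $2080/month.
NODES = 24
MONTHLY_COST_USD = 2080
MONTHS = 5 * 12  # five years

# Total spend over five years (the slide rounds this to $125k).
five_year_total = MONTHLY_COST_USD * MONTHS

# Derived figure, not from the slide: cost per node per month.
per_node_month = MONTHLY_COST_USD / NODES

print(f"5-year total: ${five_year_total:,}")
print(f"Per node per month: ${per_node_month:.2f}")
```

You pay this whether the nodes are busy or idle, which is what the per-job pricing later in the talk is compared against.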

(koo-ber-net-ees)

I'm going to mispronounce this.

So, what is it?

Production-grade container orchestration.

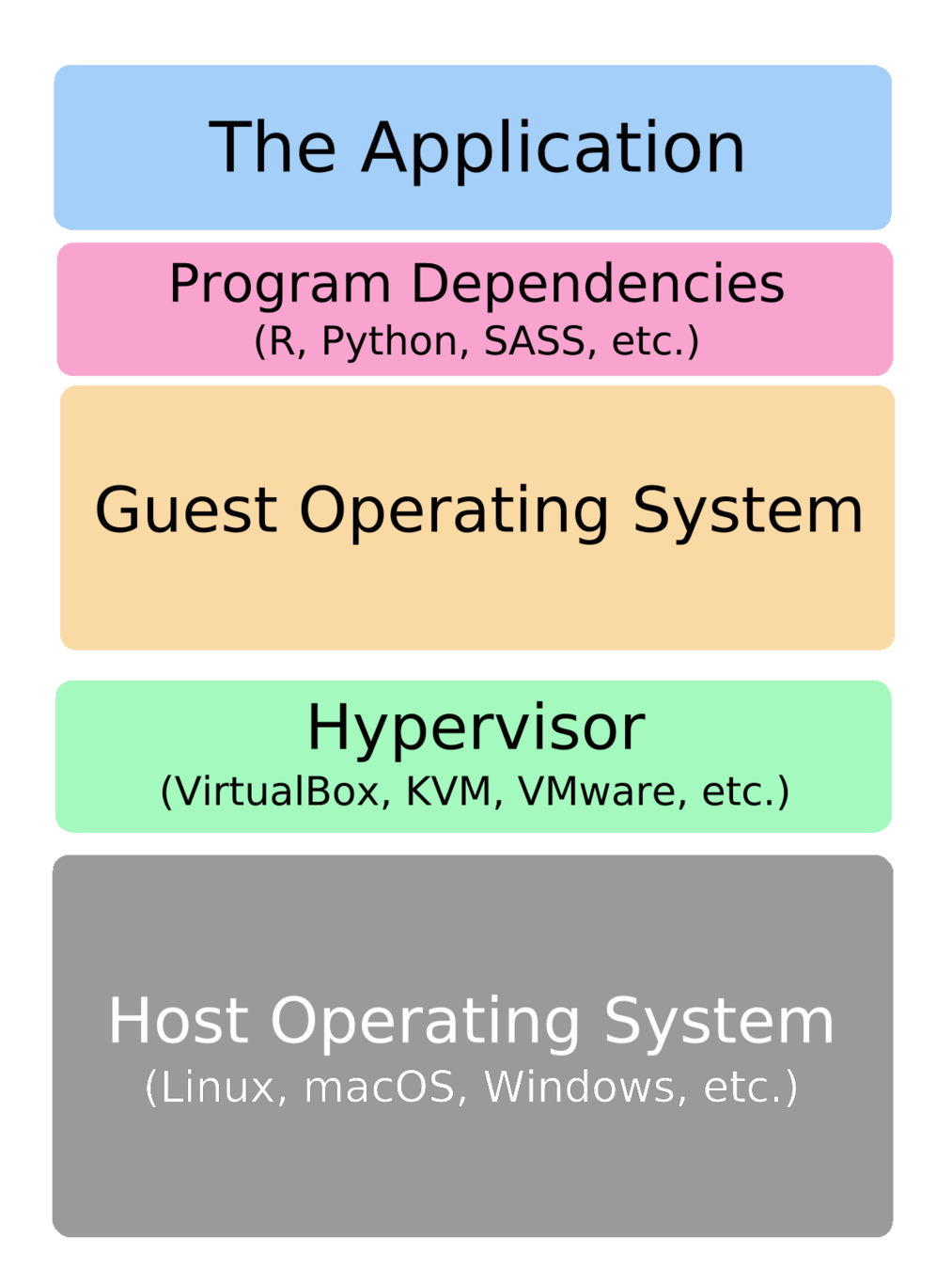

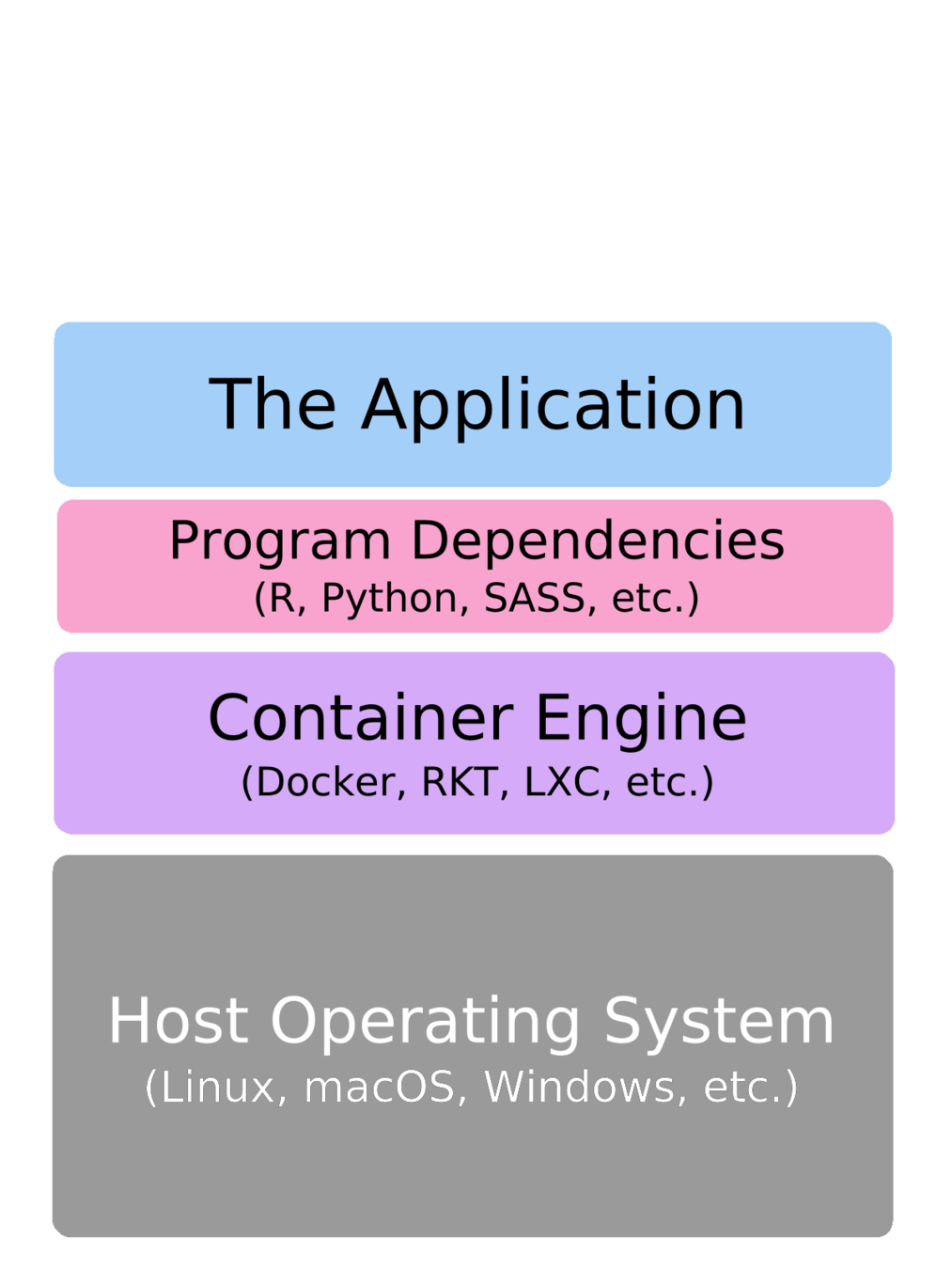

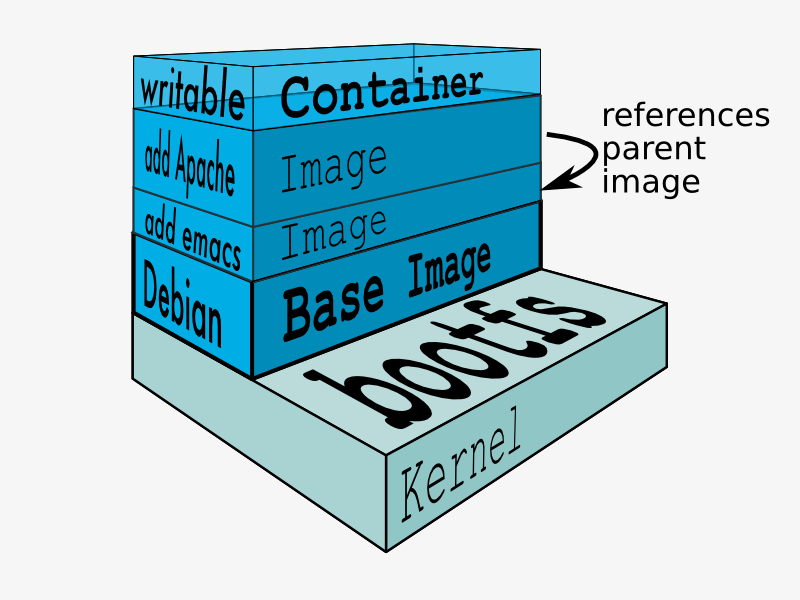

container (n.) - a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another

Source: Docker Resources, https://www.docker.com/resources/what-container

Fedora

Remember that environment file from earlier?

```bash
module purge
module load legacy
module load emacs/26.1
module use /panfs/pfs.local/work/crmda/tools/modules
module load Rstats/3.5.1
module load mplus
module load SAS
module load anaconda
```

This strategy only works if the cluster administrators have already installed these modules on every node.

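```dockerfile
# Start from the kube-openmpi base image
FROM everpeace/kube-openmpi:2.1.2-16.04-0.7.0

# A shell, pip, and the tools needed to add third-party apt repositories
RUN apt-get update
RUN apt-get install -y fish python3-pip apt-transport-https software-properties-common python-software-properties

# Add the CRAN signing key and repository, then install R
RUN apt-key adv --keyserver keyserver.ubuntu.com --recv-keys E298A3A825C0D65DFD57CBB651716619E084DAB9
RUN add-apt-repository -y 'deb [arch=amd64,i386] https://cran.rstudio.com/bin/linux/ubuntu xenial/'
RUN apt-get update
RUN apt-get install -y r-base

# Run the R setup script Rinst.R (not shown here)
COPY ./Rinst.R /tmp
RUN R --vanilla -f /tmp/Rinst.R

# Python libraries for MPI jobs
RUN pip3 install numpy mpi4py

# Default working directory inside the container
WORKDIR /clusterfs
```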

Ew.

Containers are "built" into images that can be used instantly.

This is awesome.

- HPC Environment is abstracted away

- Containers are easy to customize

- Containers are predictable

- Containers are reproducible

So back to Kubernetes.

"container orchestration" : Kubernetes :: "scheduler" : Traditional HPC

Remember this? It still applies (mostly).

Node > Pod > Container
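One way to picture that nesting is as plain data, sketched below. This is a toy model and not the Kubernetes API: the class names, `worker-1`, `mpi-job-0`, and `mpi` are all hypothetical. The only things taken from the talk are the hierarchy itself and the image name from the Dockerfile's `FROM` line.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Container:
    """A running instance of an image."""
    name: str
    image: str


@dataclass
class Pod:
    """The unit Kubernetes schedules; holds one or more containers."""
    name: str
    containers: List[Container] = field(default_factory=list)


@dataclass
class Node:
    """A machine (physical or virtual) that runs pods."""
    name: str
    pods: List[Pod] = field(default_factory=list)


# Hypothetical example: one node running one pod with one container.
worker = Node(
    name="worker-1",
    pods=[
        Pod(
            name="mpi-job-0",
            containers=[
                Container(
                    name="mpi",
                    image="everpeace/kube-openmpi:2.1.2-16.04-0.7.0",
                )
            ],
        )
    ],
)

# Walk the hierarchy top-down: Node > Pod > Container.
for pod in worker.pods:
    for container in pod.containers:
        print(f"{worker.name} > {pod.name} > {container.name} ({container.image})")
```

The scheduler's job is deciding which node each pod lands on, the same role the batch scheduler plays for jobs on a traditional cluster.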

Pro: Containers are awesome.

Pro: Kubernetes can be cloud-hosted.

(Pricing can be assessed per-job.)

For example:

5 nodes x 4 cores/node

20 cores total

10 hour runtime

$1.36

Source: Google Cloud, https://cloud.google.com/products/calculator/
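The arithmetic behind that example can be sketched as follows. The per-core-hour rate is back-calculated from the $1.36 quote and is not a published Google Cloud price; the break-even count reuses the $2080/mo traditional-cluster figure from earlier in the talk and assumes every job looks like this one.

```python
# Job shape and quoted price from the slide.
NODES = 5
CORES_PER_NODE = 4
RUNTIME_HOURS = 10
QUOTED_COST_USD = 1.36

total_cores = NODES * CORES_PER_NODE       # 20 cores
core_hours = total_cores * RUNTIME_HOURS   # 200 core-hours

# Assumption: implied rate, derived from the quote rather than a price list.
implied_rate = QUOTED_COST_USD / core_hours

# Traditional cluster cost from earlier in the talk.
TRADITIONAL_MONTHLY_USD = 2080

# How many jobs of this size per month would cost as much as the cluster?
break_even_jobs = TRADITIONAL_MONTHLY_USD / QUOTED_COST_USD

print(f"Core-hours: {core_hours}")
print(f"Implied rate: ${implied_rate:.4f} per core-hour")
print(f"Break-even: about {int(break_even_jobs)} jobs per month")
```

This ignores storage, network, and any managed-cluster fees, so treat it as a rough comparison rather than a budget.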