Working of Systolic array

Convolution

- A systolic array, at any instant, performs a single matrix multiplication.

- A single GEMM operation involves weight load, feeding of input values to the array, optionally reading existing psum and feeding it to the array, and finally collecting the output.

- A RxS convolution is broken down into multiple 1x1 convolutions.

- 7-D loop(N, H, W, C, M, R, S)

- R, S - time multiplexed

- N, H, W - time axis

- C - row axis, time multiplexed

- M - column axis, time multiplexed

| 1 | 2 | 3 | 4 | 5 |

| 6 | 7 | 8 | 9 | 10 |

| 11 | 12 | 13 | 14 | 15 |

| 16 | 17 | 18 | 19 | 20 |

| 21 | 22 | 23 | 24 | 25 |

| 1 | 2 | 3 | 4 | 5 |

| 6 | 7 | 8 | 9 | 10 |

| 11 | 12 | 13 | 14 | 15 |

| 16 | 17 | 18 | 19 | 20 |

| 21 | 22 | 23 | 24 | 25 |

| 1 | 2 | 3 | 4 | 5 |

| 6 | 7 | 8 | 9 | 10 |

| 11 | 12 | 13 | 14 | 15 |

| 16 | 17 | 18 | 19 | 20 |

| 21 | 22 | 23 | 24 | 25 |

| 1 | 2 | 3 | 4 | 5 |

| 6 | 7 | 8 | 9 | 10 |

| 11 | 12 | 13 | 14 | 15 |

| 16 | 17 | 18 | 19 | 20 |

| 21 | 22 | 23 | 24 | 25 |

| a1 | b1 | c1 |

| d1 | e1 | f1 |

| g1 | h1 | i1 |

| a1 | a2 | a3 | a4 |

| a1 | a2 | a3 | a4 |

| a1 | a2 | a3 | a4 |

| a1 | a2 | a3 | a4 |

| a1 | b1 | c1 |

| d1 | e1 | f1 |

| g1 | h1 | i1 |

| a1 | b1 | c1 |

| d1 | e1 | f1 |

| g1 | h1 | i1 |

| a1 | b1 | c1 |

| d1 | e1 | f1 |

| g1 | h1 | i1 |

| a2 | b2 | c2 |

| d2 | e2 | f2 |

| g2 | h2 | i2 |

| a2 | b2 | c2 |

| d2 | e2 | f2 |

| g2 | h2 | i2 |

| a2 | b2 | c2 |

| d2 | e2 | f2 |

| g2 | h2 | i2 |

| a2 | b2 | c2 |

| d2 | e2 | f2 |

| g2 | h2 | i2 |

| 13 | 12 | 11 | 8 | 7 | 6 | 3 | 2 | 1 |

| 13 | 12 | 11 | 8 | 7 | 6 | 3 | 2 | 1 |

| 13 | 12 | 11 | 8 | 7 | 6 | 3 | 2 | 1 |

| 13 | 12 | 11 | 8 | 7 | 6 | 3 | 2 | 1 |

| l1 | m1 | n1 |

| p1 | q1 | r1 |

| x1 | y1 | z1 |

| l2 | m2 | n2 |

| p2 | q2 | r2 |

| x2 | y2 | z2 |

| l3 | m3 | n3 |

| p3 | q3 | r3 |

| x3 | y3 | z3 |

| l4 | m4 | n4 |

| p4 | q4 | r4 |

| x4 | y4 | z4 |

| l1 | m1 | n1 | p1 | q1 | r1 | x1 | y1 | z1 |

|---|

| l2 | m2 | n2 | p2 | q2 | r2 | x2 | y2 | z2 |

|---|

| l3 | m3 | n3 | p3 | q3 | r3 | x3 | y3 | z3 |

|---|

| l4 | m4 | n4 | p4 | q4 | r4 | x4 | y4 | z4 |

|---|

Ifmap

Ofmap

Weights

Feeding inputs to array

Collecting outputs from array

Systolic

array

ReLU

typedef struct { // 120 Total

ALU_Opcode alu_opcode; // 2

SRAM_index#(a) input_address; // 15

SRAM_index#(a) output_address; // 15

Dim1 output_height; // OH' // 8

Dim1 output_width; // OW' // 8

Dim2 window_height; // R // 4

Dim2 window_width; // S // 4

Dim1 mem_stride_OW; // S_OW // 8

Dim1 mem_stride_R; // S_R // 8

Dim1 mem_stride_S; // S_S // 8

Dim1 num_active; //Number of filters(M) // 8

Bool use_immediate; // 1

Dim1 immediate_value; // 8

Pad_bits#(b) padding; // 23

} ALU_params#(numeric type a, numeric type b) deriving(Bits, Eq, FShow);{

Max,

addr_1,

addr_1,

OH,

OW,

1,

1,

1,//doesnt matter

1,

1,

32,

True,

0,

<>

}MaxPool

typedef struct { // 120 Total

ALU_Opcode alu_opcode; // 2

SRAM_index#(a) input_address; // 15

SRAM_index#(a) output_address; // 15

Dim1 output_height; // OH' // 8

Dim1 output_width; // OW' // 8

Dim2 window_height; // R // 4

Dim2 window_width; // S // 4

Dim1 mem_stride_OW; // S_OW // 8

Dim1 mem_stride_R; // S_R // 8

Dim1 mem_stride_S; // S_S // 8

Dim1 num_active; //Number of filters(M) // 8

Bool use_immediate; // 1

Dim1 immediate_value; // 8

Pad_bits#(b) padding; // 23

} ALU_params#(numeric type a, numeric type b) deriving(Bits, Eq, FShow);{

Max,

addr_1,

addr_2,

OH,

OW,

R,

S,

OW-S+1,

Sy,

Sx*OW,

32,

False,

0,

<>

}BatchNorm

typedef struct { // 120 Total

ALU_Opcode alu_opcode; // 2

SRAM_index#(a) input_address; // 15

SRAM_index#(a) output_address; // 15

Dim1 output_height; // OH' // 8

Dim1 output_width; // OW' // 8

Dim2 window_height; // R // 4

Dim2 window_width; // S // 4

Dim1 mem_stride_OW; // S_OW // 8

Dim1 mem_stride_R; // S_R // 8

Dim1 mem_stride_S; // S_S // 8

Dim1 num_active; //Number of filters(M) // 8

Bool use_immediate; // 1

Dim1 immediate_value; // 8

Pad_bits#(b) padding; // 23

} ALU_params#(numeric type a, numeric type b) deriving(Bits, Eq, FShow);{

Add,

addr_1,

addr_2,

OH,

OW,

OH,

OW,

1,

1,

1, //doesn't matter

32,

False,

0,

<>

}Example 1

- Conv2D

- input: 8 x 8 x 64

- filters: 32 x 3 x 3 x 64

- output: 8 x 8 x 32

- ReLU

- BatchNorm

- Systolic array of size 32 x 32

- buffer sizes sufficient to hold all tensors

Example 1

wgt, 0000

(0,0), 1000

inp, 0001

pop prev, push prev, pop next, push next

(0,1), 0000

(0,2), 0000

(2,2), 0000

(0,0), 1000

relu, 1000

out, 1000

inp, 0001

(0,1), 0000

(0,2), 0000

(2,2), 0001

BN, 0001

Example 1 - Small input buffer

wgt, 0000

(0,0), 1000

inp, 0001

pop prev, push prev, pop next, push next

(0,1), 0000

(0,2), 0000

(2,2), 0100

(0,0), 1000

relu, 1000

out, 1000

inp, 0011

(0,1), 0000

(0,2), 0000

(2,2), 0001

BN, 0001

Example 2

- Conv2D

- input: 8 x 8 x 32

- filters: 64 x 3 x 3 x 32

- output: 8 x 8 x 64

- ReLU

- BatchNorm

- Systolic array of size 32 x 32

- buffer sizes sufficient to hold all tensors

Example 2

wgt, 0000

(0,0), 1000

relu, 1000

out, 1000

inp, 0001

pop prev, push prev,

pop next, push next

(0,1), 0000

(0,2), 0000

(2,2), 0001

First 32 filters

BN, 0001

(0,0), 0000

(0,1), 0000

(0,2), 0000

(2,2), 0001

Last 32 filters

relu, 1000

BN, 0001

out, 1000

Example 2 - Small weight buffer

wgt, 0000

(0,0), 1000

relu, 1000

out, 1000

inp, 0001

pop prev, push prev,

pop next, push next

(0,1), 0000

(0,2), 0000

(2,2), 0101

First 32 filters

BN, 0001

(0,0), 1000

(0,1), 0000

(0,2), 0000

(2,2), 0001

Last 32 filters

wgt, 0011

relu, 1000

BN, 0001

out, 1000

Example 2

wgt, 0000

(0,0), 1000

relu, 1000

out, 1000

inp, 0001

pop prev, push prev,

pop next, push next

(0,1), 0000

(0,2), 0000

(2,2), 0101

First 32 filters

BN, 0001

(0,0), 1000

(0,1), 0000

(0,2), 0000

(2,2), 0001

Last 32 filters

wgt, 0011

relu, 1000

BN, 0001

out, 1000

Example 2 - Small output buffer

wgt, 0000

(0,0), 1000

relu, 1000

out, 1000

inp, 0001

pop prev, push prev,

pop next, push next

(0,1), 0000

(0,2), 0000

First 32 filters

BN, 0101

(0,1), 0000

(0,2), 0000

(2,2), 0001

Last 32 filters

relu, 1000

BN, 0001

out, 1000

wgt, 0011

(2,2), 0101

(0,0), 1010

Example 2 - Small output buffer

wgt, 0000

(0,0), 1000

relu, 1001

out, 1100

inp, 0001

pop prev, push prev,

pop next, push next

(0,1), 0000

(0,2), 0000

First 32 filters

(0,1), 0000

(0,2), 0000

(2,2), 0001

Last 32 filters

relu, 1000

out, 1000

nop, 0110

wgt, 0011

(2,2), 0101

(0,0), 1010

Mapper Algorithm

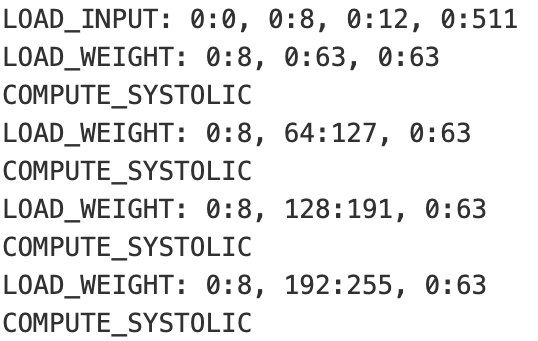

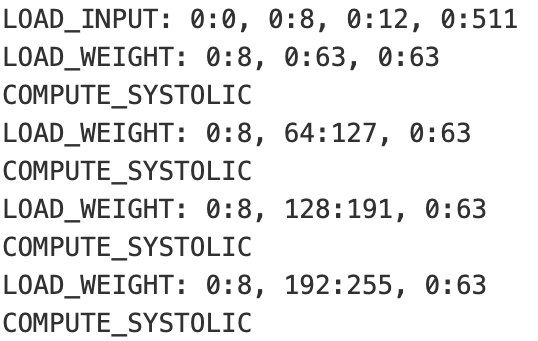

GEMM on systolic array

Consists of three computational axes - row, column, time

- row: channels (C)

- column: filters (M)

- time: N * H * W

- space-time: R, S

- output buffer: NHW, M

- input buffer: NHW, C

- weight buffer: C, M, R, S

Systolic array

- 32 x 32 array

- 8-bit input, weight

- 16-bit output

- 2 KB input buffer

- 8 KB weight buffer

- 4 KB output buffer

Conv layer

- input: 8 x 8 x 64 - 4 KB

- wgt: 2 x 2 x 64 x 64 - 16 KB

- output: 8 x 8 x 64 - 8 KB

- C - folds : 64 / 32 = 2

- M - folds : 64 / 32 = 2

- size of input fold = 8 x 8 x 32 = 2 KB

- size of out fold = 8 x 8 x 32 = 4 KB

Computation folds

| F : 0 - 31 | F : 0- 31 | F: 32- 64 | F: 32 - 64 | |

| C: 0 - 31 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 0 - 31 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

| C: 32 - 63 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 32 - 63 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

Computation folds

| F : 0 - 31 | F : 0- 31 | F: 32- 64 | F: 32 - 64 | |

| C: 0 - 31 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 0 - 31 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

| C: 32 - 63 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 32 - 63 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

outputs: same as previous fold

inputs: same as previous fold

weights: 32 x 32 new weights

Computation folds

| F : 0 - 31 | F : 0- 31 | F: 32- 64 | F: 32 - 64 | |

| C: 0 - 31 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 0 - 31 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

| C: 32 - 63 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 32 - 63 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

outputs: different from previous fold

inputs: same as previous fold

weights: 32 x 32 new weights

Computation folds

| F : 0 - 31 | F : 0- 31 | F: 32- 64 | F: 32 - 64 | |

| C: 0 - 31 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 0 - 31 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

| C: 32 - 63 | (0, 0) | (0, 1) | (0, 0) | (0, 1) |

| C: 32 - 63 | (1, 0) | (1, 1) | (1, 0) | (1, 1) |

outputs: same as previous fold

inputs: different from previous fold

weights: 32 x 32 new weights

Systolic array

- 32 x 32 array

- 8-bit input, weight

- 16-bit output

- 2 KB input buffer

- 8 KB weight buffer

-

4 KB2 KB output buffer

Conv layer

- input: 8 x 8 x 64 - 4 KB

- wgt: 2 x 2 x 64 x 64 - 16 KB

- output: 8 x 8 x 64 - 8 KB

- C - folds : 64 / 32 = 2

- M - folds : 64 / 32 = 2

- size of input fold = 8 x 8 x 32 = 2 KB

- size of out fold = 8 x 8 x 32 = 4 KB

Computation folds

| F : 0 - 31 | F : 0- 31 | F : 0- 31 | F : 0- 31 | F: 32- 64 | F: 32- 64 | F: 32 - 64 | F: 32- 64 | |

| C: 0 - 31 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 0 - 31 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

| C: 32 -63 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 32 -63 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

Computation folds

| F : 0 - 31 | F : 0- 31 | F : 0- 31 | F : 0- 31 | F: 32- 64 | F: 32- 64 | F: 32 - 64 | F: 32- 64 | |

| C: 0 - 31 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 0 - 31 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

| C: 32 -63 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 32 -63 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

outputs: same as previous fold

inputs: same as previous fold

weights: 32 x 32 new weights

Computation folds

| F : 0 - 31 | F : 0- 31 | F : 0- 31 | F : 0- 31 | F: 32- 64 | F: 32- 64 | F: 32 - 64 | F: 32- 64 | |

| C: 0 - 31 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 0 - 31 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

| C: 32 -63 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 32 -63 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

outputs: store prev output, then write

inputs: same as previous fold

weights: same as previous fold

Computation folds

| F : 0 - 31 | F : 0- 31 | F : 0- 31 | F : 0- 31 | F: 32- 64 | F: 32- 64 | F: 32 - 64 | F: 32- 64 | |

| C: 0 - 31 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 0 - 31 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

| C: 32 -63 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 32 -63 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

outputs: store prev output, then write

inputs: same as previous fold

weights: 32 x 32 new weights

Computation folds

| F : 0 - 31 | F : 0- 31 | F : 0- 31 | F : 0- 31 | F: 32- 64 | F: 32- 64 | F: 32 - 64 | F: 32- 64 | |

| C: 0 - 31 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 0 - 31 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

| C: 32 -63 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 | (0, 0), 0-3 | (0,0), 4-7 | (0, 1), 0-3 | (0,1), 4-7 |

| C: 32 -63 | (1, 0), 0-3 | (1,0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 | (1, 0), 0-3 | (1, 0), 4-7 | (1, 1), 0-3 | (1,1), 4-7 |

outputs: same as previous fold

inputs: different from previous fold

weights: 32 x 32 new weights

Loop Transformations

Weight Stationary

for n = 1 to N

for m = 1 to M

for e = 1 to E

for f = 1 to F

for c = 1 to C

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]

_Weight Stationary

for n = 1 to N

for m = 1 to M_folds

for mm = 1 to SYS_W

for e = 1 to E

for f = 1 to F

for c = 1 to C_folds

for cc = 1 to SYS_H

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Loop Tiling

for n = 1 to N

for m = 1 to M

for e = 1 to E

for f = 1 to F

for c = 1 to C

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]

_Weight Stationary

for m = 1 to M_folds

for c = 1 to C_folds

for n = 1 to N

for e = 1 to E

for f = 1 to F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Loop Interchange

for n = 1 to N

for m = 1 to M_folds

for mm = 1 to SYS_W

for e = 1 to E

for f = 1 to F

for c = 1 to C_folds

for cc = 1 to SYS_H

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Weight Stationary

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to N*E

for f = 1 to F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]

_Loop Fusion

for m = 1 to M_folds

for c = 1 to C_folds

for n = 1 to N

for e = 1 to E

for f = 1 to F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Weight Stationary

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to T_folds

for nene = 1 to T_size

for f = 1 to F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Loop Tiling

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to N*E

for f = 1 to F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]

_Weight Stationary

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to T_folds

for nenef = 1 to T_size*F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]

_Loop Fusion

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to T_folds

for nene = 1 to T_size

for f = 1 to F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Weight Stationary

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to T_folds

for r = 1 to R

for s = 1 to S

for nenef = 1 to T_size*F

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]

_Loop Interchange

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to T_folds

for nenef = 1 to T_size*F

for r = 1 to R

for s = 1 to S

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]

_for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to T_folds

for r = 1 to R

for s = 1 to S

for nenef = 1 to T_size*F

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]for n = 1 to N

for m = 1 to M

for e = 1 to E

for f = 1 to F

for c = 1 to C

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Weight Stationary

Final loop nest

Initial loop nest

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to T_folds

for r = 1 to R

for s = 1 to S

for nenef = 1 to T_size*F

for mm = 1 to SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]for n = 1 to N

for m = 1 to M

for e = 1 to E

for f = 1 to F

for c = 1 to C

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Weight Stationary

Innermost: One systolic fold

NHWC - Input

RSCM - Weight

NEFM - Output

for m = 1 to M_folds

for c = 1 to C_folds

for ne = 1 to NE_folds

for mm = 1 to SYS_W

for nenef = 1 to F*NE_Size # F*NE_Size <= SYS_H

for ccrs = 1 to C_Size*R*S # buffer constraint

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]for n = 1 to N

for m = 1 to M

for e = 1 to E

for f = 1 to F

for c = 1 to C

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Output Stationary

Innermost: One systolic fold

CNHW - Input

RSCM - Weight

NEFM - Output

for ne = 1 to NE_folds

for c = 1 to C_folds

for m = 1 to M_folds

for rsmm = 1 to M_Size*R*S # constrained by buffer size

for nenef = 1 to F*NE_Size # F*NE_Size <= SYS_W

for cc = 1 to SYS_H

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]for n = 1 to N

for m = 1 to M

for e = 1 to E

for f = 1 to F

for c = 1 to C

for r = 1 to R

for s = 1 to S

output[n][m][e][f] += input[n][e][f][c] * weight[r][s][c][m]Input Stationary

Innermost: One systolic fold

NHWC - Input

RSMC - Weight

NEFM - Output

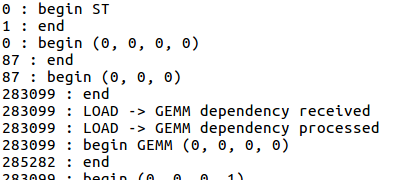

Simulator

- Why simulator?

- Functional modelling - verify if correct values are being generated at each time step

- Performance modelling - compute rough execution time for a given instruction trace

- bottleneck analysis of hardware

- cost-model for compiler optimizer

SCALE-Sim

- cycle-accurate systolic-array simulator

- can model computation cycles accurately

- Drawbacks

- models only compute time, no memory access time considered

- layers are simulated sequentially, no latency hiding between them

- SRAM size has zero impact on latency

- ALU operations not supported

ShaktiMaan simulator

Model

H/W Config

Mapper

ISA trace

array size, buffer capacity

Functional Simulation

ISA trace

Simulator

Operand values at time step t

- Values computed in the last instruction

- Contents of the weight buffer

- Instructions yet to be resolved

- \( \ldots\)

Performance Simulation

ISA trace

Simulator

Overall latency

Layerwise results

Trace Log

HW Utilisation

Simulator module

- Memory access: DRAMSim2, a cycle-accurate simulator

- Key idea: dependencies always preserve global ordering

- Each module preserves own timestamp

LOAD WGT

GEMM

ReLU

STORE

LOAD INP

LOAD WGT

LOAD INP

GEMM

ReLU

STORE

Software Stack - challenges

- map DNNs to execute on our accelerator

- ML practitioners

- multiple frameworks in use - TF, PyTorch, MxNet

- new operators (eg: Batch Normalisation)

- ShaktiMAAN accelerator

- mapping is non-trivial - huge search space, difficult to find optimum mapping

- rigid hardware - cannot reconfigure values once synthesized

Compiler Flow

PyTorch model

TensorFlow model

TVM Compiler

Relay graph

Optimizer

Relay graph

(optimized)

Codegen

shared library(.so)

Runtime

Hardware

Compiler Flow

PyTorch model

TensorFlow model

TVM Compiler

Relay graph

Optimizer

Relay graph

(optimized)

Codegen

shared library(.so)

Runtime

Hardware

- Unify multiple different DNN representations into a single intermediate representation (IR)

Compiler Flow

PyTorch model

TensorFlow model

TVM Compiler

Relay graph

Optimizer

Relay graph

(optimized)

Codegen

shared library(.so)

Runtime

Hardware

- break computation to smaller chunks which fit on the hardware

- a series of loop transformation - tiling, unrolling, etc.

TVM AutoTuner - Simulated annealing

Reinforcement Learning based optimizer

cost-model based on simulator

Compiler Flow

PyTorch model

TensorFlow model

TVM Compiler

Relay graph

Optimizer

Relay graph

(optimized)

Codegen

shared library(.so)

Runtime

Hardware

- Generate systolic ISA instructions from Relay graph

- Upstream support with TVM: new operators in PyTorch requires no extra effort

Offload computation to accelerator

Execute unsupported operations on CPU

Compiler Flow

PyTorch model

TensorFlow model

TVM Compiler

Relay graph

Optimizer

Relay graph

(optimized)

Codegen

shared library(.so)

Runtime

Hardware

TVM Runtime schedules operations to CPU or accelerator

Accelerator runtime configures the accelerator to perform a certain operation

Relay graph for Conv2d + Bias addition + ReLU

%1 = nn.conv2d(%data, %weight, ...) %2 = add(%1, %bias) %3 = nn.relu(%2)