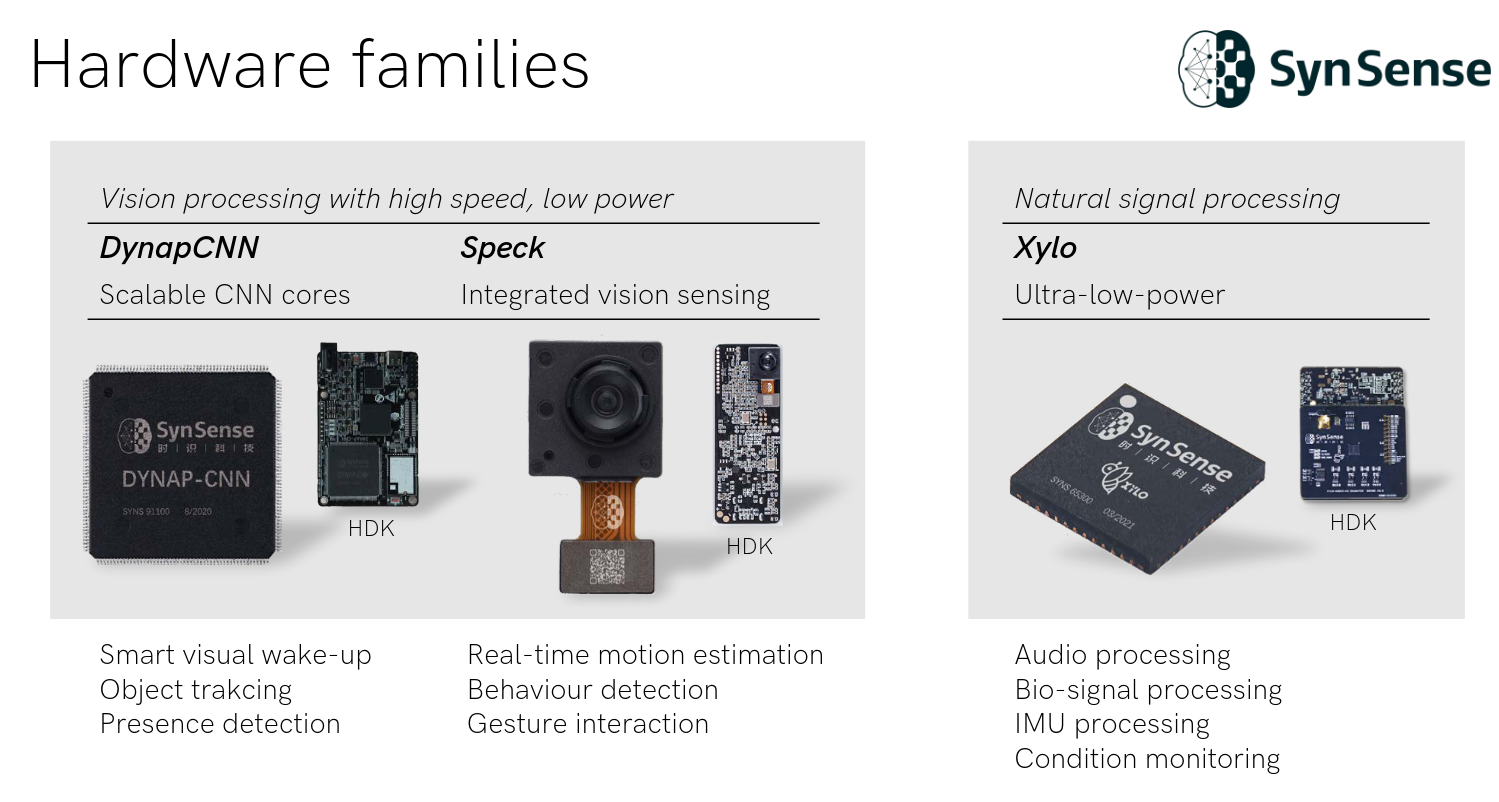

- Company focuses on ultra-low-power inference on the edge

- Hardware is digital asynchronous for Speck and digital synchronous for

- Designing the machine learning pipeline to train our models

- Focus on engineering the tools for gradient-based optimization of SNNs and for data wrangling

- Establish the use of good practices to make training and deployment faster

- Training SNNs for better performance through data augmentation

- Architectural exploration, such as recurrence in the network and spike frequency adaptation

- How to save energy: the goal is fewer spikes

- Training Spiking Neural Networks Using Lessons from Deep Learning: Eshraghian, Neftci, Wang, Lenz

- Adversarial Attacks on Spiking Convolutional Networks: Büchel, Lenz, Sheik, Sorbaro

- EXODUS: Stable and Efficient Training of Spiking Neural Networks: Bauer, Lenz, Haghighatshoar, Sheik

- Under submission: Ultra-low-power image classification on neuromorphic hardware

- Converting an ANN to an SNN using temporal coding and a single spike per neuron

- A method to normalise ANN activations that scales to deeper layers

- CapoCaccia workshop: presented our chips, gave tutorials and demos

- AMLD: a full-day workshop with our hardware, outreach to new students

- Open Neuromorphic: Platform for neuromorphic open source code and hardware

- NeuroBench: benchmarking and metrics for neuromorphic computing

- Leading role in the algorithms vision team

- Strong engineering background

- 3 papers published in the last year, 1 under submission

- 2 patents submitted

- Involved in numerous community efforts
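
Two of the research themes listed earlier, spike frequency adaptation and reducing spike counts to save energy, can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not the training pipeline or neuron model used on the hardware: the function name `simulate_lif` and all parameter values are hypothetical, and the neuron is a generic discrete-time leaky integrate-and-fire unit with an adaptive threshold.

```python
def simulate_lif(input_current, n_steps=200, leak=0.9, threshold=1.0,
                 adapt_step=0.0, adapt_decay=0.95):
    """Simulate one discrete-time leaky integrate-and-fire neuron.

    All names and default values are illustrative assumptions.
    With adapt_step > 0, every spike raises the firing threshold,
    and that increase decays over time (spike frequency adaptation).
    Returns the list of time steps at which the neuron spiked.
    """
    v = 0.0            # membrane potential
    adaptation = 0.0   # extra threshold accumulated from past spikes
    spike_times = []
    for t in range(n_steps):
        v = leak * v + input_current   # leaky integration of constant input
        adaptation *= adapt_decay      # adaptation decays toward zero
        if v >= threshold + adaptation:
            spike_times.append(t)
            v = 0.0                    # reset membrane potential after a spike
            adaptation += adapt_step   # make the next spike harder to emit
    return spike_times


plain = simulate_lif(0.3)
adaptive = simulate_lif(0.3, adapt_step=0.5)
print("spikes without adaptation:", len(plain))
print("spikes with adaptation:   ", len(adaptive))
```

For the same constant input, the adaptive neuron emits fewer spikes than the plain one. On event-driven hardware, spike count is commonly used as a rough proxy for energy use, which is why it serves as the comparison here.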
