Towards training robust computer vision models for inference on neuromorphic hardware

Gregor Lenz

TU Delft, 10.3.2023

About me

- BSc/MSc (2014) in biomedical engineering at UAS Technikum Vienna, Austria

- PhD (2021) in neuromorphic engineering at Sorbonne University Paris, France

- Worked for Prophesee and on Intel's Loihi

- Now machine learning engineer at SynSense

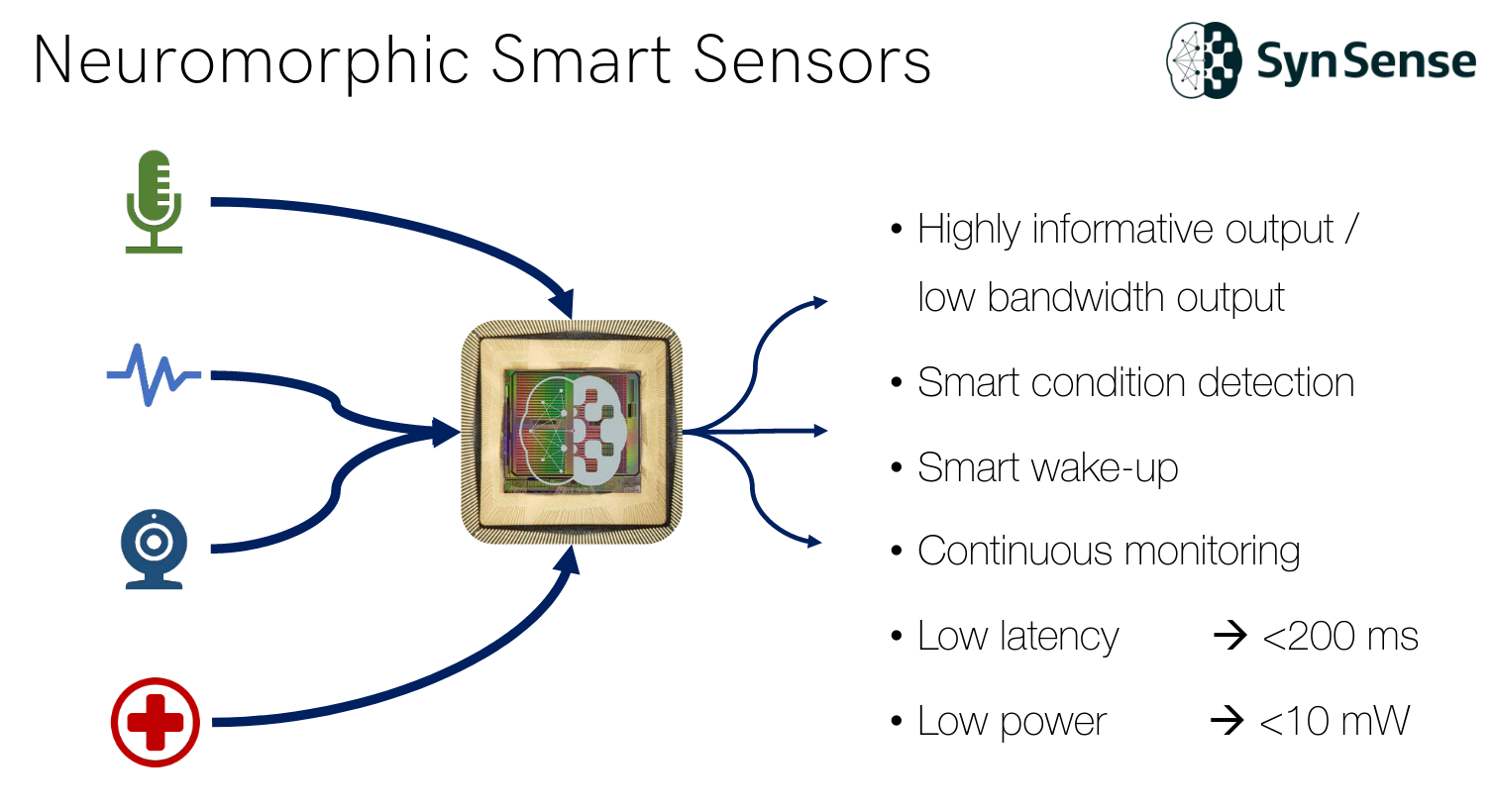

Neuromorphic computing

- Takes inspiration from biological systems to process information much more efficiently

- Takes a bottom-up approach to imitation

- Hardware a key factor

Event-based datasets

- Neuromorphic cameras not easy to come by currently

- Rely on curated datasets to start exploring new data type

- Data rate from event-based cameras can easily reach 100 MB/s

- Prophesee's automotive object detection dataset is 3.5 TB in size for <40 h of recordings
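
As a rough sanity check on these figures, the average data rate implied by the dataset size can be computed directly. This sketch assumes decimal units (1 TB = 10^12 bytes) and uses 40 h as the upper bound on recording time, so the result is a lower bound on the average rate.

```python
# Figures quoted above; decimal units are an assumption.
dataset_bytes = 3.5e12         # 3.5 TB
max_recording_s = 40 * 3600    # upper bound on recording time: 40 hours

# Less than 40 h of recordings means the true average is at least this high.
min_avg_rate_mb_s = dataset_bytes / max_recording_s / 1e6
print(f"average data rate >= {min_avg_rate_mb_s:.1f} MB/s")
```

Averaged over the whole dataset this comes to roughly 24 MB/s, well below the 100 MB/s peak but still far too much to handle without an efficient storage format.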

Efficient compression for event data

https://open-neuromorphic.org

https://github.com/open-neuromorphic
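
To give a feel for why the encoding matters, here is a minimal sketch that compares a naive event encoding with a compact one. It is a generic illustration, not the format presented in the talk: the synthetic event stream, the 5-byte layout and the `encode`/`decode` helpers are all assumptions made for this example.

```python
import random
import struct

random.seed(0)

# Synthetic event stream: (timestamp in microseconds, x, y, polarity).
t = 0
events = []
for _ in range(10_000):
    t += random.randint(0, 49)
    events.append((t, random.randrange(128), random.randrange(128), random.randrange(2)))

# Naive encoding: four 64-bit integers per event (32 bytes).
naive = b"".join(struct.pack("<qqqq", *e) for e in events)

def encode(events):
    """Store a 16-bit timestamp delta plus one byte each for x, y and polarity (5 bytes)."""
    out, prev_t = bytearray(), 0
    for t, x, y, p in events:
        # Assumes deltas below 65536 and coordinates below 256; a real format needs an escape code.
        out += struct.pack("<HBBB", t - prev_t, x, y, p)
        prev_t = t
    return bytes(out)

def decode(blob):
    """Rebuild absolute timestamps by accumulating the stored deltas."""
    decoded, t = [], 0
    for dt, x, y, p in struct.iter_unpack("<HBBB", blob):
        t += dt
        decoded.append((t, x, y, p))
    return decoded

compact = encode(events)
print(len(naive), len(compact), decode(compact) == events)
```

The compact layout is lossless for this stream and takes 5 bytes per event instead of 32, before any general-purpose compression is applied on top.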

Where are we so far?

- Publicly available dataset

- Reading from an efficient format that saves disk space

- Apply transformations that are suitable for PyTorch

import tonic
from torch.utils.data import DataLoader

transform = tonic.transforms.ToFrame(sensor_size=(128, 128, 2), time_window=3000)  # time_window is in timestamp units
dataset = tonic.datasets.DVSGesture(save_to="data", transform=transform)

testloader = DataLoader(
    dataset,
    batch_size=8,
    collate_fn=tonic.collation.PadTensors(batch_first=True),  # pads samples in a batch to equal length
)

frames, targets = next(iter(testloader))

Training models on event data

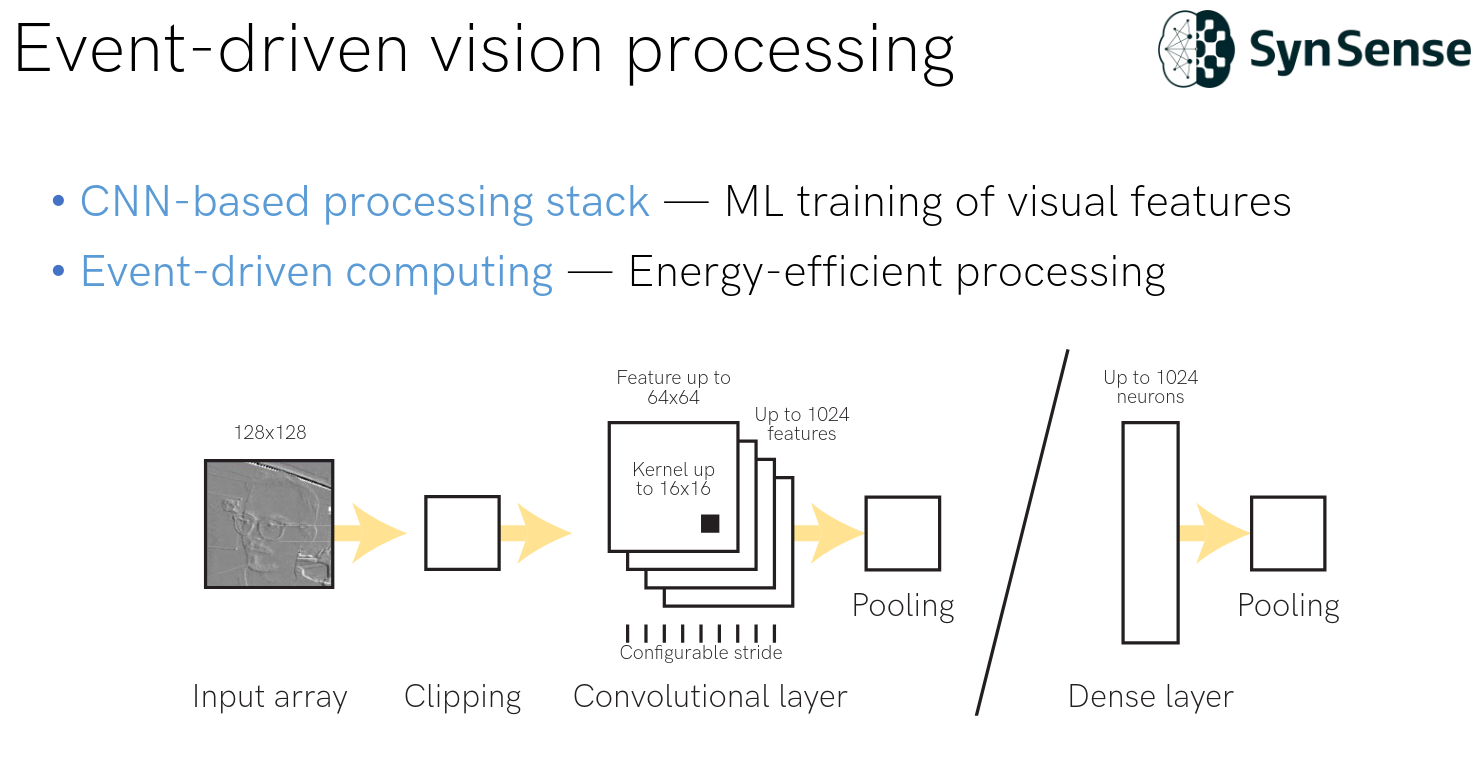

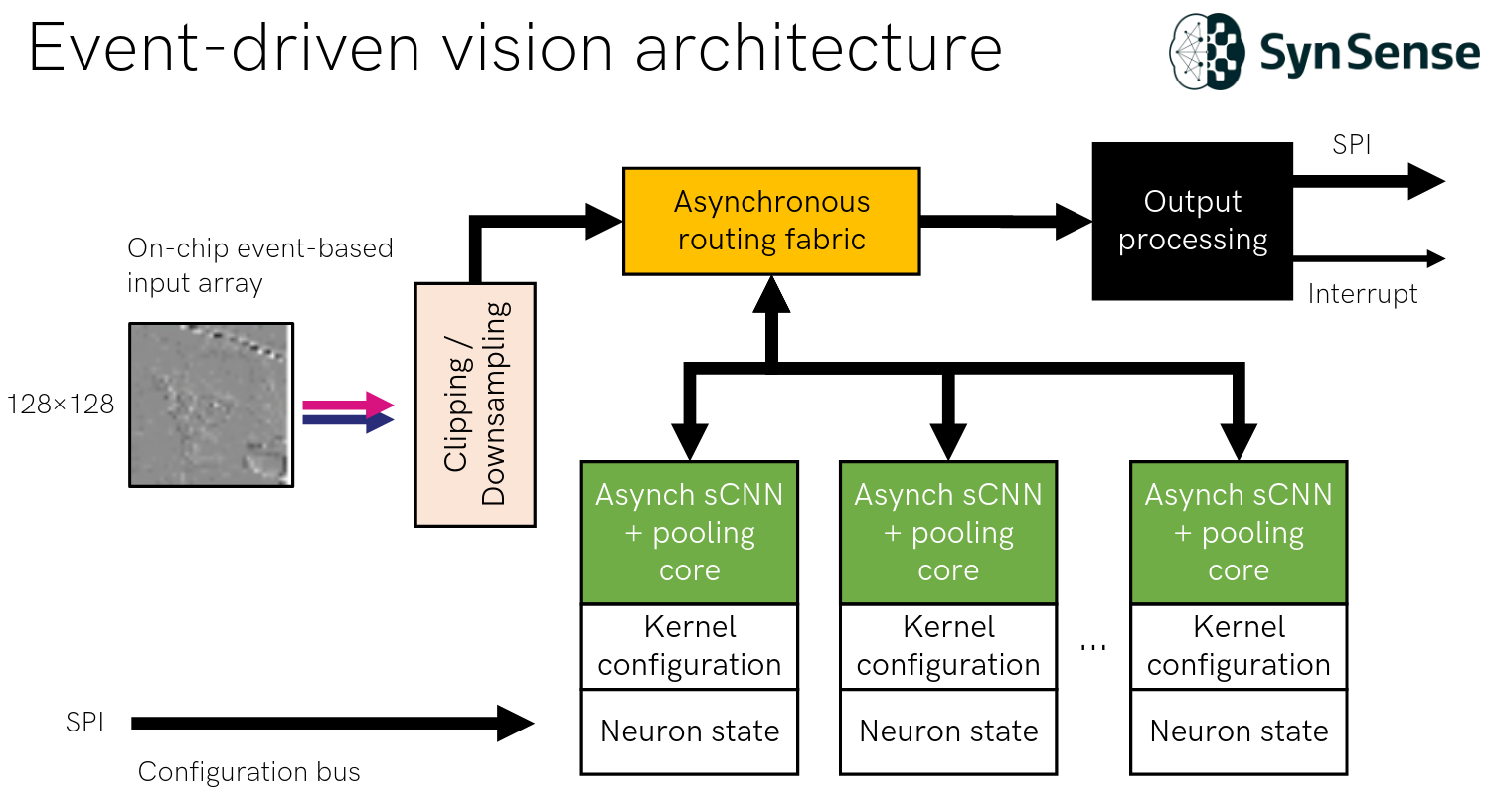

- Could bin events into frames and train a CNN / vision transformer on them --> high latency, no memory

- Want to make use of spatial and temporal sparsity --> spiking neural networks

- Anti-features at SynSense: intricate neuron models, biologically-plausible learning rules

- Focus on training speed and robustness
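
The first option above, binning events into frames, can be sketched in a few lines of plain Python. This is an illustration of the idea only, not Tonic's implementation: the `to_frames` helper and the `(t, x, y, p)` event tuples are assumptions made for this example.

```python
def to_frames(events, sensor_size, time_window):
    """Count events per pixel and polarity in consecutive, fixed-length time windows.

    events: list of (t, x, y, p) tuples sorted by timestamp t.
    sensor_size: (width, height, number of polarities).
    Returns a nested list indexed as frames[frame][polarity][y][x].
    """
    width, height, n_pol = sensor_size
    if not events:
        return []
    t0 = events[0][0]
    n_frames = (events[-1][0] - t0) // time_window + 1
    frames = [
        [[[0] * width for _ in range(height)] for _ in range(n_pol)]
        for _ in range(n_frames)
    ]
    for t, x, y, p in events:
        frames[(t - t0) // time_window][p][y][x] += 1
    return frames

events = [(0, 1, 2, 1), (10, 1, 2, 1), (3500, 0, 0, 0)]
frames = to_frames(events, sensor_size=(4, 4, 2), time_window=3000)
print(len(frames), frames[0][1][2][1], frames[1][0][0][0])
```

Every event inside a window collapses into a single count, which is where the latency comes from: nothing can be output before the window closes, and timing within the window is lost.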

Leaky integrate and fire neurons

animation from https://norse.github.io/norse/pages/working.html
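
A discrete-time version of such a neuron fits in a few lines. The sketch below is a simplified model under stated assumptions (multiplicative decay per time step, reset to zero after a spike); the function name and parameter values are made up for illustration and do not come from Norse or Sinabs.

```python
def simulate_neuron(inputs, decay=0.9, threshold=1.0):
    """Return the spike train (list of 0/1) of one neuron for a list of input currents."""
    v = 0.0
    spikes = []
    for current in inputs:
        v = decay * v + current    # leak, then integrate the input
        if v >= threshold:
            spikes.append(1)
            v = 0.0                # reset to zero after a spike (one common choice)
        else:
            spikes.append(0)
    return spikes

# Two sub-threshold inputs separated by a long pause.
inputs = [0.5] + [0.0] * 100 + [0.6]
lif_spikes = simulate_neuron(inputs, decay=0.9)  # leaky: the first input has decayed away
if_spikes = simulate_neuron(inputs, decay=1.0)   # non-leaky: the first input is still remembered
print(sum(lif_spikes), sum(if_spikes))
```

With `decay=1.0` the neuron becomes a plain integrate-and-fire unit that never forgets its input, so the two inputs add up and trigger a spike; with a leak they do not.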

Training SNNs

- Focus on gradient-based optimization

  - good results

  - complexity of BPTT scales badly

- Simple architectures: feed-forward, integrate and fire neurons

  - power-efficient execution on hardware

  - infinite memory not ideal

- Needs to match hardware constraints

  - event-based vs clocked processing

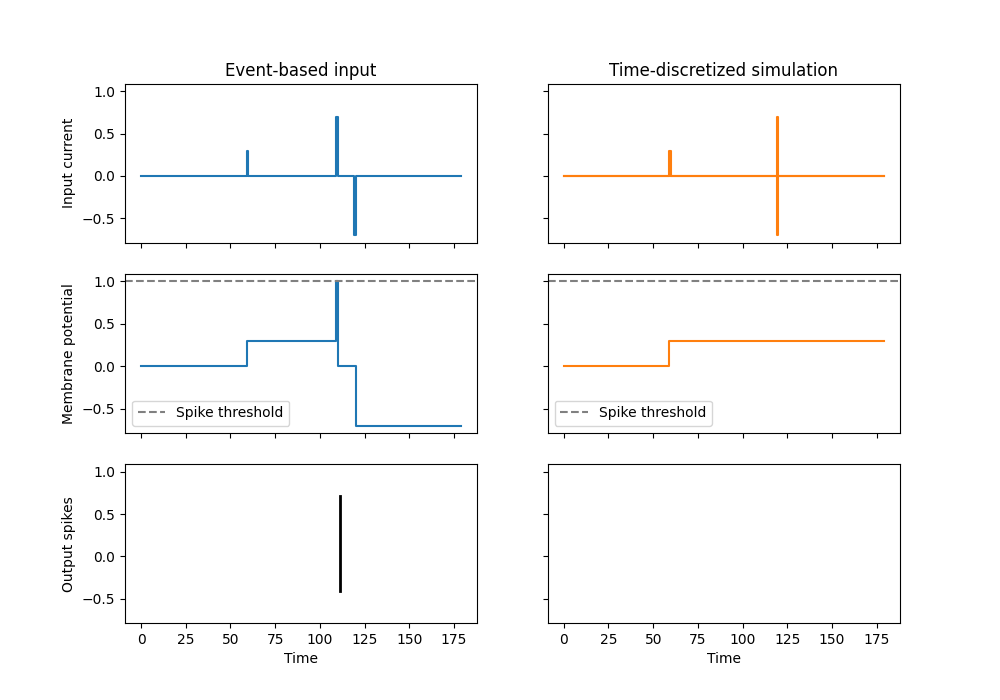

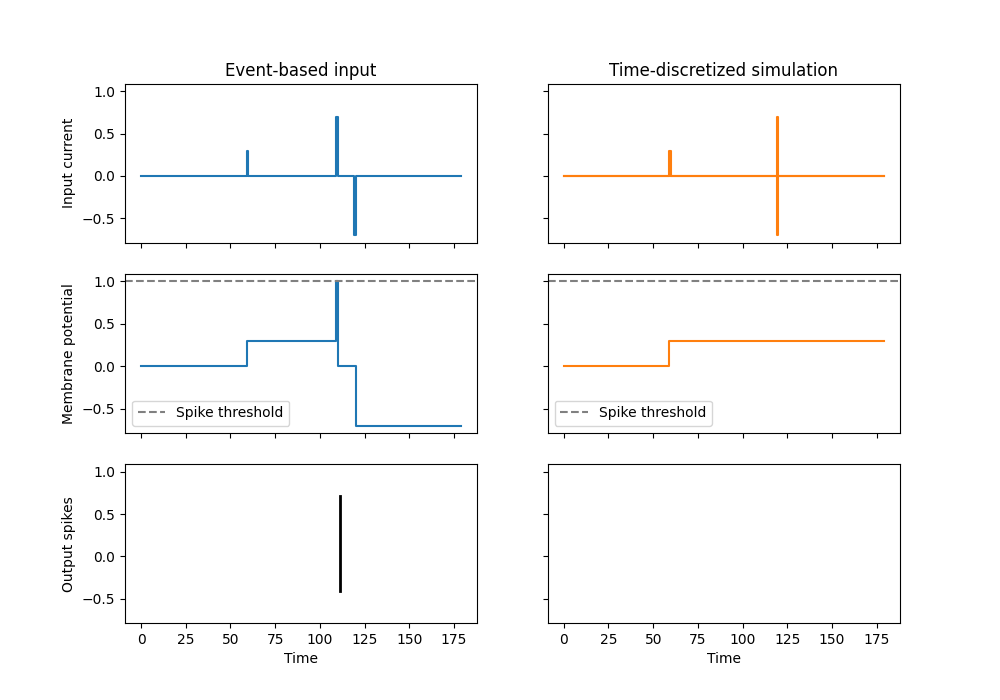

Event-based vs clocked computation

Dealing with event-based data on a time-discretized system

- Fine-grained discretization

- Reducing weight/threshold ratios

- Spread out spiking activity

- Penalize a high number of spikes in the same receptive field
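
The first two points can be illustrated with a toy neuron. The sketch assumes a clocked integrate-and-fire neuron that emits at most one spike per time step and resets to zero; both are assumptions chosen to make the mismatch visible, not a description of any particular chip, and the helper name is hypothetical.

```python
def count_output_spikes(event_times_us, duration_us, n_steps, weight=1.0, threshold=1.0):
    """Bin input events into n_steps time steps and run a clocked IF neuron over them."""
    binned = [0] * n_steps
    for t in event_times_us:
        step = min(int(t / duration_us * n_steps), n_steps - 1)
        binned[step] += 1
    v, spikes = 0.0, 0
    for count in binned:
        v += weight * count
        if v >= threshold:
            spikes += 1
            v = 0.0  # any surplus above the threshold is discarded
    return spikes

# Eight input events, 100 microseconds apart.
event_times = [i * 100 for i in range(8)]

coarse = count_output_spikes(event_times, 800, n_steps=1)  # all events land in one step
fine = count_output_spikes(event_times, 800, n_steps=8)    # one event per step
coarse_low_ratio = count_output_spikes(event_times, 800, n_steps=1, weight=0.125)
fine_low_ratio = count_output_spikes(event_times, 800, n_steps=8, weight=0.125)
print(coarse, fine, coarse_low_ratio, fine_low_ratio)
```

With a weight equal to the threshold, coarse binning produces one spike where fine binning produces eight. With a weight/threshold ratio of 1/8 both discretizations agree, because the membrane potential never overshoots the threshold within a single step.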

Noisy event camera output

- 50/60/100 Hz flicker generated from artificial light sources

- Hot pixels: pixels that fire at >50 Hz even though illumination does not change

- Mitigation: augmentation with noise recordings, low-pass filters
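
Augmentation with noise recordings amounts to merging two event streams by timestamp. A minimal sketch, assuming events are `(t, x, y, p)` tuples sorted by time; the `mix_in_noise` helper is hypothetical and simply aligns the noise recording with the start of the sample and crops it to the sample's duration.

```python
import heapq

def mix_in_noise(sample, noise):
    """Overlay a noise recording on a sample, keeping the result sorted by timestamp."""
    t_start, t_end = sample[0][0], sample[-1][0]
    noise_t0 = noise[0][0]
    # Shift the noise so that it starts together with the sample, then crop it.
    shifted = ((t - noise_t0 + t_start, x, y, p) for t, x, y, p in noise)
    cropped = [e for e in shifted if e[0] <= t_end]
    return list(heapq.merge(sample, cropped, key=lambda e: e[0]))

sample = [(1000, 1, 1, 1), (2000, 2, 2, 0)]
noise = [(5, 0, 0, 1), (505, 0, 0, 1), (5005, 0, 0, 1)]  # e.g. a hot pixel at (0, 0)
augmented = mix_in_noise(sample, noise)
print(augmented)
```

In practice the offset into the noise recording would be randomized per sample so that the model does not see the same noise pattern every time.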

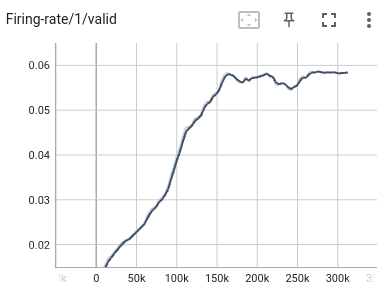

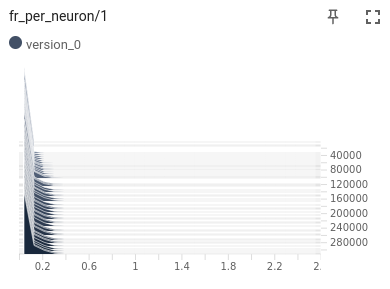

Monitoring

- Firing rates, synaptic operations, temporal density

  - Ideally per neuron!
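
Given a recorded spike raster, two of these statistics are simple to compute. The sketch below works on plain nested lists and uses a single fan-out value for the whole layer; the helper names and the definition of a synaptic operation as one spike times its fan-out are assumptions made for illustration.

```python
def firing_rates(spike_raster, dt):
    """Per-neuron firing rate in Hz for a raster indexed as spike_raster[step][neuron]."""
    n_steps = len(spike_raster)
    n_neurons = len(spike_raster[0])
    return [
        sum(step[i] for step in spike_raster) / (n_steps * dt)
        for i in range(n_neurons)
    ]

def synaptic_operations(spike_raster, fan_out):
    """Each spike triggers one operation per outgoing connection."""
    return sum(sum(step) for step in spike_raster) * fan_out

# Four time steps of 1 ms, two neurons.
raster = [[1, 0], [1, 0], [0, 1], [1, 0]]
rates = firing_rates(raster, dt=1e-3)
synops = synaptic_operations(raster, fan_out=10)
print(rates, synops)
```

Per-neuron rates make it easy to spot dead or saturated units that a layer-wide average would hide.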

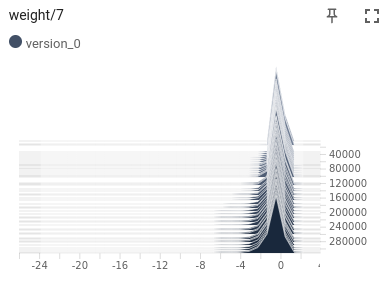

- Weight distributions to account for quantization
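
The reason the distribution matters shows up as soon as weights are mapped to a small integer range. The sketch below assumes symmetric 8-bit quantization with a single scale per layer, which is a common scheme but not necessarily the one used on the target hardware; the `quantize` helper is hypothetical.

```python
def quantize(weights, n_bits=8):
    """Scale weights so that the largest magnitude maps to the largest signed integer."""
    q_max = 2 ** (n_bits - 1) - 1
    scale = q_max / max(abs(w) for w in weights)
    return [round(w * scale) for w in weights], scale

# Three small weights and one outlier.
with_outlier, _ = quantize([0.01, -0.02, 0.015, 5.0])
without_outlier, _ = quantize([0.01, -0.02, 0.015])
print(with_outlier)
print(without_outlier)
```

A single outlier stretches the scale so far that the small weights collapse to 0 or ±1, whereas without it the same weights spread over the available integer range.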

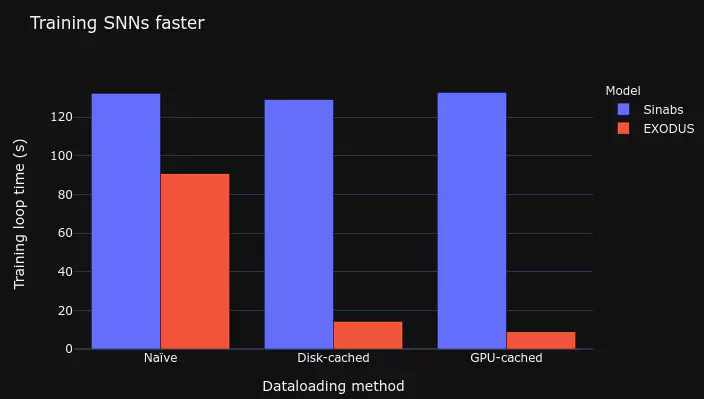

EXODUS: Stable and Efficient Training of Spiking Neural Networks

Bauer, Lenz, Haghighatshoar, Sheik, 2022

Speed up SNN training

https://lenzgregor.com/posts/train-snns-fast/

Practical steps to SNN training

- Time investment in setting up the right tools pays off

- Understand your data

- Start with simple model, increase complexity gradually

- SNN models typically have very high variance

- Monitor intermediate layers automatically, also on-chip!

- Identify training bottlenecks (data, backprop, logging?) to iterate faster
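
For the last point, a few lines of timing code are often enough to see where an iteration spends its time. A minimal sketch using only the standard library; the stage names and the placeholder workloads are assumptions standing in for a real training loop.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

timings = defaultdict(float)

@contextmanager
def timed(stage):
    """Accumulate the wall-clock time spent inside the with-block under the given stage name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] += time.perf_counter() - start

# Placeholder stages standing in for data loading, the training step and logging.
for _ in range(3):
    with timed("data"):
        batch = [i * 0.5 for i in range(1000)]
    with timed("forward+backward"):
        loss = sum(x * x for x in batch)
    with timed("logging"):
        message = f"loss={loss:.3f}"

slowest = max(timings, key=timings.get)
print(slowest, dict(timings))
```

When the training step runs on a GPU, the device has to be synchronized before reading the clock, otherwise asynchronous kernels make the step look faster than it is.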

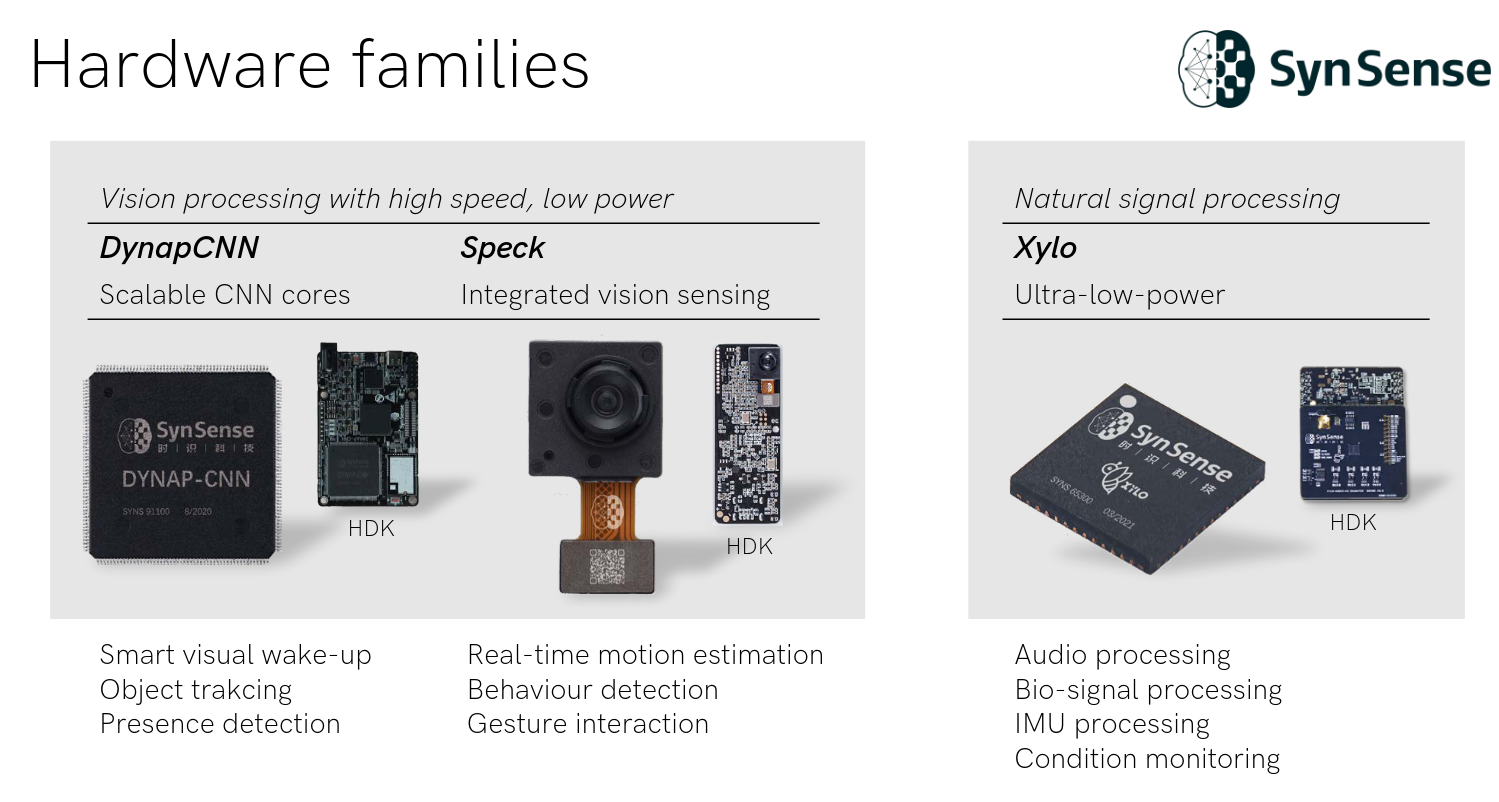

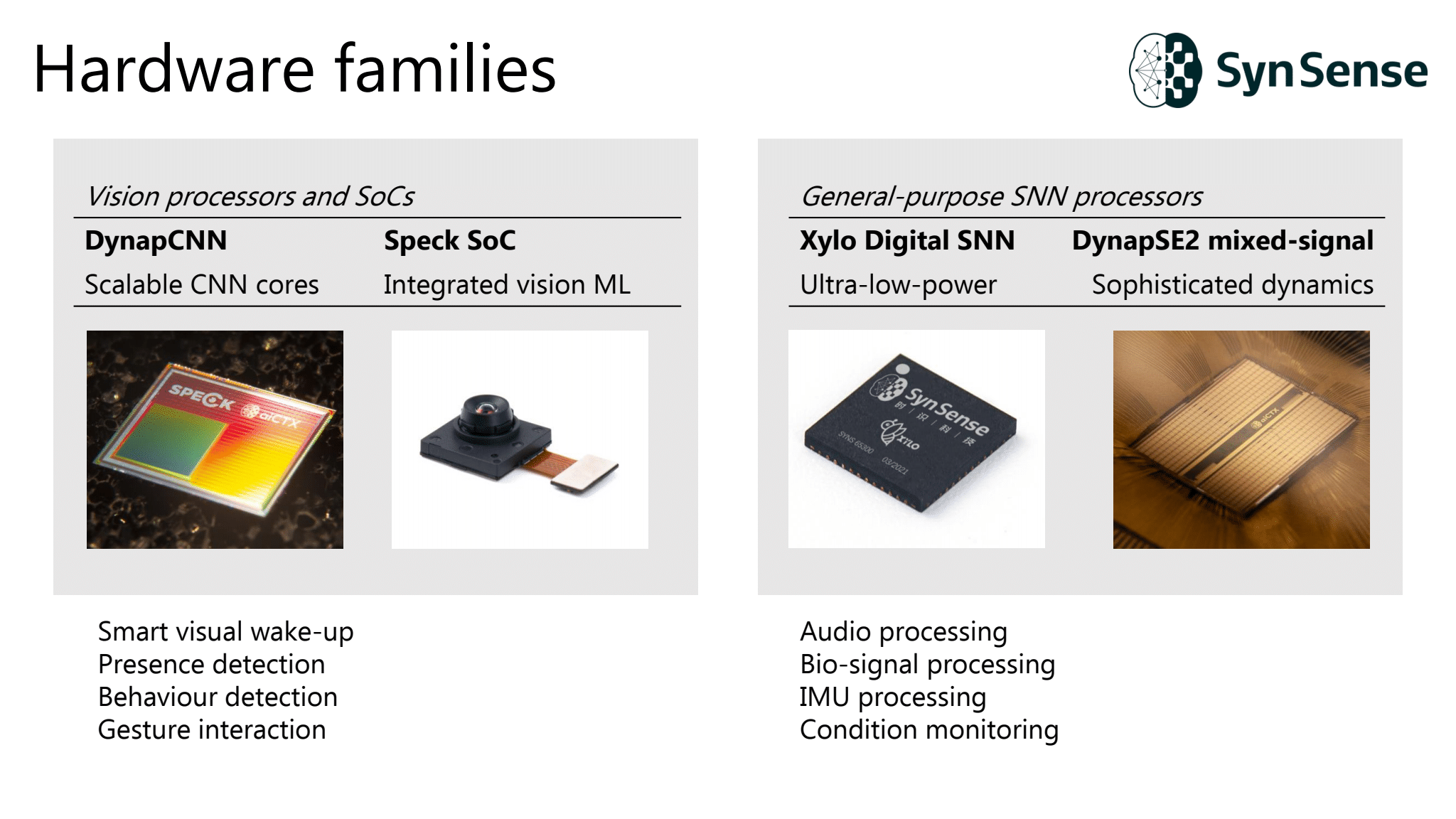

Model definition and deployment to Speck

import torch.nn as nn
import sinabs.layers as sl

model = nn.Sequential(
    nn.Conv2d(2, 8, 3),
    sl.IAF(),
    nn.AvgPool2d(2),
    nn.Conv2d(8, 16, 3),
    sl.IAF(),
    nn.AvgPool2d(2),
    nn.Flatten(),
    nn.Linear(16 * 6 * 6, 10),  # a (2, 30, 30) input flattens to 16 * 6 * 6 = 576 features
    sl.IAF(),
)

# training...

from sinabs.backend.dynapcnn import DynapcnnNetwork

dynapcnn_net = DynapcnnNetwork(
    snn=model,
    input_shape=(2, 30, 30),
)

dynapcnn_net.to("speck2b")
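
When stacking layers like this, the `in_features` of the final `nn.Linear` has to equal the flattened output of the last pooling layer. The bookkeeping can be sketched as follows, assuming PyTorch's default stride and padding for `nn.Conv2d` and `nn.AvgPool2d`; the helper names are made up for illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel):
    """Output size of a pooling layer whose stride equals its kernel size."""
    return (size - kernel) // kernel + 1

size = 30                   # input_shape=(2, 30, 30)
size = conv_out(size, 3)    # after Conv2d(2, 8, 3)
size = pool_out(size, 2)    # after AvgPool2d(2)
size = conv_out(size, 3)    # after Conv2d(8, 16, 3)
size = pool_out(size, 2)    # after AvgPool2d(2)

flat_features = 16 * size * size  # 16 output channels from the last convolution
print(size, flat_features)
```

For a 30 x 30 input the spatial size shrinks to 6 x 6, so the linear layer sees 576 input features.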

Demo videos

Conclusion

- Events are a new data format different from images, audio, text

- Real-life challenges include noisy data, resource constraints, async computing paradigm

- We need the full pipeline: sensor, algorithm, hardware

https://lenzgregor.com

Gregor Lenz

Next talks

- April 4th (online): ONM Hands-on session with Sinabs and Speck

- Telluride 2023 (in person): Open-Source Neuromorphic Hardware, Software and Wetware