Improving Front-end Quality

Alex Gyoshev

What makes the legacy... legacy?

How do you feel when you work with the legacy codebase?

Why do you feel this way?

How did this happen?

How do we prevent it from happening again?

How do these help?

- Tests & types

- Unit testing & TDD

- Testing tips

- Code Coverage

- E2E tests

Tests & Types

Do we need both anyway?

Great talk on the topic: https://www.destroyallsoftware.com/talks/ideology

Tests & Types

Tests

are examples

Types

are categories

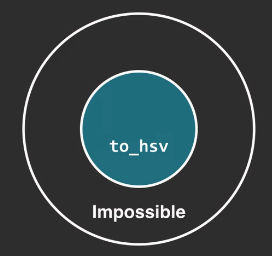

to_hsl :: RGB -> HSL

to_hsl(Date)

// Error

to_hsl(null)

// Error

to_hsl( RGB(-1,-2,-3) )

// OK!to_hsl( RGB(-1,-2,-3) )

// Error

to_hsl(null)

// Error (at runtime!)

to_hsl( RGB(255,0,0) )

// HSL(0,100,50)Tests & Types

Tests

are examples

Types

are categories

to_hsl :: RGB -> HSL

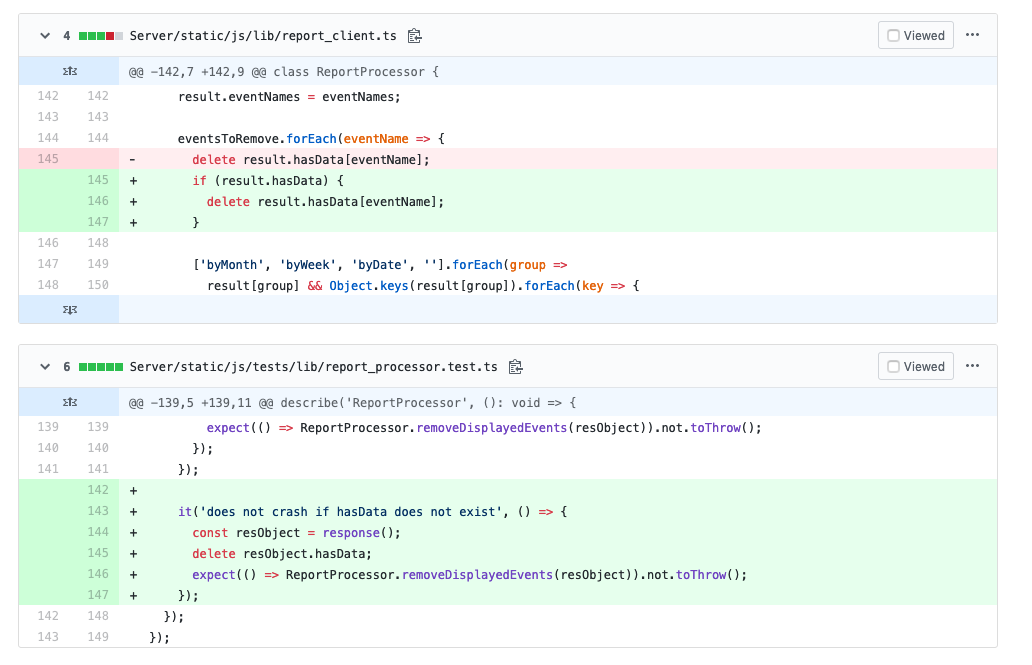

Tests & Types

Types remove classes of errors

They do not ensure correctness

Tests improve probability of correctness

Faster than clicking through the interface

Ensure correctness of use cases

Bonus: improves (software) design

Unit tests & TDD

Is there a difference?

Unit tests & TDD

Unit testing is having tests for the code

TDD is writing a test, then writing the code to pass it

- Ensures that tests can fail

- Ensures path coverage

Is TDD slower to do?

Yes!

No.

If you only take into account writing code.

If you take into account verification.

Tests are automated verification.

Also: It is a skill. You are slow at first.

Opinion: TDD is fun!

You write code all the time.

Maximum flow

(not leaving vim/IDE)

Opinion: TDD is bad for exploration

PoC first, then TDD

TDD in Legacy? Yes.

- Split new code into module

- TDD the module

- Minimize surface between legacy & module

- Regression won't happen again.

- Makes module dependencies very clear

- Improves design!

TDD in Legacy? Yes.

Regression won't happen again.

Testing tips

Tests should be very fast & predictable

- Fast feedback = fast development

- Timeouts? Mock time.

- Randomness? Mock it.

- Async requests? Mock responses.

Testing tips

Mock time

beforeEach(() => jasmine.clock().install());

afterEach(() => jasmine.clock().uninstall());

it('resolves promise after timeout', async () => {

const spy = jasmine.createSpy();

const promise = RequestManager.timeoutPromise(spy, 1000);

jasmine.clock().tick(999);

expect(spy).not.toHaveBeenCalled();

jasmine.clock().tick(1);

expect(spy).toHaveBeenCalled();

});

Testing tips

Mock randomness

it('gives random quotes', () => {

// https://www.xkcd.com/221/

jasmine.spyOn(Math, 'random').and.returnValue(0.8);

expect( getRecommendation([ 'a', 'b', 'c', 'd' ]) ).toEqual('d');

});

Sources of randomness:

- Math.random

- Date.now() / new Date()

- setTimeout()

Testing tips

Mock async operations

it('loads data from the server', () => {

const requestSpy = spyOn(Axios, 'get')

.and.callFake(

() => Promise.resolve({

data: [...]

})

);

const page = mount(MyPage);

expect(requestSpy.calls.count()).toEqual(1);

expect(requestSpy.calls.argsFor(0)[0].url).toEqual('foo');

});

- Knock, knock

- An async test.

- Who's there?

Testing tips

Small tests, clear requirements

it('works', () => {

// 10 assertions

});

// vs

it('works in case A', () => {

// assertion A

});

it('works in case B', () => {

// assertion B

});Failed: it works

=> start debugging

Failed: it works in case B

=> go fix case B

Testing tips

AAA: Arrange, Act, Assert

it('works in case A', () => {

// arrange

const page = mount(MyPage);

// act

page.find(Button).trigger("click");

// assert

expect(page.emitted().save).toBeTruthy();

});

Arrange, Act, Assert, Act, Assert, Act, Assert...

= 3 tests, extract "Arrange"

Testing tips

Avoid testing components internals

it('works in case A', () => {

const page = mount(MyPage);

page.vm._save(); // locks implementation in place

expect(page.emitted().save).toBeTruthy();

});

Testing only public API allows easier change of implementation.

AKA refactoring.

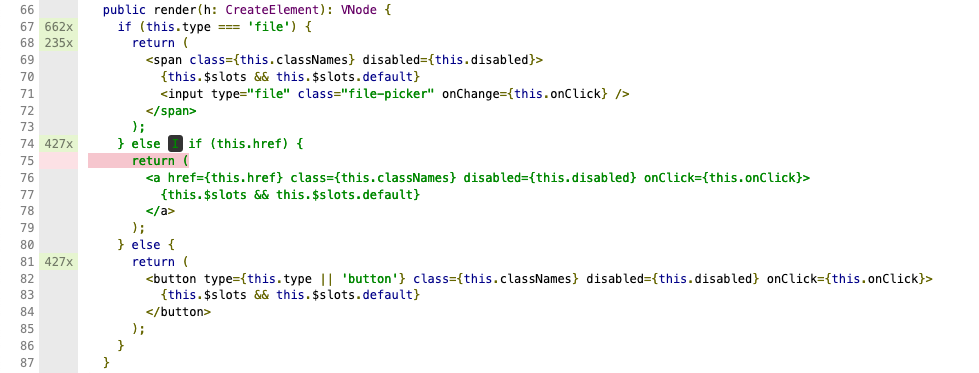

TDD = Good design?

Not always, but improves odds

// FilterController.prototype.render

self.startDate = self.report.navigator.options.actualStartDate || new Date();

self.endDate = self.report.navigator.options.actualEndDate || new Date();

var canHideConfidence = !self.report.navigator.isDatasetAbTest;

view.html(Leanplum.templates.reportFilters({

// ...

confidence: self.report.navigator.options.confidence,

hasGroupBy: self.report.navigator.hasGroupBy(),

viewCohorts: self.report.navigator.options.viewCohorts,

viewConfidence: self.report.navigator.options.viewConfidence || !canHideConfidence,

showViewStudyButton: self.report.navigator.isCampaignOrStudy(),

viewStudyUrl: self.report.navigator.getSetupPageUrl(),

viewStudyButtonLabel: self.report.navigator.getPageKind(),

holdbackToggleOptions: self.report.navigator.getHoldbackToggleOptions(),

report: self.report.navigator.report

}));

Warning: Legendary code ahead

TDD = Good design?

Not always, but improves odds

- Makes you think about dependencies

- Documents examples

- Allows refactoring without stress 🏖

Code Coverage

Does it work?

Code Coverage

Shows you what you haven't tested

Code Coverage

Doesn't ensure correctness, either

// implementation

function to_hsl() {

return HSL(0,100,50);

}

// this test brings coverage to 100%

expect(

to_hsl( RGB(255,0,0) )

).toEqual(

HSL(0,100,50)

);

// nothing else works, thoughOnly useful if combined with the other approaches

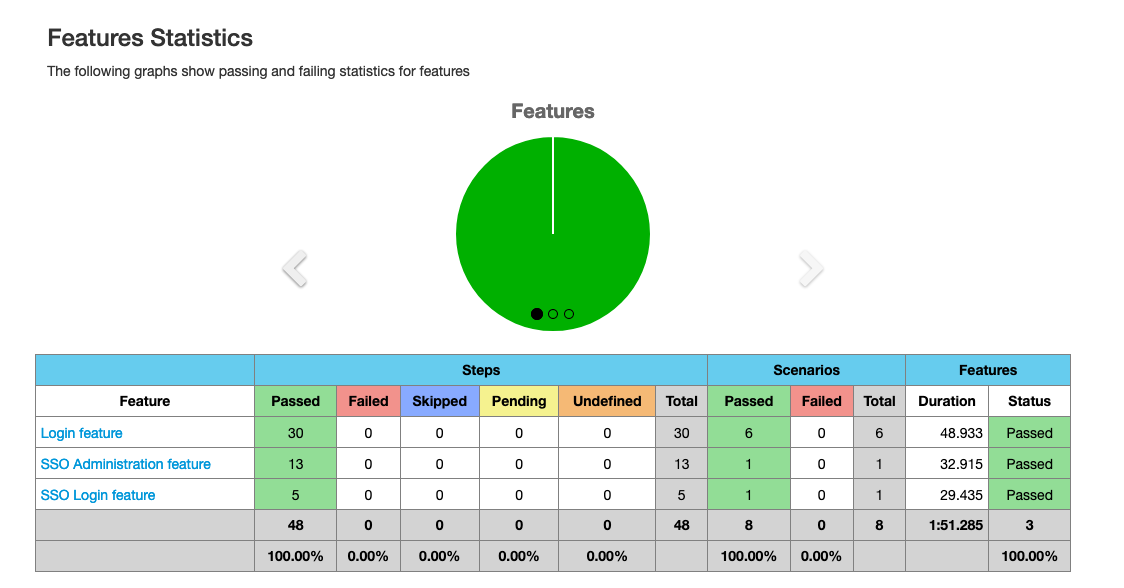

Code Coverage

Success story

- Coverage report shows a red branch

- There is a unit test for that?

- Turns out test was incorrect 🤦♂️

- Caught an actual bug

(SSO copy buttons didn't work)

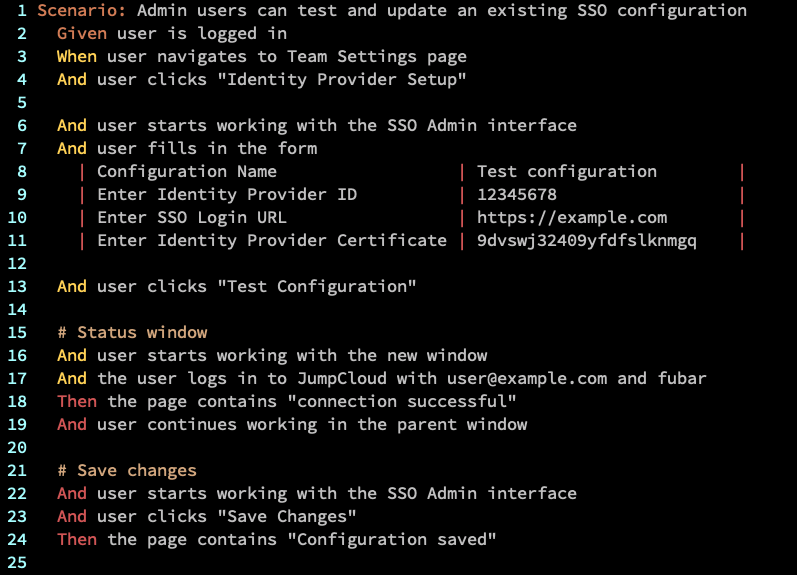

End-to-end tests (E2E)

Can customers get stuff done?

End-to-end tests (E2E)

End-to-end tests (E2E)

mvn clean install

-Denvironment=STAGING # environment

-Dapplication=SsoApp # app settings (appId, URL)

-Dtest='RunSSOTests' # runs tests by tag (@SSO)

-Dtype=local. # not CI

Thanks!

And here's to no-stress deployments 🍹