A gentle introduction to Particle Filters

EA503 - DSP - Prof. Luiz Eduardo

Agenda

- Tools from statistics + Recap

- Particle filters and their components

- An algorithm (In Python)

Classical statistics

We place probability distributions on data

(only data is random)

Bayesian statistics

We place probability distributions on the model and its parameters

Probability represents uncertainty

Posterior = Likelihood × Prior / Probability of the data:

$$P(\theta \mid D) = \frac{P(D \mid \theta)\,P(\theta)}{P(D)}$$

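Recursively, the posterior after one observation becomes the prior for the next (assuming the observations are independent given the parameters):

$$P(\theta \mid D_{1:t}) \propto P(D_t \mid \theta)\,P(\theta \mid D_{1:t-1})$$

Every time we get a new observation, we should be able to improve our estimate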
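A minimal sketch of this recursive update, assuming a hypothetical coin-flip example: the unknown probability of heads θ is discretized on a grid, and `bayes_update` is an illustrative helper name, not a library function. Each posterior is reused as the prior for the next flip.

```python
import numpy as np

# Hypothetical example: unknown probability theta that a coin lands heads,
# discretized on a grid of candidate values.
theta = np.linspace(0.0, 1.0, 101)
prior = np.full_like(theta, 1.0 / theta.size)  # uniform prior over the grid

def bayes_update(prior, heads):
    """One recursive step: posterior is proportional to likelihood times prior."""
    likelihood = theta if heads else 1.0 - theta
    unnormalized = likelihood * prior
    return unnormalized / unnormalized.sum()  # dividing by P(data) normalizes

rng = np.random.default_rng(0)
true_theta = 0.7
belief = prior
for _ in range(500):
    heads = rng.random() < true_theta
    belief = bayes_update(belief, heads)  # this posterior is the next prior

estimate = theta[np.argmax(belief)]
print(f"MAP estimate of theta: {estimate:.2f}")
```

The grid keeps the example exact and tiny; it is the same "sum over states" idea that later becomes expensive when the state space grows.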

Kalman Filter

Prediction + correction

Constraint: the models must be linear and the noise Gaussian, so that every distribution stays Gaussian

It is also a recursive structure
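A minimal scalar sketch of prediction + correction, assuming a hypothetical linear-Gaussian model: a nearly constant value x_t = x_{t-1} + w_t with process-noise variance `q`, observed as y_t = x_t + v_t with measurement-noise variance `r`. The function name `kalman_1d` is illustrative.

```python
import numpy as np

def kalman_1d(measurements, q, r, x0=0.0, p0=1.0):
    """Scalar Kalman filter for x_t = x_{t-1} + w_t, y_t = x_t + v_t,
    with w_t ~ N(0, q) and v_t ~ N(0, r)."""
    x, p = x0, p0
    estimates = []
    for y in measurements:
        # Prediction: propagate the variance through the process model
        p = p + q
        # Correction: blend prediction and measurement via the Kalman gain
        k = p / (p + r)
        x = x + k * (y - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return np.array(estimates), p

rng = np.random.default_rng(1)
true_value = 5.0
ys = true_value + rng.normal(0.0, 1.0, size=200)  # noisy readings, r = 1
est, final_var = kalman_1d(ys, q=1e-4, r=1.0)
print(f"final estimate {est[-1]:.2f}, variance {final_var:.4f}")
```

Because everything stays Gaussian, two numbers (mean `x` and variance `p`) describe the whole belief; that is exactly what breaks for nonlinear or non-Gaussian models.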

Can we represent these recursive models as chains?

Markov chain

X is the true (hidden) state

We usually cannot access the true state directly, only a measured (noisy) value

Hidden Markov chain

What can we say about this model in terms of P?
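In terms of P, the hidden Markov structure amounts to two conditional-independence assumptions and the joint factorization they imply. A sketch in standard notation, assuming $x_t$ for the hidden state and $y_t$ for the measurement at time $t$:

```latex
\begin{aligned}
P(x_t \mid x_{0:t-1}) &= P(x_t \mid x_{t-1})
  && \text{(Markov property of the hidden chain)} \\
P(y_t \mid x_{0:t}, y_{1:t-1}) &= P(y_t \mid x_t)
  && \text{(each measurement depends only on the current state)} \\
P(x_{0:T}, y_{1:T}) &= P(x_0) \prod_{t=1}^{T} P(x_t \mid x_{t-1})\, P(y_t \mid x_t)
  && \text{(joint factorization)}
\end{aligned}
```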

Goal:

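Estimate the current state using all the observations so far, $P(x_t \mid y_{1:t})$, where $x_t$ is the hidden state and $y_{1:t}$ are the observations up to time $t$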

[marginal distribution]

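We know the observation model $P(y_t \mid x_t)$

and the transition model $P(x_t \mid x_{t-1})$

Considering that this is a recursive process, we already have $P(x_{t-1} \mid y_{1:t-1})$ from the previous step

Combining these probabilities: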

Not relevant in this formula
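One standard way to write this combination, assuming the notation $x_t$ (hidden state) and $y_{1:t}$ (observations up to time $t$), is Bayes' rule applied to the newest observation $y_t$:

```latex
\begin{aligned}
P(x_t \mid y_{1:t})
  &= \frac{P(y_t \mid x_t, y_{1:t-1})\, P(x_t \mid y_{1:t-1})}{P(y_t \mid y_{1:t-1})} \\
  &= \frac{P(y_t \mid x_t)\, P(x_t \mid y_{1:t-1})}{P(y_t \mid y_{1:t-1})}
\end{aligned}
```

In the second line, $y_{1:t-1}$ is dropped from the likelihood: once $x_t$ is known, past observations carry no extra information about $y_t$.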

At a particular point, sum over the previous state $x_{t-1}$ (this removes it from the expression)
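As a sketch in standard notation ($x_t$ hidden state, $y_{1:t}$ observations), summing over the previous state gives the prediction step; the normalizing constant $P(y_t \mid y_{1:t-1})$ does not depend on $x_t$, so it can be replaced by proportionality:

```latex
\begin{aligned}
P(x_t \mid y_{1:t-1})
  &= \sum_{x_{t-1}} P(x_t, x_{t-1} \mid y_{1:t-1}) \\
  &= \sum_{x_{t-1}} P(x_t \mid x_{t-1})\, P(x_{t-1} \mid y_{1:t-1}) \\
P(x_t \mid y_{1:t})
  &\propto P(y_t \mid x_t) \sum_{x_{t-1}} P(x_t \mid x_{t-1})\, P(x_{t-1} \mid y_{1:t-1})
\end{aligned}
```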


Get rid of

Current:

Another manipulation

Final arrangement:

And this is the set of ideas we need for understanding particle filters.

[joint distribution]
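Written for the whole trajectory $x_{1:t}$, a standard form of the final arrangement (assuming the same notation) is shown below. Its three factors, the observation probability, the transition probability, and the result from the previous step, are the same ones that define the particle weights.

```latex
\begin{aligned}
P(x_{1:t} \mid y_{1:t})
  &= \frac{P(y_t \mid x_{1:t}, y_{1:t-1})\, P(x_t \mid x_{1:t-1}, y_{1:t-1})\, P(x_{1:t-1} \mid y_{1:t-1})}
          {P(y_t \mid y_{1:t-1})} \\
  &\propto P(y_t \mid x_t)\, P(x_t \mid x_{t-1})\, P(x_{1:t-1} \mid y_{1:t-1})
\end{aligned}
```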


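Computing this exactly can be very expensive: with N discrete states, the sum over the previous state costs O(N^2) per time step, and for continuous states (outside the linear-Gaussian case of the Kalman filter) the sums become integrals that usually have no closed form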
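To make the O(N^2) cost concrete, here is a minimal exact filter on a discrete state space, assuming a hypothetical transition matrix and hypothetical observation likelihoods (both random placeholders here). The helper name `grid_filter` is illustrative. The vector-matrix product is the sum over the previous state, and it touches all N×N transition entries at every step.

```python
import numpy as np

def grid_filter(prior, transition, likelihoods):
    """Exact recursive filter on N discrete states.

    transition[j, k]  = P(x_t = k | x_{t-1} = j)
    likelihoods[t, k] = P(y_t | x_t = k) for the observed y_t
    """
    belief = prior.copy()
    history = []
    for lik in likelihoods:
        predicted = belief @ transition  # sum over x_{t-1}: O(N^2) per step
        belief = lik * predicted         # multiply by the observation probability
        belief /= belief.sum()           # normalize (the "get rid of" constant)
        history.append(belief)
    return np.array(history)

rng = np.random.default_rng(2)
N, T = 50, 20
transition = rng.random((N, N))
transition /= transition.sum(axis=1, keepdims=True)  # each row is a distribution
prior = np.full(N, 1.0 / N)
likelihoods = rng.random((T, N))  # hypothetical placeholder values of P(y_t | x_t)
beliefs = grid_filter(prior, transition, likelihoods)
print(beliefs.shape)
```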

Let's use points (particles) to represent our state space

Let's approximate this joint distribution as a weighted sum of particle trajectories

In pictures

In formulas

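The joint distribution becomes a sum of weighted impulses, one per particle trajectory, and the weights sum to 1:

$$P(x_{1:t} \mid y_{1:t}) \approx \sum_{i=1}^{n} w_t^{(i)}\,\delta\big(x_{1:t} - x_{1:t}^{(i)}\big), \qquad \sum_{i=1}^{n} w_t^{(i)} = 1$$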
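A minimal sketch of the weighted-impulse idea in one step, which is plain importance sampling (the single-step building block of the particle filter). It assumes a hypothetical 1D target N(2, 1) that we can evaluate and a broad proposal N(0, 9) to draw particles from; `log_normal_pdf` is an illustrative helper. Each particle is an impulse, its normalized weight is the target-to-proposal density ratio, and any expectation becomes a weighted sum.

```python
import numpy as np

def log_normal_pdf(x, mean, std):
    """Log density of a Gaussian N(mean, std^2)."""
    return -0.5 * ((x - mean) / std) ** 2 - np.log(std * np.sqrt(2.0 * np.pi))

rng = np.random.default_rng(3)
n = 5000
particles = rng.normal(0.0, 3.0, size=n)  # samples from a broad proposal
log_w = log_normal_pdf(particles, 2.0, 1.0) - log_normal_pdf(particles, 0.0, 3.0)
weights = np.exp(log_w - log_w.max())     # subtract max for numerical stability
weights /= weights.sum()                  # weights now sum to 1

# Expectations under the weighted-impulse approximation are weighted sums
mean_est = np.sum(weights * particles)
var_est = np.sum(weights * (particles - mean_est) ** 2)
print(f"mean ~ {mean_est:.2f}, variance ~ {var_est:.2f}")
```

Working with log weights and subtracting the maximum avoids underflow, which matters once many likelihood factors are multiplied over time.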

The weight is proportional to three components:

1. Observation probability, $P(y_t \mid x_t^{(i)})$

2. Transition probability, $P(x_t^{(i)} \mid x_{t-1}^{(i)})$

3. The weight computed in the previous step, $w_{t-1}^{(i)}$

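We can build a recursive formula for the weight of a given particle i, where q is the distribution the particles were actually sampled from:

$$w_t^{(i)} \propto w_{t-1}^{(i)}\,\frac{P(y_t \mid x_t^{(i)})\,P(x_t^{(i)} \mid x_{t-1}^{(i)})}{q(x_t^{(i)} \mid x_{t-1}^{(i)}, y_t)}$$

When particles are sampled from the transition probability itself, the transition term cancels and the update reduces to $w_t^{(i)} \propto w_{t-1}^{(i)}\,P(y_t \mid x_t^{(i)})$.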
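A minimal sketch of the recursive weight update for n particles, working in log space. It assumes the general form with a sampling (proposal) distribution q in the denominator; `update_weights` is an illustrative helper, and all probability terms are passed in as log values, one per particle.

```python
import numpy as np

def update_weights(prev_weights, log_obs, log_trans, log_proposal):
    """w_t^(i) ∝ w_{t-1}^(i) * P(y_t|x_t^(i)) * P(x_t^(i)|x_{t-1}^(i)) / q(x_t^(i)|...).

    Every argument is an array of length n; the last three are logs.
    """
    log_w = np.log(prev_weights) + log_obs + log_trans - log_proposal
    log_w -= log_w.max()  # avoid underflow before exponentiating
    w = np.exp(log_w)
    return w / w.sum()    # normalize so the weights sum to 1

n = 4
prev = np.full(n, 1.0 / n)                       # equal weights (e.g. after resampling)
log_obs = np.log(np.array([0.1, 0.2, 0.3, 0.4]))  # hypothetical observation probabilities
zeros = np.zeros(n)  # proposal = transition, so their log-ratio is zero
w = update_weights(prev, log_obs, zeros, zeros)
print(w)
```

With equal previous weights and sampling from the transition probability, the new weights are just the normalized observation probabilities.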

The steps to the algorithm

1. Use n particles to represent distributions over hidden states; create initial values

2. Transition probability: Sample the next state from each particle

3. Calculate the weights and normalize them

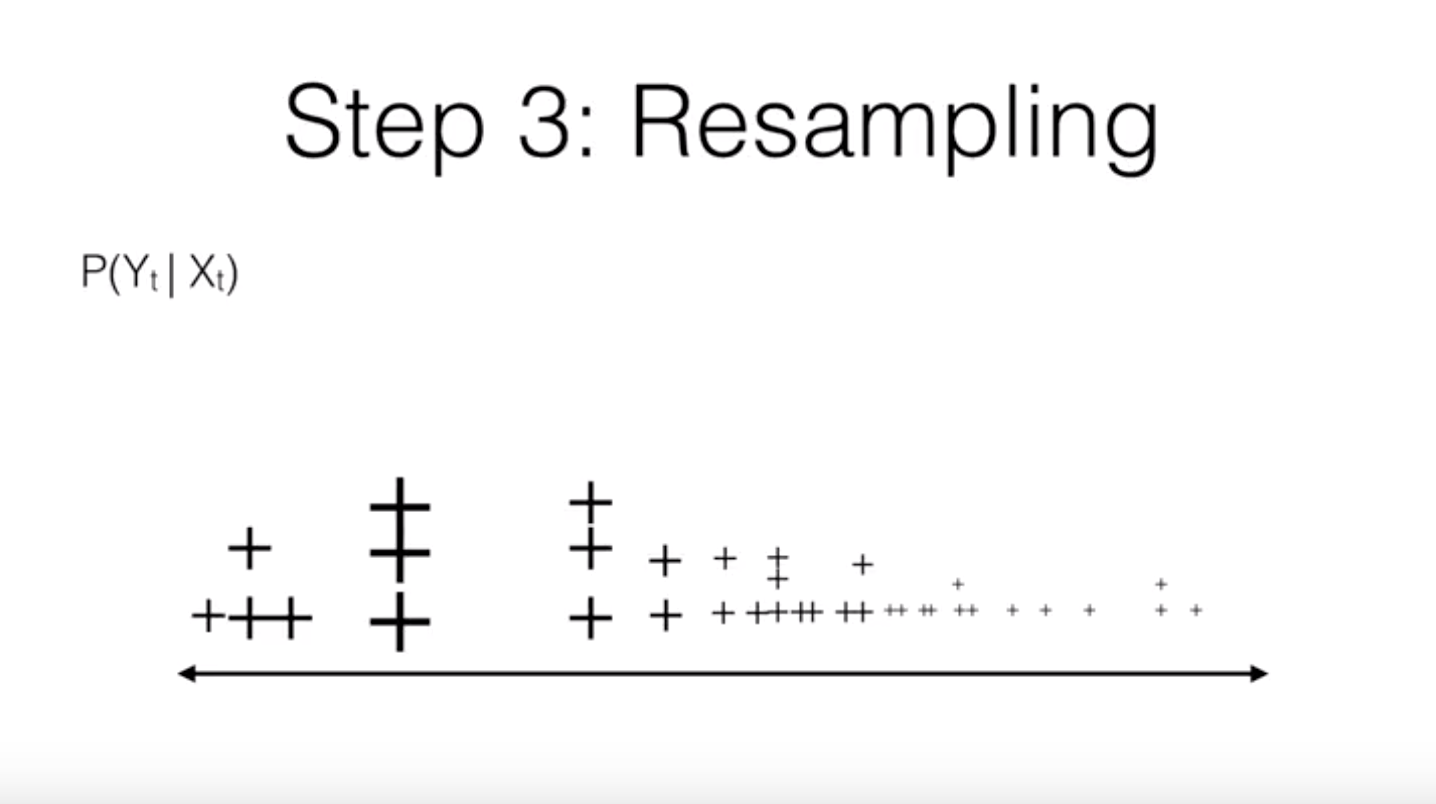

4. Resample: generate a new distribution of particles

loop
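The four steps can be sketched as a bootstrap particle filter (particles sampled from the transition probability, multinomial resampling at every step). This assumes a hypothetical 1D random-walk process model with Gaussian measurement noise; `bootstrap_particle_filter`, `q_std`, and `r_std` are illustrative names, not part of the lecture.

```python
import numpy as np

def bootstrap_particle_filter(ys, n_particles, q_std, r_std, rng):
    """Bootstrap particle filter for the hypothetical model
        x_t = x_{t-1} + w_t,  w_t ~ N(0, q_std^2)   (process model)
        y_t = x_t + v_t,      v_t ~ N(0, r_std^2)   (measurement model)
    Returns the weighted-mean state estimate at each step."""
    # 1. Represent the initial belief with n particles (broad initial spread)
    particles = rng.normal(0.0, 5.0, size=n_particles)
    estimates = np.empty(len(ys))
    for t, y in enumerate(ys):
        # 2. Sample each particle's next state from the transition probability
        particles = particles + rng.normal(0.0, q_std, size=n_particles)
        # 3. Weight by the observation probability and normalize
        #    (previous weights are equal after resampling, and the transition
        #    term cancels because we sampled from it)
        log_w = -0.5 * ((y - particles) / r_std) ** 2
        w = np.exp(log_w - log_w.max())
        w /= w.sum()
        estimates[t] = np.sum(w * particles)
        # 4. Resample: draw particle indices in proportion to their weights
        idx = rng.choice(n_particles, size=n_particles, p=w)
        particles = particles[idx]
    return estimates

rng = np.random.default_rng(4)
T, q_std, r_std = 200, 0.5, 1.0
true_x = np.cumsum(rng.normal(0.0, q_std, size=T))
ys = true_x + rng.normal(0.0, r_std, size=T)
est = bootstrap_particle_filter(ys, 1000, q_std, r_std, rng)
rmse_pf = np.sqrt(np.mean((est - true_x) ** 2))
rmse_raw = np.sqrt(np.mean((ys - true_x) ** 2))
print(f"RMSE raw measurements: {rmse_raw:.2f}, particle filter: {rmse_pf:.2f}")
```

This toy model is linear-Gaussian, so a Kalman filter would also work; the point is that nothing in the loop relies on linearity or Gaussianity, so the process and measurement models can be swapped for nonlinear ones.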

In pictures


A concrete example

1 sample/s

is a phase angle, given

This is the measurement model (it comes from domain knowledge).

This is the process model.

This is the state-space model; now we apply the algorithm.
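A sketch of how the algorithm applies to phase tracking, under a hypothetical model that is not necessarily the one from the lecture: the phase advances by a known rate `OMEGA` (hypothetical) plus noise at 1 sample/s, and each measurement is the pair [cos θ, sin θ] plus Gaussian noise. Names such as `track_phase` and `wrap` are illustrative. Because θ is an angle, the estimate uses a weighted circular mean rather than a plain average.

```python
import numpy as np

DT = 1.0      # 1 sample/s
OMEGA = 0.1   # hypothetical known angular rate (rad/s)
Q_STD = 0.05  # hypothetical process-noise std (rad)
R_STD = 0.3   # hypothetical measurement-noise std

def wrap(angle):
    """Wrap angles to (-pi, pi]."""
    return np.angle(np.exp(1j * angle))

def track_phase(ys, n_particles, rng):
    particles = rng.uniform(-np.pi, np.pi, size=n_particles)  # phase unknown at start
    estimates = np.empty(len(ys))
    for t, (c, s) in enumerate(ys):
        # Process model: advance the phase and add noise
        particles = wrap(particles + OMEGA * DT + rng.normal(0.0, Q_STD, n_particles))
        # Measurement model: y_t = [cos(theta), sin(theta)] + Gaussian noise
        sq_err = (c - np.cos(particles)) ** 2 + (s - np.sin(particles)) ** 2
        log_w = -0.5 * sq_err / R_STD**2
        w = np.exp(log_w - log_w.max())
        w /= w.sum()
        # Weighted circular mean, since averaging raw angles fails near +-pi
        estimates[t] = np.arctan2(np.sum(w * np.sin(particles)),
                                  np.sum(w * np.cos(particles)))
        # Resample in proportion to the weights
        particles = particles[rng.choice(n_particles, size=n_particles, p=w)]
    return estimates

rng = np.random.default_rng(5)
T = 100
true_phase = wrap(np.cumsum(OMEGA * DT + rng.normal(0.0, Q_STD, size=T)))
ys = np.column_stack([np.cos(true_phase), np.sin(true_phase)])
ys = ys + rng.normal(0.0, R_STD, size=(T, 2))
est = track_phase(ys, 1000, rng)
err = wrap(est - true_phase)
print(f"mean absolute phase error after warm-up: {np.mean(np.abs(err[10:])):.3f} rad")
```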
