Python

~ Crash Course ~

UNIFEI - June, 2018

Hello!

Prof. Maurilio

Hanneli

Prof. Bonatto

Moodle

<3

Questions

- What is this course?

- A: An attempt to bring interesting subjects to the courses

- Why are the slides in English?

- I am reusing some material that I prepared before

- What should I expect?

- This is the first time we give this course. We will be looking forward to hearing your feedback

Rules and Notes

- Don't skip classes

- The final assignment is optional

- Feel free to ask questions

- We plan to record the classes and upload the videos

DISCLAIMER

This is NOT a Python tutorial

[GIF time]

Goals

- First contact with Python

- Tools for science and Engineering

- How to ask proper questions to Google

- (try to) Use all the code that is already done

- Some recipes for transforming a problem into an automated task

Agenda

- Why Python?

- Getting Started (installation, important tools)

- Hello, Python! (super basic, boring and necessary intro)

- Our first problem: Getting online data

- Data and Numpy

- More interesting libraries

(Note: This list can change!)

Agenda

- Why Python?

- Getting Started (installation, important tools)

- Hello, Python! (super basic, boring and necessary intro)

- Our first problem: Getting online data

- Data and Numpy

- More interesting libraries

(Note: This list can change!)

The benefits of Python

The benefits of Python

- Easy to learn

- Lots of libraries

- Lots of libraries for science and engineering

- Good performance

- Good for Web Development

- Good for automating tasks

Agenda

- Why Python?

- Getting Started (installation, important tools)

- Hello, Python! (super basic, boring and necessary intro)

- Our first problem: Getting online data

- Data and Numpy

- More interesting libraries

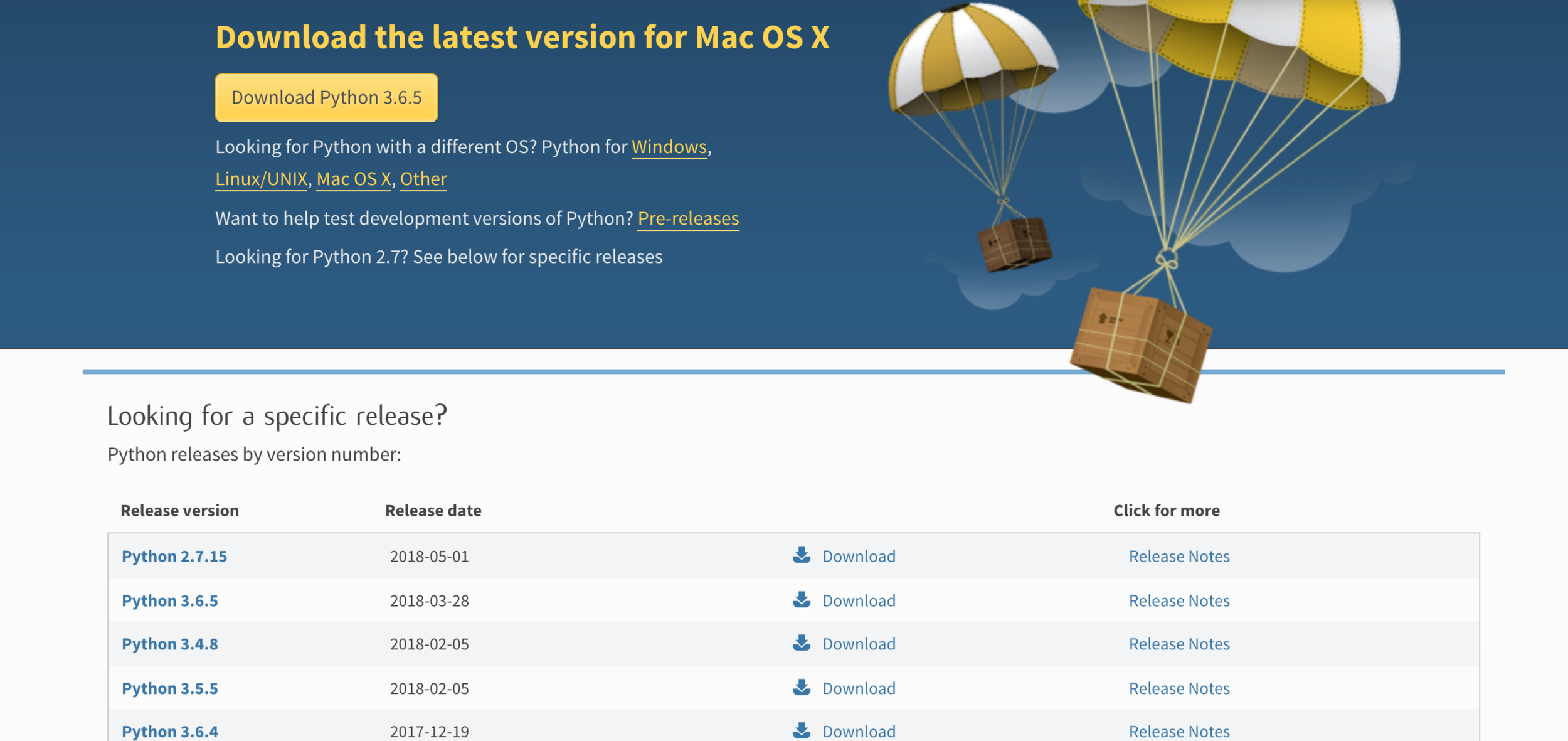

Linux, MacOS

Python is already there :)

Windows

https://www.python.org/downloads/windows/

Python 3 or Python 2?

Python 3, please

We don't really need to install Python to use it

Let's try it out!

There is a good place for writing and testing your Python code

Jupyter Notebooks

Jupyter Notebooks

- Easy to see the results

- Easy to test

- Good for documenting your research

- Easy to share

You can use it online or in your computer

How can we get all the cool libraries written in Python?

It is time for the black screen os h4ck3rs

You need to use your terminal (Linux, MacOS) or the Windows Prompt

pip

Once you install pip, you can have all the cool libraries in your machine

Ex: Jupyter Notebooks

pip install jupyterjupyter notebookQuestion:

Which libraries can I download?

In practice

Tips:

- Look for Open Source tools

- Check the source and see if the library is stable and has frequent updates

Agenda

- Why Python?

- Getting Started (installation, important tools)

- Hello, Python! (super basic, boring and necessary intro)

- Our first problem: Getting online data

- Data and Numpy

- More interesting libraries

We often need to collect online data (for research, personal projects, etc)

Scraping my wishlists at Amazon

What do we do now?

scrapy (install it with pip)

pip install scrapyGoal

1. Obtain the book title and price of all the items of the wishlist

2. Save all this information in a local file

Goal

1. Obtain the book title and price of all the items of the wishlist

2. Save all this information in a local file

Everything that you see in a webpage is...

Text

It is all a bunch of text with markups to indicate style, position of the elements,...

This is what we call HTML

image from amazon.com.br

We must dig into this text and find the information we want

The idea of the algorithm

For every book, get the title and price; move to the next book; stop when you reach the end of the wishlist

image from amazon.com.br

Can the library (scrapy) do that?

Smart move

scrapy shell 'https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW'response.text

How do I reproduce this action into a Jupyter Notebook or Python file?

Use the command 'scrapy shell' was unfair

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

print(response.text)short_parser.py

C:\> scrapy runspider short_parser.pyC:\> scrapy runspider short_parser.py > page.txtimport scrapy

from scrapy.crawler import CrawlerProcess

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

print(response.text)

process = CrawlerProcess({

'USER_AGENT': 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)'

})

process.crawl(ShortParser)

process.start()

the script will block here until the crawling is finishedshort_parser.py

C:\> python short_parser.py

TOO MANY THINGS GOING ON!!!111

Goal

1. Obtain the book title and price of all the items of the wishlist

2. Save all this information in a local file

Questions:

- What is 'class'?

- How did you know about that method parse?

- I have a big chunk of code. Now what? How do I extract the information I need?

Wrap Up

- Python basics

- Installation

- Using the tools and the language

- Extra: Where to learn more (Coursera)

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

print(response.text)short_parser.py

Read the Docs! https://doc.scrapy.org/

The Docs will help you using the library. They usually have examples

Going back to our problem

1. Obtain the book title and price of all the items of the wishlist

2. Save all this information in a local file

We still need to clean up the information inside the HTML and collect only a few information about the books

Since HTML has "markups", we can fetch the information we want

(whiteboard time - how does HTML represent the content?)

All the books are inside this element 'div#item-page-wrapper'

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

books_root = response.css("#item-page-wrapper")amazon_spider.py

Note: Python relies on indentation.

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

books_root = response.css("#item-page-wrapper")amazon_spider.py

What is the output of this method call?

Scrapy shell is useful here!

scrapy shell 'https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW'In [4]: books_root = response.css("#item-page-wrapper")

In [5]: books_root

Out[5]: [<Selector xpath="descendant-or-self::*[@id = 'item-page-wrapper']"

data='<div id="item-page-wrapper" class="a-sec'>]

Let's see the examples again. We have something called 'Selector'

In [4]: books_root = response.css("#item-page-wrapper")

In [5]: books_root

Out[5]: [<Selector xpath="descendant-or-self::*[@id = 'item-page-wrapper']"

data='<div id="item-page-wrapper" class="a-sec'>]

Out[5]: [<Selector xpath="descendant-or-self::*[@id = 'item-page-wrapper']"

data='<div id="item-page-wrapper" class="a-sec'>]

The information of every book is inside the 'div#itemMain_[WeirdCode]'

How can we select

'div#itemMain_[WeirdCode]' ?

We know this information always starts with itemMain_, followed by anything

We can use a Regular Expression - we tell to the algorithm: 'Select the items that are called #itemMain[I_dont_care]'

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

books_root = response.css("#item-page-wrapper")

books = books_root.xpath('//div[re:test(@id, "itemMain_*")]')amazon_spider.py

What is the output of this method call?

Back to Scrapy shell!

scrapy shell 'https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW'In [4]: books_root = response.css("#item-page-wrapper")

In [5]: books_root

Out[5]: [<Selector xpath="descendant-or-self::*[@id = 'item-page-wrapper']"

data='<div id="item-page-wrapper" class="a-sec'>]In [6]: books = books_root.xpath('//div[re:test(@id, "itemMain_*")]')

In [7]: books

Out[7]:

[<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I2Y87PJXIXDI9X" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I1B5CM41FDBQQU" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I3JPI776YSPWGL" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I2HEQ767OEDQND" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I12P3TV8OT8VUQ" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I2PUS0RW7H14QK" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I2Z1XDSQWY482G" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_I1UIZOCDVDCJCN" class='>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_IVVEST5SABEDL" class="'>,

<Selector xpath='//div[re:test(@id, "itemMain_*")]' data='<div id="itemMain_IO58MH5C88JMF" class="'>]Those are the books! Now we can obtain the title and the price

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

books_root = response.css("#item-page-wrapper")

books = books_root.xpath('//div[re:test(@id, "itemMain_*")]')amazon_spider.py

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

books_root = response.css("#item-page-wrapper")

books = books_root.xpath('//div[re:test(@id, "itemMain_*")]')

for book in books:amazon_spider.py

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls =

['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

books_root = response.css("#item-page-wrapper")

books = books_root.xpath('//div[re:test(@id, "itemMain_*")]')

for book in books:

book_info = book.xpath('.//div[re:test(@id, "itemInfo_*")]')

title = book_info.xpath('.//a[re:test(@id, "itemName_*")]/text()')

.extract_first()amazon_spider.py

Now we have the values of title and price, for every iteration

We want these results only when the code runs

yield

import scrapy

class ShortParser(scrapy.Spider):

name = 'shortamazonspider'

start_urls = ['https://www.amazon.com.br/gp/registry/wishlist/3DA4I0ZLH8ADW']

def parse(self, response):

books_root = response.css("#item-page-wrapper")

books = books_root.xpath('//div[re:test(@id, "itemMain_*")]')

for book in books:

book_info = book.xpath('.//div[re:test(@id, "itemInfo_*")]')

title = book_info.xpath('.//a[re:test(@id, "itemName_*")]/text()').extract_first()

price = book_info.xpath('.//span[re:test(@id, "itemPrice_*")]//span/text()').extract_first()

yield {'Title':title, 'Last price':price }amazon_spider.py

Going back to our problem

1. Obtain the book title and price of all the items of the wishlist

2. Save all this information in a local file

Problem: this code only considers the first books. There are many books. How can we fetch info from all the books?

(This is an optional homework!)

Note about scraping data: Digging into the HTML structure can be tricky

There may be many ways to extract the data and the code can get obsolete with any minor changes on the webpage

Agenda

- Why Python?

- Getting Started (installation, important tools)

- Hello, Python! (super basic, boring and necessary intro)

- Our first problem: Getting online data

- Data and Numpy

- More interesting libraries

New problem: We don't need to scrape the data. It comes from a .csv file

For example, the students of this course

From the form, I obtained a python_ext.csv file

julianafandrade@hotmail.com,Juliana Figueiredo de Andrade,34293,ECO

jianthomaz1994@gmail.com,Jian Thomaz De Souza ,2018013906,ECO

ranierefr@hotmail.com,Frederico Ranieri Rosa,2017020601,ECO

pievetp@gmail.com,Dilson Gabriel Pieve,2017006335,ECO

henrqueolvera@gmail.com,Henrique Castro Oliveira,2018003703,CCO

fabio.eco15@gmail.com,Fábio Rocha da Silva,31171,ECO

ftdneves@gmail.com,Frederico Tavares Direne Neves,2016014744,ECO

gabrielocarvalho@hotmail.com,Gabriel Oraboni Carvalho,33549,CCO

laizapaulino1@gmail.com,Laiza Aparecida Paulino da Silva,2016001209,SIN

rodrigogoncalvesroque@gmail.com,Rodrigo Gonçalves Roque,2017004822,CCO

jlucasberlinck@hotmail.com,João Lucas Berlinck Campos ,2016017450,ECO

sglauber26@gmail.com,Glauber Gomes de Souza,2016014127,CCO

ricardoweasley@hotmail.com,Ricardo Dalarme de Oliveira Filho,2018002475,CCO

caikepiza@live.com,Caike De Souza Piza,2016005404,ECO

henriquempossatto@gmail.com,Henrique Marcelino Possatto ,2017007539,ECO

souza.isa96@gmail.com,Isabela de Souza Silva,2017000654,SIN

felipetoshiohikita@gmail.com,FELIPE TOSHIO HIKITA DA SILVA,34948,ECOWe want to get this information into Python and analyse it

LMGTFY

'Load CSV file to Python'

Pandas Library

Install it with pip

pip install pandas

Recipe

- Add the python_ext.csv file to a directory

- Create a file students.py at the same directory

import pandas as pd

df = pd.read_csv('python_ext.csv')

print(df)3. On the terminal, run `python students.py`

Now we can manipulate this data

Send emails

Generate QRCodes

Statistics

df.groupby('curso').count()['matricula']Tip: Obtaining data

Agenda

- Why Python?

- Getting Started (installation, important tools)

- Hello, Python! (super basic, boring and necessary intro)

- Our first problem: Getting online data

- Data and Numpy

- More interesting libraries

Wrap Up

A complete Scraper

Pandas and Datasets

Exercises

Moodle

<3

Working with Arrays

LMGTFY

'library arrays in Python'

NumPy Library

Install it with pip

pip install numpyTry these examples from DataCamp

(note: you can skip the first part where they suggest installing Anaconda)

Extra - challenge from Kaggle (Jun 11th - Jun 17th)

Feedback

Thank you :)

Questions?

hannelitaa@gmail.com

@hannelita