PlanGAN - Model-based planning for multi-goal,

sparse-reward environments

Henry Charlesworth and Giovanni Montana

Warwick Manufacturing Group, University of Warwick

NeurIPS 2020

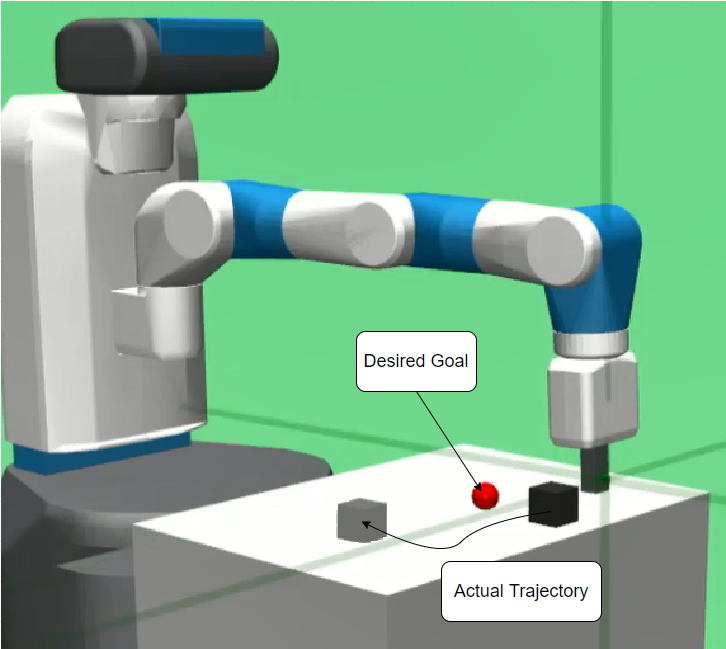

Motivation

- Sparse rewards - usually easier to specify when a task is complete rather than defining a reward function

- Want to train agents capable of carrying out multiple different tasks

- Currently, best RL methods for these kinds of task are model-free (Hindsight Experience Replay)

- But model-based RL can often be substantially more sample efficient - can we come up with a model-based approach that works for sparse-reward, multi-goal problems?

Methodology

- Build upon the same principle that underlies Hindsight Experience Replay - that trajectories that don't achieve the specified goal still contain useful information for how to achieve the goal(s) that actually were achieved

Methodology

- Aim: a goal-conditioned generative model (GAN) that can produce plausible trajectories that lead from current state towards a specified goal state

- Train on gathered experience - relabel desired goal to be a goal achieved at some later point during the observed trajectory.

- Gives us lots of example data to train on!

Methodology

- Gather data using a planner to plan actions using many trajectories produced by generative model

Results

- Massively outperforms normal model-based method

- Significantly more sample efficient than model-free methods designed for these kinds of problems!