Enhancing Long-Term Memory using Hierarchical Aggregate Tree in

Large Language Models

Aadharsh Aadhithya

CB.EN.U4AIE20001

Project Review : MiniProject

Guides: Dr. Sachin Kumar S & Dr. Soman K.P

Problem Statement

- Large Language Models are trained to complete sentences.

- These models are finetuned on "Instruction Following datasets" to make it follow instructions and respond in human like manner.

- Figuring out the right input to give to the model, to get the desired output, have turned out to be a "skill", and is often termed as prompt engineering.

Problem Statement

LLM

Prompt

Output

Problem Statement

LLM

Prompt

Output

LLMs are "closed". Only way to feed in information about external world is through the prompts.

Problem Statement

LLM

Prompt

Output

LLMs are "closed". Only way to feed in information about external world is through the prompts.

Problem Statement

LLM

Prompt

Output

LLMs are "closed". Only way to feed in information about external world is through the prompts.

However, amount of information you can put in prompts is limited by the model's "Context Length".

Problem Statement

LLM

Prompt

Output

LLMs are "closed". Only way to feed in information about external world is through the prompts.

However, amount of information you can put in prompts is limited by the model's "Context Length".

Hence there is a need to efficiently and smartly choose what to put in prompts based on user's input and past conversation.

Problem Statement

LLM

Prompt

Output

However, amount of information you can put in prompts is limited by the model's "Context Length".

Hence there is a need to efficiently and smartly choose what to put in prompts based on user's input and past conversation.

For example: A long Open Domain conversation, we cannot put entire conversation in context (It might go for even years)

Existing Methods

Existing Methods

Retrieval

Agumented

Memory

Agumented

[1]Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat, and Mingwei Chang. 2020. Retrieval augmented

language model pre-training. In ICLR.

[2] Patrick Lewis, Ethan Perez, Aleksandara Piktus, Fabio Petroni, Vladimir Karpukhin, Naman Goyal, Hein-

rich Kuttler, Mike Lewis, Wen tau Yih, Tim Rock- täschel, Sebastian Riedel, and Douwe Kiela. 2020.

Retrieval-augmented generation for knowledge- intensive nlp tasks. In NeurIPS.

[3]Xinchao Xu, Zhibin Gou, Wenquan Wu, Zheng-Yu Niu, Hua Wu, Haifeng Wang, and Shihang Wang. 2022b.

Long time no see! open-domain conversation with long-term persona memory. In Findings of ACL

- Some sort of retrieval system is employed to retrieve "Relevant chunk" and inject to prompt

- "Train" a memory management model

Existing Methods

Retrieval

Agumented

Memory

Agumented

[1]Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat, and Mingwei Chang. 2020. Retrieval augmented

language model pre-training. In ICLR.

[2] Patrick Lewis, Ethan Perez, Aleksandara Piktus, Fabio Petroni, Vladimir Karpukhin, Naman Goyal, Hein-

rich Kuttler, Mike Lewis, Wen tau Yih, Tim Rock- täschel, Sebastian Riedel, and Douwe Kiela. 2020.

Retrieval-augmented generation for knowledge- intensive nlp tasks. In NeurIPS.

[3]Xinchao Xu, Zhibin Gou, Wenquan Wu, Zheng-Yu Niu, Hua Wu, Haifeng Wang, and Shihang Wang. 2022b.

Long time no see! open-domain conversation with long-term persona memory. In Findings of ACL

- Some sort of retrieval system is employed to retrieve "Relevant chunk" and inject to prompt

- "Train" a memory management model

- We should go over entire corpus

- We need "training data" which is expensive

Existing Methods

Related Work

* Preprint Just Released on 29th Aug,2023

* Today: November

Lots of these papers and preprints gets accepted in conferences like NeurIPS, ACL, ICLR,etc. That underscores the relevance of the current work

Existing Methods

Related Work

This work proposes sort of "Running Summary" of the information.

Existing Methods

Related Work

This work proposes sort of "Running Summary" of the information.

However, prone to forgetting finer details, if required. And not scalable to data's like books,novels,etc.

Methedology

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

The tree consists of "nodes"

HST of memory length 2

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Nodes in HAT

Attributes

Operations

- ID

- text

- prev_states

- parent

- aggregator

- children

- Update Parent

- Add child

- Remove Child

- Update Text

- Hash Children

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Nodes in HAT

- ID - UID, generated using information of child nodes

- text - Content of this node

- prev_states - Hashmap/Dictionary of all childnodes this node have seen

- parent - Pointer to its parent

- aggregator - A function, that sets this node's property to aggregate of child nodes property

- children - List of child nodes

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Nodes in HAT

Attributes

Operations

- ID

- text

- prev_states

- parent

- aggregator

- children

- Update Parent

- Add child

- Remove Child

- Update Text

- Hash Children

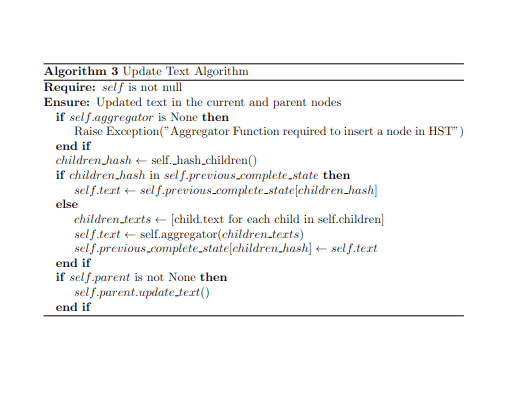

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Nodes in HAT

Operations

- Update Parent

- Add child

- Remove Child

- Update Text

- Hash Children

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Nodes in HAT

Operations

- Update Parent

- Add child

- Remove Child

- Update Text

- Hash Children

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Nodes in HAT

Operations

- Update Parent

- Add child

- Remove Child

- Update Text

- Hash Children

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Attributes

Operations

- memory length

- aggregator

- layers

- num layers

- Insert Node

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Attributes

- memory length - Number of nodes that should be aggregated in each layer

- aggregator - Function takes in text aggregates and returns text

- layers - List of nodes layer wise

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Attributes

Operations

- memory length

- aggregator

- layers

- num layers

- Insert Node

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Operations

- Insert Node

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Insert(node,L)

L.append(node)

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Insert(node,L)

Check if Number of nodes is a multiple of memory length. If not, add it as a child to last node in the above layer

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Insert(node,L)

Check if Number of nodes is a multiple of memory length. If yes, remove recent memory length childs from last node in above layer and add new node(np). call insert(np,L-1)

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Insert(np,L-1)

Check if Number of nodes is a multiple of memory length. If yes, remove recent memory length childs from last node in above layer and add new node(np). call insert(np,L-1)

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Insert(np,L-1)

This recursively goes on. If L==0, then create a new layer and add children

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Insert(np,L-1)

This recursively goes on. If L==0, then create a new layer and add children

Note: Because we are hashing children, When we remove children, we need not compute the summaries again.

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

Speaker 1 chat

Speaker 2 chat

Level 1 Summary

Level 2 Summary

Entire Summary

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

More Generally

Given a Task T, We should now find best strategy to traverse the tree

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

More Generally

Given a Task T, We should now find best strategy to traverse the tree

Actions at each state: Up,Down,Left,Right

states: nodes

Train an Agent?

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

More Generally

Given a Task T, We should now find best strategy to traverse the tree

Actions at each state: Up,Down,Left,Right

states: nodes

Train an Agent?

Baseline: GPT as Agent

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

Baseline: GPT as Agent

memory

agent

context

user query

action

mem

manager

if A== "OK"

Retrieved context

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

Baseline: GPT as Agent

memory

agent

context

user query

action

mem

manager

if A== "OK"

Retrieved context

Memory Module

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

Baseline: GPT as Agent

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

Baseline: GPT as Agent

Note: This framework can be used for a variety of tasks. However, now we concentrate on open domain conversations

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT for Conversations

Stratergy

Start from Root node. If you need details go down. If you need information from the past go right.

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

HAT

Insert(node)

Check if Number of nodes is a multiple of memory length. If yes, remove recent memory length childs from last node in above layer and add new node

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Prompt Used

You are an agent traversing a tree. Given a user message and details/personas from a conversation, your task is to determine if the context provided is sufficient to answer the user's question by the other speaker. You begin at the root node, which should contain a high-level summary of all information up to this point. If more information is needed, you can explore the tree, following the traversal history provided. Avoid circular paths and make sure to explore effectively based on the traversal history. If after 7-8 traversals the information remains unavailable, use the 'U' action. If you reach the bottom or edge of the tree, reset with the 'S' action. The tree's structure is as follows:

1) Tree depth corresponds to information resolution. Leaf nodes are individual sentences from chats, and higher nodes summarize their children. Descend for more details, ascend for high-level information.

2) Horizontal position reflects time. The rightmost node is the most recent. Depending on the query and information, decide whether to search in the past or future. Move left for past information, right for future information.

Layer '0' is the root. Layer number increases with depth, and node number increases to the right. Stay within the permitted limits of layers and nodes.

Pay attention to the 'err' field for feedback on your moves.

Your actions can only be:

- U: Up

- D: Down

- R: Right

- L: Left

- S: Reset (Return to root node)

- O: Current Information Sufficient to Respond

- U: Current Information Insufficient to Respond

#############

Traversal History: {self.memory.get_current_traversal()}

Latest Moves and Reasons: {self.memory.traversals[:-3]}

#############

Current Location: Layer: {self.memory.layer_num}, Node: {self.memory.node_num}

Total Layers: {len(self.memory.hst.layers)},

Total Nodes in Current Layer: {len(self.memory.hst.layers[self.memory.layer_num]) if (self.memory.layer_num < len(self.memory.hst.layers)) else 0}

#############

Details/Personas: {self.memory._get_memory()}

#############

User Message: {user_message}

############

Errors: {err}

############

Action:

Reason: (Tell reason why you chose the action)

"""

Methodology

I propose to use a new data structure called "Hierarchical Aggregate Tree" (HAT)

Prompt Used

You are in a natural conversation. Given with a memory and user query, respond (You are Speaker 2) such that you continue the conversation and

answer the user query if any from the memory. If memory is not sufficient to answer, tell i dont know.

###########

memory: {mem}

###########

user query: {user_query}

##########

Speaker 2:

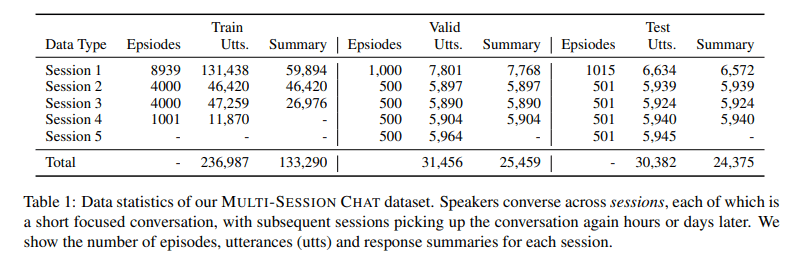

Dataset and Evaluation

Dataset

We use the "Multi Session Chat" dataset [5]

[5] Xu, Jing, Arthur Szlam, and Jason Weston. "Beyond goldfish memory: Long-term open-domain conversation." arXiv preprint arXiv:2107.07567 (2021).

Dataset

We use the "Multi Session Chat" dataset [5]

[5] Xu, Jing, Arthur Szlam, and Jason Weston. "Beyond goldfish memory: Long-term open-domain conversation." arXiv preprint arXiv:2107.07567 (2021).

Metrics

BLEU (Bilingual Evaluation Understudy)

weights

ratio of the number of n-grams in the candidate that match n-grams in any reference

Brevity Penalty

Metrics

F1

Precision: proportion of words (or n-grams) in the candidate sentence that are also found in the reference sentence.

Recall: proportion of words (or n-grams) in the reference sentence that are also found in the candidate sentence

Metrics

DISTINCT (Distinct-N)

Measures the diversity of the response

Results

Results

Text

- The MSC dataset has about 500 conversations in its Test set, spanning over 5 Sessions

- We Follow the Methodology described, and compare the summary generated at end of every session with the gold summary given in the dataset

Results

Text

| F1 | BLEU-1/2 | |

|---|---|---|

| Ours | 38.88 | 37.98/16.18 |

| [7] | 25.35 | 28.52/11.63 |

[7]Wang, Qingyue, et al. "Recursively summarizing enables long-term dialogue memory in large language models." arXiv preprint arXiv:2308.15022 (2023).

* Results are reported just for Session 2. Results for other sessions are yet to be obtained

| DISTINCT-1/2 | |

|---|---|

| Ours | 0.76/0.88 |

Results

Text

Results

Text

Beyond Numbers: A example

Speaker 1: What kind of car do you own? I have a jeep.

Speaker 2: I don't own my own car! I actually really enjoying walking and running, but then again, I live in a small town and semi-close to work.

Speaker 1: Ah I see! I like going to the gym to work out.

Speaker 2: I'm a computer programmer. What do you do for work?

Speaker 1: I work in marketing. Do you have any hobbies?

Speaker 2: I love to grill! I love meat, especially steak.

Speaker 1: Oh neat! I don't eat meat but I do like some grilled veggies.

Speaker 2: My family is from Alaska. Where are you from?

Speaker 1: I'm from New England. It must be cold up there.

Speaker 2: It's so cold! I think that's why I love wearing warm pants!

Speaker 1: Haha I feel you. I love wearing warm pants in the winter.

Speaker 2: Do you play any sports?

Session 2

Results

Text

Beyond Numbers: A example

Speaker 1: What kind of car do you own? I have a jeep.

Speaker 2: I don't own my own car! I actually really enjoying walking and running, but then again, I live in a small town and semi-close to work.

Speaker 1: Ah I see! I like going to the gym to work out.

Speaker 2: I'm a computer programmer. What do you do for work?

Speaker 1: I work in marketing. Do you have any hobbies?

Speaker 2: I love to grill! I love meat, especially steak.

Speaker 1: Oh neat! I don't eat meat but I do like some grilled veggies.

Speaker 2: My family is from Alaska. Where are you from?

Speaker 1: I'm from New England. It must be cold up there.

Speaker 2: It's so cold! I think that's why I love wearing warm pants!

Speaker 1: Haha I feel you. I love wearing warm pants in the winter.

Speaker 2: Do you play any sports?

Session 2

Session 3

Speaker 1:Did you manage to go for a run today?

Results

Text

Beyond Numbers: A example

Session 3

Speaker 1:Did you manage to go for a run today?

Note: No where did Speaker 2 Explicitly mentioned he went for a run today till now

Usual LLM Behaviour

Results

Text

Beyond Numbers: A example

Speaker 1:Did you manage to go for a run today?

Our Method

-

Action: 'D' (Descending the tree)

- Reason: The user is asking whether the other speaker went out on a run today. This requires exploring the more recent nodes in the tree for relevant information.

-

Action: 'S' (Resetting and exploring the tree)

- Reason: The current location in the tree does not contain specific information related to running, necessitating a reset to find relevant information.

-

Action: 'D' (Descending the tree)

- Reason: To find information about going for a run, descending the tree is necessary to explore previous messages.

-

Action: '' (No specific action)

- Reason: Not provided.

-

Action: 'D' (Descending the tree)

- Reason: To check if there is any mention of the other speaker going for a run, descending the tree is required to explore information in lower layers.

-

Action: 'R' (Moving right in the tree)

- Reason: Having already explored downwards and reset, moving to the right is necessary to find information possibly in the future.

-

Action: 'D' (Descending the tree)

- Reason: To find related information about going out on a run today, it's best to explore the lower nodes.

-

Action: 'O' (OK with the current context)

- Reason: Given the user's query about going out for a run and Speaker 2's persona, which includes enjoyment in walking and running, it is assumed Speaker 2 might have gone out for a run.

- Layer: 3

- Node: 1

- Final Memory: "Speaker 2: I don't own my own car! I actually really enjoy walking and running, but then again, I live in a small town and am semi-close to work."

Results

Text

Beyond Numbers: A example

Speaker 1:Did you manage to go for a run today?

Our Method

-

Action: 'D' (Descending the tree)

- Reason: The user is asking whether the other speaker went out on a run today. This requires exploring the more recent nodes in the tree for relevant information.

-

Action: 'S' (Resetting and exploring the tree)

- Reason: The current location in the tree does not contain specific information related to running, necessitating a reset to find relevant information.

-

Action: 'D' (Descending the tree)

- Reason: To find information about going for a run, descending the tree is necessary to explore previous messages.

-

Action: '' (No specific action)

- Reason: Not provided.

-

Action: 'D' (Descending the tree)

- Reason: To check if there is any mention of the other speaker going for a run, descending the tree is required to explore information in lower layers.

-

Action: 'R' (Moving right in the tree)

- Reason: Having already explored downwards and reset, moving to the right is necessary to find information possibly in the future.

-

Action: 'D' (Descending the tree)

- Reason: To find related information about going out on a run today, it's best to explore the lower nodes.

-

Action: 'O' (OK with the current context)

- Reason: Given the user's query about going out for a run and Speaker 2's persona, which includes enjoyment in walking and running, it is assumed Speaker 2 might have gone out for a run.

- Layer: 3

- Node: 1

- Final Memory: "Speaker 2: I don't own my own car! I actually really enjoy walking and running, but then again, I live in a small town and am semi-close to work."

Results

Text

Beyond Numbers: A example

Speaker 1:Did you manage to go for a run today?

Our Method

-

Action: 'D' (Descending the tree)

- Reason: The user is asking whether the other speaker went out on a run today. This requires exploring the more recent nodes in the tree for relevant information.

-

Action: 'S' (Resetting and exploring the tree)

- Reason: The current location in the tree does not contain specific information related to running, necessitating a reset to find relevant information.

-

Action: 'D' (Descending the tree)

- Reason: To find information about going for a run, descending the tree is necessary to explore previous messages.

-

Action: '' (No specific action)

- Reason: Not provided.

-

Action: 'D' (Descending the tree)

- Reason: To check if there is any mention of the other speaker going for a run, descending the tree is required to explore information in lower layers.

-

Action: 'R' (Moving right in the tree)

- Reason: Having already explored downwards and reset, moving to the right is necessary to find information possibly in the future.

-

Action: 'D' (Descending the tree)

- Reason: To find related information about going out on a run today, it's best to explore the lower nodes.

-

Action: 'O' (OK with the current context)

- Reason: Given the user's query about going out for a run and Speaker 2's persona, which includes enjoyment in walking and running, it is assumed Speaker 2 might have gone out for a run.

- Layer: 3

- Node: 1

- Final Memory: "Speaker 2: I don't own my own car! I actually really enjoy walking and running, but then again, I live in a small town and am semi-close to work."

When we return this reason and context, LLM is more likely to tell yes I went for a run

Results

Text

Beyond Numbers: A example

Speaker 1:Did you manage to go for a run today?

Our Method

When we return this reason and context, LLM is more likely to tell yes I went for a run

Actual Response: 'Yes I actually was able too. I am considering joining the local gym. Do you prefer going to the gym?' (From Dataset)

Directions

Future Directions

- Can we "Train" our agent for sequential Decision Making, instead of relying on GPT?

- Now we compared just summaries. We should compare the actual responses and report that results

- Try Different Strategies for exploring the tree