19AIE205

Sleep stage classification using different ML algorithms

Python for Machine Learning

Aadharsh Aadhithya - CB.EN.U4AIE20001

Anirudh Edpuganti - CB.EN.U4AIE20005

Madhav Kishore - CB.EN.U4AIE20033

Onteddu Chaitanya Reddy - CB.EN.U4AIE20045

Pillalamarri Akshaya - CB.EN.U4AIE20049

Team-1


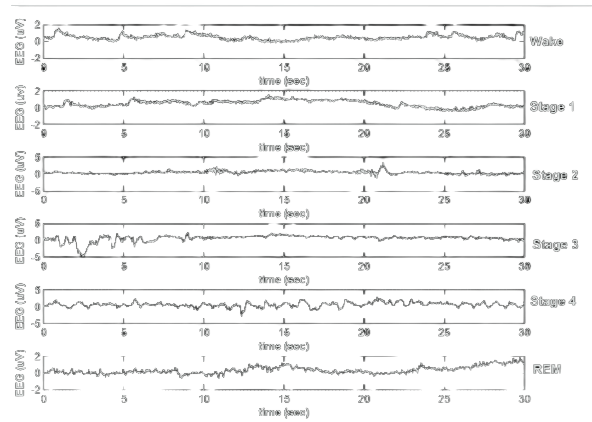

Dataset

| Wave / event | Frequency range (Hz) |
|---|---|
| Delta | 0.5-4 |
| Theta | 4-8 |
| Alpha | 8-13 |
| Beta | 13-22 |
| K-Complex | 0.5-1.5 |

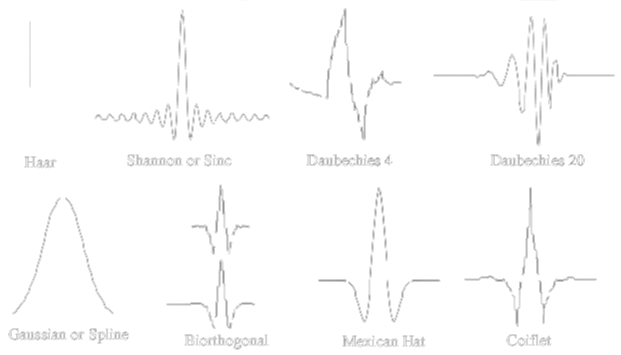

Continuous Wavelet Transform

Time-Frequency Analysis Framework

(Figure labels: Similarity Measure, Mother Wavelet)
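The similarity-measure idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the team's implementation: it assumes a complex Morlet mother wavelet (the slides do not name the wavelet), and `morlet` and `cwt_morlet` are hypothetical helpers. Each CWT coefficient is the inner product between the signal and a scaled, shifted copy of the mother wavelet, so it is large wherever the signal locally resembles the wavelet at that scale.

```python
import numpy as np

def morlet(u, w0=6.0):
    """Complex Morlet mother wavelet (small admissibility correction term omitted)."""
    return np.pi ** -0.25 * np.exp(1j * w0 * u) * np.exp(-0.5 * u ** 2)

def cwt_morlet(x, fs, freqs, w0=6.0):
    """Hypothetical helper: |CWT| of x, one row per analysis frequency (Hz), one column per sample.

    Assumes x is longer than the widest wavelet (about 8 * w0 / (2 * pi * min(freqs)) seconds).
    """
    x = np.asarray(x, dtype=float)
    out = np.empty((len(freqs), len(x)))
    for i, f in enumerate(freqs):
        s = w0 / (2 * np.pi * f)                  # scale (s) whose centre frequency is f
        n_half = int(round(4 * s * fs))           # keep +-4 envelope widths of the wavelet
        t = np.arange(-n_half, n_half + 1) / fs
        psi = morlet(t / s, w0) / np.sqrt(s)      # scaled, energy-normalised wavelet
        # Similarity with every shifted copy: cross-correlation, written as a convolution
        # with the time-reversed complex conjugate of the wavelet
        coef = np.convolve(x, np.conj(psi[::-1]), mode="same") / fs
        out[i] = np.abs(coef)
    return out

# Example: a 10 Hz test tone, 30 s long, sampled at 100 Hz (values chosen for illustration)
fs = 100
t = np.arange(30 * fs) / fs
x = np.sin(2 * np.pi * 10 * t)
freqs = [2.0, 10.0, 20.0]
tfr = cwt_morlet(x, fs, freqs)
print(tfr.shape)              # (3, 3000)
print(tfr[:, 1500].argmax())  # 1, i.e. the 10 Hz row responds most strongly
```

In practice a library routine such as PyWavelets' `pywt.cwt` would normally replace the hand-written loop; the explicit version just makes the "similarity with a scaled, shifted mother wavelet" step visible.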

| Wave / event | Frequency range (Hz) |
|---|---|
| Delta | 0.5-4 |
| Theta | 4-8 |
| Alpha | 8-13 |
| Beta1 | 13-22 |
| Beta2 | 22-35 |
| Sleep spindles | 12-14 |
| K-Complex | 0.5-1.5 |

CWT → Features
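One plausible way to go from a CWT time-frequency map to a feature vector is to average the coefficient magnitude inside each band of the table. The slides do not state the exact feature definition, so the averaging, the half-open band edges and the helper name `band_features` are assumptions made for this sketch.

```python
import numpy as np

# Frequency bands from the table (Hz); lower edge inclusive, upper edge exclusive (assumption)
BANDS = {
    "Delta": (0.5, 4),
    "Theta": (4, 8),
    "Alpha": (8, 13),
    "Beta1": (13, 22),
    "Beta2": (22, 35),
    "Sleep spindles": (12, 14),
    "K-Complex": (0.5, 1.5),
}

def band_features(tfr, freqs, bands=BANDS):
    """Hypothetical helper: mean CWT magnitude inside each band, one feature per band.

    tfr:   array of shape (n_freqs, n_samples), e.g. |CWT| of one EEG epoch
    freqs: analysis frequency (Hz) of each row of tfr
    """
    freqs = np.asarray(freqs, dtype=float)
    feats = {}
    for name, (lo, hi) in bands.items():
        rows = (freqs >= lo) & (freqs < hi)
        # NaN when none of the analysed frequencies falls inside the band
        feats[name] = float(tfr[rows].mean()) if rows.any() else float("nan")
    return feats

# Toy time-frequency map: rows at 1, 2, ..., 34 Hz, energy only in the 10 Hz row
freqs = np.arange(1, 35)
tfr = np.zeros((len(freqs), 100))
tfr[freqs == 10] = 1.0
feats = band_features(tfr, freqs)
print(feats["Alpha"], feats["Delta"])  # 0.2 0.0
```

Note that the bands overlap (K-complexes sit inside Delta, sleep spindles straddle Alpha and Beta1), so the resulting features are correlated rather than independent.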

Random Forest

Dataset

| idx | x1 | x2 | x3 | y |
|---|---|---|---|---|
| 1 | 1.2 | 3.4 | 4.5 | 0 |
| 2 | 1.5 | 2.3 | 4.1 | 1 |
| 3 | 1.1 | 3.1 | 2.8 | 3 |
| . | . | . | . | . |
| . | . | . | . | . |
| . | . | . | . | . |

Data 1

Data 1 is a bootstrapped dataset: rows are drawn from the dataset at random with replacement, so a row can appear more than once (here row 2 is drawn twice).

| idx | x1 | x2 | x3 | y |
|---|---|---|---|---|
| 3 | 1.1 | 3.1 | 2.8 | 3 |
| . | . | . | . | . |
| . | . | . | . | . |
| 1 | 1.2 | 3.4 | 4.5 | 0 |
| 2 | 1.5 | 2.3 | 4.1 | 1 |
| 2 | 1.5 | 2.3 | 4.1 | 1 |

Random Feature Selection

Repeating the bootstrap gives five bootstrapped datasets, Data 1 to Data 5. Each tree also works with only a random subset of the features: in the prediction step, the tree built on Data 1 uses x1 and x2, and the tree built on Data 2 uses x1 and x3.
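The two sources of randomness can be sketched with the standard library alone. This is an illustration under stated assumptions: only the three fully listed rows of the example dataset are used (the elided "." rows are not filled in), each tree gets 2 of the 3 features as in the slides, and `bootstrap` and `random_features` are hypothetical helper names.

```python
import random

# The three fully listed rows of the example dataset: (x1, x2, x3, y)
DATASET = [
    (1.2, 3.4, 4.5, 0),
    (1.5, 2.3, 4.1, 1),
    (1.1, 3.1, 2.8, 3),
]
FEATURES = ["x1", "x2", "x3"]

def bootstrap(rows, rng):
    """Same number of rows, drawn uniformly WITH replacement (duplicates allowed)."""
    return [rng.choice(rows) for _ in range(len(rows))]

def random_features(features, k, rng):
    """k distinct feature names, drawn without replacement, for one tree."""
    return sorted(rng.sample(features, k))

rng = random.Random(0)  # fixed seed so the sketch is reproducible
forest_inputs = []
for i in range(5):  # Data 1 ... Data 5
    data_i = bootstrap(DATASET, rng)
    feats_i = random_features(FEATURES, 2, rng)
    forest_inputs.append((data_i, feats_i))
    print(f"Data {i + 1}: rows={data_i}, features={feats_i}")
```

The slides draw the feature subset once per tree. In scikit-learn's `RandomForestClassifier`, by contrast, `max_features` controls how many features are considered at each split, so a fresh subset is drawn at every split.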

Decision Tree: one decision tree is trained on each bootstrapped dataset, Data 1 to Data 5.

Predict

New sample:

| x1 | x2 | x3 |
|---|---|---|
| 1.3 | 3.8 | 4.9 |

The tree built on Data 1 only sees x1 and x2:

| x1 | x2 |
|---|---|
| 1.3 | 3.8 |

Prediction: 0

The tree built on Data 2 only sees x1 and x3:

| x1 | x3 |
|---|---|
| 1.3 | 4.9 |

Predictions of the five trees for the new sample:

| Tree trained on | Prediction |
|---|---|
| Data 1 | 0 |
| Data 2 | 0 |
| Data 3 | 3 |
| Data 4 | 1 |
| Data 5 | 0 |

Majority Voting: class 0 gets three of the five votes (classes 3 and 1 get one each), so the new sample (x1, x2, x3) = (1.3, 3.8, 4.9) is labelled as y = 0.
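The voting step is a one-liner with `collections.Counter`. The tie-breaking rule in this sketch (the label seen first wins) is simply what `Counter.most_common` does; the slides do not specify a tie rule, and `majority_vote` is a hypothetical helper name.

```python
from collections import Counter

def majority_vote(predictions):
    """Most frequent class label; on a tie, the label seen first wins (Counter.most_common order)."""
    return Counter(predictions).most_common(1)[0][0]

# Predictions of the trees trained on Data 1 ... Data 5, taken from the slide
tree_predictions = [0, 0, 3, 1, 0]
print(majority_vote(tree_predictions))  # 0
```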

Support Vector Machine

Binary Classification: Yes / No

Multi-Class Classification: Class 1, Class 2, Class 3

Types (ways of extending a binary SVM to multi-class classification): One vs Rest, One vs One

One vs One

One binary SVM is trained for every pair of labels. With Class 1, Class 2 and Class 3 that gives three classifiers (1 vs 2, 1 vs 3, 2 vs 3), and new data is assigned the label that wins the most pairwise votes.
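A minimal NumPy sketch of One vs One, under explicit assumptions: the binary classifier is a plain linear soft-margin SVM trained by full-batch subgradient descent on the hinge loss (a simple stand-in, not a kernel SVM and not necessarily what the team used), the toy data are made up, and the helper names are hypothetical.

```python
import numpy as np
from itertools import combinations

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=1000):
    """Soft-margin linear SVM (labels +1/-1) by full-batch subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b)))."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        active = y * (X @ w + b) < 1          # points inside the margin or misclassified
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def fit_one_vs_one(X, y):
    """One binary SVM per pair of labels: k * (k - 1) / 2 classifiers."""
    models = {}
    for a, c in combinations(np.unique(y), 2):
        mask = (y == a) | (y == c)                 # keep only the two classes of this pair
        y_pm = np.where(y[mask] == a, 1.0, -1.0)   # +1 for label a, -1 for label c
        models[(a, c)] = train_linear_svm(X[mask], y_pm)
    return models

def predict_one_vs_one(models, X, classes):
    """Each pairwise SVM votes for one of its two labels; the most-voted label wins."""
    index = {lab: j for j, lab in enumerate(classes)}
    votes = np.zeros((len(X), len(classes)), dtype=int)
    for (a, c), (w, b) in models.items():
        a_wins = X @ w + b >= 0
        votes[a_wins, index[a]] += 1
        votes[~a_wins, index[c]] += 1
    return classes[votes.argmax(axis=1)]

# Three well-separated toy clusters (made-up data) for Class 1, Class 2, Class 3
X = np.array([[0.0, 0.0], [0.5, 0.2], [0.2, 0.6], [-0.3, 0.1],
              [5.0, 0.0], [5.4, 0.3], [4.7, -0.2], [5.1, 0.5],
              [0.0, 5.0], [0.3, 5.2], [-0.2, 4.8], [0.4, 4.6]])
y = np.array([1] * 4 + [2] * 4 + [3] * 4)
classes = np.unique(y)
models = fit_one_vs_one(X, y)
new_data = np.array([[0.1, 0.1], [4.9, 0.1], [0.1, 4.9]])
print(len(models), predict_one_vs_one(models, new_data, classes))
```

For reference, scikit-learn's `SVC` already applies the one-vs-one scheme internally when it is given more than two classes.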

One vs Rest

Intuition: with classes 1, 2 and 3, one binary classifier is trained per class to separate that class from all the others. To label new data, we ask each classifier in turn, and the new data gets the class whose classifier claims it most confidently.
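The "ask each classifier" intuition can be sketched the same way. As before, this is a hedged illustration: a linear soft-margin SVM trained by subgradient descent stands in for whatever SVM the team used, "most confident" is taken to mean the highest decision score, and the data and helper names are made up.

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=1000):
    """Soft-margin linear SVM (labels +1/-1) by full-batch subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b)))."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        active = y * (X @ w + b) < 1          # points inside the margin or misclassified
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def fit_one_vs_rest(X, y):
    """One binary SVM per class: that class (+1) against all the others (-1)."""
    return {c: train_linear_svm(X, np.where(y == c, 1.0, -1.0)) for c in np.unique(y)}

def predict_one_vs_rest(models, X):
    """Ask every classifier; pick the class whose classifier gives the highest score."""
    classes = np.array(list(models))
    scores = np.column_stack([X @ w + b for w, b in models.values()])
    return classes[scores.argmax(axis=1)]

# Three well-separated toy clusters (made-up data) for classes 1, 2, 3
X = np.array([[0.0, 0.0], [0.5, 0.2], [0.2, 0.6], [-0.3, 0.1],
              [5.0, 0.0], [5.4, 0.3], [4.7, -0.2], [5.1, 0.5],
              [0.0, 5.0], [0.3, 5.2], [-0.2, 4.8], [0.4, 4.6]])
y = np.array([1] * 4 + [2] * 4 + [3] * 4)
models = fit_one_vs_rest(X, y)
new_data = np.array([[0.1, 0.1], [4.9, 0.1], [0.1, 4.9]])
print(predict_one_vs_rest(models, new_data))
```

One vs Rest needs only k classifiers instead of k(k-1)/2, but each one is trained on the whole, usually imbalanced, dataset. scikit-learn's `LinearSVC` uses this one-vs-rest strategy by default.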

K-Nearest Neighbours

New data is labelled by looking at its K nearest training points (the slides step through K=1, K=2 and K=4): it takes the most common label among those neighbours.
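A minimal standard-library sketch of the procedure, assuming Euclidean distance and a made-up 2-D training set; `knn_predict` is a hypothetical helper. Even values of K such as 2 and 4 can produce ties, so the sketch states its tie rule explicitly.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x_new, k):
    """Label x_new by majority vote among its k nearest training points (Euclidean distance)."""
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x_new))[:k]
    labels = [train_y[i] for i in nearest]   # ordered from closest to farthest
    # Counter.most_common keeps first-seen order among equal counts, so a tie goes to
    # the label whose nearest occurrence is closest to x_new
    return Counter(labels).most_common(1)[0][0]

# Toy 2-D training set (made up for illustration)
train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_y = ["A", "A", "A", "B", "B", "B"]
for k in (1, 2, 4):
    print(k, knn_predict(train_X, train_y, (2, 2), k))  # prints A for each K
```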

Multi Layer Network

Forward Pass

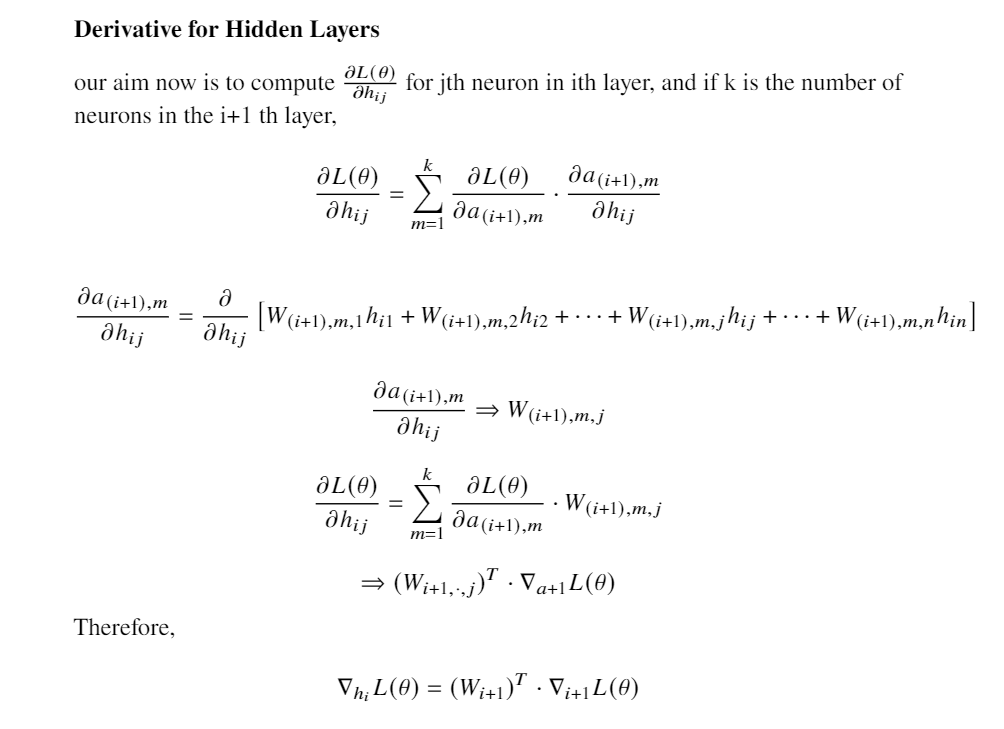
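A vectorized forward pass can be sketched in NumPy. It uses sigmoid hidden units and a softmax output, matching the activations named in the backpropagation slides; the layer sizes (7 inputs, e.g. one per band in the table, 16 hidden units, 5 output classes) and the random weights are illustrative assumptions only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Subtracting the row max improves numerical stability and does not change the result
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def forward(X, W1, b1, W2, b2):
    """Forward pass of a one-hidden-layer network for a batch X (n_samples x n_features)."""
    z1 = X @ W1 + b1      # hidden pre-activations
    a1 = sigmoid(z1)      # hidden activations
    z2 = a1 @ W2 + b2     # output scores (logits)
    y_hat = softmax(z2)   # class probabilities
    return z1, a1, z2, y_hat

rng = np.random.default_rng(0)
n_features, n_hidden, n_classes = 7, 16, 5   # illustrative sizes only
W1, b1 = rng.normal(scale=0.1, size=(n_features, n_hidden)), np.zeros(n_hidden)
W2, b2 = rng.normal(scale=0.1, size=(n_hidden, n_classes)), np.zeros(n_classes)
X = rng.normal(size=(4, n_features))         # a batch of 4 random input vectors
_, _, _, y_hat = forward(X, W1, b1, W2, b2)
print(y_hat.shape, y_hat.sum(axis=1))        # each row sums to 1 (up to rounding)
```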

Backpropagation

We can derive

To Be Computed

Here, we can resort to using the chain rule. How does g change with x? Changing x changes h(x), and changing h changes g:

$$\frac{dg}{dx} = \frac{dg}{dh}\,\frac{dh}{dx}$$
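A quick numerical check makes the chain rule concrete. The choice of functions is purely illustrative: h(x) = 2x + 1 as the inner function and the sigmoid as the outer function g. The product dg/dh · dh/dx is compared with a central finite-difference estimate of dg/dx.

```python
import math

def h(x):
    return 2.0 * x + 1.0                     # inner function (illustrative)

def g(h_val):
    return 1.0 / (1.0 + math.exp(-h_val))    # outer function: sigmoid

def dg_dx_chain(x):
    s = g(h(x))
    dg_dh = s * (1.0 - s)                    # derivative of the sigmoid w.r.t. its input
    dh_dx = 2.0                              # derivative of h(x) = 2x + 1
    return dg_dh * dh_dx                     # chain rule: dg/dx = dg/dh * dh/dx

def dg_dx_numeric(x, eps=1e-6):
    return (g(h(x + eps)) - g(h(x - eps))) / (2 * eps)   # central difference

x = 0.3
print(dg_dx_chain(x), dg_dx_numeric(x))      # the two estimates agree closely
```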

Follow the red path!

For a softmax output layer and sigmoid activation function

So now we have all the vectorized components to build our chain.

Full Story
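Putting the chain together, here is a vectorized backpropagation sketch for a sigmoid hidden layer and a softmax output. The loss is an assumption, since the slides do not state it: mean cross-entropy with one-hot targets, for which the gradient with respect to the output scores simplifies to (ŷ − Y)/n. A finite-difference check on one weight confirms the gradients; the data and sizes are random placeholders.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

def loss_and_grads(X, Y, W1, b1, W2, b2):
    """Mean cross-entropy (assumed loss) and its gradients; Y is one-hot (n_samples x n_classes)."""
    n = X.shape[0]
    # Forward pass
    a1 = sigmoid(X @ W1 + b1)
    y_hat = softmax(a1 @ W2 + b2)
    loss = -np.sum(Y * np.log(y_hat)) / n
    # Backward pass: each line is one link of the chain rule
    d_z2 = (y_hat - Y) / n            # softmax + cross-entropy: dL/dz2
    d_W2 = a1.T @ d_z2                # dL/dW2
    d_b2 = d_z2.sum(axis=0)           # dL/db2
    d_a1 = d_z2 @ W2.T                # dL/da1
    d_z1 = d_a1 * a1 * (1.0 - a1)     # sigmoid'(z1) = a1 * (1 - a1)
    d_W1 = X.T @ d_z1                 # dL/dW1
    d_b1 = d_z1.sum(axis=0)           # dL/db1
    return loss, (d_W1, d_b1, d_W2, d_b2)

rng = np.random.default_rng(1)
X = rng.normal(size=(6, 4))
Y = np.eye(3)[rng.integers(0, 3, size=6)]    # random one-hot labels
W1, b1 = rng.normal(size=(4, 5)), np.zeros(5)
W2, b2 = rng.normal(size=(5, 3)), np.zeros(3)
loss, (d_W1, d_b1, d_W2, d_b2) = loss_and_grads(X, Y, W1, b1, W2, b2)

# Finite-difference check of one entry of W1
eps = 1e-6
W1p, W1m = W1.copy(), W1.copy()
W1p[0, 0] += eps
W1m[0, 0] -= eps
numeric = (loss_and_grads(X, Y, W1p, b1, W2, b2)[0]
           - loss_and_grads(X, Y, W1m, b1, W2, b2)[0]) / (2 * eps)
print(d_W1[0, 0], numeric)
```

A gradient-descent step then updates every parameter with its gradient, e.g. `W1 -= lr * d_W1`, for some learning rate `lr`.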