NEURAL NETWORKS

and beyond....

Aadharsh Aadhithya A

Amrita Vishwa VIdhyapeetham

Center for Computational Engineering and Networking

NEURAL NETWORKS

and beyond....

- Introduction

- Perceptron

- Single-layered Neural Network

- Multi layered Neural Network

- Implicit Layers

- Implicit Function Theorem

- Opt Net

- Future Directions

Why NN???????

Why NN???????

What AI?

Why NN???????

What AI?

Goals Of AI??

Why NN???????

What AI?

Turings Test on Intellegence

What exactly is Intellegenceee??

Why NN???????

What AI?

Turings Test on Intellegence

Why NN are able to "Mimic Intelligence"

Why NN???????

What AI?

Turings Test on Intellegence

Are NN's Really Intellgent?

Why NN???????

What AI?

Are NN's Really Intellgent? No. Why Then?

Introduction

Introduction

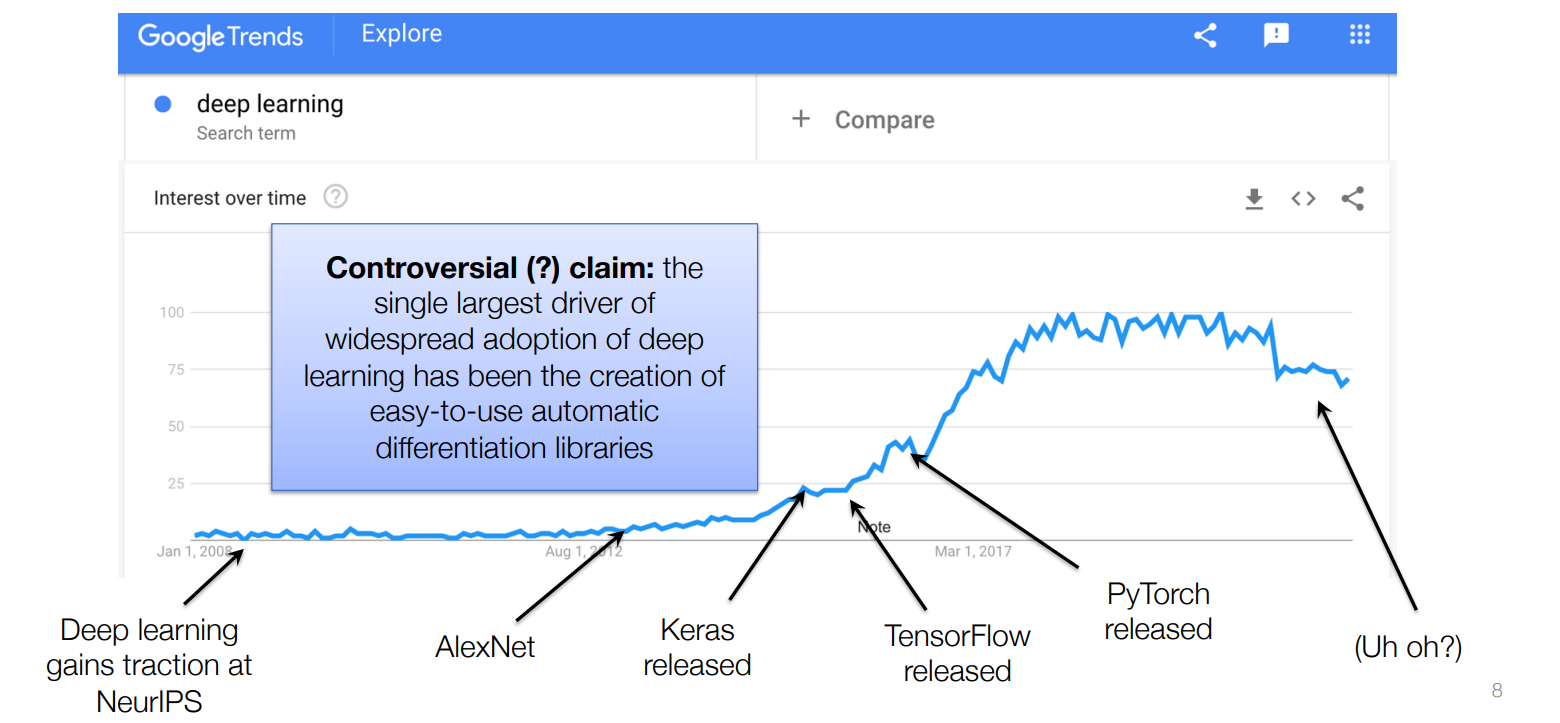

Why Deep Learning?? (Applications)

What Are Neural Networks??

What Are Neural Networks??

Models, That mimic Human Intelligence?

How to mimic?

- Associations

- Connections

But, Where Are Associations Stored??

- By Mid 1800's, It was discovered that brain was made up of connected cells called "Neurons"

- Neurons Excite and Simulate each other.

- Neurons connect to other neurons. The processing/capacity of the brain is a function of these connections

MP Neuron

End to END Learning

End to END Learning

Learn mapping directly from input to output without the need for intermediate representations

Overcome cumbersome methods like feature extraction, multiple processing

steps.

End to END Learning

Perceptrons

Perceptrons

Perceptrons

Perceptrons

Perceptrons

Perceptrons

Perceptrons

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Sigmoid Function

Single layer neural network

Single layer neural network

OOPs! 🤥

Wasn't the true output 1?

Single layer neural network

OOPs! 🤥

Wasn't the true output 1?

We Should Punish the Network , so

It Behaves Properly

Single layer neural network

OOPs! 🤥

Wasn't the true output 1?

We Should Punish the Network , so

It Behaves Properly

LOSS FUNCTIONS

Answer:

Single layer neural network

LOSS FUNCTIONS

Binary Cross Entropy Loss

Judge?? Nah!, Classify 😎

The Gradient of the Loss Function dictates whether to increase or decrease the wights and bias of a neural network

The Gradient of the Loss Function dictates whether to increase or decrease the wights and bias of a neural network

"Gradient" points up the curve in the increasing direction , so we need to move int the opposite direction

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Single layer neural network

Multi Layer Network

Multi Layer Network

Multi Layer Network

Multi Layer Network

Forward Pass

Multi Layer Network

Forward Pass

Multi Layer Network

Forward Pass

Multi Layer Network

Forward Pass

Multi Layer Network

Forward Pass

Multi Layer Network

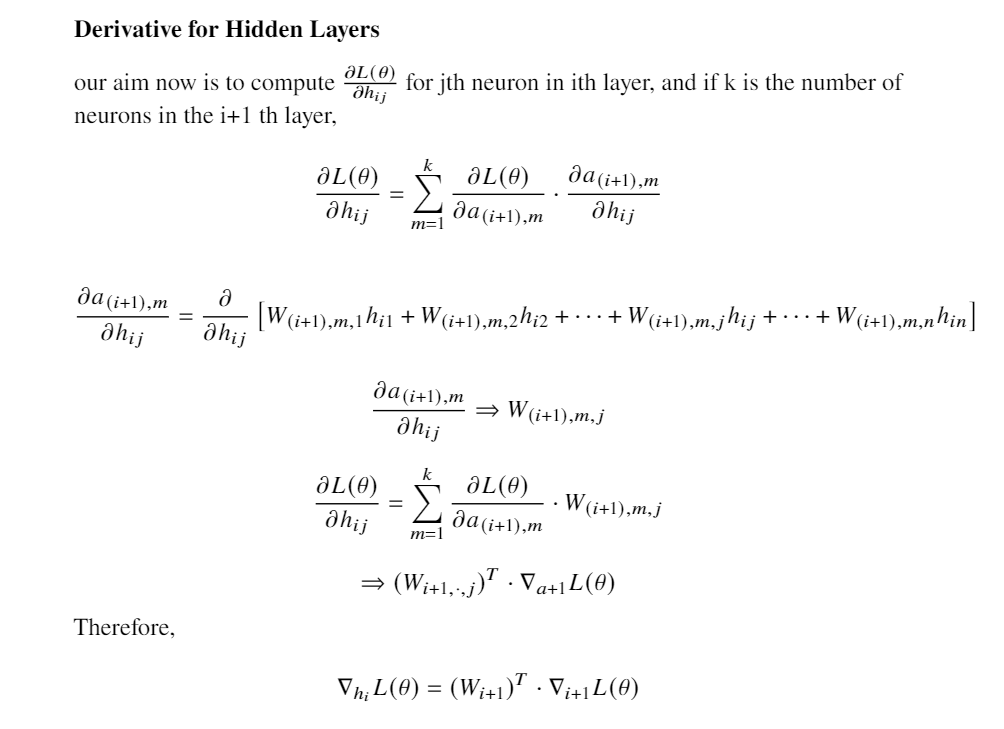

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

We can derive

To Be Computed

Multi Layer Network

BackPropagation

We can derive

To Be Computed

Here , We Can Resort To Using The Chain Rule .

How G changes with x?

Changing x changes h(x)

Changing h changes g

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Follow The RED Path !

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

Multi Layer Network

BackPropagation

(Project report)

For Softmax output layer and Sigmoid Activation function

Multi Layer Network

BackPropagation

Source : Project Report , Group01.pdf

Multi Layer Network

BackPropagation

Source : Project Report , Group01.pdf

Multi Layer Network

BackPropagation

Source : Project Report , Group01.pdf

Multi Layer Network

BackPropagation

So , now , we have all the vectorized components to build our chain

Source : Project Report , Group01.pdf

Multi Layer Network

Full Story

Source : Project Report , Group01.pdf

Multi Layer Network

import numpy as np # import numpy library

from util.paramInitializer import initialize_parameters # import function to initialize weights and biases

class LinearLayer:

"""

This Class implements all functions to be executed by a linear layer

in a computational graph

Args:

input_shape: input shape of Data/Activations

n_out: number of neurons in layer

ini_type: initialization type for weight parameters, default is "plain"

Opitons are: plain, xavier and he

Methods:

forward(A_prev)

backward(upstream_grad)

update_params(learning_rate)

"""

def __init__(self, input_shape, n_out, ini_type="plain"):

"""

The constructor of the LinearLayer takes the following parameters

Args:

input_shape: input shape of Data/Activations

n_out: number of neurons in layer

ini_type: initialization type for weight parameters, default is "plain"

"""

self.m = input_shape[1] # number of examples in training data

# `params` store weights and bias in a python dictionary

self.params = initialize_parameters(input_shape[0], n_out, ini_type) # initialize weights and bias

self.Z = np.zeros((self.params['W'].shape[0], input_shape[1])) # create space for resultant Z output

def forward(self, A_prev):

"""

This function performs the forwards propagation using activations from previous layer

Args:

A_prev: Activations/Input Data coming into the layer from previous layer

"""

self.A_prev = A_prev # store the Activations/Training Data coming in

self.Z = np.dot(self.params['W'], self.A_prev) + self.params['b'] # compute the linear function

def backward(self, upstream_grad):

"""

This function performs the back propagation using upstream gradients

Args:

upstream_grad: gradient coming in from the upper layer to couple with local gradient

"""

# derivative of Cost w.r.t W

self.dW = np.dot(upstream_grad, self.A_prev.T)

# derivative of Cost w.r.t b, sum across rows

self.db = np.sum(upstream_grad, axis=1, keepdims=True)

# derivative of Cost w.r.t A_prev

self.dA_prev = np.dot(self.params['W'].T, upstream_grad)

def update_params(self, learning_rate=0.1):

"""

This function performs the gradient descent update

Args:

learning_rate: learning rate hyper-param for gradient descent, default 0.1

"""

self.params['W'] = self.params['W'] - learning_rate * self.dW # update weights

self.params['b'] = self.params['b'] - learning_rate * self.db # update bias(es)

Multi Layer Network

import numpy as np # import numpy library

class SigmoidLayer:

"""

This file implements activation layers

inline with a computational graph model

Args:

shape: shape of input to the layer

Methods:

forward(Z)

backward(upstream_grad)

"""

def __init__(self, shape):

"""

The consturctor of the sigmoid/logistic activation layer takes in the following arguments

Args:

shape: shape of input to the layer

"""

self.A = np.zeros(shape) # create space for the resultant activations

def forward(self, Z):

"""

This function performs the forwards propagation step through the activation function

Args:

Z: input from previous (linear) layer

"""

self.A = 1 / (1 + np.exp(-Z)) # compute activations

def backward(self, upstream_grad):

"""

This function performs the back propagation step through the activation function

Local gradient => derivative of sigmoid => A*(1-A)

Args:

upstream_grad: gradient coming into this layer from the layer above

"""

# couple upstream gradient with local gradient, the result will be sent back to the Linear layer

self.dZ = upstream_grad * self.A*(1-self.A)

Multi Layer Network

def compute_stable_bce_cost(Y, Z):

"""

This function computes the "Stable" Binary Cross-Entropy(stable_bce) Cost and returns the Cost and its

derivative w.r.t Z_last(the last linear node) .

The Stable Binary Cross-Entropy Cost is defined as:

=> (1/m) * np.sum(max(Z,0) - ZY + log(1+exp(-|Z|)))

Args:

Y: labels of data

Z: Values from the last linear node

Returns:

cost: The "Stable" Binary Cross-Entropy Cost result

dZ_last: gradient of Cost w.r.t Z_last

"""

m = Y.shape[1]

cost = (1/m) * np.sum(np.maximum(Z, 0) - Z*Y + np.log(1+ np.exp(- np.abs(Z))))

dZ_last = (1/m) * ((1/(1+np.exp(- Z))) - Y) # from Z computes the Sigmoid so P_hat - Y, where P_hat = sigma(Z)

return cost, dZ_lastMulti Layer Network

def data_set(n_points, n_classes):

x = np.random.uniform(-1,1, size=(n_points, n_classes)) # Generate (x,y) points

mask = np.logical_or ( np.logical_and(x[:,0] > 0.0, x[:,1] > 0.0), np.logical_and(x[:,0] < 0.0, x[:,1] < 0.0)) # True for 1st & 3rd quadrants

y = 1*mask

return x,y

no_of_points = 10000

no_of_classes = 2

X_train, Y_train = data_set(no_of_points, no_of_classes)

for i in range(10):

print(f'The point is {X_train[i,0]} , {X_train[i,1]} and the class is {Y_train[i]}')

Multi Layer Network

# define training constants

learning_rate = 0.6

number_of_epochs = 5000

np.random.seed(48) # set seed value so that the results are reproduceable

# (weights will now be initailzaed to the same pseudo-random numbers, each time)

# Our network architecture has the shape:

# (input)--> [Linear->Sigmoid] -> [Linear->Sigmoid] -->(output)

#------ LAYER-1 ----- define hidden layer that takes in training data

Z1 = LinearLayer(input_shape=X_train.shape, n_out=4, ini_type='xavier')

A1 = SigmoidLayer(Z1.Z.shape)

#------ LAYER-2 ----- define output layer that takes in values from hidden layer

Z2= LinearLayer(input_shape=A1.A.shape, n_out= 1, ini_type='xavier')

A2= SigmoidLayer(Z2.Z.shape)Multi Layer Network

costs = [] # initially empty list, this will store all the costs after a certian number of epochs

# Start training

for epoch in range(number_of_epochs):

# ------------------------- forward-prop -------------------------

Z1.forward(X_train)

A1.forward(Z1.Z)

Z2.forward(A1.A)

A2.forward(Z2.Z)

# ---------------------- Compute Cost ----------------------------

cost, dZ2 = compute_stable_bce_cost(Y_train, Z2.Z)

# print and store Costs every 100 iterations and of the last iteration.

if (epoch % 100) == 0:

print("Cost at epoch#{}: {}".format(epoch, cost))

costs.append(cost)

# ------------------------- back-prop ----------------------------

Z2.backward(dZ2)

A1.backward(Z2.dA_prev)

Z1.backward(A1.dZ)

# ----------------------- Update weights and bias ----------------

Z2.update_params(learning_rate=learning_rate)

Z1.update_params(learning_rate=learning_rate)

# if (epoch % 100) == 0:

# plot_decision_boundary(lambda x: predict_dec(Zs=[Z1, Z2], As=[A1, A2], X=x.T, thresh=0.5), X=X_train.T, Y=Y_train , save=True)Multi Layer Network

Multi Layer Network

def predict_loc(X, Zs, As, thresh=0.5):

"""

helper function to predict on data using a neural net model layers

Args:

X: Data in shape (features x num_of_examples)

Y: labels in shape ( label x num_of_examples)

Zs: All linear layers in form of a list e.g [Z1,Z2,...,Zn]

As: All Activation layers in form of a list e.g [A1,A2,...,An]

thresh: is the classification threshold. All values >= threshold belong to positive class(1)

and the rest to the negative class(0).Default threshold value is 0.5

Returns::

p: predicted labels

probas : raw probabilities

accuracy: the number of correct predictions from total predictions

"""

m = X.shape[1]

n = len(Zs) # number of layers in the neural network

p = np.zeros((1, m))

# Forward propagation

Zs[0].forward(X)

As[0].forward(Zs[0].Z)

for i in range(1, n):

Zs[i].forward(As[i-1].A)

As[i].forward(Zs[i].Z)

probas = As[n-1].A

# convert probas to 0/1 predictions

for i in range(0, probas.shape[1]):

if probas[0, i] >= thresh: # 0.5 the default threshold

p[0, i] = 1

else:

p[0, i] = 0

# print results

print ("predictions: " + str(p))Multi Layer Network

Implicit Layers

Implicit Layers

Explicit Layers

Implicit Layers

Implicit Layers

But Why?? 😕

Implicit Layers

But Why?? 😕

"Instead of specifying how to compute the layer's output from the input , we specify the conditions that we want the layer's output to satisfy"

Implicit Layers

But Why?? 😕

Recent Years Have seen several Efficient ways to Differentiate through constructs like Argmin and Argmax

then , Backpropagation Requires Gradients

1.

Gould, S. et al. On Differentiating Parameterized Argmin and Argmax Problems with Application to Bi-level Optimization. arXiv:1607.05447 [cs, math] (2016).

Implicit Layers

But How?? 😕

Differentiating Through Implicit Layers?? 🤔

Implicit Layers

But Why?? 😕

Differentiating Through Implicit Layers?? 🤔

The Implicit Function Theorem

Implicit Layers

The Implicit Function Theorem

Implicit Layers

The Implicit Function Theorem

Implicit Layers

The Implicit Function Theorem

Then the Implicit function theorem tells ,

Implicit Layers

The Implicit Function Theorem

Then the Implicit function theorem tells ,

Implicit Layers

The Implicit Function Theorem

Now , we know that there exists a local function z*,

Implicit Layers

The Implicit Function Theorem

Now , we know that there exists a local function z*,

Implicit Layers

The Implicit Function Theorem

Implicit Layers

The Implicit Function Theorem

Implicit Layers

The Implicit Function Theorem

Now this can be connected to standard auto diffs tools..

Implicit Layers

Implicit Layers , Bring In Structure to the layers.

Encode Domain knowledge

Differentiable Optimization

Implicit Layers

Implicit Layers , Bring In Structure to the layers.

Encode Domain knowledge

Differentiable Optimization

Implicit Layers

OPTNET

Amos, Brandon, and J. Zico Kolter. 2019. OptNet: Differentiable Optimization as a Layer inNeural Networks.arXiv:1703.00443 [cs, math, stat](14 October 2019). arXiv: 1703.00443

Implicit Layers

OPTNET

KKT Conditions

"Necessary and Sufficient

Conditions for optimality "

Implicit Form

Implicit Function Theorem

Implicit Layers

Our Trails And Fails

Implicit Layers

Our Trails And Fails

Adrian-Vasile Duka,

Neural Network based Inverse Kinematics Solution for Trajectory Tracking of a Robotic Arm,

Procedia Technology,Volume 12, 2014, Pages 20-27, ISSN 2212-0173,

https://doi.org/10.1016/j.protcy.2013.12.451.

(https://www.sciencedirect.com/science/article/pii/S2212017313006361)

Abstract: Planar two and three-link manipulators are often used in Robotics as testbeds for various algorithms or theories. In this paper, the case of a three-link planar manipulator is considered. For this type of robot a solution to the inverse kinematics problem, needed for generating desired trajectories in the Cartesian space (2D) is found by using a feed-forward neural network.

Keywords: robotic arm; planar manipulator; inverse kinematics; trajectory; neural networks

Adrian-VasileDuka Showed Training Neural Networks , for inverse kinematics , for a planar 3 link manipulator based on input - end effector coordinates using 1 hidden layer and 100 neurons

Implicit Layers

Our Trails And Fails

We Tried to Simulate Obstacle , by removing a circular region from randomly generated data..

Then Tried Appending the previous architecture with an optnet layer.

Implicit Layers

Our Trails And Fails

We Tried to Simulate Obstacle , by removing a circular region from randomly generated data..

Then Tried Appending the previous architecture with an optnet layer.

But we hit a roadblock..

class Link3IK(nn.Module):

def __init__(self,n,m,p):

super().__init__()

torch.manual_seed(0)

z = cp.Variable(n)

self.P = cp.Parameter((n,n))

self.q = cp.Parameter(n)

self.G = cp.Parameter((m,n))

self.h = cp.Parameter(m)

self.A = cp.Parameter((p,n))

self.b = cp.Parameter(p)

self.nn_output = cp.Parameter(3)

scale_factor = 1e-4

self.Ptch = torch.nn.Parameter(scale_factor*torch.randn(n,n))

self.qtch = torch.nn.Parameter(scale_factor*torch.randn(n))

self.Gtch = torch.nn.Parameter(scale_factor*torch.randn(m,n))

self.htch = torch.nn.Parameter(scale_factor*torch.randn(m))

self.Atch = torch.nn.Parameter(scale_factor*torch.randn(p,n))

self.btch = torch.nn.Parameter(scale_factor*torch.randn(p))

self.objective = cp.Minimize(0.5*cp.sum_squares(self.P @ self.z) + self.q @ self.z )

self.constraints = [self.G@self.z-self.h <= 0 , self.A@self.z == self.b]

raise NotImplementedError # include nn_output in the cvxpy problem.

self.problem = cp.Problem(self.objective, self.constraints)

self.net = nn.Sequential(

nn.Linear(2, 100),

nn.Sigmoid(),

nn.Linear(100, 3),

nn.Softmax()

)

self.cvxpylayer = CvxpyLayer(self.problem, parameters=[self.P,self.q,self.G,self.h,self.A,self.b,self.nn_output], variables=[self.z])

def forward(self, X):

nn_output = self.net(X)

output = self.cvxpylayer(self.Ptch, self.qtch, self.Gtch, self.htch, self.Atch, self.btch, nn_output)[0]

return outputIn order to include nn_output , we needed knowledge about convex spaces.. 😔

Future Potential Prospects

1.

Maric, F., Giamou, M., Khoubyarian, S., Petrovic, I. & Kelly, J. Inverse Kinematics for Serial Kinematic Chains via Sum of Squares Optimization. 2020 IEEE International Conference on Robotics and Automation (ICRA) 7101–7107 (2020) doi:10.1109/ICRA40945.2020.9196704.

Maric et al ., Casted Inverse kinematics as a sum of squares QCQP problem and solved using a custom solver

We could use that formulation to solve these problems using CVXPYlayers

Based On our understanding....

Future Potential Prospects

1.

Maric, F., Giamou, M., Khoubyarian, S., Petrovic, I. & Kelly, J. Inverse Kinematics for Serial Kinematic Chains via Sum of Squares Optimization. 2020 IEEE International Conference on Robotics and Automation (ICRA) 7101–7107 (2020) doi:10.1109/ICRA40945.2020.9196704.

- Reinforcement Learning

Learning Constraints in the state space

- Control

Things like LQE for Starting

Based On our understanding....

Role of BIAS

Role of BIAS

- Introduce an additional degree of freedom

- Offset any systematic errors or inconsistencies in the data

- Shift the decision boundary and better fit the training data

- In case of class imbalance shift decision boundary towards the minority class

Bias Variance Tradeoff

Bias Variance Tradeoff

Minimize both bias and

variance to achieve optimal predictive performance

High Bias

- Underfits the data

- Cannot capture complexity

- Poor predictive performance

High Variance

- Sensitive to data fluctuations

- Fits noise and underlying patterns

- Poor performance on unseen data

Bias Variance Tradeoff

Right balance for optimal performance

Regularization,

cross-validation, and Ensembling

Vanishing Gradient Problem

Vanishing Gradient Problem

Gradient of loss function w.r.t weights become very small

Prevalent in deeper networks as gradient is propagated through multiple layers making it smaller

Weights in earlier layers do not receive sufficient updates

Vanishing Gradient Problem

Activation functions like sigmoid have limited range causing derivatives to be mall for large inputs

Initialising small weights can make the gradients smaller

Vanishing Gradient Problem

Solution

ReLU, weight initialisation methods

(Xavier Initialisation)

Skip connections,

which allow the gradient to bypass some of the layers and propagate more directly to the earlier layers.

Advantages of Deeper Networks

Advantages of Deeper Networks

- Increased Representational Power

Learn Complex features

- Improved Feature Extraction

Lower layers can learn basic features while higher layers learn abstract features

More discrimination of features

- Better Generalization

Can filter noise and focus on genaralising patterns