Matched-filtering & Deep Learning Networks

In collaboration with Prof. Dr. Zhou-Jian Cao

Webinar, July 19th, 2020

He Wang (王赫)

[hewang@mail.bnu.edu.cn]

Department of Physics, BNU

Based on: PhD thesis (HTML); 10.1103/PhysRevD.101.104003

- Background & Related Works

- Our Past Works

- Our Motivation

- How we built the MFCNN

- Conclusion & Way Forward

Content

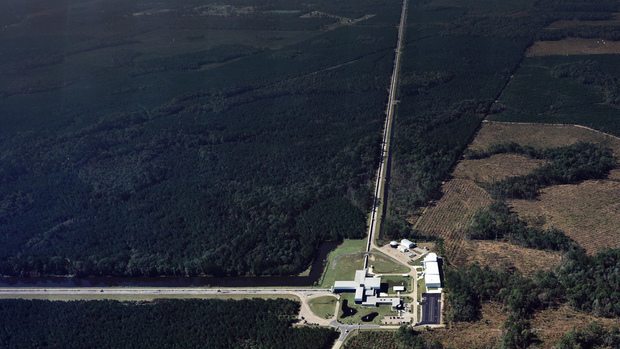

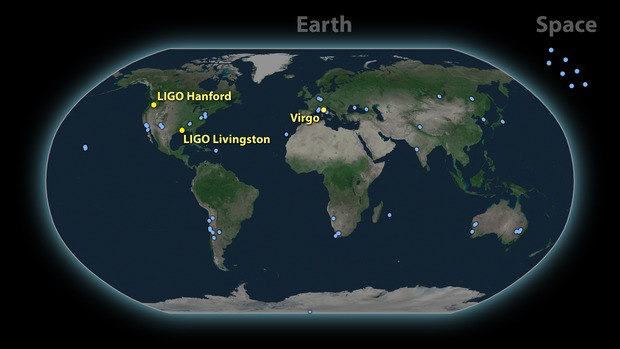

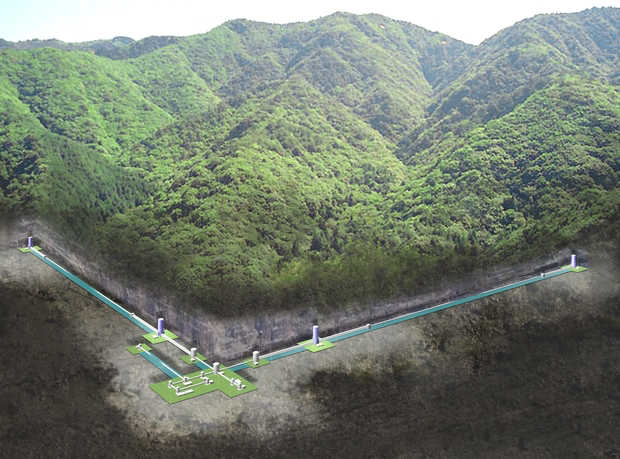

LIGO Hanford (H1)

LIGO Livingston (L1)

KAGRA

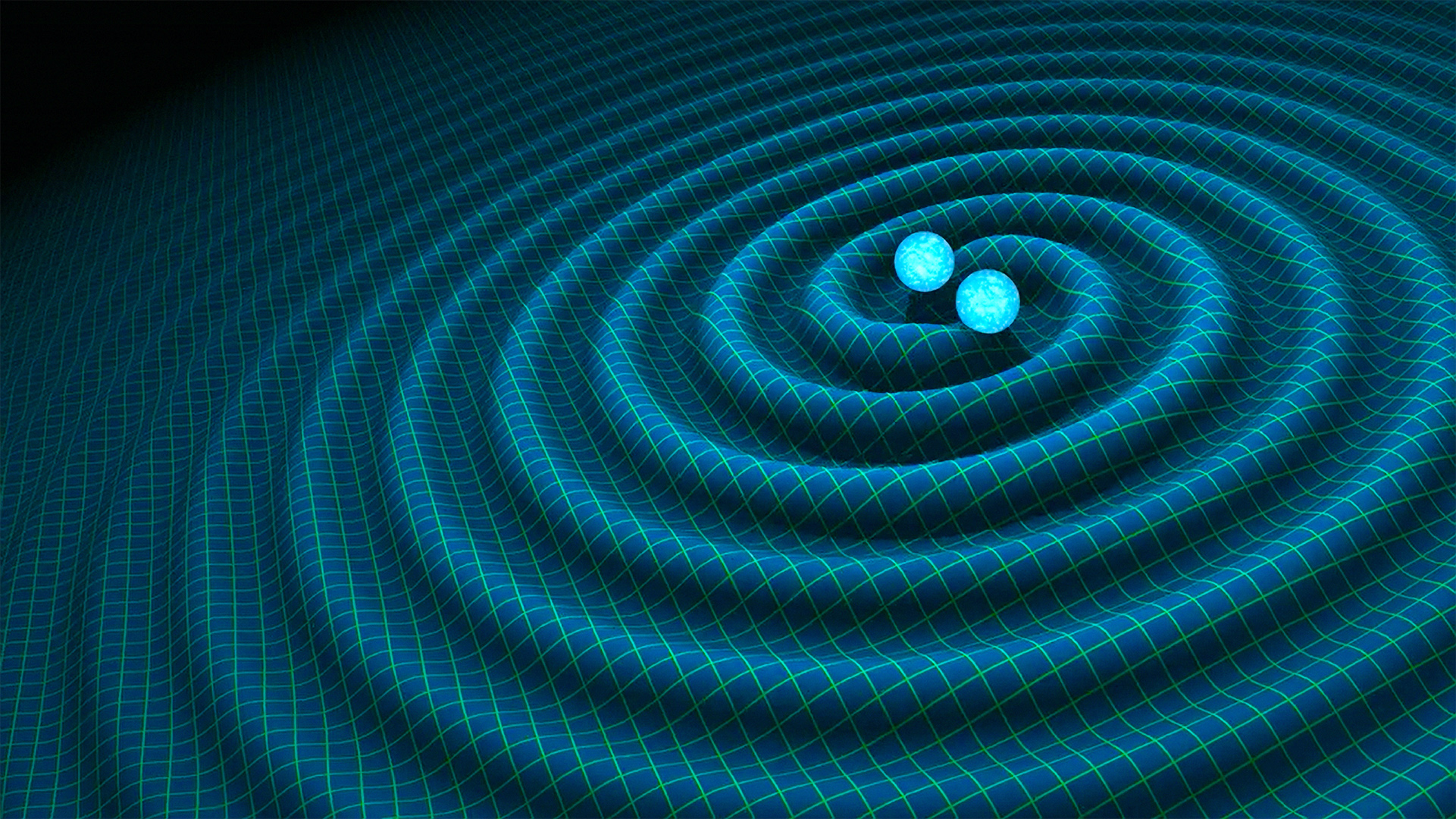

Gravitational-wave Astronomy

Observational Experiment

Theoretical Modeling

Data Analysis

GW151012

GW170729

GW170809

GW170818

GW170823

GW170121

GW170304

GW170721

(GW151205)

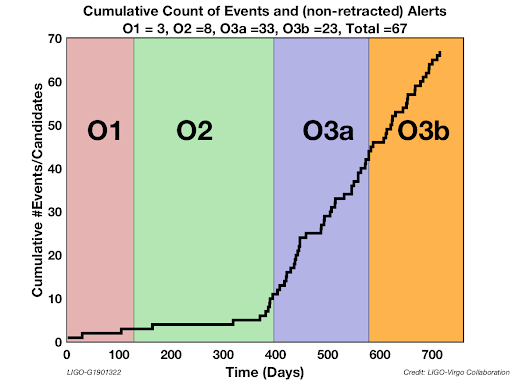

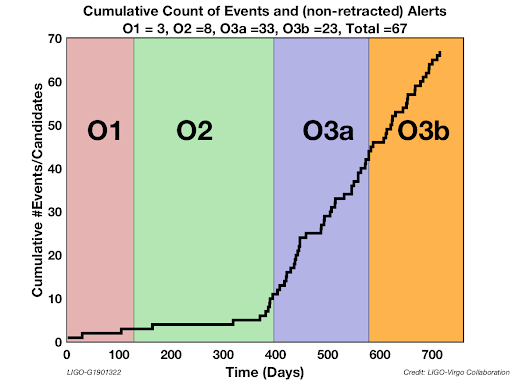

GW Event Detections

O1

O2

O3

GWTC2 (?)

2-OGC (2020)

GW Detection & Data Analysis

...

GW Data Analysis: Challenges and Opportunities

Anomalous non-Gaussian transients, known as glitches

Lack of GW templates

Real-time / low-latency analysis of the raw big data

Inadequate matched-filtering method

A threshold on the SNR is used to build the template bank, allowing a maximum SNR loss of 3%.

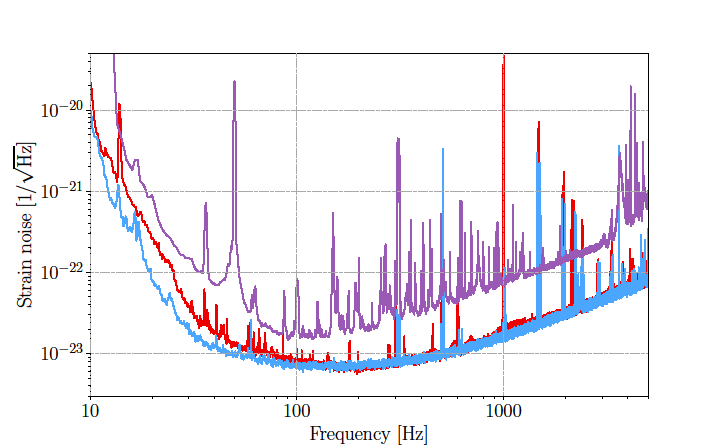

Noise power spectral density

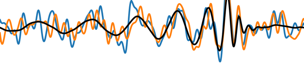

Matched filtering Technique:

Optimal detection technique for templates, with Gaussian and stationary detector noise.

credits G. Guidi

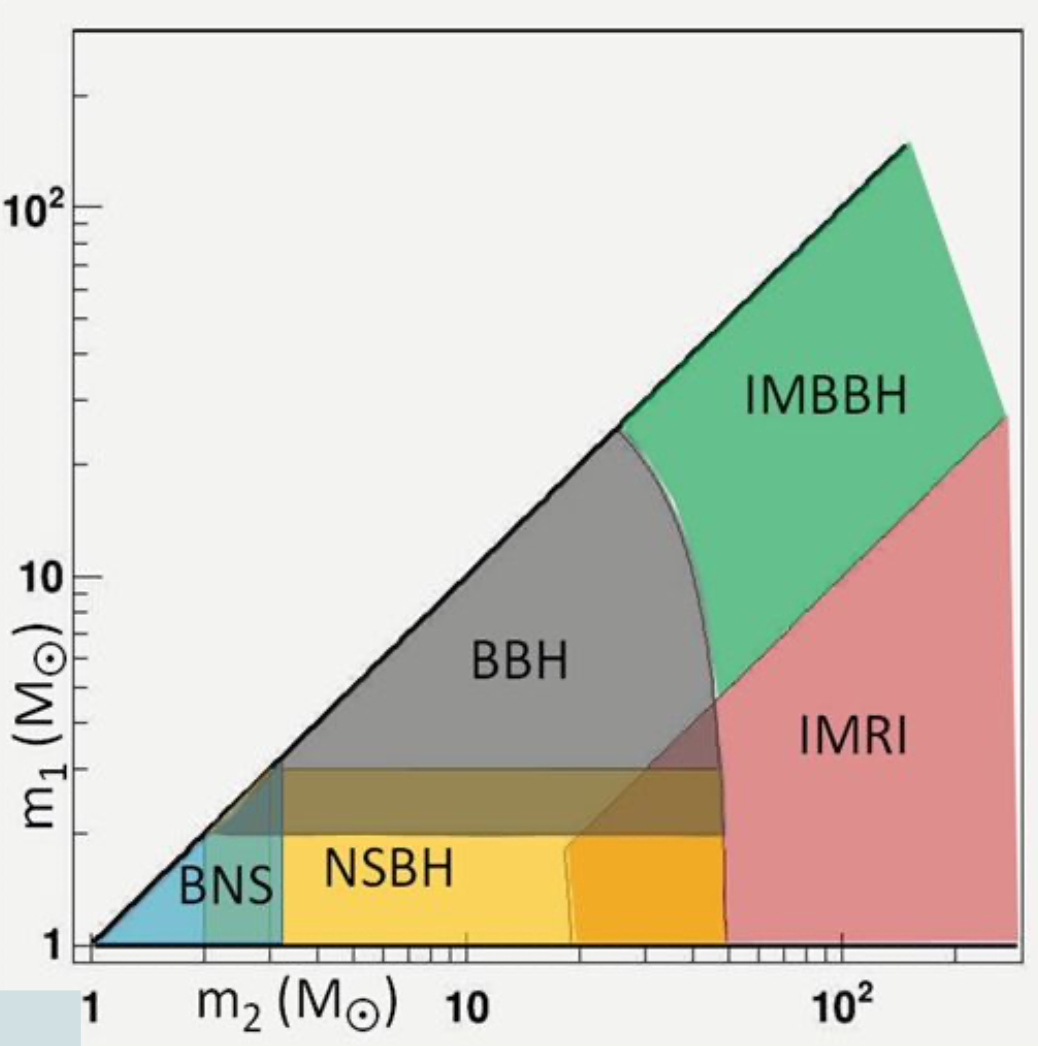

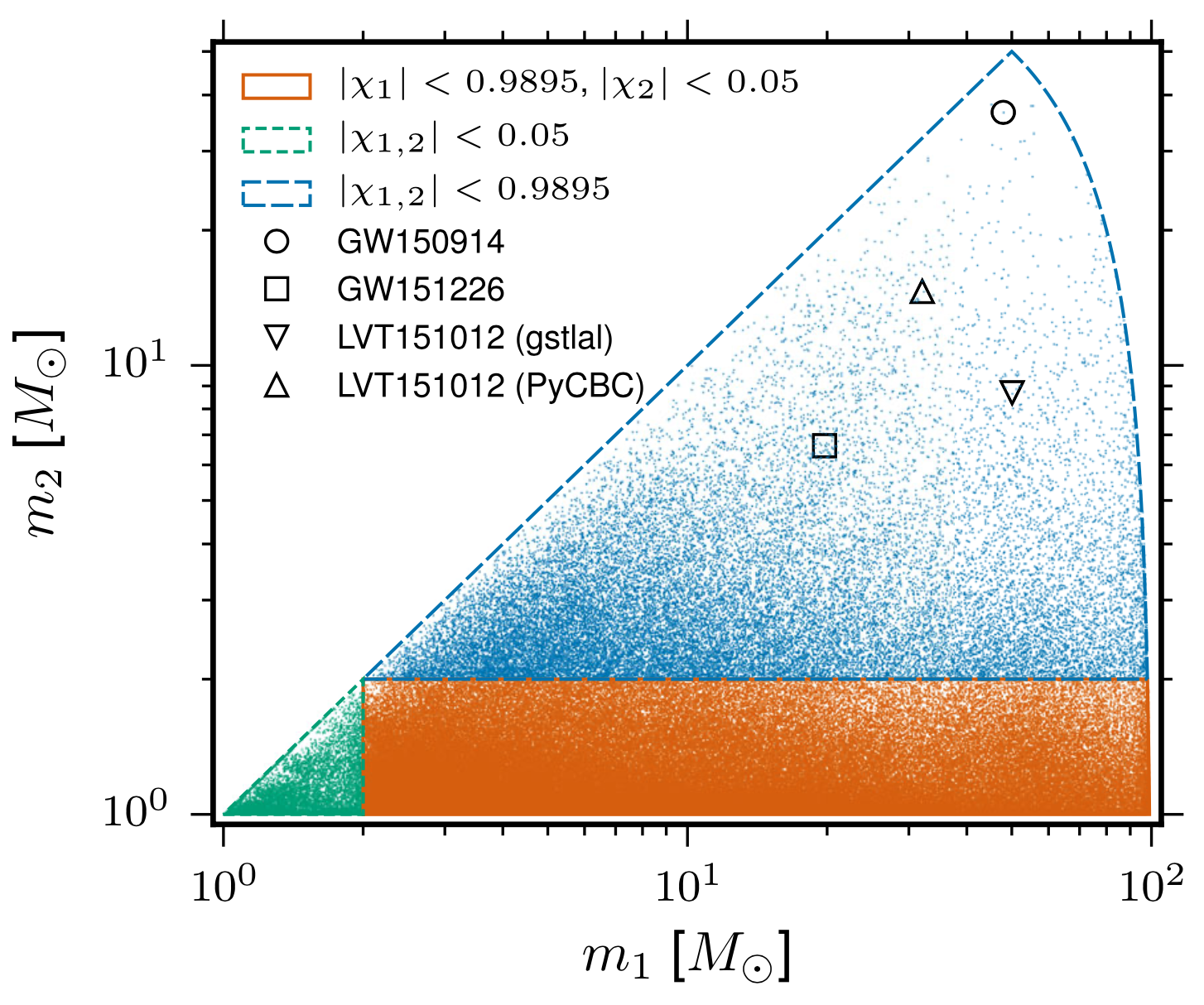

The 4-D search parameter space in O1 covered by the template bank, restricted to circular binaries whose spins are aligned (or antialigned) with the orbital angular momentum of the binary.

~250,000 template waveforms are used.

The template that best matches GW150914

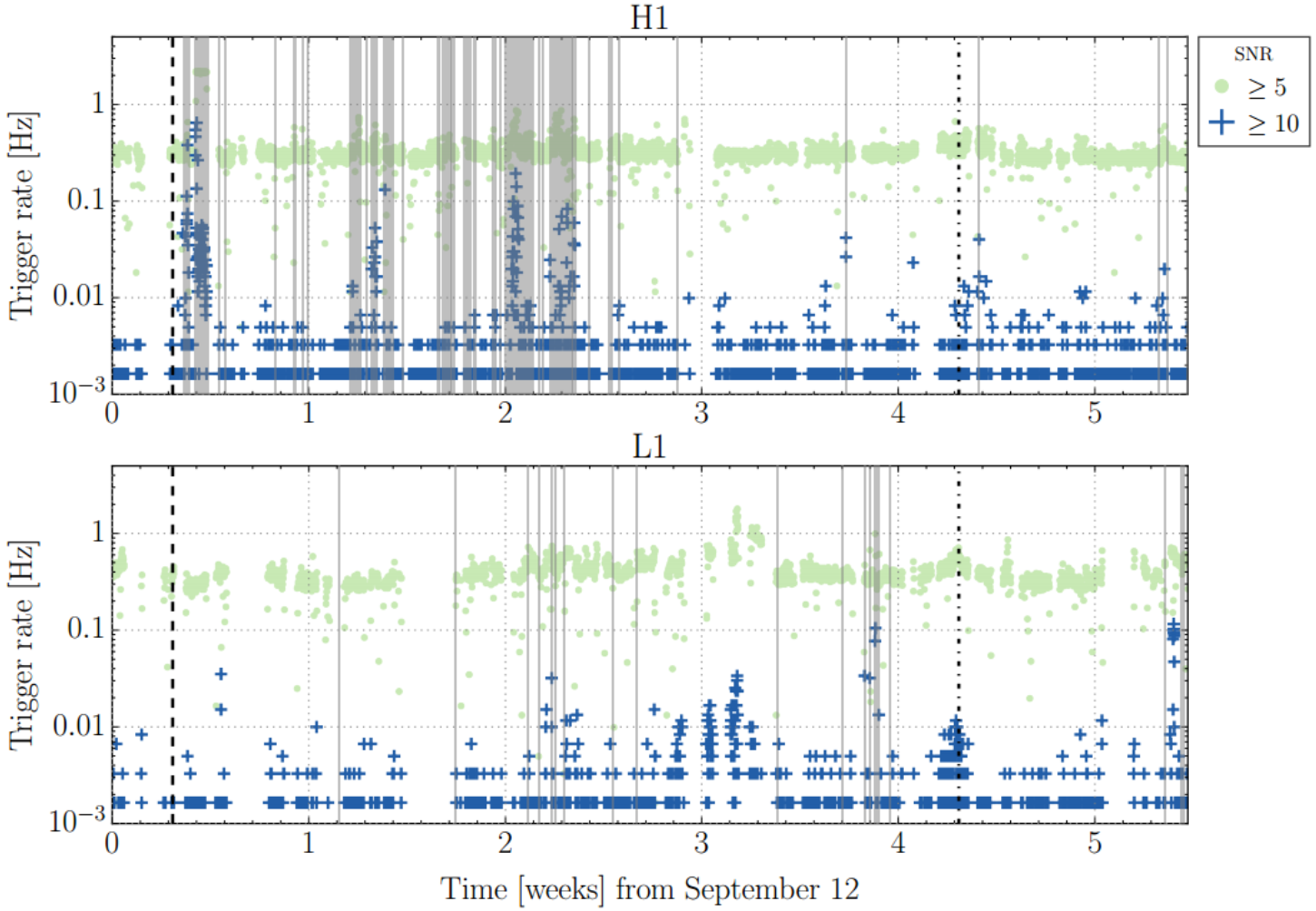

How many "trash" events?

LIGO L1 and H1 trigger rates during O1

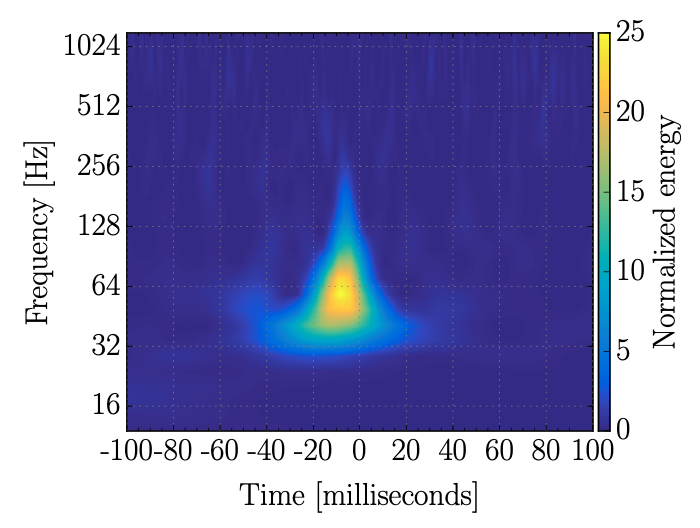

A 'blip' glitch

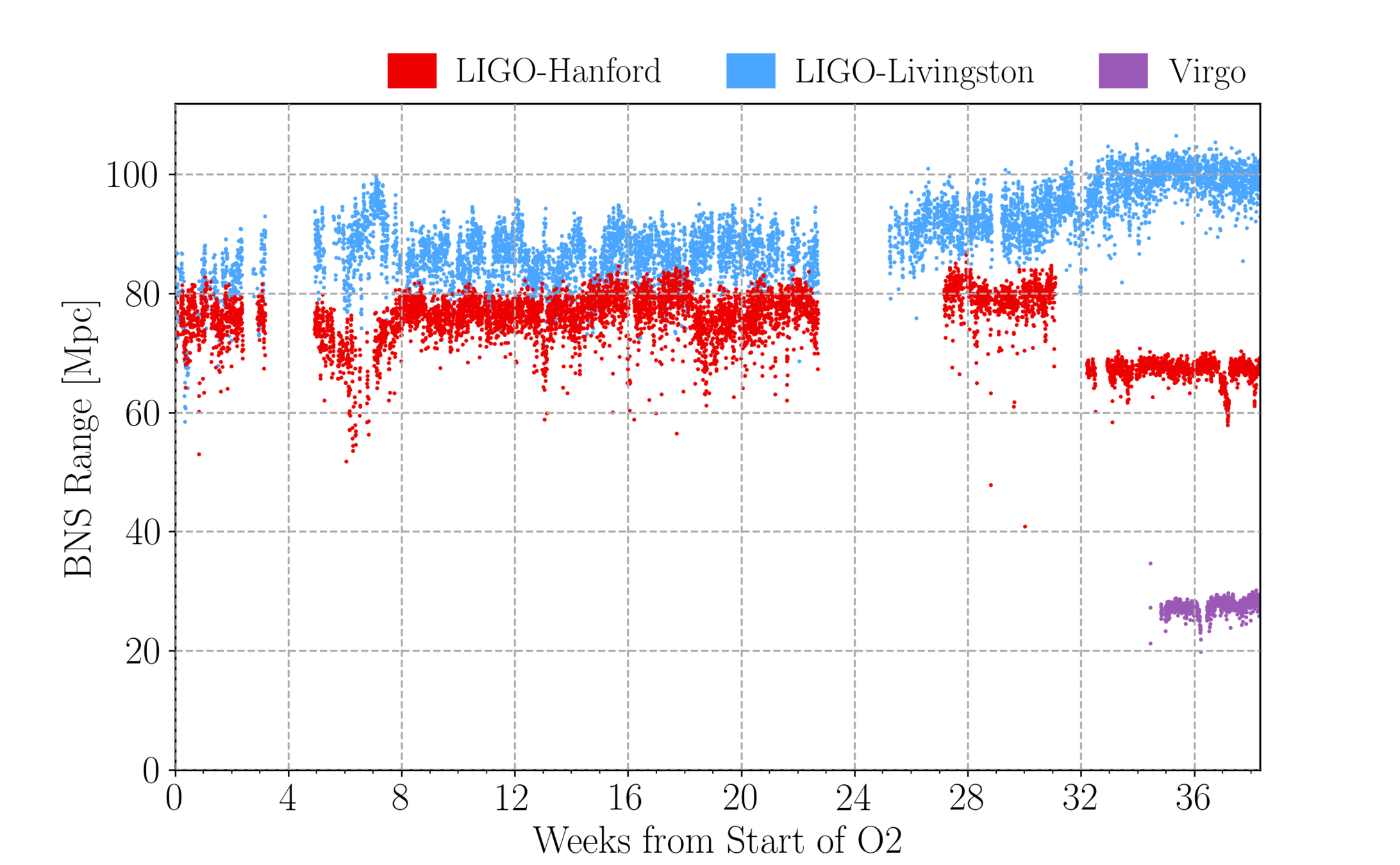

A new era of multi-messenger astronomy

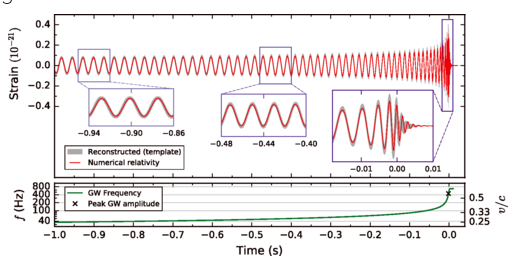

GW170817: Very long inspiral "chirp" (>100s) firmly detected by the LIGO-Virgo network.

GRB 170817A: 1.74\(\pm\)0.05s later, weak short gamma-ray burst observed by Fermi (also detected by INTEGRAL)

First LIGO-Virgo alert 27 minutes later.

GW Data Analysis: Challenges and Opportunities

Covering more parameter-space (interpolation)

Automatic generalization to new sources (extrapolation)

Resilience to real non-Gaussian noise (Robustness)

Acceleration of existing pipelines

(Speed, <0.1ms)

- Phys.Rev.D 97 (2018) 4, 044039: The first attempt

- Phys.Rev.Lett 120 (2018) 14, 141103: Matched-filtering vs CNN

- ...

- Phys.Rev.D 100 (2019) 6, 063015: GW events for BBH

- Phys.Rev.D 101 (2020) 10, 104003: All GW events in O1/O2

Why Deep Learning?

Proof-of-principle studies

Production search studies

Milestones

Real-time / low-latency analysis of the raw big data

For more related works, see Survey4GWML (https://iphysresearch.github.io/Survey4GWML/)

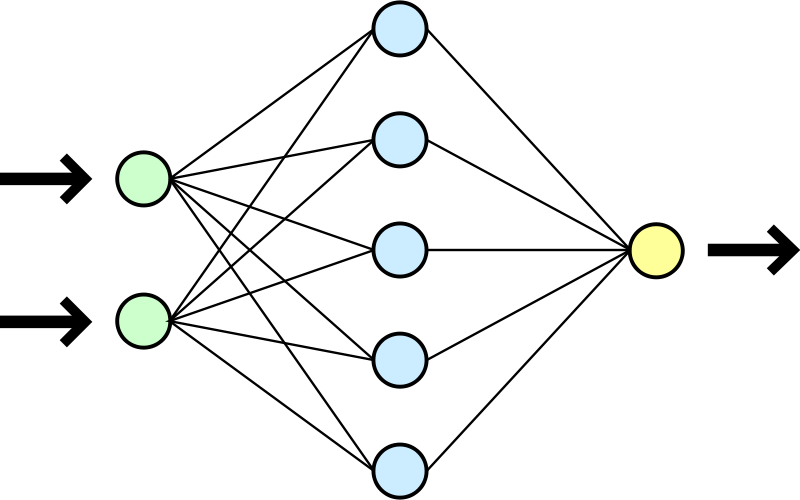

Map / Algorithm

Input

Output

A number

A sequence

Yes or No

Our model / network

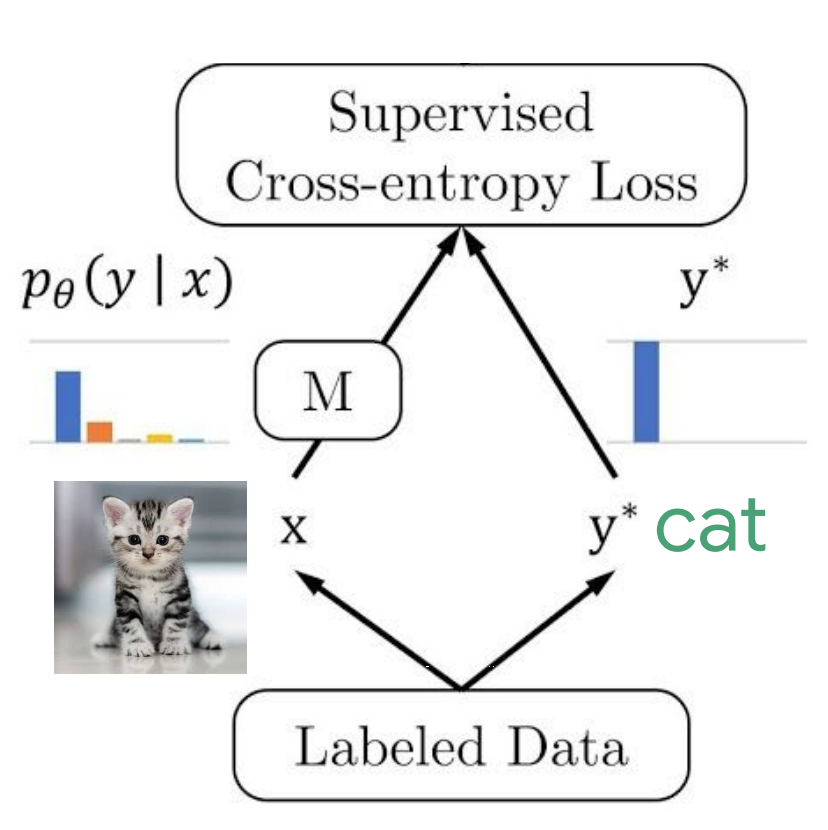

Extra: ABC of Machine Learning

Past attempts on simulated noise (1/3)

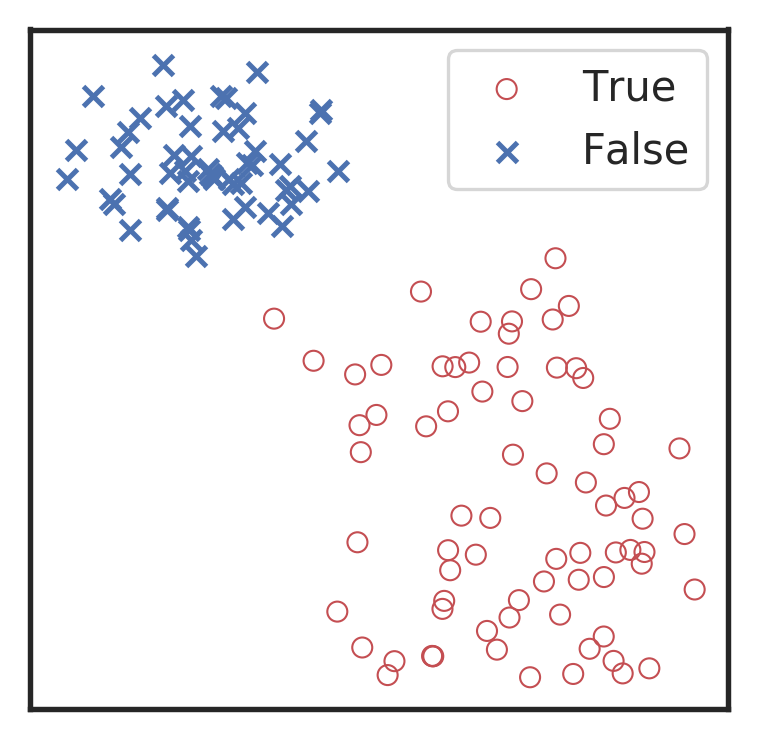

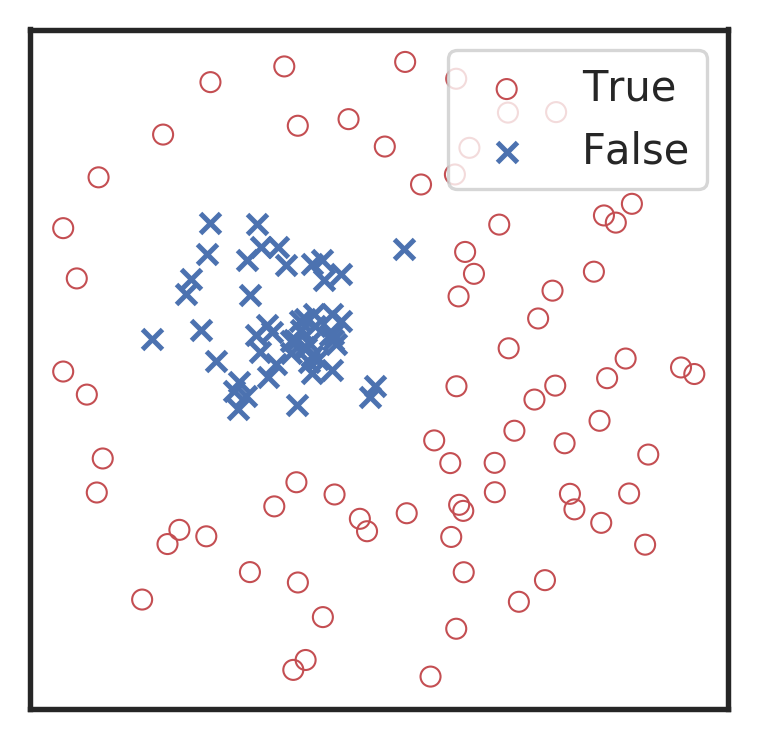

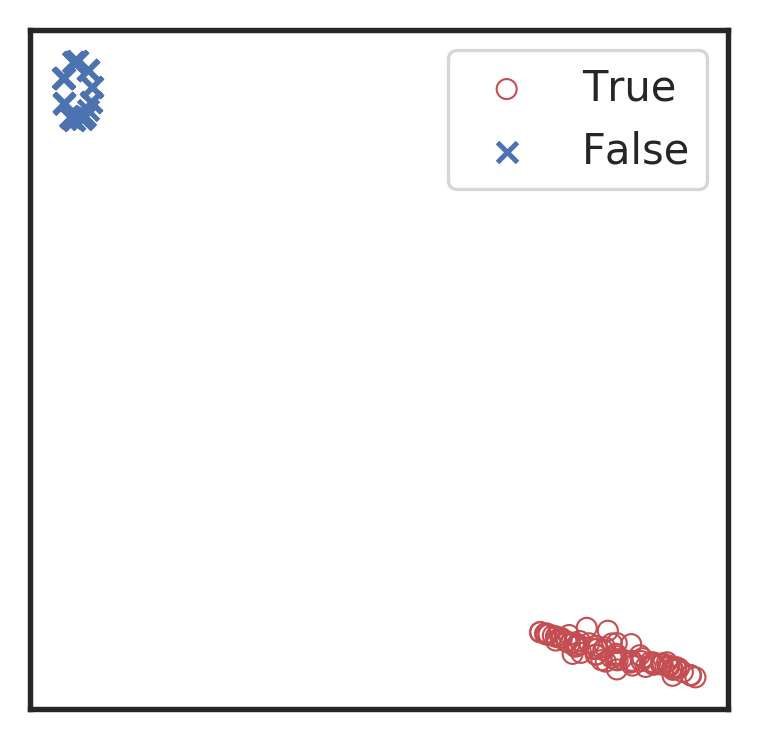

t-SNE visualization of the high-dimensional feature maps learned by the network, layer by layer, for the two classes.

Classification

Feature extraction

Convolutional neural network (ConvNet or CNN)

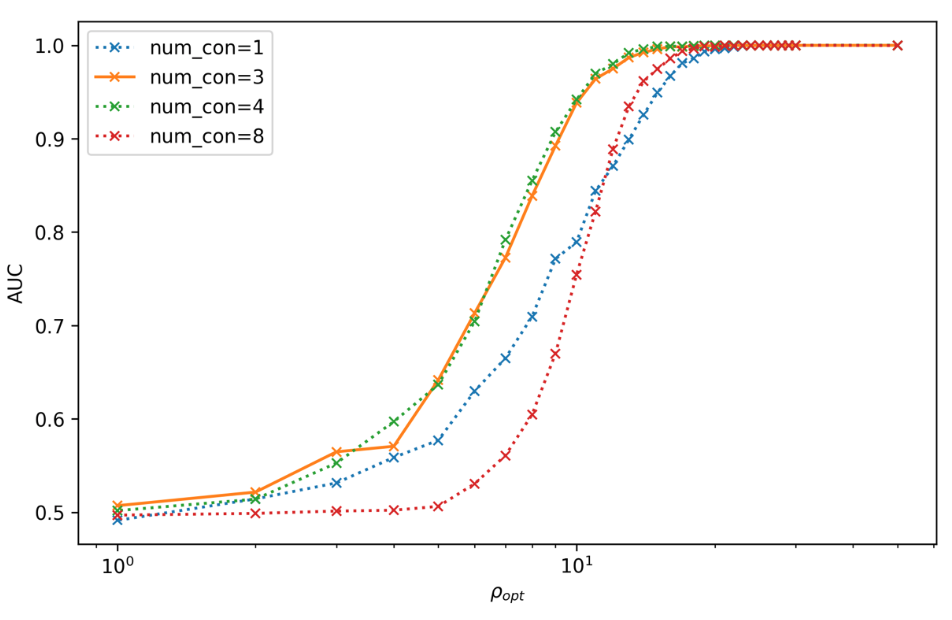

Effect of the number of convolutional layers on signal-recognition accuracy.

Fine-tune Convolutional Neural Network

- The Influence of hyperparameters?

- The capability of generalization by extrapolation.

- A glimpse of model interpretability using visualization.

Visualization of the top average activations at the \(3\)rd layer, projected back to the time domain using the deconvolutional network approach.

The top activated

The top activated

Past attempts on simulated noise (2/3)

- The influence of hyperparameters? Marginal!

- A glimpse of model interpretability using visualization: extracted features play a decisive role.

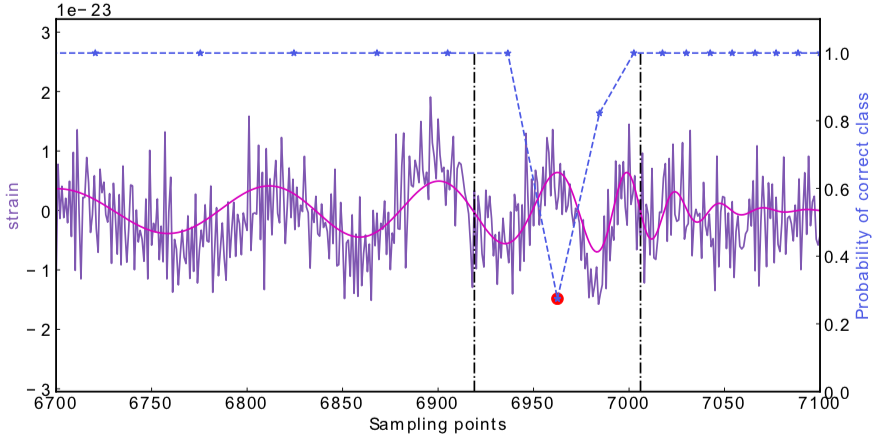

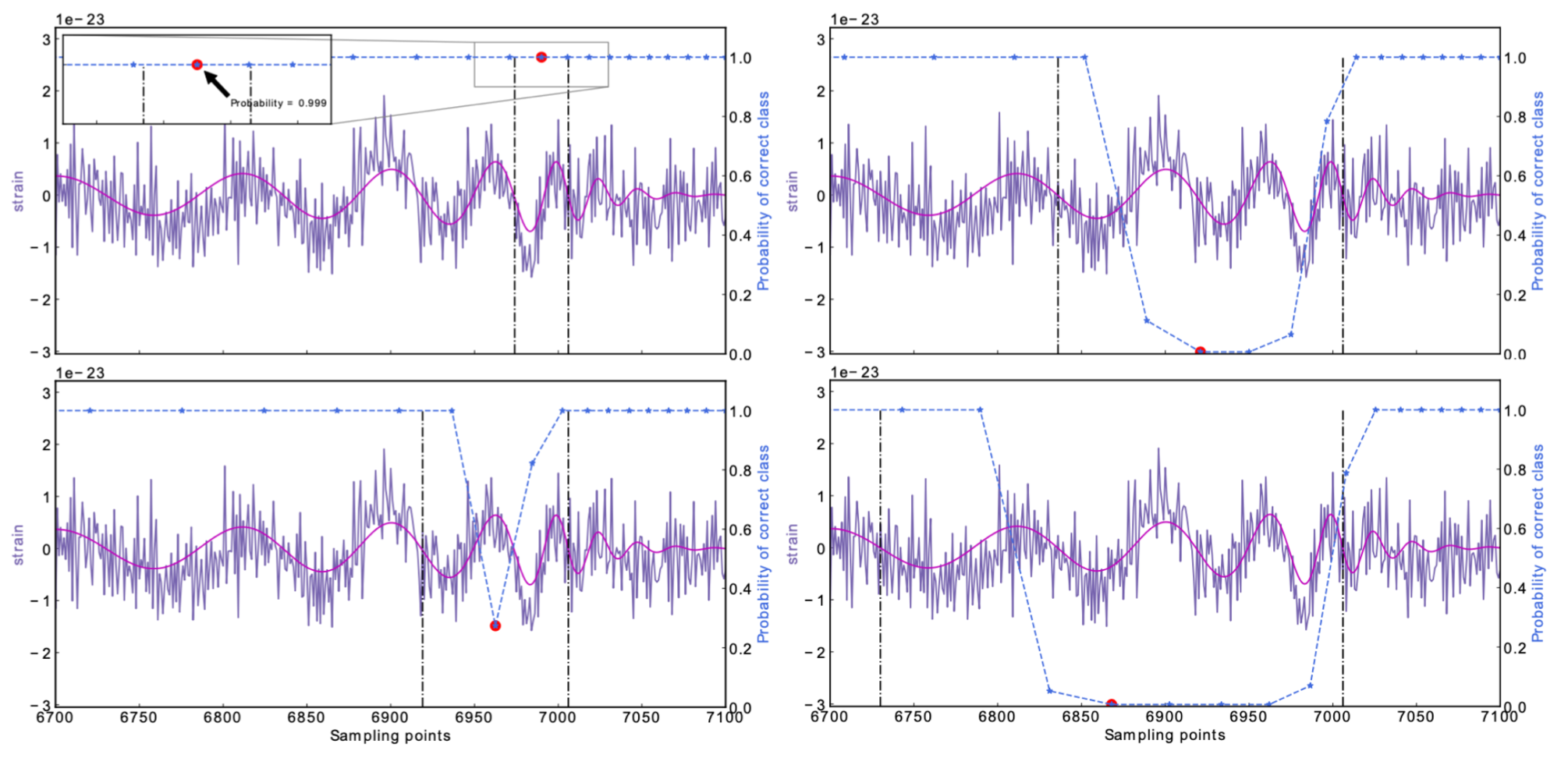

Occlusion Sensitivity

- Identify what kind of feature is learned.

Past attempts on simulated noise (3/3)

High sensitivity to the peak features of GW.
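A minimal sketch of occlusion sensitivity for a 1-D input, assuming only that the trained network can be called as a function returning a scalar score. The `toy_model` below is a hypothetical stand-in, not the network from the talk: it simply responds to the largest amplitude, so occluding the peak produces the largest score drop.

```python
import numpy as np

def occlusion_sensitivity(model, x, width, step):
    """Score drop when each window of `x` is zeroed out.

    `model` is any callable mapping a 1-D array to a scalar score.
    Returns the window start indices and the corresponding score drops.
    """
    base = model(x)
    starts = np.arange(0, len(x) - width + 1, step)
    drops = np.empty(len(starts))
    for i, s in enumerate(starts):
        occluded = x.copy()
        occluded[s:s + width] = 0.0          # hide one window of the input
        drops[i] = base - model(occluded)
    return starts, drops

# Hypothetical stand-in for a trained classifier (assumption, for illustration).
toy_model = lambda x: float(np.max(np.abs(x)))

x = 0.1 * np.sin(np.linspace(0, 20, 200))    # weak oscillation
x[150] = 1.0                                 # a sharp "peak" feature
starts, drops = occlusion_sensitivity(toy_model, x, width=10, step=10)
most_sensitive_start = int(starts[np.argmax(drops)])
```

Plotting `drops` against `starts` gives the kind of sensitivity map used to see which part of the waveform the model relies on.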

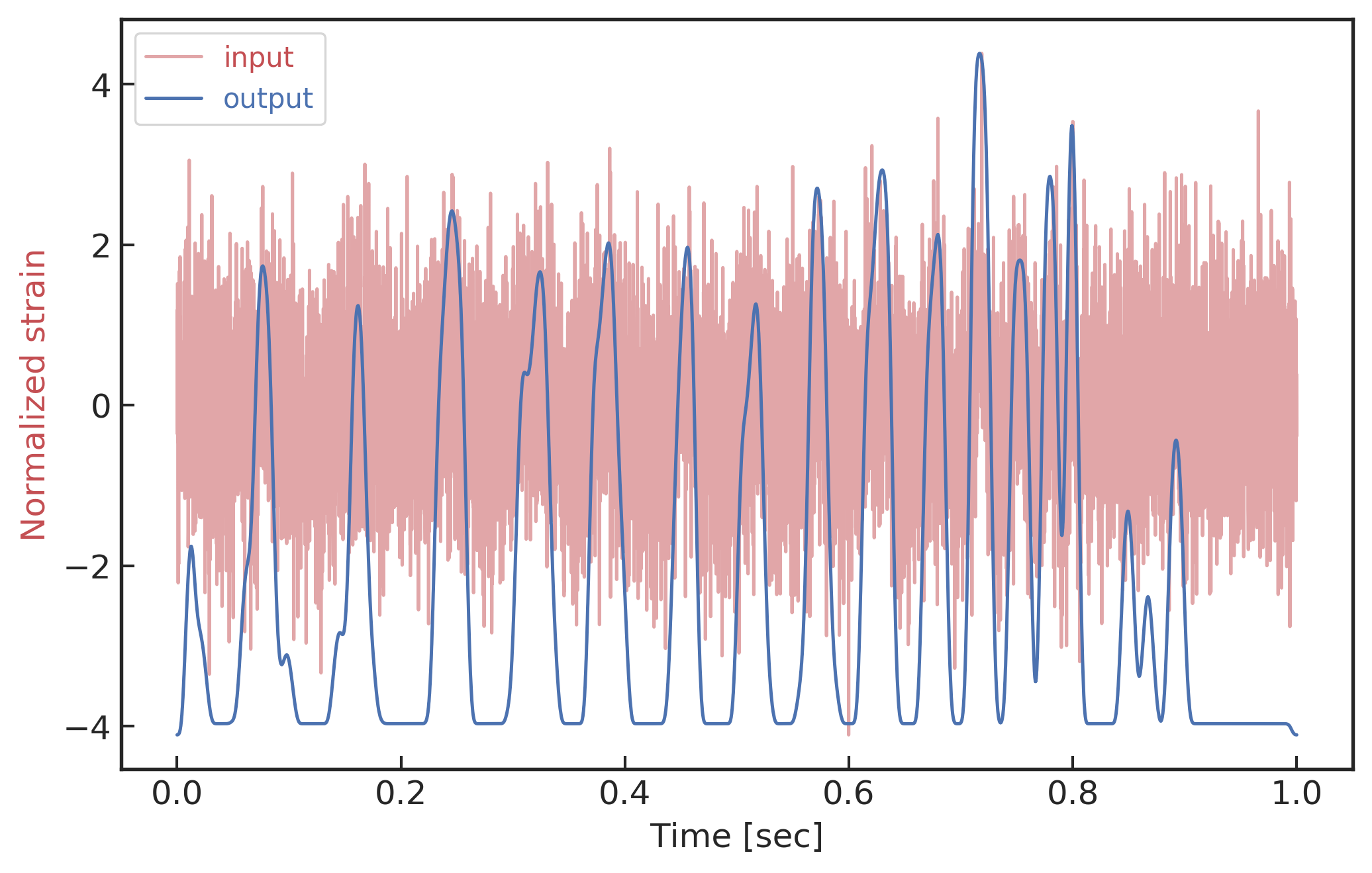

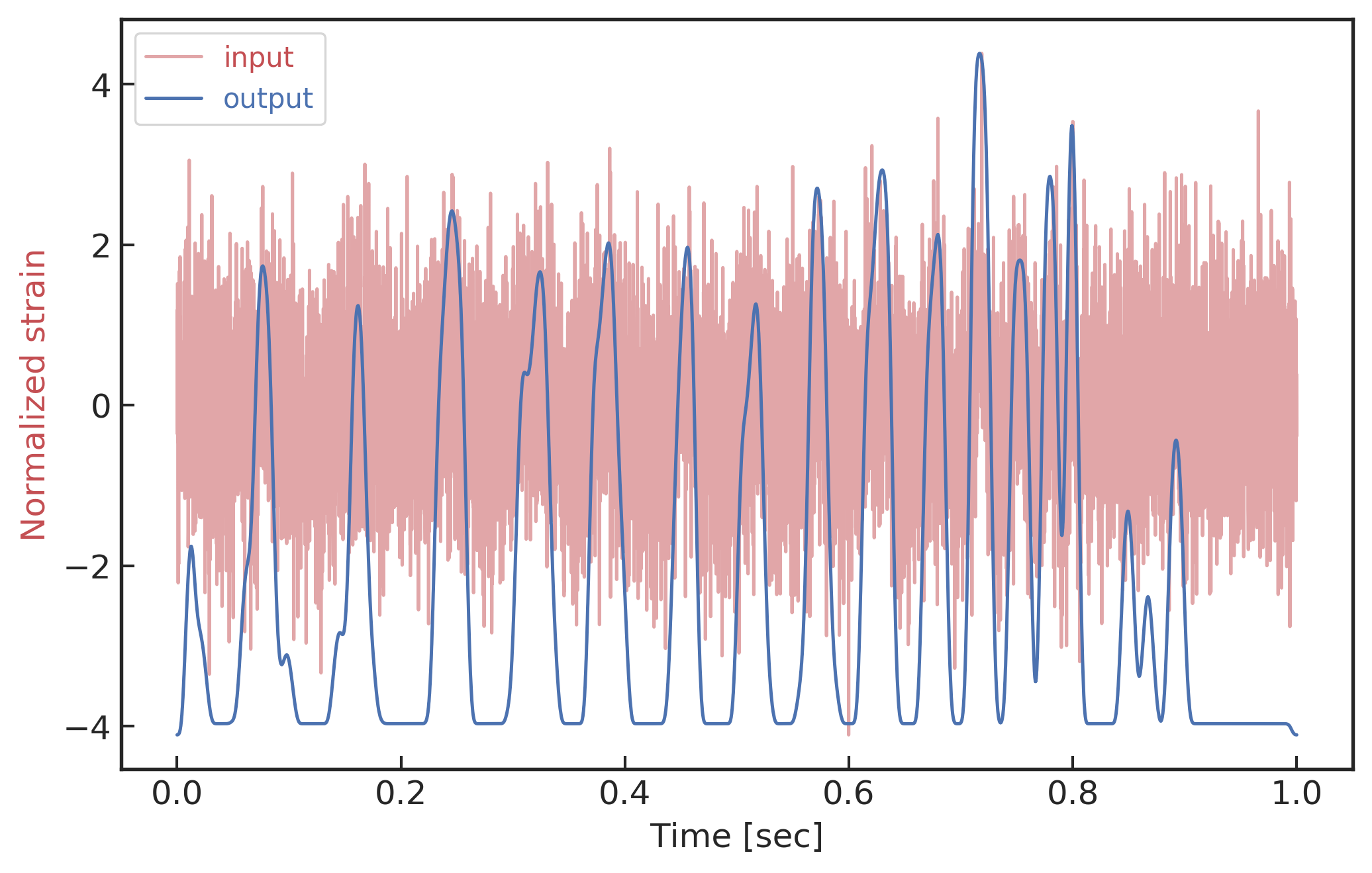

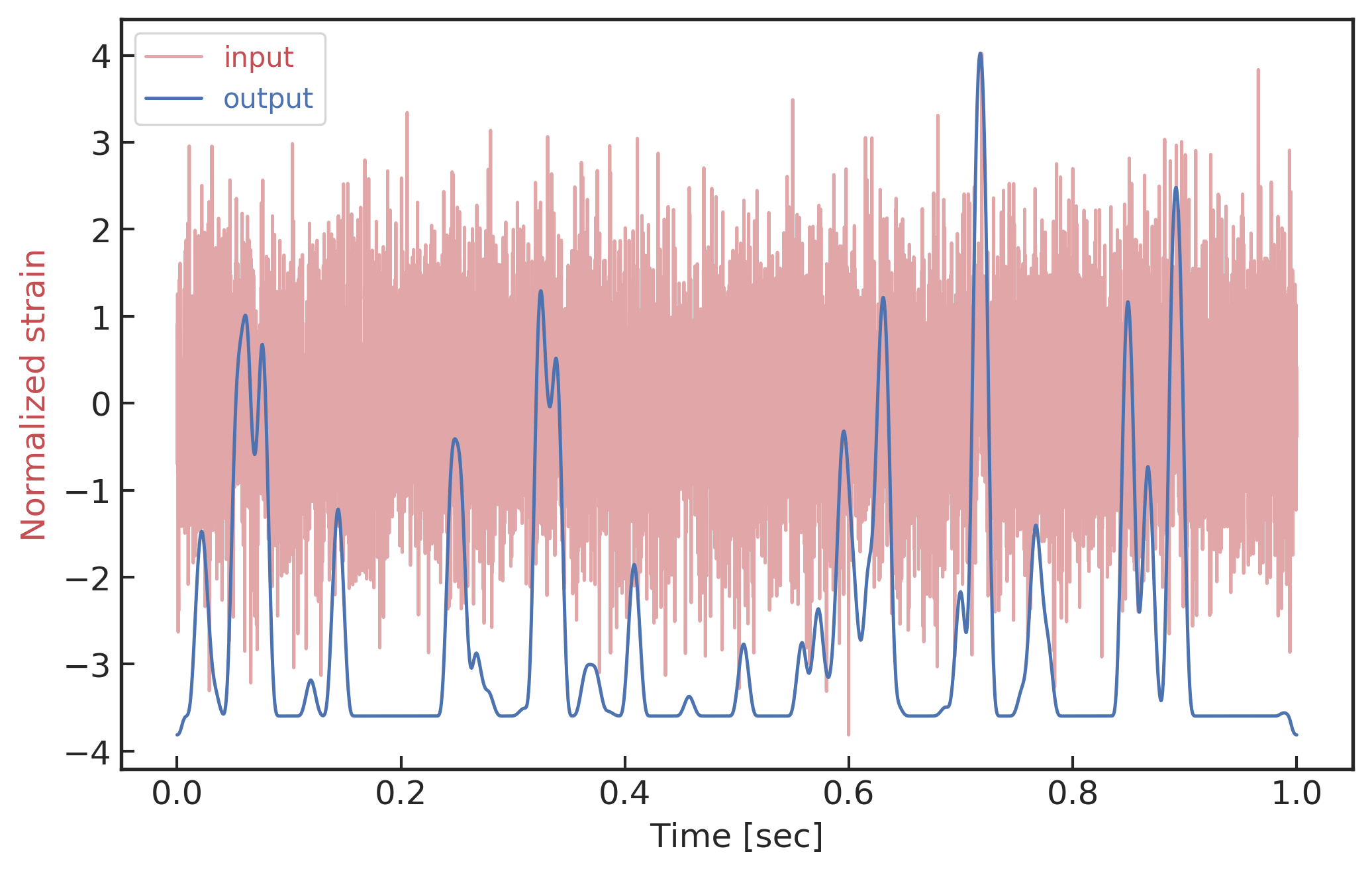

Past attempts on real LIGO noise

- However, on real LIGO noise, this approach does not work as well.

(too sensitive to the background, and the events are hard to find)

A specific design of the architecture is needed.

[as Timothy D. Gebhard et al. (2019)]

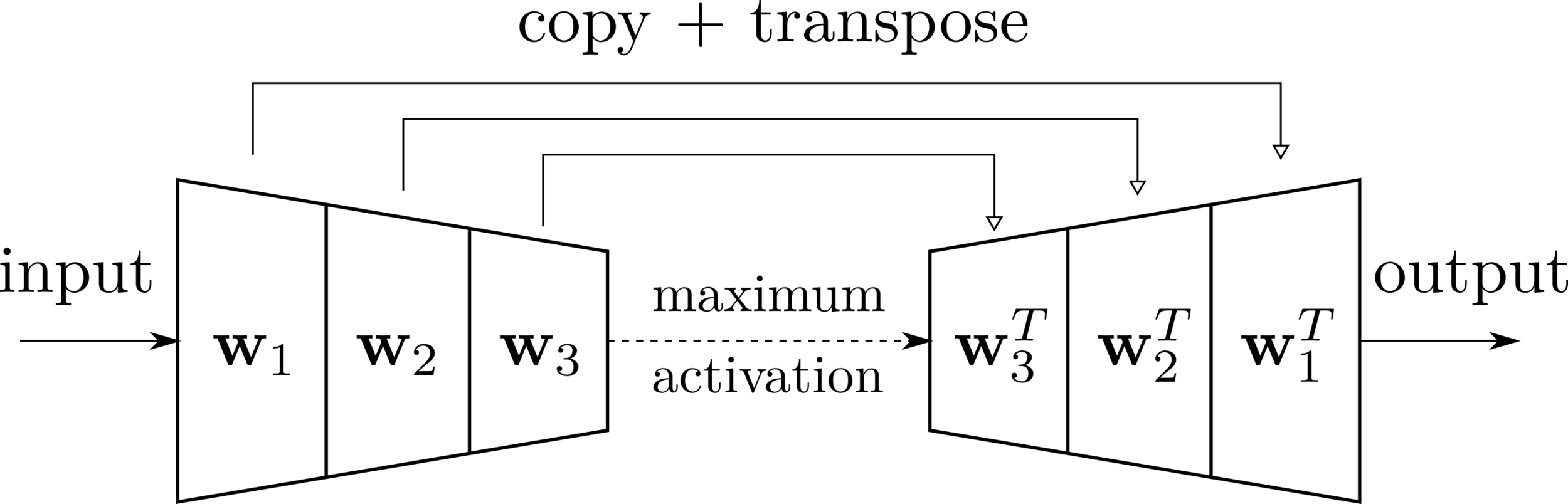

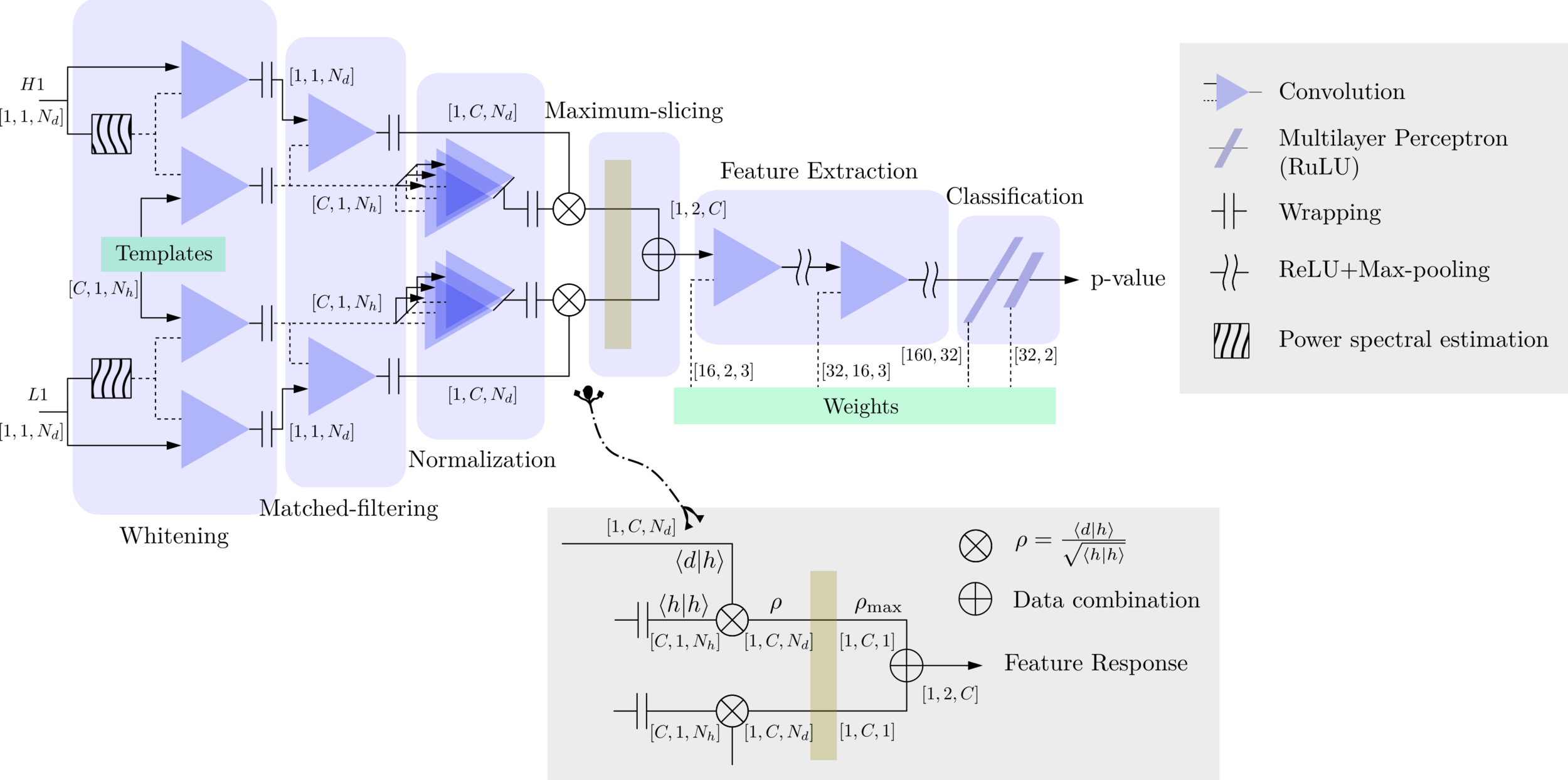

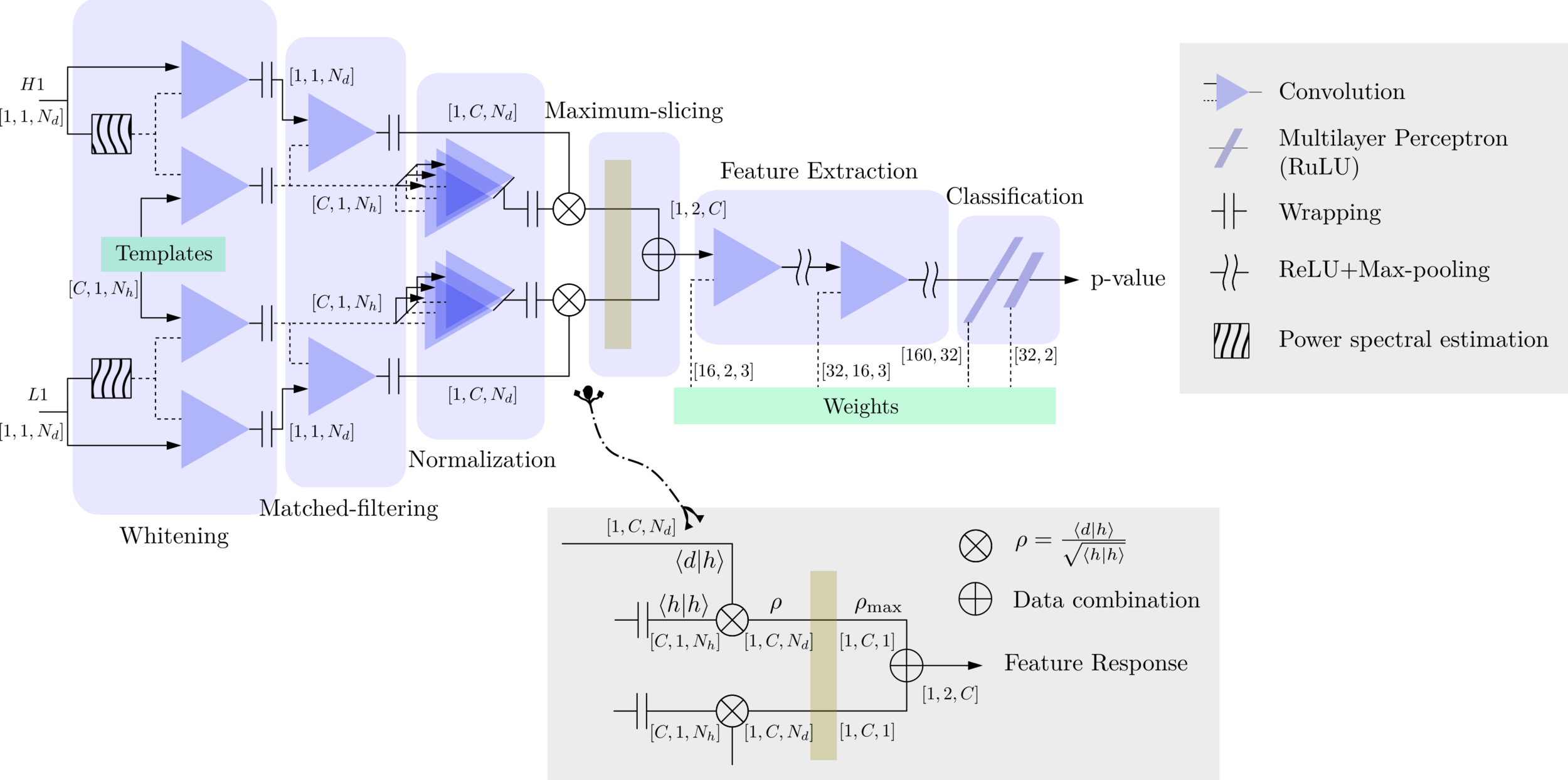

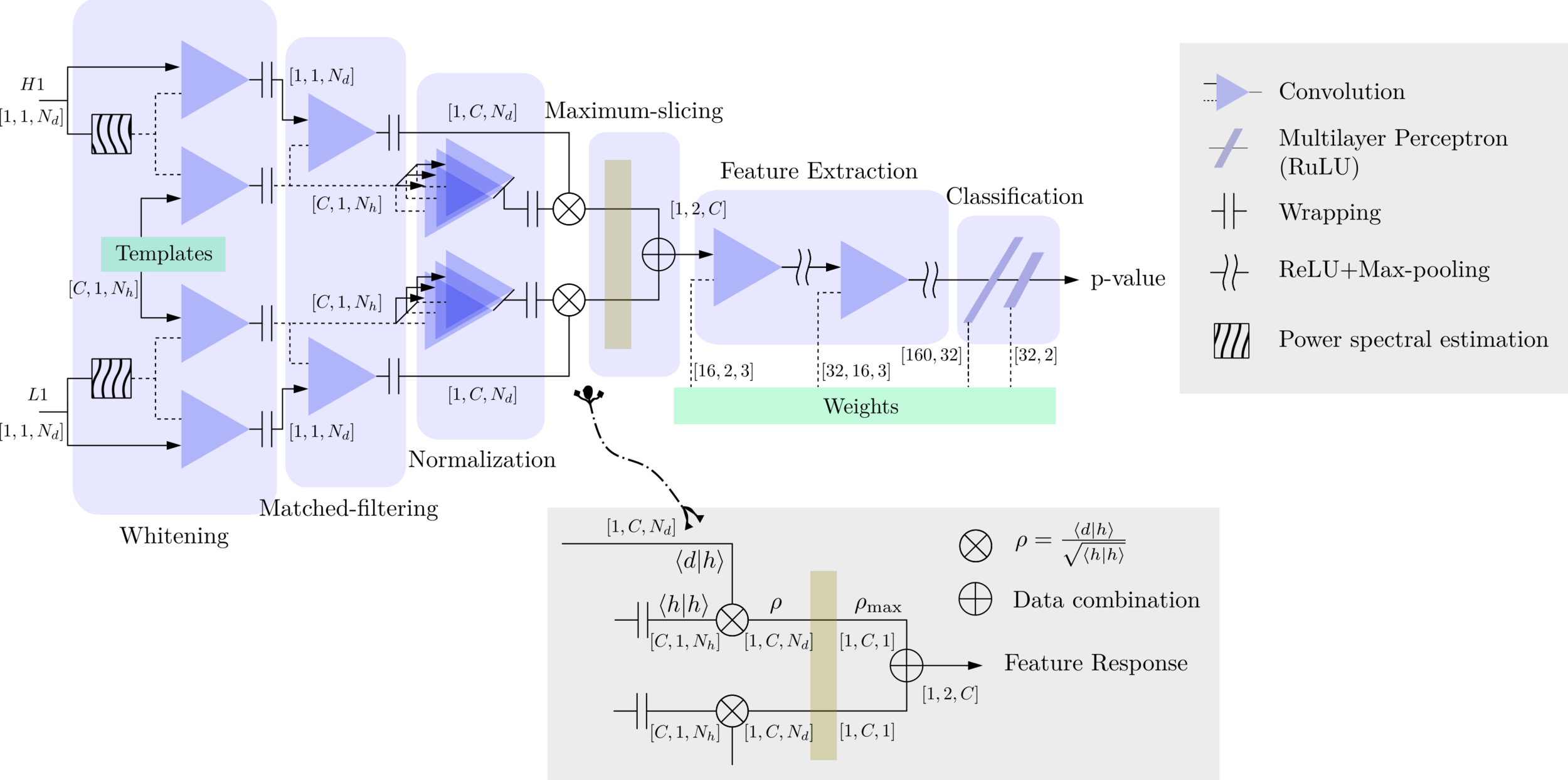

MFCNN

Classification

Feature extraction

Convolutional neural network (ConvNet or CNN)

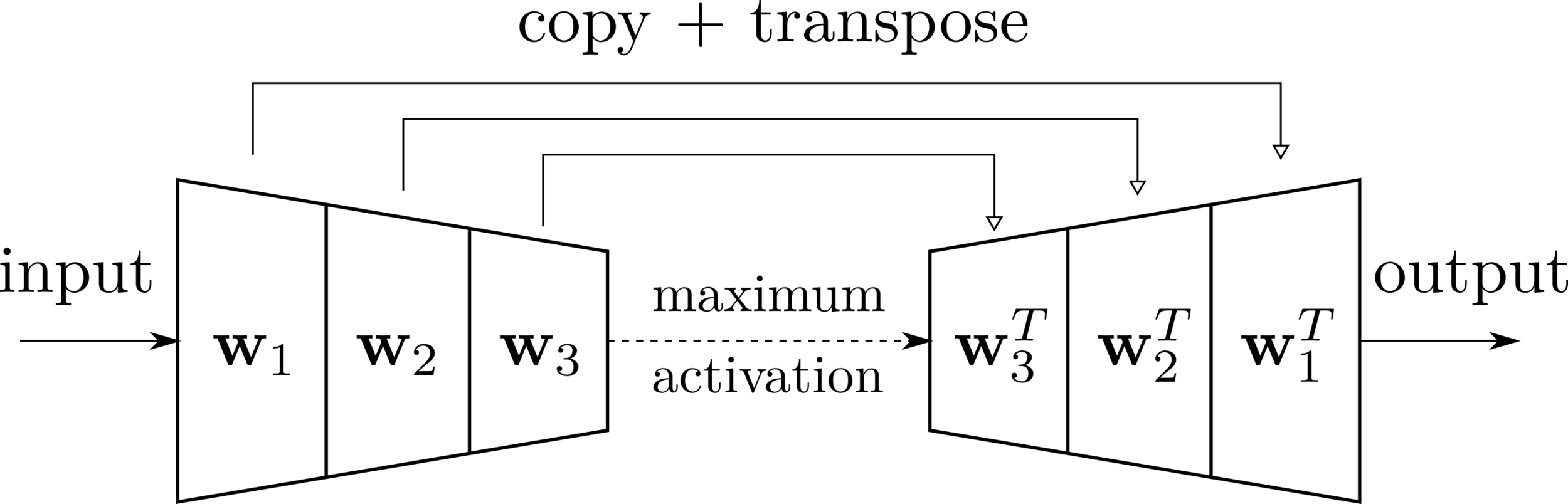

Our Motivation

- Given how closely related templates and kernels are, we attempt to address the following question:

Matched-filtering (cross-correlation with the templates) can be regarded as a convolutional layer with a set of predefined kernels.

>>Is it matched-filtering?

>>Wait, it can be matched-filtering!

- In practice, we use matched filters as an essential component of feature extraction in the first part of the CNN for GW detection.
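The correspondence between templates and kernels can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the MFCNN implementation: the three sinusoidal "templates" and the noise level are made up, and no whitening or normalization is applied.

```python
import numpy as np

def correlate_bank(data, templates):
    """Slide every template over the data without flipping it.

    data: [N]; templates: [C, K] -> output [C, N - K + 1] ('valid' positions).
    A 1-D convolutional layer with C fixed kernels computes the same thing.
    """
    k = templates.shape[1]
    windows = np.lib.stride_tricks.sliding_window_view(data, k)  # [N-K+1, K]
    return templates @ windows.T

t = np.arange(16)
# Hypothetical template bank: three sinusoids standing in for GW templates.
templates = np.stack([np.sin(2 * np.pi * f * t / 16) for f in (1, 2, 3)])

rng = np.random.default_rng(0)
data = 0.05 * rng.standard_normal(128)
data[40:56] += templates[1]            # hide template 1 at offset 40

features = correlate_bank(data, templates)          # shape [3, 113]
best_template, best_offset = np.unravel_index(np.argmax(features), features.shape)
```

Each row of `features` is one output channel of the "layer"; the largest response identifies both the matching template and its position in the data.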

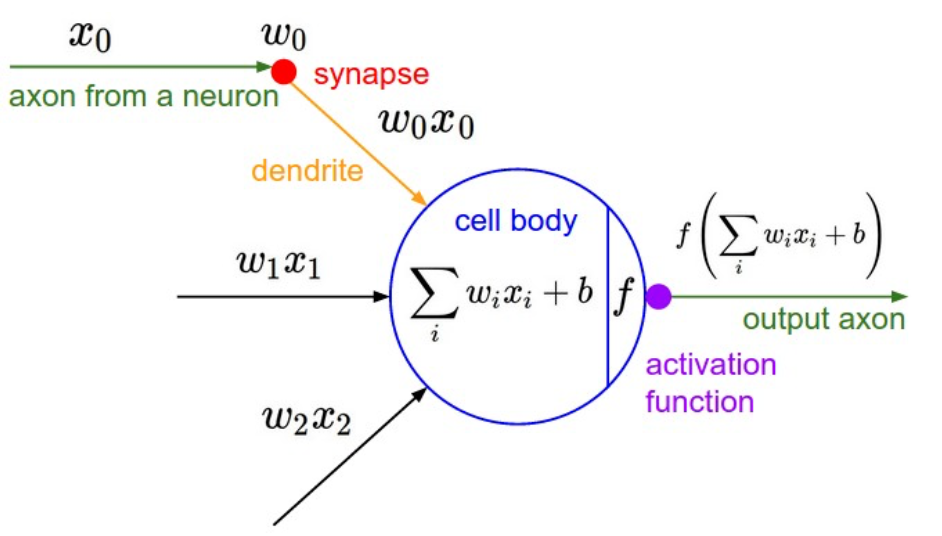

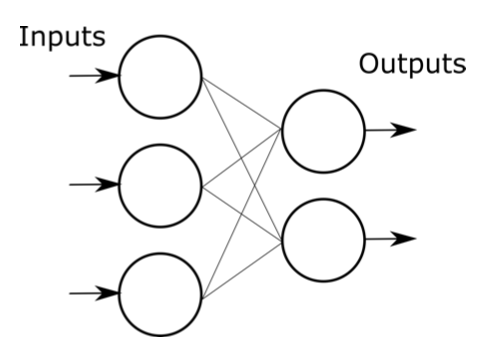

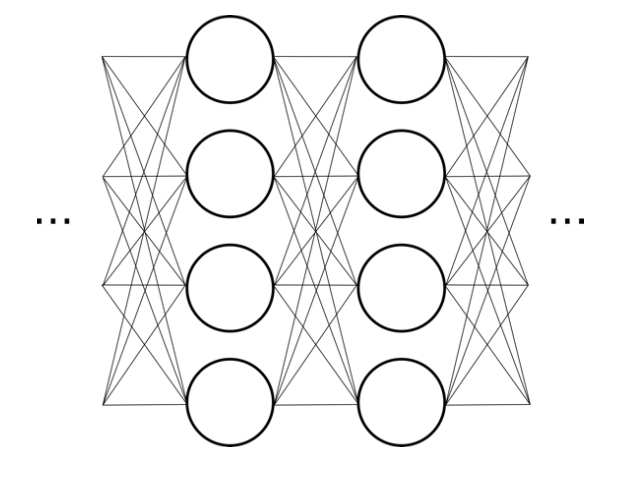

(1/2) Review: artificial neural networks (linear part)

- One sample vs one neural unit

- \(N\) samples vs one neural unit

- One sample vs \(M\) neural units (one layer)

- \(N\) samples vs \(M\) neural units (one layer)
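The four cases above differ only in array shapes. A short NumPy sketch, where the sizes `D`, `N`, `M` and the random weights are illustrative assumptions:

```python
import numpy as np

rng = np.random.default_rng(0)
D, N, M = 4, 5, 3                  # feature dimension, samples, neural units

x = rng.standard_normal(D)         # one sample
w = rng.standard_normal(D)         # weights of one neural unit
b = 0.5                            # its bias
z_scalar = x @ w + b               # one sample vs one unit   -> scalar

X = rng.standard_normal((N, D))    # N samples stacked as rows
z_vec = X @ w + b                  # N samples vs one unit    -> shape [N]

W = rng.standard_normal((D, M))    # M units, one column each (one layer)
bias = rng.standard_normal(M)
z_units = x @ W + bias             # one sample vs M units    -> shape [M]
Z = X @ W + bias                   # N samples vs M units     -> shape [N, M]
```

The last line is the general form: the other three are its rows, columns, or single entries.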

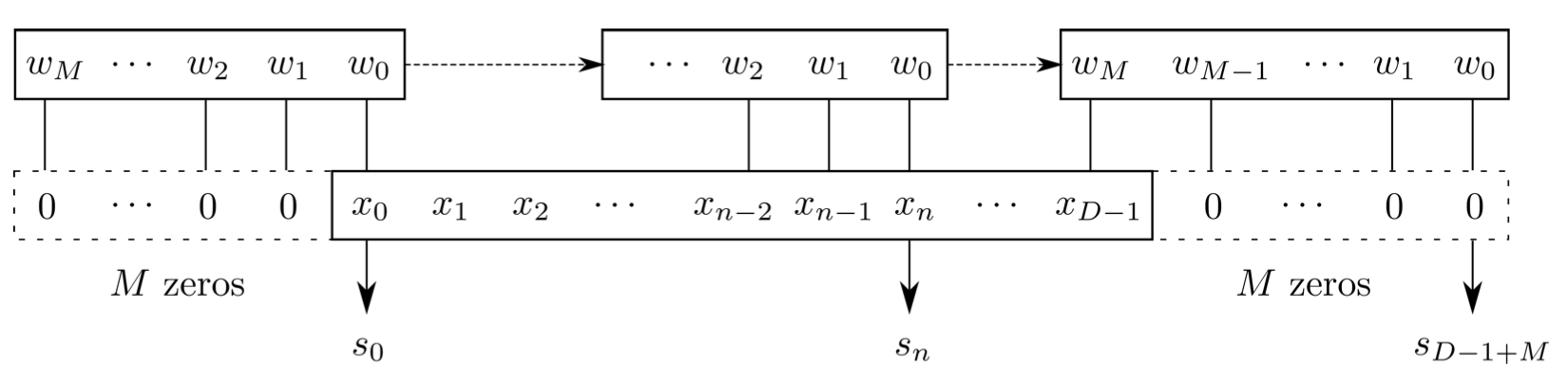

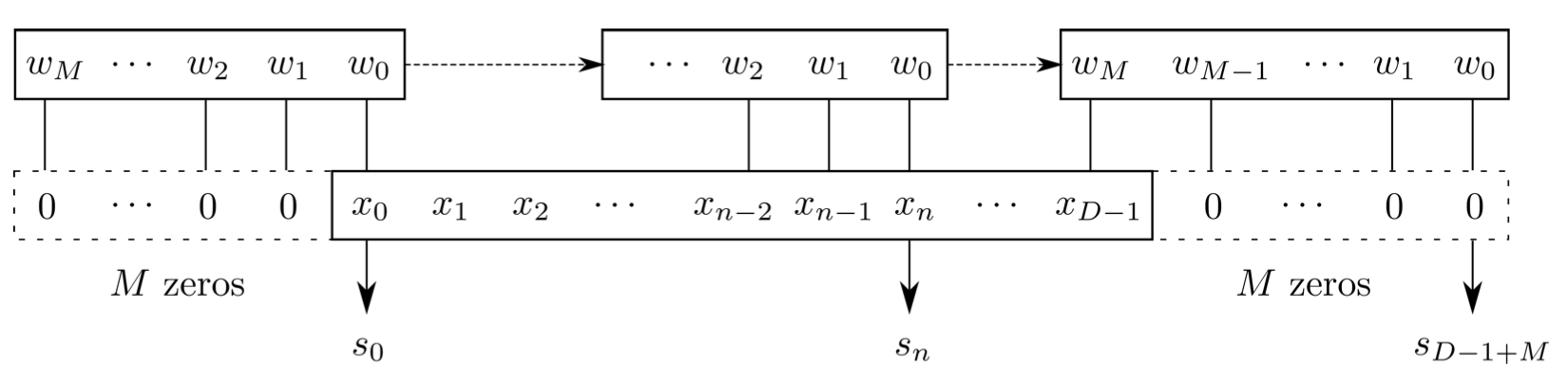

(2/2) Review: the many facets of convolution operation

- Flip-and-slide Form

- Integral Form

- Discrete Form

- Matrix Form:

- It corresponds to a convolutional layer in deep learning with one kernel (channel);

- kernel size (K) of 4; padding (P) is 3; stride (S) is 1.
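A small sketch of the matrix form with the same settings (K = 4, P = 3, S = 1). The input and kernel values are arbitrary, and `conv_matrix` is a helper written for this illustration. Note that deep-learning layers slide the kernel without flipping it, so the kernel is flipped here to recover the flip-and-slide convolution.

```python
import numpy as np

def conv_matrix(kernel, n, padding):
    """Matrix A such that A @ x is the stride-1 sliding product of `kernel`
    over x zero-padded by `padding` samples on both sides."""
    k = len(kernel)
    n_out = n - k + 2 * padding + 1
    A = np.zeros((n_out, n + 2 * padding))
    for i in range(n_out):
        A[i, i:i + k] = kernel               # each row is a shifted copy of the kernel
    return A[:, padding:padding + n]         # drop columns that only multiply padding

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])     # input of length N = 5
h = np.array([1.0, 0.0, -1.0, 2.0])         # kernel of size K = 4

A_conv = conv_matrix(h[::-1], len(x), padding=3)   # flipped kernel: true convolution
A_corr = conv_matrix(h, len(x), padding=3)         # unflipped: what a conv layer does
y_conv = A_conv @ x
y_corr = A_corr @ x
```

Both outputs have length 5 - 4 + 2*3 + 1 = 8, i.e. the "full" convolution and the "full" cross-correlation of `x` with `h`.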

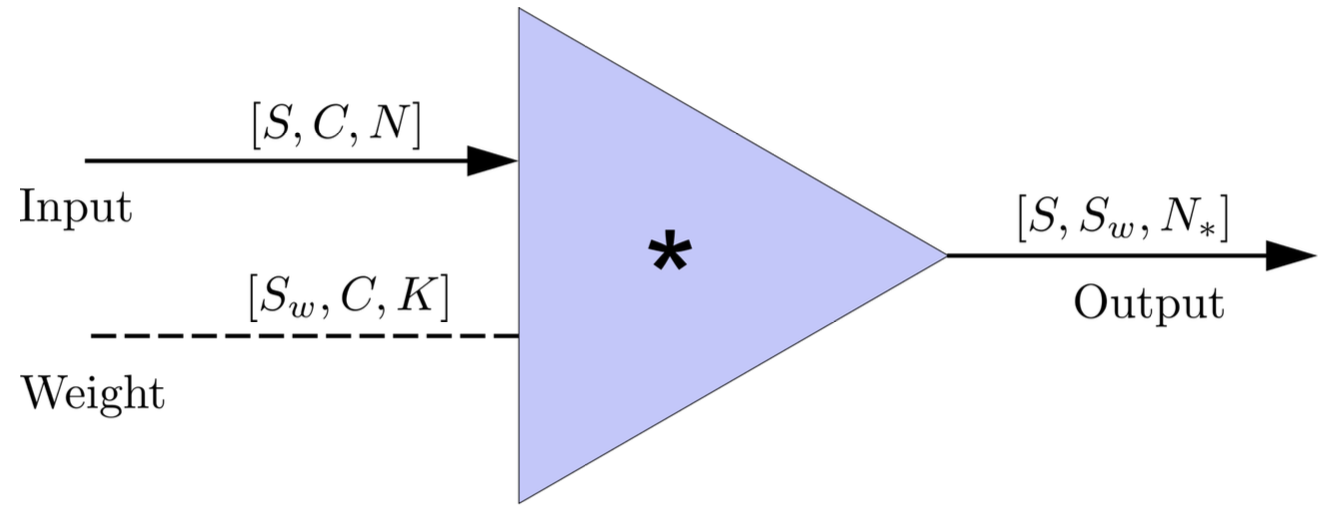

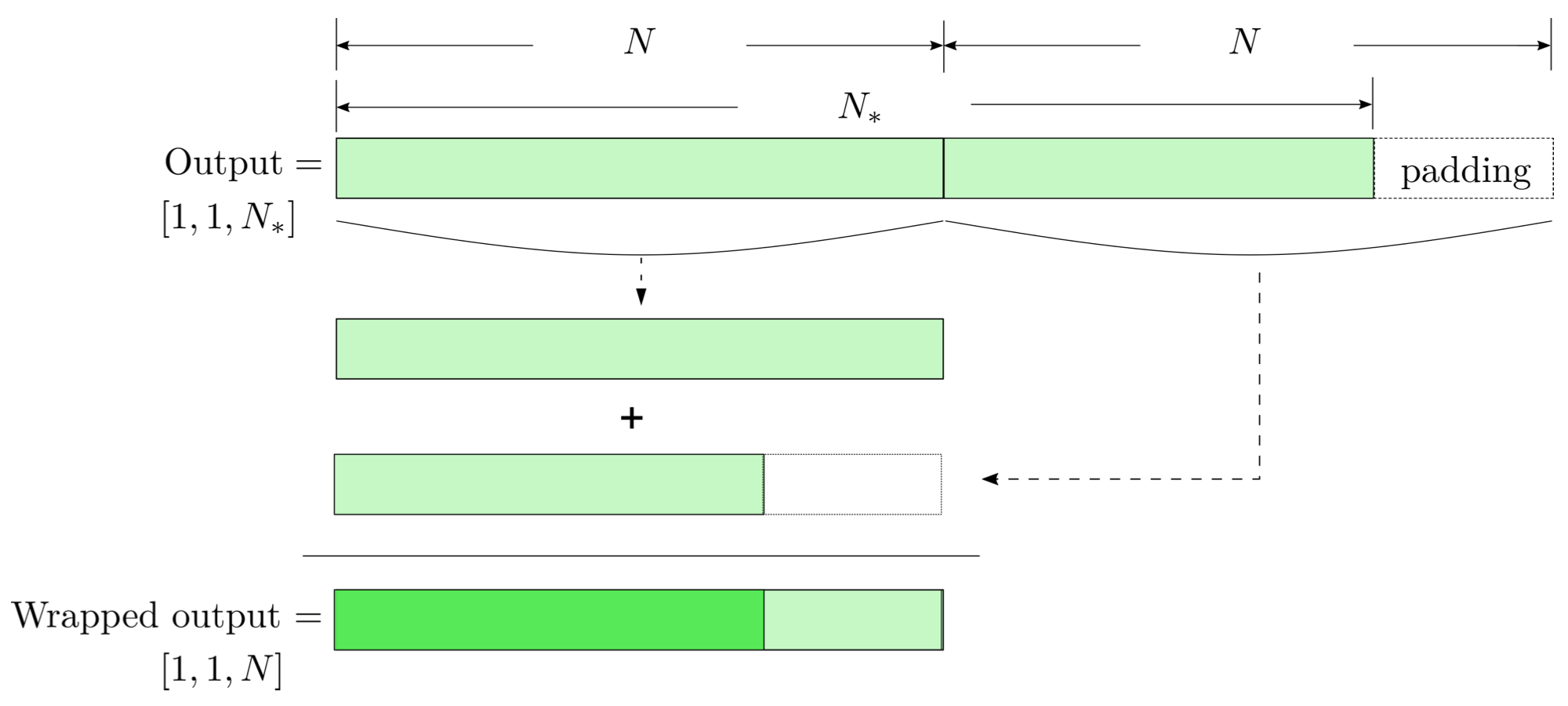

- In the 1-D convolution (\(*\)), given input data with shape [batch size, channel, length]:

FYI: \(N_\ast = \lfloor(N-K+2P)/S\rfloor+1\)

(A schematic illustration for a unit of convolution layer)
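The output-length formula can be checked against a naive NumPy convolution layer. This is a sketch for illustration; `conv1d` is a hypothetical helper (no bias, no dilation), not a framework API.

```python
import numpy as np

def conv1d_out_len(n, k, p=0, s=1):
    """N_* = floor((N - K + 2P) / S) + 1."""
    return (n - k + 2 * p) // s + 1

def conv1d(x, w, p=0, s=1):
    """x: [batch, C_in, N]; w: [C_out, C_in, K] -> [batch, C_out, N_*].

    Kernels slide without flipping (cross-correlation), as in DL frameworks.
    """
    x = np.pad(x, ((0, 0), (0, 0), (p, p)))          # zero-pad the length axis only
    k = w.shape[-1]
    win = np.lib.stride_tricks.sliding_window_view(x, k, axis=-1)  # [B, C_in, N+2P-K+1, K]
    win = win[:, :, ::s]                             # keep every S-th position
    return np.einsum('bcnk,ock->bon', win, w)        # sum over input channels and kernel taps

rng = np.random.default_rng(0)
x = rng.standard_normal((2, 3, 20))                  # batch 2, 3 channels, length 20
w = rng.standard_normal((5, 3, 4))                   # 5 kernels of size 4

y_full = conv1d(x, w, p=3, s=1)                      # length 20 - 4 + 6 + 1 = 23
y_strided = conv1d(x, w, p=1, s=2)                   # length (20 - 4 + 2) // 2 + 1 = 10
```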

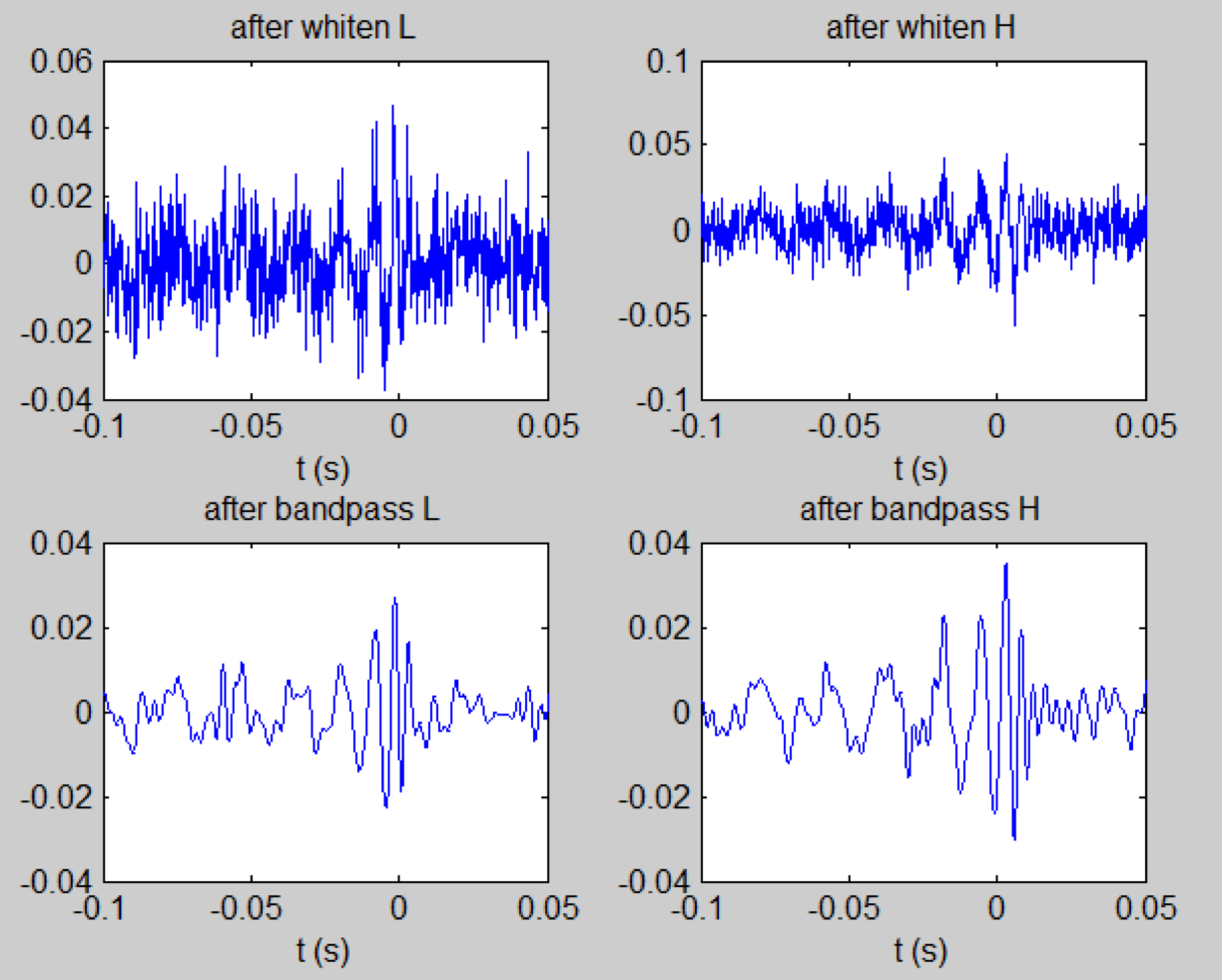

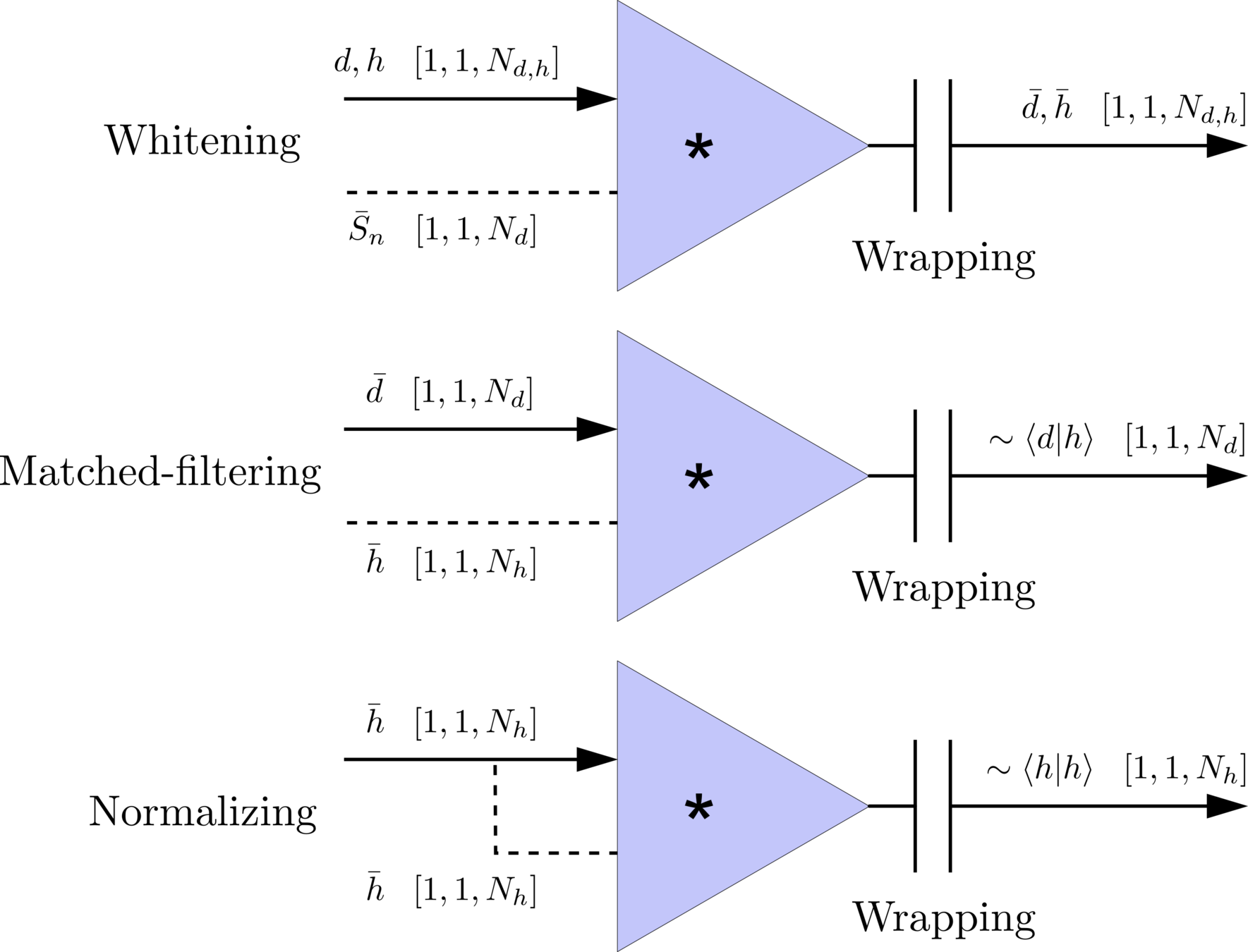

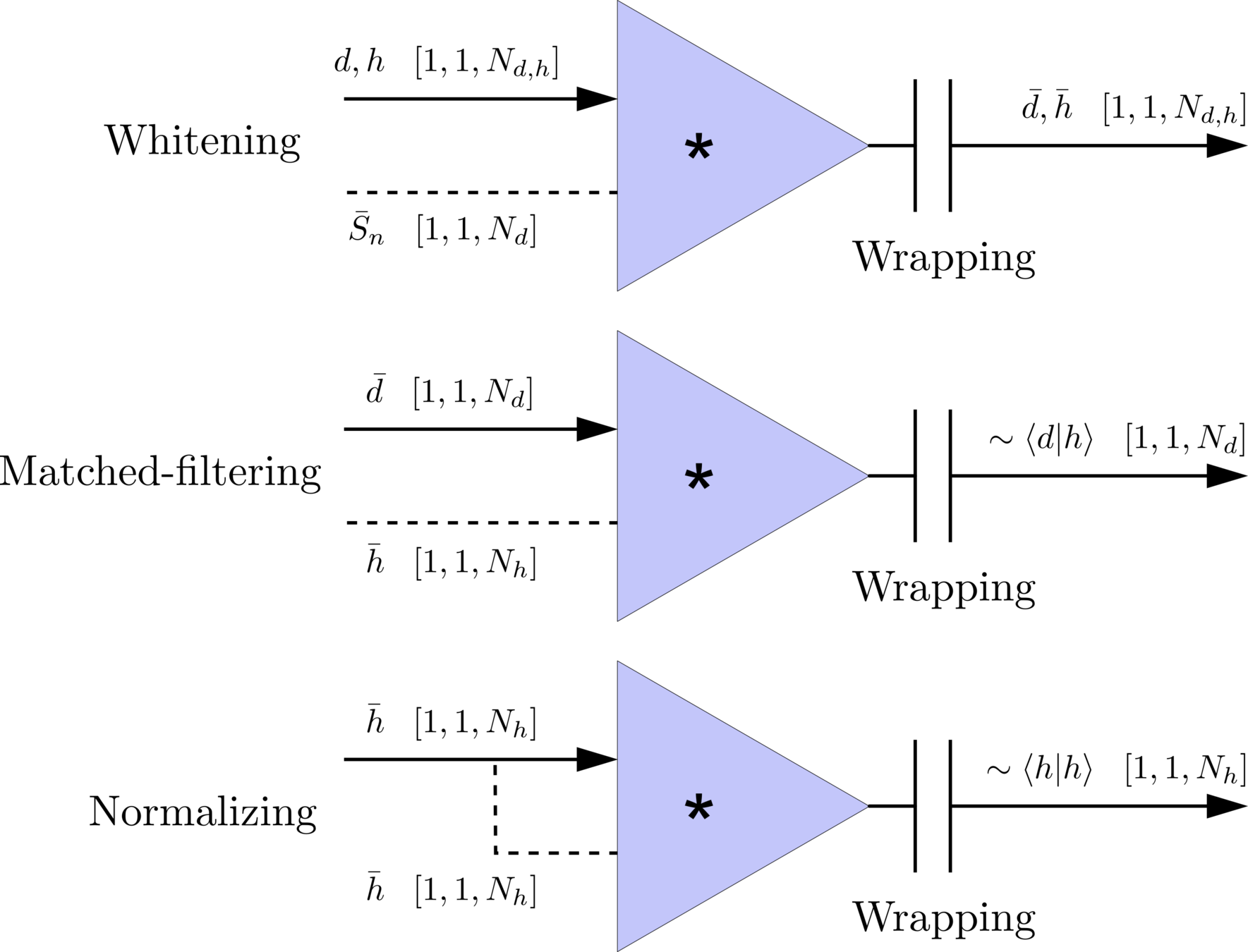

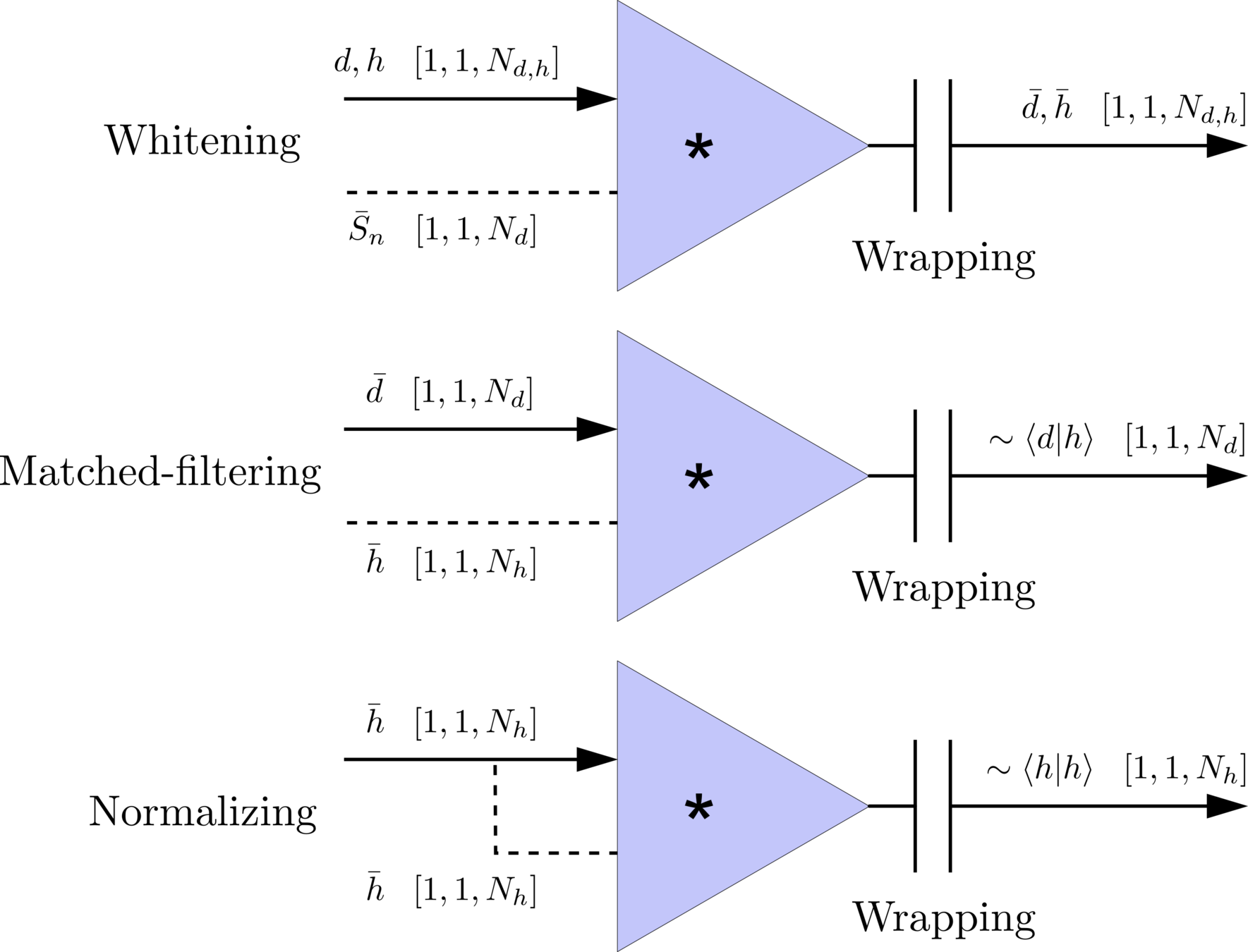

Matched-filtering in time domain

- The squared matched-filtering SNR for given data \(d(t) = n(t)+h(t)\):

Frequency domain

Time domain

(matched-filtering)

(normalizing)

(whitening)

where \(S_n(|f|)\) is the one-sided average PSD of \(d(t)\)
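For reference, the labelled steps (whitening, normalizing, matched-filtering) can be written in one standard convention as follows. This is a sketch of the usual textbook form, with \(\bar h_w(t)\) introduced here as a name for the whitening filter; constant factors may differ from the expressions on the original slide.

Frequency domain:

\[
\rho^2(t) = \frac{\left|\langle d|h\rangle(t)\right|^2}{\langle h|h\rangle}, \qquad
\langle d|h\rangle(t) = 4\int_0^\infty \frac{\tilde d(f)\,\tilde h^*(f)}{S_n(f)}\,e^{2\pi i f t}\,df, \qquad
\langle h|h\rangle = 4\int_0^\infty \frac{|\tilde h(f)|^2}{S_n(f)}\,df
\]

Time domain, with \(\bar h_w(t)\) the filter whose Fourier transform is \([S_n(|f|)]^{-1/2}\):

\[
\bar d(t) = d(t) * \bar h_w(t), \qquad \bar h(t) = h(t) * \bar h_w(t) \qquad \text{(whitening)}
\]

\[
\langle h|h\rangle \sim \left[\bar h(t) * \bar h(-t)\right]_{t=0} \qquad \text{(normalizing)}
\]

\[
\langle d|h\rangle(t) \sim \bar d(t) * \bar h(-t) \qquad \text{(matched-filtering)}
\]

Convolving with the time-reversed template \(\bar h(-t)\) is a cross-correlation, which is exactly the operation a convolutional layer performs with a fixed kernel.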

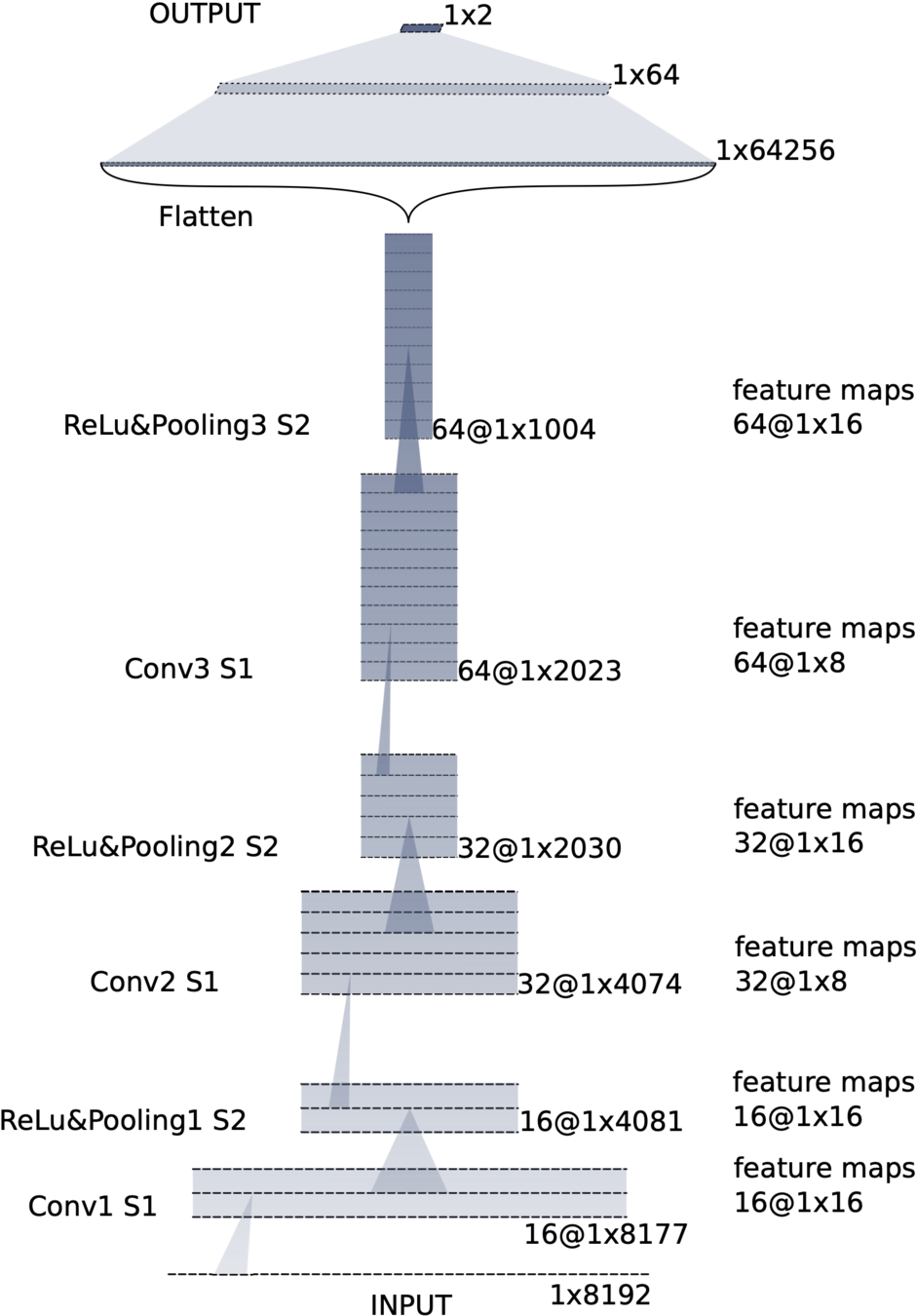

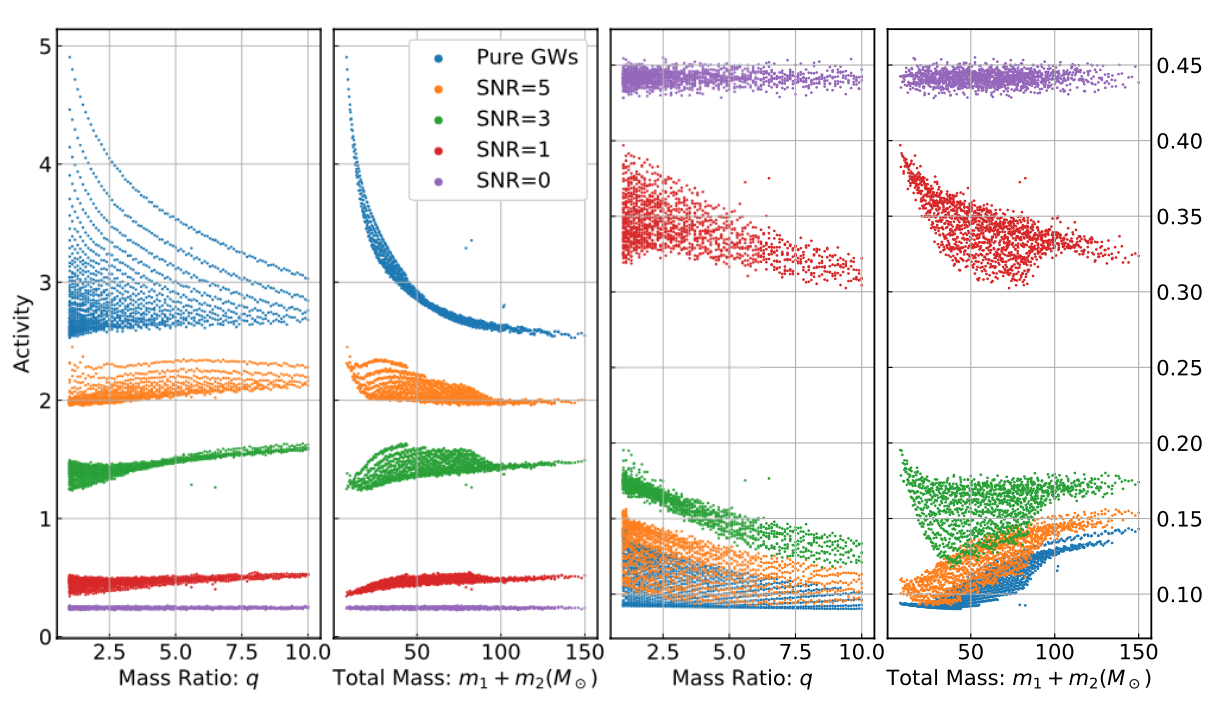

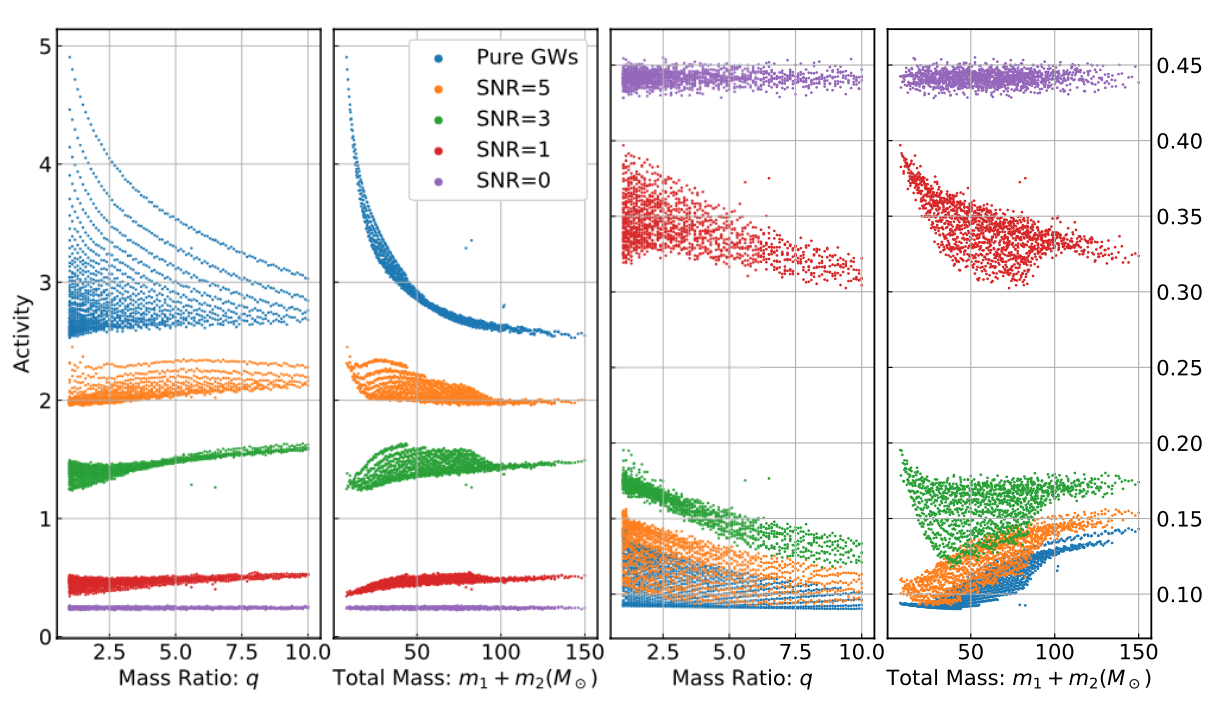

Deep Learning Framework

modulo-N circular convolution
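Multiplying discrete Fourier transforms corresponds to a modulo-N circular operation in the time domain, which is why the time-domain layer has to wrap around to agree with the frequency-domain result. A minimal NumPy check, assuming real-valued data and a template zero-padded to the data length (both function names are illustrative):

```python
import numpy as np

def circular_correlate(d, h):
    """Modulo-N circular cross-correlation c[k] = sum_n d[(n + k) % N] * h[n],
    with h zero-padded to len(d)."""
    n = len(d)
    h = np.pad(h, (0, n - len(h)))
    idx = (np.arange(n)[None, :] + np.arange(n)[:, None]) % n   # idx[k, n] = (n + k) % N
    return d[idx] @ h

def circular_correlate_fft(d, h):
    """Same quantity via the FFT: inverse transform of D[f] * conj(H[f])."""
    n = len(d)
    return np.fft.irfft(np.fft.rfft(d) * np.conj(np.fft.rfft(h, n)), n)

rng = np.random.default_rng(1)
d = rng.standard_normal(64)        # stand-in for a whitened data segment
h = rng.standard_normal(16)        # stand-in for a whitened template

c_time = circular_correlate(d, h)
c_freq = circular_correlate_fft(d, h)
```

The first `len(d) - len(h) + 1` samples agree with the ordinary (non-circular) cross-correlation; only the remaining samples are affected by wrap-around.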

Matched-filtering Convolutional Neural Network (MFCNN)

Input

Output

- The structure of MFCNN:

- Meanwhile, we can obtain the optimal time \(N_0\) (relative to the input) of the matching feature response by recording the location of the maximum value, which corresponds to the optimal template \(C_0\).
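Reading off \(C_0\) and \(N_0\) amounts to an arg-max over the template and time axes. A minimal sketch, assuming the matched-filtering layer returns one response row per template; the array below is synthetic, with sizes taken from the talk (35 templates, 5 s at 4096 Hz):

```python
import numpy as np

def best_match(response):
    """response: [C, N] matched-filtering output, one row per template.

    Returns (C_0, N_0): index of the best template and the sample index of its peak.
    """
    c0, n0 = np.unravel_index(np.argmax(response), response.shape)
    return int(c0), int(n0)

FS = 4096                                   # sampling rate in Hz
response = np.zeros((35, 5 * FS))           # synthetic response, for illustration only
response[12, 9000] = 7.5                    # pretend template 12 peaks at sample 9000

c0, n0 = best_match(response)
t0 = n0 / FS                                # peak time in seconds, relative to the input
```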

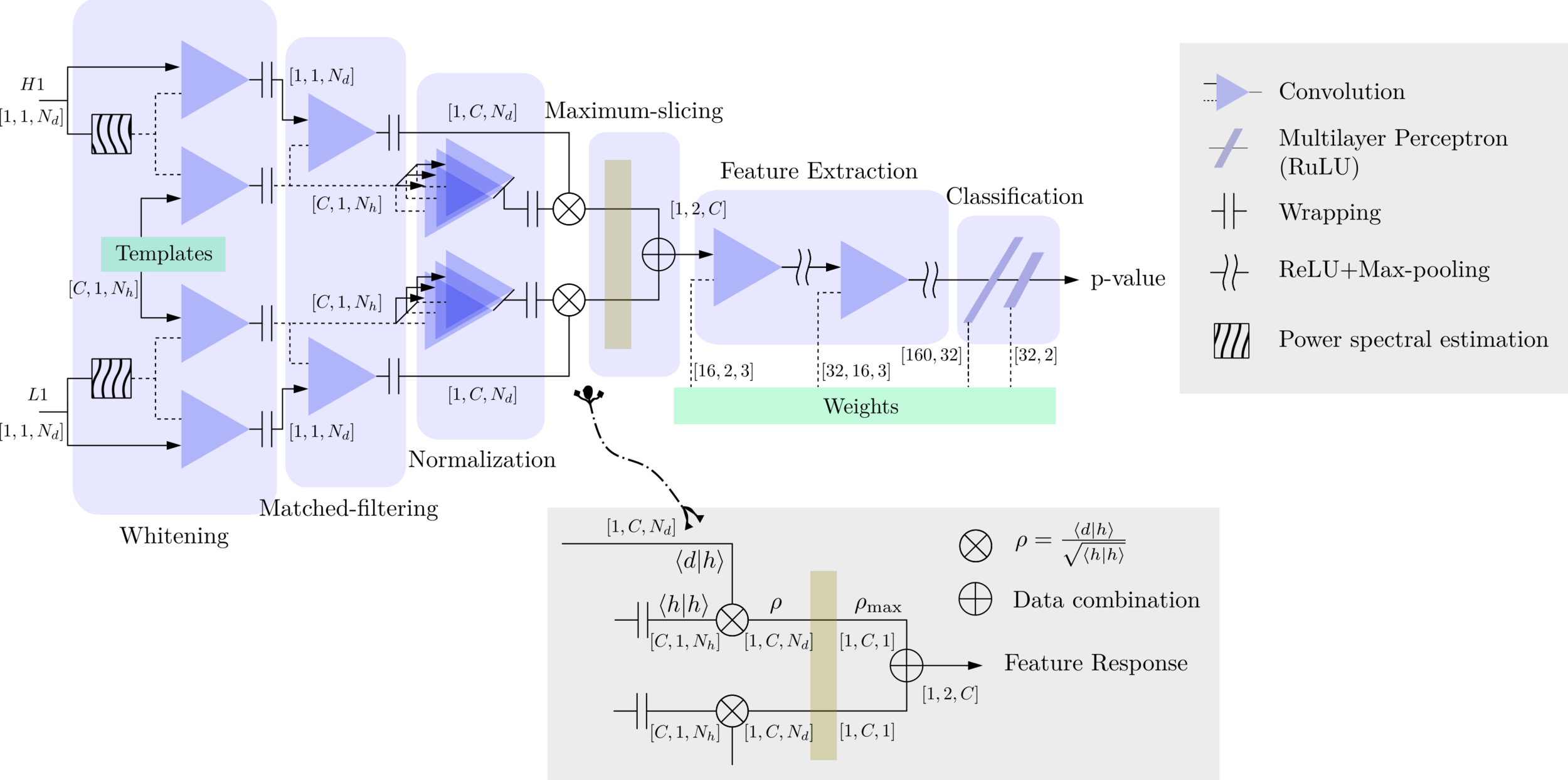

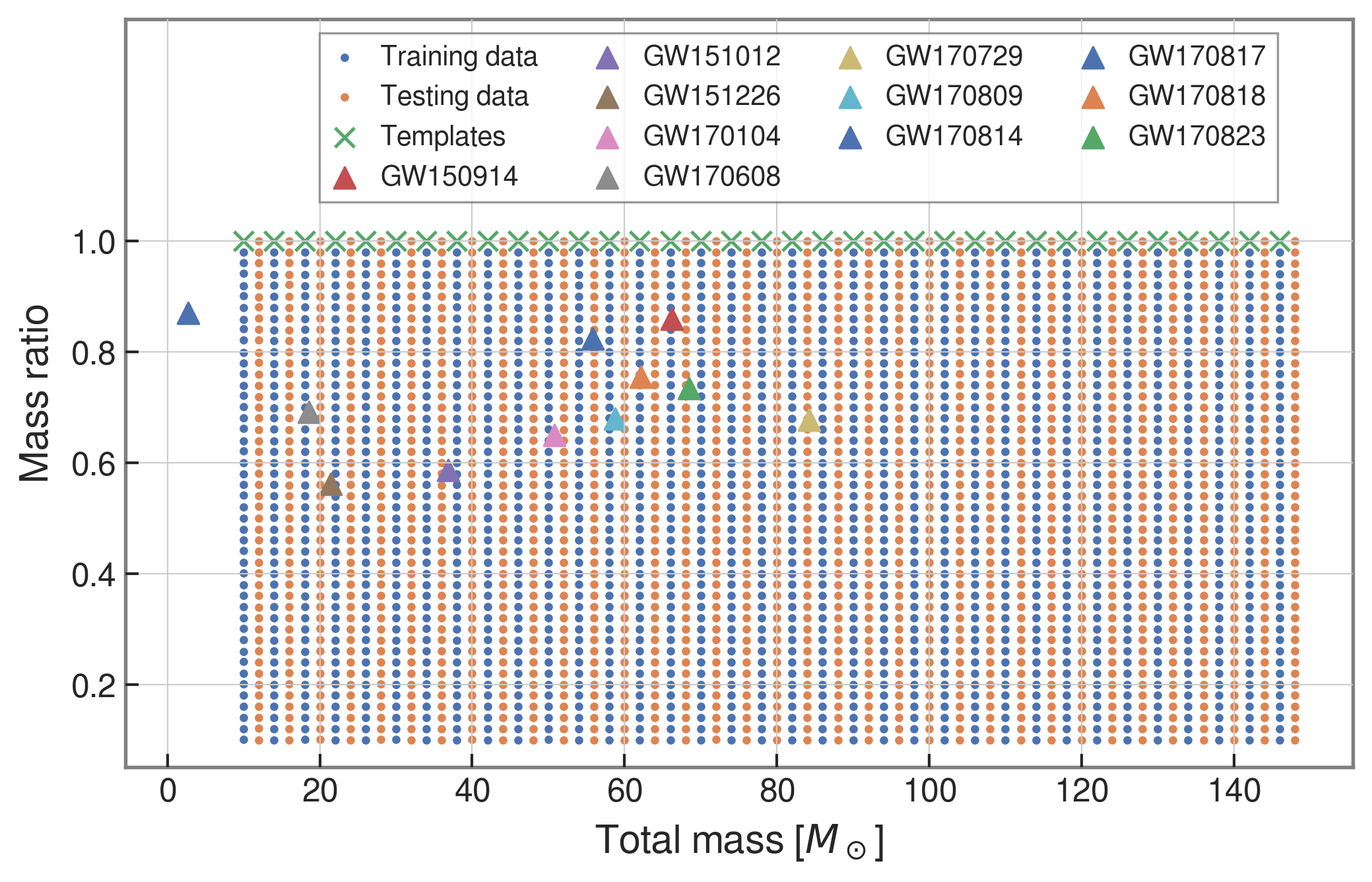

Training Configuration and Search Methodology

- The background noise for training/testing is sampled from a closed set (33 × 4096 s) of the first observing run (O1), excluding the 4096 s segments that contain the first 3 GW events.

FYI: sampling rate = 4096Hz

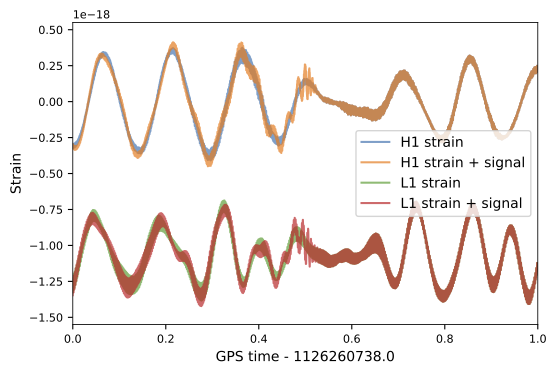

- We use the SEOBNRE model [Cao et al. (2017)] to generate waveforms; we only consider circular, non-spinning binary black holes.

| | template | waveform (train/test) |
|---|---|---|
| Number | 35 | 1610 |
| Length (s) | 1 | 5 |
| | equal mass | |

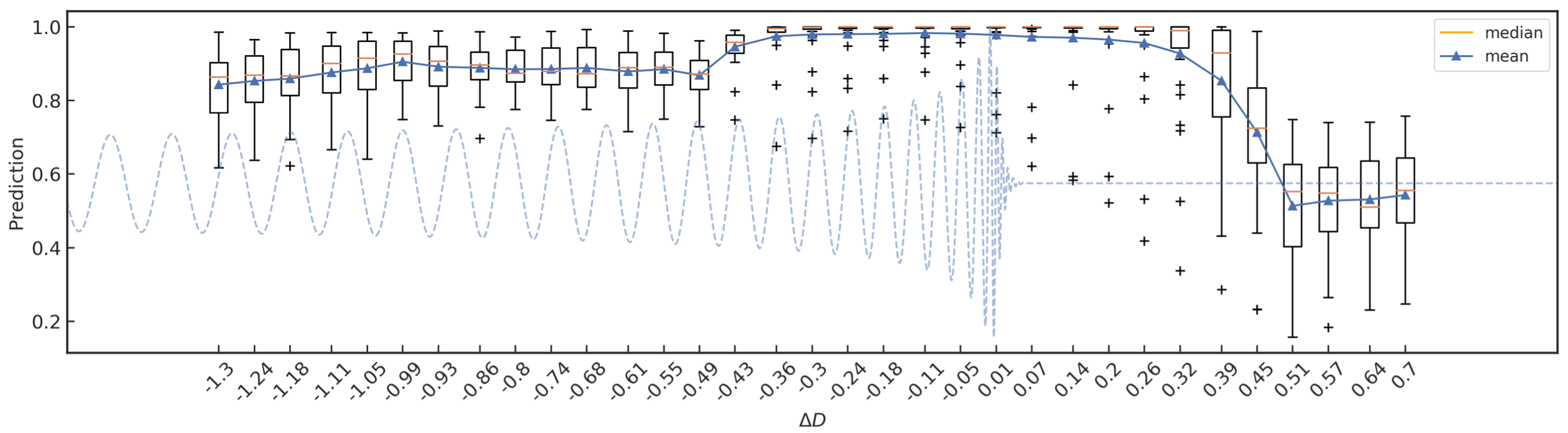

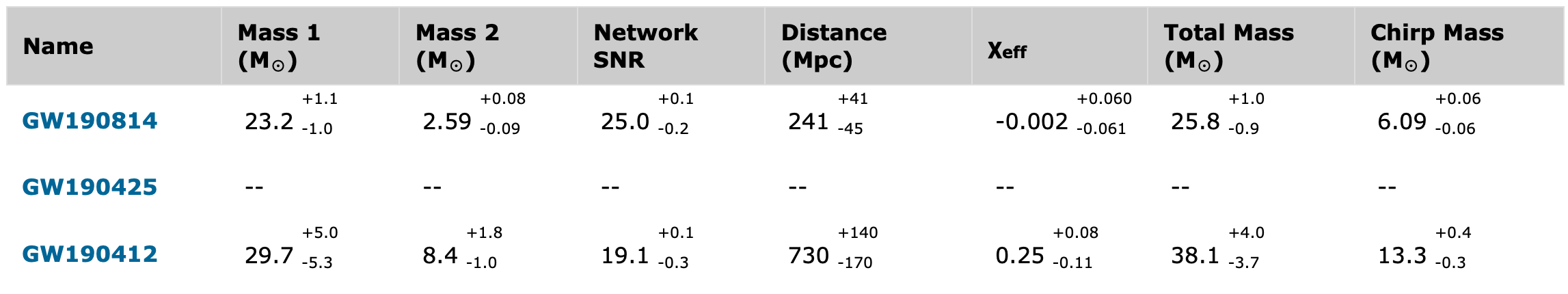

- The total mass and mass ratio of training or test data and templates are shown below. The 11 GW events for both O1 and O2 are also shown.

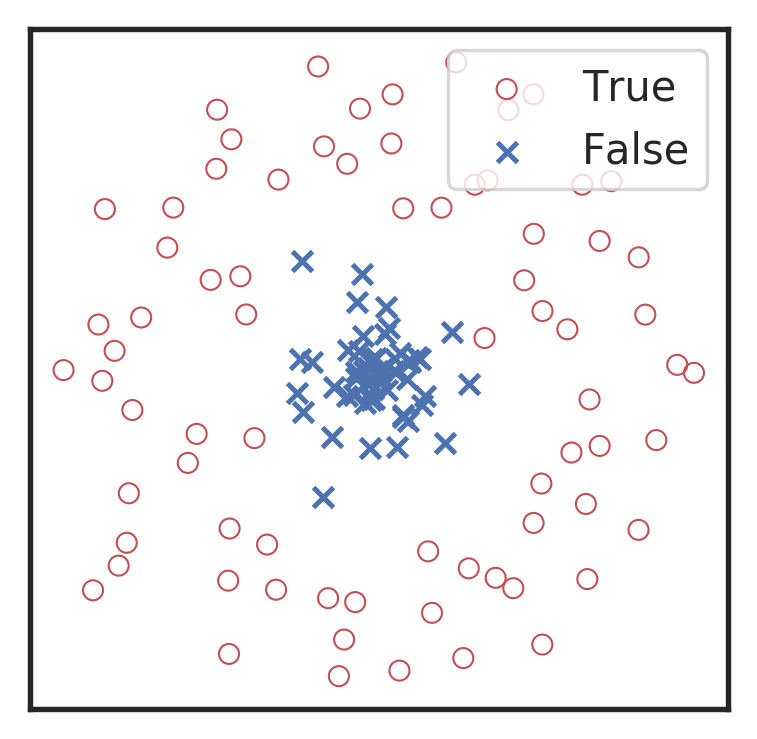

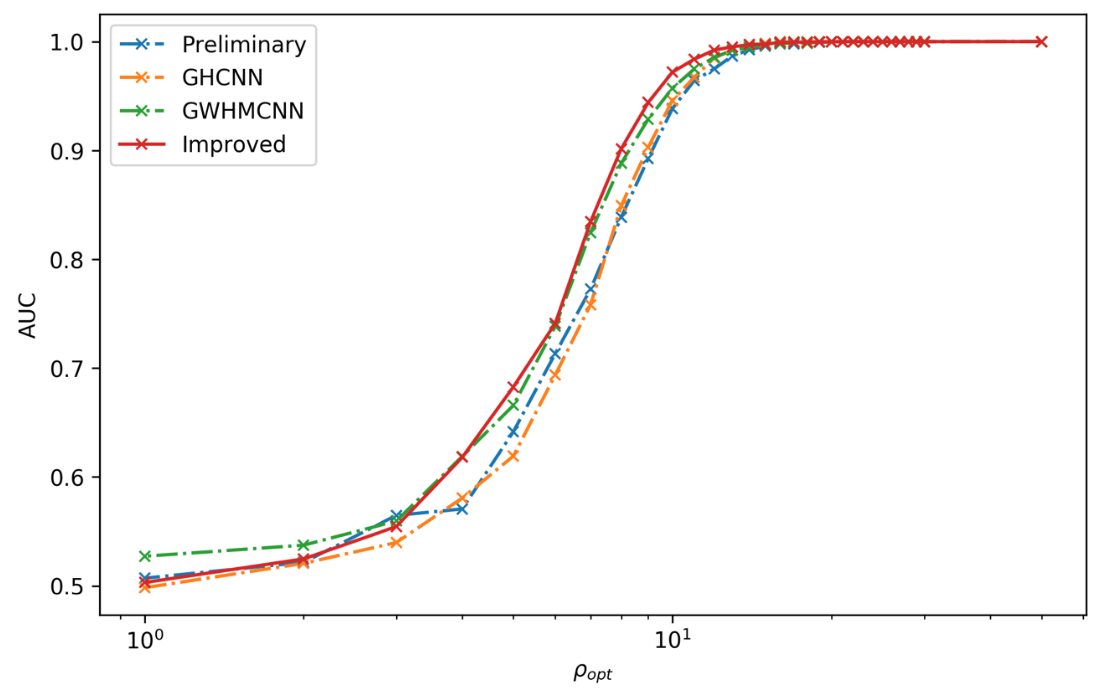

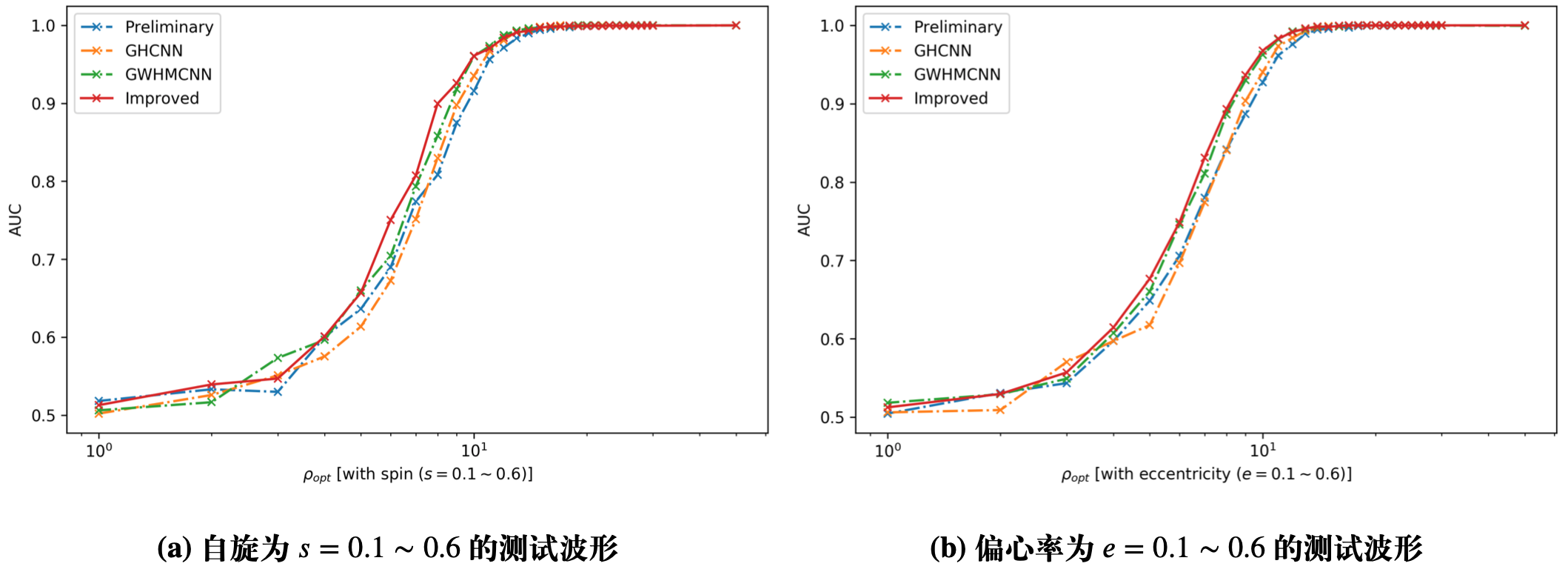

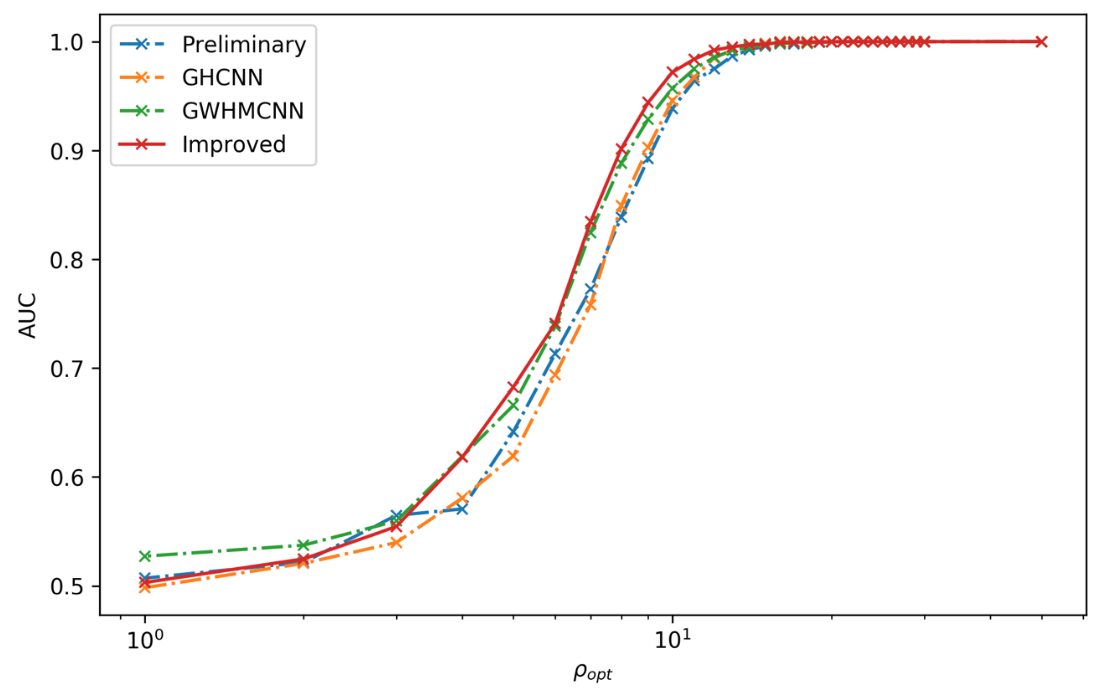

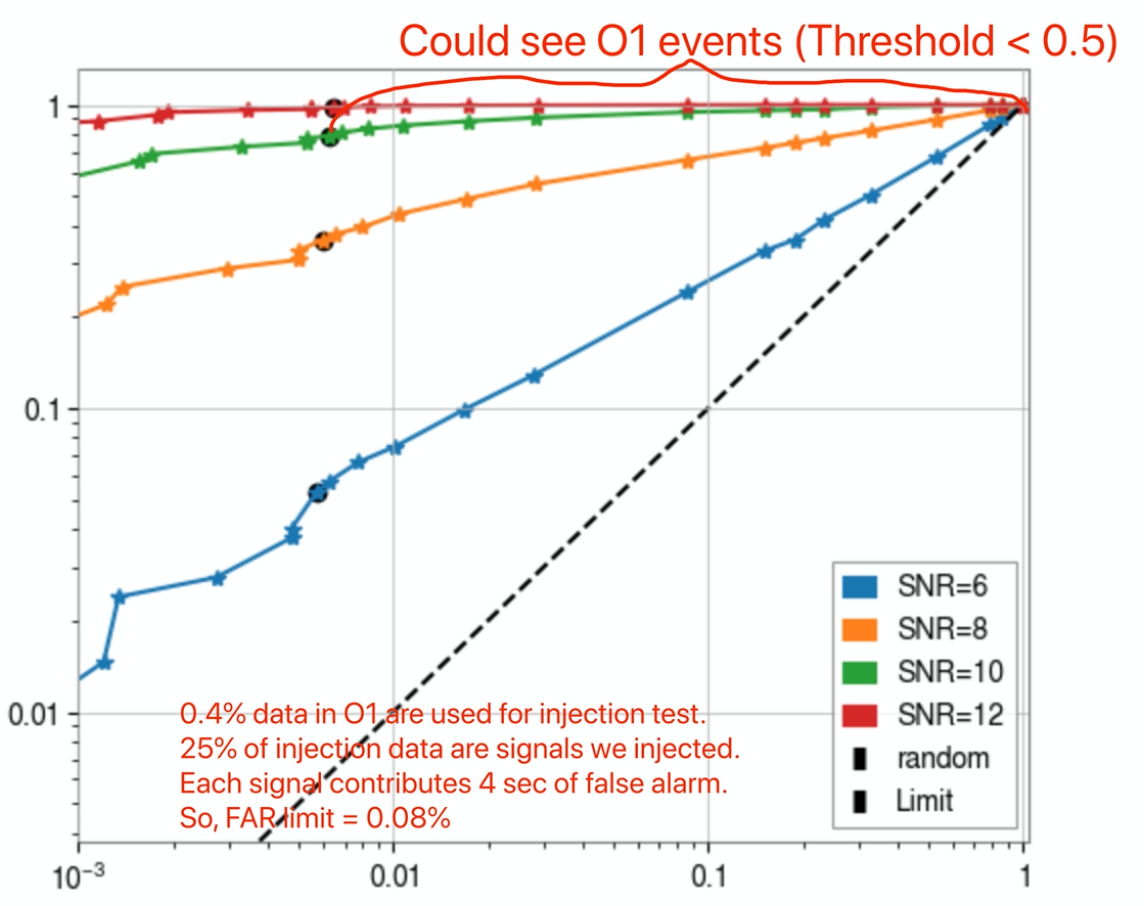

- Sensitivity estimation (ROC)

True Positive Rate

False Alarm Rate
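A minimal sketch of how the two ROC axes can be computed from model scores. It assumes the false alarm rate is taken as the fraction of noise-only inputs scored above the threshold; the scores below are made up for illustration.

```python
import numpy as np

def roc_curve(scores_signal, scores_noise, thresholds):
    """True positive rate and false alarm rate at each threshold.

    scores_signal: scores for inputs that contain a signal.
    scores_noise:  scores for noise-only inputs.
    """
    s = np.asarray(scores_signal)[None, :]
    n = np.asarray(scores_noise)[None, :]
    t = np.asarray(thresholds)[:, None]
    tpr = (s >= t).mean(axis=1)      # fraction of signals recovered
    far = (n >= t).mean(axis=1)      # fraction of noise samples wrongly flagged
    return tpr, far

scores_signal = [0.9, 0.8, 0.4, 0.95]
scores_noise = [0.1, 0.3, 0.6, 0.2]
tpr, far = roc_curve(scores_signal, scores_noise, thresholds=[0.0, 0.5, 1.0])
```

Sweeping the threshold from 0 to 1 traces the curve from the top-right corner (everything flagged) to the bottom-left (nothing flagged).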

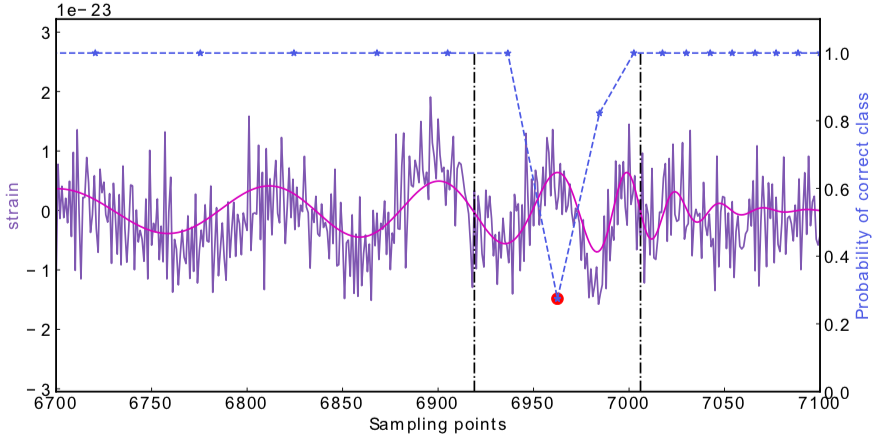

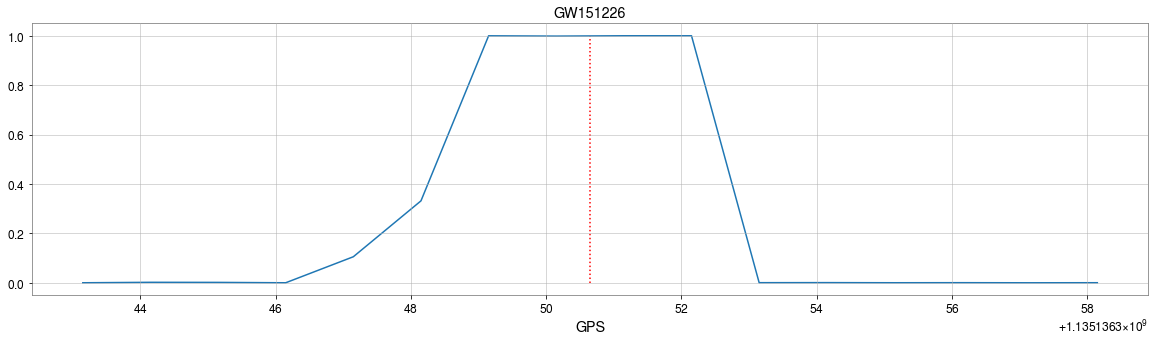

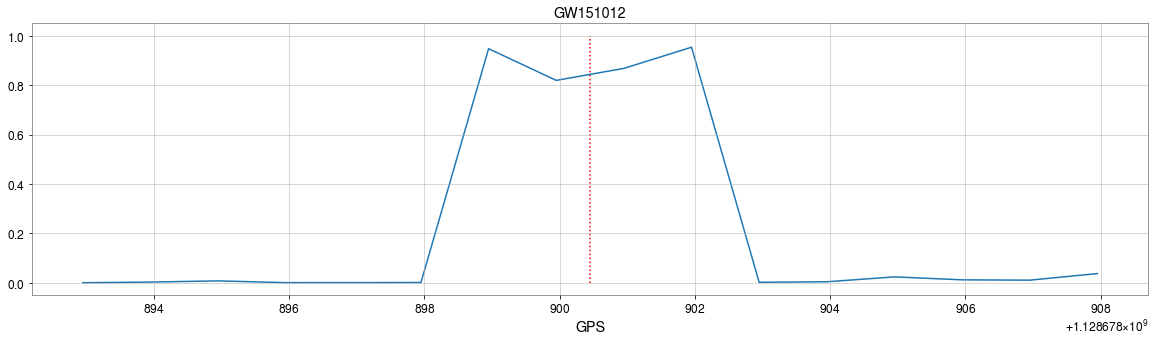

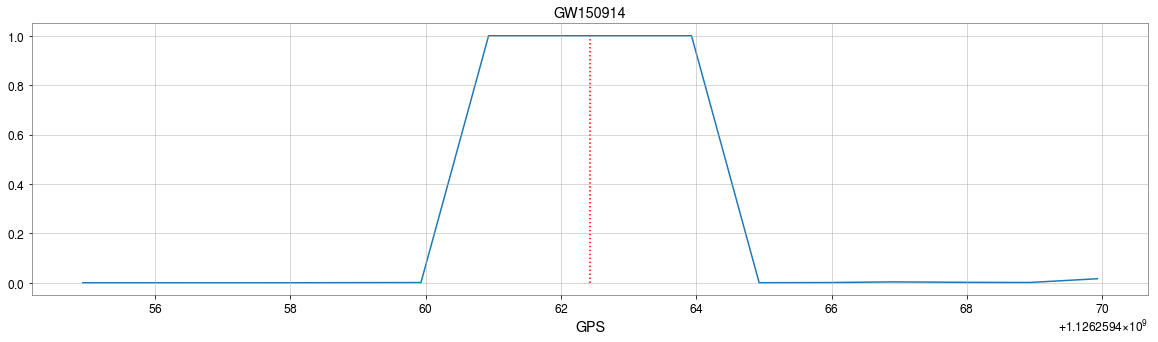

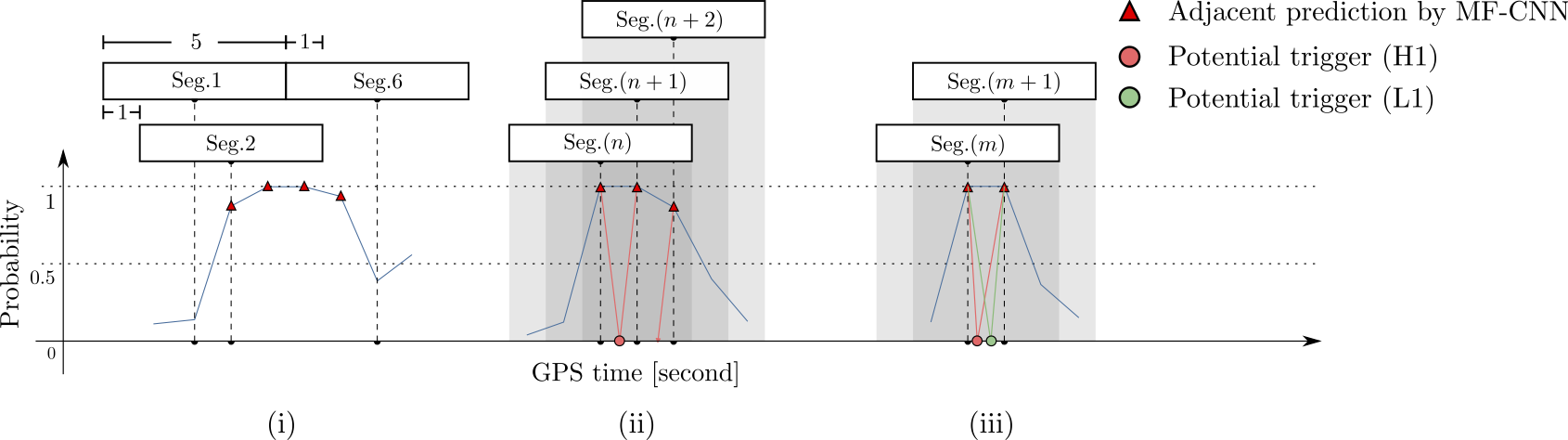

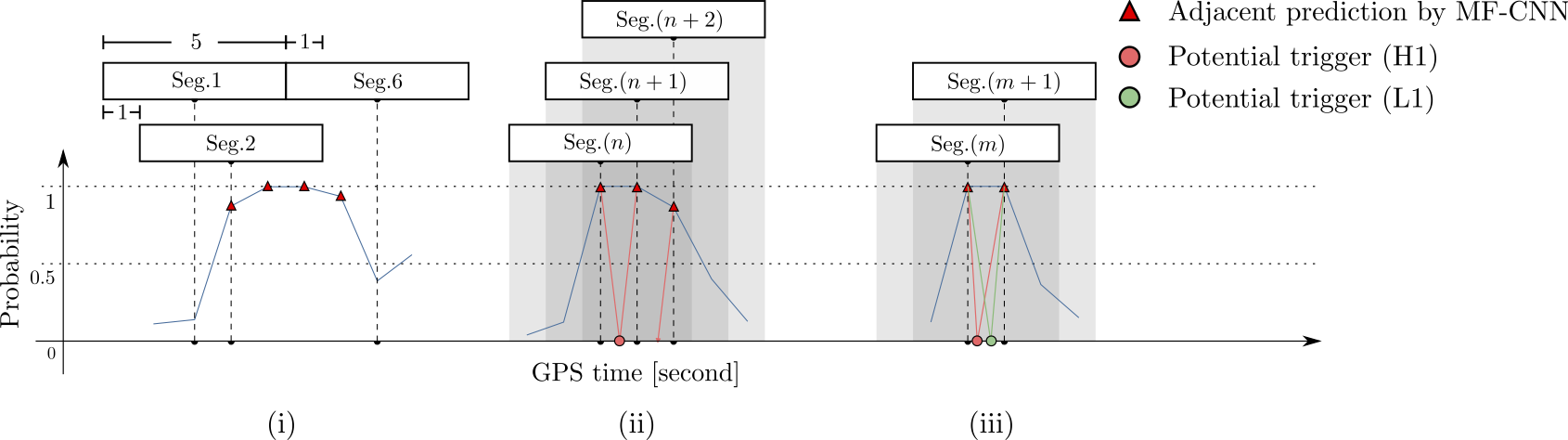

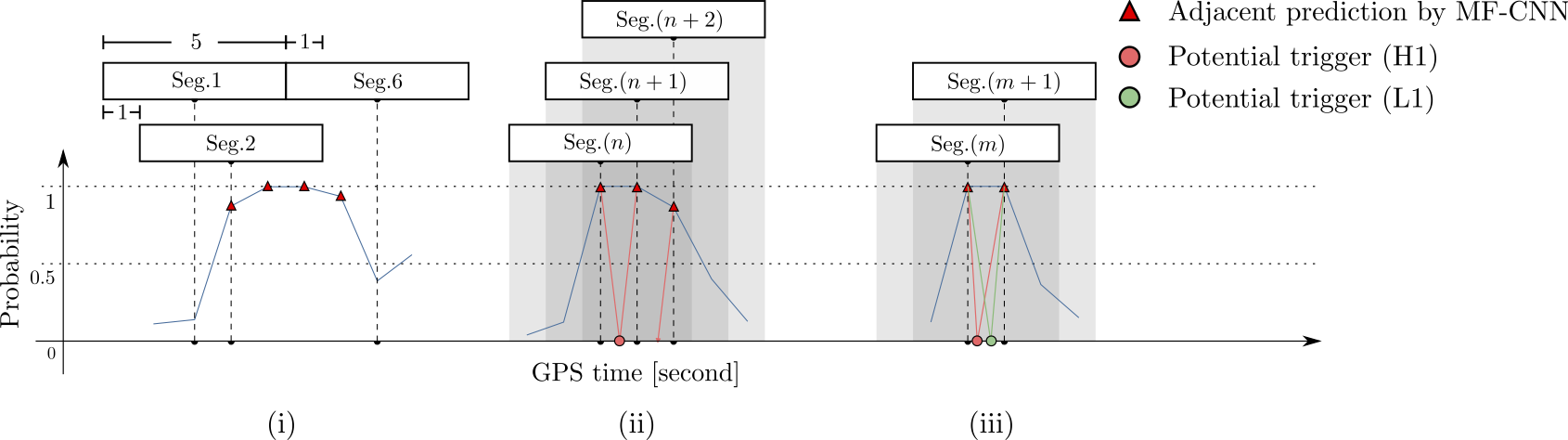

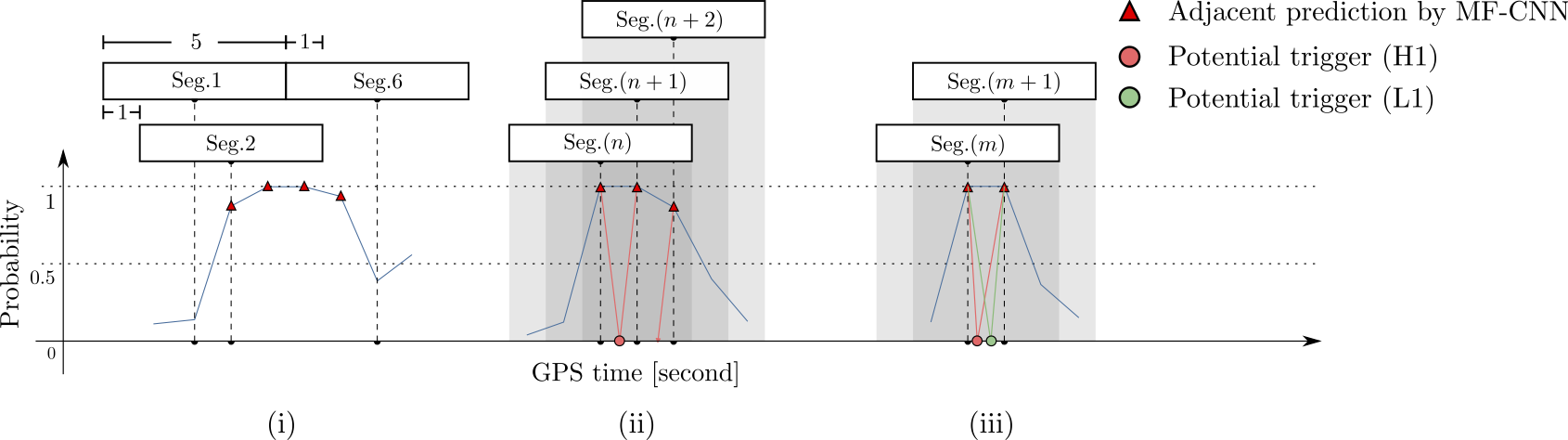

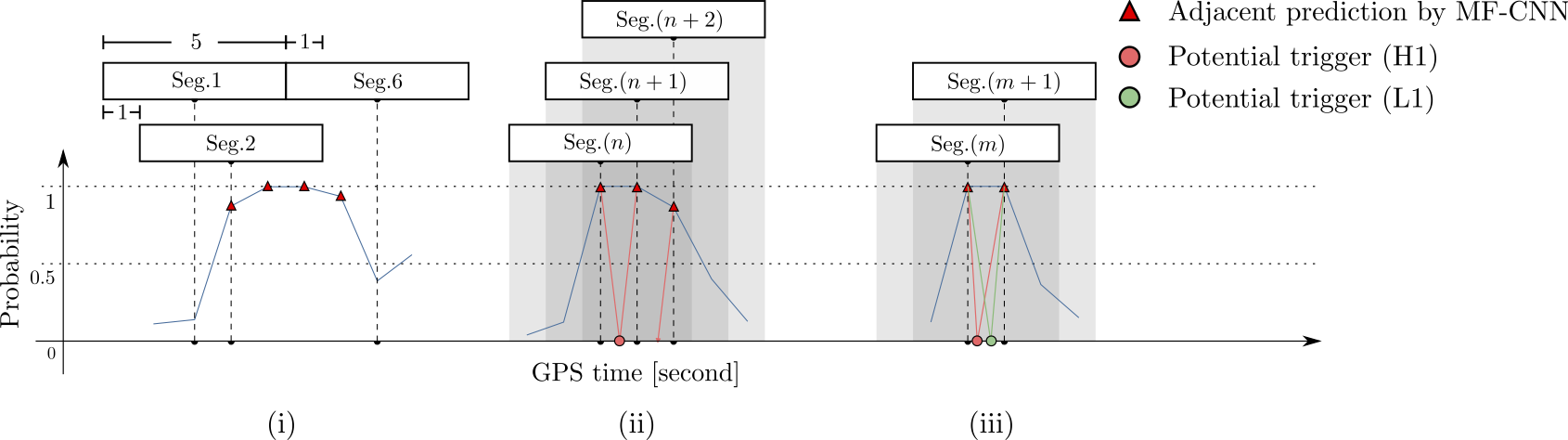

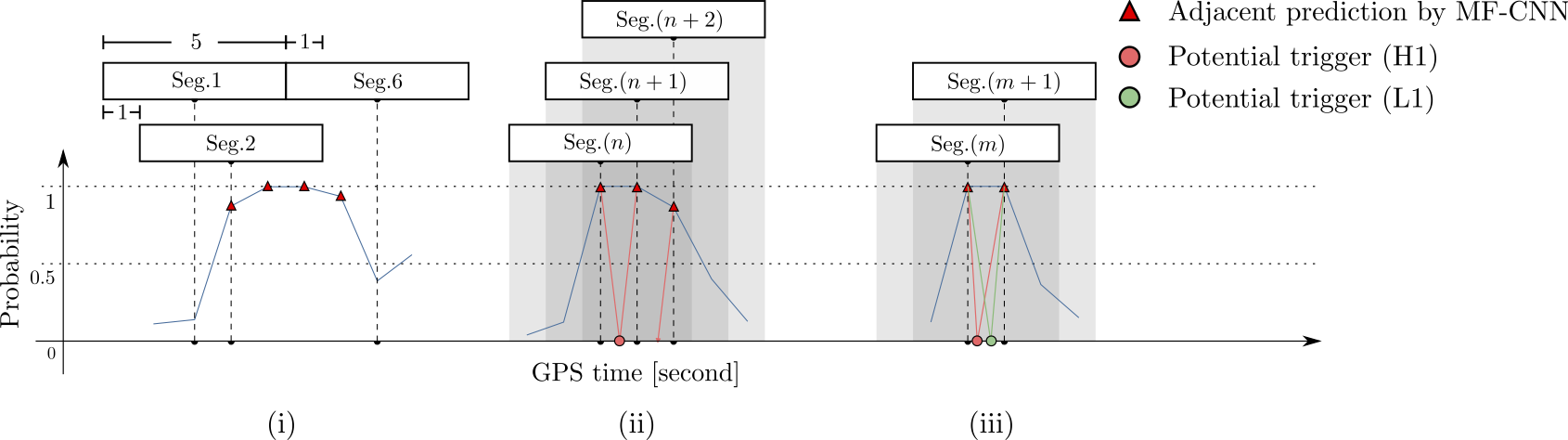

- Each 5-second segment is fed to our MFCNN as input, with a step size of 1 second.

- The model can scan the whole range of the input segment and output a probability score.

- In the ideal case, a GW signal hidden somewhere in the data should yield 5 adjacent predictions above the threshold.
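The window bookkeeping can be sketched as follows, using the 5 s window, 1 s step, and 4096 Hz sampling rate from the talk. The function names and the one-minute example are illustrative assumptions; the point is that any instant away from the edges is covered by exactly 5 overlapping windows.

```python
import numpy as np

FS = 4096                      # sampling rate in Hz
WINDOW_S, STEP_S = 5, 1        # window length and step size in seconds

def window_starts(n_samples, fs=FS, window_s=WINDOW_S, step_s=STEP_S):
    """Start indices (in samples) of all full windows."""
    return np.arange(0, n_samples - window_s * fs + 1, step_s * fs)

def windows_covering(t_event, starts, fs=FS, window_s=WINDOW_S):
    """Indices of the windows whose span [start, start + window) contains t_event (s)."""
    n = int(round(t_event * fs))
    return np.flatnonzero((starts <= n) & (n < starts + window_s * fs))

starts = window_starts(60 * FS)           # one minute of data
hits = windows_covering(30.3, starts)     # windows that see an event at t = 30.3 s
```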

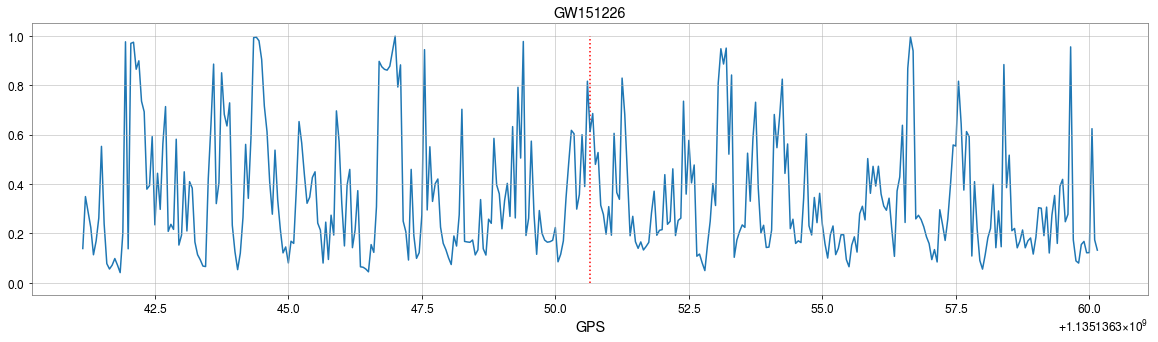

Search Results on the Real LIGO Recordings

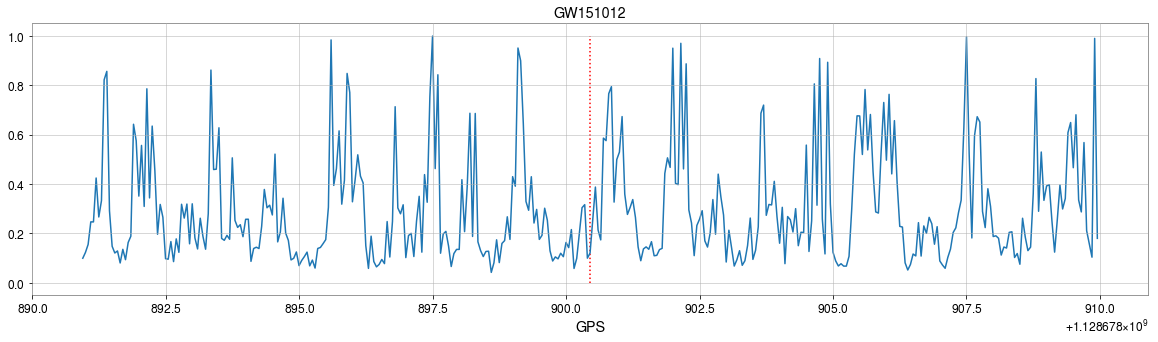

- Recovering three GW events in O1.

- Recovering all GW events in O2, including even the GW170817 event.

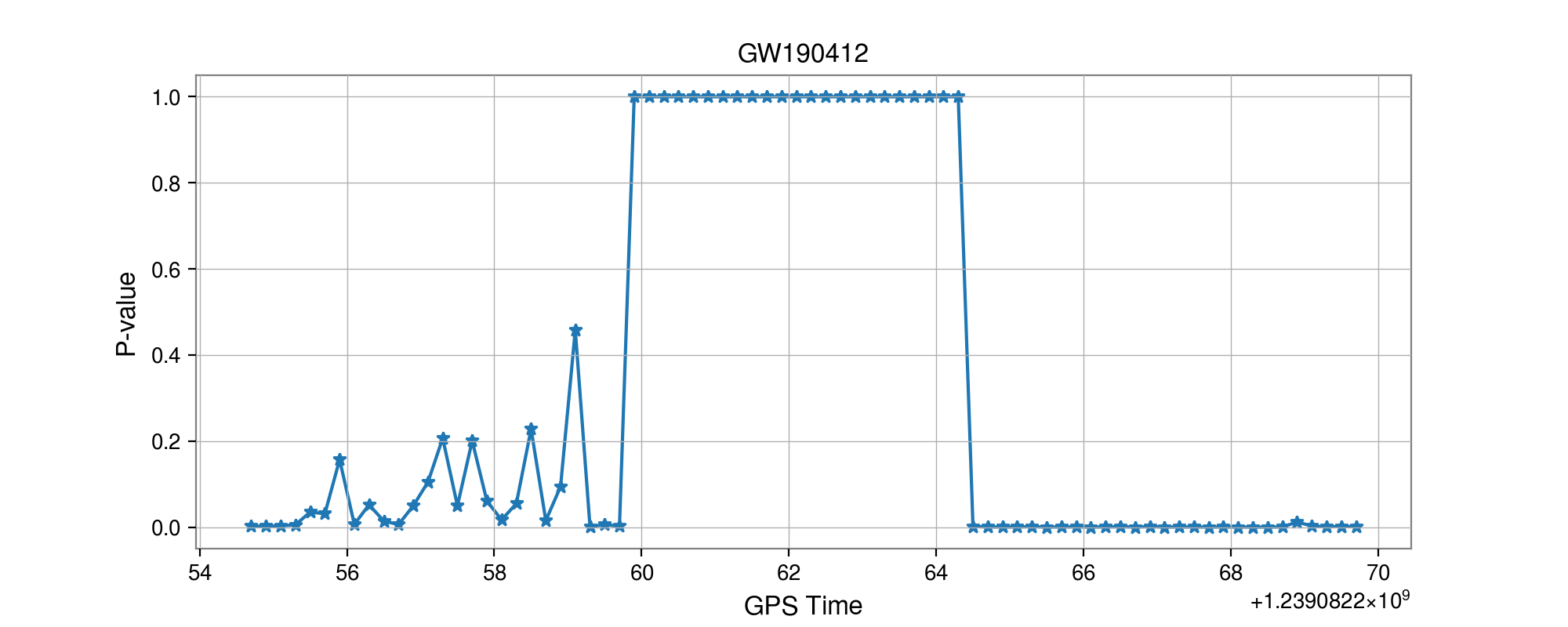

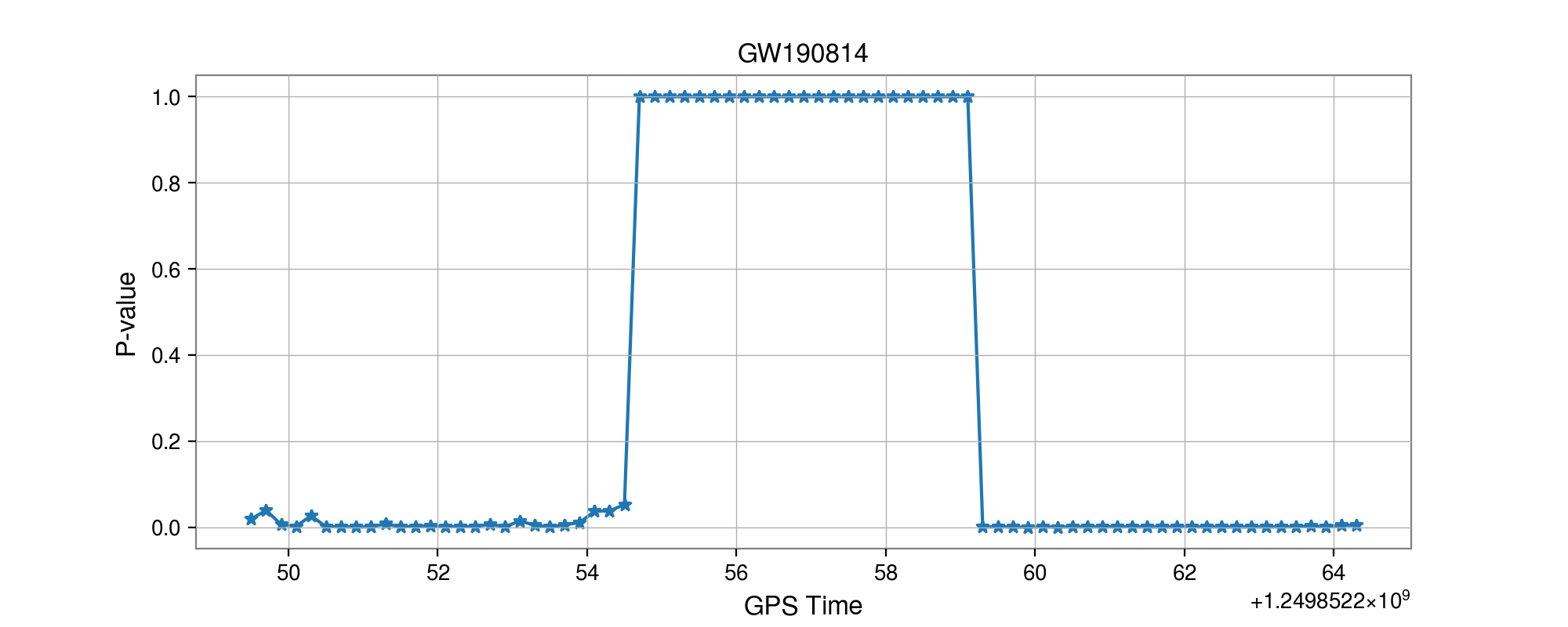

- Our MFCNN can also clearly mark the newly reported GW190412 and GW190814 events in O3a.

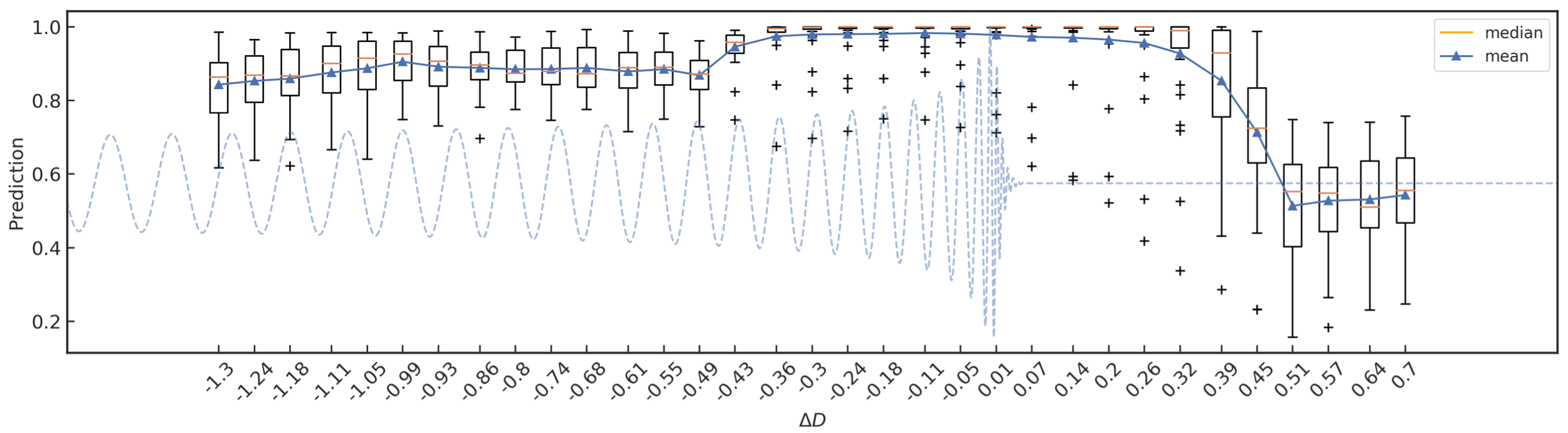

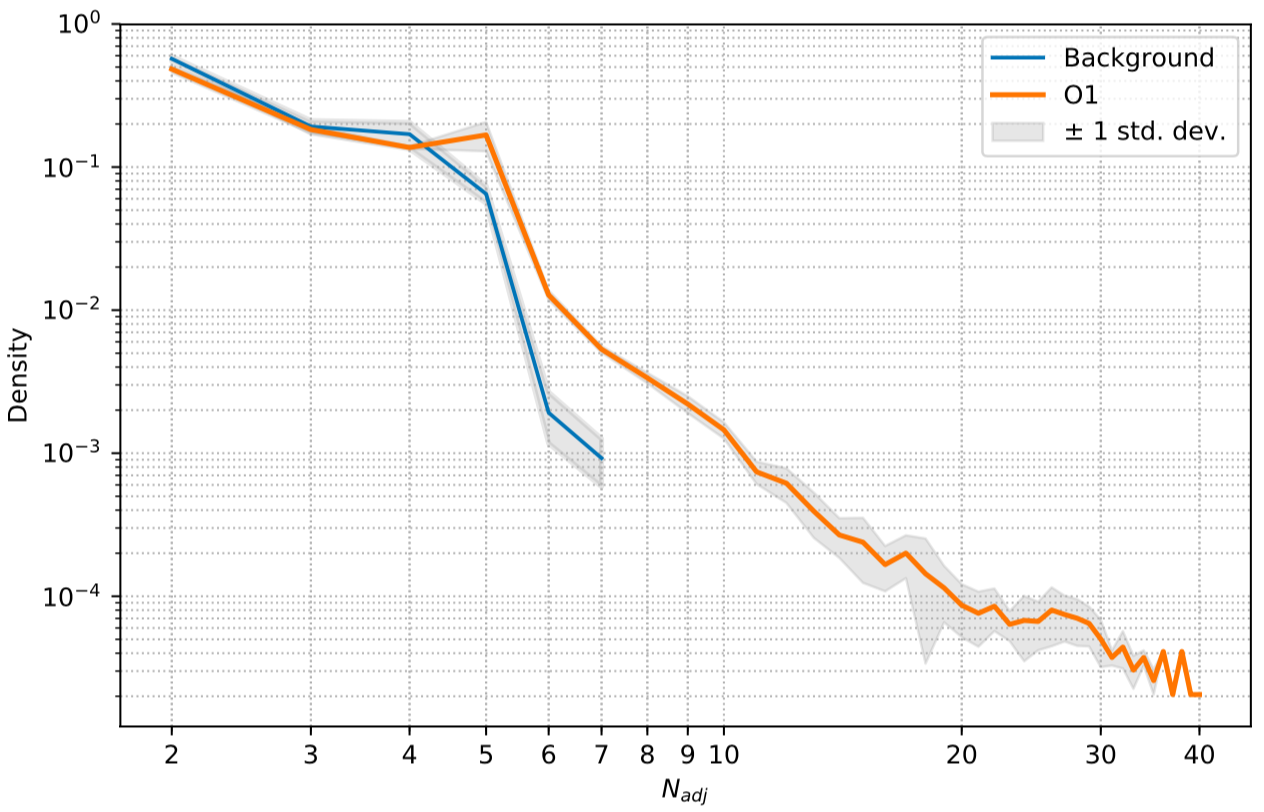

Statistical significance on O1

- Count a group of adjacent predictions as one "trigger block".

- For a pure (non-Gaussian) background, a monotonic trend should be observed.

- In the ideal case, a GW signal hidden somewhere in the data should yield 5 adjacent predictions above the threshold.

Number of adjacent predictions

a bump at 5 adjacent predictions
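Grouping adjacent predictions into trigger blocks is a run-length count on the thresholded scores. A minimal sketch; the scores and the 0.5 threshold are made up for illustration:

```python
import numpy as np
from collections import Counter

def trigger_blocks(scores, threshold):
    """Lengths of runs of consecutive predictions with score >= threshold."""
    above = np.concatenate(([False], np.asarray(scores) >= threshold, [False]))
    edges = np.flatnonzero(np.diff(above.astype(int)))   # run starts and ends, alternating
    return edges[1::2] - edges[0::2]

scores = [0.1, 0.9, 0.2, 0.8, 0.9, 0.95, 0.97, 0.9, 0.1, 0.7, 0.8]
lengths = trigger_blocks(scores, threshold=0.5)
histogram = Counter(lengths.tolist())      # number of blocks per block length
```

Here the blocks have lengths 1, 5, and 2. Histogramming block lengths over a whole observing run gives the distribution in which the bump at 5 adjacent predictions appears.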

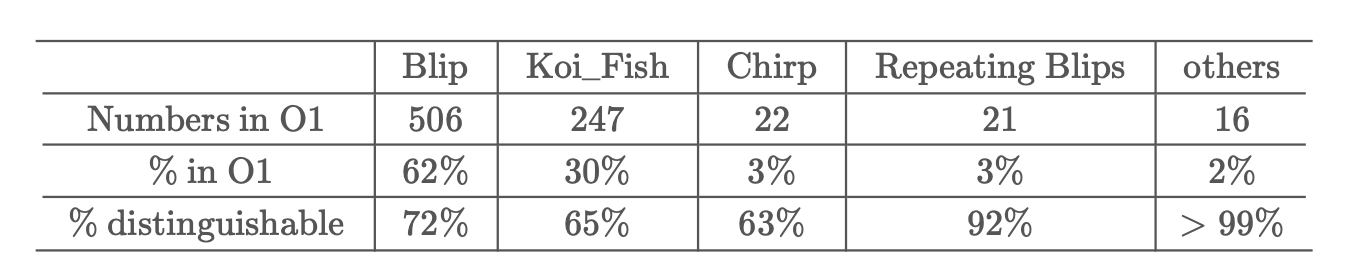

Glitches identification

- According to the Gravity Spy dataset, the O1 data contain 7368 glitches, of which 812 (about 11%) fall in our trigger set; that is, about 90% of known instrumental glitches are distinguishable.

Conclusion & Way Forward

- Some benefits of the MFCNN architecture:
  - Simple configuration for GW data generation and almost no data pre-processing.
  - It works on a non-stationary background.
  - Easy parallel deployment; multiple detectors can benefit a lot from this design.
  - Efficient searching with a fixed window.
- The main understanding of the algorithms:
  - GW templates are used as likely features for matching.
  - Generalization of both matched-filtering and neural networks.
  - Matched-filtering can be rewritten as a convolutional neural layer.
- Needs to be improved:
  - Higher sensitivity
  - Lower false alarm rate (appropriate metric for estimation)
  - For more GW sources.
- Looking forward:
  - Parameter estimation (the current “holy grail” of machine learning for GWs.)
  - GW denoising
  - "Statistical Learning" / "Theory of Machine Learning" /
  - ...

This slide: https://slides.com/iphysresearch/mf_dl

    for _ in range(num_of_audiences):
        print('Thank you for your attention!')