Supervised Open Information Extraction

Open Information Extraction (Open IE) systems extract tuples of natural language expressions that represent the basic propositions asserted by a sentence.

Usages: Open IE has been used for a wide variety of tasks, such as textual entailment, question answering, and knowledge base population.

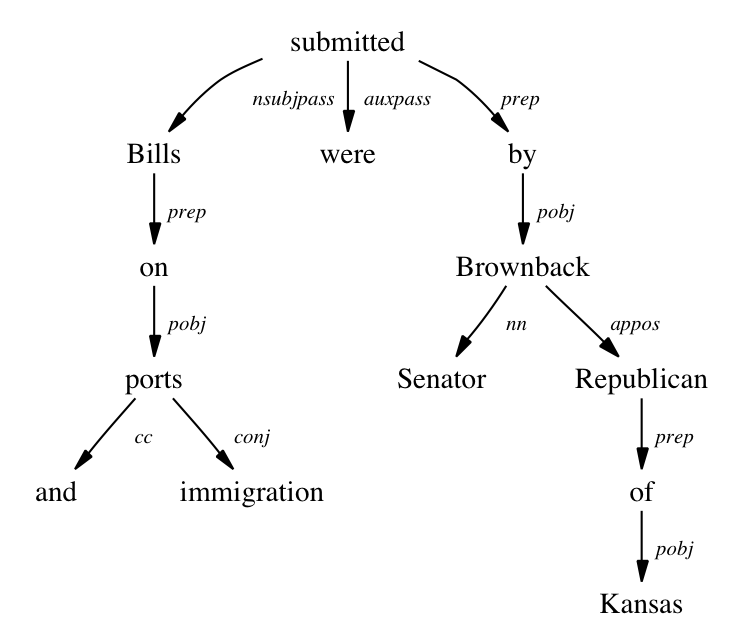

- Open IE has previously relied on semi-supervised approaches or rule-based algorithms. In this paper, the authors present new data and a supervised learning method for Open IE that improves performance.

- They extend QA-SRL techniques and apply them to the QAMR corpus.

Background:

Different Open IE systems and flavors:

Open IE has developed without a standard benchmark dataset, so various Open IE systems tackle different facets of the same task.

Open IE Corpora:

They create and make available a new Open IE training corpus, All Words Open IE (AW-OIE), derived from QAMR.

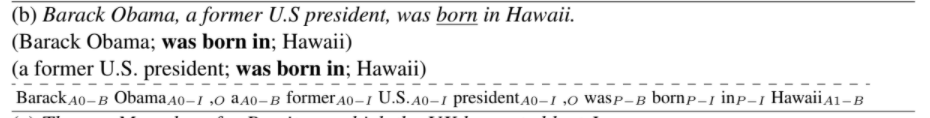

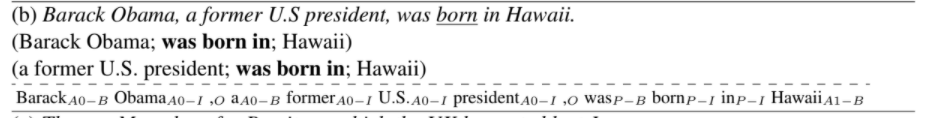

BIO Encoding:

Each tuple is encoded with respect to a single predicate, where argument labels indicate their position in the tuple.


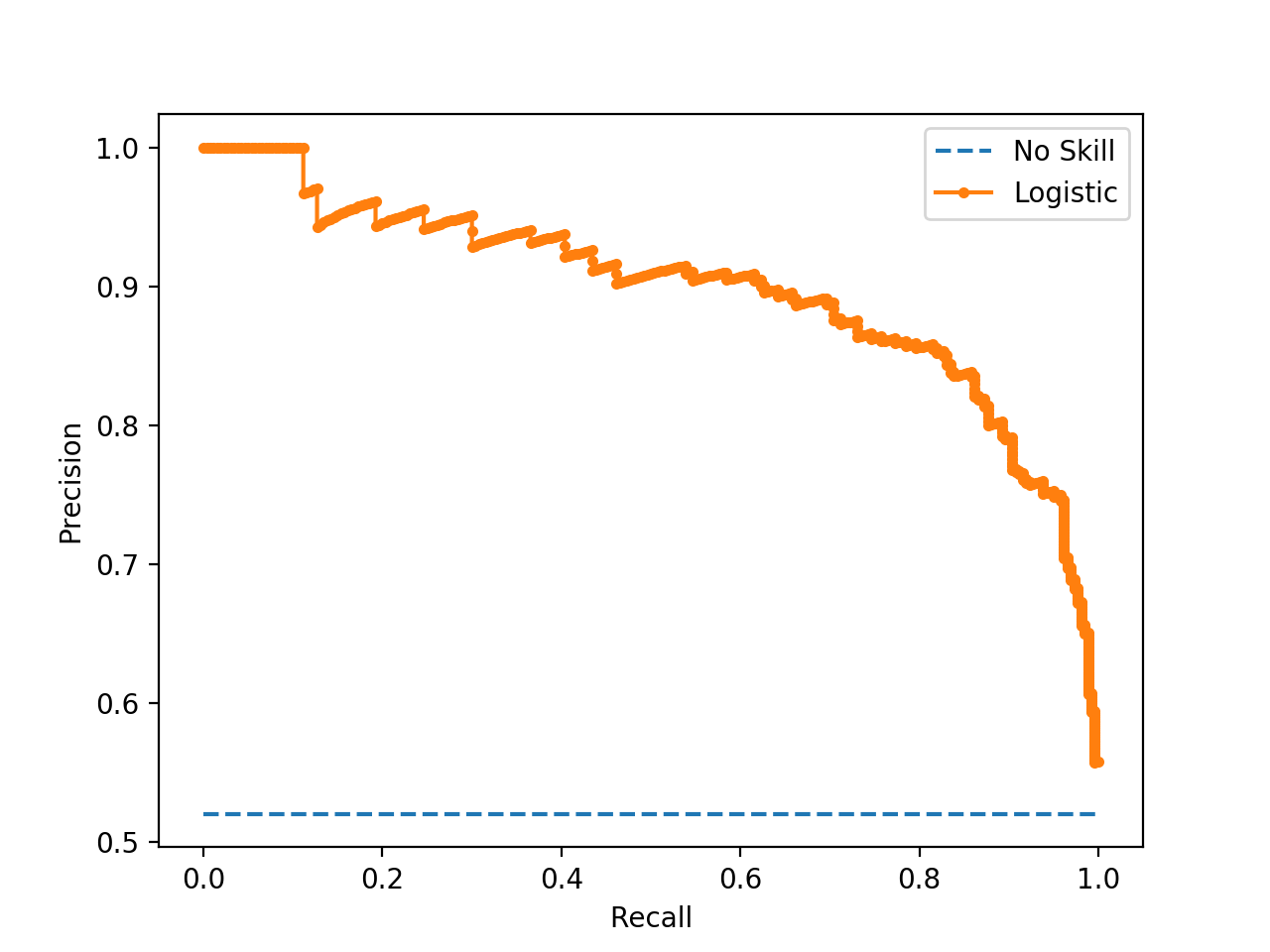
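A minimal Python sketch of this encoding, applied to an example sentence. It assumes spans are given as half-open token offsets and that labels take the form `P-B`, `A0-B`, `A0-I`, ..., `O`. The function name `bio_encode` and the exact label strings are illustrative assumptions, not the paper's code.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # half-open token range [start, end)


def bio_encode(num_tokens: int, predicate: Span, arguments: List[Span]) -> List[str]:
    """Encode one Open IE tuple as BIO tags relative to a single predicate.

    The predicate is labelled P; arguments are labelled A0, A1, ... by their
    position in the tuple; tokens outside the tuple are tagged O.
    """
    tags = ["O"] * num_tokens

    def mark(span: Span, label: str) -> None:
        start, end = span
        for i in range(start, end):
            if tags[i] != "O":
                raise ValueError(f"token {i} is already tagged {tags[i]}")
            # First token of a span gets -B (begin), the rest get -I (inside).
            tags[i] = f"{label}-B" if i == start else f"{label}-I"

    mark(predicate, "P")
    for position, span in enumerate(arguments):
        mark(span, f"A{position}")
    return tags


tokens = "The sheriff standing against the wall spoke in a very soft voice".split()
tags = bio_encode(len(tokens), predicate=(6, 7), arguments=[(0, 6), (7, 12)])
for token, tag in zip(tokens, tags):
    print(f"{token}\t{tag}")
```

Because every tuple is encoded relative to one predicate, a sentence with several predicates yields several tag sequences, one per predicate.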

Evaluation

Metrics

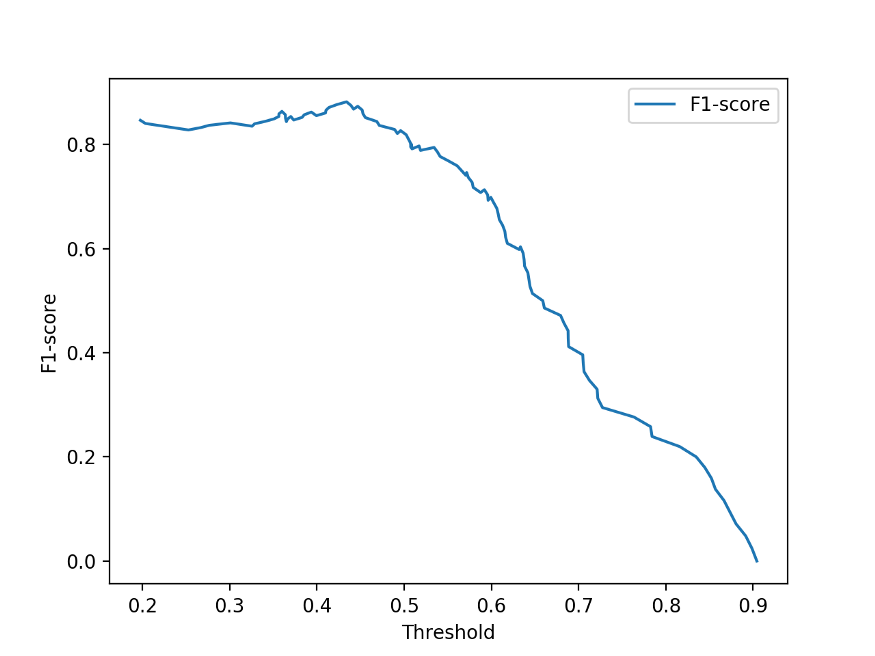

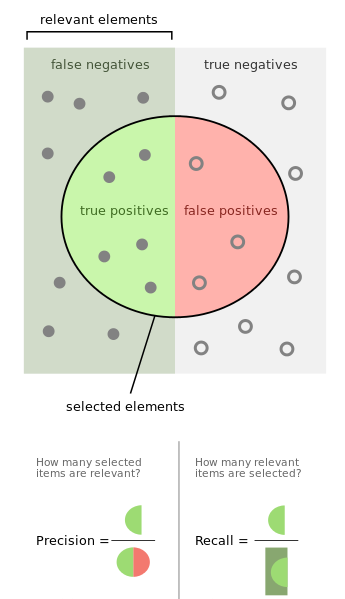

- Precision-recall (PR) curve

- Area under the PR curve (AUC)

- F-measure
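The PR curve comes from sweeping a confidence threshold over the scored extractions. The sketch below assumes each prediction has already been judged correct or not by a matching function, with each correct prediction matching a distinct gold tuple, and integrates the curve with the trapezoidal rule. The official benchmark scripts may interpolate differently; `pr_curve`, `pr_auc` and the toy data are hypothetical.

```python
from typing import List, Tuple


def pr_curve(scored: List[Tuple[float, bool]], num_gold: int) -> List[Tuple[float, float]]:
    """Sweep a confidence threshold from high to low and return (recall, precision) points.

    `scored` has one (confidence, is_correct) pair per predicted extraction.
    """
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    points: List[Tuple[float, float]] = []
    true_pos = 0
    for i, (confidence, correct) in enumerate(ranked):
        true_pos += int(correct)
        # Emit a point only after every extraction sharing this confidence is included.
        if i + 1 == len(ranked) or ranked[i + 1][0] != confidence:
            points.append((true_pos / num_gold, true_pos / (i + 1)))
    return points


def pr_auc(points: List[Tuple[float, float]]) -> float:
    """Trapezoidal area under the PR curve, integrated over recall from 0."""
    if not points:
        return 0.0
    area = 0.0
    prev_recall, prev_precision = 0.0, points[0][1]  # extend the first precision back to recall 0
    for recall, precision in points:
        area += (recall - prev_recall) * (precision + prev_precision) / 2
        prev_recall, prev_precision = recall, precision
    return area


# Hypothetical toy data: four scored extractions, four gold tuples.
scored = [(0.9, True), (0.8, True), (0.7, False), (0.6, True)]
points = pr_curve(scored, num_gold=4)
print(points)
print(round(pr_auc(points), 4))  # 0.6771
```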

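$$\text{Precision} = \frac{TP}{TP + FP}$$

$$\text{Recall} = \frac{TP}{TP + FN}$$

$$F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

where TP, FP, and FN are the numbers of true positives, false positives, and false negatives.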
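The three formulas translate directly into code. This is a sketch with hypothetical counts (not results from the paper); the zero-denominator guards are a design choice of this example.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall and F1 from raw counts, guarding against division by zero."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # Note the parentheses: the denominator is (precision + recall).
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical counts, not results from the paper.
p, r, f1 = precision_recall_f1(tp=30, fp=10, fn=20)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")  # P=0.750 R=0.600 F1=0.667
```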

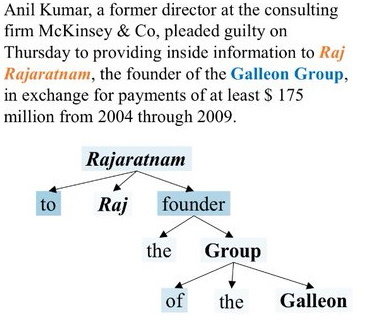

Example sentence: "The sheriff standing against the wall spoke in a very soft voice."

Matching function: compares two extractions of this sentence, e.g.

(The sheriff; spoke; in a soft voice)

(The sheriff standing against the wall; spoke; in a very soft voice)
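The slide does not spell out the matching criterion, so the sketch below uses a hypothetical lenient stand-in: two tuples match if they have the same number of elements and each element pair shares at least one lowercased content word. The stopword list, the function names, and treating the shorter tuple as the candidate are all assumptions for illustration; the OIE2016 benchmark defines its own matching function.

```python
from typing import Sequence, Set

# Hypothetical, deliberately tiny stopword list used only for this illustration.
STOPWORDS = {"a", "an", "the", "in", "of", "on", "at", "to"}


def content_tokens(text: str) -> Set[str]:
    """Lowercased tokens minus stopwords (falls back to all tokens if nothing is left)."""
    tokens = {token.lower() for token in text.split()}
    return (tokens - STOPWORDS) or tokens


def lenient_match(candidate: Sequence[str], reference: Sequence[str]) -> bool:
    """True if the tuples have the same arity and every element pair shares a content word."""
    if len(candidate) != len(reference):
        return False
    return all(content_tokens(c) & content_tokens(r) for c, r in zip(candidate, reference))


candidate = ("The sheriff", "spoke", "in a soft voice")
reference = ("The sheriff standing against the wall", "spoke", "in a very soft voice")
print(lenient_match(candidate, reference))  # True
```

Under this lenient criterion the two example tuples match even though their argument spans differ, which shows how much the choice of matching function affects the reported scores.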

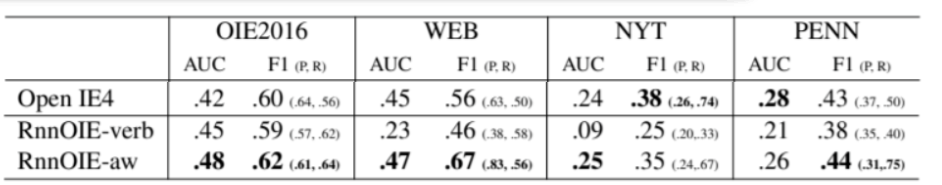

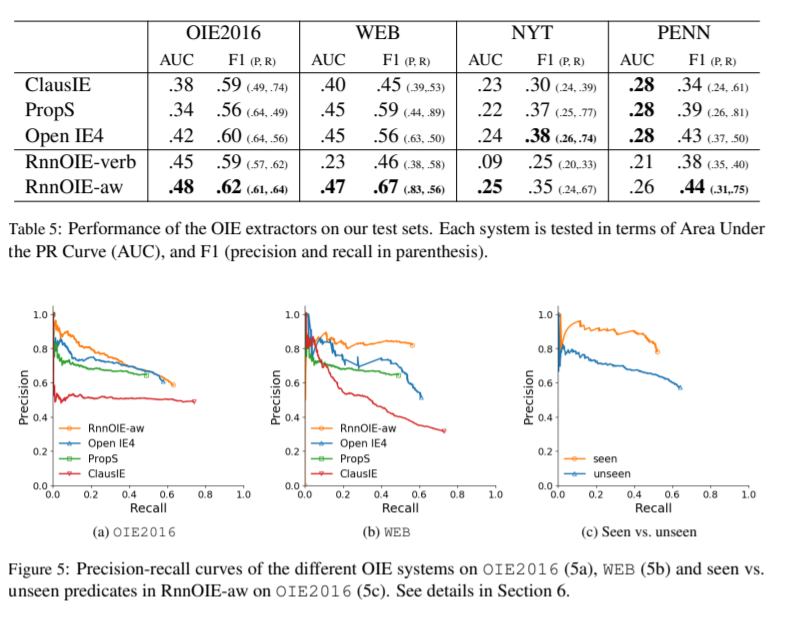

| OIE2016 | WEB, NYT, PENN |

|---|---|

| Penn Treebank gold syntactic trees | predicted trees |

Evaluation result-1

Rank 1 1 1 1 1 3 2 1

Furthermore, on all of the test sets, extending the training set significantly improves the model's performance.

Test set variants: only verb predicates | including nominalizations

Evaluation result-2

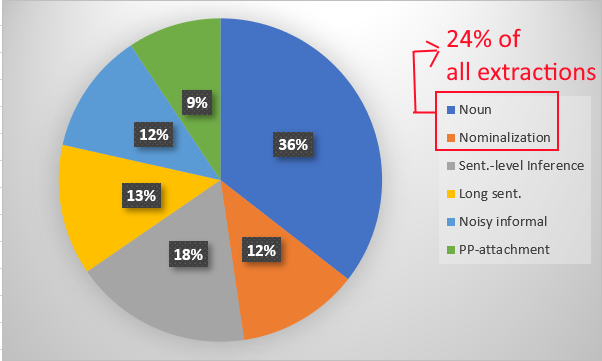

Performance Analysis - unseen predicates

The unseen part contains 145 unique predicate lemmas in 148 extractions: 24% of the 590 unique predicate lemmas and 7% of the 1993 total extractions.
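A sketch of how these two ratios can be computed, assuming "unseen" means a test predicate lemma that never occurs in the training data. The function name and toy lemmas are hypothetical; the last line just recomputes the slide's ratios from its own counts.

```python
from typing import Iterable, Tuple


def unseen_predicate_stats(train_lemmas: Iterable[str],
                           test_lemmas: Iterable[str]) -> Tuple[int, int, float, float]:
    """Count test predicates whose lemma never appears in training.

    `test_lemmas` holds one predicate lemma per test extraction. Returns
    (unique unseen lemmas, unseen extractions, % of unique lemmas, % of extractions).
    """
    seen = set(train_lemmas)
    test = list(test_lemmas)
    unseen = [lemma for lemma in test if lemma not in seen]
    unique_test, unique_unseen = set(test), set(unseen)
    return (len(unique_unseen), len(unseen),
            100 * len(unique_unseen) / len(unique_test),
            100 * len(unseen) / len(test))


# Hypothetical toy lemmas.
print(unseen_predicate_stats(["say", "go"], ["say", "eat", "eat", "run"]))

# The ratios behind the slide's figures.
print(f"{100 * 145 / 590:.1f}% of unique lemmas, {100 * 148 / 1993:.1f}% of extractions")  # 24.6% of unique lemmas, 7.4% of extractions
```

The exact ratios are about 24.6% and 7.4%, which the slide reports as 24% and 7%.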

RnnOIE-aw

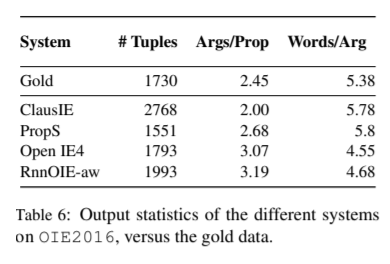

Performance Analysis - Argument length and number

Performance Analysis - Runtime analysis

| | ClausIE | PropS | Open IE4 | RnnOIE |
|---|---|---|---|---|
| Xeon 2.3GHz CPU (sentences/sec) | 4.07 | 4.59 | 15.38 | 13.51 |
| Efficiency (relative to Open IE4) | 26.5% | 29.8% | 100% | 87.8% |

Dataset: 3200 sentences from OIE2016

On a GPU, RnnOIE runs 11.047 times faster than on the CPU (149.25 sentences/sec).
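The efficiency row and the GPU speedup follow from the throughput numbers. The sketch below assumes efficiency means throughput relative to the fastest CPU system (Open IE4), an interpretation that reproduces every value in the table. The variable names are illustrative.

```python
# Throughput numbers copied from the runtime table (sentences/sec on a Xeon 2.3GHz CPU).
CPU_THROUGHPUT = {"ClausIE": 4.07, "PropS": 4.59, "Open IE4": 15.38, "RnnOIE": 13.51}
RNNOIE_GPU_THROUGHPUT = 149.25  # sentences/sec
NUM_SENTENCES = 3200  # OIE2016 sentences used for timing

# Assumed definition: efficiency = throughput relative to the fastest CPU system.
fastest = max(CPU_THROUGHPUT.values())
efficiency = {name: round(100 * rate / fastest, 1) for name, rate in CPU_THROUGHPUT.items()}
print(efficiency)  # {'ClausIE': 26.5, 'PropS': 29.8, 'Open IE4': 100.0, 'RnnOIE': 87.8}

speedup = RNNOIE_GPU_THROUGHPUT / CPU_THROUGHPUT["RnnOIE"]
print(f"GPU speedup: {speedup:.3f}x")  # GPU speedup: 11.047x

# Wall-clock time to process the whole 3200-sentence set.
for device, rate in [("CPU", CPU_THROUGHPUT["RnnOIE"]), ("GPU", RNNOIE_GPU_THROUGHPUT)]:
    print(f"RnnOIE on {device}: {NUM_SENTENCES / rate:.1f} s")
```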

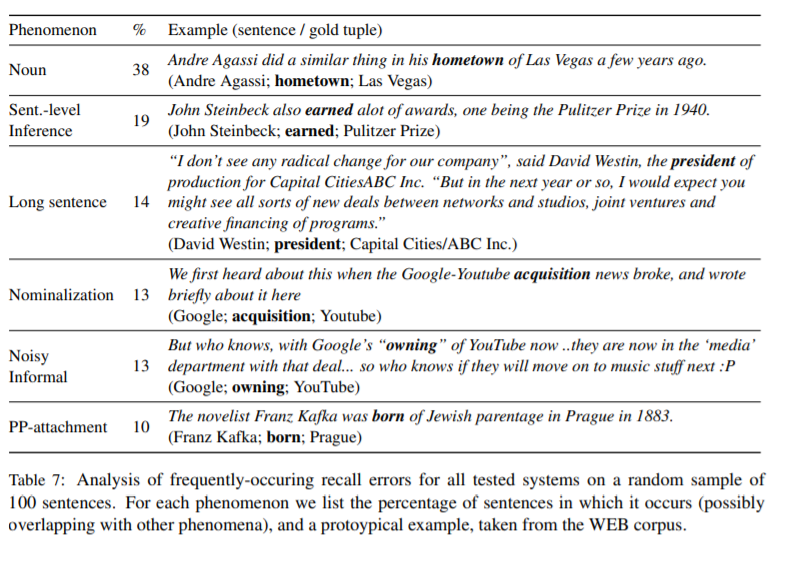

Performance Analysis - Error analysis

A random sample of 100 recall errors:

- Sentences longer than 40 words (average: 29.4 words/sentence)

Conclusion

- Open IE formulated as a sequence tagging problem, using a BIO encoding
- A bi-LSTM tagger that produces a confidence score for each extraction
- Training data: OIE2016, extended with QAMR through the QA-SRL paradigm
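The sketch below reverses the tagging step. It turns a predicted BIO tag sequence back into a tuple and attaches a confidence score. It assumes each label forms one contiguous span. It also assumes the score is the product of the tagger's probabilities for the tuple's non-O tags. That scoring rule, the function name and the probabilities are illustrative assumptions, not the paper's stated formula.

```python
import math
from typing import Dict, List, Tuple


def bio_decode(tokens: List[str], tags: List[str],
               probs: List[float]) -> Tuple[str, List[str], float]:
    """Rebuild (predicate, [arguments]) from BIO tags and score the extraction.

    Assumes each label (P, A0, A1, ...) forms one contiguous span, and scores the
    extraction as the product of the probabilities of its non-O tags.
    """
    spans: Dict[str, List[str]] = {}
    for token, tag in zip(tokens, tags):
        if tag != "O":
            label = tag.rsplit("-", 1)[0]  # "A1-I" -> "A1"
            spans.setdefault(label, []).append(token)
    predicate = " ".join(spans.pop("P", []))
    # Order arguments by their index: A0, A1, A2, ...
    arguments = [" ".join(spans[name]) for name in sorted(spans, key=lambda name: int(name[1:]))]
    confidence = math.prod(p for tag, p in zip(tags, probs) if tag != "O")
    return predicate, arguments, confidence


# Hypothetical tagger output for a short sentence.
tokens = "The sheriff spoke softly".split()
tags = ["A0-B", "A0-I", "P-B", "A1-B"]
probs = [0.9, 0.8, 0.95, 0.5]
print(bio_decode(tokens, tags, probs))
```

A per-extraction score like this is what makes the threshold sweep behind a PR curve possible.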

Pros and Cons

What's unique and impressive

- A supervised system

- Provides confidence scores for tuning the precision-recall trade-off

- The system performs well compared to other Open IE systems
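One way such confidence scores can be used to tune the trade-off is to pick the lowest threshold that still meets a target precision. This maximizes recall under that constraint. `pick_threshold` and the toy data are hypothetical, and the paper does not prescribe this procedure.

```python
from typing import List, Optional, Tuple


def pick_threshold(scored: List[Tuple[float, bool]], num_gold: int,
                   min_precision: float) -> Optional[Tuple[float, float, float]]:
    """Return (threshold, precision, recall) for the lowest confidence threshold
    whose precision is at least `min_precision`, or None if no threshold qualifies.

    Lowering the threshold never reduces recall, so the lowest qualifying
    threshold gives the highest recall under the precision constraint.
    """
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    best = None
    true_pos = 0
    for k, (confidence, correct) in enumerate(ranked, start=1):
        true_pos += int(correct)
        # Only evaluate thresholds that include all extractions tied at this confidence.
        at_tie_boundary = k == len(ranked) or ranked[k][0] != confidence
        if at_tie_boundary and true_pos / k >= min_precision:
            best = (confidence, true_pos / k, true_pos / num_gold)
    return best


scored = [(0.9, True), (0.8, True), (0.7, False), (0.6, True), (0.5, False)]
print(pick_threshold(scored, num_gold=4, min_precision=0.75))  # (0.6, 0.75, 0.75)
```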

What needs to be explained further

- Runtime analysis: GPU vs. CPU
