TensorFlow

A Brief Introduction

History

Basics+Demo

Next

November 2015

Google releases an open source version to the public

Designed with massive neural nets in mind

November 2015

I start geeking out about machine learning after reading a blog post on recurrent neural networks.

I have no idea how any of this works, but it's exciting!

December 2015

I still don't even know which questions to ask

Data Science guild is forming

Literally Today

I know a few things about TensorFlow

I'll share what I know and hand-wave the rest

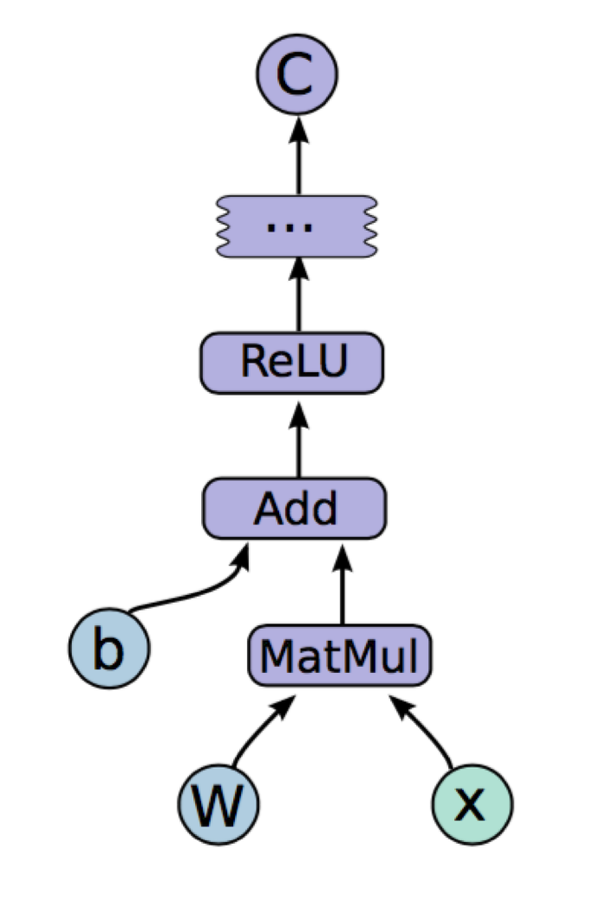

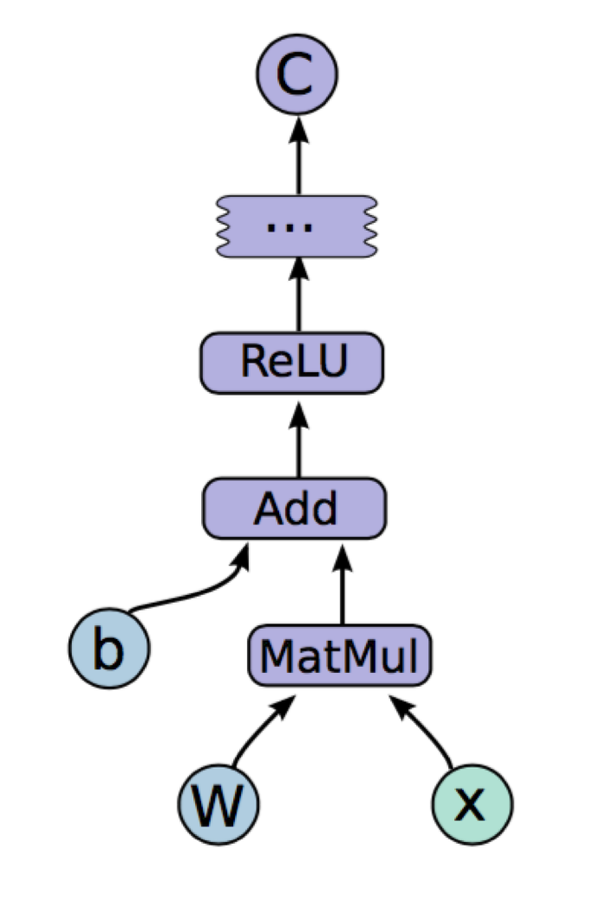

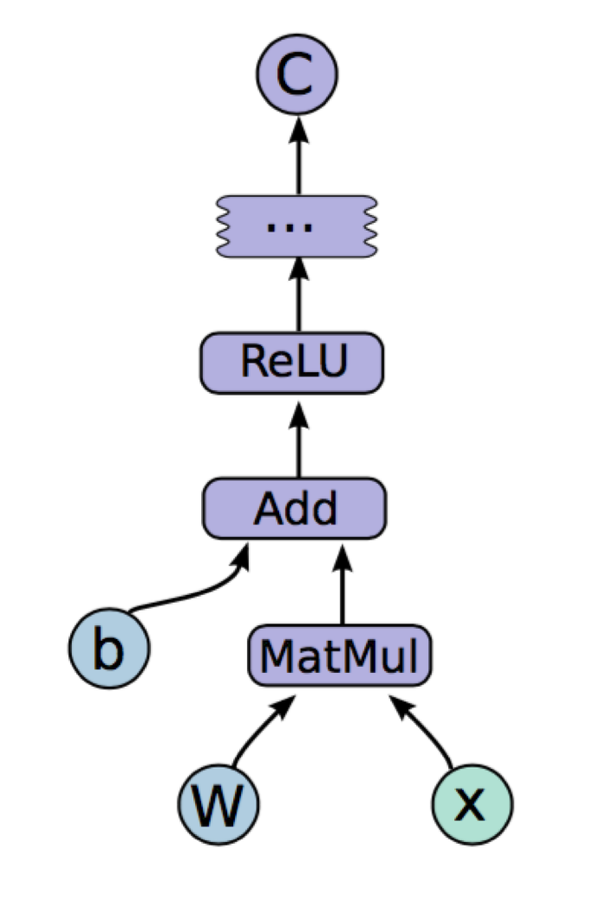

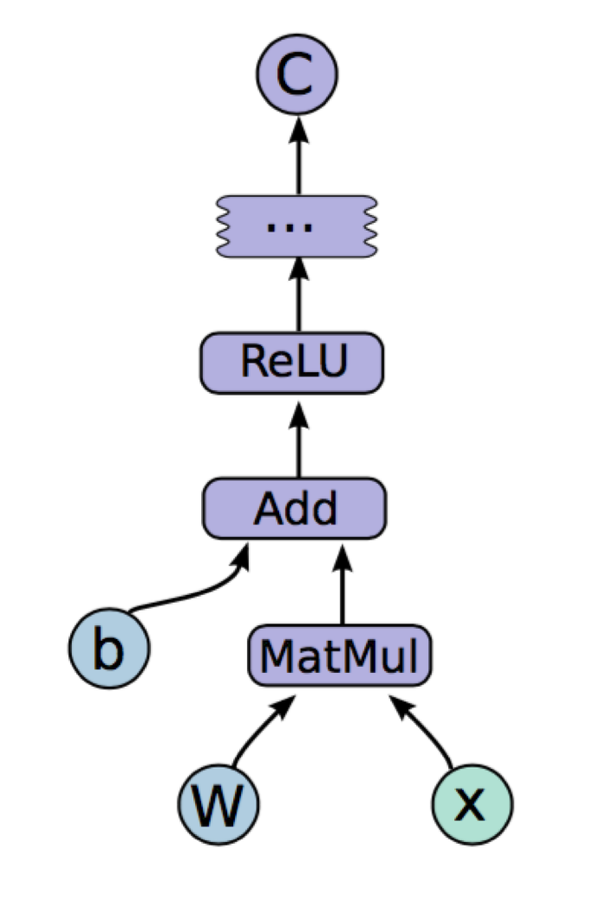

How It Works™

Models are constructed as computational graphs

Nodes on the graph are "Ops"

Edges represent Tensors (i.e. typed multi-dimensional arrays)

A session manages the environment for executing ops in a graph

Specifies which device executes part or all of a graph

Each session has one graph

Each graph may be run in multiple sessions

Building a Graph

Done in some "front-end" language

Python

C++ (Yeah, right)

Running a Graph

Model inputs typically "fed" in via placeholder ops

Model outputs specified by "fetching" certain ops

Trained values are stored as Variable ops, which must be initialized before execution
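To make the build-then-run split concrete, here is a toy sketch in plain Python. It is not the TensorFlow API: the `Op`, `Variable`, `placeholder`, and `Session` names below are hypothetical stand-ins written for this illustration, and they only mimic the ideas of feeding placeholders, fetching an op, and initializing variables inside a session.

```python
import operator


class Op:
    """A node in the toy graph; its inputs are the edges that carry values."""

    def __init__(self, name, fn=None, inputs=()):
        self.name = name
        self.fn = fn          # None means the value must come from outside
        self.inputs = inputs


def placeholder(name):
    """An op with no function; its value is fed in at run time."""
    return Op(name)


class Variable(Op):
    """An op whose value lives in a session and must be initialized first."""

    def __init__(self, name, initial_value):
        super().__init__(name)
        self.initial_value = initial_value


class Session:
    """Holds variable state and evaluates the ops needed for a fetch."""

    def __init__(self):
        self.variable_values = {}

    def initialize(self, *variables):
        for v in variables:
            self.variable_values[v] = v.initial_value

    def run(self, fetch, feed=None):
        return self._eval(fetch, feed or {})

    def _eval(self, op, feed):
        if op in feed:
            return feed[op]
        if isinstance(op, Variable):
            if op not in self.variable_values:
                raise RuntimeError(f"variable {op.name!r} is not initialized")
            return self.variable_values[op]
        if op.fn is None:
            raise ValueError(f"placeholder {op.name!r} was not fed")
        return op.fn(*[self._eval(i, feed) for i in op.inputs])


# Build the graph: y = w * x + b. Nothing is computed yet.
x = placeholder("x")
w = Variable("w", 2.0)
b = Variable("b", 0.5)
y = Op("add", operator.add, (Op("mul", operator.mul, (w, x)), b))

# Run the graph: initialize variables, feed x, fetch y.
sess = Session()
sess.initialize(w, b)
result = sess.run(y, feed={x: 3.0})
print(result)  # 6.5
```

Running the same graph in a second `Session()` without calling `initialize` raises an error in this sketch, which mirrors the point that variables must be initialized before execution.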

Demonstration

MNIST

Dataset containing images of handwritten digits. The classification problem of converting images to digits is a common benchmark for machine learning models.

Softmax Regression

1. Aggregate evidence in support of each class ("digit")

2. Convert the evidence into a likelihood for each given class

3. Train a set of weights to optimize the output probabilities
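The three steps above can be sketched in plain Python without any framework. This is a minimal illustration, not the demo code from the talk: the function names, the two-class toy data, and the learning rate are all assumptions, and the update rule is ordinary gradient descent on cross-entropy loss.

```python
import math


def softmax(evidence):
    """Step 2: turn per-class evidence into probabilities that sum to 1."""
    peak = max(evidence)                      # subtract the max for stability
    exps = [math.exp(e - peak) for e in evidence]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, biases, pixels):
    """Step 1: evidence per class is a weighted sum of pixels plus a bias."""
    evidence = [
        sum(w * p for w, p in zip(row, pixels)) + b
        for row, b in zip(weights, biases)
    ]
    return softmax(evidence)


def train_step(weights, biases, pixels, label, learning_rate=0.5):
    """Step 3: one gradient-descent update; returns the loss before the update."""
    probs = predict(weights, biases, pixels)
    for k, row in enumerate(weights):
        # Gradient of cross-entropy w.r.t. class k's evidence.
        error = probs[k] - (1.0 if k == label else 0.0)
        for j, p in enumerate(pixels):
            row[j] -= learning_rate * error * p
        biases[k] -= learning_rate * error
    return -math.log(probs[label])


# Toy data (assumed): 3 "pixels", 2 classes. MNIST uses 784 pixels, 10 classes.
weights = [[0.0] * 3 for _ in range(2)]
biases = [0.0, 0.0]
examples = [([1.0, 0.0, 0.0], 0), ([0.0, 0.0, 1.0], 1)]

losses = []
for _ in range(20):
    for pixels, label in examples:
        losses.append(train_step(weights, biases, pixels, label))

probs = predict(weights, biases, [1.0, 0.0, 0.0])
print(probs)
```

After training, the first toy example is assigned a higher probability for class 0 than for class 1, and the recorded loss is lower at the end than at the start.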

Basic

Summaries

TensorBoard

Example Code

Checked into the guild repo under tensorflow-mnist-examples

Tutorials

The TensorFlow project has a ton of additional docs and tutorials. This talk is based on the introductory tutorial.

Impressions

Pros

- Open source community

- The API is pretty extensive and built to be extended

- Graph structure makes it easy to reason about models

- Designed for deep learning, but also very generalized

- Built for performance

- TensorBoard seems promising

Cons

- You still need to know what you're doing

- It is a fairly large framework

- Separation of concerns seems challenging

- Not available in JavaScript (yet)

Next Steps (for me)

- Find a slightly more practical model to build

- Try to scale up the training

- Learn to Python more better