Streams

in NodeJS

What are Streams?

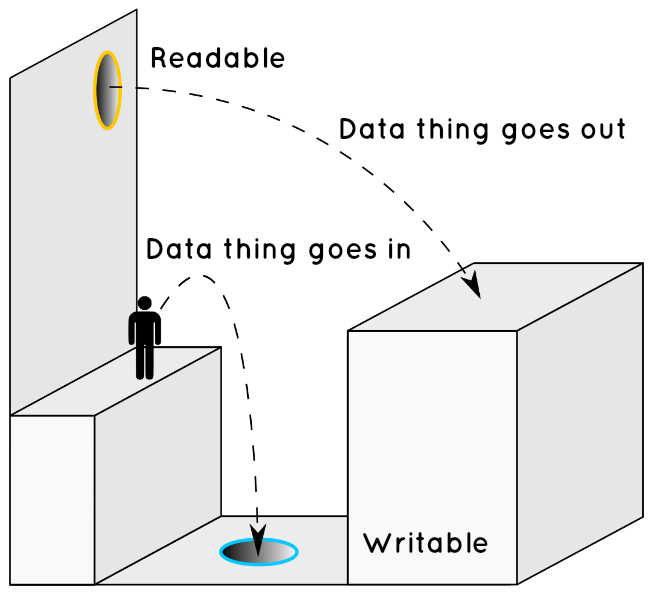

Streams are an abstract interface for objects that want to read, write, or read & write data.

send, receive, or both

Why?

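Streams are a faster way to work with I/O-bound data, because each chunk is processed as it arrives instead of after everything has been loaded into memory, and they are easier than not using streams.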

EXACTLY how it works

All streams are instances of EventEmitter

Stream classes can be accessed from

require('stream')

Stream classes extend EventEmitter

- Readable

- Writable

- Duplex

- Transform
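A minimal sketch of the claim above: it builds one instance of each class and checks it against EventEmitter. The no-op read, write, and transform implementations are placeholders invented for this illustration, not part of the original slides.

```javascript
const { EventEmitter } = require('events')
const { Readable, Writable, Duplex, Transform } = require('stream')

// One minimal instance of each base class. The method bodies are
// placeholders so that the constructors have something to call.
const streams = {
  Readable: new Readable({ read() {} }),
  Writable: new Writable({ write(chunk, encoding, callback) { callback() } }),
  Duplex: new Duplex({ read() {}, write(chunk, encoding, callback) { callback() } }),
  Transform: new Transform({ transform(chunk, encoding, callback) { callback(null, chunk) } })
}

// Record, per class, whether the instance is an EventEmitter
const isEmitter = {}
for (const [name, stream] of Object.entries(streams)) {
  isEmitter[name] = stream instanceof EventEmitter
}

console.log(isEmitter)
```

Because every stream is an emitter, the on(), once(), and emit() calls in the tests that follow apply to streams as well.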

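const EventEmitter = require('events')
const { assert } = require('chai') // assumed: assert.instanceOf, used below, is chai's API

it('should emit event', () => {
  const myEmitter = new EventEmitter()
  myEmitter.on('event', data => {
    assert.equal(data, 'This is the data')
  })
  // emit after the listener is registered, otherwise the assertion never runs
  myEmitter.emit('event', 'This is the data')
})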

it('this of callback should be instanceof emitter', () => {

const myEmitter = new EventEmitter()

myEmitter.emit('event')

myEmitter.on('event', function() {

assert.instanceOf(this, EventEmitter)

})

myEmitter.emit('event')

})

it('callbacks are synchronous by default', () => {
  const myEmitter = new EventEmitter()
  const callbackOrdering = []
  myEmitter.emit('event') // no listeners yet, so nothing happens
  myEmitter.on('event', function() {
    callbackOrdering.push('first callback')
  })
  myEmitter.on('event', function() {
    callbackOrdering.push('second callback')
  })
  myEmitter.emit('event')
  // both listeners have already run, in registration order, when emit() returns
  assert.deepEqual(callbackOrdering, ['first callback', 'second callback'])
})

it('callbacks can be async', done => {
  const myEmitter = new EventEmitter()
  const callbackOrdering = []
  myEmitter.emit('event') // no listeners yet, so nothing happens
  myEmitter.on('event', function() {
    // defer the work until the current operation has finished;
    // process.nextTick() callbacks run before setImmediate() and timers
    process.nextTick(() => {
      callbackOrdering.push('first callback')
    })
  })
  myEmitter.on('event', function() {
    callbackOrdering.push('second callback')
  })
  setTimeout(() => {
    assert.deepEqual(callbackOrdering, ['second callback', 'first callback'])
    done() // tell the test runner that the async assertion has run
  }, 10)
  myEmitter.emit('event')
})

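Many of the built-in modules in Node implement the streaming interface, for example fs file streams, HTTP requests and responses, TCP sockets, zlib, crypto, and process.stdin / process.stdout.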

Node streams are very similar to unix streams

ls -a node_modules/ | sort | grep 'a'

In Unix, streams are implemented by the shell with | pipes.

Why you should use streams

const http = require('http')
const fs = require('fs')

const server = http.createServer((req, res) => {
  // buffers the whole file in memory before anything is sent
  fs.readFile(`${__dirname}/data.txt`, (err, data) => {
    if (err) {
      res.statusCode = 500
      return res.end()
    }
    res.end(data)
  })
})

server.listen(8000)

vs

const http = require('http')
const fs = require('fs')

const server = http.createServer((req, res) => {
  // sends the file chunk by chunk as it is read from disk
  const stream = fs.createReadStream(`${__dirname}/data.txt`)
  stream.pipe(res)
})

server.listen(8000)

const http = require('http')

const fs = require('fs')

var oppressor = require('oppressor')

const server = http.createServer((req, res) => {

const stream = fs.createReadStream(`${__dirname}/data.txt`)

stream.pipe(oppressor(req)).pipe(res)

})

server.listen(8000)

Pipes

Readable streams can be piped to Writable streams

a.pipe( b )

b.pipe( c )

a.pipe( b ).pipe( c )

Where a and b implement Readable Streams

b and c implement Writable Streams
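As a concrete version of a.pipe( b ).pipe( c ), here is a small in-memory sketch. The names source, upperCase, and sink are made up for this example, and Readable.from() is assumed to be available (it exists in recent Node releases).

```javascript
const { Readable, Transform, Writable } = require('stream')

// a: a Readable that emits three string chunks
const source = Readable.from(['one ', 'two ', 'three'])

// b: both Writable and Readable, so it can sit in the middle of the chain
const upperCase = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase())
  }
})

// c: a Writable that collects everything it receives
const received = []
const sink = new Writable({
  write(chunk, encoding, callback) {
    received.push(chunk.toString())
    callback()
  }
})

source.pipe(upperCase).pipe(sink)

sink.on('finish', () => {
  console.log(received.join('')) // ONE TWO THREE
})
```

The chain works because pipe() hands back its destination, which is why the middle stream has to be readable as well as writable.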

Pipes

Similar to this behavior

a.on('data', function(chunk){

b.write(chunk);

});

b.on('data', function(chunk){

c.write(chunk);

});

Without Pipe

const fs = require('fs')
const server = require('http').createServer()

server.on('request', (req, res) => {
  const src = fs.createReadStream('./big.file')
  src.on('data', function(chunk) {
    const ready = res.write(chunk)
    if (ready === false) {
      // the response cannot take more right now: stop reading until it drains
      this.pause()
      res.once('drain', this.resume.bind(this))
    }
  })
  src.on('end', function() {
    res.end()
  })
})

server.listen(8000)

With Pipe

const fs = require('fs')
const server = require('http').createServer()

server.on('request', (req, res) => {
  const src = fs.createReadStream('./big.file')
  src.pipe(res)
})

server.listen(8000)

Pipe is a convenience that handles backpressure by default...

Readable

A Readable stream is a source of data that can be read.

A Readable stream will not start emitting data until something is ready to receive it.

Readable streams are in one of two states

- paused (default)

- flowing

Readable

When a Readable stream is paused, the stream.read() method must be invoked to receive the next chunk of data. Remember that this is the default state.

When a Readable stream is flowing, data is sent out from the source immediately as it is available.

Readable

To switch to the flowing state, do any of these

- Add a 'data' event handler to listen for data.
  - The event handler function signature is function(data){...}
- Invoke the pipe() method to send data to a Writable stream.
- Invoke the resume() method.
  - If there are no event handlers listening for 'data' and there is no pipe() destination, then resume() will cause data to be lost.

Readable

To switch a stream to the paused state, do one of these, depending on whether it has pipe() destinations.

- If there are no pipe() destinations
  - Invoke the pause() method
- If there are pipe destinations
  - Remove any 'data' event handlers
  - and remove all pipe destinations by invoking the unpipe() method
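The transitions above can be watched through the readable.readableFlowing property. This is a sketch under assumptions: the no-op read() is a placeholder, and readableFlowing is assumed to be available (it is in recent Node releases). It reports null while nothing is consuming the stream, true while flowing, and false once the stream has been explicitly paused.

```javascript
const { Readable } = require('stream')

// A Readable with a placeholder read(); no data is ever pushed
const readable = new Readable({ read() {} })
const observed = []

observed.push(readable.readableFlowing) // null: no consumer attached yet

readable.on('data', () => {})           // adding a 'data' handler starts the flow
observed.push(readable.readableFlowing) // true

readable.pause()                        // explicit pause
observed.push(readable.readableFlowing) // false

readable.resume()                       // back to flowing
observed.push(readable.readableFlowing) // true

console.log(observed) // [ null, true, false, true ]
```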

Readable

Events

'readable'

When a chunk of data can be read from the stream, it will emit a 'readable' event.

readable.on('readable', function() {

// there is some data to read now

});

Readable

Events

'data'

When a chunk of data is ready to be read from the stream, it will emit a 'data' event.

And pass the chunk of data to the event handler

readable.on('data', function(chunk) {

console.log('got '+chunk.length+' bytes of data');

// do something with chunk

});

If the stream has not been explicitly paused, attaching a 'data' event listener will switch the stream into the flowing state.

Readable

Events

'end'

When there is no more data to be read, and all of the data has been consumed, the 'end' event will be emitted.

readable.on('end', function() {

console.log('all data has been consumed');

});

Data is considered consumed when every chunk has been delivered to a 'data' event handler, piped to a writable stream, or read by repeatedly invoking read() until no more data is available from the stream.

Readable

Events

'data' and 'end'

To get all the data from a readable stream, just concatenate each chunk together.

var entireDataBody = '';

readable.on('data', function(chunk) {

entireDataBody += chunk;

});

readable.on('end', function() {

// do something with entireDataBody;

});

Readable

Events

'close'

When the readable stream source has been closed (by the underlying system), the 'close' event will be emitted.

Not all streams will emit the 'close' event.

Readable

Events

'error'

When the readable stream encounters an error, it will emit the 'error' event.

The event will pass an Error Object to the event handler.
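A small sketch of the 'error' and 'close' events together. The failing stream and its error message are invented for this illustration; it fails on its first read by calling destroy(err), which is assumed here to emit 'error' followed by 'close' (the behaviour of current Node releases with default options).

```javascript
const { Readable } = require('stream')

const events = []

// Hypothetical source that fails as soon as it is asked for data
const failing = new Readable({
  read() {
    this.destroy(new Error('source unavailable'))
  }
})

failing.on('error', err => {
  // the handler receives the Error object
  events.push(`error: ${err.message}`)
})

failing.on('close', () => {
  events.push('close')
  console.log(events)
})

// Switch to flowing mode so that read() gets called
failing.resume()
```

Without an 'error' handler the error would be thrown as an uncaught exception, so it is worth attaching one to every stream that is consumed manually.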

Personally, I don't use any of this.

If you always use pipes, you don't have to worry about flowing vs paused modes, nor backpressure.

Doing it manually is messy. That's the beauty of the .pipe() method. If you need a more complex transformation, then I recommend extending a transform stream and piping that.

For example,

const through2 = require('through2')

const toUpperCase = through2((data, enc, cb) => {

cb(null, Buffer.from(data.toString().toUpperCase()))

})

process.stdin.pipe(toUpperCase).pipe(process.stdout)

The through2 library makes it insanely easy to write pipe-able transforms. Highly recommended.

What's a Buffer?

A buffer is a small region of memory, usually in RAM, where data is temporarily gathered and held until it is sent on for processing during streaming.
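In Node, that temporary storage shows up as Buffer objects, which is what stream chunks are unless an encoding has been set. A brief sketch with made-up sample values, showing that a Buffer holds raw bytes rather than characters:

```javascript
// 'é' (written as \u00e9) takes two bytes in UTF-8, so 5 characters become 6 bytes
const greeting = Buffer.from('h\u00e9llo', 'utf8')

const byteLength = greeting.length   // 6
const hex = greeting.toString('hex') // '68c3a96c6c6f'

// Chunks arriving separately can be joined into one Buffer
const joined = Buffer.concat([Buffer.from('ab'), Buffer.from('cd')]).toString()

console.log(byteLength, hex, joined) // 6 68c3a96c6c6f abcd
```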

Readable

Methods

readable.read([size])

size is an optional argument to specify how much data to read

read() will return a String, Buffer, or null

var entireDataBody = '';

readable.on('readable', function() {

var chunk = readable.read();

while (chunk !== null) {

entireDataBody += chunk;

chunk = readable.read();

}

});

Readable

Methods

readable.setEncoding(encoding)

encoding is a string, such as 'utf8' or 'hex', naming the encoding used to decode the data. Once it is set, chunks are delivered as strings; otherwise they are delivered as Buffers.

readable.setEncoding('utf8');

readable.on('data', function(chunk) {
  // chunk is a string decoded as utf-8
});

Readable

Readable

Methods

readable.pause()

will cause a stream in the flowing state, to stop emitting 'data' events, and enter the paused state

returns itself

readable.resume()

will cause a stream in the paused state to enter the flowing state and resume emitting 'data' events.

returns itself

readable.isPaused()

will return whether the stream is paused or not, Boolean

Readable

Methods

readable.pipe(destination[, options])

destination is a Writable stream to write data to.

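pipe() streams all data from the readable stream to the writable destination. It returns the destination stream, so calls can be chained when the destination is itself readable (a Duplex or Transform stream). A single readable stream can also be piped to multiple destinations.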

readable.unpipe([destination])

removes a pipe destination. If the optional destination argument is set, then only that pipe destination will be removed. If no arguments are used, then all pipe destinations are removed.
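To illustrate both methods, the sketch below pipes one PassThrough stream into another and then detaches it. The variable names are illustrative only; PassThrough is the built-in Transform that forwards data unchanged.

```javascript
const { PassThrough } = require('stream')

const source = new PassThrough()
const destination = new PassThrough()

// Record the events the destination emits when it is attached and detached
const seen = []
destination.on('pipe', () => seen.push('pipe'))
destination.on('unpipe', () => seen.push('unpipe'))

// pipe() hands back the stream that was passed in as the destination
const returned = source.pipe(destination)
const returnsDestination = returned === destination

// Detach only this destination
source.unpipe(destination)

console.log(returnsDestination, seen) // true [ 'pipe', 'unpipe' ]
```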

Writable

Events

'drain'

If writable.write(chunk) returns false, then 'drain' will be emitted when the writable stream is ready to accept more data, that is, when it has finished handling all the data written so far and its buffer has drained.
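A sketch of that sequence, assuming a deliberately tiny highWaterMark of 4 bytes and an artificially slow write implementation (both chosen only to force the situation):

```javascript
const { Writable } = require('stream')

// Hypothetical slow destination with a 4-byte internal buffer limit
const slowWriter = new Writable({
  highWaterMark: 4,
  write(chunk, encoding, callback) {
    setImmediate(callback) // pretend the write takes a while
  }
})

const events = []

slowWriter.once('drain', () => {
  events.push('drain')
  console.log(events)
})

// 8 bytes exceed the 4-byte limit, so write() reports that the caller should wait
const accepted = slowWriter.write('12345678')
events.push(`write returned ${accepted}`)
```

The data is still accepted when write() returns false; the return value is only a signal to stop writing until 'drain' fires.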

Writable

Events

'finish'

When the end() method has been invoked, and all data has been flushed to the underlying system, the 'finish' event will be emitted.

Writable

Events

'pipe'

When the readable.pipe() method is invoked, and adds this writable stream as its destination, the 'pipe' event will be emitted.

The event handler will be invoked with the readable stream as a callback argument.

writer.on('pipe', function(readableStream) {

console.error('something is piping into me, the writer');

});

reader.pipe(writer); // invokes the event handler above

Writable

Events

'unpipe'

When the readable.unpipe() method is invoked, and removes this writable stream from its destinations,

the 'unpipe' event will be emitted.

The event handler will be invoked with the readable stream as a callback argument.

writer.on('unpipe', function(readableStream) {

console.error('something stopped piping into me, the writer');

});

reader.pipe(writer);

reader.unpipe(writer); // invokes the event handler above

Writable

Events

'error'

When an error occurs while writing or piping data, the 'error' event is emitted.

The event handler is invoked with an Error object as its argument.

const fs = require('fs')

const file = fs.createWriteStream('./big.file')

for (let i = 0; i <= 1e6; i++) {

file.write(

'Lorem ipsum dolor sit amet, consectetur adipisicing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur. Excepteur sint occaecat cupidatat non proident, sunt in culpa qui officia deserunt mollit anim id est laborum.\n'

)

}

file.end()

Writable

Methods

writable.write(chunk[, encoding][, callback])

chunk is a String or Buffer to write. encoding is an optional argument to define the encoding type if chunk is a String. callback is an optional callback function that will be invoked when the chunk of data to be written is flushed.

Returns a boolean: true if the data was handled completely, false if the internal buffer is full and the caller should wait for 'drain' before writing more.

writer.write(data, 'utf8'); // returns boolean

Writable

Methods

writable.setDefaultEncoding(encoding)

encoding is the new default encoding used to write data.

Returns the writable stream itself. Older versions of Node returned a boolean, true if the encoding was valid and set.

writable.setDefaultEncoding('utf8');

Writable

Methods

writable.cork()

Forces all writes to be buffered.

Buffered data will be flushed either

when uncork() or end() is invoked.

writable.uncork()

Flush all data that has been buffered since cork() was invoked.
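A sketch of cork() and uncork() in action. The collecting Writable below is invented for this example; it records each chunk at the moment it reaches the underlying write implementation.

```javascript
const { Writable } = require('stream')

const flushed = []

// Hypothetical destination that records what actually gets written
const writer = new Writable({
  write(chunk, encoding, callback) {
    flushed.push(chunk.toString())
    callback()
  }
})

writer.cork()
writer.write('hello, ')
writer.write('world!')

// Nothing has reached the destination yet: both writes are buffered
const flushedWhileCorked = flushed.length

writer.uncork() // flush the buffered writes
writer.end()

writer.on('finish', () => {
  console.log(flushedWhileCorked, flushed) // 0 [ 'hello, ', 'world!' ]
})
```

Corking is mainly useful for batching many small writes into one flush.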

Writable

Methods

writable.end([chunk][, encoding][, callback])

chunk is an optional String or Buffer to write. encoding is an optional argument to define the encoding type if chunk is a String. callback is an optional callback function that will be invoked when the stream is finished.

writable.write('hello, ');

writable.end('world!');

Duplex

Duplex streams are streams that implement both the Readable and Writable interfaces.
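A minimal sketch of a custom Duplex, with made-up payloads, showing that its readable and writable sides are independent: what is written to it is not what comes out of it.

```javascript
const { Duplex } = require('stream')

const written = []  // what the writable side received
const readOut = []  // what the readable side produced

const duplex = new Duplex({
  // readable side: produce one chunk, then signal the end of the data
  read() {
    this.push('from the readable side')
    this.push(null)
  },
  // writable side: record whatever is written
  write(chunk, encoding, callback) {
    written.push(chunk.toString())
    callback()
  }
})

duplex.on('data', chunk => readOut.push(chunk.toString()))
duplex.on('end', () => console.log('read:', readOut))
duplex.on('finish', () => console.log('written:', written))

duplex.end('to the writable side')
```

A TCP socket is the classic example of this shape: the bytes sent and the bytes received are two separate flows on one object.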

readableAndWritable.read(); // read() takes an optional size, not the data

readableAndWritable.write(data);

readable.pipe(readableAndWritable);

readableAndWritable.pipe(writable);

Transform

Transform streams are Duplex streams where the output is in some way computed from the input.

readableAndWritable.read(); // read() takes an optional size, not the data

readableAndWritable.write(data);

readable.pipe(readableAndWritable);

readableAndWritable.pipe(writable);

const { Transform } = require('stream')

const upperCaseTr = new Transform({

transform(chunk, encoding, callback) {

this.push(chunk.toString().toUpperCase())

callback()

}

})

process.stdin.pipe(upperCaseTr).pipe(process.stdout)