CosmosDb

Why do you think distributed database are different ?

- Spread data across multiple containers

- Multiple sources of data to read and write

- Scalabilty

- Data delivered quickly from location nearest the user

- Automatic Failover when a server is down

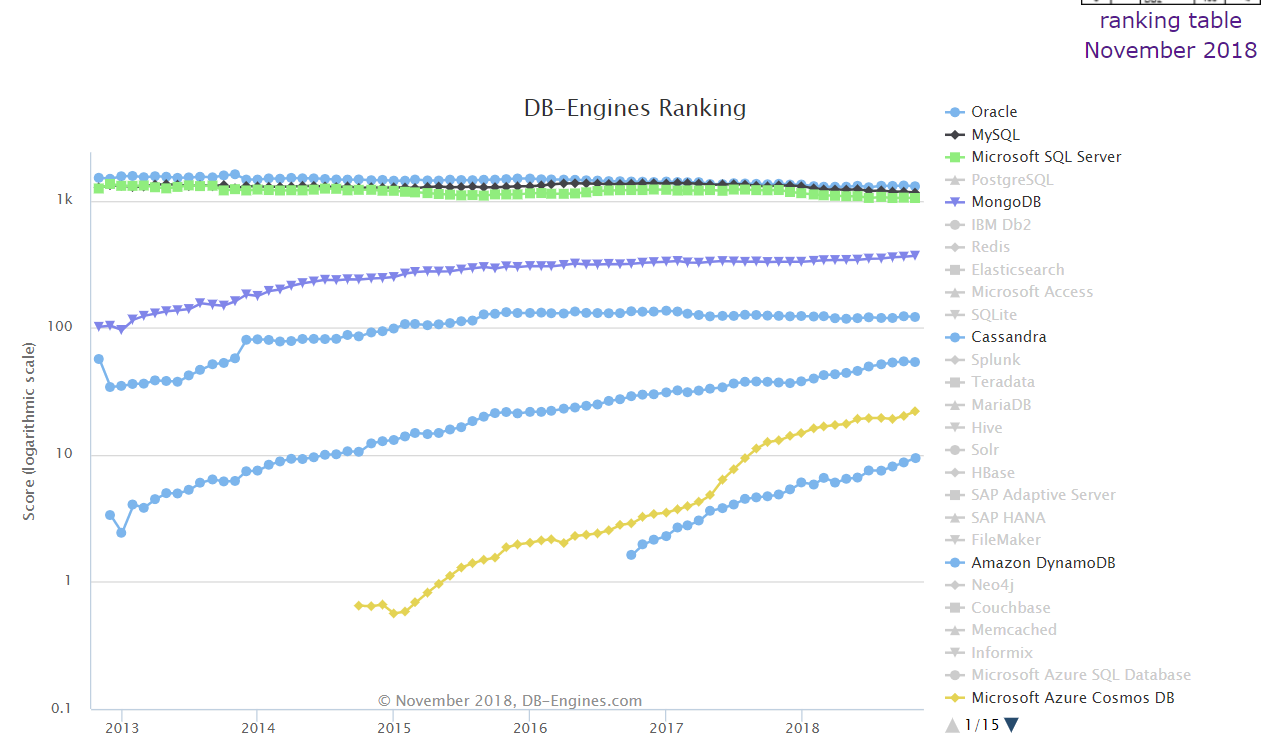

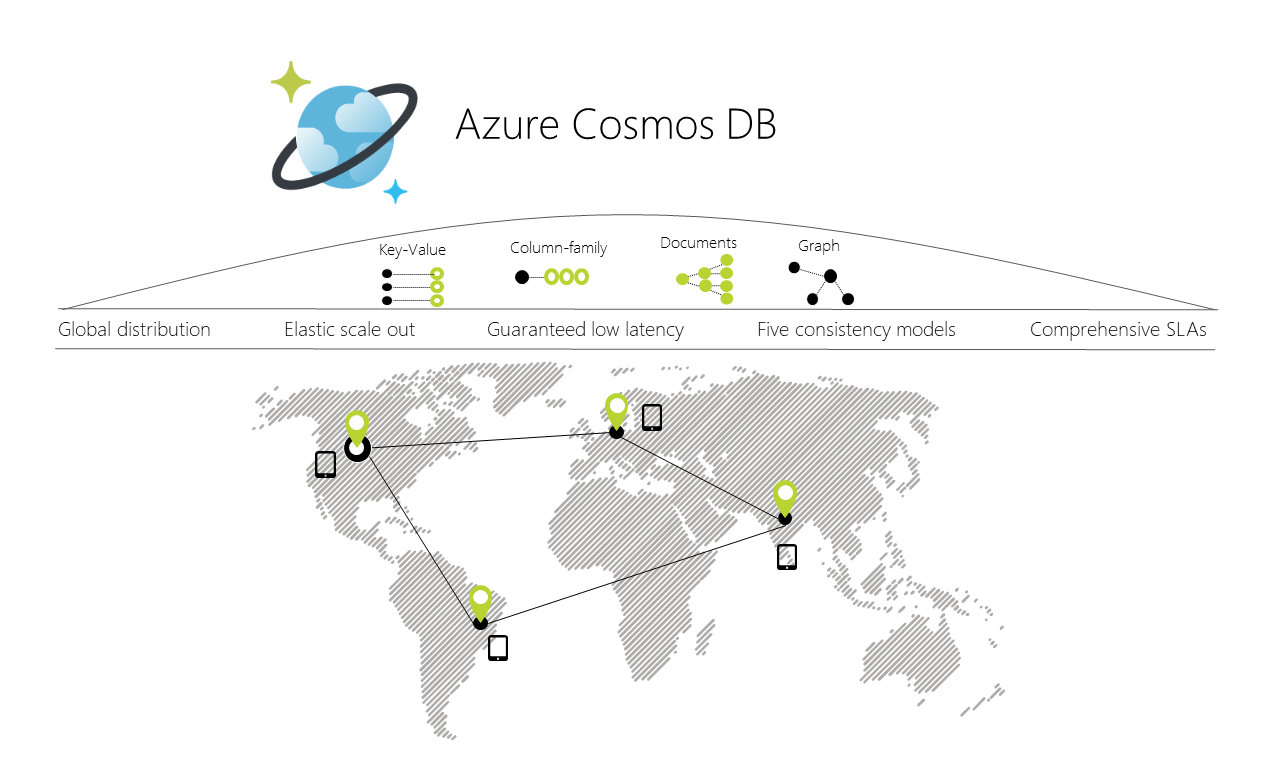

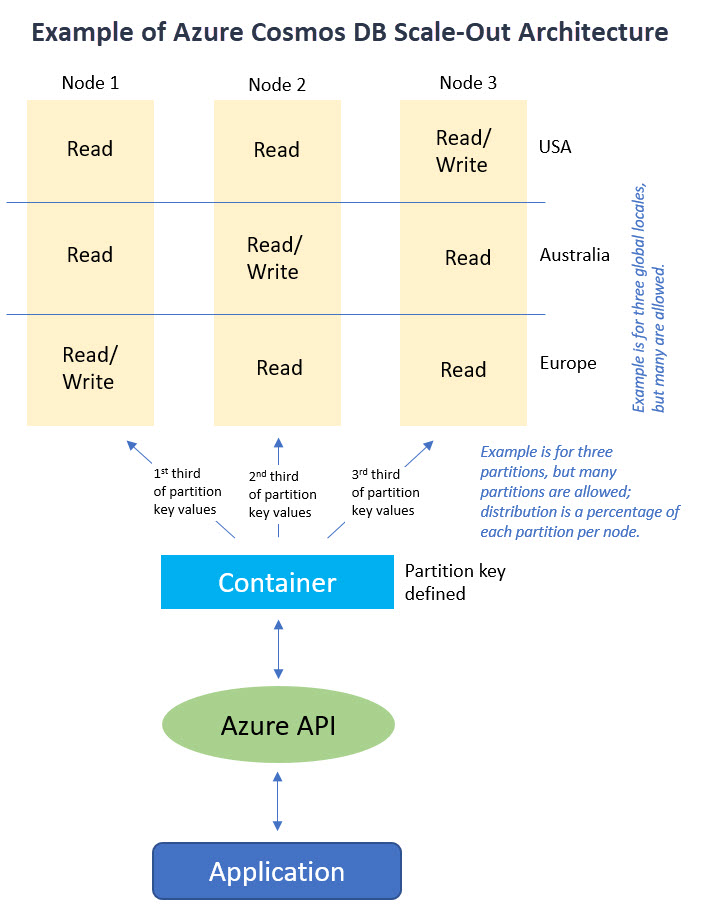

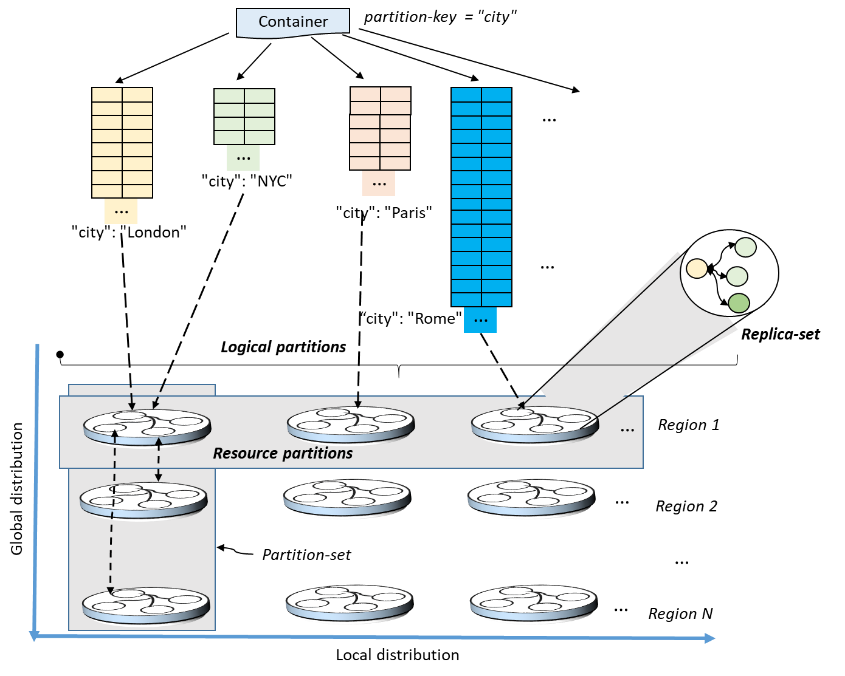

Let's see how Cosmos DB is designed to scale out by utilizing many regional machines and then mirroring this structure geographically to bring content closer to users worldwide.

Scale-Out Architecture in SQL Server

Let's see

-

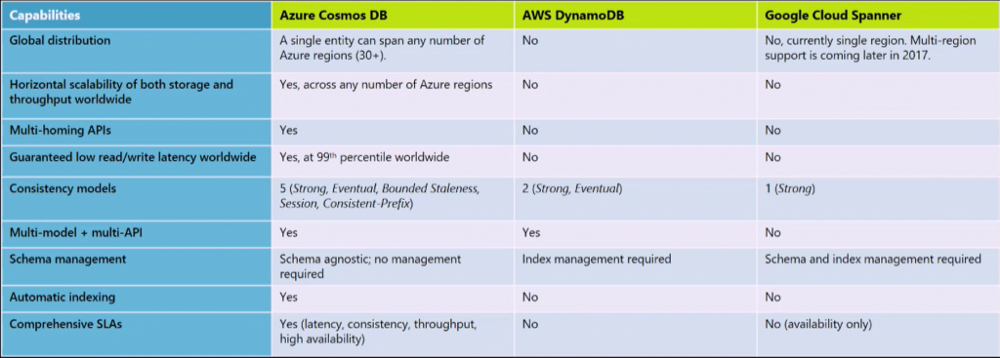

Key Capabilities

-

Partitions

-

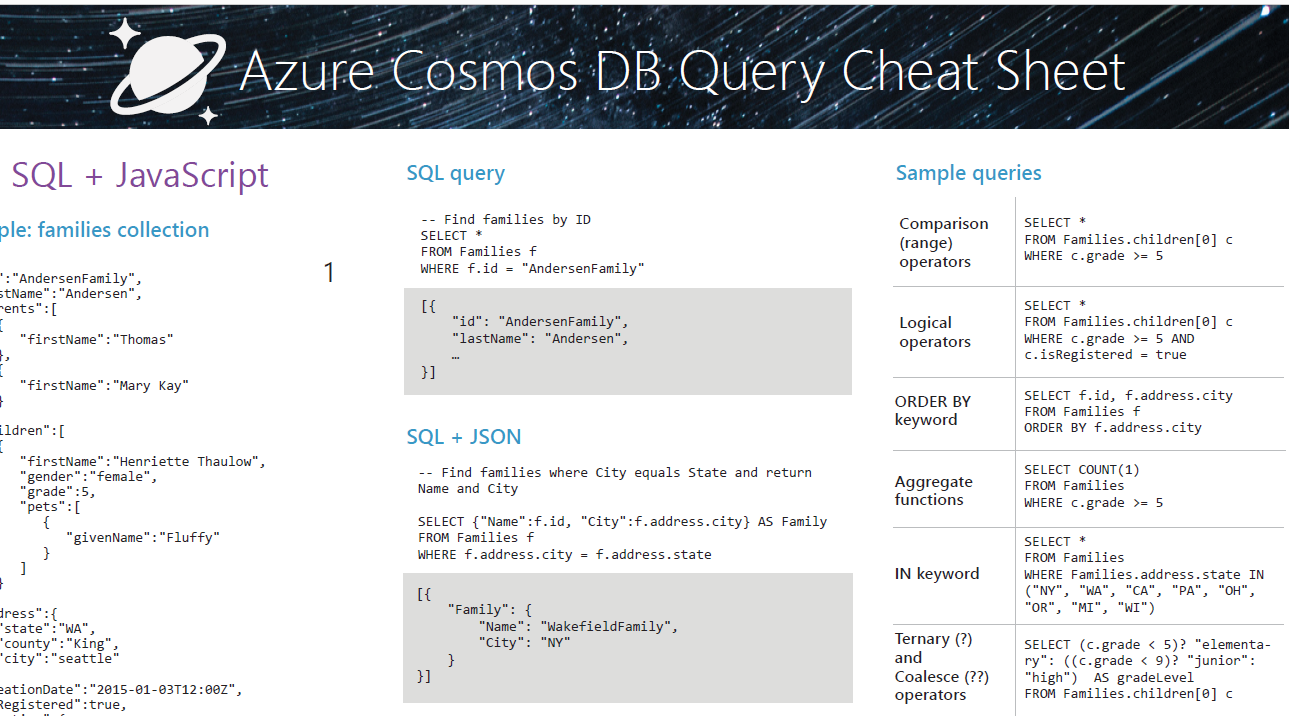

SQL in CosmosDB

-

Performance

-

More info and Links

Key capabilities

-

High Availability

-

Global read and write scalability

-

Elastically and independently scale

-

No database schema

-

Multiple data models and APIs.

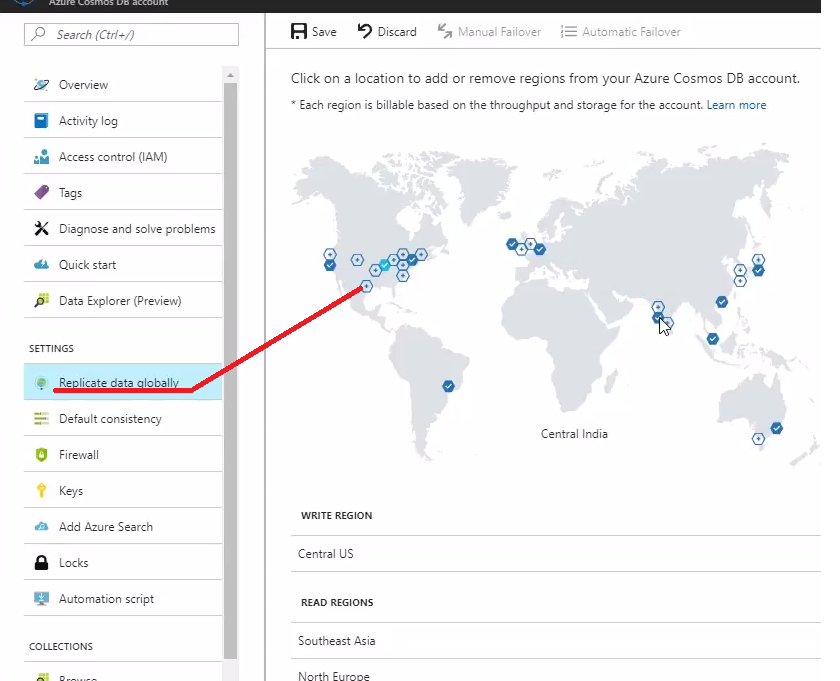

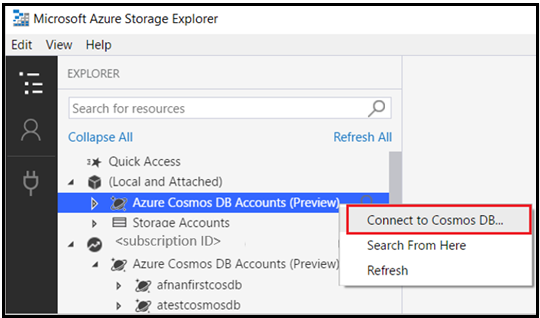

Set up Azure Cosmos DB global distribution using the SQL API

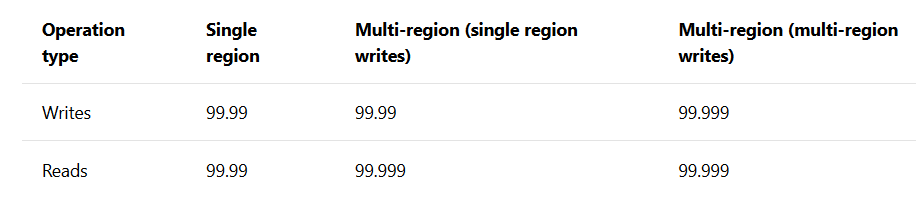

High Availability

High Availability

Global read & Write

Elastically and independently scale

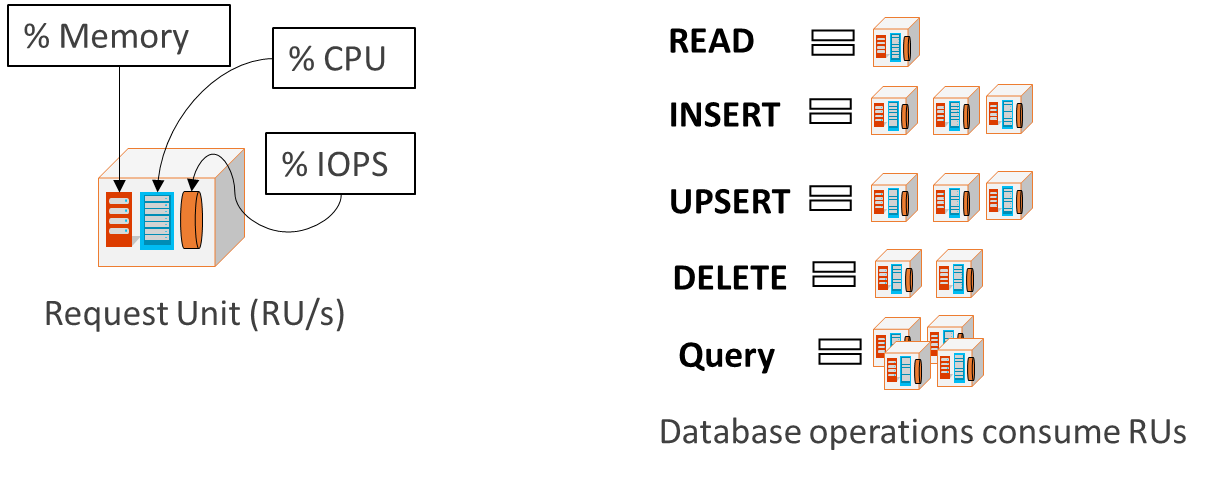

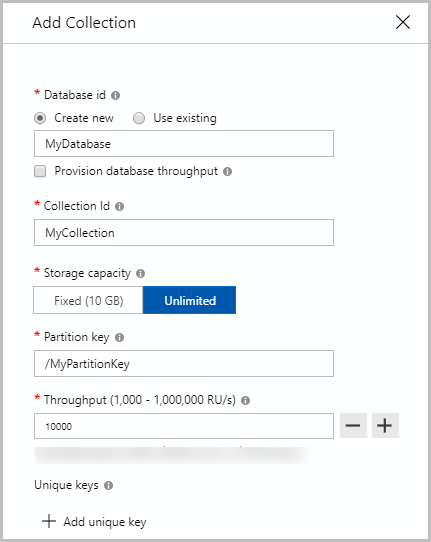

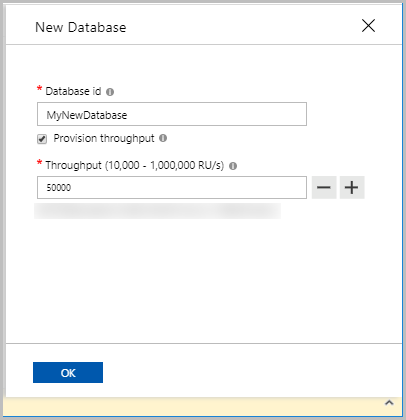

The cost of all database operations is normalized by Cosmos DB and is expressed in terms of

Request Units (RUs) = Get 1-KB item is 1 Request Unit (1 RU)

Throughput measures the number of requests that the service can handle in a specific period of time

Elastically and independently scale

Provision throughput on

# Create a container with a partition key

and provision throughput of 1000 RU/s

az cosmosdb collection create \

--resource-group $resourceGroupName \

--collection-name $containerName \

--name $accountName \

--db-name $databaseName \

--partition-key-path /myPartitionKey \

--throughput 1000// Create a container with a partition key

// and provision throughput of 1000 RU/s

DocumentCollection myCollection =

new DocumentCollection();

myCollection.Id = "myContainerName";

myCollection.PartitionKey.Paths.Add("/myPartitionKey");

await client.CreateDocumentCollectionAsync(

UriFactory.CreateDatabaseUri("myDatabaseName"),

myCollection,

new RequestOptions { OfferThroughput = 1000 });database schema

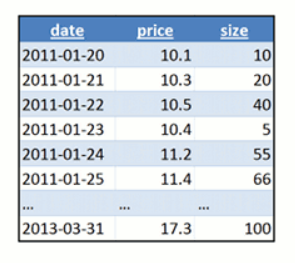

{

"date":"2018-11-22",

"price": 33,

"size": 10

}No

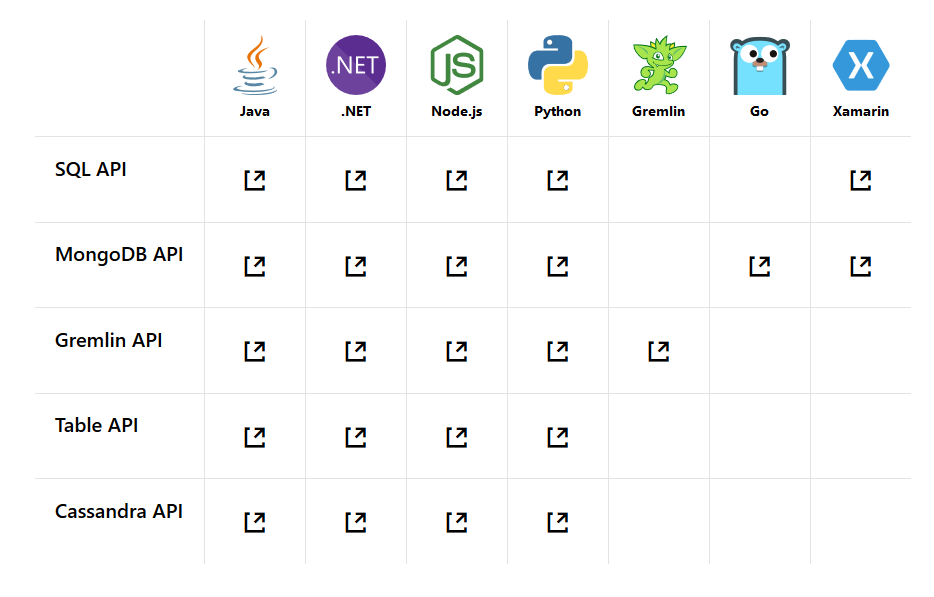

Multiples Apis

Multiple data models and popular APIs for accessing and querying data

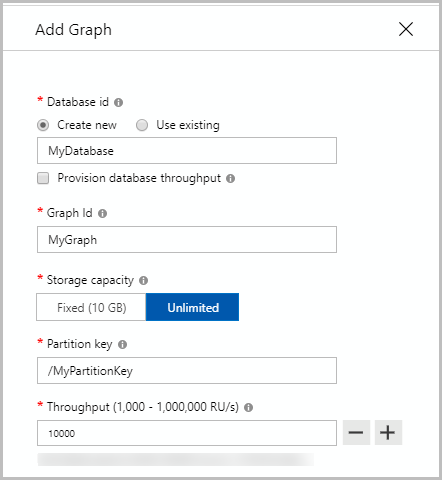

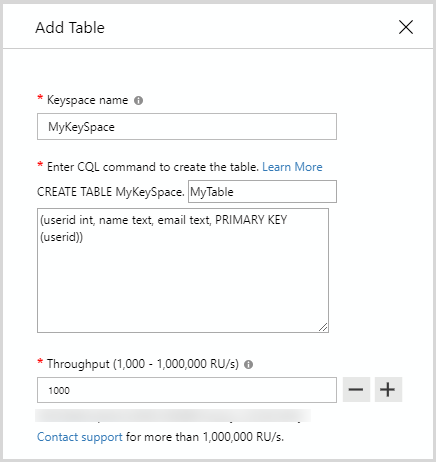

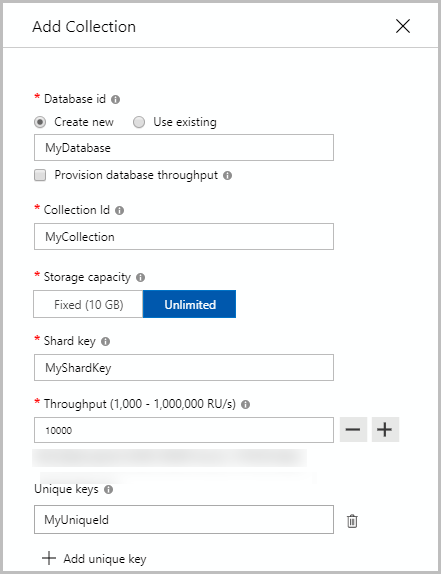

How to create container in different models

MongoDb

Casandra

Gremlin

Multiples Apis

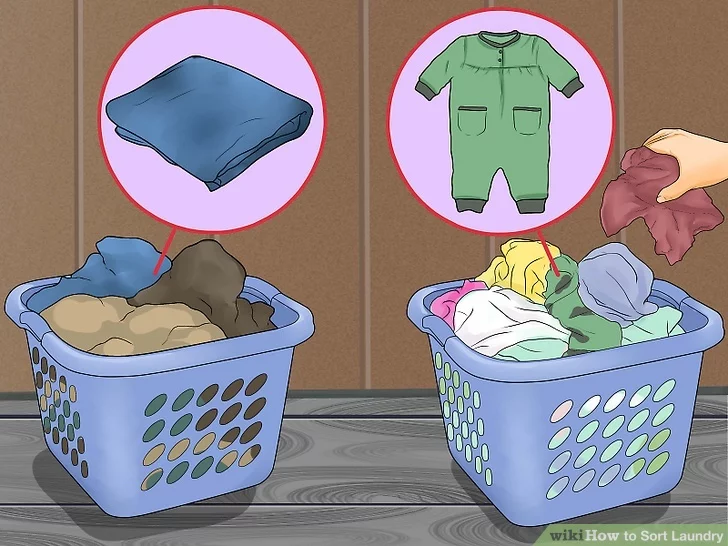

How will you store tones of clothes ?

All in the same cloth box?

Partitions of the cloths depending on the colour?

Partitions of the cloths depending on the fabric weight?

Partitions of the cloths depending on how old is it?

Partitions of the cloths depending on the type?

Find the correct way to store your cloth to save money

Find the correct way to store your cloth to save money

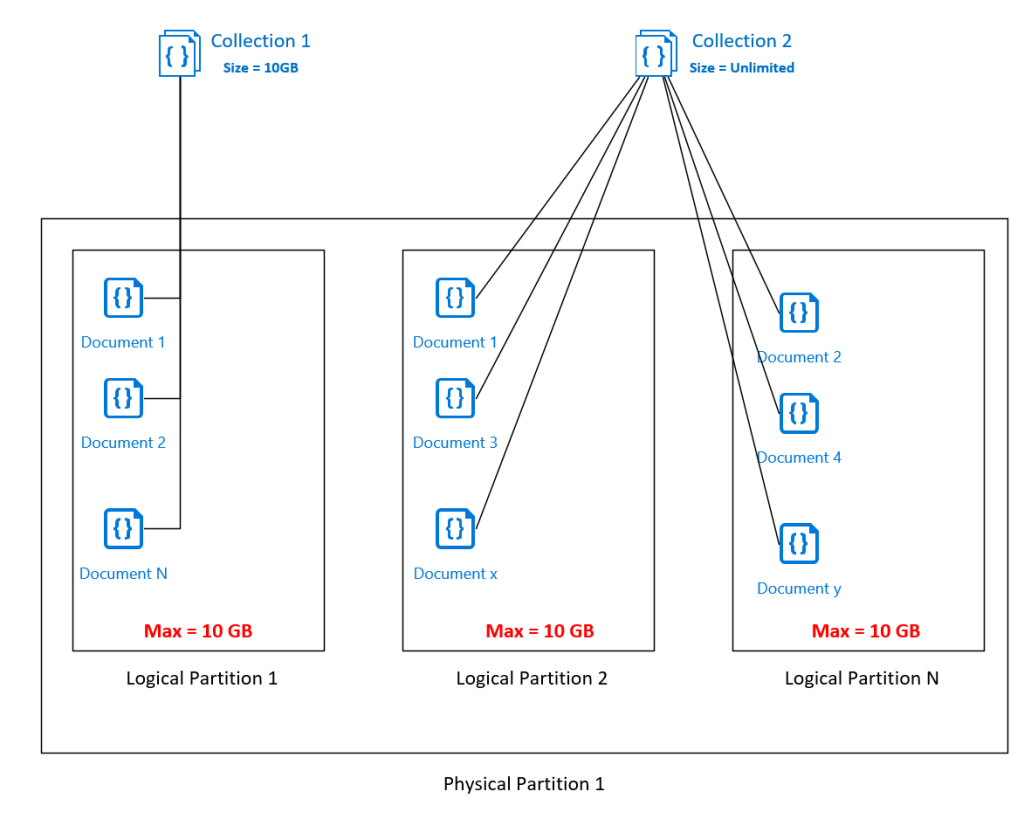

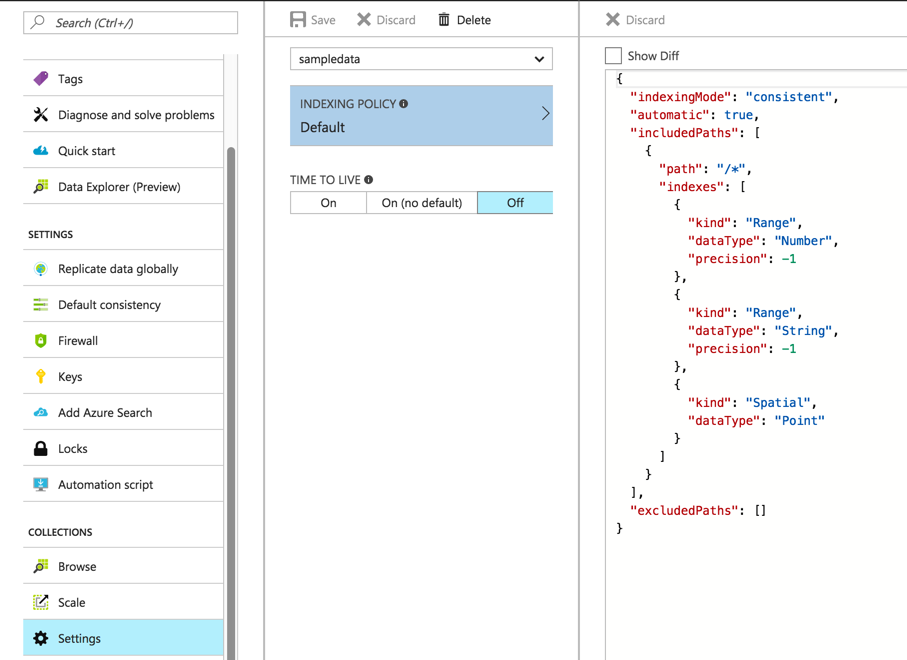

Logical Partition

-

Set of items with the same key is a

logical partition. -

The fact of spreading the documents across multiple Logical Partitions, is called partitioning

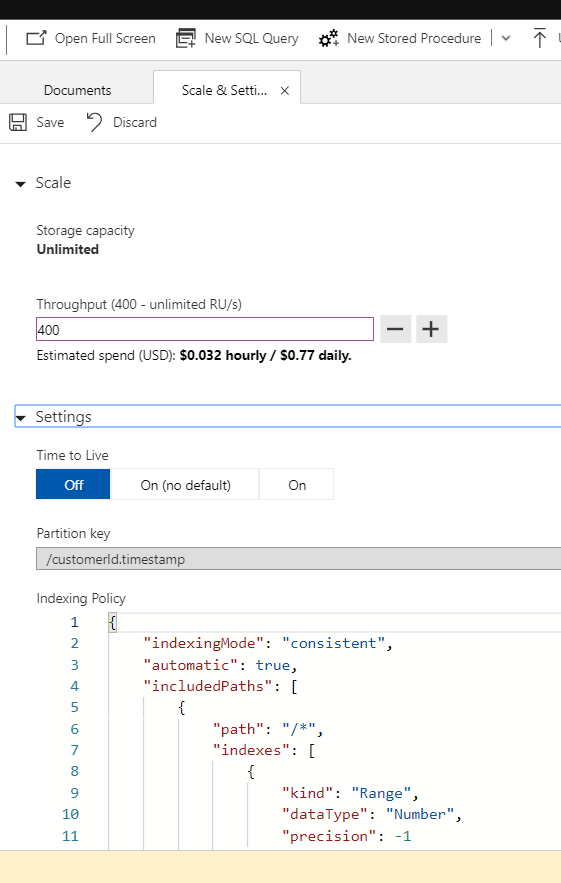

- Synthetic partition key concatenating multiple properties of an item

-

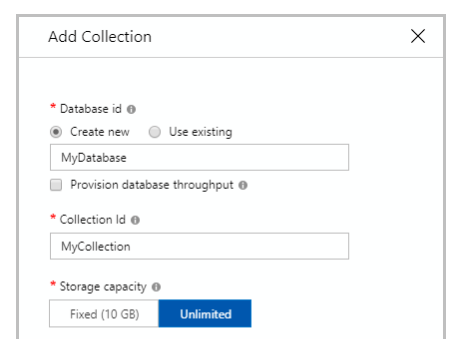

When the size of a container exceeds 10GB, We needs to spread data over multiple Logical Partitions.

-

Partitioning is mandatory if you select Unlimited storage for your container

-

Minimum throughput of 400 RU/s and the cost in 2018 is around 0.77$ per day

Partition Keys

Partition Keys

- The partitionKey never change. If you try to modify you will get an error.

- So, if you need to change a document from partition Key. Delete the document...

- It is a good idea to use properties in the partition Key that you would use to filter the collections. Always think, in which is your more typically query.

- Extreme case, use the id as partition Key.

- The id is unique only in the partition key. So you can use the same id in different partition Keys.

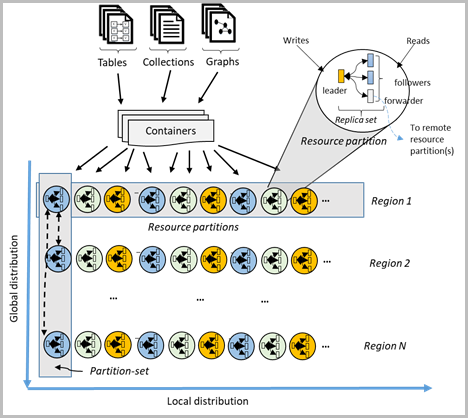

how logical partitions are mapped to physical partitions that are distributed globally

Physical Partitions

Physical Partitions

- In a physical partition could be more than one logical partition

- Each physical partition can have maximum 10 gbs

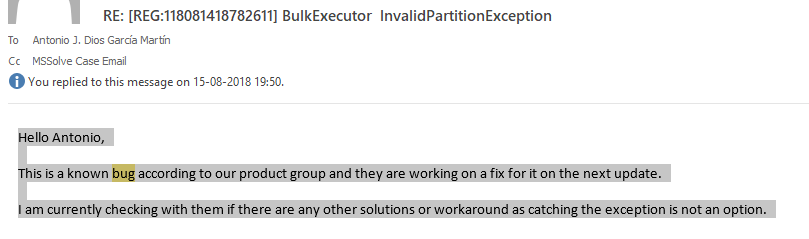

- If a physical partition is full. A logical partition will be moved to other physical partition. Currently there is a bug in Microsoft Bulk Insert that raise an exception while it is moving the logical partition the insert that coming cannot find the partition.

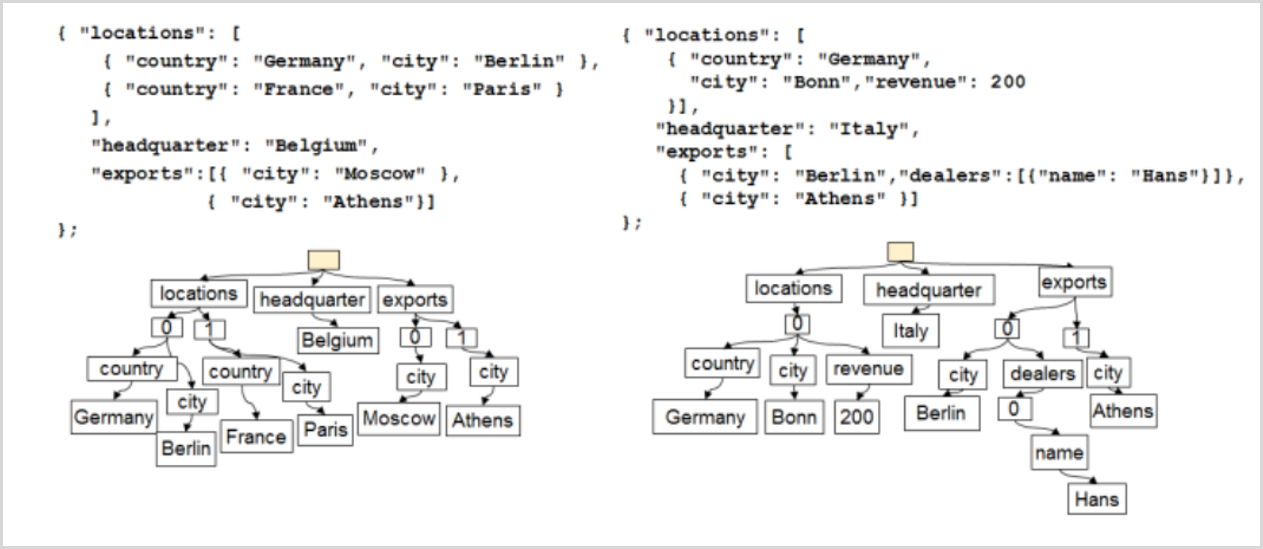

Database index

Sql in CosmosDB

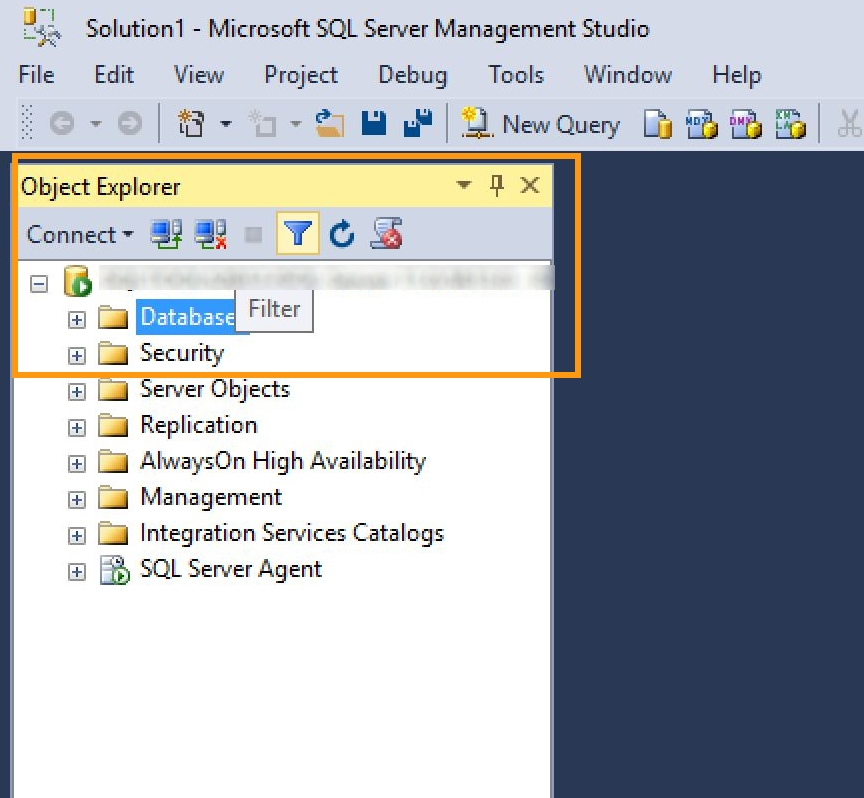

Sql Management

- How can I connect to CosmosDb?

- can I open sql profiler?

Let's try some SQL Queries

Cosmos Db Performance

- Networking

- PartitionKeys, Cross Partition

- Queries

- Uris

- Insert and Indexing Policy

- BulkInsert

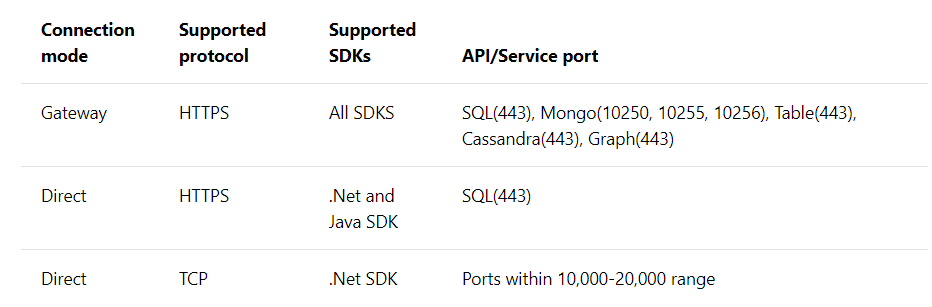

Networking

Connection policy: Use direct connection mode

Gateway

Direct Mode

Recommended with limited conection like azure functions

Better performance

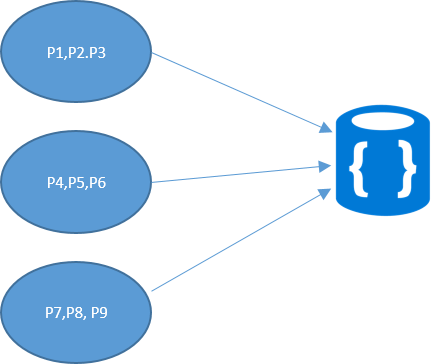

Cross Partition

When you do a query without partition key you are querying all the logical partitions!!

Then you need to wait to all of them

They run in parallel.

The number of queries in parallel is MaxParalelDegree flag.

You need to have enabled CrossPartition flag!!

2

Queries

The throughput of the queries are generated depending of the next factors:

- Nature and number of predicates

- Size of returning data

- you can find the RUs of a query in the variable x-ms-request-charge

- Enable advanced metrics PopulateQueryMetrics

Uris

Each document has a uri that is a selflink to identify directly the document in the collection.

- It is the faster way to access to the document.

- A typical suggestion to get performance is to cache the uri to get the same document later instead of cache the document. If you cache the document and the document is modified you will not know that is was updated.

Insert

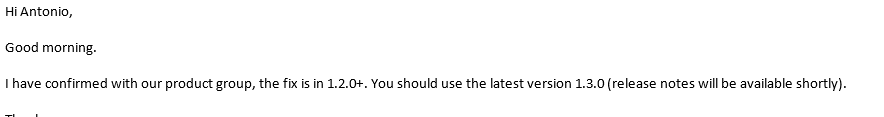

It is a slow operation because in cosmos we index all the fields by default.

- The field we do not need to filter by them we should exclude from the indexing.

- If you want to start using indexing features on your current Azure Cosmos containers. Hash (equality queries), Range (equality, range or ORDER BY queries), or Spatial.

- Precision in the index to reduce the size of the container and the performance.

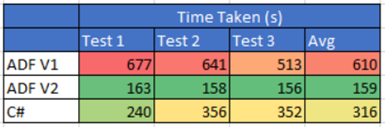

BulkInsert

Last Microsoft Update

Now we are able to insert one millon of document in just one insert. It is much faster.

bulkExecutor.

BulkImportResponse bulkImportResponse = await bulkExecutor.BulkImportAsync( documents: documentsToImportInBatch, enableUpsert: true, disableAutomaticIdGeneration: true, maxConcurrencyPerPartitionKeyRange: null, maxInMemorySortingBatchSize: null, cancellationToken: token);

Performance From my Experience

- Understanding cross partition.

- Breaking your SQL database mind.

- Small tips

Understanding Cross Partition

- Do queries without partition keys without many data in the collections.

- Do queries without partition keys with a huge amount of data

With partition Key

With Partition Key

Understanding Cross Partition

Query partition keys with id even faster!!

Understanding Cross Partition

Add partition keys and ids to everything and the problems start

Understanding Cross Partition

- The improvement does not scale well. When you query to a lot partition keys. The speed up start to degrade.

- Query the partition keys and the id make sense only when you want query few partitions keys and ids.

- Use the partition keys should makes to reduce the number of predicates. However if you query to many partitions keys we can get new error...

The maximun length is 30720 characters

deviceId-customerId-type-month-year

where deviceId=2 && customerId="b52f92a3-f88f-4532-a3f6-02da94d4d0c2" and type="3"

and month="6" and year="2018"where partitionKey="1-b52f92a3-f88f-4532-a3f6-02da94d4d0c2-3-5-2018"Understanding Cross Partition

Split the query into different partition keys queries:

When we have to many task in parallel doing querys you can get HTTP 429 error. To many Request.

- I will get smaller length

- Reduce the number of cross partition in the same query.

- I could take advantage of TPL having task doing the queries.

Understanding Cross Partition

Once, you have got the values with the best thoughput possible

It was really slow!

MaxItemCount = 20000;

while (query.HasMoreResults)

{

var results = query.ExecuteNextAsync<CosmosPeriodHour>()).ConfigureAwait(false);

periodHours.AddRange(results.ToList());

}

Breaking your SQL mind

Real problem. Get the latest two weeks/ 1 months documents from a collections for a device.

You do not know when was the latest date for that device.

- Get the latest date for that device.

- Get the previous two weeks/1months from that date.

Select top 1 * from c where c.deviceId=95394 order by c.timestamp desc

Select top 300 * from c where c.deviceId=95394 order by c.timestamp desc

Breaking your SQL mind

Solution

- New collection with replicated data

- Simplificated data

- Partition Key == Id == DeviceId

- Subcollection == the latest two weeks/1 months.

Each new data insert new data in the other collection. I modified the subcollection

One quick access,

I get all the data

Document size is important!

Small tips

- Avoid using *

- Do not cross partition order by. Try to do after getting the values.

- Linq is more efficient than use CSQL

- You can modify the Throughput for a single query. But the throughput charge will be for the whole hour.

- Think the collection for the queries that you need to do. Wrong collections, wrong partition keys could be very expensive.

Good articles