Frontend ELK Logging

LIU JING

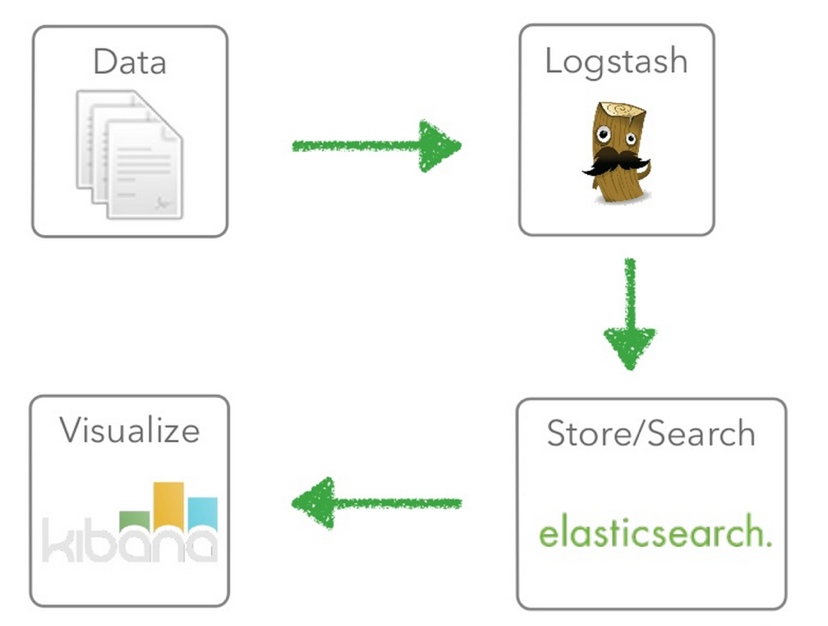

Meet ELK

What we will talk about

- Collect data

- Transform data

- Analyse data

- Deployment process

General Dataflow

- Collecting data

- Transforming data

- Analysing data

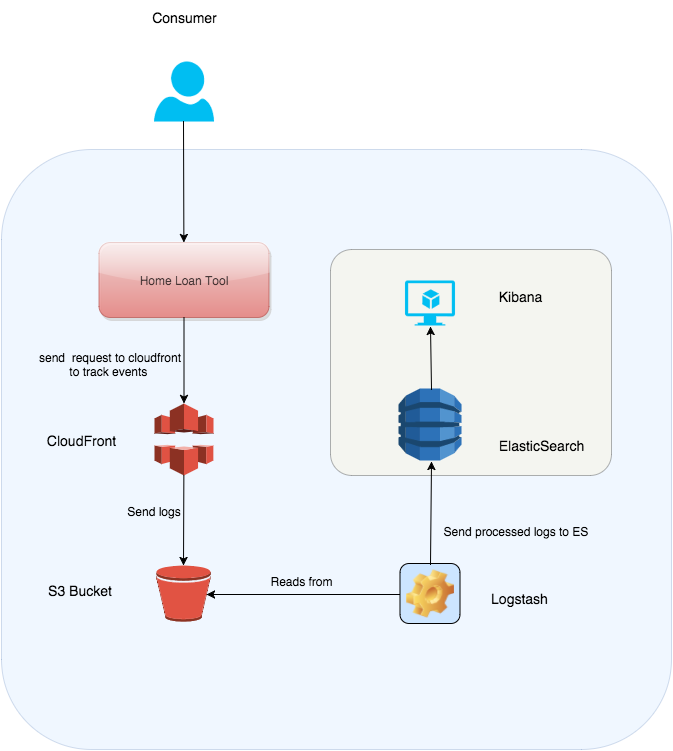

Collecting Data

- Users visit our tool

- The application fires events, which send requests to a CloudFront distribution

- Request logs are stored in an S3 bucket

How to fire events

- Use the JavaScript client-side library elkt.js

- Send event data as JSON; otherwise ELK cannot handle it

FinanceLeads.Repository.Elkt.trackEvent(

{

"page": "universal",

"eventName": "pageload",

"url": document.location.href

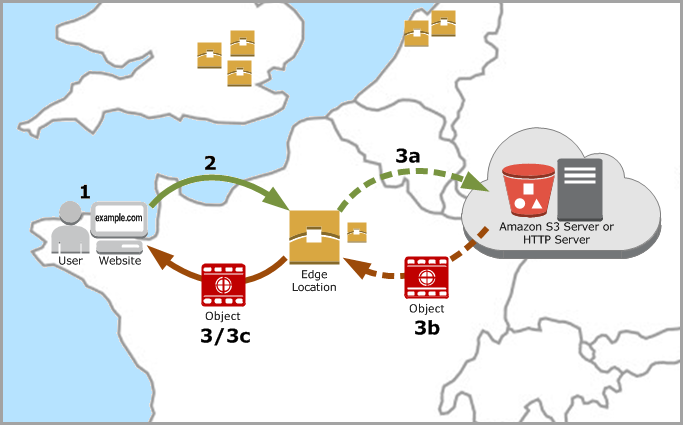

});Cloudfront

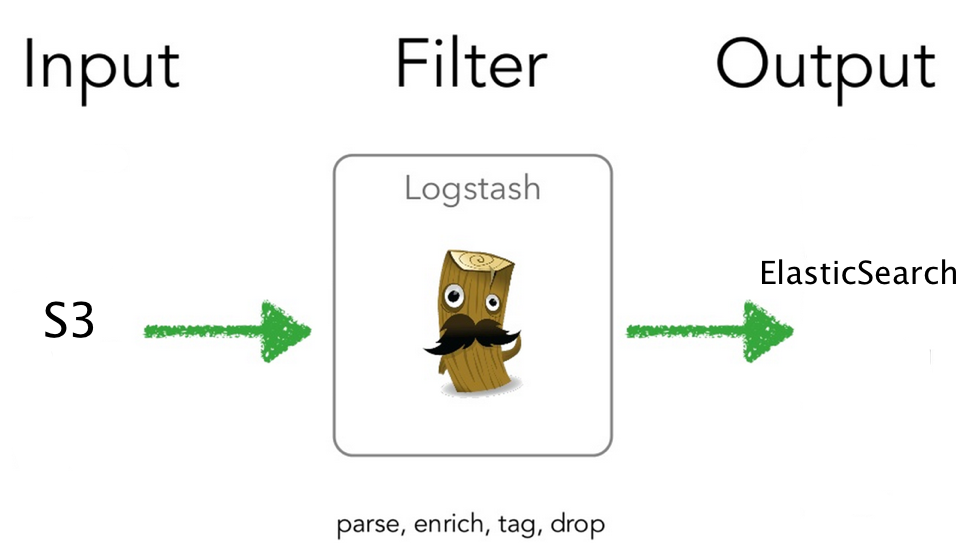
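The deck only says that events reach CloudFront as a request for a "track image". A minimal sketch of what such a request could look like, assuming the event JSON is percent-encoded into the query string of a tracking-image URL; the function names, the `data` parameter and the example domain are hypothetical and are not the real elkt.js API.

```javascript
// Hypothetical sketch: turning an event into a CloudFront tracking-image request.
// buildTrackingUrl, fireEvent and the "data" parameter are assumptions,
// not part of the real elkt.js library.

/**
 * Build the URL of a tracking-image request that carries the event as JSON.
 * @param {string} endpoint e.g. "https://d111111abcdef8.cloudfront.net/track.gif" (hypothetical)
 * @param {object} event    plain object; serialized to JSON because ELK expects JSON
 * @returns {string}
 */
function buildTrackingUrl(endpoint, event) {
  const json = JSON.stringify(event);
  // Percent-encode so the JSON survives as a single query-string value.
  return endpoint + "?data=" + encodeURIComponent(json);
}

/**
 * Fire the event by asking the browser to load a tiny image.
 * Browser only: relies on the global Image constructor.
 */
function fireEvent(endpoint, event) {
  const img = new Image();
  img.src = buildTrackingUrl(endpoint, event);
}
```

Because the request is an ordinary GET, CloudFront writes it to its access log like any other request, which is what lets the rest of the pipeline work from log files alone.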

Transforming data

Logstash

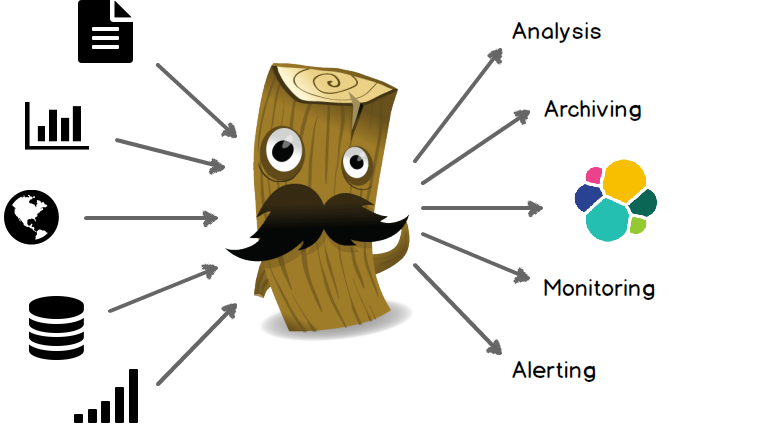

What does Logstash do?

- Ships logs from any source

- Parses them

- Normalizes the data and sends it to your destinations

Process Any Data, From Any Source
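To make "parse" and "normalize" concrete for our CloudFront logs, here is an illustration in plain JavaScript (Logstash does this with its own filters; this is not its code). It assumes the standard tab-separated CloudFront access-log format with a `#Fields:` header, and that each event travels in a hypothetical `data` query parameter. Real logs may apply extra URL encoding to query strings, so check a sample before relying on this.

```javascript
// Illustration only: parse one CloudFront access-log file and normalize each line.
// Assumption: events are carried in a hypothetical "data" query parameter.

function normalize(record) {
  // CloudFront logs date and time (UTC) in separate columns; join them into one
  // ISO-8601 timestamp, which is the job of a Logstash date filter.
  const timestamp = new Date(`${record.date}T${record.time}Z`).toISOString();
  // cs-uri-query holds the query string; "-" means the request had none.
  const query = record["cs-uri-query"];
  let event = null;
  if (query && query !== "-") {
    const data = new URLSearchParams(query).get("data"); // decodes percent-encoding
    if (data !== null) event = JSON.parse(data);
  }
  return { "@timestamp": timestamp, clientIp: record["c-ip"], event };
}

function parseCloudFrontLog(text) {
  let fields = [];
  const events = [];
  for (const line of text.split("\n")) {
    if (line.startsWith("#Fields:")) {
      // The header names the columns, separated by spaces.
      fields = line.slice("#Fields:".length).trim().split(/\s+/);
    } else if (line.trim() !== "" && !line.startsWith("#")) {
      // Data lines are tab-separated, in header order.
      const values = line.split("\t");
      const record = {};
      fields.forEach((name, i) => { record[name] = values[i]; });
      events.push(normalize(record));
    }
  }
  return events;
}
```

Reading column names from the `#Fields:` header instead of hard-coding positions keeps the sketch working if CloudFront appends columns later.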

How Logstash works

In our case

Logstash config

input {

s3 { 'bucket' => "your_bucket_which_store_logs" }

}

filter {

date {

match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]

}

}

output {

elasticsearch { host => localhost protocol => http }

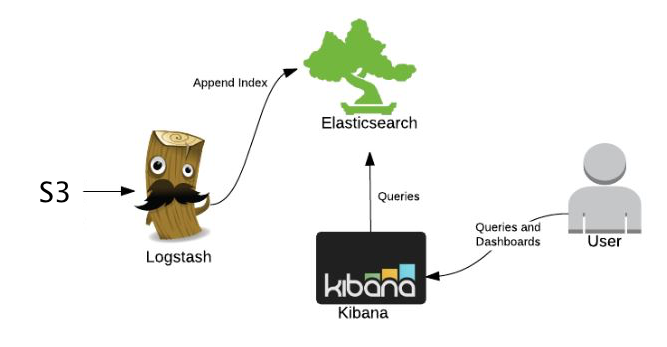

}Index and analyse data

- Elasticsearch

- Kibana

Elasticsearch

- Real-Time Data

- Real-Time Analytics

- RESTful api

- Document-Oriented

- Full text search based on Lucene

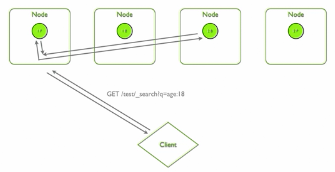

- Distributed
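Since Elasticsearch is queried over a RESTful API with JSON documents, a search is just an HTTP request with a JSON body. A minimal sketch for Node 18+ (global `fetch`): `logstash-*` matches Logstash's default daily index names, while the `eventName` field, the host URL and the helper names are assumptions for illustration.

```javascript
// Sketch: searching Logstash indices through the Elasticsearch REST API.
// Assumptions: an "eventName" field exists in the indexed documents, and
// the caller supplies the Elasticsearch base URL.

function buildSearchRequest(eventName, since) {
  return {
    path: "/logstash-*/_search", // default Logstash index pattern
    body: {
      query: {
        bool: {
          must: [
            { match: { eventName: eventName } },
            // "since" may use Elasticsearch date math, e.g. "now-1d".
            { range: { "@timestamp": { gte: since } } },
          ],
        },
      },
    },
  };
}

async function search(esUrl, eventName, since) {
  const { path, body } = buildSearchRequest(eventName, since);
  const res = await fetch(esUrl + path, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!res.ok) throw new Error("Elasticsearch returned HTTP " + res.status);
  const json = await res.json();
  // Each hit carries the original document in _source.
  return json.hits.hits.map((hit) => hit._source);
}
```

For example, `search("http://localhost:9200", "pageload", "now-1d")` would return the page-load events of the last day, provided the field names match your mapping.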

Distributed

-

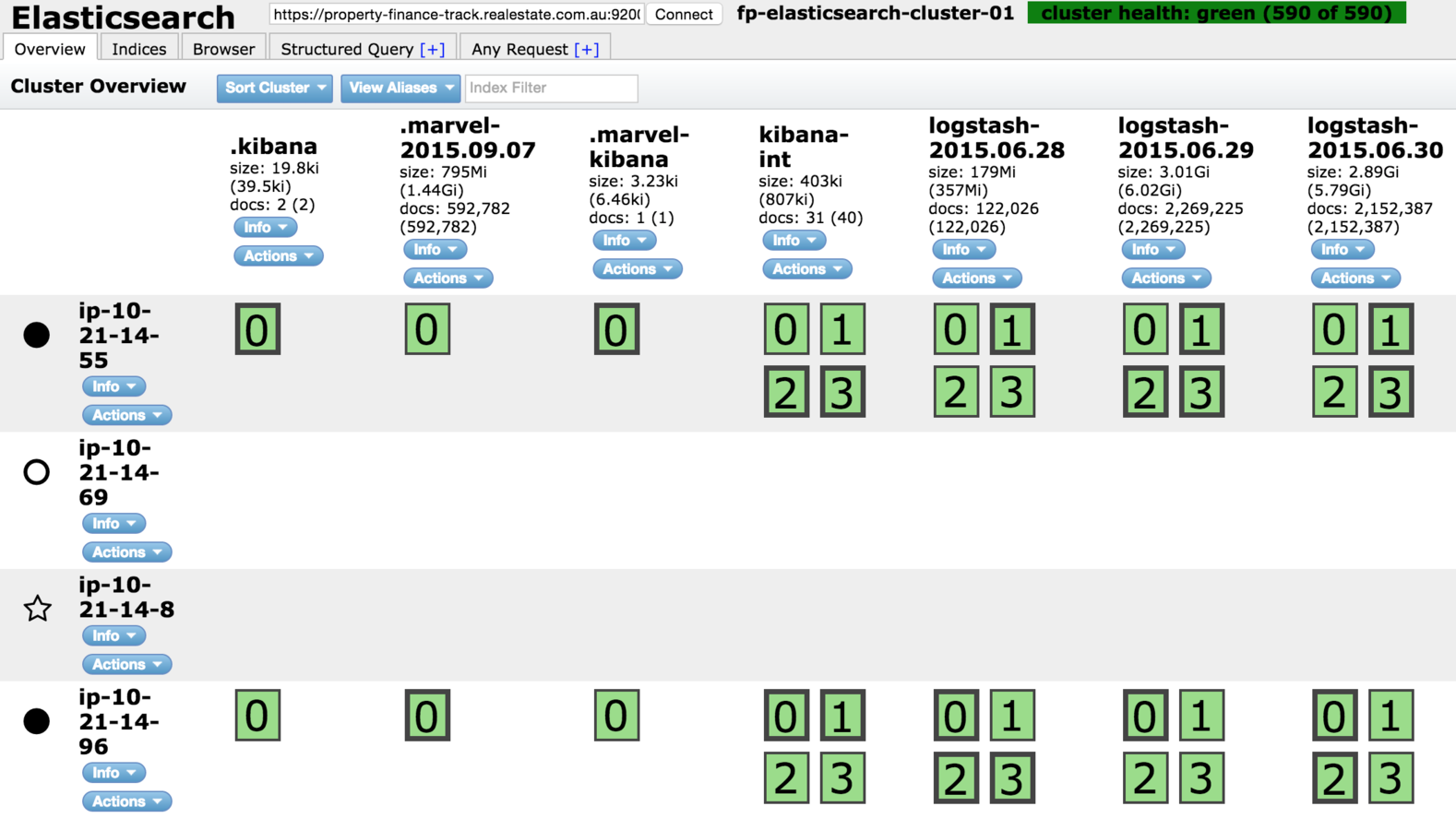

head

-

marvel

-

elasticsearch-cloud-aws

Plugins

head plugin

Visualize data from the ES server with search queries

Kibana

- Clean and simple UI

- Seamless integration with Elasticsearch

- Give shape to your data

- Instant sharing and embedding of dashboards

Deployment Process

- Deploy

- CloudFormation template

- Build pipeline

Deploy

We follow the MAD-walking-skeleton way, except that we do not bake an AMI.

Ansible runs from the AWS EC2 UserData script when the instance boots:

- Install awscli & ansible

- aws s3 sync s3://PROVISIONING_CODE_BUCKET/master/by-build/BUILD-NUMBER /root/provisioning

- ansible-playbook /root/provisioning/path/to/playbook-and-roles
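The three boot steps can be wired into a CloudFormation template as the instance's UserData. A minimal sketch that builds the `Fn::Base64` / `Fn::Join` value in JavaScript; the pip-based install, the `/root/provisioning` destination and the `BuildNumber` template parameter are assumptions, while `PROVISIONING_CODE_BUCKET` and the playbook path remain the deck's placeholders.

```javascript
// Sketch: the provisioning steps as a CloudFormation UserData value.
// Assumptions: pip exists on the AMI, the template declares a "BuildNumber"
// parameter, and provisioning code is synced to /root/provisioning.

function provisioningUserData() {
  const script = [
    "#!/bin/bash",
    "set -e",                     // stop at the first failing step
    "pip install awscli ansible", // assumption: pip is available on the AMI
    // A line may mix plain strings with CloudFormation references.
    ["aws s3 sync s3://PROVISIONING_CODE_BUCKET/master/by-build/", { Ref: "BuildNumber" }, " /root/provisioning"],
    "ansible-playbook /root/provisioning/path/to/playbook-and-roles",
  ];
  const parts = [];
  for (const line of script) {
    if (Array.isArray(line)) parts.push(...line);
    else parts.push(line);
    parts.push("\n");
  }
  // EC2 expects UserData base64-encoded; Fn::Join splices in the build number.
  return { "Fn::Base64": { "Fn::Join": ["", parts] } };
}
```

Baking nothing into the AMI keeps the image generic: each build only changes which provisioning bundle the instance syncs at boot.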

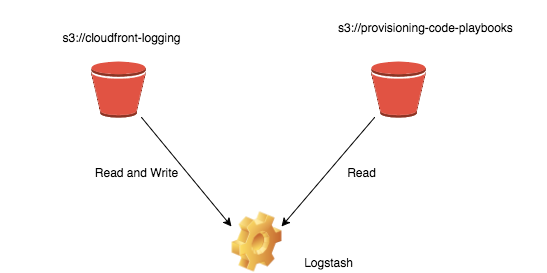

Logstash stack

- Needs to fetch data from two S3 buckets

- Sends processed data to ES via the private ES ELB

- Node-to-node communication (port 9300 by default)

- HTTP traffic (port 9200 by default)

- Public ELB for queries and Kibana

- Private ELB for Logstash to send data in

- Installs the head and marvel plugins during boot
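The port and ELB rules in this list translate into security-group resources in the stack template. A minimal sketch, written as CloudFormation resources built in JavaScript (`JSON.stringify` turns them into template JSON); the logical IDs `ElasticSearchSG`, `PublicElbSG` and `PrivateElbSG` are hypothetical, and the two ELB groups are assumed to be defined elsewhere in the template.

```javascript
// Sketch: Elasticsearch security-group rules as CloudFormation resources.
// Logical IDs are hypothetical; PublicElbSG and PrivateElbSG must exist elsewhere.

function elasticSearchSecurityResources(vpcId) {
  // Allow one TCP port from members of another security group.
  const fromGroup = (port, sourceLogicalId) => ({
    IpProtocol: "tcp",
    FromPort: port,
    ToPort: port,
    SourceSecurityGroupId: { "Fn::GetAtt": [sourceLogicalId, "GroupId"] },
  });

  return {
    ElasticSearchSG: {
      Type: "AWS::EC2::SecurityGroup",
      Properties: {
        GroupDescription: "Elasticsearch nodes",
        VpcId: vpcId,
        SecurityGroupIngress: [
          fromGroup(9200, "PublicElbSG"),  // queries and Kibana via the public ELB
          fromGroup(9200, "PrivateElbSG"), // Logstash indexing via the private ELB
        ],
      },
    },
    // Node-to-node traffic (9300) from the group to itself. This is a separate
    // resource because an inline self-reference creates a circular dependency.
    ElasticSearchNodeToNode: {
      Type: "AWS::EC2::SecurityGroupIngress",
      Properties: Object.assign(
        { GroupId: { "Fn::GetAtt": ["ElasticSearchSG", "GroupId"] } },
        fromGroup(9300, "ElasticSearchSG")
      ),
    },
  };
}
```

For example, `JSON.stringify({ Resources: elasticSearchSecurityResources("vpc-12345678") }, null, 2)` prints the fragment ready to merge into a template. Allowing traffic by source security group rather than by CIDR means new nodes and ELB instances are covered automatically.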

Stack template

Build pipeline

- Deploy CloudFront

- Deploy Logstash

- Deploy Elasticsearch

Troubleshooting

- What should I do if the disk is full?

- No data has reached Kibana since yesterday?

- Log data is not indexed correctly?
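For a full disk, one common remedy is to drop the oldest daily indices. A minimal sketch, assuming Logstash's default index naming (`logstash-YYYY.MM.dd`) and a retention period you choose; the helper names are hypothetical, and Elastic's Curator tool automates the same idea. The deletion step needs Node 18+ for the global `fetch`.

```javascript
// Sketch: pick daily Logstash indices older than the retention period and delete them.
// Assumption: indices follow the default "logstash-YYYY.MM.dd" naming.

function indicesToDelete(indexNames, retentionDays, today) {
  const dayMs = 24 * 60 * 60 * 1000;
  // Midnight UTC of the first day we keep.
  const cutoff =
    Date.UTC(today.getUTCFullYear(), today.getUTCMonth(), today.getUTCDate()) -
    retentionDays * dayMs;
  return indexNames.filter((name) => {
    const m = /^logstash-(\d{4})\.(\d{2})\.(\d{2})$/.exec(name);
    if (!m) return false; // never touch indices outside the pattern
    const day = Date.UTC(Number(m[1]), Number(m[2]) - 1, Number(m[3]));
    return day < cutoff;
  });
}

async function deleteIndices(esUrl, names) {
  for (const name of names) {
    // DELETE /<index> removes the index and its data from disk.
    const res = await fetch(`${esUrl}/${name}`, { method: "DELETE" });
    if (!res.ok) throw new Error(`Deleting ${name} failed: HTTP ${res.status}`);
  }
}
```

Selecting indices is kept separate from deleting them so the list can be reviewed (a dry run) before anything is removed.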

CloudFront

- Logging needs to be enabled

- A policy must allow clients to request the tracking image

- Do not redeploy the stack; otherwise the CloudFront endpoint will change and the elkt.js library will need to be updated
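Enabling logging is a property of the distribution itself. A minimal sketch of the CloudFormation `Logging` settings, built as a JavaScript object to go under `DistributionConfig` of an `AWS::CloudFront::Distribution`; the bucket name, prefix and helper names are hypothetical.

```javascript
// Sketch: CloudFront access logging in a CloudFormation distribution config.
// Bucket name and prefix are hypothetical placeholders.

function distributionLogging(bucketName, prefix) {
  return {
    Logging: {
      // CloudFront expects the bucket's domain name, not its bare name.
      Bucket: `${bucketName}.s3.amazonaws.com`,
      IncludeCookies: false, // assumption: cookies are not needed for event tracking
      Prefix: prefix,        // key prefix for the log files in the bucket
    },
  };
}

// Merge the logging settings into an existing DistributionConfig object.
function withLogging(distributionConfig, bucketName, prefix) {
  return Object.assign({}, distributionConfig, distributionLogging(bucketName, prefix));
}
```

The log bucket configured here is the one the Logstash `s3` input reads from.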