Web Scraping & Data Analysis with Python

J Rogel-Salazar

@quantum_tunel / @DT_science

Jun 2017

General Assembly London

Recap

Lists

Dictionaries

Homework

This workshop

these slides

Data on the web

Structured in databases

- structured and indexed

- hidden on the server-side of a web platform

- may be accessible via API (Application Programming Interface)

- different structure for every page

- available in your browser

- extractable by scraping

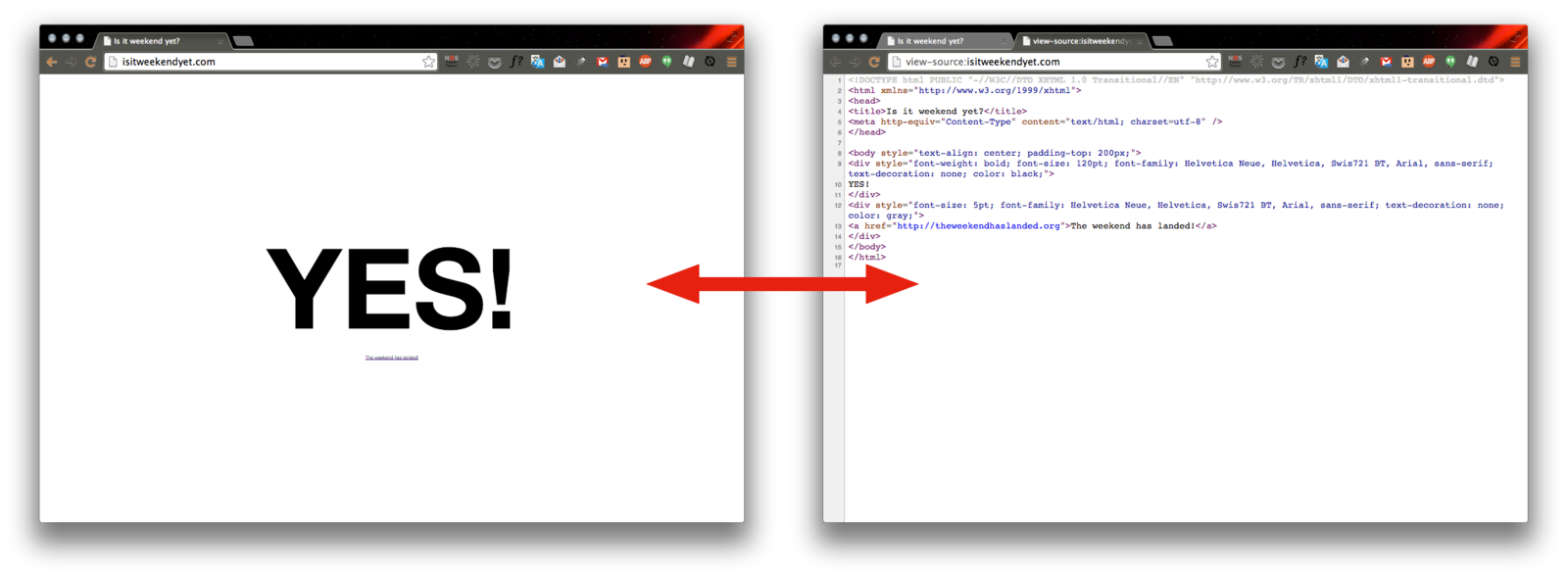

Web Scraping

Extracting data from a web page’s source

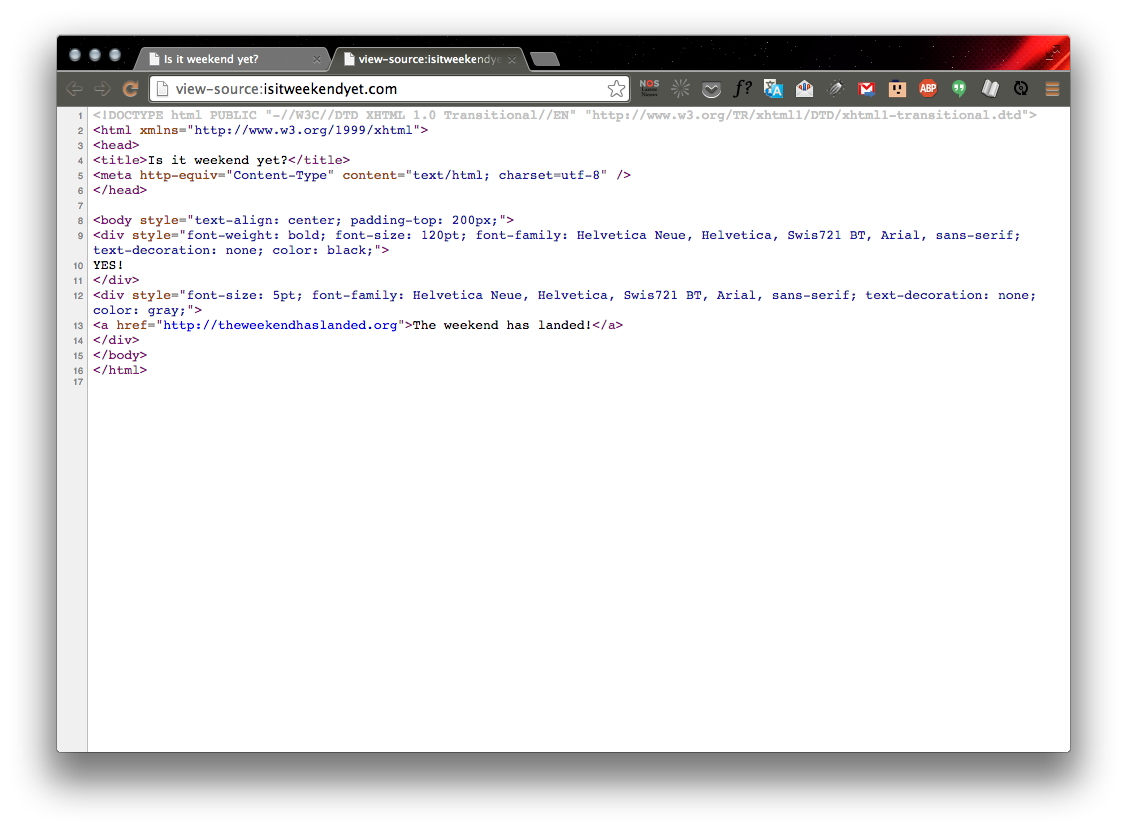

For example: http://isitweekendyet.com/

Pseudo code

- Read page HTML

- Parse raw HTML string into nicer format

- Extract what we’re looking for

- Process our extracted data

- Store/print/action based on data
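The five steps above can be sketched end to end. This is only an illustration under stated assumptions: the page is a hard-coded string (`PAGE_HTML`, made up to resemble the example site) rather than a real download, and the parsing uses the standard library's `html.parser` instead of Beautiful Soup, which is introduced later. The class and function names are hypothetical, and the parser does not handle nested `<div>` tags.

```python
from html.parser import HTMLParser

# Stand-in for step 1: a hard-coded page instead of a real download.
PAGE_HTML = """<html><head><title>Is it weekend yet?</title></head>
<body><div id="answer">
YES!
</div></body></html>"""


class FirstDivText(HTMLParser):
    """Collects the text found inside the first <div> of a page."""

    def __init__(self):
        super().__init__()
        self.inside_div = False
        self.done = False
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag == 'div' and not self.done:
            self.inside_div = True

    def handle_endtag(self, tag):
        if tag == 'div' and self.inside_div:
            self.inside_div = False
            self.done = True

    def handle_data(self, data):
        if self.inside_div:
            self.chunks.append(data)


def scrape_answer(page_html):
    parser = FirstDivText()          # 2. parse the raw HTML string
    parser.feed(page_html)
    raw = ''.join(parser.chunks)     # 3. extract what we are looking for
    return raw.strip()               # 4. process: drop surrounding whitespace


answer = scrape_answer(PAGE_HTML)
print('Is it weekend yet?', answer)  # 5. act on the data
```

Writing this by hand gets tedious quickly, which is the motivation for using a parsing library in the rest of the workshop.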

HTML?

HTML is a markup language for describing web documents.

A small HTML doc:

<!DOCTYPE html>

<html>

<head>

<title>Page Title</title>

</head>

<body>

<h1>My First Heading</h1>

<p>My first paragraph.</p>

</body>

</html>

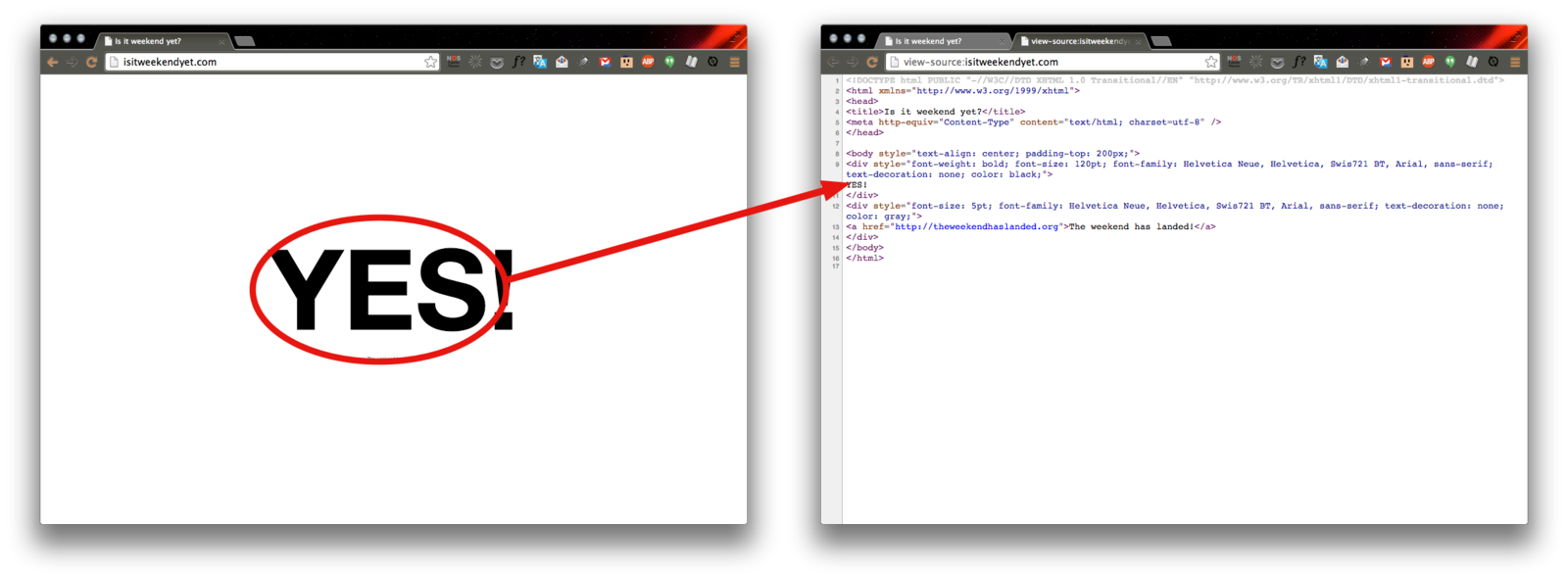

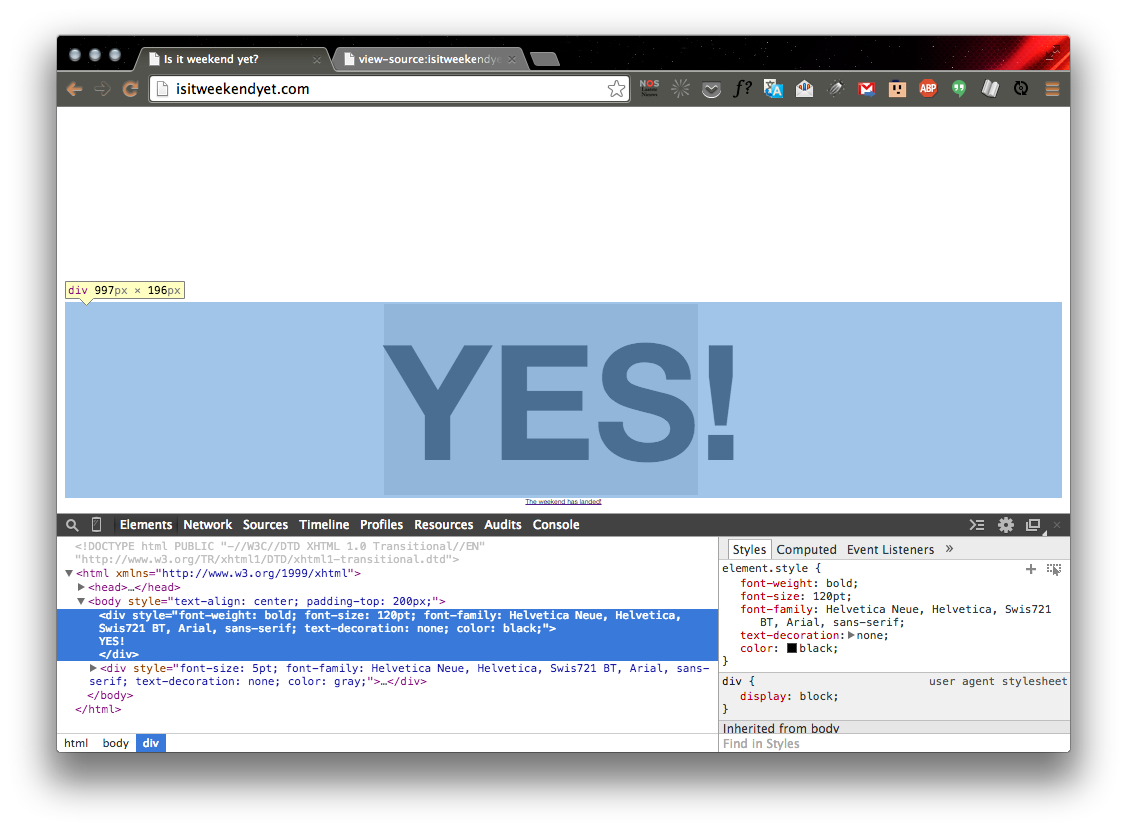

0. Determine what you're looking for & where it lives on the page

Pro Tip: use your browser's inspector

1. Read page HTML

# Python 3

from urllib.request import urlopen

url = 'http://isitweekendyet.com/'

pageSource = urlopen(url).read()

# Python 2

from urllib import urlopen

url = 'http://isitweekendyet.com/'

pageSource = urlopen(url).read()
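In Python 3, `urlopen(url).read()` returns bytes, and the request can fail if the site is down. A slightly more defensive version might look like the sketch below. The function name `fetch_html`, the 10 second timeout and the UTF-8 fallback are assumptions made for this example, not part of the workshop code.

```python
from urllib.request import urlopen
from urllib.error import URLError


def fetch_html(url, timeout=10):
    """Return the page source as text, or None if the request fails."""
    try:
        with urlopen(url, timeout=timeout) as response:
            raw = response.read()
            # Use the charset declared by the server, if there is one
            charset = response.headers.get_content_charset() or 'utf-8'
    except URLError as err:
        print('Could not fetch', url, '->', err.reason)
        return None
    return raw.decode(charset, errors='replace')
```

Usage would be `pageSource = fetch_html('http://isitweekendyet.com/')`, followed by a check that the result is not `None` before parsing.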

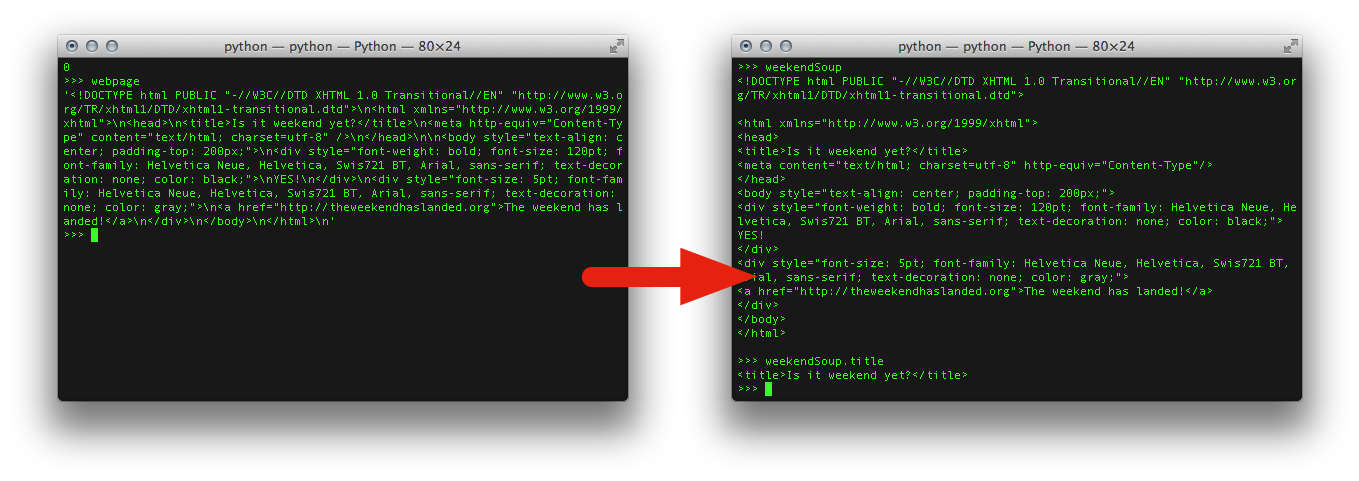

2. Parse from Page to soup

from bs4 import BeautifulSoup

weekendSoup = BeautifulSoup(pageSource, 'lxml')

Beautiful Soup

You didn't write that awful page.

You're just trying to get some data out of it.

Beautiful Soup is here to help.

Since 2004, it's been saving programmers hours or days of work on quick-turnaround screen scraping projects.

http://www.crummy.com/software/BeautifulSoup/

Powered by Beautiful Soup

Parse from Page to soup

>>> from bs4 import BeautifulSoup

>>> weekendSoup = BeautifulSoup(pageSource, 'lxml')

Now we have easy access to stuff like:

>>> weekendSoup.title

<title>Is it weekend yet?</title>

>>> weekendSoup.title.string

u'Is it weekend yet?'

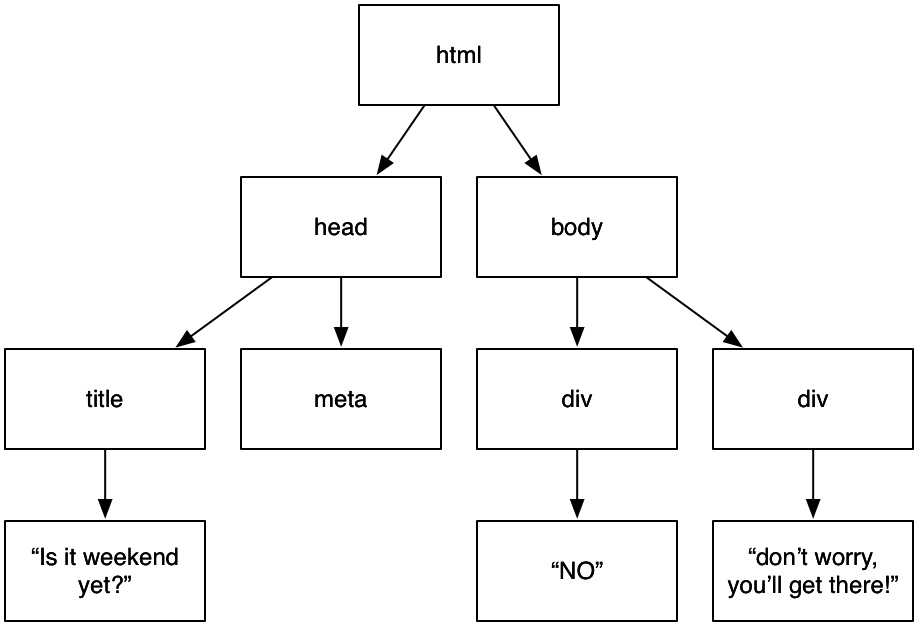

The Shape of HTML
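HTML is a tree: tags nest inside other tags. One way to see that shape is to walk the parsed document and indent each tag by its depth. The sketch below does this for the small HTML doc shown earlier. The helper `tag_outline` is a hypothetical name, and the built-in `'html.parser'` is used here instead of `'lxml'` only so that the example needs no extra install.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

SMALL_DOC = """<!DOCTYPE html>
<html>
<head>
<title>Page Title</title>
</head>
<body>
<h1>My First Heading</h1>
<p>My first paragraph.</p>
</body>
</html>"""


def tag_outline(node, depth=0):
    """Return one indented line per tag, in document order."""
    lines = []
    for child in node.children:
        if isinstance(child, Tag):      # skip strings and the doctype
            lines.append('  ' * depth + child.name)
            lines.extend(tag_outline(child, depth + 1))
    return lines


soup = BeautifulSoup(SMALL_DOC, 'html.parser')
outline = tag_outline(soup)
print('\n'.join(outline))
```

The printed outline shows `head` and `body` as children of `html`, with `title`, `h1` and `p` one level further down.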

Objects in Soup

Tag

>>> tag = weekendSoup.div

>>> tag

<div style="font-weight: bold; font-size: 120pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: black;">

YES!

</div>

>>> type(tag)

<class 'bs4.element.Tag'>

String

>>> tag.string

u'\nYES!\n'

>>> type(tag.string)

<class 'bs4.element.NavigableString'>

Beautiful Soup

>>> type(weekendSoup)

<class 'bs4.BeautifulSoup'>

>>> weekendSoup.name

u'[document]'

Comment

>>> markup = "<b><!--This is a very special message--></b>"

>>> cSoup = BeautifulSoup(markup, 'lxml')

>>> comment = cSoup.b.string

>>> type(comment)

<class 'bs4.element.Comment'>

>>> print(cSoup.b.prettify())

<b>

<!--This is a very special message-->

</b>
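One practical consequence of these object types: `Comment` is a subclass of `NavigableString`, so `.string` can hand back a comment where visible text was expected. The sketch below shows one way to guard against that. The markup and the helper `visible_text` are made up for this example, and `'html.parser'` is used so no extra parser is needed.

```python
from bs4 import BeautifulSoup
from bs4.element import Comment, NavigableString, Tag

markup = '<div><b><!--hidden note--></b><i>visible text</i></div>'
soup = BeautifulSoup(markup, 'html.parser')

bold_string = soup.b.string      # a Comment
italic_string = soup.i.string    # a plain NavigableString


def visible_text(node):
    """Return node.string unless it is missing or a comment."""
    text = node.string
    if text is None or isinstance(text, Comment):
        return ''
    return str(text)


print(type(bold_string).__name__, type(italic_string).__name__)
print(repr(visible_text(soup.b)), repr(visible_text(soup.i)))
```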

3. Extract what we’re looking for

Navigating the parsed HTML tree

Search functions

Bonus: CSS Selectors

Navigating the Soup Tree

.strings & .stripped_strings

.contents & .children

.descendants

.parent & .parents

.next_sibling(s)

.previous_sibling(s)

Going Down the Tree

>>> bodyTag = weekendSoup.body

>>> bodyTag.contents

[u'\n', <div class="answer text" id="answer" style="font-weight: bold; font-size: 120pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: black;">

YES!

</div>, u'\n', <div style="font-size: 5pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: gray;">

<a href="http://theweekendhaslanded.org">The weekend has landed!</a>

</div>, u'\n']

<div class="answer text" id="answer" style="font-weight: bold; font-size: 120pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: black;">

YES!

</div>

<div style="font-size: 5pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: gray;">

<a href="http://theweekendhaslanded.org">The weekend has landed!</a>

</div>

Going Down the Tree

.strings & .stripped_strings

>>> weekendSoup.div.string

u'\nYES!\n'

>>> for ss in weekendSoup.div.stripped_strings:

...     print(ss)

...

YES!

Going Down the Tree

>>> for d in bodyTag.descendants:

...     print(d)

...

<div class="answer text" id="answer" style="font-weight: bold; font-size: 120pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: black;">

YES!

</div>

YES!

<div style="font-size: 5pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: gray;">

<a href="http://theweekendhaslanded.org">The weekend has landed!</a>

</div>

<a href="http://theweekendhaslanded.org">The weekend has landed!</a>

The weekend has landed!

Going Up the Tree

>>> weekendSoup.a.parent.name

u'div'

>>> for p in weekendSoup.a.parents:

...     print(p.name)

...

div

body

html

[document]

Going Sideways

>>> weekendSoup.div.next_sibling

u'\n'

>>> weekendSoup.div.next_sibling.next_sibling

<div style="font-size: 5pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: gray;">

<a href="http://theweekendhaslanded.org">The weekend has landed!</a>

</div>
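The three directions can be tried without a network connection by parsing a local copy of the page. In the sketch below, `PAGE` is a simplified stand-in for the real page source (the style attributes are left out), and `'html.parser'` replaces `'lxml'` purely to avoid an extra dependency. Note that `find_next_sibling('div')` skips the whitespace strings that `.next_sibling` returns first.

```python
from bs4 import BeautifulSoup

PAGE = """<html><head><title>Is it weekend yet?</title></head>
<body>
<div id="answer">
YES!
</div>
<div>
<a href="http://theweekendhaslanded.org">The weekend has landed!</a>
</div>
</body></html>"""

soup = BeautifulSoup(PAGE, 'html.parser')

# Down: all text below <body>, with whitespace-only strings dropped
body_text = list(soup.body.stripped_strings)

# Up: the name of every ancestor of the link, innermost first
ancestors = [parent.name for parent in soup.a.parents]

# Sideways: jump straight to the next <div> on the same level
second_div = soup.div.find_next_sibling('div')

print(body_text)
print(ancestors)
print(second_div.a['href'])
```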

Exercise

Write a script:

Use Beautiful Soup to navigate to the answer to our question:

Is it weekend yet?

'''A simple script that tells us if it's weekend yet'''

# import modules

from urllib.request import urlopen

from bs4 import BeautifulSoup

# open webpage

# parse HTML into Beautiful Soup

# extract data from parsed soup

Searching the Soup-Tree

Using a filter in a search function

to zoom into a part of the soup

String Filter

most simple matching

>>> weekendSoup.find_all('div')

[<div style="font-weight: bold; font-size: 120pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: black;">

NO

</div>, <div style="font-size: 5pt; font-family: Helvetica Neue, Helvetica, Swis721 BT, Arial, sans-serif; text-decoration: none; color: gray;">

don't worry, you'll get there!

</div>]

Bonus: more filters

besides using a String as argument in a search function, you can also use:

Regular Expression

List

True

Function

more details: http://www.crummy.com/software/BeautifulSoup/bs4/doc/#kinds-of-filters

Regular Expression Filter

>>> import re

>>> for tag in weekendSoup.find_all(re.compile("^b")):

... print(tag.name)

...

body

List Filter

>>> weekendSoup.find_all(["a", "li"])

[<a href="http://theweekendhaslanded.org">The weekend has landed!</a>]

True Filter

>>> for tag in weekendSoup.find_all(True):

... print(tag.name)

...

html

head

title

meta

body

div

div

a

Function Filter

>>> def has_class_but_no_id(tag):

...     return tag.has_attr('class') and not tag.has_attr('id')

...

>>> weekendSoup.find_all(has_class_but_no_id)

[]

Search Functions

find_all()

find()

Using find_all()

find_all( name of tag )

find_all( attribute filter )

find_all( name of tag, attribute filter)

searching by attribute filters

careful to use "class_" when filtering based on class name

urlGA = 'https://gallery.generalassemb.ly/'

pageSourceGA = urlopen(urlGA).read()

GASoup = BeautifulSoup(pageSourceGA, 'lxml')

wdiLinks = GASoup.find_all('a', href='WD')

projects = GASoup.find_all('li', class_='project')
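Attribute filters are easier to experiment with on a small local snippet. The markup below is invented for this example and is not the real gallery page. It illustrates two behaviours worth knowing: `class_` matches any one of an element's classes, while a plain string passed as `href` has to equal the whole attribute value, so a "contains" match needs a regular expression.

```python
import re
from bs4 import BeautifulSoup

# Hypothetical markup, invented for this example
GALLERY = """<ul>
<li class="project featured"><a href="/projects/WD-101">Portfolio site</a></li>
<li class="project"><a href="/projects/DS-7">Churn model</a></li>
<li class="sponsor"><a href="/about">About</a></li>
</ul>"""

soup = BeautifulSoup(GALLERY, 'html.parser')

# class_ matches any one of an element's classes
projects = soup.find_all('li', class_='project')

# A string filter must equal the whole attribute value ...
exact = soup.find_all('a', href='WD')

# ... so use a compiled regular expression for "href contains WD"
wd_links = soup.find_all('a', href=re.compile('WD'))

print(len(projects), len(exact), [a.string for a in wd_links])
```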

find()

>>> soup.find_all('title', limit=1)

[<title> Monty Python's reunion is about nostalgia and heroes, not comedy | Stage | theguardian.com </title>]

>>> soup.find('title')

<title> Monty Python's reunion is about nostalgia and heroes, not comedy | Stage | theguardian.com </title>

Search All the directions!

Bonus: using CSS Selectors

soup.select("#content")

soup.select("div#content")

soup.select(".byline")

soup.select("li.byline")

soup.select("#content a")

soup.select("#content > a")

soup.select('a[href]')

soup.select('a[href="http://www.theguardian.com/profile/brianlogan"]')

soup.select('a[href^="http://www.theguardian.com/"]')

soup.select('a[href$="info"]')

[<a class="link-text" href="http://www.theguardian.com/info">About us,</a>, <a class="link-text" href="http://www.theguardian.com/info">About us</a>]
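The selector forms used here can be compared side by side on a small snippet. The markup and the helper `hrefs` are made up for this illustration; only `select()` itself comes from Beautiful Soup.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, invented for this example
ARTICLE = """<div id="content">
<a href="http://example.com/info">About</a>
<ul>
<li class="byline"><a href="http://example.com/profile/jane">Jane</a></li>
<li><a href="https://other.example.org/contact">Contact</a></li>
</ul>
</div>"""

soup = BeautifulSoup(ARTICLE, 'html.parser')


def hrefs(selector):
    """Return the href of every link matched by a CSS selector."""
    return [a['href'] for a in soup.select(selector)]


descendants = hrefs('#content a')     # links at any depth below #content
children = hrefs('#content > a')      # direct children of #content only
byline = hrefs('li.byline a')         # links inside <li class="byline">
starts = hrefs('a[href^="http://example.com/"]')   # href starts with
ends = hrefs('a[href$="info"]')                    # href ends with
contains = hrefs('a[href*="contact"]')             # href contains

print(len(descendants), children, byline, ends, contains)
```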

>>> guardianSoup.select('a[href*=".com/contact"]')

[<a class="rollover contact-link" href="http://www.theguardian.com/contactus/2120188" title="Displays contact data for guardian.co.uk"><img alt="" class="trail-icon" src="http://static.guim.co.uk/static/ac46d0fc9b2bab67a9a8a8dd51cd8efdbc836fbf/common/images/icon-email-us.png"/><span>Contact us</span></a>]

4. Process extracted data

We generally want to:

clean up

calculate

process

Cleaning up + processing

>>> answer = soup.div.string

>>> answer

'\nNO\n'

>>> cleaned = answer.strip()

>>> cleaned

'NO'

>>> isWeekendYet = cleaned == 'YES'

>>> isWeekendYet

False

many useful string methods: https://docs.python.org/3/library/stdtypes.html#string-methods
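The page answers 'NO' on weekdays but 'YES!' (with an exclamation mark) at the weekend, as seen in the earlier output, so comparing against 'YES' alone would miss it. A slightly more forgiving clean-up could look like this sketch. The function name `is_weekend` is hypothetical.

```python
def is_weekend(raw_answer):
    """Turn the scraped text into a boolean."""
    # strip whitespace, drop a trailing '!', ignore upper/lower case
    cleaned = raw_answer.strip().rstrip('!').upper()
    return cleaned == 'YES'


print(is_weekend('\nYES!\n'))
print(is_weekend('\nNO\n'))
```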

5. Do stuff with our extracted data

# print info to screen

print('Is it weekend yet? ', isWeekendYet)

or save to .csv file

import csv

with open('weekend.csv', 'w', newline='') as csvfile:

    weekendWriter = csv.writer(csvfile)

    if isWeekendYet:

        weekendWriter.writerow(['Yes'])

    else:

        weekendWriter.writerow(['No'])

https://docs.python.org/3.3/library/csv.html
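A CSV file becomes more useful with a header row and more than one record. The sketch below writes several dated answers. The function name, the column names and the dates are assumptions made for illustration, and an in-memory buffer stands in for the file so that nothing is written to disk.

```python
import csv
import io


def write_answers(csvfile, answers):
    """Write (date, is_weekend) pairs as CSV rows under a header."""
    writer = csv.writer(csvfile)
    writer.writerow(['date', 'weekend'])
    for day, is_weekend_yet in answers:
        writer.writerow([day, 'Yes' if is_weekend_yet else 'No'])


# An in-memory buffer stands in for open('weekend.csv', 'w', newline='')
buffer = io.StringIO()
write_answers(buffer, [('2017-06-16', False), ('2017-06-17', True)])
csv_text = buffer.getvalue()
print(csv_text)
```

To write a real file, pass the object returned by `open('weekend.csv', 'w', newline='')` instead of the buffer.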

Mini Project

1. As before, open the website (https://en.wikipedia.org/wiki/Iris_flower_data_set) and load it into an object called "IrisSoup"

2. Check which class the table has in the page and use find() to get the table

3. Iterate through each row (tr), assign the text of each element (using get_text() and strip()) to a variable, and append it to a list

4. Make a list of lists with the output of step 3 above

- NOTE: Consider using a nested list comprehension to do steps 3 and 4 in one go!

- What would be useful to do with the headers (th)?
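As a hint for the nested list comprehension, here is the pattern on plain Python lists, with no scraping involved. The cell values are made up to look like what `get_text()` might return. Pairing each row with the headers is one possible answer to the last question, not the only one.

```python
# Made-up raw cell text for three table rows
raw_rows = [
    ['Sepal length\n', 'Species\n'],      # header cells (th)
    ['5.1\n', 'I.\xa0setosa\n'],          # data cells (td)
    ['7.0\n', 'I.\xa0versicolor\n'],
]

# Steps 3 and 4 in one go: the inner loop cleans each cell of a row,
# the outer loop repeats that for every row
cleaned = [[cell.strip().replace('\xa0', ' ') for cell in row]
           for row in raw_rows]

# One use for the headers: label every value in every row
headers, data = cleaned[0], cleaned[1:]
records = [dict(zip(headers, row)) for row in data]

print(records)
```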

Let us analyse the iris dataset

Questions?

Useful Resources

google; “python” + your problem / question

python.org/doc/; official python documentation, useful to find which functions are available

stackoverflow.com; huge gamified help forum with discussions on all sorts of programming questions, answers are ranked by community

codecademy.com/tracks/python; interactive exercises that teach you coding by doing

wiki.python.org/moin/BeginnersGuide/Programmers; tools, lessons and tutorials

Useful Modules etc

2 vs 3

Python Usage Survey 2014 visualised

http://www.randalolson.com/2015/01/30/python-usage-survey-2014/

Python 2 & 3 Key Differences

Thank you

Extracting Iris Dataset

'''

Extracting the Iris dataset table from Wikipedia

'''

# import modules

from urllib.request import urlopen

from bs4 import BeautifulSoup

# open webpage

url = 'https://en.wikipedia.org/wiki/Iris_flower_data_set'

pageSource = urlopen(url).read()

# parse HTML into Beautiful Soup

IrisSoup = BeautifulSoup(pageSource, 'lxml')

# Get the table

right_table = IrisSoup.find('table', class_='wikitable sortable')

# Extract rows

tmp = right_table.find_all('tr')

first = tmp[0]

allRows = tmp[1:]

# Construct headers

headers = [header.get_text().strip() for header in first.find_all('th')]

# Construct results

results = [[data.get_text().strip() for data in row.find_all('td')]

           for row in allRows]

Creating a pandas dataframe

#import pandas to convert list to data frame

import pandas as pd

df = pd.DataFrame(data=results,

                  columns=headers)

df['Species'] = df['Species'].map(lambda x: x.replace('\xa0',' '))
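Everything scraped from HTML arrives as text, so the measurement columns need converting before pandas can compute on them. A minimal sketch, using three made-up rows in place of the scraped `results` and only two of the measurement columns:

```python
import pandas as pd

# Made-up rows in the shape the scraper returns: every cell is a string
headers = ['Sepal length', 'Petal length', 'Species']
results = [['5.1', '1.4', 'I. setosa'],
           ['7.0', '4.7', 'I. versicolor'],
           ['6.3', '6.0', 'I. virginica']]

df = pd.DataFrame(data=results, columns=headers)

# Convert the measurement columns from strings to numbers
for column in ['Sepal length', 'Petal length']:
    df[column] = pd.to_numeric(df[column])

mean_petal = df['Petal length'].mean()
print(df.dtypes)
print(mean_petal)
```

After the conversion, methods such as `describe()` and `mean()` treat these columns as numeric.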

Pandas Analysis

%pylab inline

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

iris_data = pd.read_csv('irisdata.csv')

iris_data.head()

iris_data.shape

iris_data[0:10][['Sepal width', 'Sepal length']]

# Summarise the data

iris_data.describe()

# Now let's group the data by the species

byspecies = iris_data.groupby('Species')

byspecies.describe()

byspecies['Petal length'].mean()

# Histograms

iris_data.loc[iris_data['Species'] == 'I. setosa', 'Sepal width'].hist(bins=10)

iris_data['Sepal width'].plot(kind="hist")

Navigating to the weekend yet answer

>>> from urllib.request import urlopen

>>> from bs4 import BeautifulSoup

>>> url = "http://isitweekendyet.com/"

>>> source = urlopen(url).read()

>>> soup = BeautifulSoup(source, 'lxml')

>>> soup.body.div.string

'\nNO\n'

# an alternative:

>>> list(soup.body.stripped_strings)[0]

'NO'