Frontend Components powered by Deep Learning

Workflow

- Deep Learning Model
  - Find or generate dataset
  - Preprocessing
  - Train/test split (optional)
  - Define model
  - Train model
  - Test model (optional)
  - Save and convert model
- Frontend Component
  - Set up project
  - Load and wrap model
  - Preprocess user input
  - Predict
  - Process prediction and display

Deep Learning Model

Step 1: Dataset

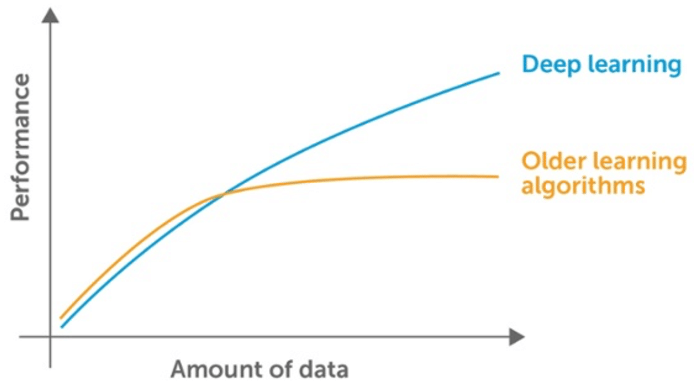

Machine learning models learn from data. In deep learning, performance generally improves as the amount of good-quality training data grows.

Step 2: Preprocessing

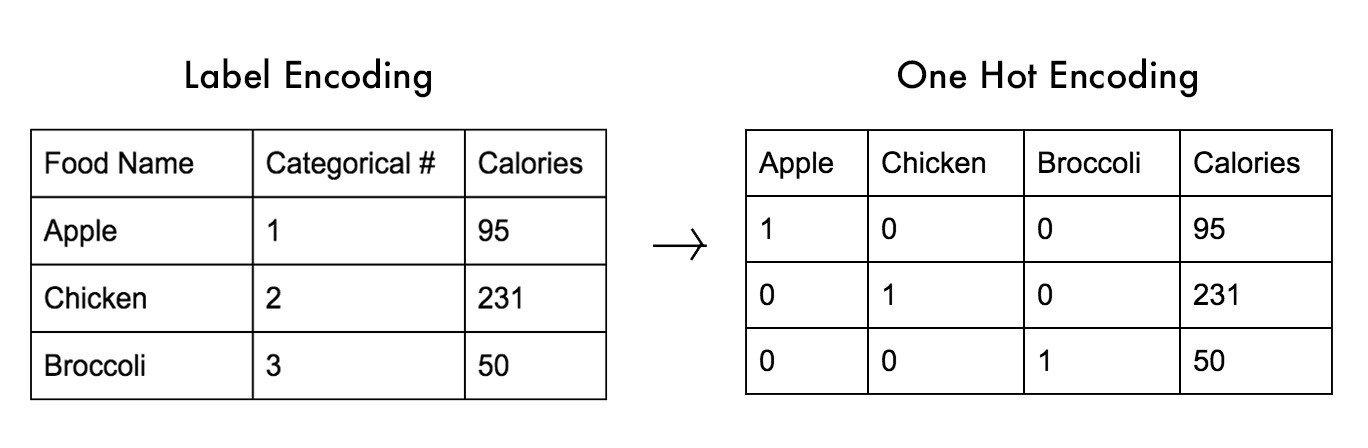

We need to transform the input data into a form the algorithm can work with, typically numbers.
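
As a rough illustration of such a transformation, the sketch below maps each character of a string to an integer index and pads the result to a fixed length. It is written in JavaScript only to match the frontend code later on; `buildVocab`, `encode`, the `<pad>`/`<unk>` tokens, and the sample strings are hypothetical and not taken from the original project.

```javascript
// Hypothetical helpers: build a character vocabulary from sample strings
// and encode a string as a fixed-length array of integer indices.
const PAD = '<pad>'
const UNK = '<unk>'

function buildVocab(samples) {
  // Index 0 is reserved for padding, index 1 for unknown characters
  const vocab = new Map([[PAD, 0], [UNK, 1]])
  for (const sample of samples) {
    for (const ch of sample) {
      if (!vocab.has(ch)) vocab.set(ch, vocab.size)
    }
  }
  return vocab
}

function encode(text, vocab, maxLength) {
  // Truncate to maxLength and replace unseen characters with the UNK index
  const indices = Array.from(text.slice(0, maxLength), ch =>
    vocab.has(ch) ? vocab.get(ch) : vocab.get(UNK)
  )
  // Pad short inputs so every encoded sample has the same length
  while (indices.length < maxLength) indices.push(vocab.get(PAD))
  return indices
}

const vocab = buildVocab(['3 may 1979', '5 april 09'])
const encoded = encode('9 may', vocab, 8)
// encoded is [8, 3, 4, 5, 6, 0, 0, 0]
```

Whatever encoding is chosen, the same vocabulary has to be reused at prediction time, otherwise the indices no longer mean what the model learned.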

Step 3: Train/Test split

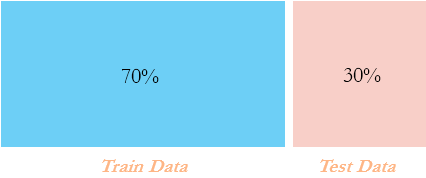

The idea is to evaluate the model on data it has never seen during training.
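
A minimal sketch of such a split, assuming the dataset fits in memory as an array: shuffle a copy, then set aside a fraction for testing. The `trainTestSplit` name, the default ratio of 0.2, and the injectable `random` parameter are illustrative choices, not part of the original workflow.

```javascript
// Shuffle a copy of the samples (Fisher-Yates), then cut it into two parts.
function trainTestSplit(samples, testRatio = 0.2, random = Math.random) {
  const shuffled = samples.slice()
  for (let i = shuffled.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1))
    const tmp = shuffled[i]
    shuffled[i] = shuffled[j]
    shuffled[j] = tmp
  }
  const testSize = Math.round(shuffled.length * testRatio)
  return {
    test: shuffled.slice(0, testSize),
    train: shuffled.slice(testSize)
  }
}

const split = trainTestSplit([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 0.2)
// split.train holds 8 samples, split.test holds the remaining 2
```

Shuffling before cutting matters when the dataset is ordered, for example by date or by class.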

Step 4: Model

Step 5: Training

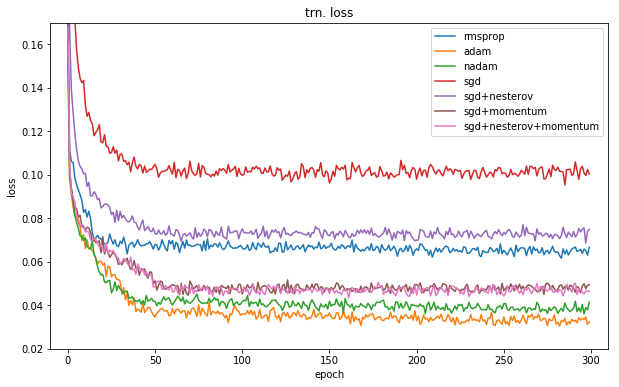

Configure the hyperparameters, then fit the model on the training set and its target labels.

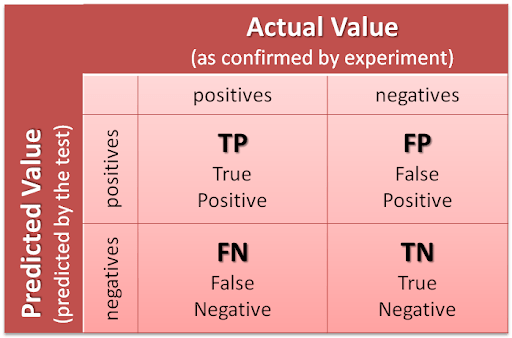

Step 6: Test

Test and evaluate the model on the test set using the chosen metrics.
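
The slides do not say which metrics were used. As one common possibility, the sketch below computes exact-match accuracy: the share of predictions that equal the expected label. The `accuracy` function and the sample values are hypothetical.

```javascript
// Fraction of predictions that exactly match the expected labels.
function accuracy(predicted, expected) {
  if (predicted.length !== expected.length) {
    throw new Error('predicted and expected must have the same length')
  }
  if (predicted.length === 0) return 0
  let correct = 0
  for (let i = 0; i < predicted.length; i++) {
    if (predicted[i] === expected[i]) correct++
  }
  return correct / predicted.length
}

const score = accuracy(
  ['1979-05-03', '2009-04-05', '2001-01-01'],
  ['1979-05-03', '2009-04-05', '2001-01-10']
)
// Two of three predictions match, so score is 2/3
```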

Step 7: Save and convert Model

Once we're happy with the results, we save the model and convert it to a format that JavaScript can consume.


Frontend Component

Step 1: Setup

Set up the project. The most important step is installing TensorFlow.js:

    $> npm install @tensorflow/tfjs

TensorFlow.js is a JavaScript library for training and deploying ML models in the browser and on Node.js.

Step 2: Load Model

Our component needs to import TensorFlow.js and load the converted model:

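    import * as tf from '@tensorflow/tfjs'

    //...

    // Load the converted model, signalling the loading state before and after
    async function loadModel(path) {
      postMessage({ loading: true })
      // tf.loadModel was renamed to tf.loadLayersModel in TensorFlow.js 1.0
      const model = await tf.loadLayersModel(path)
      postMessage({ loading: false })
      return model
    }

Step 3: Preprocess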

We apply the same preprocessing we used during model training. Luckily, TensorFlow.js provides a nice API to help with this.
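
To make the shapes in the TensorFlow.js snippet that follows more concrete, here is a plain-JavaScript sketch of what one-hot encoding and adding a batch dimension produce. `oneHot` and `addBatchDimension` are hypothetical stand-ins written for illustration, not the library's implementation, and the sketch assumes a batch of one sample.

```javascript
// Turn each integer index into a row with a single 1 at that position.
function oneHot(indices, numClasses) {
  return indices.map(index => {
    const row = new Array(numClasses).fill(0)
    // In this sketch, out-of-range indices are left as all zeros
    if (index >= 0 && index < numClasses) row[index] = 1
    return row
  })
}

// Wrap one encoded sample so the shape becomes [1, sequenceLength, numClasses].
function addBatchDimension(matrix) {
  return [matrix]
}

const encodedInput = oneHot([2, 0, 1], 3)
// encodedInput is [[0, 0, 1], [1, 0, 0], [0, 1, 0]]
const batch = addBatchDimension(encodedInput)
// batch has shape [1, 3, 3]
```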

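    //...

    new Promise(resolve => {
      // Convert the input string to an array of integer indices
      const source = str2int(value)
      // One-hot encode the indices: shape [sequenceLength, numClasses]
      const onehotSource = tf.oneHot(tf.tensor1d(source, 'int32'), numClasses)
      // Prepend the batch dimension: shape [numSamples, sequenceLength, numClasses]
      const reshapedSource = onehotSource.reshape([numSamples].concat(onehotSource.shape))
      //...
    })

Step 4: Predict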

The common principle underlying all supervised machine learning algorithms is that they learn a mapping from input to output.

Step 5: Process and Display

In most cases, the output of the prediction needs to be converted back into a format we can easily display.

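    //...

    const prediction = model.predict(/*...*/)

    // prediction is expected to be an array of tensors, one per output position;
    // take the most likely index of each and map it back to a character
    const date = prediction.reduce((acc, pred) => {
      const pIdx = pred
        .reshape([lenMachineVocab])
        .argMax()
        .dataSync()[0] // .get() was removed in TensorFlow.js 1.0
      return acc + invMachineVocab[pIdx]
    }, '')

    //...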
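
The decoding step above can be sketched without tensors, assuming the prediction has already been read into plain arrays of scores, one array per output position. `argMax`, `decodePrediction`, and the tiny `invVocab` are hypothetical and only mirror the reshape, argMax, and lookup chain.

```javascript
// Index of the largest value in an array of scores.
function argMax(values) {
  let best = 0
  for (let i = 1; i < values.length; i++) {
    if (values[i] > values[best]) best = i
  }
  return best
}

// Pick the most likely index at every position and map it to its character.
function decodePrediction(stepScores, invVocab) {
  return stepScores.reduce((acc, scores) => acc + invVocab[argMax(scores)], '')
}

const invVocab = ['-', '0', '1', '9']
const decoded = decodePrediction(
  [
    [0.1, 0.1, 0.7, 0.1],
    [0.0, 0.1, 0.1, 0.8],
    [0.9, 0.05, 0.03, 0.02]
  ],
  invVocab
)
// decoded is '19-'
```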