Scaling Django To 1 Million Users

(or at least trying to get close)

A bit about me...

- Lead Infrastructure Engineer @iStrategylabs (here)

- Python developer

- Budding Linux Kernel Contributor

- Hobbyist Operating Systems tinkerer

- @JulianGindi

- julian@isl.co

A Quick Poll

tally.tl/3Yv7Y

But first...

The Contract

- You will leave with a better understanding of how to build Django projects with scaling in mind

- You will learn some fundamental "rules" of building a modern, scalable infrastructure

- You will learn about a few tools that you can potentially bring into your stack that will help improve performance

Roadmap

- Concepts/Philosophy

- Django

- Server

- Cluster

Meet Rhino, Our Trusty Scaling Companion

Concepts

12 Factor App

- 12factor.net

- Developed by Heroku. It's how they encourage you to build apps that are "Heroku-ready"

- Great resource on how to build modern, scalable applications

Quick Summary

- Codebase: One codebase tracked in VC, many deploys

- Dependencies: Explicitly declare and isolate dependencies

- Config: Store config in the environment

- Backing Services: Treat backing services as attached resources

- Build, release, run: Strictly separate build and run stages

- Processes: Run app as one or more stateless processes

- Port binding: Export services via port binding

- Concurrency: Scale out via the process model

- Disposability: Fast startup, graceful shutdown

- Dev/prod parity: Keep dev, staging, and prod as similar as possible
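To make the "Config" factor concrete, here is a minimal sketch of reading settings from the environment instead of hard-coding them. The variable names (`DJANGO_DEBUG`, `DATABASE_URL`, `CACHE_URL`) and their defaults are assumptions for illustration, not something the talk prescribes.

```python
import os


def load_settings(environ=os.environ):
    """Build a settings dict from environment variables.

    The variable names and defaults are hypothetical examples.
    """
    return {
        # Anything other than "true" (case-insensitive) leaves debug off
        "DEBUG": environ.get("DJANGO_DEBUG", "false").lower() == "true",
        # Backing services are referenced by URL, so they can be swapped per deploy
        "DATABASE_URL": environ.get("DATABASE_URL", "sqlite:///local.db"),
        "CACHE_URL": environ.get("CACHE_URL", "locmem://"),
    }
```

The same code then runs unchanged in dev, staging, and prod; only the environment differs.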

Example Scenario

- Single Server

- Save images to local filesystem (think old school Django)

- You get hit with a ton of traffic. What do you do?

Lesson: Stateless Servers

Don't even think about storing stuff on your server!

Why?

- Vertical scaling is not a long-term solution

- Good for resource modifications

- Redundancy: Do you really only want one server?

- State is your worst enemy

- Kill all the servers!

- Freedom

- Containers (future)

The Cluster is your friend

Services are your friends

- Unix Philosophy

- Small, sharp tools

- All additional components should be treated as "attached" resources

- Distribute and schedule services based on availability and resource need

The Application

First line of defense for optimizations

Optimizing Django: Basics

- Beware of premature optimization

- Data-driven optimizations

- 80/20 rule

- Think about scale early on

- "Brute force is usually the most performant option when 'n' is small"
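"Data-driven" means measuring before changing anything. A minimal sketch using the standard library's `cProfile`; `slow_sum` is a made-up stand-in for whatever code path you suspect is slow.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Hypothetical stand-in for a suspect code path."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_call(func, *args):
    """Run func under the profiler and return (result, text report)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Show the five entries with the highest cumulative time
    stats.sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()
```

Calling `profile_call(slow_sum, 100000)` returns the function's result plus a report you can read to decide where the 20% worth optimizing actually is.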

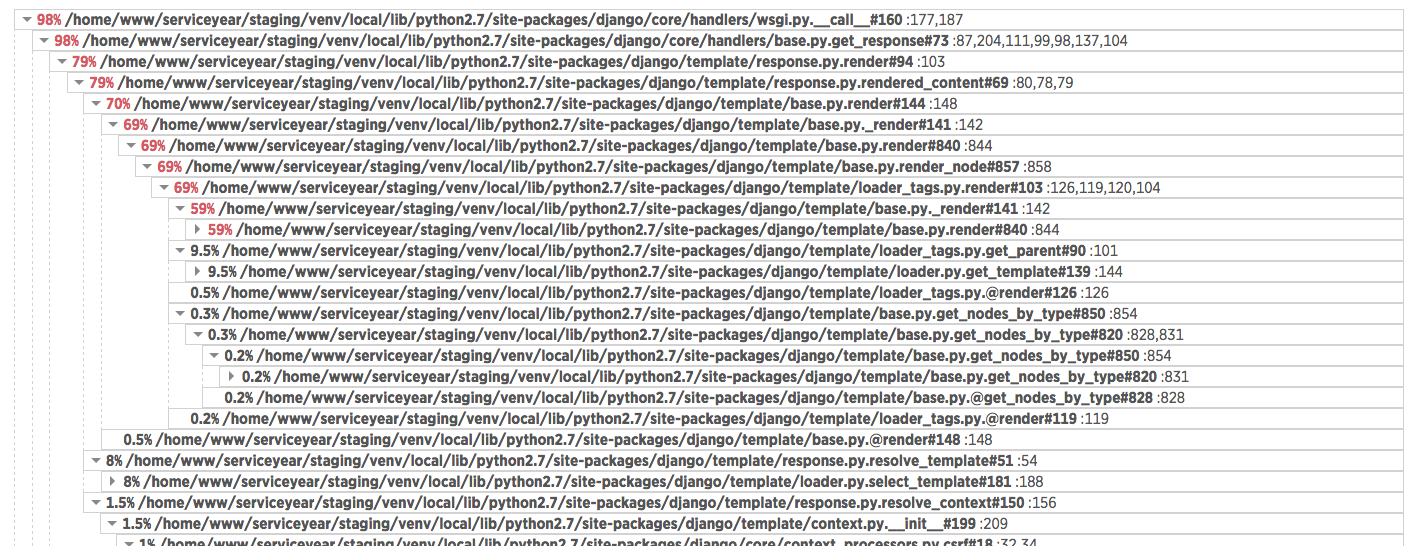

Application Monitoring

- Use NewRelic (even the free tier is helpful)

- Django debug toolbar

- Django profiler

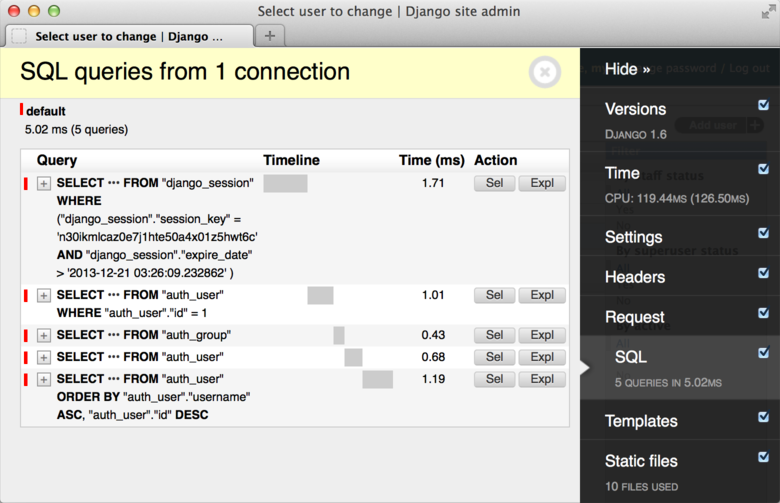

Debug Toolbar

Finding Bottlenecks

Beware of out of control queries

    # Bad
    def check_for_me(self):
        users = Users.objects.filter(role='developer')
        for el in users:
            # Accessing el.profile executes a db query
            # on each iteration (the "N+1" problem)
            prof = el.profile
            # Compare strings with ==, not 'is'
            if prof.real_name == 'Julian Gindi':
                return True
        return False

    # Good
    def check_for_me(self):
        # This is 'lazy': no query runs until we iterate
        users = Users.objects.filter(role='developer')
        # Fetch each profile in the same query via a SQL join
        users = users.select_related('profile')
        for el in users:
            # This would NOT execute an additional db query
            prof = el.profile
            # Compare strings with ==, not 'is'
            if prof.real_name == 'Julian Gindi':
                return True
        return False
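To see the difference in query counts without a Django project, here is a rough sketch of the same pattern using the standard library's `sqlite3`. The schema, table names, and the `CountingConnection` helper are assumptions made up for this illustration; the JOIN plays the role that `select_related` plays in the ORM.

```python
import sqlite3


class CountingConnection:
    """Hypothetical wrapper that counts every statement we execute."""

    def __init__(self, conn):
        self.conn = conn
        self.queries = 0

    def execute(self, sql, params=()):
        self.queries += 1
        return self.conn.execute(sql, params)


def build_db():
    """Create an in-memory database with three developers and their profiles."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE profiles (id INTEGER PRIMARY KEY, real_name TEXT);
        CREATE TABLE users (id INTEGER PRIMARY KEY, role TEXT, profile_id INTEGER);
        INSERT INTO profiles VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
        INSERT INTO users VALUES (1, 'developer', 1), (2, 'developer', 2), (3, 'developer', 3);
    """)
    return conn


def names_n_plus_one(db):
    """One query for the users, then one more query per user."""
    users = db.execute(
        "SELECT profile_id FROM users WHERE role = ? ORDER BY id", ("developer",)
    ).fetchall()
    names = []
    for (profile_id,) in users:
        row = db.execute(
            "SELECT real_name FROM profiles WHERE id = ?", (profile_id,)
        ).fetchone()
        names.append(row[0])
    return names


def names_joined(db):
    """A single query that joins users to profiles."""
    rows = db.execute(
        "SELECT profiles.real_name FROM users "
        "JOIN profiles ON profiles.id = users.profile_id "
        "WHERE users.role = ? ORDER BY users.id",
        ("developer",),
    ).fetchall()
    return [row[0] for row in rows]
```

With three developers the first version issues four queries and the second issues one; the gap grows linearly with the number of rows.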

Learn to love caching

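    import json

    from django.conf import settings
    from django.core.cache import cache

    def get_manifest():
        cached = cache.get('asset_manifest')
        # Compare against None so an empty manifest still counts as a hit
        if cached is not None:
            return cached
        manifest_file = settings.PROJECT_ROOT + '/dist/manifests/assets-manifest.json'
        # The manifest is JSON, so parse it with json; 'with' closes the file
        with open(manifest_file) as f:
            json_manifest = json.load(f)
        # Cache for 12 hours (43200 seconds)
        cache.set('asset_manifest', json_manifest, 43200)
        return json_manifest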
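The pattern above (check the cache, compute on a miss, store with a timeout) can be sketched in plain Python. `TTLCache` and `get_or_compute` are hypothetical names for this illustration and only mimic the `get`/`set` shape of Django's cache; Django's own cache API also provides `get_or_set` for the same job.

```python
import time


class TTLCache:
    """Tiny in-process cache with per-key expiry (illustration only)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._store = {}

    def get(self, key, default=None):
        entry = self._store.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Entry is stale: drop it and report a miss
            del self._store[key]
            return default
        return value

    def set(self, key, value, timeout):
        self._store[key] = (value, self._clock() + timeout)


def get_or_compute(cache, key, compute, timeout):
    """Return the cached value, calling compute() only on a miss."""
    missing = object()  # sentinel so falsy values still count as hits
    value = cache.get(key, missing)
    if value is missing:
        value = compute()
        cache.set(key, value, timeout)
    return value
```

An in-process cache like this is per-server state, so in a cluster you would point the same pattern at a shared backing service instead.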

Scenario: Expensive API Call

Celery is Badass

    from celery import shared_task
    import requests

    # If you set up a result backend, you can query for status information
    # and fetch the result once the task is complete
    @shared_task
    def api_call(url, method):
        # Compare strings with ==, not 'is'
        if method != 'GET':
            # Only GET is handled in this example
            raise ValueError('Unsupported method: %s' % method)
        r = requests.get(url)
        return r.json()

Kicking Things Into High Gear

uWSGI and Nginx: A love story

Why uWSGI

- Fast!

- Speaks many different protocols

- We use unix sockets + wsgi

- Highly scalable

- Zerg mode

- Cheap mode

- Highly configurable

- The Art of Graceful Reloading

Example Config

    [uwsgi]
    socket = {{ base }}/run/myProject.sock
    # Django's wsgi file
    module = configuration.wsgi:application
    # Creating a pidfile to control individual vassals
    pidfile = {{ base }}/run/myProject.pid
    # the virtualenv (full path)
    home = {{ base }}/venv
    master = true
    enable-threads = true
    single-interpreter = true

    # Turning on webscale
    cheaper-algo = spare
    # minimum number of workers to keep running; cheaper mode is only
    # enabled when this is set (the value here is an assumption)
    cheaper = 2
    # number of workers to spawn at startup
    cheaper-initial = 2
    # maximum number of workers that can be spawned
    workers = 10
    # how many workers can be spawned at a time
    cheaper-step = 2

uWSGI and Nginx

A great pair: They speak the same language

    upstream django {
        # Must match the 'socket' path in the uWSGI config
        server unix:///{{ base }}/run/myProject.sock;
    }

    # Send all non-media requests to the Django server.
    location @uwsgi {
        ...
        uwsgi_pass django;
        include /etc/nginx/uwsgi_params;
        proxy_redirect off;
    }

Scenario: You notice a number of connections are erring out and not being handled

Solution

Modify the listen queue

    # The OS limits this for you (default 128 on older kernels)
    # modify the file below for more
    /proc/sys/net/core/somaxconn

    # uWSGI setting
    listen = 2000

Increase number of allowed open files (unix sockets are just files)

> vi /etc/sysctl.conf

fs.file-max = 70000
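Before changing either limit, it helps to see what the box currently allows. A small sketch, assuming a Linux host; the helper names are made up for illustration. Note that `fs.file-max` is the system-wide ceiling, while `RLIMIT_NOFILE` (what `ulimit -n` shows) is the per-process limit, and both can matter.

```python
import resource
from pathlib import Path

SOMAXCONN_PATH = Path("/proc/sys/net/core/somaxconn")


def read_somaxconn(path=SOMAXCONN_PATH):
    """Return the kernel's listen-queue cap, or None if it cannot be read."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


def open_file_limits():
    """Return the (soft, hard) per-process limit on open file descriptors."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)


def effective_backlog(requested, somaxconn):
    """The kernel caps the backlog passed to listen() at somaxconn."""
    if somaxconn is None:
        return requested
    return min(requested, somaxconn)


if __name__ == "__main__":
    cap = read_somaxconn()
    print("somaxconn:", cap)
    print("open files (soft, hard):", open_file_limits())
    print("backlog you would get for listen=2000:", effective_backlog(2000, cap))
```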

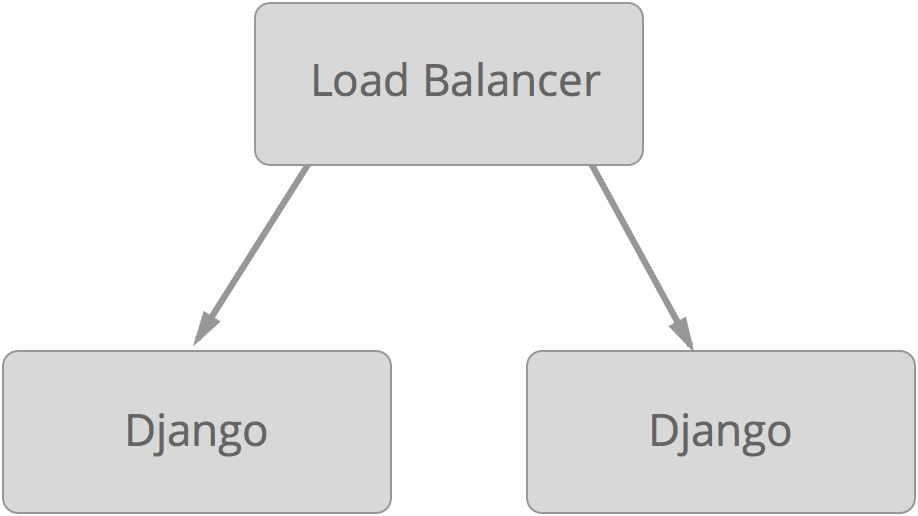

Scaling at the Cluster Level

Steps to ensure a highly scalable app

- Use a load balancer

- Utilize auto-scaling

- Kill servers randomly

- Re-think your deployment system

You better be using a load balancer!

- Utilize health checks

- Kill unhealthy servers quickly

- Spread load across many servers in many availability zones
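A load balancer health check needs something to hit. Here is a minimal sketch of a health endpoint as a plain WSGI app; the `/healthz` path and the shape of the `checks` dict are assumptions for illustration, not a convention from the talk.

```python
import json


def make_health_app(checks):
    """Build a WSGI app that reports 200 only if every check passes.

    `checks` maps a name to a zero-argument callable returning True/False.
    """
    def app(environ, start_response):
        if environ.get("PATH_INFO") != "/healthz":
            start_response("404 Not Found", [("Content-Type", "text/plain")])
            return [b"not found"]

        results = {}
        for name, check in checks.items():
            try:
                results[name] = bool(check())
            except Exception:
                # A check that raises counts as a failure
                results[name] = False

        healthy = all(results.values())
        status = "200 OK" if healthy else "503 Service Unavailable"
        body = json.dumps({"healthy": healthy, "checks": results}).encode("utf-8")
        start_response(status, [
            ("Content-Type", "application/json"),
            ("Content-Length", str(len(body))),
        ])
        return [body]

    return app
```

Returning 503 when a dependency is down lets the load balancer pull the server out of rotation quickly instead of sending it traffic it cannot serve.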

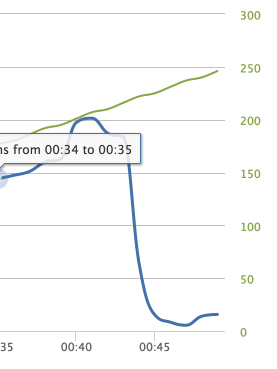

You better be using autoscaling!

- Scale based on resource need

- Set resource thresholds to trigger autoscaling

- Not possible without stateless servers

- Can also autoscale based on times you receive a higher baseline of traffic
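The threshold idea can be sketched as a small decision function. In practice your cloud provider's autoscaler evaluates rules like this for you; the policy fields and numbers below are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScalingPolicy:
    """Hypothetical policy; tune the numbers to your own traffic."""
    min_servers: int = 2
    max_servers: int = 10
    scale_up_cpu: float = 70.0    # add capacity above this average CPU %
    scale_down_cpu: float = 30.0  # remove capacity below this average CPU %
    step: int = 1                 # servers added or removed per decision


def desired_capacity(current, avg_cpu_percent, policy=ScalingPolicy()):
    """Return how many servers the cluster should run next."""
    if avg_cpu_percent > policy.scale_up_cpu:
        target = current + policy.step
    elif avg_cpu_percent < policy.scale_down_cpu:
        target = current - policy.step
    else:
        target = current
    # Never go below the redundancy floor or above the budget ceiling
    return max(policy.min_servers, min(policy.max_servers, target))
```

Keeping a gap between the scale-up and scale-down thresholds avoids flapping when load hovers near a single cutoff.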

Old Deployment

Each new server would install its dependencies at boot, (hopefully) using a configuration management system. Servers took a while to become 'ready' to receive new code.

New Deployment

Pre-built images already contain all your dependencies. New code is baked into a fresh image and rolled out using a "canary" system. Since the image is "compiled" ahead of time, one or many servers can become active as soon as the deployment succeeds.
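One way to picture the "canary" step is as a comparison between the canary server's error rate and the rest of the fleet's. This is a rough sketch of that decision only; the function name, the tolerance, and the minimum sample size are all assumptions, and the talk does not say how its canary system decides.

```python
def canary_decision(baseline_errors, baseline_requests,
                    canary_errors, canary_requests,
                    tolerance=0.01, min_requests=100):
    """Return 'wait', 'rollback', or 'promote' for a canary deployment."""
    if canary_requests < min_requests:
        # Not enough traffic yet to judge the new image
        return "wait"
    baseline_rate = baseline_errors / baseline_requests if baseline_requests else 0.0
    canary_rate = canary_errors / canary_requests
    if canary_rate > baseline_rate + tolerance:
        # The new image is noticeably worse than what is already running
        return "rollback"
    return "promote"
```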

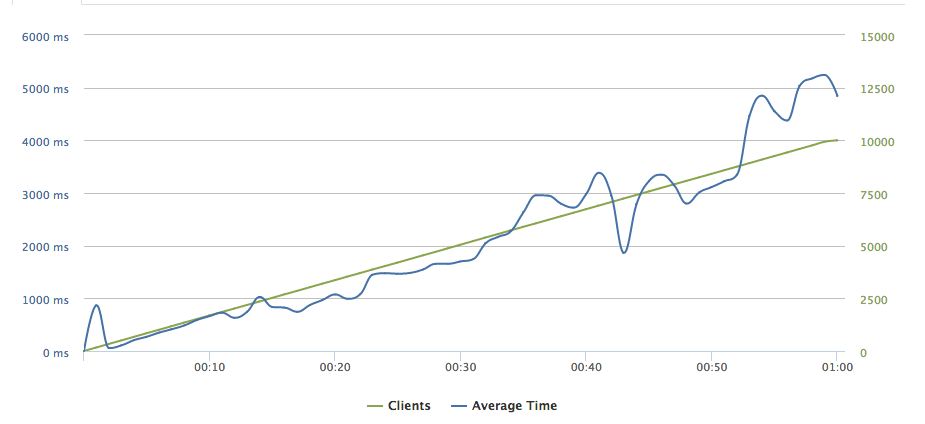

Load Test Early and Often
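Dedicated load-testing tools do this far better, but the core idea fits in a few lines: fire many concurrent requests and look at failures and latency. A minimal sketch using only the standard library; `run_load_test` and its parameters are hypothetical names for this illustration.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(fetch):
    """Call fetch() once and return (succeeded, elapsed_seconds)."""
    start = time.perf_counter()
    try:
        fetch()
        ok = True
    except Exception:
        ok = False
    return ok, time.perf_counter() - start


def run_load_test(fetch, total_requests=100, concurrency=10):
    """Run fetch() total_requests times across a pool of worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: timed_call(fetch), range(total_requests)))
    latencies = sorted(elapsed for _, elapsed in results)
    failures = sum(1 for ok, _ in results if not ok)
    return {
        "requests": total_requests,
        "failures": failures,
        "median_s": statistics.median(latencies),
        # Approximate 95th percentile by index into the sorted latencies
        "p95_s": latencies[int(0.95 * (len(latencies) - 1))],
    }
```

To aim it at a real server, pass a callable such as `lambda: urllib.request.urlopen(url, timeout=5).read()`, where `url` points at a staging environment you are allowed to hammer.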

That's a wrap. Questions?