Having it all: distributed services with Django, Boto, and SQS queues

Julio Vicente Trigo Guijarro

@juliotrigo

PyConEs 2015

Valencia, 22nd November

About me

➜ Computer Science Engineer

➜ University of Alicante

➜ London

➜ Software Engineering

➜ Scrum / TDD

➜ Python

Index

What we had / What we wanted / What we did NOT want

Different approaches

Our solution

Implementation

Thank you / QA

Our infrastructure


Python 2.7

Ubuntu

AWS:

➜ RDS

➜ EC2

➜ ELB

➜ S3

➜ SQS

Our infrastructure (II)

[Diagram of our servers: indexer; www, www2, www3, www4, www5; www-elb; solr-elb; solr, solr2; products api; images api]

What we had

Servers: indexer, www, solr

Teams: Development, Product Information, Marketing

Processes to run:

➜ Update product availability

➜ Generate marketing feeds

➜ Solr indexer

➜ Import products into the DB

➜ Update product pricing

Common resources:

➜ Products DB (MySQL)

➜ Availability DB (Redis)

➜ Product images (S3 bucket)

➜ Marketing feeds (S3 bucket)

What we had (II)

➜ Growing fast

➜ Development team:

- Implementing services (internal use: operational)

- Running them manually (when requested)

- Expected failures (data issues...): re-run

➜ Long processes

What we wanted

✔ Allow other teams to run the processes

✔ Run them remotely

✔ Authentication

✔ Authorization

✔ Sequential access to common resources

➜ Semaphores
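Within a single process, the semaphore idea can be sketched with Python's `threading` module: one binary semaphore per common resource makes jobs that touch the same resource run one after another. This is a minimal illustration, not the talk's code; `run_job`, `Tracker` and `max_concurrent_jobs` are hypothetical names. It also shows the limitation: these semaphores live inside one process, so jobs started on different servers would never see them.

```python
import threading
import time

COMMON_RESOURCES = ['products', 'availability', 'S3_feeds', 'S3_images']

# One binary semaphore per common resource (only valid inside this process).
SEMAPHORES = dict((name, threading.BoundedSemaphore(1)) for name in COMMON_RESOURCES)


class Tracker(object):
    """Counts how many jobs are using a resource at the same time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def change(self, delta):
        with self._lock:
            self.active += delta
            self.peak = max(self.peak, self.active)


def run_job(resource, tracker, work_sec=0.01):
    # Only one job at a time can hold the semaphore for a given resource.
    with SEMAPHORES[resource]:
        tracker.change(+1)
        time.sleep(work_sec)  # stand-in for the real process
        tracker.change(-1)


def max_concurrent_jobs(resource, n_jobs):
    """Start n_jobs threads on the same resource and return the peak overlap."""
    tracker = Tracker()
    threads = [threading.Thread(target=run_job, args=(resource, tracker))
               for _ in range(n_jobs)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return tracker.peak


print(max_concurrent_jobs('products', 5))  # 1
```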

What we wanted (II)

✔ Simplify process invocation

➜ Easy user interface (command line)

➜ No technical skills required

✔ Run processes asynchronously

✔ Quick solution (internal tool)

✔ Robust

What we did NOT want

✘ Give other teams SSH access to our servers

✘ Maintain server SSH keys / passwords in our services

✘ Concurrent access to common resources

✘ Implementing our own service invocation mechanism

➜ Development time, blocked resources, bugs...

✘ A single entity (server, service...) with access to all the servers

Different approaches

Give other teams access to our servers

✘ All the teams would need SSH access

✘ Cannot predict how they would use it

➜ Multiple (concurrent?) executions

➜ Unpredictable results

➜ Coordination between teams

Different approaches (II)

Central application that calls the remote services

✔ Easy user interface

✔ Teams don't need server access

✘ Single point of failure

✘ Needs access to all the servers

✘ Has to guarantee sequential access to common resources itself

Different approaches (III)

Queues

✔ Central service that calls the services remotely

✔ Central service that queues messages

✔ Sequentiality handled by the queues

✔ Asynchronous execution

✔ No need to know where the services are located

✔ Robust

Our solution

Queues!

SQS

Implementation

Queues (SQS)

Boto

Control Panel (Django)

Distributed Services

Upstart


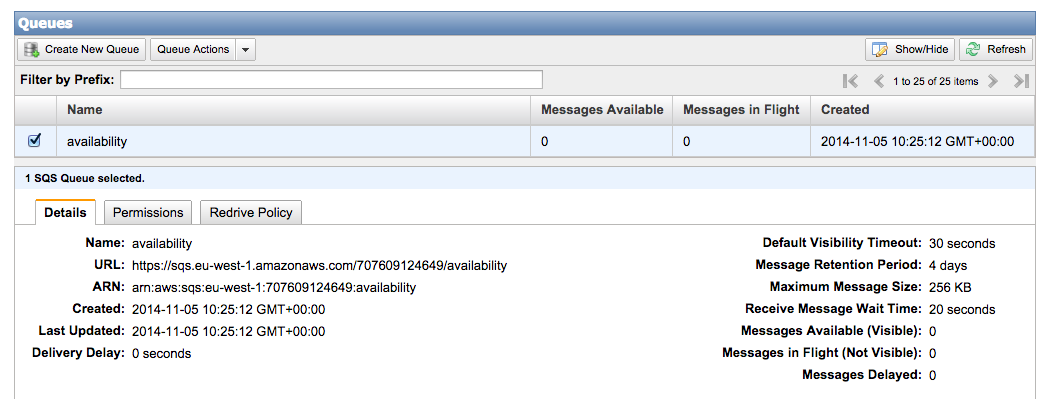

Queues

➜ AWS Management Console

Queues (II)

➜ Push messages to the queues from any server

➜ Pull messages from the queues from any server

➜ Each critical section has its own queue

```python
common_resources = ['products', 'availability', 'S3_feeds', 'S3_images']
```

➜ Queues represent common resources, not services
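The same guarantee falls out of the queue design: if each common resource has its own queue and exactly one consumer reads it, jobs for that resource run strictly one after another, whichever server pushed them. The sketch below simulates this with the standard library's in-memory `queue.Queue` instead of SQS; `consume`, `run_all` and the `STOP` sentinel are hypothetical names used only for illustration.

```python
import threading

try:
    import queue  # Python 3
except ImportError:
    import Queue as queue  # Python 2.7

COMMON_RESOURCES = ['products', 'availability', 'S3_feeds', 'S3_images']
STOP = object()  # sentinel telling a consumer to exit


def consume(q, processed):
    # Single consumer per queue: messages are handled one at a time, in order.
    while True:
        message = q.get()
        if message is STOP:
            break
        processed.append(message)  # stand-in for running the service


def run_all(messages_by_resource):
    queues = dict((name, queue.Queue()) for name in COMMON_RESOURCES)
    processed = dict((name, []) for name in COMMON_RESOURCES)
    consumers = [threading.Thread(target=consume, args=(queues[name], processed[name]))
                 for name in COMMON_RESOURCES]
    for t in consumers:
        t.start()
    # Producers only need the queue name, not where the consumer runs.
    for resource, messages in messages_by_resource.items():
        for message in messages:
            queues[resource].put(message)
    for name in COMMON_RESOURCES:
        queues[name].put(STOP)
    for t in consumers:
        t.join()
    return processed


result = run_all({'products': ['import', 'pricing'], 'availability': ['update']})
print(result['products'])  # ['import', 'pricing']
```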


Boto

➜ Amazon Web Services SDK for Python

➜ 46+ million downloads (top 10)

➜ Boto 3:

- "Stable and recommended for general use"

- Work is under way to support Python 3.3+

Boto (II)

```python
# sqs_handler.py

import json
import time
import traceback

import boto.sqs
from boto.sqs.message import Message


class SqsHandler(object):
    """Base class: reads the messages for `service_name` from one SQS queue."""

    service_name = None  # set by subclasses

    def __init__(
        self, aws_region, aws_access_key_id, aws_secret_access_key,
        aws_queue_name, logger, sleep_sec
    ):
        self.conn = boto.sqs.connect_to_region(
            aws_region,
            aws_access_key_id=aws_access_key_id,
            aws_secret_access_key=aws_secret_access_key
        )
        self.queue = self.conn.get_queue(aws_queue_name)
        self.logger = logger
        self.sleep_sec = sleep_sec

    def mq_loop(self):
        while True:
            try:
                message = self.queue.read()
                if message is not None:
                    # The body is a JSON string: decode it to check the service.
                    body = message.get_body()
                    if json.loads(body).get("service") == self.service_name:
                        # Deleted before processing: a failure is logged, not retried.
                        self.queue.delete_message(message)
                        self.process_message(body)
                    # Messages for other services stay in the queue and become
                    # visible again after the visibility timeout.
            except Exception:
                self.logger.error(traceback.format_exc())
            finally:
                time.sleep(self.sleep_sec)

    def process_message(self, body):
        raise NotImplementedError()

    def write_message(self, body):
        m = Message()
        m.set_body(body)
        self.queue.write(m)
```
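`mq_loop` deletes a message before calling `process_message`, so a job that fails halfway is logged and dropped (at-most-once delivery); the talk's answer to expected failures is to re-run the process. Deleting only after processing succeeds gives at-least-once delivery instead: SQS makes an undeleted message visible again after its visibility timeout, so it gets retried, at the cost of possible duplicates and of a timeout long enough to cover the processing time. The sketch compares both orders using a hypothetical in-memory `FakeQueue` which, unlike SQS, makes a read message visible again immediately.

```python
class FakeMessage(object):
    def __init__(self, body):
        self._body = body

    def get_body(self):
        return self._body


class FakeQueue(object):
    """In-memory stand-in for an SQS queue: read() returns the oldest
    undeleted message and, unlike SQS, does not hide it for a timeout."""

    def __init__(self):
        self.messages = []

    def write(self, message):
        self.messages.append(message)

    def read(self):
        return self.messages[0] if self.messages else None

    def delete_message(self, message):
        self.messages.remove(message)


def poll_once(queue, process, delete_first):
    message = queue.read()
    if message is None:
        return
    body = message.get_body()
    if delete_first:
        queue.delete_message(message)  # at-most-once: a failure below loses it
        process(body)
    else:
        process(body)
        queue.delete_message(message)  # at-least-once: a failure above keeps it


def run_with_one_failure(delete_first):
    """Process one message with a job that fails on its first attempt.
    Returns (attempts, messages left in the queue)."""
    queue = FakeQueue()
    queue.write(FakeMessage('{"service": "availability"}'))
    attempts = []

    def flaky(body):
        attempts.append(body)
        if len(attempts) == 1:
            raise RuntimeError("expected failure, e.g. a data issue")

    for _ in range(2):
        try:
            poll_once(queue, flaky, delete_first)
        except RuntimeError:
            pass  # mq_loop logs the traceback and keeps polling
    return len(attempts), len(queue.messages)


print(run_with_one_failure(delete_first=True))   # (1, 0): the job never succeeded
print(run_with_one_failure(delete_first=False))  # (2, 0): retried and succeeded
```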


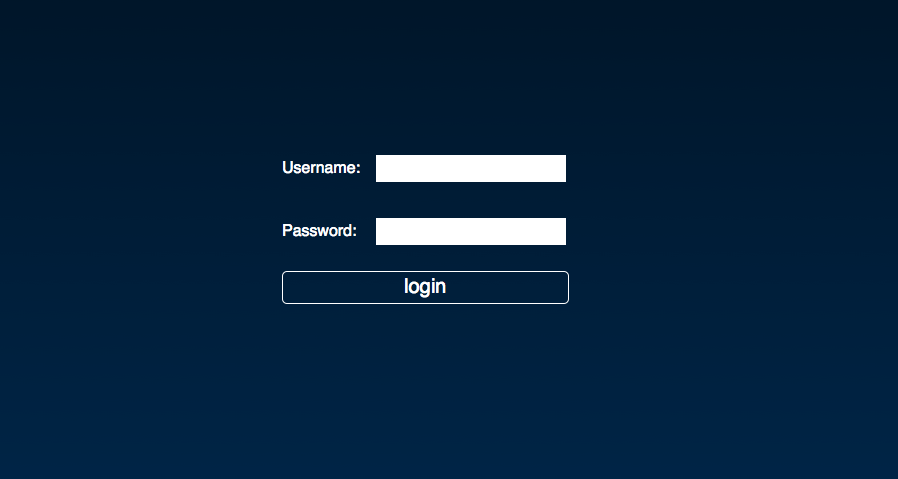

Control Panel

➜ Django website: invoke services

➜ Django admin: authentication (HTTPS) & authorization

Control Panel (II)

```python
# ...settings.base.py

import os

# ...

# Django groups used for authorization
GROUP_CONTROL_PANEL = 'control_panel'
GROUP_DASHBOARD = 'dashboard'
GROUP_SERVICES = 'services'
GROUP_AVAILABILITY = 'availability'
GROUP_AVAILABILITY_UPDATE = 'availability_update'

# ...

# AWS credentials are read from the environment, not stored in the code
AWS_REGION = os.environ['AWS_REGION']
AWS_ACCESS_KEY_ID = os.environ['AWS_ACCESS_KEY_ID']
AWS_SECRET_ACCESS_KEY = os.environ['AWS_SECRET_ACCESS_KEY']

# ...
```

➜ Authorization according to permissions (Django Groups)
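The settings read the AWS credentials with `os.environ[...]`, which stops start-up with a bare `KeyError` when a variable is missing and silently accepts an empty one. A small helper (hypothetical, not from the talk) can fail fast with a clearer message. In a Django project `django.core.exceptions.ImproperlyConfigured` would be the conventional exception; a plain `RuntimeError` keeps this sketch free of dependencies.

```python
import os


def require_env(name, environ=None):
    """Return a required setting from the environment or fail with a clear message."""
    environ = os.environ if environ is None else environ
    value = environ.get(name)
    if not value:  # missing or empty
        raise RuntimeError(
            "Required environment variable {0} is missing or empty".format(name))
    return value


# In settings, for example: AWS_REGION = require_env('AWS_REGION')
```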

Control Panel (III)

➜ Services invoked from different pages

➜ Message displayed after invocation

Control Panel (IV)

```python
# ...settings.production.py

from .base import *

# ...

AWS_AVAILABILITY_QUEUE_NAME = 'availability'

# ...
```

Control Panel (V)

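```python
# ...permissions.py

from django.conf import settings
from django.core.exceptions import PermissionDenied


def group_check(user, groups):
    # Anonymous users fail the test: user_passes_test redirects them to login.
    # (Django 1.x: is_authenticated() is a method; a property since 1.10.)
    if not user.is_authenticated():
        return False
    # Superusers skip the group checks.
    if user.is_superuser:
        return True
    # Every listed group is required; a missing one results in HTTP 403.
    for group in groups:
        if not user.groups.filter(name=group).exists():
            raise PermissionDenied
    return True


def control_panel_group_check(user):
    groups = [settings.GROUP_CONTROL_PANEL]
    return group_check(user, groups)

# ...

def avl_update_group_check(user):
    groups = [
        settings.GROUP_CONTROL_PANEL,
        settings.GROUP_DASHBOARD,
        settings.GROUP_SERVICES,
        settings.GROUP_AVAILABILITY,
        settings.GROUP_AVAILABILITY_UPDATE,
    ]
    return group_check(user, groups)
```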
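The decision made by `group_check` can be exercised without a database by using a stand-in user. The sketch re-implements the same logic over a hypothetical `FakeUser` tuple and a local `PermissionDenied` class; both are assumptions standing in for Django's user model and `django.core.exceptions.PermissionDenied`.

```python
from collections import namedtuple

# Hypothetical stand-in for a Django user: only the fields group_check reads.
FakeUser = namedtuple("FakeUser", "is_authenticated is_superuser groups")


class PermissionDenied(Exception):
    """Local stand-in for django.core.exceptions.PermissionDenied."""


def group_check(user, groups):
    if not user.is_authenticated:
        return False  # in Django, user_passes_test then redirects to login
    if user.is_superuser:
        return True  # superusers skip the group checks
    missing = [group for group in groups if group not in user.groups]
    if missing:
        raise PermissionDenied("Missing groups: {0}".format(", ".join(missing)))
    return True  # member of every required group
```

The rule is an AND over the listed groups: `avl_update_group_check` only lets through users who belong to all five groups.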

Control Panel (VII)

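```python
# ...views.availability.py

import json
import logging

from django.conf import settings
from django.contrib.auth.decorators import login_required, user_passes_test
from django.views.decorators.http import require_POST

# Import paths are assumptions: permissions.py and sqs_handler.py as shown above.
from .permissions import avl_update_group_check
from .sqs_handler import SqsHandler

logger = logging.getLogger(__name__)


@require_POST
@login_required
@user_passes_test(avl_update_group_check)
def avl_update(request):
    # ...
    test_only = request.POST.get('test_only', False)
    send_email = request.POST.get('send_email', False)
    queue_name = settings.AWS_AVAILABILITY_QUEUE_NAME
    # Payload: which service to run, and its parameters
    body = {
        "service": "availability",
        "parameters": {
            "test_only": test_only,
            "send_email": send_email,
        }
    }
    mq = SqsHandler(
        settings.AWS_REGION,
        settings.AWS_ACCESS_KEY_ID,
        settings.AWS_SECRET_ACCESS_KEY,
        queue_name,
        logger,
        settings.MQ_LOOP_SLEEP_SEC
    )
    # Enqueue and return: a worker runs the service asynchronously
    mq.write_message(json.dumps(body))
    # ...
```

Control Panel (VIII)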

➜ For each service:

- Queue name (may be shared across services)

- Payload

➜ Send messages to the correct queue
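Keeping each service's queue name and payload in one place avoids repeating the view code per service. The sketch below is a hypothetical registry: only the `availability` entry (queue `availability`, parameters `test_only` and `send_email`) comes from the talk, while `import_products` and `update_pricing` are invented to show two services sharing the `products` queue. It also assumes the flags come from HTML checkboxes and turns them into real booleans instead of forwarding the raw POST strings.

```python
import json

# Hypothetical registry. Only "availability" comes from the talk; the other
# two entries illustrate services sharing the "products" queue.
SERVICES = {
    "availability": {"queue": "availability",
                     "parameters": ("test_only", "send_email")},
    "import_products": {"queue": "products", "parameters": ("test_only",)},
    "update_pricing": {"queue": "products", "parameters": ("test_only",)},
}


def checkbox(post, name):
    # Browsers only submit a checkbox when it is ticked, so presence means True.
    return name in post


def build_message(service, post):
    """Return (queue_name, json_body) for invoking `service` with form data `post`."""
    try:
        config = SERVICES[service]
    except KeyError:
        raise ValueError("Unknown service: {0}".format(service))
    body = {
        "service": service,
        "parameters": dict((name, checkbox(post, name))
                           for name in config["parameters"]),
    }
    return config["queue"], json.dumps(body)
```

In a view, `queue_name, body = build_message("availability", request.POST)` would replace the hand-built dictionary; Django's `QueryDict` supports the `in` test used here.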


Distributed Services

➜ Read messages from the correct queue (sequentially)

➜ Check messages (service name)

➜ Delete messages

➜ Deployed on any server

➜ Process messages (service logic)
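In this design each worker handles one service and leaves other services' messages in the queue, where SQS makes them visible again after the visibility timeout so their own worker can read them. When several services share a queue, an alternative (not what the talk implements) is one worker per queue that dispatches on the `service` field. The `Dispatcher` class and the two `products` services below are hypothetical; the trade-off is that every service on a queue must then be deployed with that single worker.

```python
import json


class Dispatcher(object):
    """One worker per queue: route each message to the handler for its service."""

    def __init__(self):
        self.handlers = {}

    def register(self, service_name):
        def decorator(func):
            self.handlers[service_name] = func
            return func
        return decorator

    def dispatch(self, raw_body):
        message = json.loads(raw_body)
        service = message.get("service")
        handler = self.handlers.get(service)
        if handler is None:
            raise ValueError("Service {0} not supported.".format(service))
        return handler(**message.get("parameters", {}))


# Hypothetical services sharing the "products" queue.
products_queue = Dispatcher()


@products_queue.register("import_products")
def import_products(test_only=False):
    return "import_products(test_only={0})".format(test_only)


@products_queue.register("update_pricing")
def update_pricing(test_only=False):
    return "update_pricing(test_only={0})".format(test_only)
```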

Distributed Services (II)

```python
# mq_loop.py

import json

from sqs_handler import SqsHandler  # assumed module layout

# ...

class SqsAvlHandler(SqsHandler):
    service_name = "availability"

    def process_message(self, body):
        message = json.loads(body)
        service = message["service"]
        if service == self.service_name:
            test_only = message["parameters"]["test_only"]
            send_email = message["parameters"]["send_email"]
            avl(test_only, send_email)  # the availability update itself
        else:
            raise Exception(
                "Service {0} not supported.".format(service))

# ...
```

Distributed Services (III)

```python
# mq_loop.py

# ...

if __name__ == "__main__":
    # ...
    mq = SqsAvlHandler(
        settings.AWS_REGION,
        settings.AWS_ACCESS_KEY_ID,
        settings.AWS_SECRET_ACCESS_KEY,
        settings.AWS_AVAILABILITY_QUEUE_NAME,
        logger,
        settings.AVAILABILITY_LOOP_SLEEP_SEC
    )
    mq.mq_loop()  # polls the queue forever
```

Distributed Services (IV)

```bash
#!/bin/bash
# mq_loop.sh
source /var/.virtualenvs/avl/bin/activate
cd /var/avl
# exec replaces the shell, so Upstart supervises the Python process itself
exec python mq_loop.py
```

Upstart

➜ Keep services up and running

➜ Debian and Ubuntu moved to systemd

➜ "Upstart is an event-based replacement for the /sbin/init daemon which handles starting of tasks and services during boot, stopping them during shutdown and supervising them while the system is running."
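Upstart's job here is supervision: restart the worker whenever it exits, but give up if it keeps crashing. A simplified Python model of `respawn` plus `respawn limit COUNT INTERVAL`, as used in the job file below, may make the two numbers concrete. `supervise` is a hypothetical helper and its fixed counting window is an assumption; Upstart's exact bookkeeping may differ.

```python
import subprocess
import time


def supervise(command, limit=10, interval=5.0):
    """Run `command` and restart it every time it exits.

    Gives up once the process has to be respawned more than `limit` times
    within `interval` seconds (a simplified model of Upstart's `respawn` and
    `respawn limit COUNT INTERVAL`). Returns how many times it was started.
    """
    clock = getattr(time, "monotonic", time.time)  # time.time on Python 2.7
    starts = 0
    window_start = None
    respawns = 0
    while True:
        starts += 1
        subprocess.call(command)  # blocks until the process exits
        now = clock()
        if window_start is None or now - window_start >= interval:
            window_start, respawns = now, 0  # open a new counting window
        respawns += 1
        if respawns > limit:
            return starts  # crashing too fast: stop and treat the job as failed
```

In this model, a worker that crashes immediately with `limit=10, interval=5` is started 11 times and then left stopped instead of looping forever.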

Upstart (II)

```
# /etc/init/avl.conf

description "Availability update"

# Start up when the system hits any normal runlevel, and
# shut down when the system goes to shutdown or reboot.
#
# 0 : System halt.
# 1 : Single-User mode.
# 2 : Graphical multi-user plus networking (DEFAULT)
# 3 : Same as "2", but not used.
# 4 : Same as "2", but not used.
# 5 : Same as "2", but not used.
# 6 : System reboot.
start on runlevel [2345]
stop on runlevel [06]

# Respawn the job up to 10 times within a 5 second period.
# If the job exceeds these values, it will be stopped and
# marked as failed.
respawn
respawn limit 10 5

# Run as the unprivileged avl user and group
setuid avl
setgid avl

exec /var/avl/mq_loop.sh
```

Upstart (III)

/etc/init/avl.conf

/etc/init.d/avl -> /lib/init/upstart-job (symlink)

But this is just a quick and simple solution...

➜ Internal use

➜ A few messages per day

➜ Expected exceptions while processing messages

Going further...

SQJobs

Microservices

"The term 'Microservice Architecture' has sprung up over the last few years to describe a particular way of designing software applications as suites of independently deployable services. While there is no precise definition of this architectural style, there are certain common characteristics around organization around business capability, automated deployment, intelligence in the endpoints, and decentralized control of languages and data." - Martin Fowler

http://martinfowler.com/articles/microservices.html

Nameko

➜ Framework for building microservices in Python

➜ Built-in support for:

- RPC over AMQP

- Asynchronous events (pub-sub) over AMQP

- Simple HTTP GET and POST

- Websocket RPC and subscriptions (experimental)

➜ Encourages the dependency injection pattern
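Nameko's dependency injection has its own API; the plain-Python sketch below only illustrates the pattern applied to this talk's worker. `SqsHandler.__init__` builds its own boto connection, which ties the service logic to AWS; passing the queue and the service logic in from outside lets a test swap in an in-memory queue. `QueueWorker` and `ListQueue` are hypothetical names, and in production the injected queue would be the object returned by boto's `get_queue`.

```python
import json


class QueueWorker(object):
    """Worker whose queue and service logic are injected by the caller."""

    def __init__(self, service_name, queue, process):
        self.service_name = service_name
        self.queue = queue      # any object with read() and delete_message()
        self.process = process  # the service logic, e.g. a function like avl()

    def poll_once(self):
        """Handle at most one message; return True if one was processed."""
        message = self.queue.read()
        if message is None:
            return False
        body = json.loads(message.get_body())
        if body.get("service") != self.service_name:
            return False  # not ours: leave it in the queue
        self.queue.delete_message(message)
        self.process(**body.get("parameters", {}))
        return True


class ListQueue(object):
    """In-memory test double exposing the same read/delete_message calls."""

    class Message(object):
        def __init__(self, body):
            self.body = body

        def get_body(self):
            return self.body

    def __init__(self, bodies):
        self.messages = [self.Message(b) for b in bodies]

    def read(self):
        return self.messages[0] if self.messages else None

    def delete_message(self, message):
        self.messages.remove(message)
```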

Nameko (II)

```python
# helloworld.py

from nameko.rpc import rpc


class GreetingService(object):
    name = "greeting_service"

    @rpc
    def hello(self, name):
        return "Hello, {}!".format(name)
```

```
$ nameko run helloworld
starting services: greeting_service
...
```

```
$ nameko shell
>>> n.rpc.greeting_service.hello(name="Julio")
u'Hello, Julio!'
```

Nameko (III)

```python
# http.py

import json

from nameko.web.handlers import http


class HttpService(object):
    name = "http_service"

    @http('GET', '/get/<int:value>')
    def get_method(self, request, value):
        return json.dumps({'value': value})

    @http('POST', '/post')
    def do_post(self, request):
        return "received: {}".format(request.get_data(as_text=True))
```

```
$ nameko run http
starting services: http_service
...
```

Nameko (IV)

```
$ curl -i localhost:8000/get/42
HTTP/1.1 200 OK
Content-Type: text/plain; charset=utf-8
Content-Length: 13
Date: Fri, 13 Feb 2015 14:51:18 GMT

{"value": 42}
```

```
$ curl -i -d "post body" localhost:8000/post
HTTP/1.1 200 OK
Content-Type: text/plain; charset=utf-8
Content-Length: 19
Date: Fri, 13 Feb 2015 14:55:01 GMT

received: post body
```

Thank you!

@juliotrigo

https://slides.com/juliotrigo/pycones2015-distributed-services