Diffeomorphic random sampling by optimal information transport

Klas Modin

Joint work with

Sarang Joshi

University of Utah

Martin Bauer

Florida State University

Draw samples from non-uniform distribution on \(M\)

Smooth probability densities

Problem 1: given \(\mu\in\mathrm{Prob}(M)\) generate \(N\) samples from \(\mu\)

Most cases: use Monte-Carlo based methods

Special case here:

- \(M\) low dimensional

- \(\mu\) very non-uniform

- \(N\) very large

transport map approach might be useful

Transport problem

Problem 2: given \(\mu\in\mathrm{Prob}(M)\) find \(\varphi\in\mathrm{Diff}(M)\) such that \(\varphi_*\mu_0 = \mu\)

Method:

- \(N\) samples \(x_1,\ldots,x_N\) from uniform distribution \(\mu_0\)

- Compute \(y_i = \varphi(x_i) \)

Diffeomorphism \(\varphi\) not unique!
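A minimal sketch of this method in one dimension, where the monotone map pushing the uniform density to a target density is the inverse cumulative distribution function. It only illustrates the push-forward idea and is not the optimal information transport map of the talk; the helper name `make_transport_map_1d` and the example density \(2y\) on \([0,1]\) are assumptions made for the example.

```python
import bisect
import random

def make_transport_map_1d(density, n=1000):
    """Return phi such that phi_*(uniform on [0,1]) approximates `density`.

    Hypothetical helper: tabulates the CDF with the trapezoidal rule and
    inverts it by piecewise-linear interpolation.
    """
    xs = [j / n for j in range(n + 1)]
    cdf = [0.0]
    for j in range(n):
        cdf.append(cdf[-1] + 0.5 * (density(xs[j]) + density(xs[j + 1])) / n)
    total = cdf[-1]
    cdf = [c / total for c in cdf]  # normalise so that cdf[-1] == 1

    def phi(u):
        # Locate the grid cell with cdf[j] <= u <= cdf[j + 1].
        j = min(max(bisect.bisect_right(cdf, u) - 1, 0), n - 1)
        w = (u - cdf[j]) / (cdf[j + 1] - cdf[j])
        return xs[j] + w / n

    return phi

phi = make_transport_map_1d(lambda y: 2.0 * y)
rng = random.Random(0)
uniform_samples = [rng.random() for _ in range(20000)]  # x_i ~ mu_0
samples = [phi(x) for x in uniform_samples]             # y_i = phi(x_i)
```

For the density \(2y\) the exact map is \(\varphi(x)=\sqrt{x}\), so the tabulated map can be compared against it directly.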

Optimal transport problem

Problem 3: given \(\mu\in\mathrm{Prob}(M)\) find \(\varphi\in\mathrm{Diff}(M)\) minimizing \(\mathrm{dist}(\mathrm{id},\varphi)\)

under constraint \(\varphi_*\mu_0 = \mu\)

Studied case: (Moselhy and Marzouk 2012, Reich 2013, ...)

- \(\mathrm{dist}\) = \(L^2\)-Wasserstein distance

- \(\Rightarrow\) optimal mass transport problem

- \(\Rightarrow\) solve Monge-Ampère equation (fully nonlinear PDE)
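For reference, a sketch of why a Monge-Ampère equation appears, stated for the flat case \(M\subset\mathbb{R}^n\) with quadratic cost. This is the standard formulation and is not taken from the slides; the convex potential \(u\) is notation introduced here.

```latex
\varphi = \nabla u, \qquad
\varphi_*\mu_0 = \mu
\;\Longleftrightarrow\;
\mu\bigl(\nabla u(x)\bigr)\,\det\bigl(D^2 u(x)\bigr) = \mu_0(x).
```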

Our notion:

- use optimal information transport

Optimal information transport

Remarkable fact:

- solution to transport problem almost explicit in this setting

Right-invariant Riemannian \(H^1\)-metric on \(\mathrm{Diff}(M)\)

Use induced distance on \(\mathrm{Diff}(M)\)

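Riemannian submersion \(\mathrm{Diff}(M)\to\mathrm{Prob}(M)\), \(\varphi\mapsto\varphi_*\mu_0\): the \(H^1\) metric on \(\mathrm{Diff}(M)\) descends to the Fisher-Rao metric on \(\mathrm{Prob}(M)\), which has explicit geodesics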

Horizontal lifting equations

Theorem: the solution to the optimal information transport problem is \(\varphi(1)\), where \(\varphi(t)\) fulfills the horizontal lifting equations and \(\mu(t)\) is the Fisher-Rao geodesic between \(\mu_0\) and \(\mu\)
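The Fisher-Rao geodesic is explicit because the square-root map \(\mu\mapsto\sqrt{\mu/\mu_0}\) sends densities to a sphere in \(L^2(M,\mu_0)\), where geodesics are great circles. A sketch of the resulting formula, assuming \(\mu_0\) and \(\mu\) both have total mass 1 (the angle \(\theta\) is notation introduced here):

```latex
\mu(t) = \left( \frac{\sin\bigl((1-t)\theta\bigr)}{\sin\theta}
       + \frac{\sin(t\theta)}{\sin\theta}\sqrt{\frac{\mu}{\mu_0}} \right)^{2}\mu_0,
\qquad
\cos\theta = \int_M \sqrt{\frac{\mu}{\mu_0}}\;\mu_0 .
```

At \(t=0\) this gives \(\mu_0\) and at \(t=1\) it gives \(\mu\).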

Leads to numerical time-stepping scheme: Poisson problem at each time step
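A minimal one-dimensional sketch of such a time-stepping scheme on \(M=[0,1]\) with uniform \(\mu_0\). It assumes the lifting equations read \(\Delta f = (\dot\mu/\mu)\circ\varphi\), \(v=\nabla f\), \(\tfrac{d}{dt}\varphi^{-1} = v\circ\varphi^{-1}\) (our reading, since the equations are not reproduced here), and it is not a port of the MATLAB code. In 1D the Poisson problem reduces to integrating the source once. The names `interp` and `oit_map_1d` are hypothetical.

```python
import math

def interp(vals, x):
    """Piecewise-linear interpolation of nodal values on a uniform grid of [0, 1]."""
    n = len(vals) - 1
    pos = min(max(x, 0.0), 1.0) * n
    j = min(int(pos), n - 1)
    w = pos - j
    return (1.0 - w) * vals[j] + w * vals[j + 1]

def oit_map_1d(target_density, n=200, steps=100):
    """Approximate phi with phi_*(uniform) = target on [0, 1] (target has mass 1)."""
    h, eps = 1.0 / n, 1.0 / steps
    xs = [j * h for j in range(n + 1)]
    s1 = [math.sqrt(target_density(x)) for x in xs]
    # Angle between the square roots of the two densities (trapezoidal rule).
    cos_theta = h * (sum(s1) - 0.5 * (s1[0] + s1[-1]))
    theta = math.acos(cos_theta)  # assumes the target is not uniform (theta > 0)
    phi = xs[:]  # phi(0) = id
    for k in range(steps):
        t = k * eps
        a = math.sin((1 - t) * theta) / math.sin(theta)
        b = math.sin(t * theta) / math.sin(theta)
        da = -theta * math.cos((1 - t) * theta) / math.sin(theta)
        db = theta * math.cos(t * theta) / math.sin(theta)
        # mu(t) = (a + b*s1)^2, hence mu'(t)/mu(t) = 2 (a' + b'*s1) / (a + b*s1).
        rate = [2.0 * (da + db * s) / (a + b * s) for s in s1]
        # Right-hand side of the Poisson problem: (mu'/mu) composed with phi.
        src = [interp(rate, p) for p in phi]
        # 1D Poisson solve: v = f' satisfies v' = src with v(0) = 0.
        v = [0.0]
        for j in range(n):
            v.append(v[-1] + 0.5 * h * (src[j] + src[j + 1]))
        # Euler step for d/dt phi^{-1} = v o phi^{-1}:
        # phi_new(x) = phi_old(x - eps * v(x)) to first order in eps.
        phi = [interp(phi, x - eps * vx) for x, vx in zip(xs, v)]
    return xs, phi

xs, phi = oit_map_1d(lambda y: 1.0 + 0.5 * math.cos(2.0 * math.pi * y))
```

In one dimension the monotone map between two densities is unique, so the result should agree with the inverse CDF of the target up to discretisation error, which gives a simple check. In higher dimensions the cumulative integration would be replaced by a genuine Poisson solver.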

MATLAB code: github.com/kmodin/oit-random

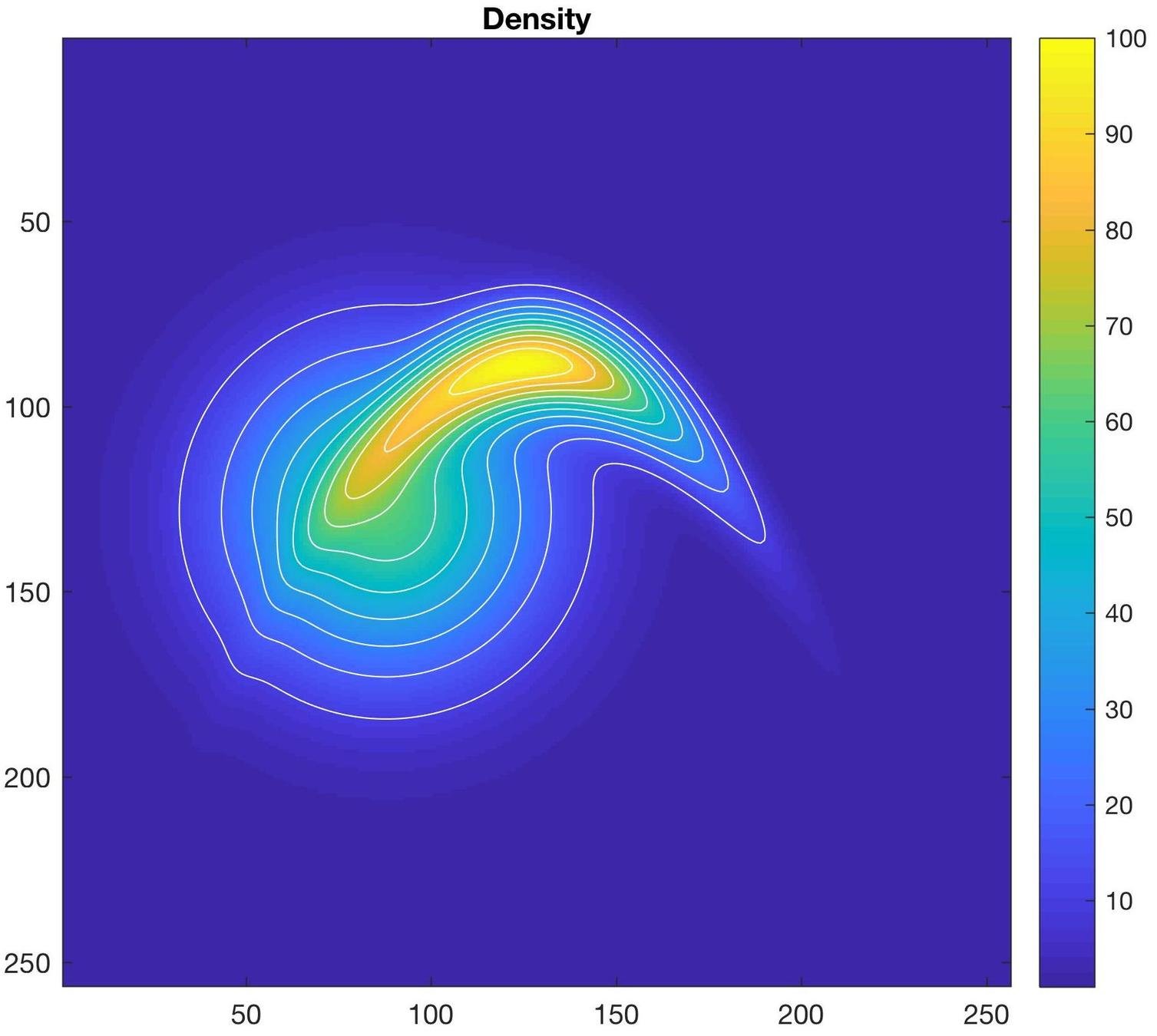

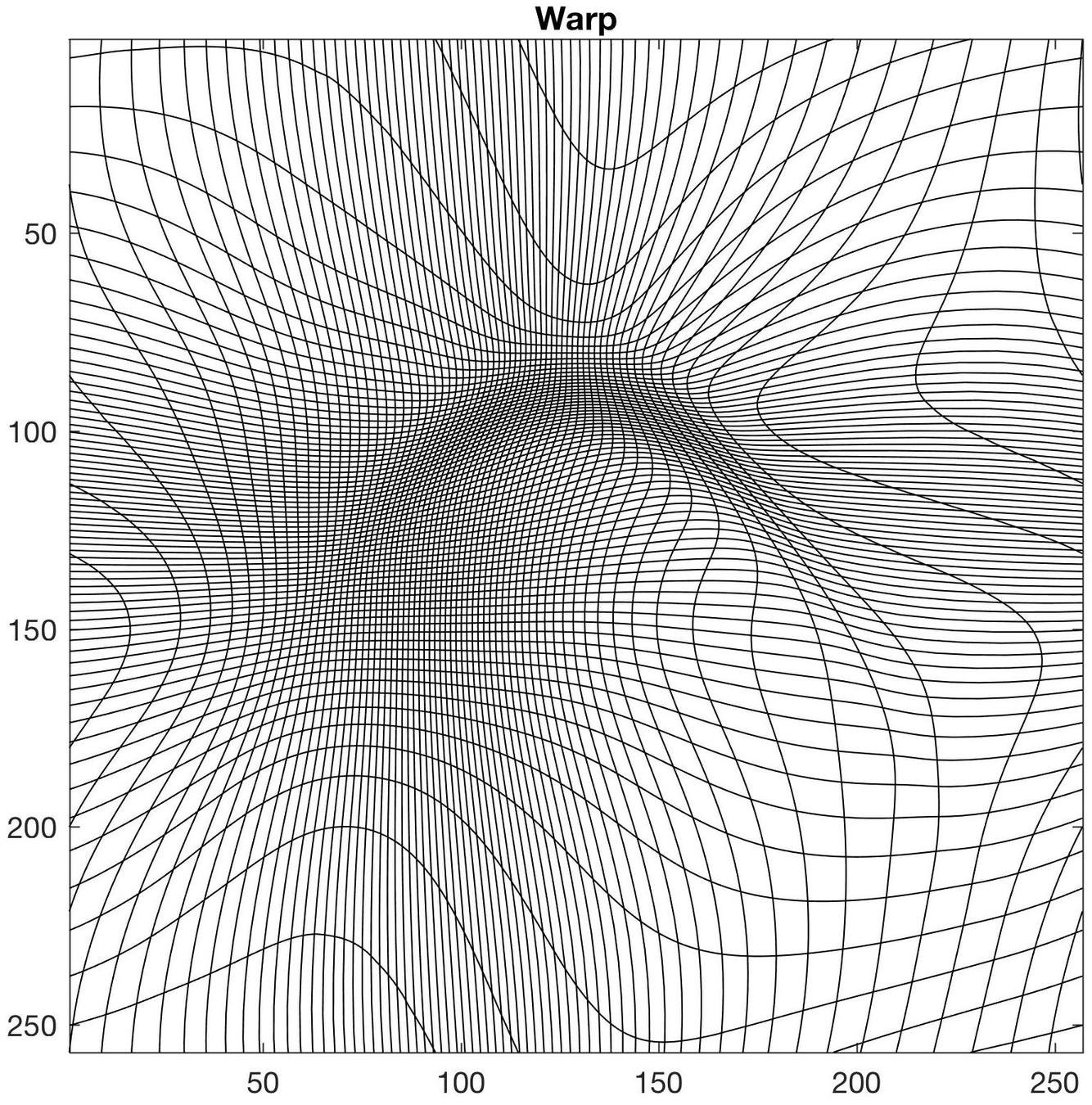

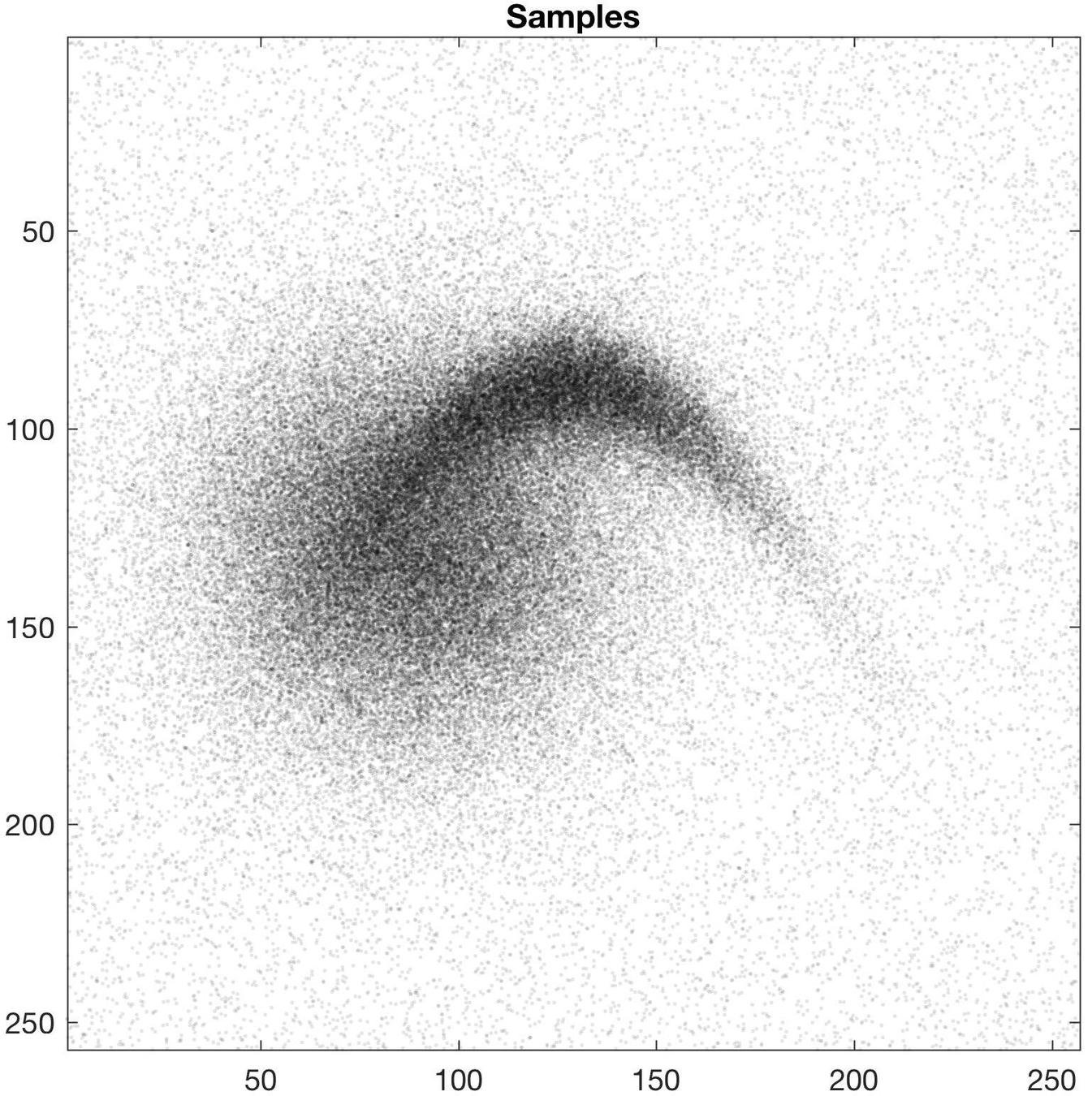

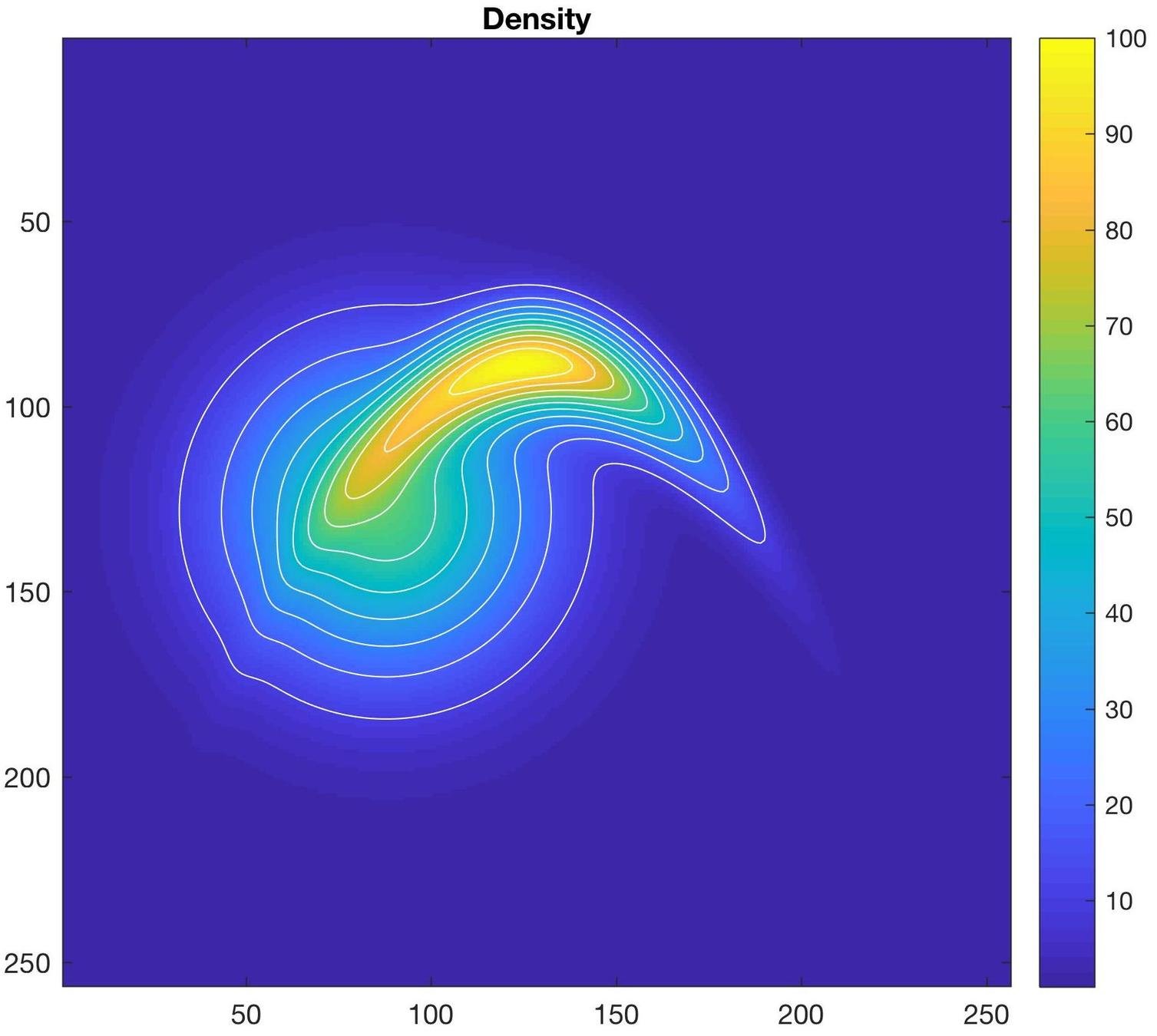

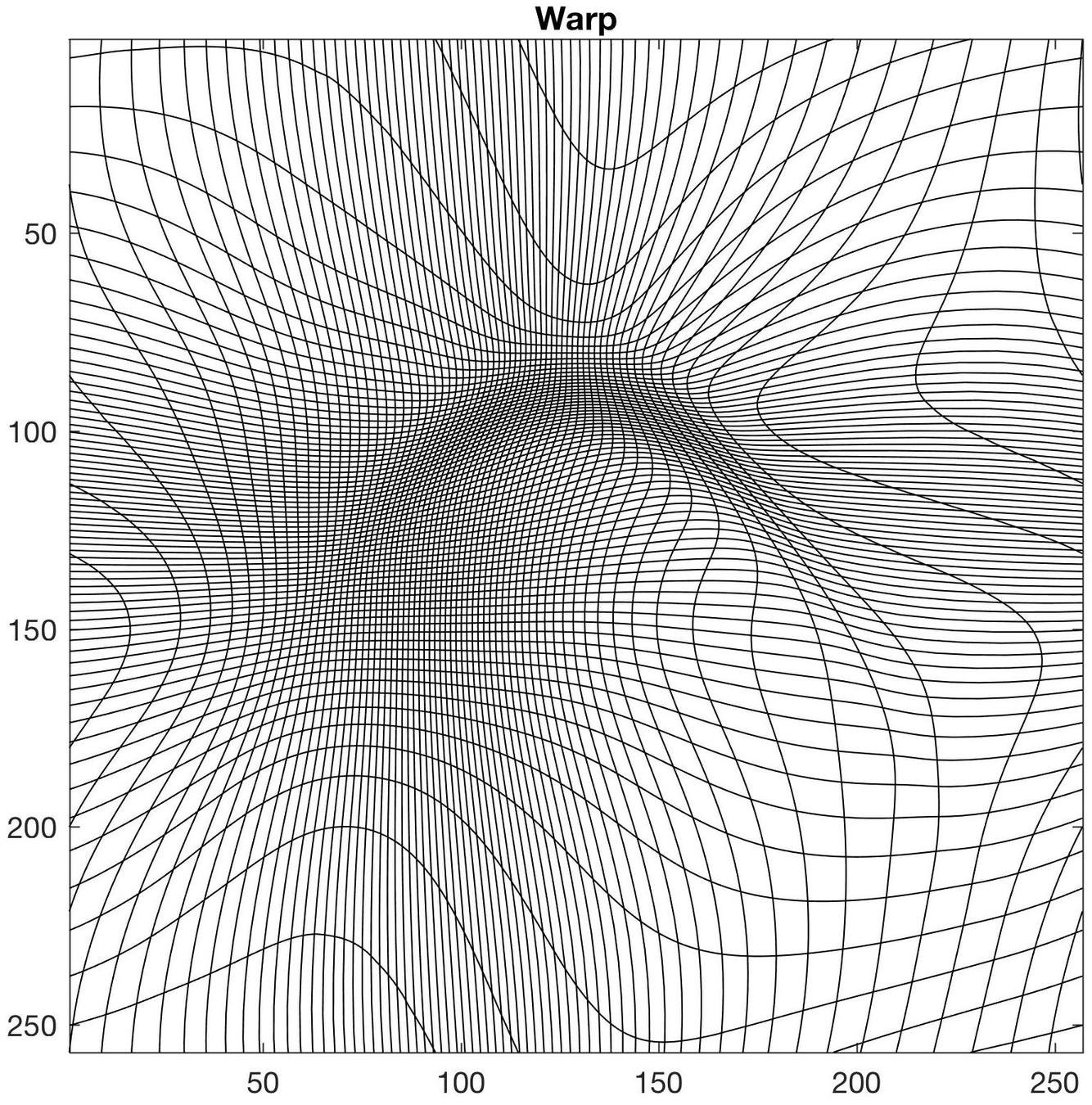

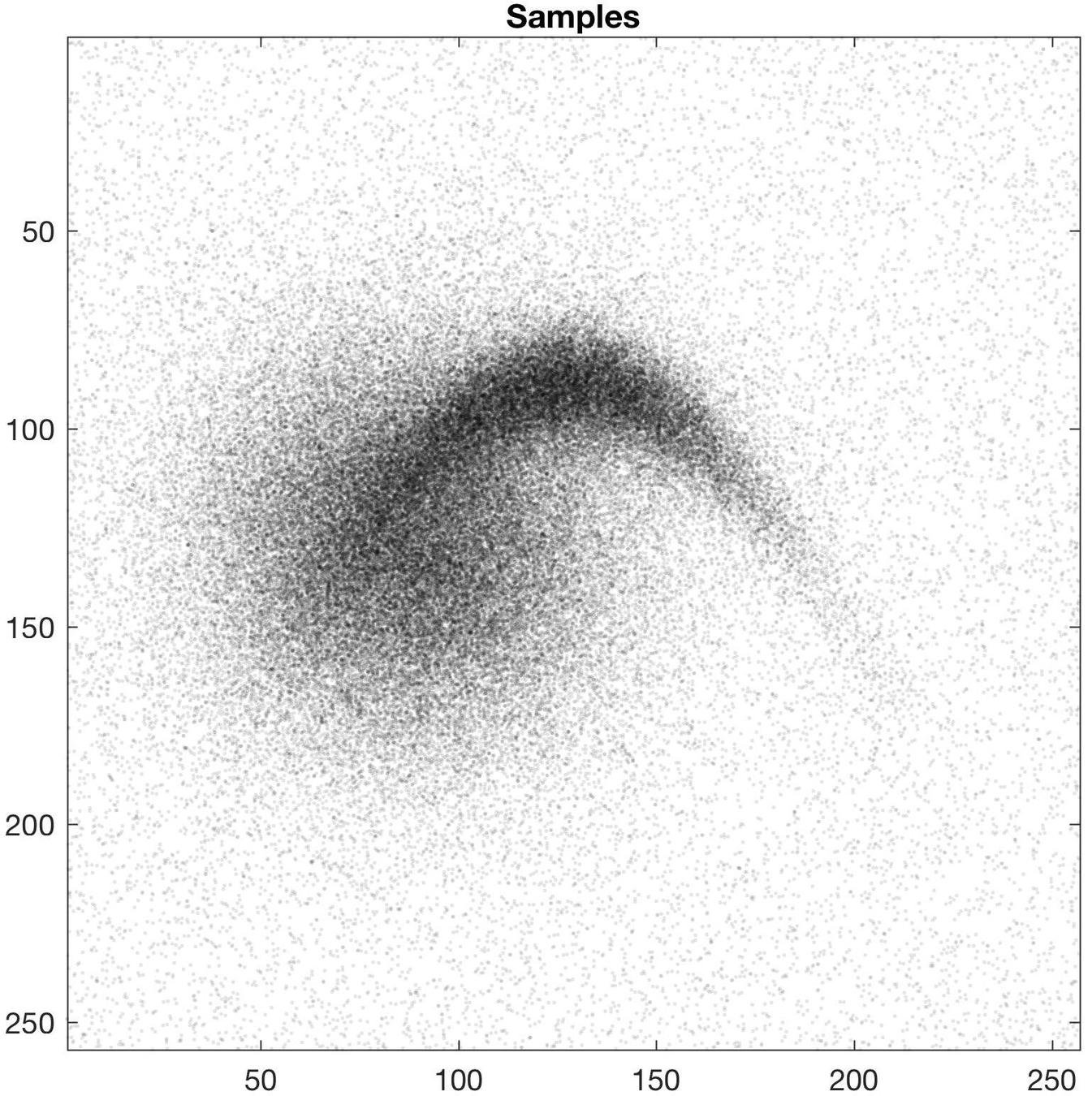

Simple 2D example

Warp computation time (\(256\times 256\) grid, 100 time steps): ~1 s

Sample computation time (\(10^7\) samples): < 1 s
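Once the warp is tabulated on a grid, each sample costs only one uniform draw and one interpolation, which is why sampling is so cheap compared with computing the warp. A minimal sketch, assuming the warp is stored as nodal values on a uniform grid of the unit square and evaluated by bilinear interpolation; the storage layout and the names `bilinear` and `draw_samples` are assumptions, not taken from the MATLAB code.

```python
import random

def bilinear(field, x, y):
    """Bilinear interpolation of field[i][j] given at nodes (i/n, j/n) of [0,1]^2."""
    n = len(field) - 1
    px, py = x * n, y * n
    i, j = min(int(px), n - 1), min(int(py), n - 1)
    wx, wy = px - i, py - j
    return ((1 - wx) * (1 - wy) * field[i][j] + wx * (1 - wy) * field[i + 1][j]
            + (1 - wx) * wy * field[i][j + 1] + wx * wy * field[i + 1][j + 1])

def draw_samples(warp_x, warp_y, count, rng):
    """Push `count` uniform points on the unit square through the tabulated warp."""
    out = []
    for _ in range(count):
        x, y = rng.random(), rng.random()  # x_i ~ mu_0 (uniform)
        # y_i = phi(x_i), evaluated component by component
        out.append((bilinear(warp_x, x, y), bilinear(warp_y, x, y)))
    return out

# Toy warp phi(x, y) = (x^2, y), standing in for a warp computed elsewhere.
n = 64
warp_x = [[(i / n) ** 2 for j in range(n + 1)] for i in range(n + 1)]
warp_y = [[j / n for j in range(n + 1)] for i in range(n + 1)]
samples = draw_samples(warp_x, warp_y, 10000, random.Random(1))
```

The loop is written for clarity; a vectorised implementation would evaluate all samples in one array operation.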

Summary

Pros

- Can handle very non-uniform densities

- Draw samples ultra-fast once warp is generated

Cons

- Useless in high dimensions (curse of dimensionality)

THANKS!

Slides available at: slides.com/kmodin

MATLAB code available at: github.com/kmodin/oit-random