Advanced Istio

Decoupling at Layer 5

cloud native and its management

Service Mesh Patterns

Abishek Kumar

Thank you to our lab assistant!

Connect

Collaborate

Contribute

Join the Community

Confirm Prerequisites

- Start Docker Desktop, Minikube or other (either single-node or multi-node clusters will work).

- Verify that you have a functional Docker environment by running:

$ docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

1b930d010525: Pull complete

Digest: sha256:0e11c388b664df8a27a901dce21eb89f11d8292f7fca1b3e3c4321bf7897bffe

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

Docker and Kubernetes

Prereq

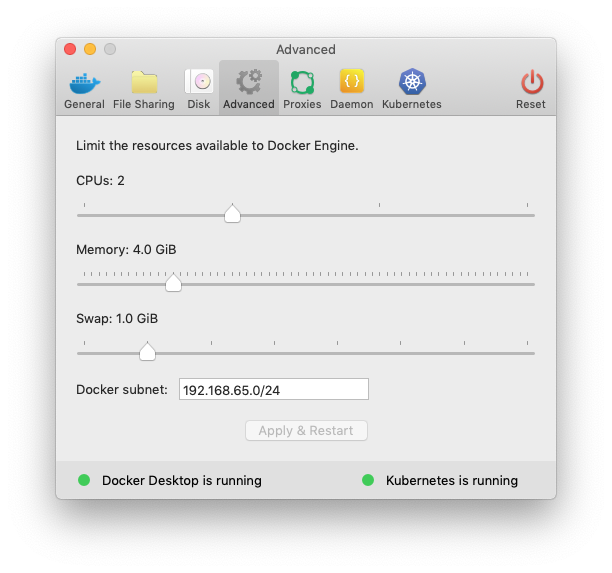

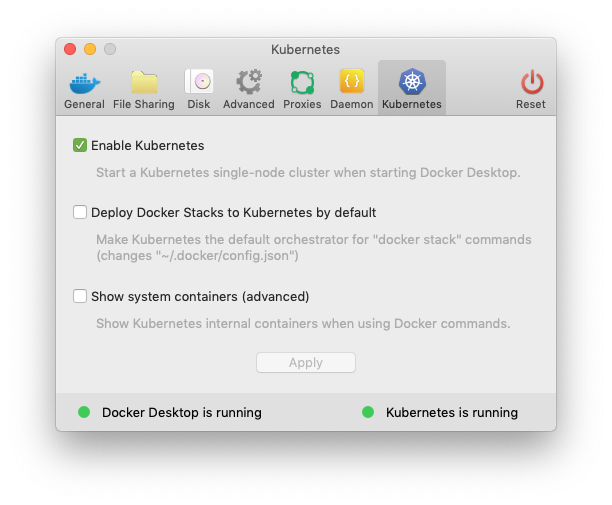

Prepare Docker Desktop

Ensure your Docker Desktop VM has 4GB of memory assigned.

Ensure Kubernetes is enabled.

Prereq

Deploy Kubernetes

- Confirm access to your Kubernetes cluster.

$ kubectl version --short

Client Version: v1.19.2

Server Version: v1.18.8

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

docker-desktop Ready master 10m v1.18.8

Prereq

v1.9 or higher

meshery.io

Deploy Management Plane

Management Plane

Provides expanded governance, backend system integration, multi-mesh, federation, expanded policy, and dynamic application and mesh configuration.

Control Plane

Data Plane

Prereq

Using brew

brew install layer5io/mesheryctl

mesheryctl system start

Using bash

curl -L https://git.io/meshery | sudo bash -

Using minikube:

mesheryctl system config minikube --token auth.json

What is a Service Mesh?

a services-first network

layer5.io/books/the-enterprise-path-to-service-mesh-architectures

Traffic Control

control over chaos

Resiliency

content-based traffic steering

Observability

what gets people hooked on service metrics

Security

identity and policy

Service Mesh Functionality

Expect more from your infrastructure

Business Logic

in-network application logic

What is Istio?

an open platform to connect, manage, and secure microservices

- Observability

- Resiliency

- Traffic Control

- Security

- Policy Enforcement

@IstioMesh

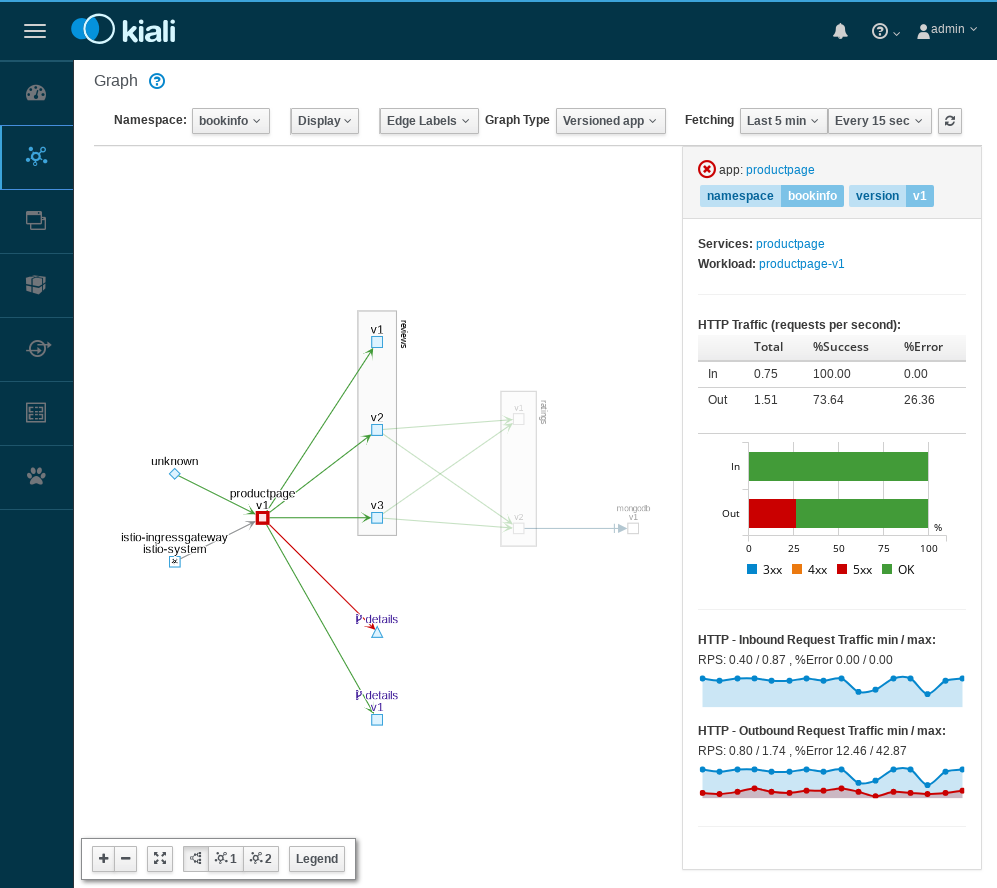

Observability

what gets people hooked on service metrics

Goals

- Metrics without instrumenting apps

- Consistent metrics across fleet

- Trace flow of requests across services

- Portable across metric back-end providers

You get a metric! You get a metric! Everyone gets a metric!

Traffic Control

control over chaos

- Traffic splitting

- L7 tag based routing?

- Traffic steering

- Look at the contents of a request and route it to a specific set of instances.

- Ingress and egress routing

Resiliency

- Systematic fault injection

- Timeouts and Retries with timeout budget

- Control connection pool size and request load

- Circuit breakers and Health checks

content-based traffic steering

Missing: application lifecycle management, but not by much

Missing: distributed debugging; provide nascent visibility (topology)

DEVELOPER

OPERATOR

Decoupling at Layer 5

where Dev, Ops, Product meet

Empowered and independent teams can iterate faster

PRODUCT OWNER

Service Mesh Patterns by the Book

Service Mesh Patterns

| Consideration | Lightly consider a service mesh (external client focus) | Strongly consider a service mesh (internal/external client focus) |
|---|---|---|
| Service Communication | Low inter-service communication. | High inter-service communication. |
| Observability | Edge focus: metrics and usage are for response time to clients and request failure rates. | Uniform and ubiquitous: observability is key for understanding service behavior. |
| Client Focus | Strong separation of external and internal users. Focused on external API experience. | Equal and undifferentiated experience for internal and external users. |
| World-view of APIs | APIs are for clients only: the primary client-facing interaction is through APIs (separation of client-facing and service-to-service communication). | APIs are treated as a product; APIs are how your application exposes its capabilities. |
| Security Model | Security at perimeter | Encryption between all services |
| Security Model... | Subnet zoning (firewalling) | Zero-trust mindset |
| Security Model... | Trusted internal networks (gooey center) | authN and authZ between all services |
| Size of Org | Smaller organizations | Larger organizations |
| # of Services | 1 to 5 services | 5 or more services |
| Service Reliability | Either don't need, are willing to hand-code, or bring in other infrastructure to provide resiliency guarantees. | Need strong controls over the resiliency properties of your services and to granularly control ingress, inter-service, and egress request traffic. |
| Diversity of Application Stack | Single language | One or more languages |

layer5.io/landscape

It's meshy out there.

Strengths of Service Mesh Implementations

Different tools for different use cases

a sample

Service mesh abstractions

Meshery is interoperable with these abstractions.

Service Mesh Interface

(SMI)

Multi-Vendor Service Mesh Interoperation (Hamlet)

Service Mesh Performance Specification (SMPS)

A standard interface for service meshes on Kubernetes.

A set of API standards for enabling service mesh federation.

A format for describing and capturing service mesh performance.

to the rescue

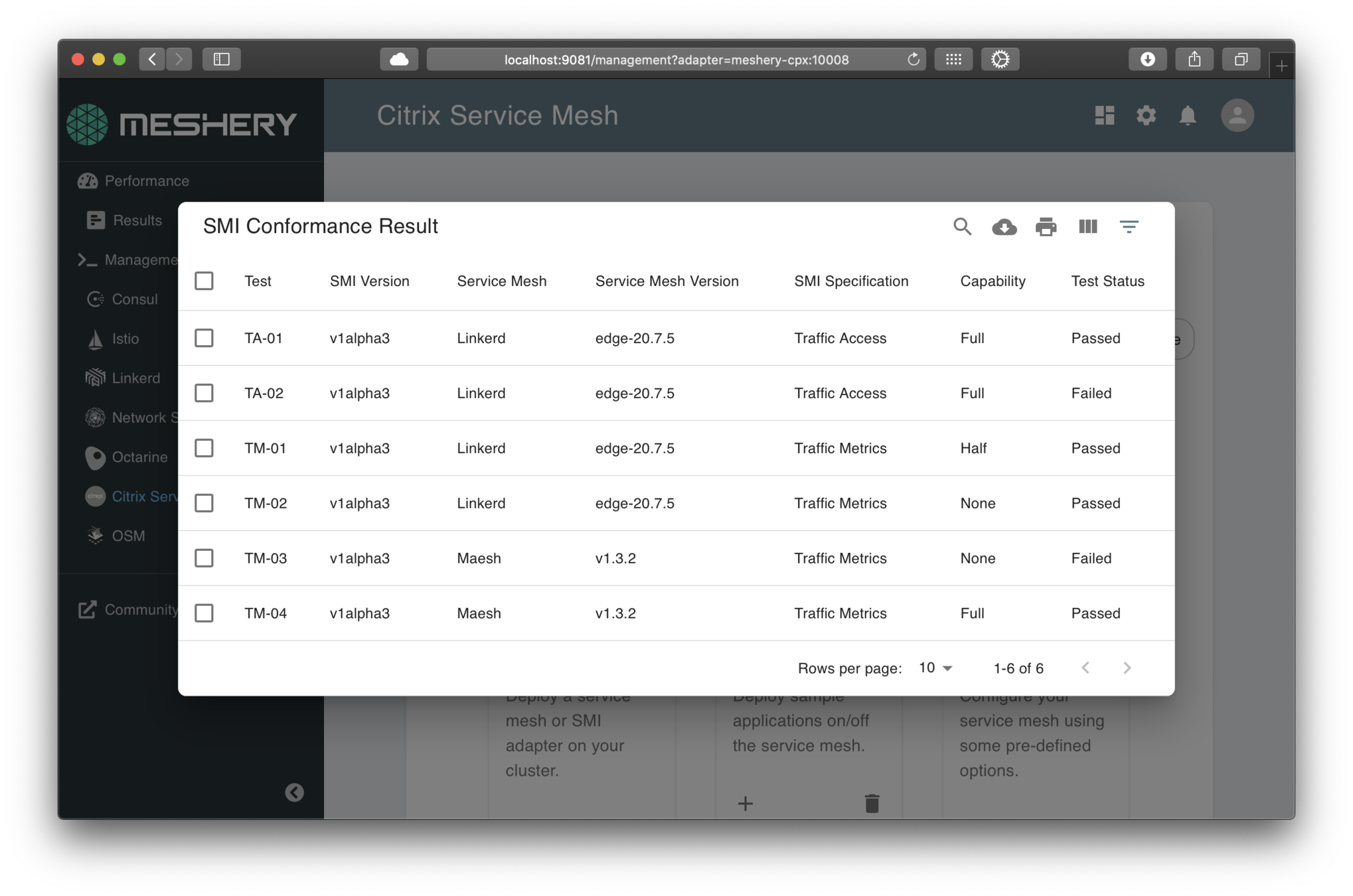

Service Mesh Interface (SMI) Conformance

Operate and upgrade with confirmation of SMI compatibility

✔︎ Learn Layer5 sample application used for validating test assertions.

✔︎ Defines compliant behavior.

✔︎ Produces compatibility matrix.

✔︎ Ensures provenance of results.

✔︎ Runs a set of conformance tests.

✔︎ Built into participating service mesh’s release pipeline.

Q&A

Service Mesh Architectures

Data Plane

Touches every packet/request in the system.

Responsible for service discovery, health checking, routing, load balancing, authentication, authorization, and observability.

Ingress Gateway

Egress Gateway

Service Mesh Architecture

No control plane? Not a service mesh.

Control Plane

Provides policy, configuration, and platform integration.

Takes a set of isolated stateless sidecar proxies and turns them into a service mesh.

Does not touch any packets/requests in the data path.

Data Plane

Touches every packet/request in the system.

Responsible for service discovery, health checking, routing, load balancing, authentication, authorization, and observability.

Ingress Gateway

Egress Gateway

Service Mesh Architecture

Control Plane

- Provides policy, configuration, and platform integration.

- Takes a set of isolated stateless sidecar proxies and turns them into a service mesh.

- Does not touch any packets/requests in the data path.

Data Plane

- Touches every packet/request in the system.

- Responsible for service discovery, health checking, routing, load balancing, authentication, authorization, and observability.

You need a management plane.

Ingress Gateway

Management Plane

- Provides backend system integration, expanded policy and governance, continuous delivery integration, workflow, chaos engineering, configuration and performance management, and multi-mesh federation.

Egress Gateway

Service Mesh Architecture

Pilot

Citadel

Mixer

Control Plane

Data Plane

istio-system namespace

policy check

Foo Pod

Proxy Sidecar

Service Foo

tls certs

discovery & config

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

telemetry

reports

Control flow

application traffic

Application traffic

application namespace

telemetry reports

Istio Architecture

Galley

Ingress Gateway

Egress Gateway

Control Plane

Data Plane

octa-system namespace

policy check

Foo Pod

Proxy

Sidecar

Service Foo

discovery & config

Foo Container

Bar Pod

Service Bar

Bar Container

Out-of-band telemetry propagation

telemetry

reports

Control flow

application traffic

Application traffic

application namespace

telemetry reports

Policy

Engine

Security Engine

Visibility

Engine

+

Proxy

Sidecar

+

Octarine Architecture

Control Plane

Data Plane

linkerd namespace

Foo Pod

Proxy Sidecar

Service Foo

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

telemetry scraping

Control flow during request processing

application traffic

Application traffic

application namespace

telemetry scraping

Architecture

Prometheus

Grafana

web

CLI

public-api

Linkerd

linkerd-controller

sp-validator

Control Plane

destination

tap

web

CLI

proxy-injector

identity

Leader

Agent

Control Plane

Data Plane

intentions

Foo Pod

Proxy Sidecar

Service Foo

discovery, config,

tls certs

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Control flow

application traffic

Application traffic

application namespace

Follower

Consul Client

Consul Servers

Follower

policy

check

Consul Architecture

layer5.io/service-mesh-architectures

WASM Filter

node

Our service mesh of study: Istio

Deploy Istio

- Install Istio using Meshery

- Find control plane namespace

- Inspect control plane services

- Inspect control plane components

open http://localhost:9081

kubectl get namespaces

kubectl get svc -n istio-system

kubectl get pod -n istio-system

Lab 1

github.com/layer5io/advanced-istio-service-mesh-workshop

What are Galley and Pilot?

provides service discovery to sidecars

manages sidecar configuration

Pilot

Citadel

the head of the ship

Ingress

istio-system namespace

system of record for service mesh

provides abstraction from underlying platforms

Galley

Control Plane

What's Mixer for?

- Point of integration with infrastructure back ends

- Intermediates between Istio and back ends, under operator control

- Enables platform and environment mobility

- Responsible for policy evaluation and telemetry reporting

- Provides granular control over operational policies and telemetry

- Has a rich configuration model

- Intent-based config abstracts most infrastructure concerns

an attribute-processing and routing machine

operator-focused

- Precondition checking

- Quota management

- Telemetry reporting

Pilot

Citadel

Telemetry Filter

istio-system namespace

Galley

Control Plane

Ingress

Data Plane

Mixer

Mixer

Control Plane

Data Plane

istio-system namespace

Foo Pod

Proxy sidecar

Service Foo

Foo Container

Out-of-band telemetry propagation

Control flow during request processing

application traffic

application traffic

application namespace

telemetry reports

an attribute processing engine

What's Citadel for?

- Verifiable identity

- Issues certs

- Certs distributed to service proxies

- Mounted as a Kubernetes secret

- Secure naming / addressing

- Traffic encryption

security at scale

security by default

Orchestrate Key & Certificate:

- Generation

- Deployment

- Rotation

- Revocation

Pilot

Citadel

Mixer

istio-system namespace

Galley

Control Plane

Q&A

Break

Deploy Sample App

- Multi-language, multi-service application

- Automatic vs manual sidecar injection

- Verify install

Use Meshery or install manually

Lab 2

Reviews v1

Reviews Pod

Reviews v2

Reviews v3

Product Pod

Details Container

Details Pod

Ratings Container

Ratings Pod

Product Container

Reviews Service

Ratings Service

Details Service

Product Service

BookInfo Sample App

BookInfo Sample App on Service Mesh

Reviews v1

Reviews Pod

Reviews v2

Reviews v3

Product Pod

Details Container

Details Pod

Ratings Container

Ratings Pod

Product Container

Envoy sidecar

Envoy sidecar

Envoy sidecar

Envoy sidecar

Envoy sidecar

Reviews Service

Envoy sidecar

Envoy ingress

Product Service

Ratings Service

Details Service

Sidecar Injection

Sidecar proxies can be injected into your pods either manually or automatically.

Automatic sidecar injection leverages Kubernetes' Mutating Webhook Admission Controller.

- Verify whether your Kubernetes deployment supports these APIs by executing:

kubectl api-versions | grep admissionregistration

kubectl get mutatingwebhookconfigurations

- Inspect the linkerd-proxy-injector-webhook-config webhook:

kubectl get mutatingwebhookconfigurations linkerd-proxy-injector-webhook-config -o yaml

If your environment does NOT support this API, then you may manually inject the Linkerd proxy.

Lab 2

github.com/layer5io/advanced-istio-service-mesh-workshop

Deploy Sample App with Automatic Sidecar Injection

1. Verify presence of the sidecar injector

kubectl get deployment linkerd-proxy-injector -n linkerd

2. Confirm namespace annotation

kubectl describe namespace linkerd

Lab 2

github.com/layer5io/advanced-istio-service-mesh-workshop

sidecar proxy

Deploy Sample App with Manual Sidecar Injection

In namespaces without the linkerd.io/inject annotation, you can use linkerd inject to manually inject Linkerd proxies into your application pods before deploying them:

kubectl kustomize kustomize/deployment | \
  linkerd inject - | \
  kubectl apply -f -

Lab 2

github.com/layer5io/advanced-istio-service-mesh-workshop

sidecar proxy

Expose BookInfo via Istio Ingress Gateway

- Inspecting the Istio Ingress Gateway

- Configure Istio Ingress Gateway for Bookinfo

- Inspect the Istio proxy of the productpage pod

Istio ingress gateway

application traffic

Lab 3

github.com/layer5io/advanced-istio-service-mesh-workshop

Q&A

Telemetry

Service Mesh Performance (SMP)

https://smp-spec.io

Directly provides:

- a vendor neutral specification for capturing details of infrastructure capacity, service mesh configuration, and workload metadata.

Indirectly facilitates:

- benchmarking of service mesh performance

- exchange of performance information from system-to-system / mesh-to-mesh

- apples-to-apples performance comparisons of service mesh deployments

- MeshMark: a universal performance index to gauge a service mesh's efficiency against deployments in other organizations' environments

Configuration

Security

Telemetry

Control Plane

Data

Plane

service mesh ns

Foo Pod

Proxy Sidecar

Service Foo

Foo Container

Bar Pod

Proxy Sidecar

Service Bar

Bar Container

Out-of-band telemetry propagation

Control flow

application

traffic

Application traffic

application namespace

Meshery Architecture

Ingress Gateway

Egress Gateway

Management

Plane

meshery

adapters

gRPC

kube-api

kube-system

generated load

http / gRPC traffic

fortio

wrk2

nighthawk

UI

API

workloads

Meshery WASM Filter

CLI

perf analysis

patterns

@mesheryio

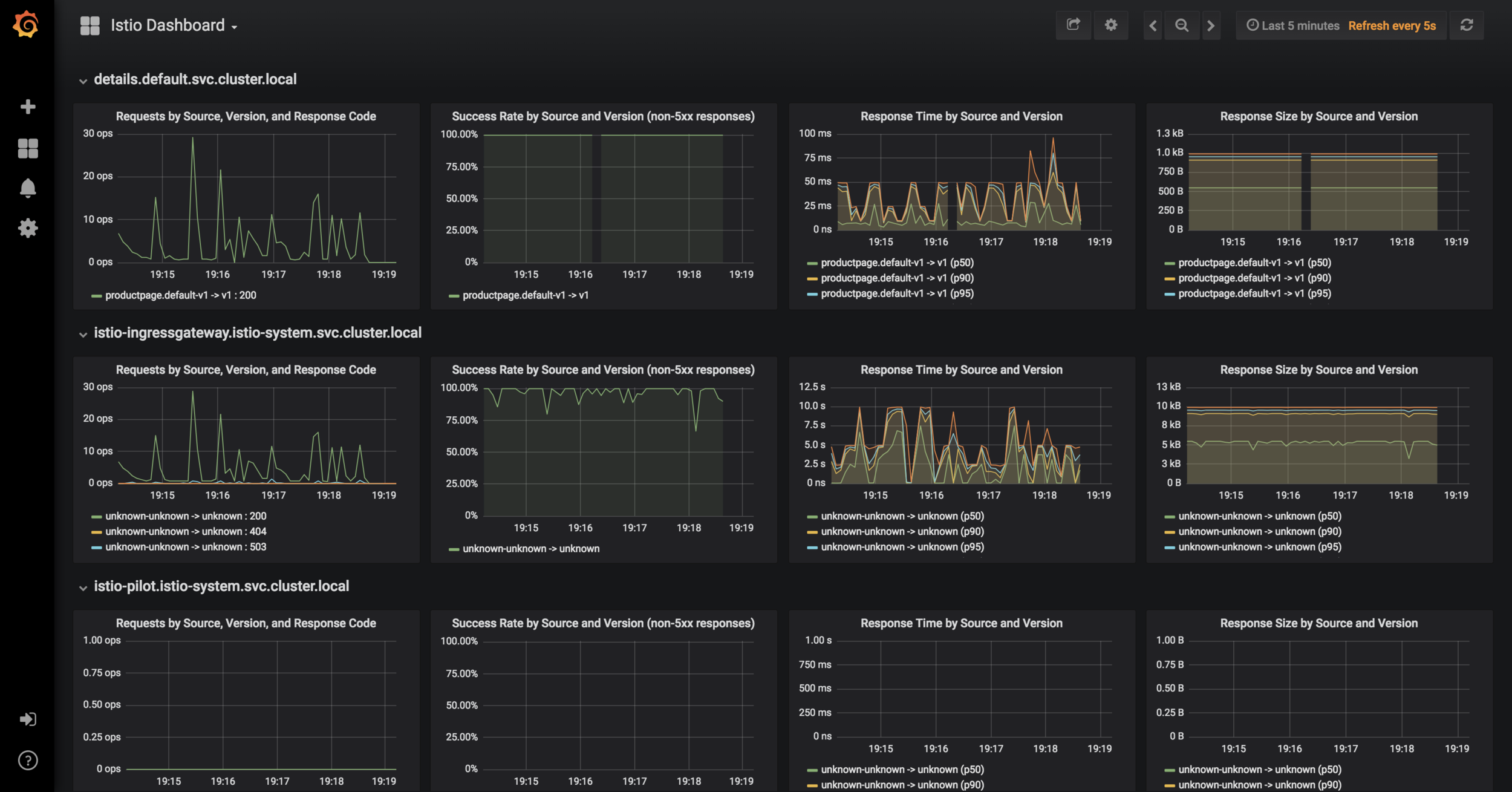

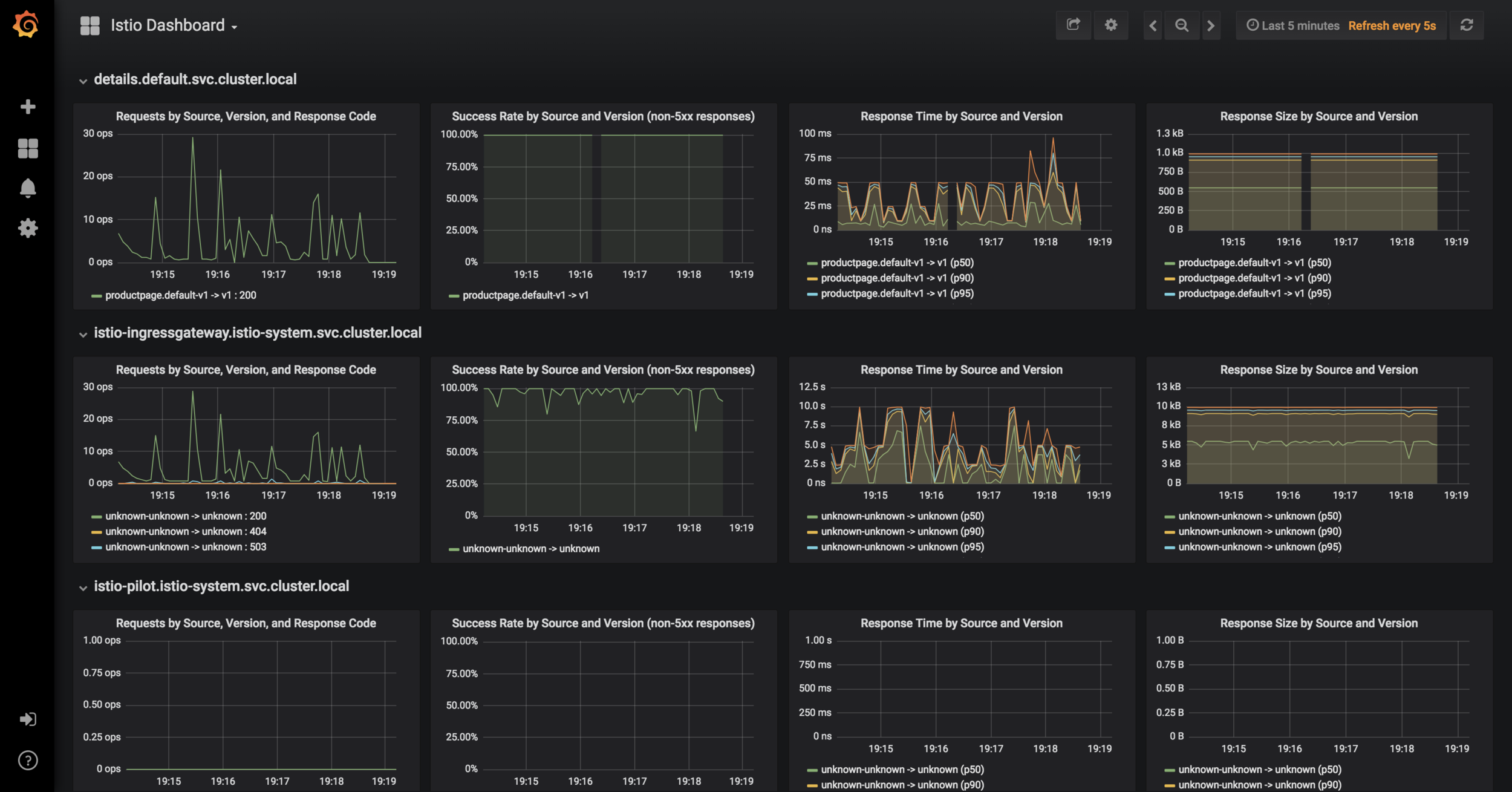

Metrics Dashboard

Lab 4

- Expose services with NodePort:

kubectl -n istio-system edit svc grafana

- Find port assigned to Grafana:

kubectl -n istio-system get svc grafana

Grafana

Lab 4

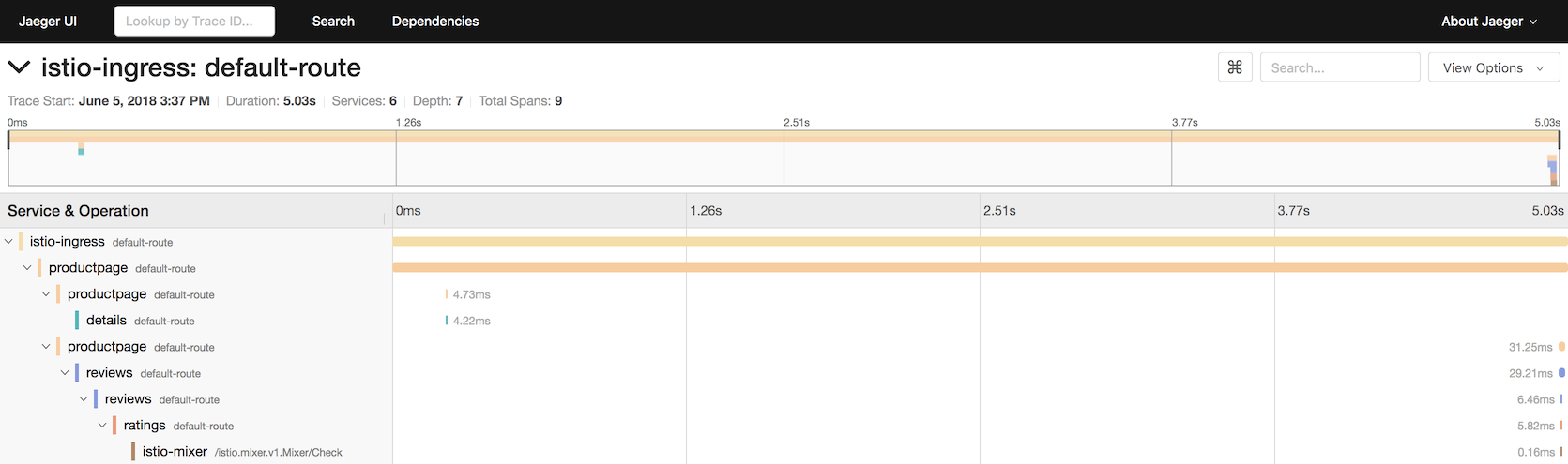

Distributed Tracing

Jaeger

github.com/layer5io/advanced-istio-service-mesh-workshop

The istio-proxy generates the following headers when they are absent. For spans to be joined into a single trace, the application must propagate them from each incoming request to any outgoing requests it makes:

- x-request-id

- x-b3-traceid

- x-b3-spanid

- x-b3-parentspanid

- x-b3-sampled

- x-b3-flags

- x-ot-span-context
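
The header list above can be sketched in application code. This is a minimal illustration, assuming the service receives its inbound headers as a plain dictionary; `forward_trace_headers` and `TRACE_HEADERS` are hypothetical names, not part of Istio or Jaeger.

```python
# Headers listed on the slide. The sidecar cannot correlate an inbound
# request with the outbound calls it causes, so the application copies
# these headers across.
TRACE_HEADERS = (
    "x-request-id",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
    "x-ot-span-context",
)


def forward_trace_headers(incoming):
    """Return the subset of incoming headers to attach to outgoing calls.

    Header names are matched case-insensitively and returned in lower case.
    """
    lowered = {name.lower(): value for name, value in incoming.items()}
    return {name: lowered[name] for name in TRACE_HEADERS if name in lowered}
```

A service would merge the returned dictionary into the headers of every downstream request it issues while handling the inbound one.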

Lab 4

kubectl -n istio-system edit svc tracing

github.com/layer5io/advanced-istio-service-mesh-workshop

Load Generation

Lab 4

@smp_spec

smp-spec.io

1. Use Meshery to generate load on sample application

2. View in monitoring tools

Lab 4 Tasks

Q&A

Break

Request Routing and Canary Testing

- Configure the default route for all services to V1

- Content based routing

- Canary Testing - Traffic Shifting
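
The routing decisions in this lab can be pictured with a small sketch. It is a simplified model of what a proxy does per request, not Istio's implementation; `pick_version`, the `overrides` mapping, and the example header are hypothetical names chosen for illustration.

```python
import random


def pick_version(headers, weights, rng, overrides=None):
    """Choose a destination version for one request.

    overrides: maps (header name, value) -> version; checked first
               (content-based routing).
    weights:   maps version -> percentage of traffic (traffic shifting).
    """
    for (name, value), version in (overrides or {}).items():
        if headers.get(name) == value:
            return version
    versions = list(weights)
    # Weighted random choice: a 90/10 split sends roughly 10% to the canary.
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


rng = random.Random(0)
# Default route: everything goes to v1.
print(pick_version({}, {"v1": 100}, rng))
# Canary: shift a share of traffic to v3 while v1 keeps the rest.
print(pick_version({}, {"v1": 80, "v3": 20}, rng))
```

Setting a single version to 100 corresponds to the default route; lowering it while raising another version's weight corresponds to traffic shifting.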

Lab 5

Fault Injection and Latency

- Inject a route rule to create a fault using HTTP delay

- Inject a route rule to create a fault using HTTP abort

- Verify fault injection
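
As a rough mental model of the two fault types, the sketch below decides per request whether to abort, delay, or pass it through. It is a simplification under stated assumptions: `choose_fault` and its parameters are hypothetical, and it applies at most one fault per request, which a real proxy need not do.

```python
import random


def choose_fault(rng, delay_percent=0.0, delay_seconds=0.0,
                 abort_percent=0.0, abort_status=500):
    """Decide what to do with one request.

    Returns ("abort", status), ("delay", seconds) or ("pass", None).
    Percentages are in the range 0 to 100.
    """
    if rng.random() * 100 < abort_percent:
        return ("abort", abort_status)
    if rng.random() * 100 < delay_percent:
        return ("delay", delay_seconds)
    return ("pass", None)


rng = random.Random(1)
print(choose_fault(rng, delay_percent=100, delay_seconds=7.0))  # ('delay', 7.0)
print(choose_fault(rng, abort_percent=100, abort_status=503))   # ('abort', 503)
```

Verifying fault injection then amounts to checking that callers see the added latency or the error status at about the configured rate.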

Lab 5

Circuit Breaking

- Deploy a client for the app

- With manual sidecar injection:

- Initial test calls from client to server

- Time to trip the circuit
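
To make "tripping the circuit" concrete, here is a minimal sketch of the generic circuit breaker pattern. It is not how Envoy or Istio implement it (they rely on connection pool limits and outlier detection); the class name, thresholds, and injected clock are assumptions for illustration.

```python
import time


class CircuitBreaker:
    """Trip after `max_failures` consecutive errors; retry after `reset_after` seconds."""

    def __init__(self, max_failures=3, reset_after=5.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def allow(self):
        """Return True if a request may be sent upstream right now."""
        if self.opened_at is None:
            return True
        # Open: let a trial request through once the cool-down has elapsed.
        return self.clock() - self.opened_at >= self.reset_after

    def record(self, success):
        """Report the outcome of a request that was allowed through."""
        if success:
            self.failures = 0
            self.opened_at = None
            return
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()
```

While the circuit is open, callers fail fast instead of piling more load onto a struggling server.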

Lab 5

Timeouts & Retries

Web

Service Foo

Timeout = 600ms

Retries = 3

Timeout = 300ms

Retries = 3

Timeout = 900ms

Retries = 3

Service Bar

Database

Timeout = 500ms

Retries = 3

Timeout = 300ms

Retries = 3

Timeout = 900ms

Retries = 3
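
The figures in this diagram hint at why independently chosen timeouts and retries interact badly. The sketch below works through the arithmetic under two assumptions that the slide does not state: the timeout applies to each attempt, and retries fire immediately without backoff. The function names are hypothetical.

```python
def worst_case_ms(timeout_ms, retries):
    """Longest time a caller can wait if every attempt times out.

    With `retries` retries there are 1 + retries attempts in total.
    """
    return timeout_ms * (1 + retries)


def exceeds_upstream(upstream_timeout_ms, downstream_timeout_ms, downstream_retries):
    """True when a downstream hop can outlast the caller waiting on it."""
    return worst_case_ms(downstream_timeout_ms, downstream_retries) > upstream_timeout_ms


# A 300 ms timeout with 3 retries can take 1200 ms in the worst case,
# longer than a 600 ms timeout set by the hop above it.
print(worst_case_ms(300, 3))          # 1200
print(exceeds_upstream(600, 300, 3))  # True
```

When a downstream hop can outlast its caller, the extra attempts are wasted work, which is the motivation for a shared timeout budget.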

Deadlines

Web

Service Foo

Deadline = 600ms

Deadline = 496ms

Service Bar

Database

Deadline = 428ms

Deadline=180ms

Elapsed=104ms

Elapsed=68ms

Elapsed=248ms
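
The deadline values on this slide follow from simple subtraction: each hop passes on whatever remains after the time already spent. A minimal sketch, with `propagate_deadline` as a hypothetical helper name:

```python
def propagate_deadline(initial_ms, elapsed_per_hop_ms):
    """Return the deadline remaining at each hop, starting with the first.

    Each hop subtracts the time already spent before passing the request on.
    """
    remaining = [initial_ms]
    for elapsed in elapsed_per_hop_ms:
        remaining.append(remaining[-1] - elapsed)
    return remaining


# Values from the slide: 600 ms at the edge, then 104, 68 and 248 ms elapsed.
print(propagate_deadline(600, [104, 68, 248]))  # [600, 496, 428, 180]
```

A hop that receives a deadline at or below zero can give up immediately rather than doing work whose result nobody is waiting for.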

What is WebAssembly?

for the web, malware and beyond

- A small, fast binary format that promises near-native performance for web applications.

- Most modern browsers support it.

- Safe and sandboxed execution environment.

- Over 40 languages that support WASM as a compilation target.

- Originally used to speed up large web-applications.

webassembly.org

Workhorses

- WASM VMs in Envoy run the filters in sandboxes.

- Envoy is using the V8 runtime.

- Attempts to allow execution of WASM on machines natively.

- Portable and dynamically loadable.

- Uses WebAssembly System Interface (WASI)

- Envoy exposes an Application Binary Interface (ABI)

Data Plane

Pod

Proxy sidecar

App Container

+

WASM modules as Envoy filters

WASM is becoming a WORA runtime

An optimization game

Latency, throughput, and the proxies’ CPU and memory consumption affected by these factors

Data Plane

Proxy sidecar

App Container

Pod

- Number of client connections

- Target request rate

- Request size and Response size

- Number of proxy worker threads

- Protocol

- CPU cores

- Number and types of proxy filters

with many variables

Data plane performance depends on many factors, for example:

Context-based routing

Understanding the trade-off between power and speed

Data Plane

Proxy sidecar

App Container

Pod

Speed

Data Plane

Proxy sidecar

App Container

Pod

Round robin load balancing

Data Plane

Proxy sidecar

App Container

Pod

Path-based routing

Comparing types of functions

Power

Speed

Comparing types of Data Plane filtering

Data Plane

Pod

Proxy sidecar

App Container

Comparing approaches to data plane filtering

Data Plane

App Container

Pod

Client Library

Proxy sidecar

Rate limiting with Go client library

- 100 RPS
  - p50: 3.19ms

- 500 RPS
  - p50: 2.44ms

- Unlimited RPS - 4417
  - p50: 0.66ms

Rate limiting with WASM module (Rust filter)

- 100 RPS
  - p50: 2.1ms

- 500 RPS
  - p50: 2.22ms

- Unlimited RPS - 5781
  - p50: 0.62ms
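
For readers unfamiliar with what a rate limiter does at a target such as 100 RPS, here is a minimal token bucket sketch. It is a generic illustration only: the slides do not show how the Go client library or the Rust filter implement rate limiting, and `TokenBucket` with its parameters is a hypothetical name.

```python
import time


class TokenBucket:
    """Allow up to `rate` requests per second, with bursts of up to `burst`."""

    def __init__(self, rate, burst, clock=time.monotonic):
        self.rate = float(rate)
        self.burst = float(burst)
        self.clock = clock
        self.tokens = float(burst)
        self.updated = clock()

    def allow(self):
        """Consume one token if available; return whether the request passes."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False
```

The same logic can live in a client library inside the application or in a filter inside the proxy, which is the comparison the measurements above are making.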

Power

Speed

Data Plane

Proxy sidecar

App Container

Pod

Image Hub

github.com/layer5io/image-hub

| Functionality | In the app | In the filter |
|---|---|---|
| User / Token | | |
| Subscription Plans | | |
| Plan Enforcement | | |

a sample app

Two

application containers

Hub UI Pod

Image Storage Container

Image Storage Pod

Hub UI

Container

Image Storage Service

Hub UI Service

github.com/layer5io/image-hub

Image Hub on Docker Desktop

Hub UI Pod

Image Access Container

Image Access Pod

Hub UI

Container

Image Access Service

Hub UI Service

Image Hub on a Service Mesh

Envoy sidecar

Envoy sidecar

github.com/layer5io/image-hub

with Consul

Leader

Follower

Consul Servers

Follower

agent

Consul Client

node

Image Access Container

Image Access Pod

Image Access Service

Image Hub on Consul

Envoy sidecar

github.com/layer5io/image-hub

WASM Filter

with a Rust-based WASM filter

    apiVersion: apps/v1
    kind: Deployment
    spec:
      template:
        metadata:
          labels:
            app: api-v1
          annotations:
            "consul.hashicorp.com/connect-inject": "true"
            "consul.hashicorp.com/service-meta-version": "1"
            "consul.hashicorp.com/service-tags": "v1"
            "consul.hashicorp.com/connect-service-protocol": "http"
            "consul.hashicorp.com/connect-wasm-filter-add_header": "/filters/optimized.wasm"
        spec:
          containers:
            - name: api
              image: layer5/image-hub-api:latest

Leader

Follower

Consul Servers

Follower

agent

node

Lee Calcote

cloud native and its management

layer5.io/subscribe

Relating to

Service Meshes

Which is why...

I have a container orchestrator.

Core Capabilities

minimal capabilities required to qualify as a container orchestrator

- Cluster Management

- Host Discovery

- Host Health Monitoring

- Scheduling

- Orchestrator Updates and Host Maintenance

- Service Discovery

- Networking and Load Balancing

- Stateful Services

- Multi-Tenant, Multi-Region

Additional Key Capabilities

- Application Health and Performance Monitoring

- Application Deployments

- Application Secrets

Service meshes generally rely on these underlying layers.

Which is why...

I have an API gateway.

Microservices API Gateways

- Ambassador uses Envoy

- Kong uses Nginx

- OpenResty uses Nginx

north-south vs. east-west

Which is why...

I have client-side libraries.

Enforcing consistency is challenging.

Foo Container

Flow Control

Foo Pod

Go Library

A v1

Network Stack

Service Discovery

Circuit Breaking

Application / Business Logic

Bar Container

Flow Control

Bar Pod

Go Library

A v2

Network Stack

Service Discovery

Circuit Breaking

Application / Business Logic

Baz Container

Flow Control

Baz Pod

Java Library

B v1

Network Stack

Service Discovery

Circuit Breaking

Application / Business Logic

Retry Budgets

Rate Limiting

Help with Modernization

- Can modernize your IT inventory without:
  - Rewriting your applications
  - Adopting microservices, regular services are fine
  - Adopting new frameworks
  - Moving to the cloud

- Address the long-tail of IT services

Get there for free

Why use a Service Mesh?

to avoid...

- Bloated service (application) code

- Duplicating work to make services production-ready
  - Load balancing, auto scaling, rate limiting, traffic routing...

- Inconsistency across services
  - Retry, TLS, failover, deadlines, cancellation, etc., for each language, framework
  - Siloed implementations lead to fragmented, non-uniform policy application and difficult debugging

- Diffusing responsibility of service management

Services Observation

Kiali

Lab 4

- Expose services with NodePort:

kubectl -n istio-system edit svc kiali

- Find port assigned to Kiali:

kubectl -n istio-system get svc kiali

github.com/layer5io/linkerd-service-mesh-workshop

Mutual TLS & Identity Verification

- Verify mTLS

- Understanding SPIFFE